PointNet++ S3DIS Dataset Training Error Log

1. 2022-05-27

The following error occurs when I run train_semseg.py:

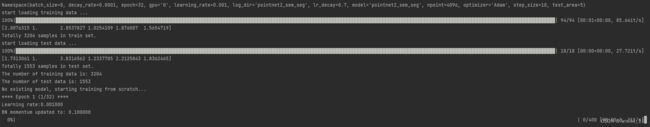

PS F:\pointnet_pointnet2_pytorch-master> python train_semseg.py --model pointnet2_sem_seg --test_area 5 --log_dir pointnet2_sem_seg

PARAMETER ...

Namespace(batch_size=16, decay_rate=0.0001, epoch=32, gpu='0', learning_rate=0.001, log_dir='pointnet2_sem_seg', lr_decay=0.7, model='pointnet2_sem_seg', npoint=4096, optimizer='Adam', step_size=10, test_area=5)

start loading training data ...

100%|████████████████████| 118/118 [00:09<00:00, 12.18it/s]

[1.1122853 1.1530312 1. 2.2862618 2.3985515 2.3416872 1.6953672 2.051836 1.7089869 3.416529 1.840006 2.7374067 1.3777069]

Totally 28940 samples in train set.

start loading test data ...

100%|████████████████████| 46/46 [00:04<00:00, 10.26it/s]

[ 1.1516608 1.2053679 1. 11.941072 2.6087077 2.0597224 2.1135178 2.0812197 2.5563374 4.5242124 1.4960177 2.9274836 1.6089553]

Totally 12881 samples in test set.

The number of training data is: 28940

The number of test data is: 12881

Use pretrain model

Learning rate:0.000700

BN momentum updated to: 0.050000

Traceback (most recent call last):

File "train_semseg.py", line 295, in <module>

main(args)

File "train_semseg.py", line 181, in main

for i, (points, target) in tqdm(enumerate(trainDataLoader), total=len(trainDataLoader), smoothing=0.9):

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\site-packages\torch\utils\data\dataloader.py", line 355, in __iter__

return self._get_iterator()

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\site-packages\torch\utils\data\dataloader.py", line 301, in _get_iterator

return _MultiProcessingDataLoaderIter(self)

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\site-packages\torch\utils\data\dataloader.py", line 914, in __init__

w.start()

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\multiprocessing\process.py", line 121, in start

self._popen = self._Popen(self)

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\multiprocessing\context.py", line 224, in _Popen

return _default_context.get_context().Process._Popen(process_obj)

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\multiprocessing\context.py", line 326, in _Popen

return Popen(process_obj)

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\multiprocessing\popen_spawn_win32.py", line 93, in __init__

reduction.dump(process_obj, to_child)

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\multiprocessing\reduction.py", line 60, in dump

ForkingPickler(file, protocol).dump(obj)

AttributeError: Can't pickle local object 'main.<locals>.<lambda>'

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\multiprocessing\spawn.py", line 116, in spawn_main

exitcode = _main(fd, parent_sentinel)

File "F:\miniconda3\envs\pytorch_1.8_wsh\lib\multiprocessing\spawn.py", line 126, in _main

self = reduction.pickle.load(from_parent)

EOFError: Ran out of input

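Could the dataset being too large be the cause?

"The training and validation sets are so large that the downsampling factor is insufficient. I think it is worth trying to reduce the number of training and validation samples through the .json file."

I deleted part of the dataset, which reduced the number of training samples from the original 47600 to about 28900, but the same error still occurred.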

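2. 2022-05-31

A post I read suggested that the dataset might be the problem, so I tried converting the npy dataset to hdf5.

Using the TensorFlow version of PointNet, I ran gen_indoor3d_util.py under sem_seg.

Error 1: running gen_indoor3d_h5.py raised the following: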

File "F:\pointnetv2-master\sem_seg\indoor3d_util.py", line 126, in sample_data

return np.concatenate([data, dup_data], 0), range(N) + list(sample)

TypeError: unsupported operand type(s) for +: 'range' and 'list'

Traceback (most recent call last):

File "F:/pointnet2_tensorflow/pointnetv2-master/sem_seg/collect_indoor3d_data.py", line 21, in <module>

indoor3d_util.collect_point_label(anno_path, os.path.join(output_folder, out_filename), 'numpy')

File "F:\pointnet2_tensorflow\pointnetv2-master\sem_seg\indoor3d_util.py", line 51, in collect_point_label

data_label = np.concatenate(points_list, 0)

File "<__array_function__ internals>", line 6, in concatenate

ValueError: need at least one array to concatenate

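Addendum: I ran into this problem today, and the dataset file was empty.

Strangely, running gen_indoor3d_h5.py seems to delete the dataset: the generated npy files were all gone afterwards.

The earlier files were all processed without problems; the failure appears at F:\pointnetv2-master\data\stanford_indoor3d\Area_1_hallway_6.npy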
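Since this failure came down to an empty dataset file, a quick scan of the output folder can catch such files before the h5 generation is run. The following is a minimal sketch using only the standard library; the helper name find_empty_files, the default pattern, and the folder path are assumptions for illustration and are not part of the PointNet code.

```python
from pathlib import Path


def find_empty_files(folder, pattern="*.npy"):
    """Return the sorted names of files matching `pattern` that are 0 bytes.

    Hypothetical helper, not part of the PointNet repository.
    """
    return sorted(
        p.name for p in Path(folder).glob(pattern) if p.stat().st_size == 0
    )


if __name__ == "__main__":
    # Assumed location; adjust to your own data directory.
    for name in find_empty_files("data/stanford_indoor3d"):
        print("empty file:", name)
```

Any file reported here would need to be regenerated before it is fed to np.concatenate.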

Fix: in indoor3d_util.py,

change range(N)+list(sample) to list(range(N)) + list(sample). In Python 3, range(N) is no longer a list, so it has to be converted before it can be concatenated.

Reference: https://blog.csdn.net/u014311125/article/details/122078418?ops_request_misc=%257B%2522request%255Fid%2522%253A%2522165400530016782391848045%2522%252C%2522scm%2522%253A%252220140713.130102334.pc%255Fall.%2522%257D&request_id=165400530016782391848045&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2allfirst_rank_ecpm_v1~rank_v31_ecpm-3-122078418-null-null.142v11control,157v12control&utm_term=pointnet%2B%2B%E8%AE%AD%E7%BB%83S3DIS%E6%95%B0%E6%8D%AE&spm=1018.2226.3001.4187
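The sketch below shows why the original expression fails under Python 3 and what the corrected index list looks like. It uses only the standard library; pad_indices is a hypothetical stand-in for the last branch of sample_data, with the random draw simplified.

```python
import random


def pad_indices(n, num_sample, rng=random):
    """Return indices for n points padded up to num_sample by re-drawing
    existing points (assumes n < num_sample)."""
    sample = [rng.randrange(n) for _ in range(num_sample - n)]
    # Python 2 allowed range(n) + list(sample) because range returned a list.
    # In Python 3, range is a lazy sequence, so it is converted first.
    return list(range(n)) + list(sample)


if __name__ == "__main__":
    try:
        range(3) + [0, 2]  # the original expression
    except TypeError as exc:
        print("original expression fails:", exc)
    print(pad_indices(3, 5))
```

Keeping the list(sample) part matters: it records which points were duplicated to reach the requested sample count.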

Error 2: ply_data_all_0.h5', errno = 2, error message = 'No such file or directory', flags = 0, o_flags = 0

Fix: in data_prep_util.py,

change h5_fout = h5py.File(h5_filename) to h5_fout = h5py.File(h5_filename, 'w')

Error 3: Area_5_hallway_6.npy was not found

I reran python collect_indoor3d_data.py, but the file was still not generated, which is strange.

I deleted every entry containing Area_5_hallway_6 from the txt files under meta.
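Removing the entries by hand is error-prone when several meta files mention the same room. As a sketch, the filtering can be scripted; drop_entries, clean_meta_folder, and the meta path below are assumptions for illustration. The script rewrites files in place, so it is worth backing them up first.

```python
from pathlib import Path


def drop_entries(lines, needle):
    """Keep only the lines that do not mention `needle`."""
    return [line for line in lines if needle not in line]


def clean_meta_folder(meta_dir, needle):
    """Rewrite every .txt file in meta_dir without lines containing needle.

    Returns the number of lines removed. Files are modified in place.
    """
    removed = 0
    for path in Path(meta_dir).glob("*.txt"):
        lines = path.read_text().splitlines()
        kept = drop_entries(lines, needle)
        if len(kept) != len(lines):
            path.write_text("\n".join(kept) + ("\n" if kept else ""))
            removed += len(lines) - len(kept)
    return removed


if __name__ == "__main__":
    # Assumed folder name, relative to sem_seg.
    print(clean_meta_folder("meta", "Area_5_hallway_6"))
```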

Frustrating. Now I am not sure how to read the hdf5 data in, either.

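Final solution:

Reference: https://blog.csdn.net/qq_41212586/article/details/116498177

Find num_workers at the location the traceback points to and set it to 0. In other cases, the fix is to set use_multiprocessing to False.

However, my CPU has 16 processors and the code sets only 10 workers, so that limit is not exceeded.

Setting num_workers to 1, 4, 8, or 10 always reproduces the error. With num_workers=0 training runs, but it is very slow: 13 epochs took 16 hours.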
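The traceback itself hints at why every nonzero num_workers fails regardless of CPU count: on Windows, worker processes are started with spawn, which pickles what is handed to each worker, and a lambda defined inside main cannot be pickled. The lambda is presumably the worker_init_fn passed to the DataLoader. A possible alternative to num_workers=0 is to replace it with a module-level function. The sketch below demonstrates the difference with the standard library only; seed_worker is a hypothetical name, and it seeds random rather than NumPy to stay dependency-free.

```python
import pickle
import random
import time


def seed_worker(worker_id):
    """Module-level replacement for the lambda; picklable by name.

    Hypothetical helper. The real script would seed NumPy here instead.
    """
    random.seed(worker_id + int(time.time()))


def make_local_lambda():
    # Mirrors the failing pattern: a lambda created inside a function.
    return lambda worker_id: random.seed(worker_id + int(time.time()))


def is_picklable(obj):
    """Return True if pickle can serialize obj, False otherwise."""
    try:
        pickle.dumps(obj)
        return True
    except (pickle.PicklingError, AttributeError):
        return False


if __name__ == "__main__":
    print("module-level function:", is_picklable(seed_worker))
    print("local lambda:", is_picklable(make_local_lambda()))
```

In the training script this would mean passing worker_init_fn=seed_worker to the DataLoader and keeping the entry point under if __name__ == '__main__':. This is untested on the setup described here.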

3. 2022-06-01

With batch_size = 32, the training results are:

2022-06-02 00:32:40,447 - Model - INFO - **** Epoch 8 (32/32) ****

2022-06-02 00:32:40,447 - Model - INFO - Learning rate:0.000343

2022-06-02 01:19:00,425 - Model - INFO - Training mean loss: 0.130799

2022-06-02 01:19:00,426 - Model - INFO - Training accuracy: 0.952489

2022-06-02 01:19:00,529 - Model - INFO - ---- EPOCH 008 EVALUATION ----

2022-06-02 01:34:37,188 - Model - INFO - eval mean loss: 1.027795

2022-06-02 01:34:37,188 - Model - INFO - eval point avg class IoU: 0.519243

2022-06-02 01:34:37,189 - Model - INFO - eval point accuracy: 0.826248

2022-06-02 01:34:37,189 - Model - INFO - eval point avg class acc: 0.610861

2022-06-02 01:34:37,189 - Model - INFO - ------- IoU --------

class ceiling weight: 0.091, IoU: 0.905

class floor weight: 0.199, IoU: 0.974

class wall weight: 0.165, IoU: 0.737

class beam weight: 0.279, IoU: 0.000

class column weight: 0.000, IoU: 0.053

class window weight: 0.019, IoU: 0.571

class door weight: 0.034, IoU: 0.128

class table weight: 0.030, IoU: 0.673

class chair weight: 0.039, IoU: 0.714

class sofa weight: 0.019, IoU: 0.423

class bookcase weight: 0.003, IoU: 0.620

class board weight: 0.110, IoU: 0.525

class clutter weight: 0.012, IoU: 0.427

2022-06-02 01:34:37,190 - Model - INFO - Eval mean loss: 1.027795

2022-06-02 01:34:37,190 - Model - INFO - Eval accuracy: 0.826248

2022-06-02 01:34:37,190 - Model - INFO - Best mIoU: 0.521394

Comparison with the original author's results:

2021-03-23 12:47:10,783 - Model - INFO - Eval mean loss: 0.939576

2021-03-23 12:47:10,783 - Model - INFO - Eval accuracy: 0.823115

2021-03-23 12:47:10,783 - Model - INFO - Best mIoU: 0.526403
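As a sanity check on the log, the reported "eval point avg class IoU" is consistent with the plain mean of the 13 per-class IoU values printed for epoch 8. The sketch below recomputes it from the rounded values in the log, so it agrees only to about three decimal places; the two Best mIoU figures are copied from the logs above.

```python
# Per-class IoU values copied from the epoch-8 log (rounded to 3 decimals).
class_iou = {
    "ceiling": 0.905, "floor": 0.974, "wall": 0.737, "beam": 0.000,
    "column": 0.053, "window": 0.571, "door": 0.128, "table": 0.673,
    "chair": 0.714, "sofa": 0.423, "bookcase": 0.620, "board": 0.525,
    "clutter": 0.427,
}


def mean_iou(iou_by_class):
    """Unweighted mean of the per-class IoU values."""
    return sum(iou_by_class.values()) / len(iou_by_class)


if __name__ == "__main__":
    print(f"mean IoU from log values: {mean_iou(class_iou):.4f}")
    # Best mIoU of the original author's run minus the best of this run.
    print(f"gap to original author: {0.526403 - 0.521394:.6f}")
```

The beam and column classes pull the average down the most, which is worth keeping in mind when comparing runs.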

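---------------------------------------------------------------Update, November 2022-------------------------------------------------------------------------------------

Recently I have been trying to train sem_seg from PointNet++ on my own dataset, which I structured like the S3DIS dataset. After launching, however, the program hangs at this point. So far I have tried reducing batch_size to 8 and 4, with no effect. Frustrating.

After four or five hours, the elapsed time shown at the lower right has not changed and the progress is still 0%.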
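One way to narrow down a hang like this is to time a single sample fetch in the main process, outside the DataLoader, to see whether the dataset itself is stalling. This is only a diagnostic sketch under the assumption that the dataset supports indexing; time_first_item is a hypothetical helper, and the list below stands in for the real dataset object.

```python
import time


def time_first_item(dataset, index=0):
    """Fetch dataset[index] once and return (item, seconds elapsed)."""
    start = time.perf_counter()
    item = dataset[index]
    return item, time.perf_counter() - start


if __name__ == "__main__":
    fake_dataset = [("points", "labels")]  # placeholder for the real dataset
    item, seconds = time_first_item(fake_dataset)
    print(f"first item fetched in {seconds:.4f} s")
```

If this call never returns on the real dataset, that would suggest the problem lies in the sample-generation code rather than in batch_size.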