文本分类1-统计特征(含tfidf) +lgb

目录

一、文本分类

1、导包

2、数据读取+预处理

3、导入英文停用词

4、构建部分统计特征

5、文本预处理

6、划分训练、测试集

7、构建tf-idf特征

8、建模函数

9、特征分组+lgb模型构建

二、划重点

少走10年弯路

一、文本分类

1、导包

import re

import os

from sqlalchemy import create_engine

import pandas as pd

import numpy as np

import warnings

warnings.filterwarnings('ignore')

import sklearn

from sklearn.model_selection import train_test_split

from sklearn.metrics import roc_curve,roc_auc_score

import xgboost as xgb

from xgboost.sklearn import XGBClassifier

import lightgbm as lgb

import matplotlib.pyplot as plt

import gc2、数据读取+预处理

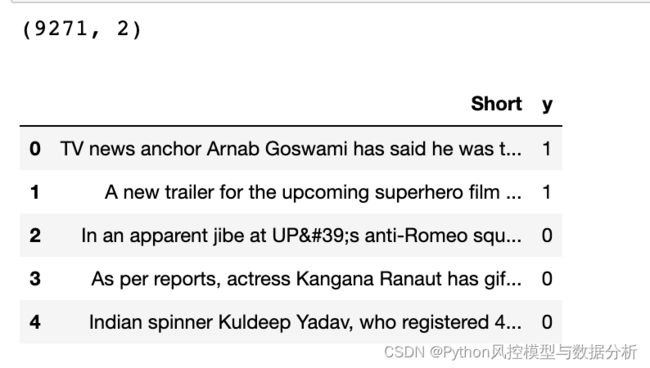

使用文本多分类数据,本文仅使用YouTube、India Today两类文章做二分类

data=pd.read_excel('Inshorts Cleaned Data.xlsx')

def data_preprocess(data):

df=data.drop(['Publish Date','Time ','Headline'],axis=1).copy()

df.rename(columns={'Source ':'Source'},inplace=True)

df=df[df.Source.isin(['YouTube','India Today'])].reset_index(drop=True)

df['y']=np.where(df.Source=='YouTube',1,0)

df=df.drop(['Source'],axis=1)

return df

df=data.pipe(data_preprocess)

print(df.shape)

df.head()3、导入英文停用词

从nltk包倒入停用词,并导包textblob、可用于做英文情感倾向性预测

from nltk.corpus import stopwords

from nltk.tokenize import sent_tokenize

from textblob import TextBlob

stop_english=stopwords.words('english')

stop_spanish=stopwords.words('spanish')

stop_english4、构建部分统计特征

分别统计文本词数、字符数、平均词长度、停用词数量、逗号/句号数量、小写/大写字母数、句子数量,以及情感倾向性评分。

def get_statistical_features(df):

df['word_cnt']=df.Short.apply(lambda x:len(str(x).split()))

df['char_cnt']=df.Short.str.len()

df['avg_word_len']=df.Short.apply(lambda x:np.average([len(word) for word in str(x).split()]))

df['stopword_cnt']=df.Short.apply(lambda x:len([word for word in str(x).split() if word in stop_english]))

df['comma_cnt']=df.Short.str.count(',')

df['period_cnt']=df.Short.str.count('\.')

df['digit_cnt']=df.Short.apply(lambda x:len([word for word in str(x).split() if word.isdigit()]))

df['upper_cnt']=df.Short.apply(lambda x:len([char for char in str(x) if char.isupper()]))

df['sentense_cnt']=df.Short.apply(sent_tokenize).str.len() # 句子数量

df['emotion']=df.Short.apply(lambda x:TextBlob(x).sentiment.polarity) # 情感倾向

df.columns=['sta_'+col if col not in ['Short','y'] else col for col in df.columns]

return df

df=get_statistical_features(df)

df5、文本预处理

处理简写、小写化、去除停用词、词性还原

from nltk.stem import WordNetLemmatizer

from nltk.corpus import stopwords

from nltk.tokenize import sent_tokenize

import nltk

def replace_abbreviation(text):

rep_list=[

("it's", "it is"),

("i'm", "i am"),

("he's", "he is"),

("she's", "she is"),

("we're", "we are"),

("they're", "they are"),

("you're", "you are"),

("that's", "that is"),

("this's", "this is"),

("can't", "can not"),

("don't", "do not"),

("doesn't", "does not"),

("we've", "we have"),

("i've", " i have"),

("isn't", "is not"),

("won't", "will not"),

("hasn't", "has not"),

("wasn't", "was not"),

("weren't", "were not"),

("let's", "let us"),

("didn't", "did not"),

("hadn't", "had not"),

("waht's", "what is"),

("couldn't", "could not"),

("you'll", "you will"),

("i'll", "i will"),

("you've", "you have")

]

result = text.lower()

for word_replace in rep_list:

result=result.replace(word_replace[0],word_replace[1])

# result = result.replace("'s", "")

return result

def drop_char(text):

result=text.lower()

result=re.sub('[^\w\s]',' ',result) # 去掉标点符号、特殊字符

result=re.sub('\s+',' ',result) # 多空格处理为单空格

return result

def stemed_words(text,stop_words,lemma):

word_list = [lemma.lemmatize(word, pos='v') for word in text.split() if word not in stop_words]

result=" ".join(word_list)

return result

def text_preprocess(text_seq):

stop_words = stopwords.words("english")

lemma = WordNetLemmatizer()

result=[]

for text in text_seq:

if pd.isnull(text):

result.append(None)

continue

text=replace_abbreviation(text)

text=drop_char(text)

text=stemed_words(text,stop_words,lemma)

result.append(text)

return result

df['short']=text_preprocess(df.Short)

df[['Short','short']]

6、划分训练、测试集

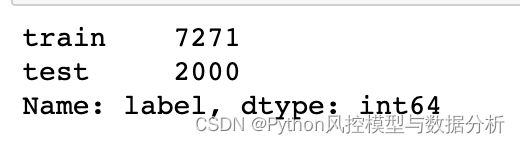

test_index=list(df.sample(2000).index)

df['label']=np.where(df.index.isin(test_index),'test','train')

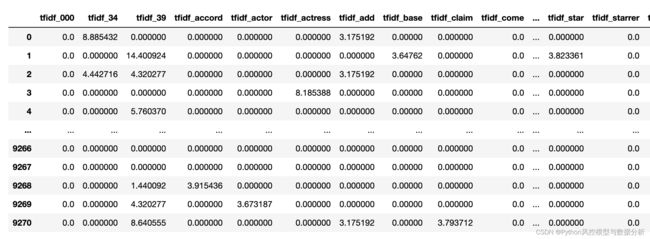

df['label'].value_counts()7、构建tf-idf特征

上一篇仔细写过,细节看这里:tf-idf原理 & TfidfVectorizer参数详解及实战

from sklearn.feature_extraction.text import TfidfVectorizer

def tfidf_init_params():

params={

'analyzer': 'word', # 取值'word'-分词结果为词级、'char'-字符级(结果会出现he is,空格在中间的情况)、'char_wb'-字符级(以单词为边界),默认值为'word'

'binary': False, # boolean类型,设置为True,则所有非零计数都设置为1.(即,tf的值只有0和1,表示出现和不出现)

'decode_error': 'strict',

'dtype': np.float64, # 输出矩阵的数值类型

'encoding': 'utf-8',

'input': 'content', # 取值filename,文本内容所在的文件名;file,序列项必须有一个'read'方法,被调用来获取内存中的字节;content,直接输入文本字符串

'lowercase': True, # boolean类型,计算之前是否将所有字符转换为小写。

'max_df': 1.0, # 词汇表中忽略文档频率高于该值的词;取值在[0,1]之间的小数时表示文档频率的阈值,取值为整数时(>1)表示文档频数的阈值;如果设置了vocabulary,则忽略此参数。

'min_df': 1, # 词汇表中忽略文档频率低于该值的词;取值在[0,1]之间的小数时表示文档频率的阈值,取值为整数时(>1)表示文档频数的阈值;如果设置了vocabulary,则忽略此参数。

'max_features': 50, # int或 None(默认值).设置int值时建立一个词汇表,仅用词频排序的前max_features个词创建语料库;如果设置了vocabulary,则忽略此参数。

'ngram_range': (1, 1), # 要提取的n-grams中n值范围的下限和上限,min_n <= n <= max_n。

'norm': None, # 输出结果是否标准化/归一化。l2:向量元素的平方和为1,当应用l2范数时,两个向量之间的余弦相似度是它们的点积;l1:向量元素的绝对值之和为1

'preprocessor': None, # 覆盖预处理(字符串转换)阶段,同时保留标记化和 n-gram 生成步骤。仅适用于analyzer不可调用的情况。

'smooth_idf': True, # 在文档频率上加1来平滑 idf ,避免分母为0

'stop_words': 'english', # 仅适用于analyzer='word'。取值english,使用内置的英语停用词表;list,自行设置停停用词列表;默认值None,不会处理停用词

'strip_accents': None,

'sublinear_tf': False, # 应用次线性 tf 缩放,即将 tf 替换为 1 + log(tf)

'token_pattern': '(?u)\\b\\w\\w+\\b', # 分词方式、正则表达式,默认筛选长度>=2的字母和数字混合字符(标点符号被当作分隔符)。仅在analyzer='word'时使用。

'tokenizer': None, # 覆盖字符串标记化步骤,同时保留预处理和 n-gram 生成步骤。仅适用于analyzer='word'

'use_idf': True, # 是否计算idf,布尔值,False时idf=1。

'vocabulary': None, # 自行设置词汇表(可设置字典),如果没有给出,则从输入文件/文本中确定词汇表

}

return params

def TfidfVectorizer_train(train_data,params):

tv = TfidfVectorizer(**params)

# 输入训练集矩阵,每行表示一个文本

# 训练,构建词汇表以及词项idf值,并将输入文本列表转成VSM矩阵形式

tv_fit = tv.fit_transform(train_data)

return tv

tfidf_model=TfidfVectorizer_train(df[df.label=='train'].short,tfidf_init_params())

df_tfidf=pd.DataFrame(tfidf_model.transform(df.short).toarray(),columns=tfidf_model.get_feature_names()).add_prefix('tfidf_')

df[df_tfidf.columns]=df_tfidf.copy()

df_tfidf

8、建模函数

def init_params(model_select='lgb'):

params_xgb={

'objective':'binary:logistic',

'eval_metric':'auc',

# 'silent':0,

'nthread':4,

'n_estimators':500,

'eta':0.02,

# 'num_leaves':10,

'max_depth':3,

'min_child_weight':500,

'scale_pos_weight':1,

'gamma':10,

'reg_alpha':2,

'reg_lambda':2,

'subsample':0.8,

'colsample_bytree':0.8,

'seed':123

}

params_lgb={

'boosting_type': 'gbdt',

'objective': 'binary',

'metric':'auc',

'n_jobs': 4,

'n_estimators':params_xgb.get('n_estimators',100),

'learning_rate': params_xgb.get('eta',0.1),

'max_depth':params_xgb.get('max_depth',4),

'num_leaves': params_xgb.get('num_leaves',20),

'max_bin':255,

'subsample_for_bin':100000, # 构建直方图的样本量

'min_split_gain':params_xgb.get('gamma',10),

'min_child_samples':params_xgb.get('min_child_weight',300),

'colsample_bytree': params_xgb.get('colsample_bytree',0.8),

'subsample': params_xgb.get('subsample',0.8),

'subsample_freq': 1, # 每 k 次迭代执行bagging

'feature_fraction_seed':2,

'bagging_seed': 1,

'reg_alpha':params_xgb.get('reg_alpha',10),

'reg_lambda':params_xgb.get('reg_lambda',10),

'scale_pos_weight':params_xgb.get('scale_pos_weight',1), # 等价于is_unbalance=False

'silent':True,

'random_state':1,

'verbose':-1, # 控制模型训练过程的输出信息,-1为不输出信息

}

if model_select=='xgb':

return params_xgb

elif model_select=='lgb':

return params_lgb

def ks_auc_value(y_value,df,model):

y_pred=model.predict_proba(df)[:,1]

fpr,tpr,thresholds= roc_curve(list(y_value),list(y_pred))

ks=max(tpr-fpr)

auc= roc_auc_score(list(y_value),list(y_pred))

return ks,auc

def model_train_sklearn(model_label,train,y_name,model_var,model_select='lgb',params=None):

if params is None:

params=init_params(model_select)

x_train,x_test, y_train, y_test =train_test_split(train[model_var],train[y_name],test_size=0.2, random_state=123)

# bst=xgb.cv(param,train_xgb,num_boost_round=500,nfold=5,metrics={'auc'}

# ,callbacks=[xgb.callback.print_evaluation(show_stdv=False),xgb.callback.early_stop(20)])

# num_round=bst.shape[0]

if model_select=='xgb':

model=XGBClassifier(**params)

elif model_select=='lgb':

model=lgb.LGBMClassifier(**params)

model.fit(x_train,y_train,eval_set=[(x_train, y_train),(x_test, y_test)],eval_metric='auc',verbose=True) # 调参时设verbose=False

train_ks,train_auc=ks_auc_value(y_train,x_train,model)

test_ks,test_auc=ks_auc_value(y_test,x_test,model)

# model_sklearn=model.fit(train[model_var],train[y_name]) # 全集数据训练

all_ks,all_auc=ks_auc_value(train[y_name],train[model_var],model)

dic={

'model_label':model_label,

'train_good':(y_train.count()-y_train.sum()),'train_bad':y_train.sum(),

'test_good':(y_test.count()-y_test.sum()),'test_bad':y_test.sum(),

'all_good':train[train[y_name]==0].shape[0],'all_bad':train[train[y_name]==1].shape[0],

'train_ks':train_ks,'train_auc':train_auc,

'test_ks':test_ks,'test_auc':test_auc,

'all_ks':all_ks,'all_auc':all_auc,

}

return dic,model

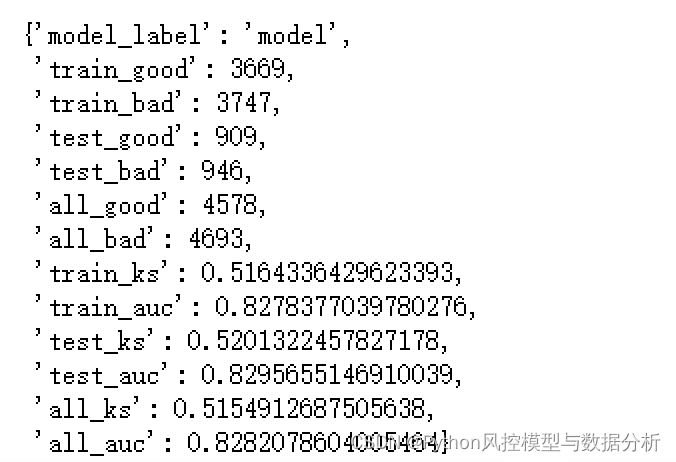

9、特征分组+lgb模型构建

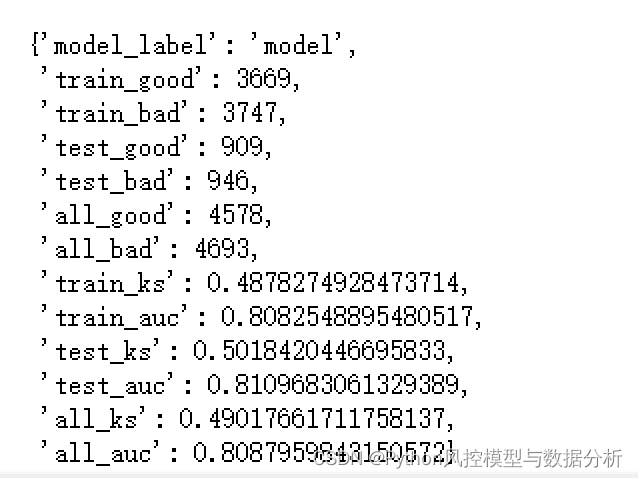

从整体结果可以看到在当前数据集中,因为大量的关键词和类别相扣,所以一般的统计特征效果较差,主要还是tf-idf的关键词特征效果较好。

(1)统计特征+tfidf特征效果

stat_col=[col for col in df.columns if col.startswith('sta')]

tfidf_col=[col for col in df.columns if col.startswith('tfidf')]

result_dic,model=model_train_sklearn('model',df,'y',stat_col+tfidf_col,model_select='lgb')

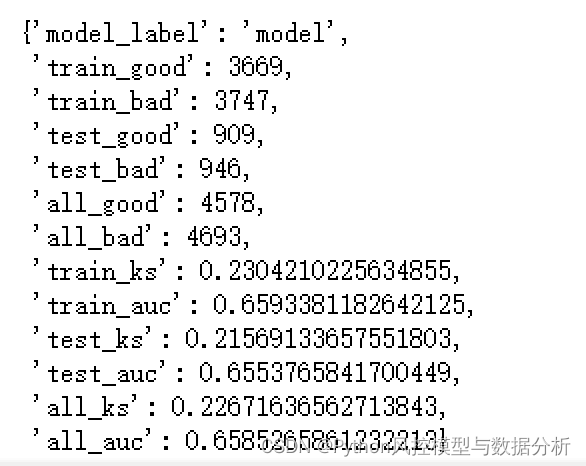

result_dic(2)统计特征效果

result_dic,model=model_train_sklearn('model',df,'y',stat_col,model_select='lgb') # 基础统计特征

result_dic(3)tfidf特征效果

result_dic,model=model_train_sklearn('model',df,'y',tfidf_col,model_select='lgb') # tfidf特征

result_dic二、划重点

少走10年弯路

关注公众号Python风控模型与数据分析,回复 文本分类1 获取本篇数据及代码

还有更多理论、代码分享等你来拿