[Hadoop] Hadoop 3 Basics

These notes are compiled from the 尚硅谷 (Atguigu) video course on Bilibili: https://www.bilibili.com/video/BV1Qp4y1n7EN?t=7&p=39

文章目录

- 〇、要点

- 一、概念

-

- 1.1 Hadoop是什么

- 1.2 Hadoop发展历史

- 1.3 Hadoop的三大发行版本

- 1.4 Hadoop的优势

- 1.5 Hadoop的组成

-

- 1.5.1 HDFS架构概述

- 1.5.2 Yarn架构概述

- 1.5.3 MapReduce架构概述

- 1.5.4 HDFS、Yarn、MapReduce三者的关系

- 1.6 大数据技术生态体系

- 1.7 推荐系统案例

- 二、环境准备

-

- 2.1 模板虚拟机准备

-

- 2.1.1 硬件

- 2.1.2 操作系统

- 2.1.3 IP和主机名

-

- 2.1.3.1 虚拟机VMnet8

- 2.1.3.2 Win10主机VMnet8

- 2.1.3.3 Linux配置

- 2.2 克隆

- 2.3 安装JDK

- 2.4 安装Hadoop

- 三、Hadoop生产集群搭建

-

- 3.1 本地模式

- 3.2 完全分布式集群

-

- 3.2.1 编写集群分发脚本xsync

-

- 3.2.1.1 scp 安全拷贝:

- 3.2.1.2 rsync 远程同步

- 3.2.1.3 xsync 集群分发脚本(放在了```~/bin```目录下)

- 3.2.2 SSH免密登录

- 3.2.3 集群配置

-

- 3.2.3.1 配置位置:

- 3.2.3.2 配置核心文件

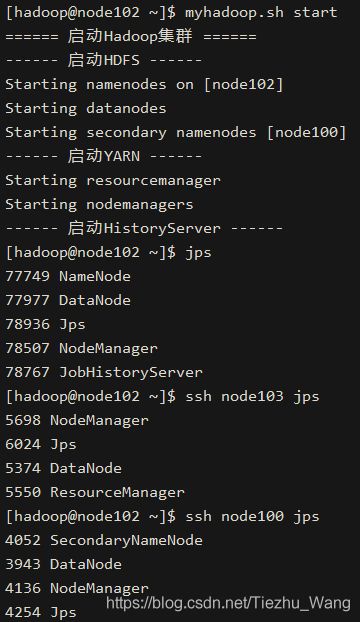

- 3.2.4 启动集群并测试

- 3.2.5 配置历史服务器

- 3.2.6 配置日志的聚集

- 3.2.7 各个服务组件的逐一启动和停止

- 3.2.8 编写Hadoop集群常用脚本

-

- 3.2.8.1 Hadoop集群启停脚本(包括HDFS、YARN、HistoryServer):myhadoop.sh

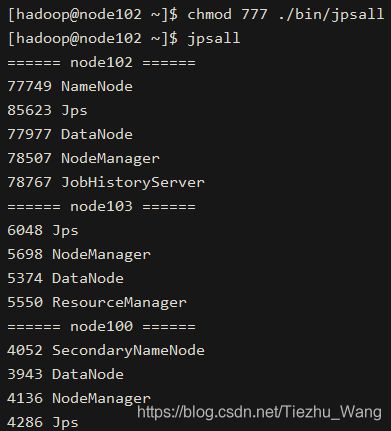

- 3.2.8.2 查看三台服务器的Java进程脚本:jpsall

- 3.2.9 常用端口号

- 3.2.10 常用配置文件

- 3.2.11 集群时间同步

- 四、错误和解决方案

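0. Key Points

- 1.5.1 HDFS architecture overview
- 1.5.2 YARN architecture overview
- 1.5.3 MapReduce architecture overview
- 2.1.1 Linux: template VM hardware configuration
- 2.1.2 Linux: template VM operating system configuration
- 2.1.3.1 VMware: virtual machine IP configuration
- 2.1.3.2 Win10: VMnet8 IP configuration
- 2.1.3.3 Linux: changing the IP
- 2.1.3.3 Linux: changing the hostname
- 2.1.3.3 Linux: changing the hostname mappings
- 2.1.3.3 Linux: creating a new user and granting permissions
- 2.3 Linux: installing the JDK
- 2.4 Hadoop: installation
- 3.2 Hadoop: building a fully distributed cluster
- 3.2.1.1 Linux: scp secure copy
- 3.2.1.2 Linux: rsync remote sync
- 3.2.1.3 Cluster distribution script
- 3.2.2 Linux: passwordless SSH login
- 3.2.3.1 Hadoop: where each cluster role runs
- 3.2.3.2 Hadoop: configuring the core files
- 3.2.3.2 Hadoop: setting the NameNode address
- 3.2.3.2 Hadoop: setting the Hadoop data storage directory
- 3.2.3.2 Hadoop: configuring the static user for the HDFS web UI
- 3.2.3.2 Hadoop: NameNode web UI address
- 3.2.3.2 Hadoop: SecondaryNameNode web UI address
- 3.2.3.2 Hadoop: setting the ResourceManager address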

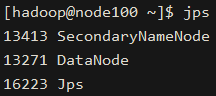

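- 3.2.4 Accessing the HDFS web UI in a browser
- 3.2.4 Hadoop: what to do when the cluster crashes
- 3.2.5 Hadoop: configuring the history server
- 3.2.5 Hadoop: setting the history server address
- 3.2.5 Hadoop: setting the history server web UI address
- 3.2.6 Hadoop: configuring log aggregation
- 3.2.6 Hadoop: enabling the log aggregation feature
- 3.2.6 Hadoop: setting the log aggregation server address
- 3.2.6 Hadoop: setting the log retention time
- 3.2.7 Hadoop: starting and stopping each component separately
- 3.2.8 Hadoop: common cluster scripts
- 3.2.9 Hadoop: common port numbers
- 3.2.10 Hadoop: common configuration files
- Problem 1: `错误: 找不到或无法加载主类 org.apache.hadoop.mapreduce.v2.app.MRAppMaster` (Error: Could not find or load main class)
- Problem 2: hostname mapping fails on Win10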

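1. Concepts

1.1 What Is Hadoop

Hadoop is a distributed-system infrastructure from Apache. It mainly addresses the storage and the analytical processing of massive data sets.

1.2 History of Hadoop

1.3 The Three Major Hadoop Distributions

1.4 Advantages of Hadoop

- High reliability: Hadoop keeps multiple replicas of the data under the hood, so the failure of a compute element or a storage unit does not cause data loss
- High scalability: task data is distributed across the cluster, which can easily be scaled to thousands of nodes (nodes can be added and removed dynamically)
- High efficiency: following the MapReduce model, Hadoop works in parallel to speed up task processing
- High fault tolerance: failed tasks are automatically reassigned

1.5 Components of Hadoop

- Hadoop 1.x: Common (utilities), HDFS (data storage), MapReduce (computation + resource scheduling)
- Hadoop 2.x: Common, HDFS, MapReduce (computation), YARN (resource scheduling)
- Hadoop 3.x: no change in composition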

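1.5.1 HDFS Architecture Overview

HDFS stands for Hadoop Distributed File System.

- NameNode (nn): records where every file block is stored. It holds the file metadata (file name, directory structure, attributes) together with each file's block list and the DataNodes that hold each block
- DataNode (dn): stores the actual data (every server is a DataNode). It keeps the block data, and the checksums of that data, in the local file system
- SecondaryNameNode (2nn): assists the NameNode. At regular intervals it takes a backup of the NameNode metadata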

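1.5.2 YARN Architecture Overview

YARN stands for Yet Another Resource Negotiator; it is Hadoop's resource manager.

- ResourceManager (RM): manages the resources of the whole cluster (memory, CPU, etc.)
- NodeManager (NM): manages the resources of a single node
- ApplicationMaster (AM): manages the resources of a single running job
- Container: comparable to an independent server; it encapsulates the resources a task needs to run (memory, CPU, disk, network, etc.)

There can be multiple clients; multiple ApplicationMasters can run on the cluster; each NodeManager can host multiple Containers.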

1.5.3 MapReduce Architecture Overview

- Map: processes the input data in parallel
- Reduce: aggregates the Map results
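The Map and Reduce roles can be imitated on a single machine with ordinary shell tools. The sketch below is only an analogy, not Hadoop code: `map_words` and `reduce_counts` are hypothetical names, and the sample line is the word list used later in these notes for the local-mode test.

```shell
#!/bin/bash
# Map step (analogy): split the input into one word per line.
map_words() {
  tr -s '[:space:]' '\n' | grep -v '^$'
}

# Reduce step (analogy): group identical words and count each group.
reduce_counts() {
  sort | uniq -c | awk '{ print $2 "\t" $1 }'
}

# Sample input taken from the word.txt example in section 3.1.
echo "hadoop flink kafka flink flume spark hadoop hadoop" | map_words | reduce_counts
```

In a real job the Map step runs on many input splits at once and the framework does the grouping between the two steps; here `sort` stands in for that grouping.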

1.5.4 How HDFS, YARN, and MapReduce Relate

1.6 The Big Data Technology Ecosystem

1.7 Recommender System Case Study

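2. Environment Preparation

2.1 Preparing the Template Virtual Machine

2.1.1 Hardware

- Custom installation
- Linux CentOS 7
- 50 GB hard disk

2.1.2 Operating System

- Set the time zone
- Minimal install (select the first three add-on options)
- Installation destination:
  - /boot: 1G (change the file system to ext4)
  - swap: 4G
  - /: 45G
- KDUMP: disable
- root password: 123456 (for convenience)

2.1.3 IP and Hostname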

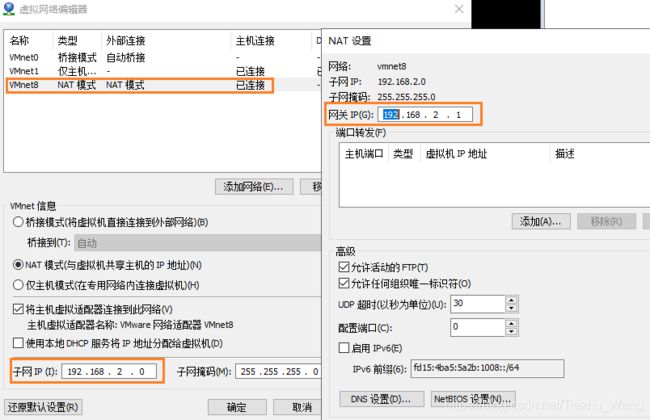

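2.1.3.1 VMware VMnet8

2.1.3.2 VMnet8 on the Win10 Host

2.1.3.3 Linux Configuration

- Change the IP
  - See https://blog.csdn.net/Tiezhu_Wang/article/details/116109056
- Change the hostname
  - `vim /etc/hostname` and change it to node100
- Change the hostname mappings
  - `vim /etc/hosts` and add:

        192.168.2.100 node100
        192.168.2.101 node101
        192.168.2.102 node102
        192.168.2.103 node103
        …

- Reboot
  - `reboot`
  - `ifconfig` or `ip addr` to check the IP
  - `hostname` to check the hostname
- Install software
  - `yum install -y epel-release`
  - `yum install -y net-tools`
  - `yum install -y vim`
- Turn off the firewall
  - `systemctl stop firewalld`
  - `systemctl disable firewalld.service`
- Create a new user and grant permissions
  - `useradd hadoop`
  - `passwd hadoop`, then set the password
  - `vim /etc/sudoers` and add the following after the `%wheel` line: `hadoop ALL=(ALL) NOPASSWD:ALL`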
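The hostname mappings added to `/etc/hosts` above follow a regular pattern (the last octet of the IP matches the number in the host name), so they can be generated instead of typed. This is a minimal sketch under that assumption; `gen_hosts` is a hypothetical helper, not part of the original notes.

```shell
#!/bin/bash
# Print "IP hostname" lines for a consecutive range of nodes.
# Arguments: network prefix, first host number, last host number.
gen_hosts() {
  local prefix=$1 first=$2 last=$3 i
  for ((i = first; i <= last; i++)); do
    echo "${prefix}.${i} node${i}"
  done
}

# Prints the four mappings listed in these notes.
gen_hosts 192.168.2 100 103
```

To append the lines rather than just print them, the output could be piped to `sudo tee -a /etc/hosts`.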

2.2 Cloning

- Create a full clone
- Change the IP
- Change the hostname

2.3 Installing the JDK

(Installed on node102 here.)

- Extract the archive: `sudo tar -zxvf jdk-8u271-linux-x64.tar.gz -C /opt/module`
- Set the environment variables
  - Add a .sh file with `sudo vim /etc/profile.d/my_env.sh`:

        #JAVA_HOME
        export JAVA_HOME=/opt/module/jdk1.8.0_271
        export PATH=$PATH:$JAVA_HOME/bin

  - Run `source /etc/profile` to apply the settings
  - Run `java -version` to check the Java version

2.4 Installing Hadoop

(Installed on node102 here.)

- Extract the archive: `sudo tar -zxvf hadoop-3.2.1.tar.gz -C /opt/module/`
- Set the environment variables
  - Append to the same file with `sudo vim /etc/profile.d/my_env.sh`:

        #HADOOP_HOME
        export HADOOP_HOME=/opt/module/hadoop-3.2.1
        export PATH=$PATH:$HADOOP_HOME/bin
        export PATH=$PATH:$HADOOP_HOME/sbin

  - Run `source /etc/profile` to apply the settings
  - Run `hadoop version` to check the Hadoop version

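3. Building a Hadoop Production Cluster

- Modes, by where the data is stored
  - Local: data is stored on the local Linux file system
  - Pseudo-distributed: data is stored in HDFS
  - Fully distributed: data is stored in HDFS, with multiple servers working together

3.1 Local Mode

- Create a file with `vim /opt/module/hadoop-3.2.1/wcinput/word.txt` and write the following content into it: `hadoop flink kafka flink flume spark hadoop hadoop`
- Run the official example (from `/opt/module/hadoop-3.2.1`): `hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount wcinput/ ./wcoutput`
- Check the output in `wcoutput/`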

3.2 Fully Distributed Cluster

3.2.1 Writing the Cluster Distribution Script xsync

3.2.1.1 scp: Secure Copy

| scp | -r | $pdir/$fname | $user@$host:$pdir/$fname |
|---|---|---|---|
| Command | Recursive | Path/name of the file to copy | Destination user@host:destination path/name |

- Push: `[hadoop@node102 module]$ scp -r jdk1.8.0_271/ hadoop@node103:/opt/module/`
- Pull: `[hadoop@node103 opt]$ scp -r hadoop@node102:/opt/module/hadoop-3.2.1 ./module/`
- Relay (copy between two other hosts): `[hadoop@node103 opt]$ scp -r hadoop@node102:/opt/module/* hadoop@node104:/opt/module/`

3.2.1.2 rsync: Remote Sync

| rsync | -av | $pdir/$fname | $user@$host:$pdir/$fname |
|---|---|---|---|
| Command | Archive copy / show the copy progress | Path/name of the file to copy | Destination user@host:destination path/name |

- Sync: `[hadoop@node102 ~]$ rsync -av /opt/module/hadoop-3.2.1/ hadoop@node103:/opt/module/hadoop-3.2.1/`

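3.2.1.3 xsync: Cluster Distribution Script (placed in `~/bin`)

    #!/bin/bash
    # 1. Check the number of arguments
    if [ $# -lt 1 ]
    then
        echo "Not Enough Arguments!"
        exit 1
    fi
    # 2. Loop over every machine in the cluster
    for host in node100 node102 node103
    do
        echo "==== $host ===="
        # 3. Loop over every file or directory and send it
        for file in "$@"
        do
            # 4. Check that the file exists
            if [ -e "$file" ]
            then
                # 5. Get the parent directory (symlinks resolved)
                pdir=$(cd -P "$(dirname "$file")"; pwd)
                # 6. Get the name of the file or directory itself
                fname=$(basename "$file")
                ssh "$host" "mkdir -p $pdir"
                rsync -av "$pdir/$fname" "$host:$pdir"
            else
                echo "$file does not exist!"
            fi
        done
    done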

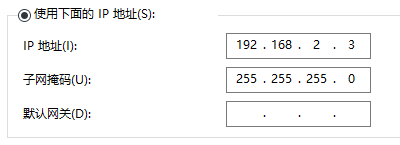

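- Once the script is written, run it directly (this syncs the bin directory): `xsync bin/`
- Sync the environment variables (sudo is required): `sudo ~/bin/xsync /etc/profile.d/my_env.sh`. Switch to node103 to confirm that the sync succeeded.
- After syncing, remember to run `source /etc/profile` on the target machines.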
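Steps 5 and 6 of the xsync script turn whatever path was passed in into a physical parent directory plus a base name, so the same absolute path can be recreated on the remote host. The sketch below isolates that step in a throwaway directory; `resolve_path` and the directory names `real` and `link` are made up for the demonstration.

```shell
#!/bin/bash
# Resolve a path the way xsync does: physical parent directory + base name.
resolve_path() {
  local file=$1 pdir fname
  pdir=$(cd -P "$(dirname "$file")" && pwd)   # -P follows symlinks to the real directory
  fname=$(basename "$file")
  echo "$pdir/$fname"
}

# Demo: "link" is a symlink to "real", so the resolved path goes through "real".
tmp=$(mktemp -d)
mkdir "$tmp/real"
touch "$tmp/real/my_env.sh"
ln -s "$tmp/real" "$tmp/link"
resolve_path "$tmp/link/my_env.sh"
rm -rf "$tmp"
```

Resolving the symlink first means the remote `mkdir -p` creates the real directory rather than a path that only exists as a link on the source machine.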

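3.2.2 Passwordless SSH Login

- See https://blog.csdn.net/Tiezhu_Wang/article/details/113771406
- Or (on node102):
  - `ls -al` to view hidden files (this step is optional)
  - `ssh-keygen -t rsa` to generate the key pair
  - `ssh-copy-id node103` to copy node102's public key to node103

Once this is done, node102 can log in to node103 without a password. Repeat the same steps on node103 to reach node102 without a password; syncing then no longer asks for a password either. (The same applies to node100.)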

3.2.3 Cluster Configuration

3.2.3.1 Where Each Role Runs

| | node102 | node103 | node100 |
|---|---|---|---|
| HDFS | NameNode, DataNode | DataNode | SecondaryNameNode, DataNode |
| YARN | NodeManager | ResourceManager, NodeManager | NodeManager |

3.2.3.2 Configuring the Core Files

Path: `/opt/module/hadoop-3.2.1/etc/hadoop`

- core-site.xml

      <configuration>
          <!-- NameNode address -->
          <property>
              <name>fs.defaultFS</name>
              <value>hdfs://node102:8020</value>
          </property>
          <!-- Hadoop data storage directory -->
          <property>
              <name>hadoop.tmp.dir</name>
              <value>/opt/module/hadoop-3.2.1/data</value>
          </property>
          <!-- Static user for the HDFS web UI -->
          <property>
              <name>hadoop.http.staticuser.user</name>
              <value>hadoop</value>
          </property>
      </configuration>

- hdfs-site.xml

      <configuration>
          <!-- NameNode web UI address -->
          <property>
              <name>dfs.namenode.http-address</name>
              <value>node102:9870</value>
          </property>
          <!-- SecondaryNameNode web UI address -->
          <property>
              <name>dfs.namenode.secondary.http-address</name>
              <value>node100:9868</value>
          </property>
      </configuration>

- yarn-site.xml

      <configuration>
          <!-- Use the MapReduce shuffle service -->
          <property>
              <name>yarn.nodemanager.aux-services</name>
              <value>mapreduce_shuffle</value>
          </property>
          <!-- ResourceManager address -->
          <property>
              <name>yarn.resourcemanager.hostname</name>
              <value>node103</value>
          </property>
      </configuration>

- mapred-site.xml

      <configuration>
          <!-- Run MapReduce on YARN -->
          <property>
              <name>mapreduce.framework.name</name>
              <value>yarn</value>
          </property>
      </configuration>

- Distribute the files after configuring them: `[hadoop@node102 etc]$ xsync /opt/module/hadoop-3.2.1/etc/hadoop/`

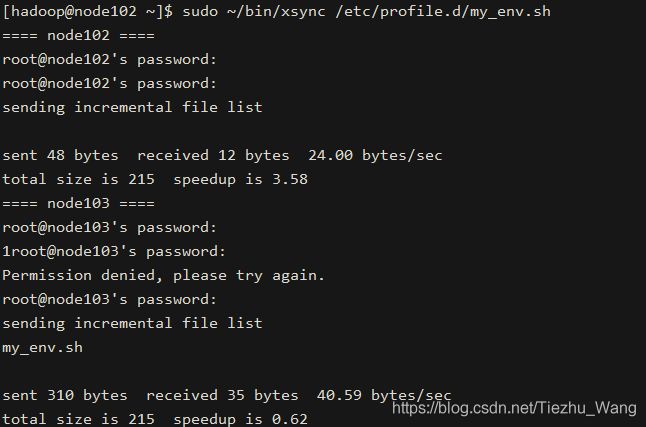

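3.2.4 Starting and Testing the Cluster

- Configure workers: open the file with `vim /opt/module/hadoop-3.2.1/etc/hadoop/workers` and list one host per line:

      node102
      node103
      node100

  Then distribute it: `xsync workers`
- Start the cluster
  - Start HDFS (on the machine that runs the NameNode, node102 here): `start-dfs.sh`. Before the very first start, format the NameNode with `hdfs namenode -format`
  - Start YARN (on the machine that runs the ResourceManager, node103 here): `start-yarn.sh`
- Basic test

      [hadoop@node102 ~]$ hdfs dfs -mkdir /wcinput
      [hadoop@node102 ~]$ hdfs dfs -ls /
      Found 1 items
      drwxr-xr-x - hadoop supergroup 0 2021-04-26 21:18 /wcinput
      [hadoop@node102 ~]$ hdfs dfs -put input/word.txt /wcinput
      2021-04-26 21:20:47,395 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
      [hadoop@node102 ~]$ hdfs dfs -cat /wcinput/*
      2021-04-26 21:21:12,593 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
      hadoop spark flink hadoop flink hadoop flume

- What to do when the cluster crashes
  - Stop all services
  - Delete the `data` and `logs` directories on every node (this removes the historical data)
  - Format the NameNode: `hdfs namenode -format`
  - Start the cluster again; it can then be used normally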

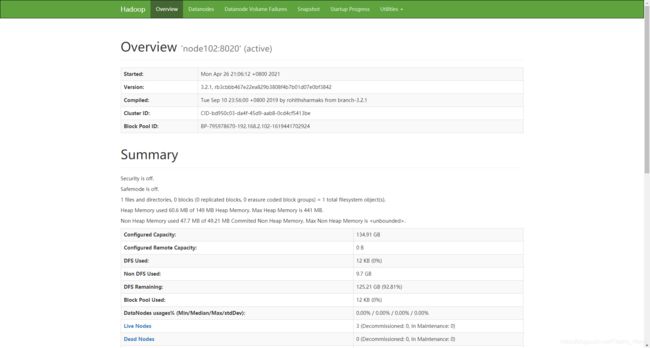

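3.2.5 Configuring the History Server

The history server makes it possible to review how past jobs ran.

- Add the following to `mapred-site.xml`:

      <!-- History server address -->
      <property>
          <name>mapreduce.jobhistory.address</name>
          <value>node102:10020</value>
      </property>
      <!-- History server web UI address -->
      <property>
          <name>mapreduce.jobhistory.webapp.address</name>
          <value>node102:19888</value>
      </property>

- Distribute the file after configuring it: `xsync mapred-site.xml`
- After starting HDFS (node102) and YARN (node103), start the history server (node102): `mapred --daemon start historyserver`
- Run the word count example: `hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount /wcinput /wcoutput`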

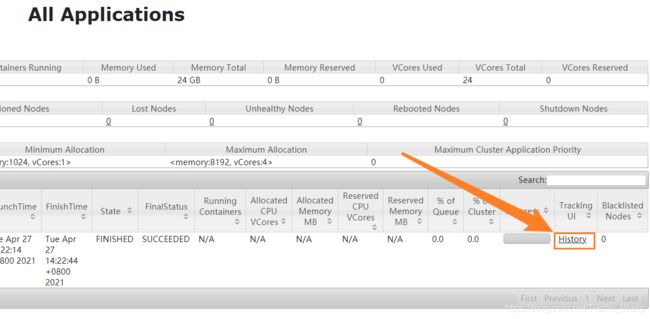

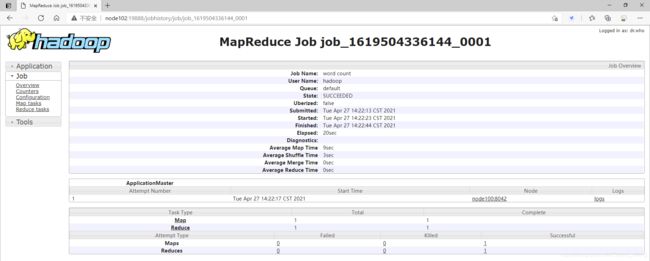

Open `192.168.2.103:8088` in a browser.

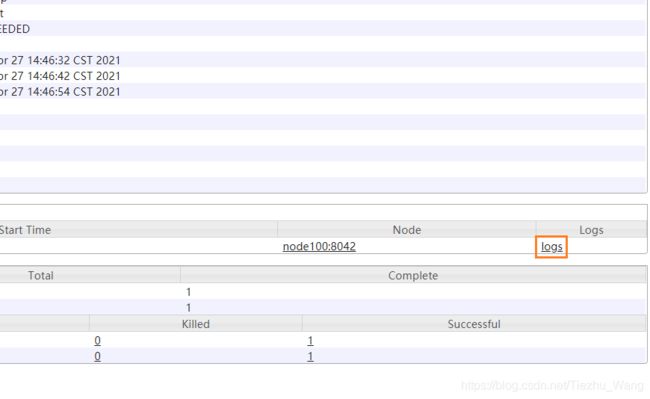

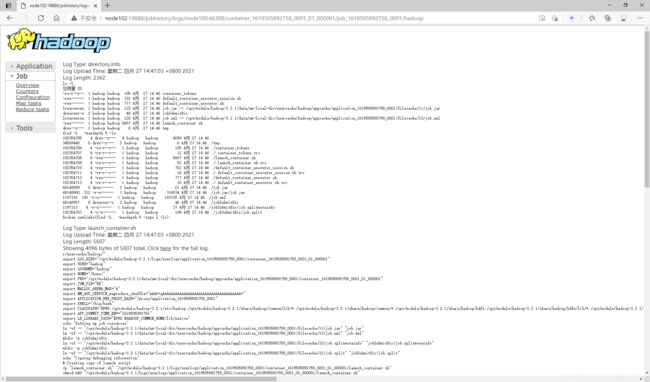

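3.2.6 Configuring Log Aggregation

With log aggregation, the run logs of an application are uploaded to HDFS once the application has finished.

- Add the following to `yarn-site.xml`:

      <!-- Enable log aggregation -->
      <property>
          <name>yarn.log-aggregation-enable</name>
          <value>true</value>
      </property>
      <!-- Log server URL -->
      <property>
          <name>yarn.log.server.url</name>
          <value>http://node102:19888/jobhistory/logs</value>
      </property>
      <!-- Keep logs for 7 days -->
      <property>
          <name>yarn.log-aggregation.retain-seconds</name>
          <value>604800</value>
      </property>

- Distribute the file after modifying it
- Stop the history server: `mapred --daemon stop historyserver`
- Restart YARN: stop it first, then start it
- Start the history server
- Run a job again; its logs can then be viewed from `node103:8088` by following History, then logs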
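The retention value in `yarn-site.xml` is given in seconds, and 604800 corresponds to 7 days. A small helper makes it easy to derive the value for another period; `days_to_seconds` is a hypothetical name used only for this sketch.

```shell
#!/bin/bash
# Convert a retention period in days to the number of seconds expected by the setting.
days_to_seconds() {
  echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7   # prints 604800, the value used in these notes
```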

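3.2.7 Starting and Stopping Each Service Component Individually

- HDFS components (use `stop` in place of `start` to stop a component)
  - `hdfs --daemon start namenode`
  - `hdfs --daemon start datanode`
  - `hdfs --daemon start secondarynamenode`
- YARN components (use `stop` in place of `start` to stop a component)
  - `yarn --daemon start resourcemanager`
  - `yarn --daemon start nodemanager`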

3.2.8 Writing Common Hadoop Cluster Scripts

3.2.8.1 Hadoop Cluster Start/Stop Script (HDFS, YARN, HistoryServer): myhadoop.sh

    #!/bin/bash
    # Require exactly one action argument: start or stop
    if [ $# -lt 1 ]
    then
        echo "No Args Input..."
        exit 1
    fi
    case $1 in
    "start")
        echo "====== Starting the Hadoop cluster ======"
        echo "------ Starting HDFS ------"
        ssh node102 "/opt/module/hadoop-3.2.1/sbin/start-dfs.sh"
        echo "------ Starting YARN ------"
        ssh node103 "/opt/module/hadoop-3.2.1/sbin/start-yarn.sh"
        echo "------ Starting HistoryServer ------"
        ssh node102 "/opt/module/hadoop-3.2.1/bin/mapred --daemon start historyserver"
    ;;
    "stop")
        # Stop in the reverse order of startup
        echo "====== Stopping the Hadoop cluster ======"
        echo "------ Stopping HistoryServer ------"
        ssh node102 "/opt/module/hadoop-3.2.1/bin/mapred --daemon stop historyserver"
        echo "------ Stopping YARN ------"
        ssh node103 "/opt/module/hadoop-3.2.1/sbin/stop-yarn.sh"
        echo "------ Stopping HDFS ------"
        ssh node102 "/opt/module/hadoop-3.2.1/sbin/stop-dfs.sh"
    ;;
    *)
        echo "Input Args Error..."
    ;;
    esac

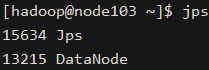

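3.2.8.2 Script for Viewing the Java Processes on All Three Servers: jpsall

    #!/bin/bash
    # Run jps on every node and label the output with the host name
    for host in node102 node103 node100
    do
        echo "====== $host ======"
        ssh "$host" jps
    done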

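3.2.9 Common Port Numbers

- hadoop3.x

| Name | Port |
|---|---|
| HDFS NameNode internal communication port | 8020/9000/9820 |
| HDFS NameNode web UI port for users | 9870 |
| YARN port for viewing job execution | 8088 |
| History server | 19888 |

- hadoop2.x

| Name | Port |
|---|---|
| HDFS NameNode internal communication port | 8020/9000 |
| HDFS NameNode web UI port for users | 50070 |
| YARN port for viewing job execution | 8088 |
| History server | 19888 |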

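3.2.10 Common Configuration Files

- hadoop3.x
  - core-site.xml
  - hdfs-site.xml
  - yarn-site.xml
  - mapred-site.xml
  - workers
- hadoop2.x
  - core-site.xml
  - hdfs-site.xml
  - yarn-site.xml
  - mapred-site.xml
  - slaves

3.2.11 Cluster Time Synchronization

When the servers can reach the Internet, cluster time synchronization does not need to be configured.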

4. Errors and Solutions

- Running the example MR job fails with `错误: 找不到或无法加载主类 org.apache.hadoop.mapreduce.v2.app.MRAppMaster` (Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster)

      [hadoop@node102 input]$ hadoop jar /opt/module/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount /wcinput /wcoutput
      2021-04-27 10:05:19,761 INFO client.RMProxy: Connecting to ResourceManager at node103/192.168.2.103:8032
      2021-04-27 10:05:21,257 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/hadoop/.staging/job_1619488633583_0003
      2021-04-27 10:05:21,388 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
      2021-04-27 10:05:22,103 INFO input.FileInputFormat: Total input files to process : 1
      2021-04-27 10:05:22,156 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
      2021-04-27 10:05:22,373 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
      2021-04-27 10:05:22,537 INFO mapreduce.JobSubmitter: number of splits:1
      2021-04-27 10:05:22,679 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
      2021-04-27 10:05:22,789 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1619488633583_0003
      2021-04-27 10:05:22,789 INFO mapreduce.JobSubmitter: Executing with tokens: []
      2021-04-27 10:05:23,801 INFO conf.Configuration: resource-types.xml not found
      2021-04-27 10:05:23,801 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
      2021-04-27 10:05:24,256 INFO impl.YarnClientImpl: Submitted application application_1619488633583_0003
      2021-04-27 10:05:25,482 INFO mapreduce.Job: The url to track the job: http://node103:8088/proxy/application_1619488633583_0003/
      2021-04-27 10:05:25,483 INFO mapreduce.Job: Running job: job_1619488633583_0003
      2021-04-27 10:05:48,327 INFO mapreduce.Job: Job job_1619488633583_0003 running in uber mode : false
      2021-04-27 10:05:48,328 INFO mapreduce.Job: map 0% reduce 0%
      2021-04-27 10:05:51,838 INFO mapreduce.Job: Job job_1619488633583_0003 failed with state FAILED due to: Application application_1619488633583_0003 failed 2 times due to AM Container for appattempt_1619488633583_0003_000002 exited with exitCode: 1
      Failing this attempt.Diagnostics: [2021-04-27 10:05:47.137]Exception from container-launch.
      Container id: container_1619488633583_0003_02_000001
      Exit code: 1
      [2021-04-27 10:05:47.189]Container exited with a non-zero exit code 1. Error file: prelaunch.err.
      Last 4096 bytes of prelaunch.err :
      Last 4096 bytes of stderr :
      错误: 找不到或无法加载主类 org.apache.hadoop.mapreduce.v2.app.MRAppMaster
      [2021-04-27 10:05:47.190]Container exited with a non-zero exit code 1. Error file: prelaunch.err.
      Last 4096 bytes of prelaunch.err :
      Last 4096 bytes of stderr :
      错误: 找不到或无法加载主类 org.apache.hadoop.mapreduce.v2.app.MRAppMaster
      For more detailed output, check the application tracking page: http://node103:8088/cluster/app/application_1619488633583_0003 Then click on links to logs of each attempt.
      . Failing the application.
      2021-04-27 10:06:26,946 INFO mapreduce.Job: Counters: 0

  - Run `hadoop classpath`
  - Add its output to `yarn-site.xml` as the value of a property named `yarn.application.classpath`:

        <property>
            <name>yarn.application.classpath</name>
            <value>/opt/module/hadoop-3.2.1/etc/hadoop:/opt/module/hadoop-3.2.1/share/hadoop/common/lib/*:/opt/module/hadoop-3.2.1/share/hadoop/common/*:/opt/module/hadoop-3.2.1/share/hadoop/hdfs:/opt/module/hadoop-3.2.1/share/hadoop/hdfs/lib/*:/opt/module/hadoop-3.2.1/share/hadoop/hdfs/*:/opt/module/hadoop-3.2.1/share/hadoop/mapreduce/lib/*:/opt/module/hadoop-3.2.1/share/hadoop/mapreduce/*:/opt/module/hadoop-3.2.1/share/hadoop/yarn:/opt/module/hadoop-3.2.1/share/hadoop/yarn/lib/*:/opt/module/hadoop-3.2.1/share/hadoop/yarn/*</value>
        </property>

  - Restart YARN; the MR job then runs successfully
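The classpath value is one long colon-separated string, which is hard to check by eye. As a rough aid, the sketch below prints one entry per line; `split_classpath` is a hypothetical helper and the sample string is a shortened excerpt of the value shown in these notes.

```shell
#!/bin/bash
# Print each entry of a colon-separated classpath on its own line.
split_classpath() {
  tr ':' '\n'
}

# Shortened sample; on a cluster node the output of `hadoop classpath` could be piped in instead.
sample='/opt/module/hadoop-3.2.1/etc/hadoop:/opt/module/hadoop-3.2.1/share/hadoop/mapreduce/*'
echo "$sample" | split_classpath
```

Filtering the result with `grep mapreduce` is a quick way to confirm that the MapReduce directories are on the classpath before restarting YARN.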

- Hostname mapping fails on Win10
  - Edit the `C:\Windows\System32\drivers\etc\hosts` file (open it as administrator) and add the following:

        192.168.2.100 node100
        192.168.2.101 node101
        192.168.2.102 node102
        192.168.2.103 node103
        192.168.2.104 node104