C++_2019-04-14_Machine Vision: Halcon Series, Deep Learning on Images

Contents

- Whole-image classification
- Region detection
- Pixel classification

## Whole-image classification

### Initialization: directory layout

```
root/
    dl_prepared_models/
        xxx.hdl        (pretrained model; choose one as needed)
    images/
        A/
        B/
    or:
    images/
        A/
            a/
            b/
            c/
        B/
            a/
            b/
            c/
        C/
            a/
            b/
            c/
    01_deep_learning_tutorial_preprocessing.hdev
    02_deep_learning_tutorial_training.hdev
    03_deep_learning_tutorial_evaluation.hdev
    04_deep_learning_tutorial_detectier.hdev
    readme.md
```

### Step 1: Preprocess the images

Running 01_deep_learning_tutorial_preprocessing.hdev produces:

```
root/
    dataset_preprocessed/
        A/
        B/
```

### Step 2: Train with 02_deep_learning_tutorial_training.hdev, using one of the models in the dl_prepared_models folder (e.g. pretrained_dl_classifier_compact.hdl)

Output:

```
root/
    classifier_model.hdl
```

### Step 3: Run 03_deep_learning_tutorial_evaluation.hdev to evaluate the trained model

### Step 4: Use 04_deep_learning_tutorial_detectier.hdev to convert and read the image-classification data

```
* Read the data (e.g. '/class1_pattern3/' or '/class3_pattern3/') and the pretrained network.
read_dl_classifier_data_set ('images', 'last_folder', ImageFiles, GroundTruthLabels, LabelIndices, UniqueClasses)
read_dl_classifier ('pretrained_dl_classifier_compact.hdl', DLClassifierHandle)
*
* Create the directories for writing the images.
PreprocessedFolder := 'dataset_preprocessed'
file_exists (PreprocessedFolder, FileExists)
if (FileExists)
    remove_dir_recursively (PreprocessedFolder)
endif
create_directories (PreprocessedFolder, UniqueClasses)
*
* Prepare the new image names.
parse_filename (ImageFiles, BaseNames, Extensions, Directories)
ObjectFilesOut := PreprocessedFolder + '/' + GroundTruthLabels + '/' + BaseNames + '.hobj'
*
* Loop through all images,
* preprocess and then write them.
for ImageIndex := 0 to |ImageFiles| - 1 by 1
    read_image (Image, ImageFiles[ImageIndex])
    preprocess_pill_image (Image, ImagePreprocessed, DLClassifierHandle)
    write_object (ImagePreprocessed, ObjectFilesOut[ImageIndex])
endfor
```
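With the 'last_folder' option, read_dl_classifier_data_set takes each image's label from the name of the folder that directly contains it. The loop above then writes every preprocessed image to dataset_preprocessed/<label>/<base name>.hobj.

The Python sketch below models only this path bookkeeping and does not call HALCON. The helper names `last_folder_label` and `preprocessed_path` are hypothetical. The sketch also shows what the nested layout (images/A/a/...) implies: the labels become a, b, c rather than A, B, C.

```python
from pathlib import PurePosixPath


def last_folder_label(image_path: str) -> str:
    """Label = name of the folder that directly contains the image.

    Models the 'last_folder' labeling option; this is not a HALCON call.
    """
    return PurePosixPath(image_path).parent.name


def preprocessed_path(image_path: str, out_dir: str = "dataset_preprocessed") -> str:
    """Mirror of PreprocessedFolder + '/' + label + '/' + BaseName + '.hobj'."""
    base_name = PurePosixPath(image_path).stem  # file name without extension
    return f"{out_dir}/{last_folder_label(image_path)}/{base_name}.hobj"


if __name__ == "__main__":
    examples = [
        "images/A/img_001.png",    # flat layout: label "A"
        "images/B/img_002.png",    # flat layout: label "B"
        "images/A/a/img_003.png",  # nested layout: label "a", not "A"
        "images/B/a/img_004.png",  # also label "a": A/a and B/a end up in one class
    ]
    for path in examples:
        print(f"{path} -> {last_folder_label(path)} -> {preprocessed_path(path)}")
```

Under this model, two files with the same base name in A/a and B/a map to the same output file, so one would overwrite the other.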

```
*
init_program (WindowHandle)
*
* ** Training **
*
* Read one of the pretrained classifiers (compact, enhanced, or resnet50)
* and the preprocessed data. If you switch classifiers, check that it expects
* the same input image size as the one used for preprocessing in step 1.
*read_dl_classifier ('pretrained_dl_classifier_compact.hdl', DLClassifierHandle)
*read_dl_classifier ('pretrained_dl_classifier_enhanced.hdl', DLClassifierHandle)
read_dl_classifier ('pretrained_dl_classifier_resnet50.hdl', DLClassifierHandle)
* The best classifier (according to the validation error)
* is saved during training.
BestClassifier := 'classifier_model.hdl'
read_dl_classifier_data_set ('dataset_preprocessed', 'last_folder', ImageFiles, GroundTruthLabels, LabelIndices, UniqueClasses)
* Set the classes the classifier should distinguish.
set_dl_classifier_param (DLClassifierHandle, 'classes', UniqueClasses)
* Split the data into three subsets: training (70%), validation (15%),
* and testing (the remaining 15%). Fix the random seed so that the
* evaluation script reproduces exactly the same split.
set_system ('seed_rand', 42)
split_dl_classifier_data_set (ImageFiles, GroundTruthLabels, 70, 15, TrainingImages, TrainingLabels, ValidationImages, ValidationLabels, TestImages, TestLabels)
```
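split_dl_classifier_data_set takes the training and validation percentages, and the test set receives the rest (here 100 - 70 - 15 = 15%). The Python sketch below shows a plain shuffled split with a fixed seed. A fixed seed is why the training and evaluation scripts must seed identically to obtain the same subsets.

This is not HALCON's implementation. Three points are assumptions of the sketch: the floor rounding, the absence of any per-class balancing, and the hypothetical name `split_dataset`.

```python
import random


def split_dataset(files, labels, train_pct, val_pct, seed=42):
    """Plain shuffled split into (train, validation, test).

    Each part is a (files, labels) pair. The same seed always yields the
    same split. Floor rounding of the part sizes is an assumption here.
    """
    if train_pct + val_pct > 100:
        raise ValueError("train_pct + val_pct must not exceed 100")
    rng = random.Random(seed)  # independent, reproducible random generator
    order = list(range(len(files)))
    rng.shuffle(order)
    n_train = len(files) * train_pct // 100
    n_val = len(files) * val_pct // 100
    parts = (order[:n_train],
             order[n_train:n_train + n_val],
             order[n_train + n_val:])  # the test set gets the remainder
    return [([files[i] for i in idx], [labels[i] for i in idx]) for idx in parts]


if __name__ == "__main__":
    files = [f"img_{i:03d}.hobj" for i in range(100)]
    labels = ["A" if i % 2 else "B" for i in range(100)]
    train, val, test = split_dataset(files, labels, 70, 15)
    print(len(train[0]), len(val[0]), len(test[0]))
```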

Adapt the following training parameters to your application:

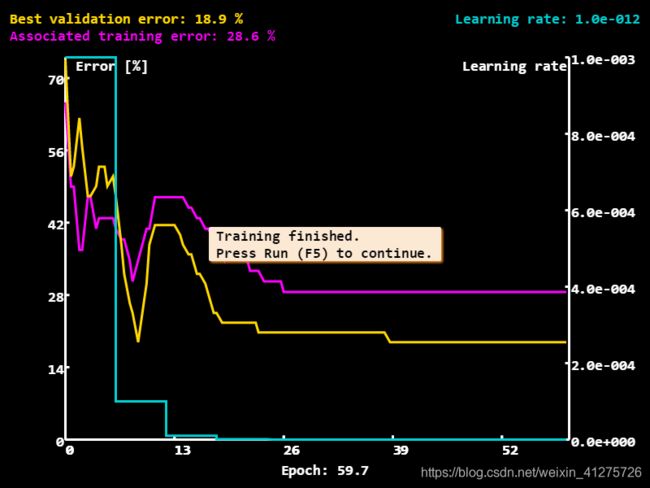

```
* The number of epochs determines the duration of the training.
NumEpochs := 30
* Plot the training and validation error every nth epoch.
PlotEveryNthEpoch := 0.5
*
* Set the batch_size as high as your hardware allows.
*set_dl_classifier_param (DLClassifierHandle, 'batch_size', 64)
set_dl_classifier_param (DLClassifierHandle, 'batch_size', 2)
set_dl_classifier_param (DLClassifierHandle, 'runtime_init', 'immediately')
*
* Set the initial learning rate.
set_dl_classifier_param (DLClassifierHandle, 'learning_rate', 0.001)
* Decrease the learning rate regularly to fine-tune the classifier.
LearningRateStepEveryNthEpoch := 6
LearningRateStepRatio := 0.1
*
* This local procedure encapsulates both the training and the visualization
* of the HDevelop example classify_pill_defects_deep_learning.hdev.
train_dl_classifier_plot_progress (TrainingImages, TrainingLabels, ValidationImages, ValidationLabels, NumEpochs, PlotEveryNthEpoch, DLClassifierHandle, WindowHandle, BestClassifier, LearningRateStepEveryNthEpoch, LearningRateStepRatio)
* stop ()
```
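LearningRateStepEveryNthEpoch and LearningRateStepRatio describe a step schedule. The Python sketch below assumes, as the parameter names suggest, that train_dl_classifier_plot_progress multiplies the learning rate by LearningRateStepRatio every LearningRateStepEveryNthEpoch epochs. It shows how the rate then evolves over the 30 epochs. `step_learning_rate` is a hypothetical helper, not part of HALCON.

```python
def step_learning_rate(epoch: float, initial_lr: float = 0.001,
                       step_every: int = 6, ratio: float = 0.1) -> float:
    """Learning rate in effect at `epoch` (0-based, may be fractional):
    the initial rate multiplied by `ratio` once per completed `step_every` epochs."""
    completed_steps = int(epoch // step_every)
    return initial_lr * ratio ** completed_steps


if __name__ == "__main__":
    num_epochs = 30
    for epoch in range(num_epochs):
        if epoch % 6 == 0:
            print(f"epoch {epoch:2d}: learning rate {step_learning_rate(epoch):.0e}")
```

Under this assumption the rate is reduced four times (at epochs 6, 12, 18 and 24) and ends at 0.001 x 0.1^4 = 1e-7.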

```
*
init_program (WindowHandle)
*
* Read the best classifier from the training.
BestClassifier := 'classifier_model.hdl'
read_dl_classifier (BestClassifier, BestDLClassifierHandle)
* Read and split data in the same way
* as in the training.
set_system ('seed_rand', 42)
read_dl_classifier_data_set ('dataset_preprocessed', 'last_folder', ImageFiles, GroundTruthLabels, LabelIndices, UniqueClasses)
split_dl_classifier_data_set (ImageFiles, GroundTruthLabels, 70, 15, TrainingImages, TrainingLabels, ValidationImages, ValidationLabels, TestImages, TestLabels)
*
* Generate a confusion matrix of the validation data.
read_image (ImagesValidation, ValidationImages)
apply_dl_classifier (ImagesValidation, BestDLClassifierHandle, DLClassifierResultHandle)
get_dl_classifier_result (DLClassifierResultHandle, 'all', 'predicted_classes', ValidationPredictedClasses)
gen_confusion_matrix (ValidationLabels, ValidationPredictedClasses, [], [], WindowHandle, ConfusionMatrix)
stop ()
get_dl_classifier_image_results (ImagesValidation, ValidationImages, ValidationLabels, ValidationPredictedClasses, [], [], WindowHandle)
*
* Compute evaluation measures.
evaluate_dl_classifier (ValidationLabels, BestDLClassifierHandle, DLClassifierResultHandle, 'f_score', 'global', EvaluationMeasure)
stop ()
```
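gen_confusion_matrix and evaluate_dl_classifier summarize how the predictions compare with the ground truth. To make the measures concrete, the Python sketch below builds a confusion matrix and then computes per-class precision, recall and F-score. In the matrix, rows are ground truth and columns are predictions, a convention chosen for this sketch.

The sketch averages the per-class F-scores with equal weight. That this matches what 'global' means in evaluate_dl_classifier is an assumption; the HALCON documentation has the exact definition. All function names are hypothetical.

```python
from collections import Counter


def confusion_matrix(true_labels, predicted_labels, classes):
    """matrix[i][j] = number of samples of class classes[i] predicted as classes[j]."""
    counts = Counter(zip(true_labels, predicted_labels))
    return [[counts[(t, p)] for p in classes] for t in classes]


def per_class_f_scores(matrix):
    """F-score (harmonic mean of precision and recall) for each class."""
    n = len(matrix)
    scores = []
    for i in range(n):
        tp = matrix[i][i]
        predicted_as_i = sum(matrix[r][i] for r in range(n))  # column sum
        actually_i = sum(matrix[i])                            # row sum
        precision = tp / predicted_as_i if predicted_as_i else 0.0
        recall = tp / actually_i if actually_i else 0.0
        total = precision + recall
        scores.append(2 * precision * recall / total if total else 0.0)
    return scores


def mean_f_score(true_labels, predicted_labels, classes):
    """Unweighted mean of the per-class F-scores (macro average)."""
    scores = per_class_f_scores(confusion_matrix(true_labels, predicted_labels, classes))
    return sum(scores) / len(scores)


if __name__ == "__main__":
    classes = ["A", "B"]
    truth = ["A", "A", "A", "B", "B"]
    predicted = ["A", "A", "B", "B", "B"]
    print(confusion_matrix(truth, predicted, classes))
    print(mean_f_score(truth, predicted, classes))
```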

```
*
* Display some exemplary heatmaps using test images.
for ImageIndex := 0 to |TestImages| - 1 by 1
    read_image (TestImage, TestImages[ImageIndex])
    dev_display_dl_classifier_heatmap (TestImage, BestDLClassifierHandle, ['feature_size'], [70], WindowHandle)
    stop ()
endfor
```

## Region detection

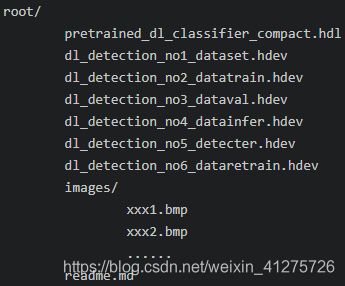

### Initialization: starting from an empty folder, set up the following layout

```
root/
    dl_prepared_models/
        (three pretrained classification networks, e.g.)
        pretrained_dl_classifier_compact.hdl
    dl_detection_no1_dataset.hdev
    dl_detection_no2_datatrain.hdev
    dl_detection_no3_dataval.hdev
    dl_detection_no4_datainfer.hdev
    dl_detection_no5_detecter.hdev
    dl_detection_no6_dataretrain.hdev
    images/
        xxx1.bmp
        xxx2.bmp
        ......
    readme.md
```

### Step 1: Label the data

Label the images with HALCON's Deep Learning Tool. This adds the annotation file labelimg.hdict:

```
root/
    images/
        xxx1.bmp
        xxx2.bmp
        ......
    labelimg.hdict
```

### Step 2: Preprocess the data

Run dl_detection_no1_dataset.hdev in HALCON (HDevelop). It generates the following preprocessed data:

```
root/
    detect_data/
        dl_dataset.hdict
        samples/
            1_dlsample.hdict
            2_dlsample.hdict
            ......
```
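During preprocessing, the images are rescaled to the network size (ImageWidth x ImageHeight = 512 x 320 in the dataset script), and the box annotations have to be scaled with them. The Python sketch below shows that arithmetic for axis-aligned boxes given as (row1, col1, row2, col2), the order HALCON uses for 'rectangle1' boxes.

The sketch ignores pixel-center conventions and is not the code inside preprocess_dl_dataset. `Box` and `rescale_box` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box: (row1, col1) top-left, (row2, col2) bottom-right."""
    row1: float
    col1: float
    row2: float
    col2: float


def rescale_box(box: Box, src_width: int, src_height: int,
                dst_width: int = 512, dst_height: int = 320) -> Box:
    """Scale a box from the original image size to the network input size.

    Rows scale with the height ratio, columns with the width ratio.
    """
    sx = dst_width / src_width
    sy = dst_height / src_height
    return Box(box.row1 * sy, box.col1 * sx, box.row2 * sy, box.col2 * sx)


if __name__ == "__main__":
    original = Box(row1=100, col1=200, row2=300, col2=400)
    # A 1024 x 640 image (8:5) is halved in both directions.
    print(rescale_box(original, src_width=1024, src_height=640))
    # A 1280 x 960 image (4:3) is scaled by 0.4 horizontally and 1/3 vertically.
    print(rescale_box(original, src_width=1280, src_height=960))
```

In this sketch, width and height are scaled independently. Objects are therefore distorted unless the source images already have the 8:5 aspect ratio of 512 x 320.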

### Step 3: Train the model

Run dl_detection_no2_datatrain.hdev in HALCON. It produces the following files:

```
root/
    model_best.hdl
    model_best_info.hdict
```

### Step 4: Validate the model

Run dl_detection_no3_dataval.hdev in HALCON.

### Step 5: Test the model

Run dl_detection_no4_datainfer.hdev in HALCON.

### Step 6: Run detection

Run dl_detection_no5_detecter.hdev in HALCON to detect objects in the images in images/.

### Step 7: Retrain the pretrained model

Use dl_detection_no6_dataretrain.hdev to continue training the model on an enlarged dataset.

```
* ************************
* ** Set parameters ***
* ************************
DLDatasetFileName := 'labelimg.hdict'
OutputDir := 'detect_data'
Backbone := 'pretrained_dl_classifier_compact.hdl'
* Percentages for splitting the dataset (the test split gets the remaining 20%).
TrainingPercent := 60
ValidationPercent := 20
*
* Image dimensions of the network. Later, these values are
* used to rescale the images during preprocessing.
ImageWidth := 512
ImageHeight := 320
ImageNumChannels := 3
*
* Set min_level, max_level, num_subscales, and aspect_ratios.
MinLevel := 2
MaxLevel := 4
NumSubscales := 3
AspectRatios := [1.0,0.5,2.0]
*
* Set capacity to 'medium', which is sufficient for this task
* and delivers better inference and training speed. Compared to
* 'high', the model with 'medium' is more than twice as fast,
* while showing almost the same detection performance.
Capacity := 'medium'
NumEpochs := 30
EvaluationIntervalEpochs := 1
read_dict (DLDatasetFileName, [], [], DLDataset)
split_dl_dataset (DLDataset, TrainingPercent, ValidationPercent, [])
get_dict_tuple (DLDataset, 'class_ids', ClassIDs)
create_dict (DLModelDetectionParam)
set_dict_tuple (DLModelDetectionParam, 'class_ids', ClassIDs)
set_dict_tuple (DLModelDetectionParam, 'image_width', ImageWidth)
set_dict_tuple (DLModelDetectionParam, 'image_height', ImageHeight)
set_dict_tuple (DLModelDetectionParam, 'image_num_channels', ImageNumChannels)
set_dict_tuple (DLModelDetectionParam, 'min_level', MinLevel)
set_dict_tuple (DLModelDetectionParam, 'max_level', MaxLevel)
set_dict_tuple (DLModelDetectionParam, 'num_subscales', NumSubscales)
set_dict_tuple (DLModelDetectionParam, 'aspect_ratios', AspectRatios)
set_dict_tuple (DLModelDetectionParam, 'capacity', Capacity)
create_dl_model_detection (Backbone, |ClassIDs|, DLModelDetectionParam, DLModelHandle)
create_dict (PreprocessSettings)
set_dict_tuple (PreprocessSettings, 'overwrite_files', true)
create_dl_preprocess_param_from_model (DLModelHandle, 'false', 'full_domain', [], [], [], DLPreprocessParam)
preprocess_dl_dataset (DLDataset, OutputDir, DLPreprocessParam, PreprocessSettings, DLDatasetFileName)
* Display a few randomly chosen preprocessed samples with their ground-truth boxes.
create_dict (WindowDict)
get_dict_tuple (DLDataset, 'samples', DatasetSamples)
for Index := 0 to 5 by 1
    SampleIndex := round(rand(1) * (|DatasetSamples| - 1))
    read_dl_samples (DLDataset, SampleIndex, DLSample)
    dev_display_dl_data (DLSample, [], DLDataset, 'bbox_ground_truth', [], WindowDict)
    stop ()
endfor
dev_display_dl_data_close_windows (WindowDict)
dev_close_window ()
```
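MinLevel, MaxLevel, NumSubscales and AspectRatios control the anchors of the detection model. The Python sketch below rests on two assumptions borrowed from the common RetinaNet-style convention:

- Level l has a stride of 2^l pixels relative to the network input.
- Every feature-map position carries NumSubscales x |AspectRatios| anchors.

Under these assumptions, the sketch counts the feature-map sizes and anchors for the values above. Treat the numbers as an illustration, not as HALCON's internal figures. `pyramid_shapes` and `anchor_count` are hypothetical names.

```python
def pyramid_shapes(width: int, height: int, min_level: int, max_level: int) -> dict:
    """Feature-map size (width, height) per level, assuming stride 2**level."""
    shapes = {}
    for level in range(min_level, max_level + 1):
        stride = 2 ** level
        # This sketch requires exact division so that every level tiles the input.
        if width % stride or height % stride:
            raise ValueError(f"{width}x{height} is not divisible by stride {stride}")
        shapes[level] = (width // stride, height // stride)
    return shapes


def anchor_count(width, height, min_level, max_level, num_subscales, aspect_ratios):
    """Total anchors = positions on all levels x (num_subscales x number of aspect ratios)."""
    per_position = num_subscales * len(aspect_ratios)
    shapes = pyramid_shapes(width, height, min_level, max_level)
    return sum(w * h for w, h in shapes.values()) * per_position


if __name__ == "__main__":
    params = dict(width=512, height=320, min_level=2, max_level=4)
    for level, (w, h) in pyramid_shapes(**params).items():
        print(f"level {level}: stride {2 ** level:2d}, feature map {w} x {h}")
    print("anchors:", anchor_count(**params, num_subscales=3, aspect_ratios=[1.0, 0.5, 2.0]))
```

Levels with small strides cover small objects and coarser levels cover large ones. In this model of the pyramid, raising MaxLevel also requires the 512 x 320 input to stay divisible by 2^MaxLevel.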

```
* ************************
* ** Set parameters ***
* ************************
num_display := 5
* Percentages for splitting the dataset.
TrainingPercent := 60
ValidationPercent := 20
* Image dimensions of the network. Later, these values are
* used to rescale the images during preprocessing.
ImageWidth := 512
ImageHeight := 320
ImageNumChannels := 3
*
* Set min_level, max_level, num_subscales, and aspect_ratios.
MinLevel := 2
MaxLevel := 4
NumSubscales := 3
AspectRatios := [1.0,0.5,2.0]
*
* Set capacity to 'medium', which is sufficient for this task
* and delivers better inference and training speed. Compared to
* 'high', the model with 'medium' is more than twice as fast,
* while showing almost the same detection performance.
Capacity := 'medium'
NumEpochs := 300
EvaluationIntervalEpochs := 1
DLDatasetFile := 'detect_data/dl_dataset.hdict'
* Read in the preprocessed DLDataset file.
read_dict (DLDatasetFile, [], [], DLDataset)
get_dict_tuple (DLDataset, 'class_ids', ClassIDs)
create_dict (DLModelDetectionParam)
set_dict_tuple (DLModelDetectionParam, 'class_ids', ClassIDs)
set_dict_tuple (DLModelDetectionParam, 'image_width', ImageWidth)
set_dict_tuple (DLModelDetectionParam, 'image_height', ImageHeight)
set_dict_tuple (DLModelDetectionParam, 'image_num_channels', ImageNumChannels)
set_dict_tuple (DLModelDetectionParam, 'min_level', MinLevel)
set_dict_tuple (DLModelDetectionParam, 'max_level', MaxLevel)
set_dict_tuple (DLModelDetectionParam, 'num_subscales', NumSubscales)
set_dict_tuple (DLModelDetectionParam, 'aspect_ratios', AspectRatios)
set_dict_tuple (DLModelDetectionParam, 'capacity', Capacity)
create_dl_model_detection ('pretrained_dl_classifier_compact.hdl', |ClassIDs|, DLModelDetectionParam, DLModelHandle)
*
* *** 3.) TRAIN ***
*
* NumEpochs (set above, 300 here) controls the length of the training;
* the model is evaluated every EvaluationIntervalEpochs epochs.
* Reduce NumEpochs for a quick first run.
create_dl_train_param (DLModelHandle, NumEpochs, EvaluationIntervalEpochs, 'true', 42, [], [], TrainParam)
train_dl_model (DLDataset, DLModelHandle, TrainParam, 0, TrainResults, TrainInfos, EvaluationInfos)
dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'left', 'black', [], [])
stop ()
dev_close_window ()
dev_close_window ()
dev_close_window ()
dev_close_window ()
```
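With EvaluationIntervalEpochs := 1, the model is evaluated on the validation split after every epoch, and train_dl_model writes the best one to model_best.hdl, which the later scripts read. The selection rule can be sketched in Python as follows, assuming a higher validation score is better (as with a mean average precision). `select_best` is a hypothetical helper and does not reproduce HALCON's bookkeeping. The scores in the example are made up for illustration.

```python
def select_best(scores, eval_interval_epochs=1):
    """Return (epoch, score) of the best evaluation.

    scores[i] is the validation score after epoch (i + 1) * eval_interval_epochs.
    Only a strictly better score replaces the current best, so ties keep the
    earlier model. Returns (None, None) if nothing was evaluated.
    """
    best_epoch, best_score = None, None
    for i, score in enumerate(scores):
        epoch = (i + 1) * eval_interval_epochs
        if best_score is None or score > best_score:
            best_epoch, best_score = epoch, score
    return best_epoch, best_score


if __name__ == "__main__":
    # Made-up scores for illustration only.
    validation_scores = [0.31, 0.48, 0.55, 0.53, 0.57, 0.57, 0.52]
    print(select_best(validation_scores))
```

Because the best model is chosen on the validation split, the evaluation script measures it on the separate test split.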

```
dev_update_off ()
* ************************
* ** Set parameters ***
* ************************
num_display := 5
* Percentages for splitting the dataset.
TrainingPercent := 60
ValidationPercent := 20
* Image dimensions of the network. Later, these values are
* used to rescale the images during preprocessing.
ImageWidth := 512
ImageHeight := 320
ImageNumChannels := 3
*
* Set min_level, max_level, num_subscales, and aspect_ratios.
MinLevel := 2
MaxLevel := 4
NumSubscales := 3
AspectRatios := [1.0,0.5,2.0]
*
* Set capacity to 'medium', which is sufficient for this task
* and delivers better inference and training speed. Compared to
* 'high', the model with 'medium' is more than twice as fast,
* while showing almost the same detection performance.
Capacity := 'medium'
NumEpochs := 30
EvaluationIntervalEpochs := 1
DLDatasetFile := 'detect_data/dl_dataset.hdict'
* Read in the preprocessed DLDataset file.
read_dict (DLDatasetFile, [], [], DLDataset)
* Read the best model, which is written to file by train_dl_model.
read_dl_model ('model_best.hdl', DLModelHandle)
*
* *** 3.) EVALUATE ***
*
create_dict (GenParamEval)
set_dict_tuple (GenParamEval, 'detailed_evaluation', true)
set_dict_tuple (GenParamEval, 'show_progress', true)
evaluate_dl_model (DLDataset, DLModelHandle, 'split', 'test', GenParamEval, EvaluationResult, EvalParams)
*
create_dict (DisplayMode)
set_dict_tuple (DisplayMode, 'display_mode', ['pie_charts_precision','pie_charts_recall'])
create_dict (WindowDict)
dev_display_detection_detailed_evaluation (EvaluationResult, EvalParams, DisplayMode, WindowDict)
stop ()
dev_display_dl_data_close_windows (WindowDict)
dev_close_window ()
dev_close_window ()
```
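Detection measures such as precision and recall (shown as pie charts by dev_display_detection_detailed_evaluation) rest on matching predicted boxes to ground-truth boxes by their intersection over union (IoU). The Python sketch below does two things:

- It computes the IoU of two (row1, col1, row2, col2) boxes.
- It performs a greedy, class-agnostic matching at a single IoU threshold of 0.5.

HALCON's evaluate_dl_model computes its measures per class and offers further measures, such as mean average precision, which this sketch does not reproduce. All names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes (row1, col1, row2, col2)."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_h * inter_w
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy matching: highest-confidence predictions claim ground-truth boxes first.

    predictions: list of (confidence, box); ground_truth: list of boxes.
    Each ground-truth box can be matched at most once.
    """
    matched = set()
    true_positives = 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_index, best_overlap = None, iou_threshold
        for j, gt_box in enumerate(ground_truth):
            overlap = iou(box, gt_box)
            if j not in matched and overlap >= best_overlap:
                best_index, best_overlap = j, overlap
        if best_index is not None:
            matched.add(best_index)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


if __name__ == "__main__":
    gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0.9, (0, 0, 10, 10)), (0.8, (0, 5, 10, 15)), (0.7, (50, 50, 60, 60))]
    print(iou((0, 0, 10, 10), (0, 5, 10, 15)))
    print(precision_recall(preds, gt))
```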

```
dev_update_off ()
* ************************
* ** Set parameters ***
* ************************
num_display := 5
* Percentages for splitting the dataset.
TrainingPercent := 60
ValidationPercent := 20
* Image dimensions of the network. Later, these values are
* used to rescale the images during preprocessing.
ImageWidth := 512
ImageHeight := 320
ImageNumChannels := 3
*
* Set min_level, max_level, num_subscales, and aspect_ratios.
MinLevel := 2
MaxLevel := 4
NumSubscales := 3
AspectRatios := [1.0,0.5,2.0]
*
* Set capacity to 'medium', which is sufficient for this task
* and delivers better inference and training speed. Compared to
* 'high', the model with 'medium' is more than twice as fast,
* while showing almost the same detection performance.
Capacity := 'medium'
NumEpochs := 30
EvaluationIntervalEpochs := 1
DLDatasetFile := 'detect_data/dl_dataset.hdict'
* Read in the preprocessed DLDataset file.
read_dict (DLDatasetFile, [], [], DLDataset)
* Read the best model, which is written to file by train_dl_model.
read_dl_model ('model_best.hdl', DLModelHandle)
create_dl_preprocess_param_from_model (DLModelHandle, 'false', 'full_domain', [], [], [], DLPreprocessParam)
*
* *** 4.) INFER ***
*
* To demonstrate the inference steps, we apply
* the trained model to some randomly chosen example images.
list_image_files ('images', 'default', 'recursive', ImageFiles)
tuple_shuffle (ImageFiles, ImageFilesShuffled)
*
set_dl_model_param (DLModelHandle, 'batch_size', 1)
create_dict (WindowDict)
*
* Show at most 10 images (fewer if the folder contains fewer).
for IndexInference := 0 to min2(9, |ImageFilesShuffled| - 1) by 1
    read_image (Image, ImageFilesShuffled[IndexInference])
    gen_dl_samples_from_images (Image, DLSampleInference)
    preprocess_dl_samples (DLSampleInference, DLPreprocessParam)
    apply_dl_model (DLModelHandle, DLSampleInference, [], DLResult)
    *
    dev_display_dl_data (DLSampleInference, DLResult, DLDataset, 'bbox_result', [], WindowDict)
    stop ()
endfor
dev_display_dl_data_close_windows (WindowDict)
```

```
dev_close_window ()
* Class names and IDs; they must match the classes the model was trained with.
ClassNames := ['A','B']
ClassIDs := [1,2]
create_dict (DLDataInfo)
set_dict_tuple (DLDataInfo, 'class_names', ClassNames)
set_dict_tuple (DLDataInfo, 'class_ids', ClassIDs)
* List all images in the images/ folder.
list_image_files ('images', 'default', 'recursive', ImageFiles)
tuple_shuffle (ImageFiles, ImageFilesShuffled)
* Read the best model, which is written to file by train_dl_model.
read_dl_model ('model_best.hdl', DLModelHandle)
create_dl_preprocess_param_from_model (DLModelHandle, 'false', 'full_domain', [], [], [], DLPreprocessParam)
create_dict (WindowDict)
* Show at most 6 images (fewer if the folder contains fewer).
for IndexInference := 0 to min2(5, |ImageFilesShuffled| - 1) by 1
    read_image (Image, ImageFilesShuffled[IndexInference])
    gen_dl_samples_from_images (Image, DLSampleInference)
    preprocess_dl_samples (DLSampleInference, DLPreprocessParam)
    apply_dl_model (DLModelHandle, DLSampleInference, [], DLResult)
    dev_display_dl_data (DLSampleInference, DLResult, DLDataInfo, 'bbox_result', [], WindowDict)
    stop ()
endfor
dev_display_dl_data_close_windows (WindowDict)
```
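The detection script above does not read the dataset. Instead, it builds DLDataInfo by hand so that dev_display_dl_data can label the results. ClassNames and ClassIDs are parallel tuples, so they must have the same length, and the IDs must be the class_ids the model was trained with.

The Python sketch below models that lookup with these checks. `build_class_map` and `names_for` are hypothetical helpers, not HALCON functions.

```python
def build_class_map(class_names, class_ids):
    """Pair ClassIDs with ClassNames, as the two tuples in DLDataInfo are paired."""
    if len(class_names) != len(class_ids):
        raise ValueError("class_names and class_ids must have the same length")
    if len(set(class_ids)) != len(class_ids):
        raise ValueError("class_ids must be unique")
    return dict(zip(class_ids, class_names))


def names_for(predicted_ids, class_map):
    """Translate predicted class IDs to names; unknown IDs signal a mismatch."""
    unknown = [i for i in predicted_ids if i not in class_map]
    if unknown:
        raise KeyError(f"class IDs {unknown} are missing from the class map")
    return [class_map[i] for i in predicted_ids]


if __name__ == "__main__":
    class_map = build_class_map(["A", "B"], [1, 2])
    print(names_for([2, 1, 1], class_map))
```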

```
* *** 1.) Read the data ***
*
* *** Retraining step 1: read the data and the pretrained model ***
* Input data
DLDatasetFileName := 'detect_data/dl_dataset.hdict'
read_dict (DLDatasetFileName, [], [], DLDataset)
* Pretrained model (the best model from the previous training)
InitialModelFileName := 'model_best.hdl'
read_dl_model (InitialModelFileName, DLModelHandle)
*
*
* *** 2.) Train ***
*
* *** Retraining step 2: set the model parameters with set_dl_model_param ***
NumEpochs := 20
set_dl_model_param (DLModelHandle, 'batch_size', 1)
set_dl_model_param (DLModelHandle, 'learning_rate', 0.001)
set_dl_model_param (DLModelHandle, 'runtime_init', 'immediately')
*
* Train for NumEpochs (20 here) epochs, evaluating after every epoch.
* For better model performance, increase the number of epochs.
create_dl_train_param (DLModelHandle, NumEpochs, 1, 'true', 42, [], [], TrainParam)
train_dl_model (DLDataset, DLModelHandle, TrainParam, 0, TrainResults, TrainInfos, EvaluationInfos)
stop ()
dev_close_window ()
dev_close_window ()
dev_close_window ()
```

## Pixel classification