CNNs and Sentence Classification with Dynamic Pooling (DCNN): TensorFlow Implementation

This post is a TensorFlow implementation of the paper "A Convolutional Neural Network for Modelling Sentences". The code and the dataset can both be downloaded from my GitHub.

Dataset and preprocessing

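This post reproduces the second experiment of the paper, which uses the TREC dataset. TREC comes from the QA domain and is used to classify question types. The questions fall into 6 broad categories, such as location, person and numeric information, and the categories are represented here with one-hot encoding. The dataset contains 5452 labelled training samples and 500 test samples. Each sample looks like the following: the label comes first, with the coarse category before the colon, and the question follows: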

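    NUM:date When did Hawaii become a state ?

Next come the data-processing functions, which live in dataUtils.py. They are much the same as in my earlier posts: read the sentences and labels from the files, pad the sentences, build the vocabulary, and convert the sentences into word indices so that the embedding layer can turn them into word vectors. The code is below and is commented clearly enough, so I will not go into more detail. To use it, just call load_data().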

    import re

    import numpy as np


    def clean_str(string):
        """Tokenize and normalize one raw line; the result is lower-cased."""
        string = re.sub(r"[^A-Za-z0-9:(),!?\'\`]", " ", string)
        string = re.sub(r" : ", ":", string)
        string = re.sub(r"\'s", " 's", string)
        string = re.sub(r"\'ve", " 've", string)
        string = re.sub(r"n\'t", " n't", string)
        string = re.sub(r"\'re", " 're", string)
        string = re.sub(r"\'d", " 'd", string)
        string = re.sub(r"\'ll", " 'll", string)
        string = re.sub(r",", " , ", string)
        string = re.sub(r"!", " ! ", string)
        # The backslash in these replacements is kept in the output,
        # so "?" becomes the token "\?" (and likewise for the parentheses).
        string = re.sub(r"\(", r" \( ", string)
        string = re.sub(r"\)", r" \) ", string)
        string = re.sub(r"\?", r" \? ", string)
        string = re.sub(r"\s{2,}", " ", string)
        return string.strip().lower()


    def load_data_and_labels():
        """
        Loads data from files, splits the data into words and generates labels.
        Returns split sentences, one-hot labels and the size of the test set.
        """
        # Load data from files
        folder_prefix = 'data/'
        with open(folder_prefix + "train") as f:
            x_train = f.readlines()
        with open(folder_prefix + "test") as f:
            x_test = f.readlines()
        test_size = len(x_test)
        x_text = x_train + x_test
        x_text = [clean_str(sent) for sent in x_text]
        # The first token is "coarse:fine"; keep only the coarse label
        y = [s.split(' ')[0].split(':')[0] for s in x_text]
        x_text = [s.split(" ")[1:] for s in x_text]
        # Generate labels: give every label a 1-based id, then a one-hot row
        all_label = dict()
        for label in y:
            if label not in all_label:
                all_label[label] = len(all_label) + 1
        one_hot = np.identity(len(all_label))
        y = [one_hot[all_label[label] - 1] for label in y]
        return [x_text, y, test_size]
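The remaining preprocessing steps mentioned above (padding, building the vocabulary, converting words to indices) and the batch_iter helper that the training script calls are not shown in this post. The following is only a minimal pure-Python sketch of what such steps could look like, not the code from dataUtils.py. The `_sketch` function names, the `shuffle` and `seed` parameters and the `<PAD/>` token are all assumptions made for illustration.

```python
import random
from collections import Counter

PAD = "<PAD/>"  # hypothetical padding token, assumed for this sketch


def pad_sentences_sketch(sentences, padding_word=PAD):
    """Right-pad every tokenized sentence to the length of the longest one."""
    max_len = max(len(s) for s in sentences)
    return [s + [padding_word] * (max_len - len(s)) for s in sentences]


def build_vocab_sketch(sentences):
    """Return (word -> index, index -> word), most frequent word first."""
    counts = Counter(word for sent in sentences for word in sent)
    vocabulary_inv = [word for word, _ in counts.most_common()]
    vocabulary = {word: i for i, word in enumerate(vocabulary_inv)}
    return vocabulary, vocabulary_inv


def to_indices_sketch(sentences, vocabulary):
    """Replace every word by its vocabulary index."""
    return [[vocabulary[w] for w in sent] for sent in sentences]


def batch_iter_sketch(data, batch_size, num_epochs, shuffle=True, seed=None):
    """Yield mini-batches over `data`, making `num_epochs` passes."""
    data = list(data)
    rng = random.Random(seed)
    for _ in range(num_epochs):
        order = list(range(len(data)))
        if shuffle:
            rng.shuffle(order)
        for start in range(0, len(data), batch_size):
            yield [data[i] for i in order[start:start + batch_size]]


sentences = [
    ["when", "did", "hawaii", "become", "a", "state", "\\?"],
    ["who", "\\?"],
    ["why", "\\?"],
]
padded = pad_sentences_sketch(sentences)
vocabulary, vocabulary_inv = build_vocab_sketch(padded)
indexed = to_indices_sketch(padded, vocabulary)
batches = list(batch_iter_sketch(zip(indexed, ["num", "hum", "desc"]), 2, 1, shuffle=False))
```

Because the padding token ends up being the most frequent word in this toy example, it receives index 0, which is consistent with the remark in the training script that the padding index is 0.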

    def pad_sentences(sentences, padding_word="

Model construction

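The model follows the DCNN described in the paper, but three details deviate from what the paper specifies:

- For the wide convolution in the convolutional layers, TensorFlow's "SAME" padding mode is used here. This mode keeps the output the same length as the input instead of producing an output of length l+m-1. Wide convolution can take several forms that differ in how much padding is added, and therefore in the output length; "SAME" can be seen as one of them.

- For the dynamic k described in the paper, only two convolutional layers are used here, so the values of k1 and top_k are simply fixed and k is not computed dynamically.

- The k-max pooling does not preserve the relative order of the k largest values as the paper requires, because the top_k function in TF only returns the k largest values and their indices, and I did not bother to re-sort them by index afterwards.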
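To make the three deviations concrete, here is a small pure-Python sketch of what the paper-style behaviour would look like. It is an illustration under stated assumptions, not part of model.py: the function names are made up for this example, and `dynamic_k` implements the paper's rule k_l = max(k_top, ceil((L - l) / L * s)). With two convolutional layers, s = 37 and k_top = 4, that rule gives 19 for the first layer, which is the same value as the fixed k1 used in the training script.

```python
def dynamic_k(layer, total_layers, sentence_length, k_top):
    """k for conv layer `layer` (1-based): max(k_top, ceil((L - l) / L * s))."""
    remaining = (total_layers - layer) * sentence_length
    ceil_value = -(-remaining // total_layers)  # integer ceiling division
    return max(k_top, ceil_value)


def k_max_pooling_ordered(values, k):
    """Keep the k largest entries of a 1-D sequence in their original order."""
    # Indices of the k largest values (the information top_k's `indices` carries)
    top_idx = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    # Sorting the indices again restores the original left-to-right order
    return [values[i] for i in sorted(top_idx)]


def conv_output_length(input_length, filter_width, mode):
    """Output length of a stride-1 1-D convolution."""
    if mode == "wide":  # full zero padding, as in the paper
        return input_length + filter_width - 1
    if mode == "same":  # TensorFlow "SAME" padding with stride 1
        return input_length
    raise ValueError("unknown mode: %s" % mode)


print(dynamic_k(1, 2, 37, 4))                        # two conv layers, s = 37, k_top = 4
print(k_max_pooling_ordered([1, 8, 3, 9, 2, 7], 3))  # keeps 8, 9, 7 in that order
print(conv_output_length(37, 7, "wide"), conv_output_length(37, 7, "same"))
```

A plain descending top-k would return 9, 8, 7 for the second call, which is exactly the ordering information that the simplified pooling in this post discards.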

As usual, the model class lives in model.py. It is simply two conv-fold-k-max-pooling layers followed by one fully connected layer.
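To see how the tensor shapes move through this stack, the following sketch traces the per-sample shapes by hand. It assumes the hyperparameters that appear in the training script (sentence length 37, embedding size 100, 6 and 14 filters, k1 = 19, top_k = 4) and "SAME" convolutions; `trace_shapes` is a hypothetical helper written for this illustration, not a function from model.py.

```python
def trace_shapes(sentence_length, embed_dim, num_filters, k1, top_k):
    """Return (layer name, per-sample shape) pairs for the two-layer DCNN."""
    shapes = [("input", (sentence_length, embed_dim, 1))]
    # "SAME" convolution keeps the sentence length; channels become num_filters[0]
    shapes.append(("conv1", (sentence_length, embed_dim, num_filters[0])))
    # Folding halves the embedding axis, k-max pooling shrinks the length to k1
    shapes.append(("fold + k-max 1", (k1, embed_dim // 2, num_filters[0])))
    shapes.append(("conv2", (k1, embed_dim // 2, num_filters[1])))
    shapes.append(("fold + k-max 2", (top_k, embed_dim // 4, num_filters[1])))
    flat = top_k * (embed_dim // 4) * num_filters[1]
    shapes.append(("flatten", (flat,)))
    return shapes


shapes = trace_shapes(37, 100, [6, 14], 19, 4)
for name, shape in shapes:
    print(name, shape)
```

The flattened size is 4 * 25 * 14 = 1400, which is the same number as the top_k*100*14/4 used for the reshape and for the first dimension of the hidden-layer weights.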

    import tensorflow as tf


    class DCNN():
        def __init__(self, batch_size, sentence_length, num_filters, embed_size, top_k, k1):
            '''
            Initialize the model hyperparameters.
            :param batch_size:
            :param sentence_length:
            :param num_filters:
            :param embed_size:
            :param top_k: k of the top (last) k-max pooling layer
            :param k1: k of the first k-max pooling layer
            '''
            self.batch_size = batch_size
            self.sentence_length = sentence_length
            self.num_filters = num_filters
            self.embed_size = embed_size
            self.top_k = top_k
            self.k1 = k1

        def per_dim_conv_layer(self, x, w, b):
            '''
            Per-dimension convolution layer: one 1-D convolution per embedding row.
            :param x: input, [batch_size, length, rows, in_channels]
            :param w: filter weights, [filter_width, rows, in_channels, out_channels]
            :param b: bias, unstacked along axis 1 (one bias vector per row)
            :return: [batch_size, length, rows, out_channels]
            '''
            input_unstack = tf.unstack(x, axis=2)
            w_unstack = tf.unstack(w, axis=1)
            b_unstack = tf.unstack(b, axis=1)
            convs = []
            with tf.name_scope("per_dim_conv"):
                for i in range(len(input_unstack)):
                    # "SAME" padding keeps the length: [batch_size, length, out_channels]
                    conv = tf.nn.relu(tf.nn.conv1d(input_unstack[i], w_unstack[i], stride=1, padding="SAME") + b_unstack[i])
                    convs.append(conv)
                # [batch_size, length, rows, out_channels]
                conv = tf.stack(convs, axis=2)
            return conv

        def fold_k_max_pooling(self, x, k):
            input_unstack = tf.unstack(x, axis=2)
            out = []
            with tf.name_scope("fold_k_max_pooling"):
                for i in range(0, len(input_unstack), 2):
                    # Folding: sum two adjacent rows -> [batch_size, length, channels]
                    fold = tf.add(input_unstack[i], input_unstack[i+1])
                    conv = tf.transpose(fold, perm=[0, 2, 1])
                    # k largest values along the length axis -> [batch_size, channels, k]
                    values = tf.nn.top_k(conv, k, sorted=False).values
                    values = tf.transpose(values, perm=[0, 2, 1])
                    out.append(values)
                # [batch_size, k, rows / 2, channels]
                fold = tf.stack(out, axis=2)
            return fold

        def full_connect_layer(self, x, w, b, wo, dropout_keep_prob):
            with tf.name_scope("full_connect_layer"):
                h = tf.nn.tanh(tf.matmul(x, w) + b)
                h = tf.nn.dropout(h, dropout_keep_prob)
                o = tf.matmul(h, wo)
            return o

        def DCNN(self, sent, W1, W2, b1, b2, k1, top_k, Wh, bh, Wo, dropout_keep_prob):
            conv1 = self.per_dim_conv_layer(sent, W1, b1)
            conv1 = self.fold_k_max_pooling(conv1, k1)
            conv2 = self.per_dim_conv_layer(conv1, W2, b2)
            fold = self.fold_k_max_pooling(conv2, top_k)
            # Two folds leave embed_size / 4 rows; equals top_k*100*14/4 for the settings used here
            fold_flatten = tf.reshape(fold, [-1, top_k * self.embed_size * self.num_filters[1] // 4])
            print(fold_flatten.get_shape())
            out = self.full_connect_layer(fold_flatten, Wh, bh, Wo, dropout_keep_prob)
            return out

The main thing worth explaining here is the top_k() function. It returns the k largest values, values, and their corresponding index positions, indices. When the input is 1-D it simply returns the k largest values; when the input is a higher-dimensional tensor it returns the k largest values along the last dimension for every slice. For example, if the input is a tensor of shape [100,200,300,400] and k is 10, the result is a tensor of shape [100,200,300,10]. You can refer to this article and debug it yourself to get a better feel for it, or look up the API on the official site.
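The shape behaviour described above can be imitated on nested Python lists. This is only a toy stand-in for illustration, not TensorFlow code: `top_k_last_dim` and `shape_of` are hypothetical helpers, and the values are returned in descending order, which corresponds to the default sorted=True.

```python
def top_k_last_dim(tensor, k):
    """Keep the k largest values (descending) of every innermost list."""
    if tensor and isinstance(tensor[0], list):
        return [top_k_last_dim(sub, k) for sub in tensor]
    return sorted(tensor, reverse=True)[:k]


def shape_of(tensor):
    """Shape of a regular nested list."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return dims


x = [
    [[1, 5, 3, 2], [9, 0, 4, 7], [2, 2, 8, 6]],
    [[4, 1, 0, 3], [6, 7, 5, 5], [0, 9, 1, 2]],
]
values = top_k_last_dim(x, 2)
print(shape_of(x), "->", shape_of(values))
print(values[0])
```

Only the last dimension shrinks (from 4 to k = 2 here); all leading dimensions are left untouched, which is why the model transposes the length axis to the last position before calling top_k.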

    tf.nn.top_k(input, k=1, sorted=True, name=None)

    Args:
      input: 1-D or higher Tensor with last dimension at least k.
      k: 0-D int32 Tensor. Number of top elements to look for along the last dimension (along each row for matrices).
      sorted: If true the resulting k elements will be sorted by the values in descending order.
      name: Optional name for the operation.

    Returns:
      values: The k largest elements along each last dimensional slice.
      indices: The indices of values within the last dimension of input.

Model training

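With the model built, the next job is to train it. This code lives in train.py. It is the usual routine: first load and convert the dataset, then define the input placeholders and the weights, biases and other parameters the network needs. After that, instantiate the DCNN class and call its DCNN method to build the model. Next, define the metrics to track, such as cost, predict and acc, and then it is time for sess.run. As before, a bunch of summaries are added so that the training process can be followed in TensorBoard. The code is as follows: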

    #coding=utf8
    import os
    import time

    import numpy as np
    import tensorflow as tf

    import dataUtils
    from model import DCNN

    embed_dim = 100
    ws = [7, 5]
    top_k = 4
    k1 = 19
    num_filters = [6, 14]
    dev = 300
    batch_size = 50
    n_epochs = 30
    num_hidden = 100
    sentence_length = 37
    num_class = 6
    lr = 0.01
    evaluate_every = 100
    checkpoint_every = 100
    num_checkpoints = 5

    # Load data
    print("Loading data...")
    x_, y_, vocabulary, vocabulary_inv, test_size = dataUtils.load_data()
    # x_: np.array of length 5952 (5452 training + 500 test samples); every sentence is a list padded to length 37 (the padding index is 0)
    # y_: np.array of length 5952; every entry is a one-hot vector of length 6 encoding the class
    # vocabulary: dict of size 8789, i.e. the corpus contains 8789 distinct words; the key is the word, the value is its index
    # vocabulary_inv: list of length 8789, ordered by word frequency: ,\\?,the,what,is,of,in,a....
    # test_size: 500, the size of the test set

    # Randomly shuffle data
    x, x_test = x_[:-test_size], x_[-test_size:]
    y, y_test = y_[:-test_size], y_[-test_size:]
    shuffle_indices = np.random.permutation(np.arange(len(y)))
    x_shuffled = x[shuffle_indices]
    y_shuffled = y[shuffle_indices]
    x_train, x_dev = x_shuffled[:-dev], x_shuffled[-dev:]
    y_train, y_dev = y_shuffled[:-dev], y_shuffled[-dev:]
    print("Train/Dev/Test split: {:d}/{:d}/{:d}".format(len(y_train), len(y_dev), len(y_test)))
    #--------------------------------------------------------------------------------------#

    def init_weights(shape, name):
        return tf.Variable(tf.truncated_normal(shape, stddev=0.01), name=name)

    sent = tf.placeholder(tf.int64, [None, sentence_length])
    y = tf.placeholder(tf.float64, [None, num_class])
    dropout_keep_prob = tf.placeholder(tf.float32, name="dropout")

    with tf.name_scope("embedding_layer"):
        W = tf.Variable(tf.random_uniform([len(vocabulary), embed_dim], -1.0, 1.0), name="embed_W")
        sent_embed = tf.nn.embedding_lookup(W, sent)
        #input_x = tf.reshape(sent_embed, [batch_size, -1, embed_dim, 1])
        input_x = tf.expand_dims(sent_embed, -1)
        #[batch_size, sentence_length, embed_dim, 1]

    # The variable name must be passed as a keyword: the second positional
    # argument of tf.Variable is `trainable`, not `name`.
    W1 = init_weights([ws[0], embed_dim, 1, num_filters[0]], "W1")
    b1 = tf.Variable(tf.constant(0.1, shape=[num_filters[0], embed_dim]), name="b1")
    # Integer division keeps the shapes integral; after the first fold only
    # embed_dim // 2 rows reach the second convolution layer.
    W2 = init_weights([ws[1], embed_dim // 2, num_filters[0], num_filters[1]], "W2")
    b2 = tf.Variable(tf.constant(0.1, shape=[num_filters[1], embed_dim // 2]), name="b2")
    Wh = init_weights([top_k * embed_dim * num_filters[1] // 4, num_hidden], "Wh")
    bh = tf.Variable(tf.constant(0.1, shape=[num_hidden]), name="bh")
    Wo = init_weights([num_hidden, num_class], "Wo")

    model = DCNN(batch_size, sentence_length, num_filters, embed_dim, top_k, k1)
    out = model.DCNN(input_x, W1, W2, b1, b2, k1, top_k, Wh, bh, Wo, dropout_keep_prob)

    with tf.name_scope("cost"):
        cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=out, labels=y))
    # train_step = tf.train.AdamOptimizer(lr).minimize(cost)

    predict_op = tf.argmax(out, axis=1, name="predictions")
    with tf.name_scope("accuracy"):
        acc = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(y, 1), tf.argmax(out, 1)), tf.float32))
    #-------------------------------------------------------------------------------------------#

    print('Started training')
    with tf.Session() as sess:
        #init = tf.global_variables_initializer().run()
        global_step = tf.Variable(0, name="global_step", trainable=False)
        optimizer = tf.train.AdamOptimizer(1e-3)
        grads_and_vars = optimizer.compute_gradients(cost)
        train_op = optimizer.apply_gradients(grads_and_vars, global_step=global_step)

        # Keep track of gradient values and sparsity
        grad_summaries = []
        for g, v in grads_and_vars:
            if g is not None:
                grad_hist_summary = tf.summary.histogram("{}/grad/hist".format(v.name), g)
                sparsity_summary = tf.summary.scalar("{}/grad/sparsity".format(v.name), tf.nn.zero_fraction(g))
                grad_summaries.append(grad_hist_summary)
                grad_summaries.append(sparsity_summary)
        grad_summaries_merged = tf.summary.merge(grad_summaries)

        # Output directory for models and summaries
        timestamp = str(int(time.time()))
        out_dir = os.path.abspath(os.path.join(os.path.curdir, "runs", timestamp))
        print("Writing to {}\n".format(out_dir))

        # Summaries for loss and accuracy
        loss_summary = tf.summary.scalar("loss", cost)
        acc_summary = tf.summary.scalar("accuracy", acc)

        # Train Summaries
        train_summary_op = tf.summary.merge([loss_summary, acc_summary, grad_summaries_merged])
        train_summary_dir = os.path.join(out_dir, "summaries", "train")
        train_summary_writer = tf.summary.FileWriter(train_summary_dir, sess.graph)

        # Dev summaries
        dev_summary_op = tf.summary.merge([loss_summary, acc_summary])
        dev_summary_dir = os.path.join(out_dir, "summaries", "dev")
        dev_summary_writer = tf.summary.FileWriter(dev_summary_dir, sess.graph)

        # Checkpoint directory. Tensorflow assumes this directory already exists so we need to create it
        checkpoint_dir = os.path.abspath(os.path.join(out_dir, "checkpoints"))
        checkpoint_prefix = os.path.join(checkpoint_dir, "model")
        if not os.path.exists(checkpoint_dir):
            os.makedirs(checkpoint_dir)
        saver = tf.train.Saver(tf.global_variables(), max_to_keep=num_checkpoints)

        # Initialize all variables
        sess.run(tf.global_variables_initializer())

        def train_step(x_batch, y_batch):
            """
            Runs one training step with dropout enabled
            """
            feed_dict = {
                sent: x_batch,
                y: y_batch,
                dropout_keep_prob: 0.5
            }
            _, step, summaries, loss, accuracy = sess.run(
                [train_op, global_step, train_summary_op, cost, acc],
                feed_dict)
            print("TRAIN step {}, loss {:g}, acc {:g}".format(step, loss, accuracy))
            train_summary_writer.add_summary(summaries, step)

        def dev_step(x_batch, y_batch, writer=None):
            """
            Evaluates model on a dev set
            """
            feed_dict = {
                sent: x_batch,
                y: y_batch,
                dropout_keep_prob: 1.0
            }
            step, summaries, loss, accuracy = sess.run(
                [global_step, dev_summary_op, cost, acc],
                feed_dict)
            print("VALID step {}, loss {:g}, acc {:g}".format(step, loss, accuracy))
            if writer:
                writer.add_summary(summaries, step)
            return accuracy, loss

        # list(...) so that the pairs can be indexed and re-shuffled under Python 3 as well
        batches = dataUtils.batch_iter(list(zip(x_train, y_train)), batch_size, n_epochs)

        # Training loop. For each batch...
        max_acc = 0
        best_at_step = 0
        for batch in batches:
            x_batch, y_batch = zip(*batch)
            train_step(x_batch, y_batch)
            current_step = tf.train.global_step(sess, global_step)
            if current_step % evaluate_every == 0:
                print("\nEvaluation:")
                acc_dev, _ = dev_step(x_dev, y_dev, writer=dev_summary_writer)
                # Save a checkpoint only when the dev accuracy does not get worse
                if acc_dev >= max_acc:
                    max_acc = acc_dev
                    best_at_step = current_step
                    path = saver.save(sess, checkpoint_prefix, global_step=current_step)
                print("")
            if current_step % checkpoint_every == 0:
                print('Best of valid = {}, at step {}'.format(max_acc, best_at_step))

        # Restore the best checkpoint and evaluate it on the test set
        saver.restore(sess, checkpoint_prefix + '-' + str(best_at_step))
        print('Finish training. On test set:')
        test_acc, test_loss = dev_step(x_test, y_test, writer=None)
        print(test_acc, test_loss)

Training results

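Looking at the training results gave me a very concrete feel for what overfitting means. I tuned a few parameters, but the model still overfits and the fixes had little visible effect. I tried both the learning rate and dropout, and it still overfits. Compared with the paper I reproduced last time, though, the result is already much better; after all, the accuracy on the training set reaches 100% within minutes.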

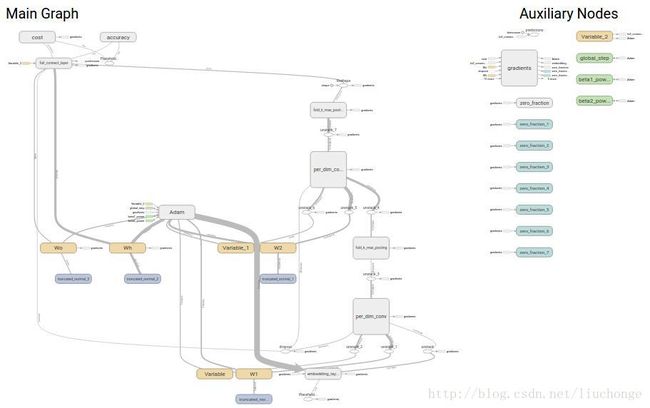

Below are a few screenshots taken from TensorBoard: