【学习】HW5

机器学习/深度学习

- 一、HW5

-

-

- 任务

- 训练数据集

- 评价指标——BLEU

- 工作流程

- 训练技巧

- Baseline Guide

- report

- 代码

-

- 数据集迭代器

- 编码器

- attention

- 解码器

- Seq2Seq

- 模型初始化

- 优化

- 优化器:Adam+lr scheduling

- 验证和推断

- 模型补充

-

一、HW5

任务

在这个家庭作业中,我们将把英语翻译成繁体中文,比如

●Thank you so much, Chris.-> 非常謝謝你,克里斯.

由于句子在不同的语言中长度不同,因此将seq2seq框架应用于此任务。

训练数据集

配对数据Paired data

○TED2020: TED对话与由全球志愿者社区翻译成超过100多种语言的文本

○我们将使用(英文,中文)对齐对

单语数据Monolingual data

○More TED对话(只有中文)

评价指标——BLEU

●修正n-gram精度(n = 1~4)

●简洁性惩罚Brevity penalty:惩罚简短的假设

c是假设长度,r是参考长度

●BLEU socre是n-gram精度的几何平均值,由简洁惩罚影响

工作流程

工作流程:

1.预处理

a. 下载原始数据 b. clean and normalize c. 删除不好的数据(太长/太短) d. tokenization

2.训练

a. 初始化一个模型 b. 使用训练数据训练

3.测试

a. 生成测试数据的转换 b. 评估产品的性能

训练技巧

●标记化数据与子字单位Tokenize data with sub-word units

●标签平滑正则化Label smoothing regularization

●学习率调度Learning rate scheduling

●反翻译Back-translation

(1)Tokenize

Tokenize data with subword units

○ Reduce the vocabulary size

○ Alleviate the open vocabulary problem

○ example

■ ▁put ▁your s el ve s ▁in ▁my ▁po s ition ▁.

■ Put yourselves in my position.

(2)标签平滑Label smoothing

●标签平滑正则化

○在计算损失时,保留一些错误标签的概率

○避免过拟合

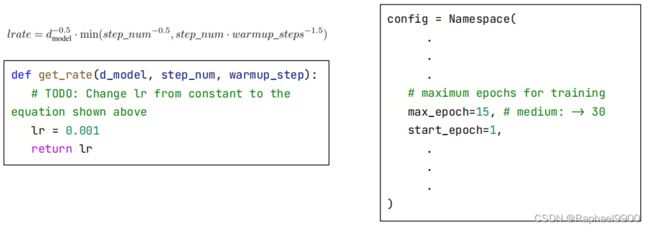

>(3)Leaning rate scheduling>学习速率调度

○将第一个warmup_steps训练步骤的学习速率线性增加,然后按步数的平方反根成比例降低。

○早期transformer稳定训练

(4)反向翻译

使用单语数据 monolingual data创建合成翻译数据

1。在相反方向训练translation系统

2。在目标侧收集单语数据,并应用machine traslation

3。使用翻译的和原始的单语数据作为额外的并行数据来训练更强的翻译系统(strong baseline)

Baseline Guide

● Simple Baseline: 训练一个简单的 RNN seq2seq模型

助教的示例代码运行就是simple baseline

● Medium Baseline: 增加learning rate scheduler和训练更久

修改get_rate函数+epoch改为30:

lr = (d_model**(-0.5)) * min(step_num**(-0.5), step_num*(warmup_step**(-1.5)))

max_epoch=30

● Strong Baseline:切换到transformer和调整超参数

右下角是参考论文。

#修改下面的savedir

config = Namespace(

datadir = "./DATA/data-bin/ted2020",

#savedir = "./checkpoints/rnn",

savedir = "./checkpoints/transformer",

#启用transoformer架构

#encoder = RNNEncoder(args, src_dict, encoder_embed_tokens)

#decoder = RNNDecoder(args, tgt_dict, decoder_embed_tokens)

encoder = TransformerEncoder(args, src_dict, encoder_embed_tokens

decoder = TransformerDecoder(args, tgt_dict, decoder_embed_tokens)

#修改模型参数

arch_args = Namespace(

encoder_embed_dim=256,

encoder_ffn_embed_dim=1024,

encoder_layers=4,

decoder_embed_dim=256,

decoder_ffn_embed_dim=1024,

decoder_layers=4,

share_decoder_input_output_embed=True,

dropout=0.15,

)

#启用这个函数

def add_transformer_args(args):

args.encoder_attention_heads=4

args.encoder_normalize_before=True

args.decoder_attention_heads=4

args.decoder_normalize_before=True

args.activation_fn="relu"

args.max_source_positions=1024

args.max_target_positions=1024

from fairseq.models.transformer import base_architecture

base_architecture(arch_args)

add_transformer_args(arch_args)

● Boss Baseline:应用反向翻译

1.通过转换语言来训练一个逆向模型,从输入是英文输出是中文改为输入是中文输出是英文。

2、用反向模型翻译单语数据,获得合成数据

a。在示例代码中的TODOs。

b。所有的TODO都可以通过使用来自早期cell的命令来完成。

3、用新的数据a训练一个更强的前向模型。

a。如果操作正确,那么新数据上的~30个epochs应该会通过Boss Baseline

report

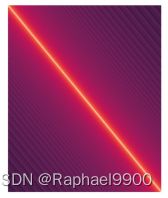

●问题1 Visualize Positional Embedding

○观察不同位置嵌入对之间的相似性,并简要解释结果。

给定一个(N x D)位置嵌入查找表,您的目标是得到一个(N x N)通过计算表中不同嵌入对之间的相似度得到“相似度矩阵”。您需要观察相似度矩阵,并简要地解释结果。在这个问题中,我们主要关注解码器decoder的位置嵌入。

在没有编码位置信息的情况下,我们期望紧密位置的嵌入之间的相似性更强。

用余弦相似度来度量两个向量之间的相似性。有一个余弦相似度的 pytorch实现。

pos_emb = model.decoder.embed_positions.weights.cpu().detach()

torch.Size( [1026, 256] )

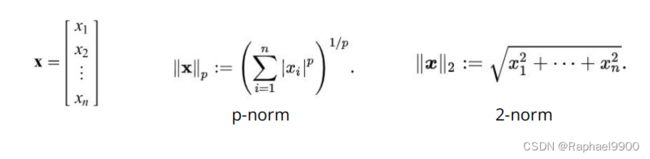

●问题2

○剪辑 gradient norm,并观察梯度范数在不同步骤中的变化。圈出两个有梯度爆炸gradient explosion的地方。

1。设置一个最大范数值max_norm

2。收集每个参数的梯度作为一个向量x。计算向量的p-norm为Lnorm

3。如果是Lnorm <= max_norm,则什么都不做。否则,计算比例因子scale_factor= max_norm / Lnorm,并将每个梯度乘以比例因子。

步骤1:应用剪辑梯度规范,并设置max_norm = 1.0。

步骤2:绘制一个“gradient norm v.s step”的曲线图。

步骤3:圈出两个有梯度爆炸的地方(其中clip_glad_norm函数生效)

代码

'''

作业描述

-英汉(繁体)翻译

-输入:一个英语句子(例如tom is a student)

-输出:中文翻译(例如湯姆 是 個 學生。)

-TODO

-训练一个简单的RNN seq2seq以实现高效翻译

-切换到transformer model 以提高性能

-应用Back-translation 以进一步提高性能

'''

import sys

import pdb

import pprint

import logging

import os

import random

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.utils import data

import numpy as np

import tqdm.auto as tqdm

from pathlib import Path

from argparse import Namespace

from fairseq import utils

import matplotlib.pyplot as plt

#固定随机种子

seed = 73

random.seed(seed)

torch.manual_seed(seed)

if torch.cuda.is_available():

torch.cuda.manual_seed(seed)

torch.cuda.manual_seed_all(seed)

np.random.seed(seed)

torch.backends.cudnn.benchmark = False

torch.backends.cudnn.deterministic = True

#数据集

'''

双语平行语料库2020年:

原始:398066(句子)

已处理:393980(句)

测试数据:

大小:4000(句子)

中文翻译未公开。提供的(.zh)文件是psuedo翻译,每行都是一个“。”

'''

#数据集下载

#数据集有两个压缩包:ted2020.tgz(包含raw.en, raw.zh两个文件),test.tgz(包含test.en, test.zh两个文件)。

data_dir = './DATA/rawdata' #解压后的文件全部放到一层目录中,目录位置是:"DATA/rawdata/ted2020"

dataset_name = 'ted2020'

urls = (

"https://github.com/yuhsinchan/ML2022-HW5Dataset/releases/download/v1.0.2/ted2020.tgz",

"https://github.com/yuhsinchan/ML2022-HW5Dataset/releases/download/v1.0.2/test.tgz",

)

file_names = (

'ted2020.tgz', # train & dev

'test.tgz', # test

)

prefix = Path(data_dir).absolute() / dataset_name

prefix.mkdir(parents=True, exist_ok=True)

#解压

for u, f in zip(urls, file_names):

path = prefix/f

if not path.exists():

!wget {u} -O {path}

if path.suffix == ".tgz":

!tar -xvf {path} -C {prefix}

elif path.suffix == ".zip":

!unzip -o {path} -d {prefix}

#将raw.en,raw.zh,test.en,test.zh分别改名称为train_dev.raw.en, train_dev.raw.zh, test.raw.en, test.raw.zh。

!mv {prefix/'raw.en'} {prefix/'train_dev.raw.en'}

!mv {prefix/'raw.zh'} {prefix/'train_dev.raw.zh'}

!mv {prefix/'test/test.en'} {prefix/'test.raw.en'}

!mv {prefix/'test/test.zh'} {prefix/'test.raw.zh'}

!rm -rf {prefix/'test'}

#语言

src_lang = 'en'

tgt_lang = 'zh'

data_prefix = f'{prefix}/train_dev.raw'

test_prefix = f'{prefix}/test.raw'

!head {data_prefix+'.'+src_lang} -n 5

!head {data_prefix+'.'+tgt_lang} -n 5

Thank you so much, Chris.

And it’s truly a great honor to have the opportunity to come to this stage twice; I’m extremely grateful.

I have been blown away by this conference, and I want to thank all of you for the many nice comments about what I had to say the other night.

And I say that sincerely, partly because I need that.

Put yourselves in my position.

非常謝謝你,克里斯。能有這個機會第二度踏上這個演講台

真是一大榮幸。我非常感激。

這個研討會給我留下了極為深刻的印象,我想感謝大家 對我之前演講的好評。

我是由衷的想這麼說,有部份原因是因為 —— 我真的有需要!

請你們設身處地為我想一想!

#预处理文件

import re

def strQ2B(ustring):

"""Full width -> half width"""

# reference:https://ithelp.ithome.com.tw/articles/10233122

ss = []

for s in ustring:

rstring = ""

for uchar in s:

inside_code = ord(uchar)

if inside_code == 12288: # 全宽空间:直接转换

inside_code = 32

elif (inside_code >= 65281 and inside_code <= 65374): # 全宽字符(空格除外)转换

inside_code -= 65248

rstring += chr(inside_code)

ss.append(rstring)

return ''.join(ss) #将字符串ss里面的内容以‘’分隔

#去掉或者替换一些特殊字符

def clean_s(s, lang):

if lang == 'en':

s = re.sub(r"\([^()]*\)", "", s) # remove ([text])

s = s.replace('-', '') # remove '-'

s = re.sub('([.,;!?()\"])', r' \1 ', s) # 保留标点符号

elif lang == 'zh':

s = strQ2B(s) # Q2B

s = re.sub(r"\([^()]*\)", "", s) # remove ([text])

s = s.replace(' ', '')

s = s.replace('—', '')

s = s.replace('“', '"')

s = s.replace('”', '"')

s = s.replace('_', '')

s = re.sub('([。,;!?()\"~「」])', r' \1 ', s) # keep punctuation

s = ' '.join(s.strip().split())

return s

def len_s(s, lang):

if lang == 'zh':

return len(s)

return len(s.split())

#clean后的文件名称是,train_dev.raw.clean.en, train_dev.raw.clean.zh, test.raw.clean.en, test.raw.clean.zh。

def clean_corpus(prefix, l1, l2, ratio=9, max_len=1000, min_len=1):

if Path(f'{prefix}.clean.{l1}').exists() and Path(f'{prefix}.clean.{l2}').exists():

print(f'{prefix}.clean.{l1} & {l2} exists. skipping clean.')

return

with open(f'{prefix}.{l1}', 'r') as l1_in_f:

with open(f'{prefix}.{l2}', 'r') as l2_in_f:

with open(f'{prefix}.clean.{l1}', 'w') as l1_out_f:

with open(f'{prefix}.clean.{l2}', 'w') as l2_out_f:

for s1 in l1_in_f:

s1 = s1.strip()

s2 = l2_in_f.readline().strip()

s1 = clean_s(s1, l1)

s2 = clean_s(s2, l2)

s1_len = len_s(s1, l1)

s2_len = len_s(s2, l2)

if min_len > 0: # remove short sentence

if s1_len < min_len or s2_len < min_len:

continue

if max_len > 0: # remove long sentence

if s1_len > max_len or s2_len > max_len:

continue

if ratio > 0: # remove by ratio of length

if s1_len/s2_len > ratio or s2_len/s1_len > ratio:

continue

print(s1, file=l1_out_f)

print(s2, file=l2_out_f)

clean_corpus(data_prefix, src_lang, tgt_lang)

clean_corpus(test_prefix, src_lang, tgt_lang, ratio=-1, min_len=-1, max_len=-1)

!head {data_prefix+'.clean.'+src_lang} -n 5

!head {data_prefix+'.clean.'+tgt_lang} -n 5

Thank you so much , Chris .

And it’s truly a great honor to have the opportunity to come to this stage twice ; I’m extremely grateful .

I have been blown away by this conference , and I want to thank all of you for the many nice comments about what I had to say the other night .

And I say that sincerely , partly because I need that .

Put yourselves in my position .

非常謝謝你 , 克里斯 。 能有這個機會第二度踏上這個演講台

真是一大榮幸 。 我非常感激 。

這個研討會給我留下了極為深刻的印象 , 我想感謝大家對我之前演講的好評 。

我是由衷的想這麼說 , 有部份原因是因為我真的有需要 !

請你們設身處地為我想一想 !

#分成train/valid

#划分训练集和验证集,train_dev.raw.clean.en和train_dev.clean.zh被分成train.clean.en, valid.clean.en和train.clean.zh, train.clean.zh。

valid_ratio = 0.01 # 3000~4000就足够了

train_ratio = 1 - valid_ratio

if (prefix/f'train.clean.{src_lang}').exists() \

and (prefix/f'train.clean.{tgt_lang}').exists() \

and (prefix/f'valid.clean.{src_lang}').exists() \

and (prefix/f'valid.clean.{tgt_lang}').exists():

print(f'train/valid splits exists. skipping split.')

else:

line_num = sum(1 for line in open(f'{data_prefix}.clean.{src_lang}'))

labels = list(range(line_num))

random.shuffle(labels)

for lang in [src_lang, tgt_lang]:

train_f = open(os.path.join(data_dir, dataset_name, f'train.clean.{lang}'), 'w')

valid_f = open(os.path.join(data_dir, dataset_name, f'valid.clean.{lang}'), 'w')

count = 0

for line in open(f'{data_prefix}.clean.{lang}', 'r'):

if labels[count]/line_num < train_ratio:

train_f.write(line)

else:

valid_f.write(line)

count += 1

train_f.close()

valid_f.close()

'''

Subword Units

词汇表外(OOV)一直是机器翻译中的一个主要问题。这可以通过使用子字单元来缓解。

我们将使用句子包,选择“unigram”或“字节对编码(BPE)”算法

分词

使用sentencepiece中的spm对训练集和验证集进行分词建模,模型名称是spm8000.model,同时产生词汇库spm8000.vocab。

使用模型对训练集、验证集、以及测试集进行分词处理,得到文件train.en, train.zh, valid.en, valid.zh, test.en, test.zh。

'''

import sentencepiece as spm

vocab_size = 8000

if (prefix/f'spm{vocab_size}.model').exists():

print(f'{prefix}/spm{vocab_size}.model exists. skipping spm_train.')

else:

spm.SentencePieceTrainer.train(

input=','.join([f'{prefix}/train.clean.{src_lang}',

f'{prefix}/valid.clean.{src_lang}',

f'{prefix}/train.clean.{tgt_lang}',

f'{prefix}/valid.clean.{tgt_lang}']),

model_prefix=prefix/f'spm{vocab_size}',

vocab_size=vocab_size,

character_coverage=1,

model_type='unigram', # 'bpe' works as well

input_sentence_size=1e6,

shuffle_input_sentence=True,

normalization_rule_name='nmt_nfkc_cf',

)

spm_model = spm.SentencePieceProcessor(model_file=str(prefix/f'spm{vocab_size}.model'))

in_tag = {

'train': 'train.clean',

'valid': 'valid.clean',

'test': 'test.raw.clean',

}

for split in ['train', 'valid', 'test']:

for lang in [src_lang, tgt_lang]:

out_path = prefix/f'{split}.{lang}'

if out_path.exists():

print(f"{out_path} exists. skipping spm_encode.")

else:

with open(prefix/f'{split}.{lang}', 'w') as out_f:

with open(prefix/f'{in_tag[split]}.{lang}', 'r') as in_f:

for line in in_f:

line = line.strip()#用于移除字符串头尾指定的字符(默认为空格或换行符)或字符序列。

tok = spm_model.encode(line, out_type=str)

print(' '.join(tok), file=out_f)#token是" ",加入句子

!head {data_dir+'/'+dataset_name+'/train.'+src_lang} -n 5 #显示前五行

!head {data_dir+'/'+dataset_name+'/train.'+tgt_lang} -n 5

▁thank ▁you ▁so ▁much ▁, ▁chris ▁.

▁and ▁it ’ s ▁ t ru ly ▁a ▁great ▁ho n or ▁to ▁have ▁the ▁ op port un ity ▁to ▁come ▁to ▁this ▁st age ▁ t wi ce ▁; ▁i ’ m ▁ex t re me ly ▁gr ate ful ▁.

▁i ▁have ▁been ▁ bl ow n ▁away ▁by ▁this ▁con fer ence ▁, ▁and ▁i ▁want ▁to ▁thank ▁all ▁of ▁you ▁for ▁the ▁many ▁ ni ce ▁ com ment s ▁about ▁what ▁i ▁had ▁to ▁say ▁the ▁other ▁night ▁.

▁and ▁i ▁say ▁that ▁since re ly ▁, ▁part ly ▁because ▁i ▁need ▁that ▁.

▁put ▁your s el ve s ▁in ▁my ▁po s ition ▁.

▁ 非常 謝 謝 你 ▁, ▁ 克 里 斯 ▁。 ▁ 能 有 這個 機會 第二 度 踏 上 這個 演講 台

▁ 真 是 一 大 榮 幸 ▁。 ▁我 非常 感 激 ▁。

▁這個 研 討 會 給我 留 下 了 極 為 深 刻 的 印 象 ▁, ▁我想 感 謝 大家 對我 之前 演講 的 好 評 ▁。

▁我 是由 衷 的 想 這麼 說 ▁, ▁有 部份 原因 是因為 我 真的 有 需要 ▁!

▁ 請 你們 設 身 處 地 為 我想 一 想 ▁!

#使用fairseq将数据二进制化

#文件二进制化,该过程使用fairseq库,这个库对于序列数据的处理很方便。运行后最终生成了一系列的文件,文件目录是"DATA/data_bin/ted2020",这下面有18个文件,其中的一些二进制文件才是我们最终想要的训练数据。

binpath = Path('./DATA/data-bin', dataset_name)

if binpath.exists():

print(binpath, "exists, will not overwrite!")

else:

!python -m fairseq_cli.preprocess \

--source-lang {src_lang}\

--target-lang {tgt_lang}\

--trainpref {prefix/'train'}\

--validpref {prefix/'valid'}\

--testpref {prefix/'test'}\

--destdir {binpath}\

--joined-dictionary\

--workers 2

2022-12-13 13:43:29 | INFO | fairseq_cli.preprocess | Namespace(align_suffix=None, alignfile=None, all_gather_list_size=16384, azureml_logging=False, bf16=False, bpe=None, cpu=False, criterion=‘cross_entropy’, dataset_impl=‘mmap’, destdir=‘DATA/data-bin/ted2020’, empty_cache_freq=0, fp16=False, fp16_init_scale=128, fp16_no_flatten_grads=False, fp16_scale_tolerance=0.0, fp16_scale_window=None, joined_dictionary=True, log_format=None, log_interval=100, lr_scheduler=‘fixed’, memory_efficient_bf16=False, memory_efficient_fp16=False, min_loss_scale=0.0001, model_parallel_size=1, no_progress_bar=False, nwordssrc=-1, nwordstgt=-1, only_source=False, optimizer=None, padding_factor=8, profile=False, quantization_config_path=None, reset_logging=False, scoring=‘bleu’, seed=1, source_lang=‘en’, srcdict=None, suppress_crashes=False, target_lang=‘zh’, task=‘translation’, tensorboard_logdir=None, testpref=‘/content/DATA/rawdata/ted2020/test’, tgtdict=None, threshold_loss_scale=None, thresholdsrc=0, thresholdtgt=0, tokenizer=None, tpu=False, trainpref=‘/content/DATA/rawdata/ted2020/train’, user_dir=None, validpref=‘/content/DATA/rawdata/ted2020/valid’, wandb_project=None, workers=2)

2022-12-13 13:44:19 | INFO | fairseq_cli.preprocess | [en] Dictionary: 8000 types

2022-12-13 13:45:19 | INFO | fairseq_cli.preprocess | [en] /content/DATA/rawdata/ted2020/train.en: 390041 sents, 12215608 tokens, 0.0% replaced by

2022-12-13 13:45:19 | INFO | fairseq_cli.preprocess | [en] Dictionary: 8000 types

2022-12-13 13:45:20 | INFO | fairseq_cli.preprocess | [en] /content/DATA/rawdata/ted2020/valid.en: 3939 sents, 122560 tokens, 0.0% replaced by

2022-12-13 13:45:20 | INFO | fairseq_cli.preprocess | [en] Dictionary: 8000 types

2022-12-13 13:45:20 | INFO | fairseq_cli.preprocess | [en] /content/DATA/rawdata/ted2020/test.en: 4000 sents, 122894 tokens, 0.0% replaced by

2022-12-13 13:45:20 | INFO | fairseq_cli.preprocess | [zh] Dictionary: 8000 types

2022-12-13 13:46:13 | INFO | fairseq_cli.preprocess | [zh] /content/DATA/rawdata/ted2020/valid.zh: 3939 sents, 96124 tokens, 0.0052% replaced by

2022-12-13 13:46:13 | INFO | fairseq_cli.preprocess | [zh] Dictionary: 8000 types

2022-12-13 13:46:13 | INFO | fairseq_cli.preprocess | [zh] /content/DATA/rawdata/ted2020/test.zh: 4000 sents, 8000 tokens, 0.0% replaced by

2022-12-13 13:46:13 | INFO | fairseq_cli.preprocess | Wrote preprocessed data to DATA/data-bin/ted2020

#实验配置

config = Namespace(

datadir = "./DATA/data-bin/ted2020",

savedir = "./checkpoints/rnn",

source_lang = "en",

target_lang = "zh",

# 获取和处理数据时的cpu线程。

num_workers=2,

# batch size in terms of tokens. gradient accumulation increases the effective batchsize.

max_tokens=8192,

accum_steps=2,

# the lr s calculated from Noam lr scheduler. you can tune the maximum lr by this factor.

lr_factor=2.,

lr_warmup=4000,

#裁剪渐变规范有助于缓解渐变爆炸

clip_norm=1.0,

# maximum epochs for training

max_epoch=15,

start_epoch=1,

# beam size for beam search

beam=5,

# 生成最大长度ax+b的序列,其中x是源长度

max_len_a=1.2,

max_len_b=10,

# when decoding, post process sentence by removing sentencepiece symbols and jieba tokenization.

post_process = "sentencepiece",

# checkpoints

keep_last_epochs=5,

resume=None, # if resume from checkpoint name (under config.savedir)

# logging

use_wandb=False,

)

#logging package logs ordinary messages,wandb logs the loss, bleu, etc. in the training process

logging.basicConfig(

format="%(asctime)s | %(levelname)s | %(name)s | %(message)s",

datefmt="%Y-%m-%d %H:%M:%S",

level="INFO", # "DEBUG" "WARNING" "ERROR"

stream=sys.stdout,

)

proj = "hw5.seq2seq"

logger = logging.getLogger(proj)

if config.use_wandb:

import wandb

wandb.init(project=proj, name=Path(config.savedir).stem, config=config)

#CUDA Environments

cuda_env = utils.CudaEnvironment()

utils.CudaEnvironment.pretty_print_cuda_env_list([cuda_env])

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

#Dataloading

'''

我们从fairseq借用TranslationTask

用于加载上面创建的二进制数据

实现良好的数据迭代器(数据加载器)

built-in task.source_dictionary and task.target_dictionary 也很方便

实现良好的beach search decoder

''

from fairseq.tasks.translation import TranslationConfig, TranslationTask

## setup task

task_cfg = TranslationConfig(

data=config.datadir,

source_lang=config.source_lang,

target_lang=config.target_lang,

train_subset="train",

required_seq_len_multiple=8,

dataset_impl="mmap",

upsample_primary=1,

)

task = TranslationTask.setup_task(task_cfg)

logger.info("loading data for epoch 1")

task.load_dataset(split="train", epoch=1, combine=True) # 如果有反翻译数据,请合并。

task.load_dataset(split="valid", epoch=1)

sample = task.dataset("valid")[1]

pprint.pprint(sample)

pprint.pprint(

"Source: " + \

task.source_dictionary.string(

sample['source'],

config.post_process,

)

)

pprint.pprint(

"Target: " + \

task.target_dictionary.string(

sample['target'],

config.post_process,

)

)

{‘id’: 1,

‘source’: tensor([ 18, 14, 6, 2234, 60, 19, 80, 5, 256, 16, 405, 1407,

1706, 7, 2]),

‘target’: tensor([ 140, 690, 28, 270, 45, 151, 1142, 660, 606, 369, 3114, 2434,

1434, 192, 2])}

“Source: that’s exactly what i do optical mind control .”

‘Target: 這實在就是我所做的–光學操控思想’

数据集迭代器

控制每个batch处理包含不超过N个tokens,从而优化GPU内存效率

为每个epoch梳理训练集

忽略超过最大长度的句子

将batch中的所有句子填充到相同的长度,从而实现GPU的并行计算

添加eos并移动一个token

teacher forcing:为了训练模型以基于前缀预测下一个token,我们将右移的目标序列作为解码器输入。

一般来说,将bos前置到目标就可以完成任务(如下所示)

然而,在fairseq中,这是通过将eos token移动到开头来完成的。根据经验,这也有同样的效果。例如:

#输出目标(target)和解码器输入(prev_output_tokens):

eos = 2

target = 419, 711, 238, 888, 792, 60, 968, 8, 2

prev_output_tokens = 2, 419, 711, 238, 888, 792, 60, 968, 8

def load_data_iterator(task, split, epoch=1, max_tokens=4000, num_workers=1, cached=True):

batch_iterator = task.get_batch_iterator(

dataset=task.dataset(split),

max_tokens=max_tokens,

max_sentences=None,

max_positions=utils.resolve_max_positions(

task.max_positions(),

max_tokens,

),

ignore_invalid_inputs=True,

seed=seed,

num_workers=num_workers,

epoch=epoch,

disable_iterator_cache=not cached,

#将此设置为False以加快速度。但是,如果设置为False,则先将max_tokens更改

#此方法的第一次调用无效。

)

return batch_iterator

demo_epoch_obj = load_data_iterator(task, "valid", epoch=1, max_tokens=20, num_workers=1, cached=False)

demo_iter = demo_epoch_obj.next_epoch_itr(shuffle=True)

sample = next(demo_iter)

sample

WARNING:fairseq.tasks.fairseq_task:2,532 samples have invalid sizes and will be skipped, max_positions=(20, 20), first few sample ids=[29, 135, 2444, 3058, 682, 731, 235, 1558, 3383, 559]

{‘id’: tensor([723]),

‘nsentences’: 1,

‘ntokens’: 18,

‘net_input’: {‘src_tokens’: tensor([[ 1, 1, 1, 1, 1, 18, 26, 82, 8, 480, 15, 651,

1361, 38, 6, 176, 2696, 39, 5, 822, 92, 260, 7, 2]]),

‘src_lengths’: tensor([19]),

‘prev_output_tokens’: tensor([[ 2, 140, 296, 318, 1560, 51, 568, 316, 225, 1952, 254, 78,

151, 2691, 9, 215, 1680, 10, 1, 1, 1, 1, 1, 1]])},

‘target’: tensor([[ 140, 296, 318, 1560, 51, 568, 316, 225, 1952, 254, 78, 151,

2691, 9, 215, 1680, 10, 2, 1, 1, 1, 1, 1, 1]])}

#每个batch都是一个python dict,带有字符串键和Tensor值。内容如下:

batch = {

"id": id, # id for each example

"nsentences": len(samples), # batch size (sentences)

"ntokens": ntokens, # batch size (tokens)

"net_input": {

"src_tokens": src_tokens, # 源语言序列

"src_lengths": src_lengths, # 填充前每个示例的序列长度

"prev_output_tokens": prev_output_tokens, #如上所述,右移目标。

},

"target": target, # target sequence

}

#模型体系结构

#我们再次继承了fairseq的编码器、解码器和模型,因此在测试阶段,我们可以直接利用fair seq的beam search decoder.

from fairseq.models import (

FairseqEncoder,

FairseqIncrementalDecoder,

FairseqEncoderDecoderModel

)

编码器

编码器是RNN或Transformer编码器。以下描述适用于RNN。对于每个输入token,编码器将生成一个输出向量和一个隐藏状态向量,隐藏状态向量将传递到下一步。换句话说,编码器顺序读入输入序列,并在每个时间步输出单个矢量,然后在最后一个时间步最终输出最终隐藏状态或内容矢量。

(1)参数:

encoder_embed_dim:嵌入的维度,这将一个 one-hot vector压缩为固定的维度,从而实现维度缩减。

encoder_ffn_embed_dim:是隐藏状态和输出向量的维度。

encoder_layers:是编码器RNN的层数。

dropout:决定神经元激活的概率设置为0,以防止过度拟合。通常,这在训练中应用,在测试中删除。

dictionary:fairseq提供的字典dictionary。它用于获得填充索引index,进而获得编码器填充掩码mask。

embed_tokens: token嵌入的实例(nn.Embedding)

(2)输入:

src_tokens:表示英语的整数序列,例如1、28、29、205、2

(3)输出:

输出:RNN在每个时间步的输出,可以由 Attention进一步处理

final_hiddens:每个时间步的隐藏状态,将传递给解码器进行解码

encoder_padding_mask:这告诉解码器要忽略哪个位置

class RNNEncoder(FairseqEncoder):

def __init__(self, args, dictionary, embed_tokens):

super().__init__(dictionary)

self.embed_tokens = embed_tokens

self.embed_dim = args.encoder_embed_dim

self.hidden_dim = args.encoder_ffn_embed_dim

self.num_layers = args.encoder_layers

self.dropout_in_module = nn.Dropout(args.dropout)

self.rnn = nn.GRU(

self.embed_dim,

self.hidden_dim,

self.num_layers,

dropout=args.dropout,

batch_first=False,

bidirectional=True

)

self.dropout_out_module = nn.Dropout(args.dropout)

self.padding_idx = dictionary.pad()

def combine_bidir(self, outs, bsz: int):

out = outs.view(self.num_layers, 2, bsz, -1).transpose(1, 2).contiguous()

return out.view(self.num_layers, bsz, -1)

def forward(self, src_tokens, **unused):

bsz, seqlen = src_tokens.size()

# get embeddings

x = self.embed_tokens(src_tokens)

x = self.dropout_in_module(x)

# B x T x C -> T x B x C

x = x.transpose(0, 1)

#直通双向RNN

h0 = x.new_zeros(2 * self.num_layers, bsz, self.hidden_dim)

x, final_hiddens = self.rnn(x, h0)

outputs = self.dropout_out_module(x)

# outputs = [sequence len, batch size, hid dim * directions]

# hidden = [num_layers * directions, batch size , hid dim]

# 由于编码器是双向的,我们需要连接两个方向的隐藏状态

final_hiddens = self.combine_bidir(final_hiddens, bsz)

# hidden = [num_layers x batch x num_directions*hidden]

encoder_padding_mask = src_tokens.eq(self.padding_idx).t()

return tuple(

(

outputs, # seq_len x batch x hidden

final_hiddens, # num_layers x batch x num_directions*hidden

encoder_padding_mask, # seq_len x batch

)

)

def reorder_encoder_out(self, encoder_out, new_order):

#这是fairseq的beam search所使用的。如何以及为什么在这里并不特别重要。

return tuple(

(

encoder_out[0].index_select(1, new_order),

encoder_out[1].index_select(1, new_order),

encoder_out[2].index_select(1, new_order),

)

)

attention

当输入序列较长时,“content vector”单独不能准确地表示整个序列,注意力机制可以为解码器提供更多信息。

根据当前时间步长的Decoder embeddings,将编码器输出与Decoder embeddings匹配以确定相关性,然后将编码器输出乘以相关性加权后相加,作为解码器RNN的输入。

常见的attention实现使用神经网络/点积作为 query (decoder embeddings) 和key (Encoder 输出)之间的相关性,然后是softmax以获得分布,最后通过所述分布对值(编码器输出值)进行加权和。

参数:

input_embed_dim:key的维度,应该是解码器中的向量的维度,以参与其他

source_embed_dim:query的维度,应该是要关注的向量的维度(编码器输出)

output_embed_dim: value的维度,应该是attention后向量的维度,下一层期望的维度

输入:

inputs:是关键,是关注他人的媒介

encoder_outputs:是查询/值,是要关注的向量

encoder_padding_mask:这告诉解码器要忽略哪个位置

输出:

output: attention后的context vector

attention score:注意力分布

class AttentionLayer(nn.Module):

def __init__(self, input_embed_dim, source_embed_dim, output_embed_dim, bias=False):

super().__init__()

self.input_proj = nn.Linear(input_embed_dim, source_embed_dim, bias=bias)

self.output_proj = nn.Linear(

input_embed_dim + source_embed_dim, output_embed_dim, bias=bias

)

def forward(self, inputs, encoder_outputs, encoder_padding_mask):

# inputs: T, B, dim

# encoder_outputs: S x B x dim

# padding mask: S x B

#首先将全部转换为batch

inputs = inputs.transpose(1,0) # B, T, dim

encoder_outputs = encoder_outputs.transpose(1,0) # B, S, dim

encoder_padding_mask = encoder_padding_mask.transpose(1,0) # B, S

# 投影到encoder_outputs的维度

x = self.input_proj(inputs)

# compute attention

# (B, T, dim) x (B, dim, S) = (B, T, S)

attn_scores = torch.bmm(x, encoder_outputs.transpose(1,2))#转置

# 在与填充相对应的位置取消attention

if encoder_padding_mask is not None:

# leveraging broadcast B, S -> (B, 1, S)

encoder_padding_mask = encoder_padding_mask.unsqueeze(1)

attn_scores = (

attn_scores.float()

.masked_fill_(encoder_padding_mask, float("-inf"))#用来mask掉当前时刻后面时刻的序列信息

.type_as(attn_scores)#按照给定的tensor的类型转换类型

) # FP16 support: cast to float and back

#源序列对应维度上的softmax

attn_scores = F.softmax(attn_scores, dim=-1)

# shape (B, T, S) x (B, S, dim) = (B, T, dim) weighted sum

x = torch.bmm(attn_scores, encoder_outputs)

# (B, T, dim)

x = torch.cat((x, inputs), dim=-1)

x = torch.tanh(self.output_proj(x)) # concat + linear + tanh

# restore shape (B, T, dim) -> (T, B, dim)

return x.transpose(1,0), attn_scores

解码器

解码器的隐藏状态将由编码器的最终隐藏状态(the content vector)初始化

同时,解码器将根据当前时间步的输入(先前时间步的输出)更改其隐藏状态,并生成输出

Attention提高了性能

seq2seq步骤是在解码器中实现的,这样以后seq2seq类就可以接受RNN和Transformer,而无需进一步修改。

参数:

decoder_embed_dim:是decoder embeddings的维度

decoder_ffn_embed_dim:是解码器RNN隐藏状态的维度,类似于encoder_ffn_embed_dim

decoder_layers:RNN解码器的层数

share_decoder_input_output_embed:通常,解码器的投影矩阵将与解码器输入嵌入共享权重

字典:fairseq提供的字典

embed_tokens:令牌嵌入的实例(nn.Embedding)

输入:

prev_output_tokens:表示右移目标的整数序列,例如1、28、29、205、2

encoder_out:编码器的输出。

incrementalstate:为了在测试期间加快解码速度,我们将保存每个时间步的隐藏状态。

输出:

outputs:解码器每个时间步的逻辑(softmax之前)输出

extra:未使用

class RNNDecoder(FairseqIncrementalDecoder):

def __init__(self, args, dictionary, embed_tokens):

super().__init__(dictionary)

self.embed_tokens = embed_tokens

assert args.decoder_layers == args.encoder_layers, f"""seq2seq rnn requires that encoder

and decoder have same layers of rnn. got: {args.encoder_layers, args.decoder_layers}"""

assert args.decoder_ffn_embed_dim == args.encoder_ffn_embed_dim*2, f"""seq2seq-rnn requires

that decoder hidden to be 2*encoder hidden dim. got: {args.decoder_ffn_embed_dim, args.encoder_ffn_embed_dim*2}"""

self.embed_dim = args.decoder_embed_dim

self.hidden_dim = args.decoder_ffn_embed_dim

self.num_layers = args.decoder_layers

self.dropout_in_module = nn.Dropout(args.dropout)

self.rnn = nn.GRU(

self.embed_dim,

self.hidden_dim,

self.num_layers,

dropout=args.dropout,

batch_first=False,

bidirectional=False

)

self.attention = AttentionLayer(

self.embed_dim, self.hidden_dim, self.embed_dim, bias=False

)

# self.attention = None

self.dropout_out_module = nn.Dropout(args.dropout)

if self.hidden_dim != self.embed_dim:

self.project_out_dim = nn.Linear(self.hidden_dim, self.embed_dim)

else:

self.project_out_dim = None

if args.share_decoder_input_output_embed:

self.output_projection = nn.Linear(

self.embed_tokens.weight.shape[1],

self.embed_tokens.weight.shape[0],

bias=False,

)

self.output_projection.weight = self.embed_tokens.weight

else:

self.output_projection = nn.Linear(

self.output_embed_dim, len(dictionary), bias=False

)

nn.init.normal_(

self.output_projection.weight, mean=0, std=self.output_embed_dim ** -0.5

)

def forward(self, prev_output_tokens, encoder_out, incremental_state=None, **unused):

# extract the outputs from encoder

encoder_outputs, encoder_hiddens, encoder_padding_mask = encoder_out

# outputs: seq_len x batch x num_directions*hidden

# encoder_hiddens: num_layers x batch x num_directions*encoder_hidden

# padding_mask: seq_len x batch

if incremental_state is not None and len(incremental_state) > 0:

# 如果保留了上一个时间步的信息,我们可以从那里继续,而不是从bos开始

prev_output_tokens = prev_output_tokens[:, -1:]

cache_state = self.get_incremental_state(incremental_state, "cached_state")

prev_hiddens = cache_state["prev_hiddens"]

else:

#增量状态不存在,或者这是训练时间,或者是测试时间的第一个时间步

#准备seq2seq:将encoder_hidden传递给解码器隐藏状态

prev_hiddens = encoder_hiddens

bsz, seqlen = prev_output_tokens.size()

# embed tokens

x = self.embed_tokens(prev_output_tokens)

x = self.dropout_in_module(x)

# B x T x C -> T x B x C

x = x.transpose(0, 1)

# decoder-to-encoder attention

if self.attention is not None:

x, attn = self.attention(x, encoder_outputs, encoder_padding_mask)

# pass thru unidirectional RNN

x, final_hiddens = self.rnn(x, prev_hiddens)

# outputs = [sequence len, batch size, hid dim]

# hidden = [num_layers * directions, batch size , hid dim]

x = self.dropout_out_module(x)

# project to embedding size (if hidden differs from embed size, and share_embedding is True,

# we need to do an extra projection)

if self.project_out_dim != None:

x = self.project_out_dim(x)

# project to vocab size

x = self.output_projection(x)

# T x B x C -> B x T x C

x = x.transpose(1, 0)

#如果是增量,记录当前时间步的隐藏状态,将在下一个时间步中恢复

cache_state = {

"prev_hiddens": final_hiddens,

}

self.set_incremental_state(incremental_state, "cached_state", cache_state)

return x, None

def reorder_incremental_state(

self,

incremental_state,

new_order,

):

# This is used by fairseq's beam search. How and why is not particularly important here.

cache_state = self.get_incremental_state(incremental_state, "cached_state")

prev_hiddens = cache_state["prev_hiddens"]

prev_hiddens = [p.index_select(0, new_order) for p in prev_hiddens]

cache_state = {

"prev_hiddens": torch.stack(prev_hiddens),

}

self.set_incremental_state(incremental_state, "cached_state", cache_state)

return

Seq2Seq

由编码器和解码器组成

接收输入并传递给编码器

将编码器的输出传递给解码器

解码器将根据先前时间步的输出以及编码器输出进行解码

解码完成后,返回解码器输出

class Seq2Seq(FairseqEncoderDecoderModel):

def __init__(self, args, encoder, decoder):

super().__init__(encoder, decoder)

self.args = args

def forward(

self,

src_tokens,

src_lengths,

prev_output_tokens,

return_all_hiddens: bool = True,

):

"""

运行编码器-解码器模型的正向传递。

"""

encoder_out = self.encoder(

src_tokens, src_lengths=src_lengths, return_all_hiddens=return_all_hiddens

)

logits, extra = self.decoder(

prev_output_tokens,

encoder_out=encoder_out,

src_lengths=src_lengths,

return_all_hiddens=return_all_hiddens,

)

return logits, extra

模型初始化

# # HINT: transformer结构

from fairseq.models.transformer import (

TransformerEncoder,

TransformerDecoder,

)

def build_model(args, task):

"""基于超参数构建模型实例 """

src_dict, tgt_dict = task.source_dictionary, task.target_dictionary

# token embeddings

encoder_embed_tokens = nn.Embedding(len(src_dict), args.encoder_embed_dim, src_dict.pad())

decoder_embed_tokens = nn.Embedding(len(tgt_dict), args.decoder_embed_dim, tgt_dict.pad())

# encoder decoder

# HINT: TODO:切换到TransformerEncoder和TransformerDecoder

encoder = RNNEncoder(args, src_dict, encoder_embed_tokens)

decoder = RNNDecoder(args, tgt_dict, decoder_embed_tokens)

#encoder = TransformerEncoder(args, src_dict, encoder_embed_tokens)

#decoder = TransformerDecoder(args, tgt_dict, decoder_embed_tokens)

# sequence to sequence model

model = Seq2Seq(args, encoder, decoder)

# seq2seq模型的初始化很重要,需要额外的处理

def init_params(module):

from fairseq.modules import MultiheadAttention

if isinstance(module, nn.Linear):

module.weight.data.normal_(mean=0.0, std=0.02)

if module.bias is not None:

module.bias.data.zero_()

if isinstance(module, nn.Embedding):

module.weight.data.normal_(mean=0.0, std=0.02)

if module.padding_idx is not None:

module.weight.data[module.padding_idx].zero_()

if isinstance(module, MultiheadAttention):

module.q_proj.weight.data.normal_(mean=0.0, std=0.02)

module.k_proj.weight.data.normal_(mean=0.0, std=0.02)

module.v_proj.weight.data.normal_(mean=0.0, std=0.02)

if isinstance(module, nn.RNNBase):

for name, param in module.named_parameters():

if "weight" in name or "bias" in name:

param.data.uniform_(-0.1, 0.1)

# weight initialization

model.apply(init_params)

return model

#体系结构相关配置

arch_args = Namespace(

encoder_embed_dim=256,

encoder_ffn_embed_dim=512,

encoder_layers=1,

decoder_embed_dim=256,

decoder_ffn_embed_dim=1024,

decoder_layers=1,

share_decoder_input_output_embed=True,

dropout=0.3,

)

# HINT: 这些关于Transformer参数的补丁

def add_transformer_args(args):

args.encoder_attention_heads=4

args.encoder_normalize_before=True

args.decoder_attention_heads=4

args.decoder_normalize_before=True

args.activation_fn="relu"

args.max_source_positions=1024

args.max_target_positions=1024

#Transformer默认参数上的修补程序(上面未设置的)

from fairseq.models.transformer import base_architecture

base_architecture(arch_args)

#add_transformer_args(arch_args)

if config.use_wandb:

wandb.config.update(vars(arch_args))

model = build_model(arch_args, task)

logger.info(model)

/usr/local/lib/python3.8/dist-packages/torch/nn/modules/rnn.py:67: UserWarning: dropout option adds dropout after all but last recurrent layer, so non-zero dropout expects num_layers greater than 1, but got dropout=0.3 and num_layers=1

warnings.warn("dropout option adds dropout after all but last "

优化

loss:标签平滑正则化Label Smoothing Regularization

让模型学习生成不太集中的分布,并防止over-confidence

有时,事实真相可能不是唯一的答案。因此,在计算损失时,我们为不正确的标签保留一些概率

避免过拟合

class LabelSmoothedCrossEntropyCriterion(nn.Module):

def __init__(self, smoothing, ignore_index=None, reduce=True):

super().__init__()

self.smoothing = smoothing

self.ignore_index = ignore_index

self.reduce = reduce

def forward(self, lprobs, target):

if target.dim() == lprobs.dim() - 1:

target = target.unsqueeze(-1)

# nll: 负对数似然,当target 是一个one-hot时的交叉熵。以下行与F.nll_loss相同

nll_loss = -lprobs.gather(dim=-1, index=target)

#为其他标签保留一些可能性。因此当计算交叉熵时,相当于对所有标签的log probs求和

smooth_loss = -lprobs.sum(dim=-1, keepdim=True)

if self.ignore_index is not None:

pad_mask = target.eq(self.ignore_index)

nll_loss.masked_fill_(pad_mask, 0.0)

smooth_loss.masked_fill_(pad_mask, 0.0)

else:

nll_loss = nll_loss.squeeze(-1)

smooth_loss = smooth_loss.squeeze(-1)

if self.reduce:

nll_loss = nll_loss.sum()

smooth_loss = smooth_loss.sum()

#在计算交叉熵时,添加其他标签的损失

eps_i = self.smoothing / lprobs.size(-1)

loss = (1.0 - self.smoothing) * nll_loss + eps_i * smooth_loss

return loss

#通常,0.1就足够了

criterion = LabelSmoothedCrossEntropyCriterion(

smoothing=0.1,

ignore_index=task.target_dictionary.pad(),

)

优化器:Adam+lr scheduling

在训练Transformer时,逆平方根scheduling 对稳定性很重要。它后来也在RNN上使用。根据以下公式更新学习率。线性增加第一级,然后与时间步长的平方根倒数成比例衰减。

def get_rate(d_model, step_num, warmup_step):

# TODO: Change lr from constant to the equation shown above

# lr = 0.001

lr = (d_model**(-0.5)) * min(step_num**(-0.5), step_num*(warmup_step**(-1.5)))

return lr

class NoamOpt:

"实现速率的Optim包装器。"

def __init__(self, model_size, factor, warmup, optimizer):

self.optimizer = optimizer

self._step = 0

self.warmup = warmup

self.factor = factor

self.model_size = model_size

self._rate = 0

@property

def param_groups(self):

return self.optimizer.param_groups

def multiply_grads(self, c):

"""Multiplies grads by a constant *c*."""

for group in self.param_groups:

for p in group['params']:

if p.grad is not None:

p.grad.data.mul_(c)

def step(self):

"Update parameters and rate"

self._step += 1

rate = self.rate()

for p in self.param_groups:

p['lr'] = rate

self._rate = rate

self.optimizer.step()

def rate(self, step = None):

"Implement `lrate` above"

if step is None:

step = self._step

return 0 if not step else self.factor * get_rate(self.model_size, step, self.warmup)

#训练

from fairseq.data import iterators

from torch.cuda.amp import GradScaler, autocast

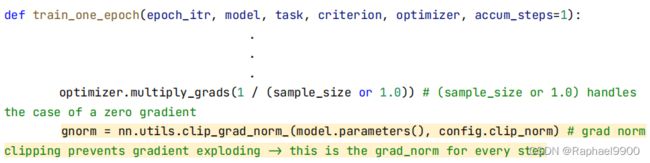

def train_one_epoch(epoch_itr, model, task, criterion, optimizer, accum_steps=1):

itr = epoch_itr.next_epoch_itr(shuffle=True)

itr = iterators.GroupedIterator(itr, accum_steps) # gradient accumulation: update every accum_steps samples

stats = {"loss": []}

scaler = GradScaler() # automatic mixed precision (amp)

model.train()

progress = tqdm.tqdm(itr, desc=f"train epoch {epoch_itr.epoch}", leave=False)

for samples in progress:

model.zero_grad()

accum_loss = 0

sample_size = 0

# gradient accumulation: update every accum_steps samples

for i, sample in enumerate(samples):

if i == 1:

# emptying the CUDA cache after the first step can reduce the chance of OOM

torch.cuda.empty_cache()

sample = utils.move_to_cuda(sample, device=device)

target = sample["target"]

sample_size_i = sample["ntokens"]

sample_size += sample_size_i

# mixed precision training

with autocast():

net_output = model.forward(**sample["net_input"])

lprobs = F.log_softmax(net_output[0], -1)

loss = criterion(lprobs.view(-1, lprobs.size(-1)), target.view(-1))

# logging

accum_loss += loss.item()

# back-prop

scaler.scale(loss).backward()

scaler.unscale_(optimizer)

optimizer.multiply_grads(1 / (sample_size or 1.0)) # (sample_size or 1.0) handles the case of a zero gradient

gnorm = nn.utils.clip_grad_norm_(model.parameters(), config.clip_norm) # grad norm clipping prevents gradient exploding

scaler.step(optimizer)

scaler.update()

# logging

loss_print = accum_loss/sample_size

stats["loss"].append(loss_print)

progress.set_postfix(loss=loss_print)

if config.use_wandb:

wandb.log({

"train/loss": loss_print,

"train/grad_norm": gnorm.item(),

"train/lr": optimizer.rate(),

"train/sample_size": sample_size,

})

loss_print = np.mean(stats["loss"])

logger.info(f"training loss: {loss_print:.4f}")

return stats

验证和推断

为了防止过度拟合,每个 epoch 都需要进行验证,以验证对不可见数据的性能。

该程序本质上与训练相同,增加了推理步骤

验证后,我们可以保存模型权重

仅验证损失无法描述模型的实际性能

基于当前模型直接生成翻译假设,然后使用参考译文计算BLEU

我们还可以手动检查假设的质量

我们使用fairseq序列生成器进行波束搜索以生成平移假设

# fairseq's beam search generator

# given model and input seqeunce, produce translation hypotheses by beam search

sequence_generator = task.build_generator([model], config)

def decode(toks, dictionary):

# convert from Tensor to human readable sentence

s = dictionary.string(

toks.int().cpu(),

config.post_process,

)

return s if s else ""

def inference_step(sample, model):

gen_out = sequence_generator.generate([model], sample)

srcs = []

hyps = []

refs = []

for i in range(len(gen_out)):

# for each sample, collect the input, hypothesis and reference, later be used to calculate BLEU

srcs.append(decode(

utils.strip_pad(sample["net_input"]["src_tokens"][i], task.source_dictionary.pad()),

task.source_dictionary,

))

hyps.append(decode(

gen_out[i][0]["tokens"], # 0 indicates using the top hypothesis in beam

task.target_dictionary,

))

refs.append(decode(

utils.strip_pad(sample["target"][i], task.target_dictionary.pad()),

task.target_dictionary,

))

return srcs, hyps, refs

import shutil

import sacrebleu

def validate(model, task, criterion, log_to_wandb=True):

logger.info('begin validation')

itr = load_data_iterator(task, "valid", 1, config.max_tokens, config.num_workers).next_epoch_itr(shuffle=False)

stats = {"loss":[], "bleu": 0, "srcs":[], "hyps":[], "refs":[]}

srcs = []

hyps = []

refs = []

model.eval()

progress = tqdm.tqdm(itr, desc=f"validation", leave=False)

with torch.no_grad():

for i, sample in enumerate(progress):

# validation loss

sample = utils.move_to_cuda(sample, device=device)

net_output = model.forward(**sample["net_input"])

lprobs = F.log_softmax(net_output[0], -1)

target = sample["target"]

sample_size = sample["ntokens"]

loss = criterion(lprobs.view(-1, lprobs.size(-1)), target.view(-1)) / sample_size

progress.set_postfix(valid_loss=loss.item())

stats["loss"].append(loss)

# do inference

s, h, r = inference_step(sample, model)

srcs.extend(s)

hyps.extend(h)

refs.extend(r)

tok = 'zh' if task.cfg.target_lang == 'zh' else '13a'

stats["loss"] = torch.stack(stats["loss"]).mean().item()

stats["bleu"] = sacrebleu.corpus_bleu(hyps, [refs], tokenize=tok) # 計算BLEU score

stats["srcs"] = srcs

stats["hyps"] = hyps

stats["refs"] = refs

if config.use_wandb and log_to_wandb:

wandb.log({

"valid/loss": stats["loss"],

"valid/bleu": stats["bleu"].score,

}, commit=False)

showid = np.random.randint(len(hyps))

logger.info("example source: " + srcs[showid])

logger.info("example hypothesis: " + hyps[showid])

logger.info("example reference: " + refs[showid])

# show bleu results

logger.info(f"validation loss:\t{stats['loss']:.4f}")

logger.info(stats["bleu"].format())

return stats

#保存和加载模型权重

def validate_and_save(model, task, criterion, optimizer, epoch, save=True):

stats = validate(model, task, criterion)

bleu = stats['bleu']

loss = stats['loss']

if save:

# save epoch checkpoints

savedir = Path(config.savedir).absolute()

savedir.mkdir(parents=True, exist_ok=True)

check = {

"model": model.state_dict(),

"stats": {"bleu": bleu.score, "loss": loss},

"optim": {"step": optimizer._step}

}

torch.save(check, savedir/f"checkpoint{epoch}.pt")

shutil.copy(savedir/f"checkpoint{epoch}.pt", savedir/f"checkpoint_last.pt")

logger.info(f"saved epoch checkpoint: {savedir}/checkpoint{epoch}.pt")

# save epoch samples

with open(savedir/f"samples{epoch}.{config.source_lang}-{config.target_lang}.txt", "w") as f:

for s, h in zip(stats["srcs"], stats["hyps"]):

f.write(f"{s}\t{h}\n")

# get best valid bleu

if getattr(validate_and_save, "best_bleu", 0) < bleu.score:

validate_and_save.best_bleu = bleu.score

torch.save(check, savedir/f"checkpoint_best.pt")

del_file = savedir / f"checkpoint{epoch - config.keep_last_epochs}.pt"

if del_file.exists():

del_file.unlink()

return stats

def try_load_checkpoint(model, optimizer=None, name=None):

name = name if name else "checkpoint_last.pt"

checkpath = Path(config.savedir)/name

if checkpath.exists():

check = torch.load(checkpath)

model.load_state_dict(check["model"])

stats = check["stats"]

step = "unknown"

if optimizer != None:

optimizer._step = step = check["optim"]["step"]

logger.info(f"loaded checkpoint {checkpath}: step={step} loss={stats['loss']} bleu={stats['bleu']}")

else:

logger.info(f"no checkpoints found at {checkpath}!")

#main

model = model.to(device=device)

criterion = criterion.to(device=device)

logger.info("task: {}".format(task.__class__.__name__))

logger.info("encoder: {}".format(model.encoder.__class__.__name__))

logger.info("decoder: {}".format(model.decoder.__class__.__name__))

logger.info("criterion: {}".format(criterion.__class__.__name__))

logger.info("optimizer: {}".format(optimizer.__class__.__name__))

logger.info(

"num. model params: {:,} (num. trained: {:,})".format(

sum(p.numel() for p in model.parameters()),

sum(p.numel() for p in model.parameters() if p.requires_grad),

)

)

logger.info(f"max tokens per batch = {config.max_tokens}, accumulate steps = {config.accum_steps}")

epoch_itr = load_data_iterator(task, "train", config.start_epoch, config.max_tokens, config.num_workers)

try_load_checkpoint(model, optimizer, name=config.resume)

while epoch_itr.next_epoch_idx <= config.max_epoch:

# train for one epoch

train_one_epoch(epoch_itr, model, task, criterion, optimizer, config.accum_steps)

stats = validate_and_save(model, task, criterion, optimizer, epoch=epoch_itr.epoch)

logger.info("end of epoch {}".format(epoch_itr.epoch))

epoch_itr = load_data_iterator(task, "train", epoch_itr.next_epoch_idx, config.max_tokens, config.num_workers)

# checkpoint_last.pt : latest epoch

# checkpoint_best.pt : highest validation bleu

# avg_last_5_checkpoint.pt: the average of last 5 epochs

try_load_checkpoint(model, name="avg_last_5_checkpoint.pt")

validate(model, task, criterion, log_to_wandb=False)

None

#生成预测

def generate_prediction(model, task, split="test", outfile="./prediction.txt"):

task.load_dataset(split=split, epoch=1)

itr = load_data_iterator(task, split, 1, config.max_tokens, config.num_workers).next_epoch_itr(shuffle=False)

idxs = []

hyps = []

model.eval()

progress = tqdm.tqdm(itr, desc=f"prediction")

with torch.no_grad():

for i, sample in enumerate(progress):

# validation loss

sample = utils.move_to_cuda(sample, device=device)

# do inference

s, h, r = inference_step(sample, model)

hyps.extend(h)

idxs.extend(list(sample['id']))

# sort based on the order before preprocess

hyps = [x for _,x in sorted(zip(idxs,hyps))]

with open(outfile, "w") as f:

for h in hyps:

f.write(h+"\n")

generate_prediction(model, task)

raise

模型补充

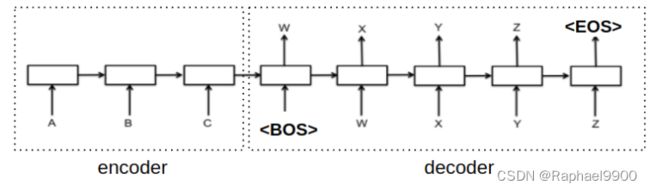

对于seq2seq模型做的翻译来说会有以下结构,因为在学习李宏毅老师的课程中没有明确说明代码的结构,所以做了一些B站一些老师的分析学习。这里可以用来类似学习。

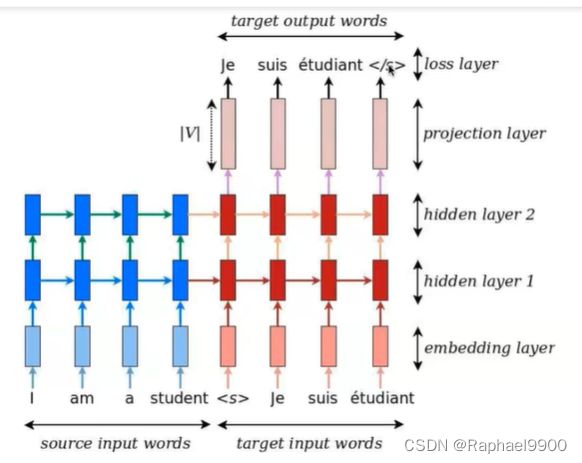

从图中可以看出来seq2seq是一个具备编码器和解码器的模型,编码器的输入是我们的源输入文字,解码器的输入是target 输入的文字。对于编码器和解码器来说会有embedding layer、hidden layer,但是只有解码器有projection layer和loss layer。最后解码器输出的是target 输出文字。

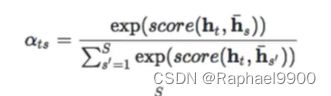

也可以考虑Attention机制,对于输入序列每个输入得到的输出,计算注意力权重并加权:

不仅仅使用Encoder最后一步的输出,而且使用Encoder每一步的输出,和图像标题生成中的小块类似

Decoder每次进行生成时,先根据Decoder当前状态和Encoder每一步输出之间的关系,计算对应的注意力权重

根据权重将Encoder每一步的输出进行加权求和,得到当前这一步所使用的上下文context。

Decoder根据context以及上一步的输出,更新得到下一步的状态,进而得到下一步的输出。

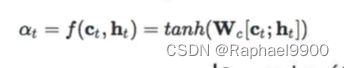

对于蓝色块和红色块(encoder的输出和decoder的当前状态)相乘得到attention weights,attention weights与蓝色块计算得到context vector,context vector,与红色快计算得到attention vector,然后对attention vector棕色块做projection得到最终输出。虚线表示把每步输出作为下一步输入,这样就构成一个teacher forcing。

tanh是激活函数。