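A Summary of Classic Convolutional Neural Networks (AlexNet / VGGNet / ResNet / DenseNet, with Code)

It has been a long time since my last post... and I will probably do it again!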

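A Summary of Classic Convolutional Neural Networks

- 1. AlexNet

- 1.1 AlexNet Network Architecture

- 1.2 Key Contributions

- 1.3 AlexNet Implementation

- 2. VGGNet

- 2.1 VGGNet Network Architecture

- 2.2 Key Contributions

- 2.3 VGGNet Implementation

- 3. ResNet

- 3.1 ResNet Network Architecture

- 3.2 Key Contributions

- 3.3 ResNet Implementation

- 4. DenseNet

- 4.1 DenseNet Network Architecture

- 4.2 Key Contributions

- 4.3 DenseNet Implementation

- Conclusion

- References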

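1. AlexNet

Paper: ImageNet Classification with Deep Convolutional Neural Networks

The deep convolutional neural network AlexNet was proposed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton, and it won the ILSVRC competition in 2012. Anyone who has studied machine learning or deep learning will know Hinton: he shared the 2018 Turing Award (often called the Nobel Prize of computing) with Yoshua Bengio and Yann LeCun, and the three are often called the "Big Three" of deep learning. Hinton is also widely credited with popularizing the term "deep learning".

Alex Krizhevsky is less well known. In the years since this paper he has not published another top-tier paper (he has a 2018 paper in a top-quartile journal, but as third author), yet he has contributed a great deal to deep learning. He created well-known datasets such as CIFAR-10 and CIFAR-100, and together with Hinton he studied restricted Boltzmann machines (RBM), deep belief networks (DBN), and their applications.

Ilya Sutskever is another heavyweight; at the time of writing he is chief scientist at OpenAI. Besides co-inventing AlexNet with Krizhevsky and Hinton, he developed sequence-to-sequence learning together with Oriol Vinyals and Quoc Le. He also took part in the development of AlphaGo and TensorFlow.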

1.1 AlexNet网络结构

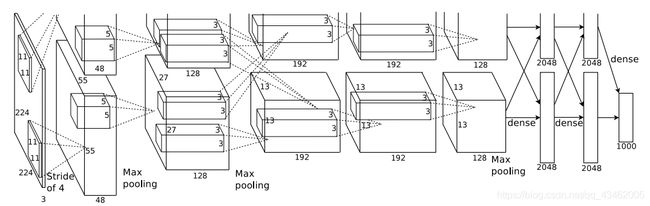

该图摘录自论文《ImageNet Classification with Deep Convolutional Neural Networks》

AlexNet包含八层网络。前五个是卷积层,最后三个是全连接层。它使用了非线性的ReLU激活函数,显示出比tanh函数和Sigmoid函数更强的训练性能,在2012年参加了ImageNet大规模视觉识别挑战赛,该网络的top-5错误率为15.3%,比第二名的错误率低10.8个百分点。AlexNet被认为是计算机视觉领域最有影响力的论文之一。至2020年,AlexNet的论文已被引用了70,000次。
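To make the layer arithmetic concrete, here is a minimal sketch that traces the spatial size of the feature maps through the five convolutional stages. It assumes a 227*227 input and the kernel, stride, and padding values used in the implementation in section 1.3; the helper names `out_size` and `alexnet_feature_sizes` are made up for this illustration.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def alexnet_feature_sizes(size=227):
    """Trace the spatial size through AlexNet's five convolutional stages."""
    sizes = []
    size = out_size(size, kernel=11, stride=4)   # conv1
    size = out_size(size, kernel=3, stride=2)    # pool1
    sizes.append(size)
    size = out_size(size, kernel=5, padding=2)   # conv2
    size = out_size(size, kernel=3, stride=2)    # pool2
    sizes.append(size)
    for _ in range(3):                           # conv3, conv4, conv5
        size = out_size(size, kernel=3, padding=1)
    size = out_size(size, kernel=3, stride=2)    # pool5
    sizes.append(size)
    return sizes


sizes = alexnet_feature_sizes()
print(sizes)                 # [27, 13, 6]
print(sizes[-1] ** 2 * 256)  # 9216 inputs to the first fully connected layer
```

The final value, 6 * 6 * 256 = 9216, is the input width of the first fully connected layer.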

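1.2 Key Contributions

- ReLU as the activation function. ReLU trains faster than tanh and sigmoid and is still widely used today.

- Training on multiple GPUs. As the figure above shows, AlexNet was trained in parallel on two GPUs; this approach to training helped accelerate the development of deep learning.

- Local response normalization (LRN). The paper reports that it helps the model generalize (later work, discussed below, examines this further).

- Dropout against overfitting. Dropout is still one of the most widely used and most effective ways to combat overfitting. Hinton first described the method in his lectures without writing it up or running experiments; Krizhevsky put his advisor's idea into practice in experiments and published it in this paper.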
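Two of the points above, ReLU and Dropout, are easy to sketch in plain Python. The snippet is only an illustration: it uses the "inverted" dropout convention (survivors are rescaled by 1/(1-p) during training) that current framework layers follow, which may differ from the scaling in the original AlexNet code, and all function names are made up for the example.

```python
import math
import random


def sigmoid_grad(x):
    """Derivative of the sigmoid; it shrinks towards 0 for large |x| (saturation)."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def relu_grad(x):
    """Derivative of ReLU; it stays at 1 for every positive input."""
    return 1.0 if x > 0 else 0.0


def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability p, rescale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return list(values)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]


print(sigmoid_grad(10.0))  # roughly 4.5e-05: the gradient has almost vanished
print(relu_grad(10.0))     # 1.0
print(dropout([1.0] * 8, p=0.5, rng=random.Random(0)))  # a mix of 0.0 and 2.0
print(dropout([1.0] * 8, training=False))               # unchanged at inference time
```

The saturating gradient of the sigmoid is one common explanation for why ReLU networks train faster; dropout is switched off at inference time.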

1.3 AlexNet Implementation

Code in PyTorch

import torch
from torch import nn


class AlexNet8(nn.Module):
    """
    input: 227*227
    """

    def __init__(self):
        super(AlexNet8, self).__init__()
        # Shape comments are written as [H,W,C]; the tensors themselves are [N,C,H,W].
        # Layer 1: 96 kernels of 11*11, stride 4; max pooling 3*3, stride 2
        # [227,227,3] => [27,27,96]
        self.layer1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=96, kernel_size=11, stride=4),
            nn.ReLU(inplace=True),
            nn.LocalResponseNorm(size=5),
            nn.MaxPool2d(kernel_size=3, stride=2))
        # Layer 2: 256 kernels of 5*5, stride 1, padding 2; max pooling 3*3, stride 2
        # [27,27,96] => [13,13,256]
        self.layer2 = nn.Sequential(
            nn.Conv2d(in_channels=96, out_channels=256, kernel_size=5, padding=2, stride=1),
            nn.ReLU(inplace=True),
            nn.LocalResponseNorm(size=5),
            nn.MaxPool2d(kernel_size=3, stride=2))
        # Layer 3: 384 kernels of 3*3, stride 1, padding 1
        # [13,13,256] => [13,13,384]
        self.layer3 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=384, kernel_size=3, padding=1, stride=1),
            nn.ReLU(inplace=True))
        # Layer 4: 384 kernels of 3*3, stride 1, padding 1
        # [13,13,384] => [13,13,384]
        self.layer4 = nn.Sequential(
            nn.Conv2d(in_channels=384, out_channels=384, kernel_size=3, padding=1, stride=1),
            nn.ReLU(inplace=True))
        # Layer 5: 256 kernels of 3*3, stride 1, padding 1; max pooling 3*3, stride 2
        # [13,13,384] => [6,6,256]
        self.layer5 = nn.Sequential(
            nn.Conv2d(in_channels=384, out_channels=256, kernel_size=3, padding=1, stride=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2))
        # [6,6,256] => [6*6*256]
        self.flatten = nn.Flatten()
        # Layer 6: fully connected, with ReLU and Dropout
        self.layer6 = nn.Sequential(nn.Linear(9216, 4096), nn.ReLU(inplace=True), nn.Dropout(p=0.5))
        # Layer 7: fully connected, with ReLU and Dropout
        self.layer7 = nn.Sequential(nn.Linear(4096, 4096), nn.ReLU(inplace=True), nn.Dropout(p=0.5))
        # Layer 8: fully connected, 10 output classes
        self.layer8 = nn.Linear(4096, 10)

    def forward(self, x):
        out = self.layer1(x)
        print("layer1:", out.shape)
        out = self.layer2(out)
        print("layer2:", out.shape)
        out = self.layer3(out)
        print("layer3:", out.shape)
        out = self.layer4(out)
        print("layer4:", out.shape)
        out = self.layer5(out)
        print("layer5:", out.shape)
        out = self.flatten(out)
        out = self.layer6(out)
        print("layer6:", out.shape)
        out = self.layer7(out)
        print("layer7:", out.shape)
        out = self.layer8(out)
        print("layer8:", out.shape)
        return out


def main():
    tmp = torch.rand(27, 3, 227, 227)
    model = AlexNet8()
    out = model(tmp)
    print(out.shape)


if __name__ == '__main__':
    main()

Code in TensorFlow 2.x

import tensorflow as tf
from tensorflow.keras.layers import Dense, Conv2D, MaxPool2D, Dropout, Flatten
from tensorflow.keras.activations import relu, softmax
from tensorflow.keras.models import Model


# Define AlexNet
class self_AlexNet(Model):
    def __init__(self):
        super(self_AlexNet, self).__init__()
        self.conv1 = Conv2D(filters=96, kernel_size=(11, 11), strides=4, activation=relu)
        self.pool1 = MaxPool2D(pool_size=(3, 3), strides=2)
        self.conv2 = Conv2D(filters=256, kernel_size=(5, 5), strides=1, padding='same', activation=relu)
        self.pool2 = MaxPool2D(pool_size=(3, 3), strides=2)
        self.conv3 = Conv2D(filters=384, kernel_size=(3, 3), padding='same', activation=relu)
        self.conv4 = Conv2D(filters=384, kernel_size=(3, 3), padding='same', activation=relu)
        self.conv5 = Conv2D(filters=256, kernel_size=(3, 3), padding='same', activation=relu)
        self.pool5 = MaxPool2D(pool_size=(3, 3), strides=2)
        self.flatten = Flatten()
        # The paper's fully connected layers have 4096 units, split as 2048 on each
        # of the two GPUs; this single-stream version keeps 2048 units.
        self.fc6 = Dense(units=2048, activation=relu)
        self.drop6 = Dropout(rate=0.5)
        self.fc7 = Dense(units=2048, activation=relu)
        self.drop7 = Dropout(rate=0.5)
        # 3 output classes
        self.fc8 = Dense(units=3, activation=softmax)

    def call(self, inputs):
        out = self.conv1(inputs)
        out = self.pool1(out)
        out = self.conv2(out)
        out = self.pool2(out)
        out = self.conv3(out)
        out = self.conv4(out)
        out = self.conv5(out)
        out = self.pool5(out)
        # pool5 outputs [6,6,256], so the flattened vector has 9216 elements
        # (equivalent to tf.reshape(out, [-1, 9216]))
        out = self.flatten(out)
        out = self.fc6(out)
        out = self.drop6(out)
        out = self.fc7(out)
        out = self.drop7(out)
        out = self.fc8(out)
        return out


def main():
    AlexNet = self_AlexNet()
    AlexNet.build(input_shape=(None, 227, 227, 3))
    tmp = tf.random.normal([3, 227, 227, 3])
    out = AlexNet(tmp)
    print(out.shape)


if __name__ == '__main__':
    main()

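2. VGGNet

Paper: Very Deep Convolutional Networks for Large-Scale Image Recognition

VGGNet is a deep convolutional neural network developed in 2014 by researchers from the Visual Geometry Group at the University of Oxford together with researchers at Google DeepMind. It took second place in the classification task of ILSVRC 2014 (first place went to GoogLeNet, proposed in the same year).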

2.1 VGGNet网络结构

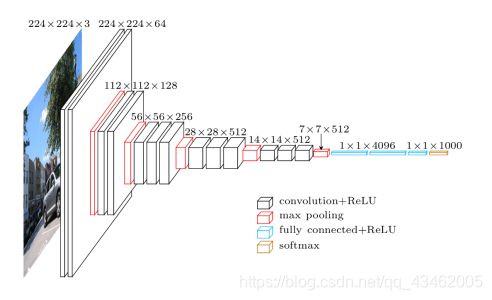

该图摘自网络

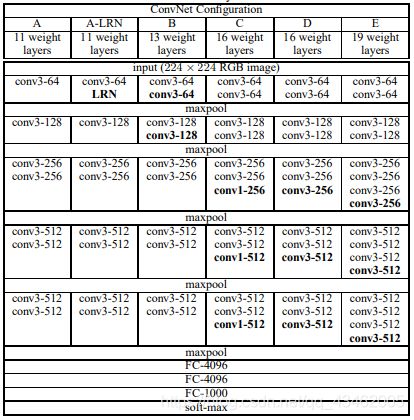

该图摘自论文《Very Deep Convolutional Networks for Large-Scale Image Recognition》

VGGNet构造了六种相似又不同的网络结构,其中最被广泛传播的是拥有16层神经网络的VGG16和19层神经网络的VGG19。其具体网络配置如上图所示。

VGG16由13层卷积层和3层全连接层构成,层与层之间使用max-pooling(最大池化)分开,所有隐层的激活单元都采用ReLU函数。

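2.2 Key Contributions

- Experiments show that the LRN layer (local response normalization) brings no performance gain (configuration A-LRN). In his course "Intelligent Computing Systems", Prof. Chen Yunji mentioned that international reviewers criticized his team's AI chip, Cambricon, for not supporting LRN, even though LRN in fact brings no performance gain.

- Classification performance improves steadily as depth increases.

- Several small kernels work better than one large kernel. The VGG authors compared configuration B with a shallower network outside the main set of configurations, in which each pair of 3x3 convolutions in B was replaced by a single 5x5 convolution. The stacked small kernels performed better.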
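The last point can be backed by simple arithmetic. The sketch below assumes stride-1 convolutions with the same number of channels C going in and out and ignores biases; the helper names are made up for the illustration.

```python
def conv_params(kernel, channels, layers=1):
    """Weights in `layers` stacked kernel x kernel convolutions (C in, C out, no bias)."""
    return layers * kernel * kernel * channels * channels


def receptive_field(kernel, layers):
    """Receptive field of `layers` stacked stride-1 convolutions."""
    return layers * (kernel - 1) + 1


C = 64
print(receptive_field(3, 2), receptive_field(5, 1))  # 5 5: same receptive field
print(conv_params(3, C, layers=2))                   # 73728 weights for two 3x3 layers
print(conv_params(5, C))                             # 102400 weights for one 5x5 layer
```

Two stacked 3x3 layers cover the same 5x5 window with 18C^2 weights instead of 25C^2, and they add an extra non-linearity in between.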

2.3 VGGNet Implementation

Code in PyTorch

import torch
from torch import nn


class VGGNet16(nn.Module):
    """
    input: 224*224
    """

    def __init__(self):
        super(VGGNet16, self).__init__()
        # Kernel shapes are written as (out_channels, in_channels, height, width);
        # feature-map shapes are written as [H, W, C].
        # Layer 1: kernels (64,3,3,3), stride 1, padding 1
        # Layer 2: kernels (64,64,3,3), stride 1, padding 1
        # Max pooling 2*2, stride 2
        # [224, 224, 3] => [112, 112, 64]
        self.layer1_2 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.MaxPool2d(kernel_size=2, stride=2))
        # Layer 3: kernels (128,64,3,3), stride 1, padding 1
        # Layer 4: kernels (128,128,3,3), stride 1, padding 1
        # Max pooling 2*2, stride 2
        # [112, 112, 64] => [56, 56, 128]
        self.layer3_4 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.MaxPool2d(kernel_size=2, stride=2))
        # Layer 5: kernels (256,128,3,3), stride 1, padding 1
        # Layers 6-7: kernels (256,256,3,3), stride 1, padding 1
        # Max pooling 2*2, stride 2
        # [56, 56, 128] => [28, 28, 256]
        self.layer5_7 = nn.Sequential(
            nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.MaxPool2d(kernel_size=2, stride=2))
        # Layer 8: kernels (512,256,3,3), stride 1, padding 1
        # Layers 9-10: kernels (512,512,3,3), stride 1, padding 1
        # Max pooling 2*2, stride 2
        # [28, 28, 256] => [14, 14, 512]
        self.layer8_10 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=512, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.MaxPool2d(kernel_size=2, stride=2))
        # Layers 11-13: kernels (512,512,3,3), stride 1, padding 1
        # Max pooling 2*2, stride 2
        # [14, 14, 512] => [7, 7, 512]
        self.layer11_13 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, padding=1),
            nn.ReLU(True),
            nn.MaxPool2d(kernel_size=2, stride=2))
        self.flatten = nn.Flatten()
        # Layer 14: fully connected
        self.layer14 = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(True))
        # Layer 15: fully connected
        self.layer15 = nn.Sequential(
            nn.Linear(4096, 4096),
            nn.ReLU(True))
        # Layer 16: fully connected, 10 output classes
        self.layer16 = nn.Linear(4096, 10)

    def forward(self, x):
        out = self.layer1_2(x)
        print("layer1_2:", out.shape)
        out = self.layer3_4(out)
        print("layer3_4:", out.shape)
        out = self.layer5_7(out)
        print("layer5_7:", out.shape)
        out = self.layer8_10(out)
        print("layer8_10:", out.shape)
        out = self.layer11_13(out)
        print("layer11_13:", out.shape)
        out = self.flatten(out)
        out = self.layer14(out)
        print("layer14:", out.shape)
        out = self.layer15(out)
        print("layer15:", out.shape)
        out = self.layer16(out)
        print("layer16:", out.shape)
        return out


def main():
    tmp = torch.rand(27, 3, 224, 224)
    model = VGGNet16()
    out = model(tmp)
    print(out.shape)


if __name__ == '__main__':
    main()

Code in TensorFlow 2.x

import tensorflow as tf
from tensorflow.keras.layers import Dense, Conv2D, MaxPool2D, Flatten
from tensorflow.keras.activations import relu
from tensorflow.keras.models import Model


# Define VGG16
class self_VGG16(Model):
    def __init__(self):
        super(self_VGG16, self).__init__()
        # unit 1
        self.conv1 = Conv2D(filters=64, kernel_size=[3, 3], padding='same', activation=relu)
        self.conv2 = Conv2D(filters=64, kernel_size=[3, 3], padding='same', activation=relu)
        self.pool1 = MaxPool2D(pool_size=[2, 2], strides=2, padding='same')
        # unit 2
        self.conv3 = Conv2D(128, kernel_size=[3, 3], padding='same', activation=relu)
        self.conv4 = Conv2D(128, kernel_size=[3, 3], padding='same', activation=relu)
        self.pool2 = MaxPool2D(pool_size=[2, 2], padding='same')
        # unit 3
        # The 1x1 kernels in conv7, conv10 and conv13 follow the paper's 16-layer
        # configuration C; use [3, 3] there for configuration D (the usual VGG16,
        # as in the PyTorch version above).
        self.conv5 = Conv2D(256, kernel_size=[3, 3], padding='same', activation=relu)
        self.conv6 = Conv2D(256, kernel_size=[3, 3], padding='same', activation=relu)
        self.conv7 = Conv2D(256, kernel_size=[1, 1], padding='same', activation=relu)
        self.pool3 = MaxPool2D(pool_size=[2, 2], padding='same')
        # unit 4
        self.conv8 = Conv2D(512, kernel_size=[3, 3], padding='same', activation=relu)
        self.conv9 = Conv2D(512, kernel_size=[3, 3], padding='same', activation=relu)
        self.conv10 = Conv2D(512, kernel_size=[1, 1], padding='same', activation=relu)
        self.pool4 = MaxPool2D(pool_size=[2, 2], padding='same')
        # unit 5
        self.conv11 = Conv2D(512, kernel_size=[3, 3], padding='same', activation=relu)
        self.conv12 = Conv2D(512, kernel_size=[3, 3], padding='same', activation=relu)
        self.conv13 = Conv2D(512, kernel_size=[1, 1], padding='same', activation=relu)
        self.pool5 = MaxPool2D(pool_size=[2, 2], padding='same')
        # Fully connected layers; fc16 returns raw logits for 1000 classes
        self.flatten = Flatten()
        self.fc14 = Dense(4096, activation=relu)
        self.fc15 = Dense(4096, activation=relu)
        self.fc16 = Dense(1000, activation=None)

    def call(self, inputs):
        out = self.conv1(inputs)
        out = self.conv2(out)
        out = self.pool1(out)
        out = self.conv3(out)
        out = self.conv4(out)
        out = self.pool2(out)
        out = self.conv5(out)
        out = self.conv6(out)
        out = self.conv7(out)
        out = self.pool3(out)
        out = self.conv8(out)
        out = self.conv9(out)
        out = self.conv10(out)
        out = self.pool4(out)
        out = self.conv11(out)
        out = self.conv12(out)
        out = self.conv13(out)
        out = self.pool5(out)
        out = self.flatten(out)
        out = self.fc14(out)
        out = self.fc15(out)
        out = self.fc16(out)
        return out


def main():
    VGG16 = self_VGG16()
    # VGG expects 224*224 inputs
    VGG16.build(input_shape=(None, 224, 224, 3))
    tmp = tf.random.normal([3, 224, 224, 3])
    out = VGG16(tmp)
    print(out.shape)


if __name__ == '__main__':
    main()

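3. ResNet

Paper: Deep Residual Learning for Image Recognition

ResNet was proposed in 2015 by the renowned Chinese researcher Kaiming He and his co-authors. At the time of writing, He is a research scientist at Facebook AI Research (FAIR); before that he worked at Microsoft Research Asia (MSRA). He currently appears to be working on GCNs and representation learning.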

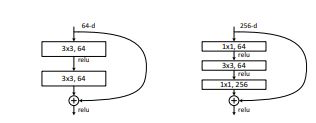

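ResNet is a remarkably strong network, and the idea of residual learning still holds up as state of the art today. ResNet addresses the "degradation" problem of deep neural networks, which is what made networks with hundreds or even thousands of layers possible.

Ordinarily, as layers are added to a shallow network, its performance on both the training set and the test set improves, because the model is more complex, more expressive, and can fit the underlying mapping better. "Degradation" refers to the opposite observation: once the network is deep enough, stacking still more layers makes performance drop rapidly. The ResNet paper reports that the training error rises as well, so overfitting is not the cause.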

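3.1 ResNet Network Architecture

Figure from the paper "Deep Residual Learning for Image Recognition"

3.2 Key Contributions

- ResNet addresses the "degradation" problem of deep neural networks.

- It introduces the idea of residual learning, which has driven the development of deep learning.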
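The residual idea can be sketched in a few lines of plain Python. A block computes y = F(x) + x, so when the residual branch F outputs zeros the block is exactly the identity, and stacking more such blocks cannot make the mapping worse. The sketch works on lists of floats and leaves out the ReLU that a real block applies after the addition; `residual_block` and `zero_branch` are names made up for this illustration.

```python
def residual_block(x, residual_fn):
    """y = F(x) + x, applied element-wise to a list of floats."""
    fx = residual_fn(x)
    return [a + b for a, b in zip(fx, x)]


def zero_branch(x):
    """A residual branch whose weights are all zero outputs zeros."""
    return [0.0 for _ in x]


x = [1.0, -2.0, 3.0]
y = x
for _ in range(100):  # stack 100 residual blocks
    y = residual_block(y, zero_branch)
print(y == x)  # True: the whole stack reduces to the identity mapping
```

A plain (non-residual) stack would have to learn the identity through its weights, which the paper argues is harder for the optimizer.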

3.3 ResNet Implementation

Code in PyTorch

# Imports
import torch
from torch import nn
from torch.nn import functional as F


class ResBlk(nn.Module):
    """
    ResNet Block
    """
    expansion = 1

    def __init__(self, ch_in, ch_out, stride=1):
        super(ResBlk, self).__init__()
        # Two layers, each containing a 3x3 convolution
        self.blockfunc = nn.Sequential(
            nn.Conv2d(ch_in, ch_out, kernel_size=3, stride=stride, padding=1),
            nn.BatchNorm2d(ch_out),
            nn.ReLU(inplace=True),
            nn.Conv2d(ch_out, ch_out * ResBlk.expansion, kernel_size=3, padding=1),
            nn.BatchNorm2d(ch_out * ResBlk.expansion),
        )
        # Identity shortcut by default
        self.extra = nn.Sequential()
        if stride != 1 or ch_out * ResBlk.expansion != ch_in:
            # Projection shortcut: [b,ch_in,h,w] => [b,ch_out,h/stride,w/stride]
            self.extra = nn.Sequential(
                nn.Conv2d(ch_in, ch_out * ResBlk.expansion, kernel_size=1, stride=stride),
                nn.BatchNorm2d(ch_out * ResBlk.expansion)
            )

    def forward(self, x):
        """
        :param x: [b,ch,h,w]
        :return: [b,ch_out,h/stride,w/stride]
        """
        # shortcut: element-wise add of the residual branch and the (projected) input
        return F.relu(self.blockfunc(x) + self.extra(x))


class ResNet(nn.Module):
    def __init__(self, a, b, c, d):
        super(ResNet, self).__init__()
        self.in_channels = 64
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=7, stride=2),
            nn.BatchNorm2d(64),
            nn.ReLU(True),
            nn.MaxPool2d(kernel_size=3, stride=2)
        )
        self.Convblock1 = self._make_stage("Convblock1", a, 64, stride=1)
        self.Convblock2 = self._make_stage("Convblock2", b, 128, stride=2)
        self.Convblock3 = self._make_stage("Convblock3", c, 256, stride=2)
        self.Convblock4 = self._make_stage("Convblock4", d, 512, stride=2)
        # Global average pooling: [b,512,h,w] => [b,512,1,1]
        self.pool = nn.AdaptiveAvgPool2d((1, 1))
        self.flatten = nn.Flatten()
        self.fc = nn.Linear(512 * ResBlk.expansion, 10)

    def _make_stage(self, name, num_blocks, ch_out, stride):
        # Only the first block of a stage changes the stride and the channel count.
        # Every block gets a unique name so that none is overwritten.
        stage = nn.Sequential()
        for i in range(1, num_blocks + 1):
            stage.add_module(name="{} {}th".format(name, i),
                             module=ResBlk(ch_in=self.in_channels, ch_out=ch_out,
                                           stride=stride if i == 1 else 1))
            self.in_channels = ch_out * ResBlk.expansion
        return stage

    def forward(self, x):
        out = self.conv1(x)
        # print("layer1:", out.shape)
        out = self.Convblock1(out)
        # print("layer2:", out.shape)
        out = self.Convblock2(out)
        # print("layer3:", out.shape)
        out = self.Convblock3(out)
        # print("layer4:", out.shape)
        out = self.Convblock4(out)
        # print("layer5:", out.shape)
        out = self.pool(out)
        out = self.flatten(out)
        out = self.fc(out)
        # print("layer6:", out.shape)
        return out


def ResNet34():
    return ResNet(3, 4, 6, 3)


def ResNet18():
    return ResNet(2, 2, 2, 2)


def main():
    tmp = torch.rand(2, 3, 227, 227)
    model = ResNet34()
    out = model(tmp)
    print(out.shape)


if __name__ == '__main__':
    main()

Code in TensorFlow 2.x

import tensorflow as tf
from tensorflow.keras.layers import Dense, Conv2D, MaxPool2D, Layer, BatchNormalization, ReLU, GlobalAveragePooling2D
from tensorflow.keras.activations import relu
from tensorflow.keras.models import Model, Sequential


class BasicBlock(Layer):
    # Despite its name, this is the 1x1 -> 3x3 -> 1x1 bottleneck block
    # that the deeper ResNets (50/101/152 layers) are built from.
    def __init__(self, filter_num1, filter_num2, stride):
        super(BasicBlock, self).__init__()
        self.conv1 = Conv2D(filters=filter_num1, kernel_size=[1, 1], strides=1)
        self.bn1 = BatchNormalization()
        self.relu1 = ReLU()
        # Only the 3x3 convolution and the shortcut use `stride`; striding every
        # convolution would make the two branches differ in size when stride > 1.
        self.conv2 = Conv2D(filters=filter_num1, kernel_size=[3, 3], strides=stride, padding='same')
        self.bn2 = BatchNormalization()
        self.relu2 = ReLU()
        self.conv3 = Conv2D(filters=filter_num2, kernel_size=[1, 1], strides=1, padding='same')
        self.bn3 = BatchNormalization()
        # Projection shortcut (no ReLU before the addition)
        self.downsample = Sequential([Conv2D(filters=filter_num2, kernel_size=[1, 1], strides=stride),
                                      BatchNormalization()])

    def call(self, inputs):
        out = self.conv1(inputs)
        out = self.bn1(out)
        out = self.relu1(out)
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu2(out)
        out = self.conv3(out)
        out = self.bn3(out)
        # Add the shortcut branch
        residual = self.downsample(inputs)
        out = out + residual
        out = relu(out)
        return out


class self_ResNet101(Model):
    def __init__(self):
        super(self_ResNet101, self).__init__()
        self.conv1 = Sequential([Conv2D(filters=64, kernel_size=[7, 7], strides=2, padding='same'),
                                 BatchNormalization(),
                                 ReLU(),
                                 MaxPool2D(pool_size=[3, 3], strides=2)])
        # (filter_num1, filter_num2, number of blocks, stride of the first block) per stage.
        # The widths are the author's reduced ones; the paper uses
        # (64, 256), (128, 512), (256, 1024) and (512, 2048).
        stages = [(32, 64, 3, 1), (64, 128, 4, 2), (128, 256, 23, 2), (256, 512, 3, 2)]
        blocks = []
        for filter_num1, filter_num2, num_blocks, stride in stages:
            for i in range(num_blocks):
                blocks.append(BasicBlock(filter_num1, filter_num2, stride if i == 0 else 1))
        self.layerN = Sequential(blocks)
        # Global average pooling: [b,h,w,512] => [b,512]
        self.Avg = GlobalAveragePooling2D()
        self.fc = Dense(units=3)

    def call(self, data):
        out = self.conv1(data)
        out = self.layerN(out)
        out = self.Avg(out)
        out = self.fc(out)
        return out


def main():
    ResNet101 = self_ResNet101()
    ResNet101.build(input_shape=(None, 227, 227, 3))
    tmp = tf.random.normal([3, 227, 227, 3])
    out = ResNet101(tmp)
    print(out.shape)


if __name__ == '__main__':
    main()

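4. DenseNet

Paper: Densely Connected Convolutional Networks

This paper won the Best Paper Award at CVPR 2017. Its first author, Dr. Gao Huang, is likewise a renowned Chinese researcher.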

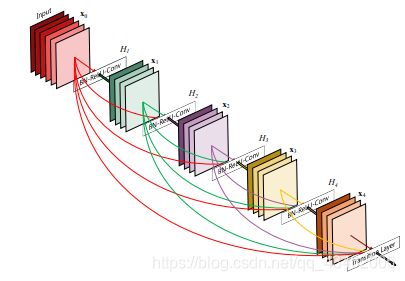

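As neural networks grow deeper, a new problem emerges: information about the input or the gradient can vanish by the time it has passed through many layers and reaches the end (or the beginning) of the network. ResNets pass the signal from one layer to the next through identity connections. Stochastic depth shortens ResNets by randomly dropping layers during training, which improves the flow of information and gradients. FractalNets repeatedly combine several parallel layer sequences with different numbers of convolutional blocks to obtain a large nominal depth while keeping many short paths in the network. Although these approaches differ in network structure, they share one key characteristic: they create short paths from early layers to later layers.

Earlier work suggests that networks containing shortcut connections can be made substantially deeper and more accurate. Building on this observation, the paper introduces the densely connected convolutional network (DenseNet), which connects each layer to every other layer in a feed-forward fashion. A traditional convolutional network with $L$ layers has $L$ connections, whereas DenseNet has $L(L+1)/2$ direct connections. Each layer takes the feature maps of all preceding layers as input, and its own feature maps are used as input to all subsequent layers (shortcut connections taken to the extreme: every pair of layers is connected).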
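Both the connection count and the way concatenation makes the channel count grow can be checked with a few lines of Python. The sketch assumes that every layer in a dense block adds `growth_rate` new feature maps (32, the value used in the code in section 4.3); the function names are made up for the illustration.

```python
def dense_connections(num_layers):
    """Direct connections in a dense block with L layers: L(L+1)/2."""
    return num_layers * (num_layers + 1) // 2


def block_output_channels(in_channels, num_layers, growth_rate=32):
    """Channels after a dense block: each layer concatenates growth_rate new feature maps."""
    return in_channels + num_layers * growth_rate


print(dense_connections(5))           # 15, compared with 5 in a plain 5-layer network
print(block_output_channels(32, 6))   # 224
print(block_output_channels(64, 12))  # 448
print(block_output_channels(64, 24))  # 832
print(block_output_channels(64, 16))  # 576
```

The four channel counts match the shape comments in the TensorFlow implementation in section 4.3, where each transition layer reduces the feature maps to 64 channels before the next dense block.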

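4.1 DenseNet Network Architecture

Figure from the paper "Densely Connected Convolutional Networks"

4.2 Key Contributions

- Alleviates the vanishing-gradient problem.

- Strengthens feature propagation.

- Encourages feature reuse.

- Substantially reduces the number of parameters.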

4.3 DenseNet Implementation

Code in PyTorch

import torch
from torch import nn


class DenseBlock(nn.Module):
    """
    One dense layer: BN-ReLU-Conv(1x1) followed by BN-ReLU-Conv(3x3).
    The ch_out new feature maps are concatenated with the input.
    """

    def __init__(self, ch_in, ch_out):
        super(DenseBlock, self).__init__()
        # 1x1 bottleneck with 4 * ch_out channels
        self.layer1 = nn.Sequential(
            nn.BatchNorm2d(ch_in),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=ch_in, out_channels=4 * ch_out, kernel_size=1))
        # 3x3 convolution; padding=1 keeps the spatial size so the concat is valid
        self.layer2 = nn.Sequential(
            nn.BatchNorm2d(4 * ch_out),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=4 * ch_out, out_channels=ch_out, kernel_size=3, padding=1))

    def forward(self, x):
        # [b,ch_in,h,w] => [b,ch_in+ch_out,h,w]
        return torch.cat([x, self.layer2(self.layer1(x))], dim=1)


class DenseNet121(nn.Module):
    growth_rate = 32

    def __init__(self):
        super(DenseNet121, self).__init__()
        # Running channel count, updated as blocks and transitions are created
        self.channels = 2 * DenseNet121.growth_rate
        self.layer1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=self.channels, kernel_size=7, stride=2, padding=3),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
        self.layer2 = self._dense_stage("DenseBlock1", 6)
        self.layer3 = self._transition()
        self.layer4 = self._dense_stage("DenseBlock2", 12)
        self.layer5 = self._transition()
        self.layer6 = self._dense_stage("DenseBlock3", 24)
        self.layer7 = self._transition()
        self.layer8 = self._dense_stage("DenseBlock4", 16)
        self.layer9 = nn.AvgPool2d(kernel_size=7)
        self.flatten = nn.Flatten()
        # 10 output classes
        self.layer10 = nn.Linear(self.channels, 10)

    def _dense_stage(self, name, num_layers):
        # Each dense layer sees all previous feature maps, so ch_in grows by growth_rate
        stage = nn.Sequential()
        for i in range(num_layers):
            stage.add_module(name="{} {}th".format(name, i),
                             module=DenseBlock(ch_in=self.channels, ch_out=DenseNet121.growth_rate))
            self.channels += DenseNet121.growth_rate
        return stage

    def _transition(self):
        # 1x1 convolution halves the channels, 2x2 average pooling halves the resolution
        layer = nn.Sequential(
            nn.BatchNorm2d(self.channels),
            nn.Conv2d(in_channels=self.channels, out_channels=self.channels // 2, kernel_size=1),
            nn.AvgPool2d(kernel_size=2, stride=2))
        self.channels //= 2
        return layer

    def forward(self, x):
        out = self.layer1(x)
        print("layer1:", out.shape)
        out = self.layer2(out)
        print("layer2:", out.shape)
        out = self.layer3(out)
        print("layer3:", out.shape)
        out = self.layer4(out)
        print("layer4:", out.shape)
        out = self.layer5(out)
        print("layer5:", out.shape)
        out = self.layer6(out)
        print("layer6:", out.shape)
        out = self.layer7(out)
        print("layer7:", out.shape)
        out = self.layer8(out)
        print("layer8:", out.shape)
        out = self.layer9(out)
        print("layer9:", out.shape)
        out = self.flatten(out)
        out = self.layer10(out)
        print("layer10:", out.shape)
        return out


def main():
    tmp = torch.rand(2, 3, 227, 227)
    model = DenseNet121()
    out = model(tmp)
    print(out.shape)


if __name__ == '__main__':
    main()

Code in TensorFlow 2.x

import tensorflow as tf
from tensorflow.keras.layers import Conv2D, MaxPool2D, Layer, BatchNormalization, ReLU, AvgPool2D, Dense, Flatten
from tensorflow.keras.models import Model, Sequential


class ConvBlock(Layer):
    def __init__(self):
        super(ConvBlock, self).__init__()
        # BN-ReLU-Conv(1x1) bottleneck with 4 * growth rate filters
        self.BASCD1 = Sequential([BatchNormalization(), ReLU(),
                                  Conv2D(filters=32 * 4, kernel_size=[1, 1], padding='same')])
        # BN-ReLU-Conv(3x3) producing growth rate (32) new feature maps
        self.BASCD2 = Sequential([BatchNormalization(), ReLU(),
                                  Conv2D(filters=32, kernel_size=[3, 3], padding='same')])

    def call(self, inputs):
        out = self.BASCD1(inputs)
        # print('ConvBlock out1 shape:',out.shape)
        out = self.BASCD2(out)
        # print('ConvBlock out2 shape:',out.shape)
        return out


class DenseBlock(Layer):
    def __init__(self, numberLayers):
        super(DenseBlock, self).__init__()
        self.num = numberLayers
        self.ListDenseBlock = []
        for i in range(self.num):
            self.ListDenseBlock.append(ConvBlock())

    def call(self, inputs):
        data = inputs
        for i in range(self.num):
            # print('data', i, 'shape:', data.shape)
            out = self.ListDenseBlock[i](data)
            # print('out',i,'shape:',out.shape)
            # Concatenate the new feature maps with everything seen so far (channel axis)
            data = tf.concat([out, data], axis=3)
        return data


class TransLayer(Layer):
    def __init__(self):
        super(TransLayer, self).__init__()
        self.BN1 = BatchNormalization()
        self.Conv1 = Conv2D(64, kernel_size=[1, 1])
        self.pool1 = AvgPool2D(pool_size=[2, 2], strides=2)

    def call(self, inputs):
        out = self.BN1(inputs)
        out = self.Conv1(out)
        out = self.pool1(out)
        return out


class DenseNet(Model):
    def __init__(self):
        super(DenseNet, self).__init__()
        # Convolutional stem
        self.Conv = Conv2D(filters=32, kernel_size=(5, 5), strides=2)
        self.Pool = MaxPool2D(pool_size=(2, 2), strides=2)
        # DenseBlock 1
        self.layer1 = DenseBlock(6)
        # Transition 1
        self.TransLayer1 = TransLayer()
        # DenseBlock 2
        self.layer2 = DenseBlock(12)
        # Transition 2
        self.TransLayer2 = TransLayer()
        # DenseBlock 3
        self.layer3 = DenseBlock(24)
        # Transition 3
        self.TransLayer3 = TransLayer()
        # DenseBlock 4
        self.layer4 = DenseBlock(16)
        # Global average pooling over the final 7x7 feature map
        self.TransLayer4 = AvgPool2D(pool_size=(7, 7))
        # Flatten [b,1,1,576] to [b,576] before the classifier (3 classes)
        self.flatten = Flatten()
        self.softmax = Dense(3, activation='softmax')

    def call(self, inputs):
        out = self.Conv(inputs)
        # print('Conv shape:',out.shape) # (None, 112, 112, 32)
        out = self.Pool(out)
        # print('Pool shape:',out.shape) # (None, 56, 56, 32)
        out = self.layer1(out)
        # print('layer1 shape:', out.shape) # (None, 56, 56, 224)
        out = self.TransLayer1(out)
        # print('TransLayer1 shape:', out.shape) # (None, 28, 28, 64)
        out = self.layer2(out)
        # print('layer2 shape:', out.shape) # (None, 28, 28, 448)
        out = self.TransLayer2(out)
        # print('TransLayer2 shape:', out.shape) # (None, 14, 14, 64)
        out = self.layer3(out)
        # print('layer3 shape:', out.shape) # (None, 14, 14, 832)
        out = self.TransLayer3(out)
        # print('TransLayer3 shape:', out.shape) # (None, 7, 7, 64)
        out = self.layer4(out)
        # print('layer4 shape:', out.shape) # (None, 7, 7, 576)
        out = self.TransLayer4(out)
        # print('TransLayer4 shape:', out.shape) # (None, 1, 1, 576)
        out = self.flatten(out)
        out = self.softmax(out)
        return out


def main():
    DenseNet121 = DenseNet()
    DenseNet121.build(input_shape=(None, 227, 227, 3))
    tmp = tf.random.normal([3, 227, 227, 3])
    out = DenseNet121(tmp)
    print(out.shape)


if __name__ == '__main__':
    main()

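Conclusion

I implemented these classic CNNs in both TensorFlow 2.x and PyTorch. A few impressions:

- The two frameworks lay out the tensors of a 2D convolution differently. In PyTorch the channel is the second dimension of the tensor (NCHW), whereas in TensorFlow it is by default the fourth (NHWC).

- TensorFlow is more forgiving. If a newcomer wants to learn a deep learning framework and ecosystem is not a concern, I would recommend TensorFlow 2.x. For example, when writing a convolutional layer in TensorFlow you only specify the number of output channels, i.e. the number of kernels; the number of input channels is inferred at run time. In PyTorch you have to specify both the input and the output channel counts.

- TensorBoard is TensorFlow's visualization tool, and Visdom is a visualization tool commonly used with PyTorch. Both are powerful, but I personally prefer Visdom because its interface is cleaner.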
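The first point can be illustrated on the shape tuples alone. The sketch below converts between the two layouts with two small helper functions, whose names are made up for the illustration.

```python
def nchw_to_nhwc(shape):
    """PyTorch layout (batch, channels, height, width) to TensorFlow's default layout."""
    n, c, h, w = shape
    return (n, h, w, c)


def nhwc_to_nchw(shape):
    """TensorFlow's default layout (batch, height, width, channels) to PyTorch layout."""
    n, h, w, c = shape
    return (n, c, h, w)


print(nchw_to_nhwc((27, 3, 227, 227)))  # (27, 227, 227, 3)
print(nhwc_to_nchw((3, 227, 227, 3)))   # (3, 3, 227, 227)
```

On real tensors the same reordering is done with `tensor.permute(0, 2, 3, 1)` in PyTorch or `tf.transpose(x, [0, 3, 1, 2])` in TensorFlow.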

All of the code has been uploaded to GitHub.

References

- Wikipedia: https://en.wikipedia.org/wiki/AlexNet

- Alex Krizhevsky's homepage: https://www.cs.toronto.edu/~kriz/

- 雪饼: https://my.oschina.net/u/876354/blog/1634322

- Jiqizhixin (机器之心): https://www.jiqizhixin.com/graph/technologies/1d2204cb-e4cf-47c5-bfa2-691367fe2387

- Kaiming He's homepage: http://kaiminghe.com/

- Wikipedia: https://en.wikipedia.org/wiki/Residual_neural_network

- 日拱一卒: https://www.cnblogs.com/shine-lee/p/12363488.html

- AI之路: https://blog.csdn.net/u014380165/article/details/75142664

- ktulu7: https://www.jianshu.com/p/cced2e8378bf

- Gao Huang's homepage: http://www.gaohuang.net/