[Image Classification] MNIST Image Classification with the Broad Learning System: MATLAB Source Code

Introduction

I. The Origins of Broad Learning

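The term Broad Learning System (BLS) comes from a paper by C. L. Philip Chen (陈俊龙), dean of the Faculty of Science and Technology at the University of Macau, and his student, published in January 2018 in IEEE Transactions on Neural Networks and Learning Systems, vol. 29, no. 1, under the title "Broad Learning System: An Effective and Efficient Incremental Learning System Without the Need for Deep Architecture". The paper's aim is clear: it proposes a broad learning framework that can rival deep learning.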

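Why propose broad learning? As is well known, the biggest headache in deep learning is the huge number of parameters to be optimized, which usually costs a great deal of time and machine resources.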

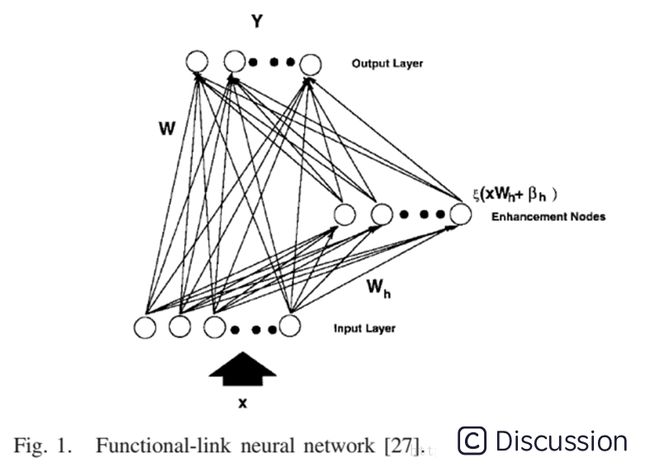

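The predecessor of broad learning is the random vector functional-link neural network (RVFLNN), which has been studied for a long time and is shown in the figure.

At first glance the network structure is nothing special, and that impression is correct: it is a single-layer feedforward network (SLFN) with added direct connections from the input layer to the output layer. The first layer is still called the input layer, the second layer is renamed the enhancement layer, and the third layer is the output layer. The network contains three kinds of connections: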

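- (input layer => enhancement layer): weighted sum followed by a nonlinear transformation

- (enhancement layer => output layer): linear transformation only

- (input layer => output layer): linear transformation only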

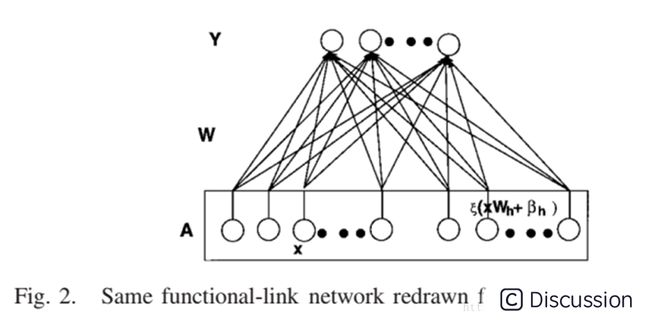

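So in an RVFLNN only the enhancement layer consists of neural network units in the true sense, because only it has an activation function; the rest of the network is linear. Now let us twist the structure a little.

When we place the enhancement layer and the input layer side by side and treat them as one block, the network becomes a linear transformation from A (input layer + enhancement layer) to Y. The weight matrix W of this linear transformation is exactly the set of linear connections from the combined input and enhancement layers to the output layer.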
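To make the structure concrete, here is a minimal MATLAB sketch of the three connection types and of the equivalent single linear map on A. The sizes, the `tanh` activation, and all variable names (`Wh`, `bh`, `We`, `Wd`) are assumptions chosen for illustration; they are not taken from the BLS demo code.

```matlab
% Minimal RVFLNN forward pass with toy sizes (illustrative names only).
n = 5; d = 4; k = 6; c = 3;        % samples, inputs, enhancement nodes, outputs
X = rand(n, d);                    % input layer, one sample per row

% (input => enhancement): random weights followed by a nonlinearity
Wh = 2*rand(d, k) - 1;
bh = 2*rand(1, k) - 1;
H  = tanh(X*Wh + repmat(bh, n, 1));

% (enhancement => output) and (input => output): both purely linear
We = rand(k, c);
Wd = rand(d, c);
Y  = H*We + X*Wd;

% The same output written as one linear map on A = [X | H]
A  = [X, H];
W  = [Wd; We];
Y2 = A*W;
fprintf('largest difference between the two forms: %g\n', max(abs(Y(:) - Y2(:))));
```

The two outputs agree up to floating-point rounding, which is why stacking the input and enhancement layers into A turns the output stage into a single linear problem.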

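At this point you may ask: how are the connections from the input layer to the enhancement layer handled or optimized? The answer is that they are left alone. These connections are randomly initialized and then kept fixed.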

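If the weights from the input layer to the enhancement layer are fixed, training the whole network reduces to finding the transformation W from A to Y, which is very simple: $W = A^{-1}Y$. Because A is generally not square, $A^{-1}$ here denotes the pseudoinverse. The input X is known, so the enhancement layer, and with it A, can be computed; the labels of the training data are known, so Y is known. Learning is then completed in a single step.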
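The one-step training can be sketched in a few lines of MATLAB. This is a toy regression on a sine curve, not the MNIST setup; the weight ranges, the `tanh` activation, and the variable names are assumptions made for the example.

```matlab
% One-step RVFLNN training on toy 1-D data (illustrative sketch).
rng(1);                             % fixed seed so the run is repeatable
n = 200; k = 40;                    % samples, enhancement nodes
x = linspace(-pi, pi, n)';          % toy inputs
y = sin(x);                         % toy targets

Wh = 4*rand(1, k) - 2;              % random input-to-enhancement weights, never trained
bh = 4*rand(1, k) - 2;
H  = tanh(x*Wh + repmat(bh, n, 1)); % enhancement layer
A  = [x, H];                        % input layer and enhancement layer side by side

W    = pinv(A) * y;                 % output weights from the pseudoinverse
rmse = sqrt(mean((A*W - y).^2));
fprintf('training RMSE: %g\n', rmse);
```

Only `W` is learned; `Wh` and `bh` stay at their random initial values, which is what makes the training a single linear solve.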

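Why does this work? Deep learning goes to great lengths to stack more and more layers in order to increase model complexity and better approximate the nonlinear function we want to learn. But are more nonlinear layers always better? Theory has long shown that a single-layer feedforward network (SLFN) can already act as a function approximator, so adding layers is not strictly necessary. The RVFLNN has likewise been proven able to approximate any continuous function on a compact set. Its nonlinear approximation power comes from the nonlinear activation function of the enhancement layer: with enough enhancement units, the network can be as nonlinear as needed.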

II. The Broad Learning System (BLS)

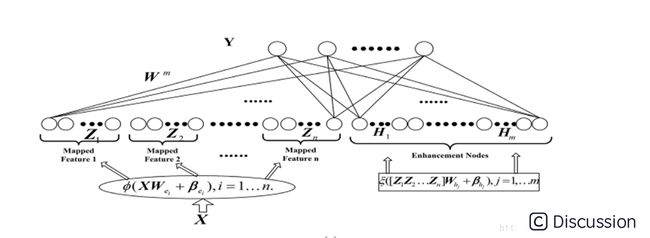

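The network described so far is the RVFLNN. The BLS makes one change to the input layer: instead of feeding in the raw data directly, it first transforms the data, which amounts to feature extraction, and uses the transformed features as the input layer of the original RVFLNN. The change itself is modest; its main message is that broad learning can be trained on features extracted by other models, that is, it can be combined with other machine learning algorithms. From now on the first layer is called the feature layer rather than the input layer.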

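Given the features Z, compute the enhancement layer H directly and concatenate the feature layer and the enhancement layer into $A = [Z|H]$, where the vertical bar denotes placing the two blocks side by side. Since the labels Y of the training data are known, it suffices to compute the weights $W = A^{-1}Y$. In practice the weight matrix is obtained by ridge regression, that is, by solving the following optimization problem for W (with $\sigma_1=\sigma_2=v=u=2$):

$$\arg\min_W \; \|AW - Y\|_v^{\sigma_1} + \lambda \|W\|_u^{\sigma_2}$$

which gives

$$W = (\lambda I + A^T A)^{-1} A^T Y$$

The whole procedure is a single step: once the data and the model structure are fixed, the optimal parameters W can be found directly.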
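The following MATLAB sketch walks through the same steps on random toy data: build a feature layer Z, an enhancement layer H, the matrix A = [Z | H], and solve the ridge-regression problem for W. It is a simplified illustration under stated assumptions: the feature nodes here are plain random linear maps, the labels are random, and the names `N1`, `N2`, `N3`, and `lambda` merely mirror the roles of `N1`, `N2`, `N3`, and `C` in the demo script. It does not reproduce what `bls_train` does internally.

```matlab
% One-shot BLS-style training on toy data (simplified sketch).
rng(2);
n = 100; d = 10; c = 3;            % samples, input dimension, classes
N1 = 3; N2 = 2; N3 = 8;            % nodes per window, windows, enhancement nodes
lambda = 2^-30;                    % plays the role of C in the demo script

X = rand(n, d);
labels = randi(c, n, 1);
Y = -ones(n, c);
Y(sub2ind([n, c], (1:n)', labels)) = 1;   % one-hot targets coded as -1/+1

% Feature layer Z: N2 windows of N1 random linear feature nodes each
Z = zeros(n, N1*N2);
for i = 1:N2
    Wf = 2*rand(d + 1, N1) - 1;           % last row acts as a bias
    Z(:, (i-1)*N1 + (1:N1)) = [X, ones(n, 1)] * Wf;
end

% Enhancement layer H: fixed random weights plus a nonlinearity
Wh = 2*rand(N1*N2 + 1, N3) - 1;
H  = tanh([Z, ones(n, 1)] * Wh);

A = [Z, H];                                    % A = [Z | H]
W = (A'*A + lambda*eye(size(A, 2))) \ (A'*Y);  % ridge-regression solution

[~, predicted] = max(A*W, [], 2);              % class = index of the largest output
fprintf('training accuracy on random toy labels: %.2f\n', mean(predicted == labels));
```

Solving with the backslash operator avoids forming an explicit inverse; the small `lambda` keeps the system solvable even when the columns of A are nearly collinear.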

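In the era of big data, however, the data are never fixed: new data keep arriving. A fixed model is also unrealistic, because the dimensionality of the data needs adjusting from time to time, for example when new features are added. This is why incremental learning algorithms were developed for the network above. Note that the core of broad learning lies in its incremental learning algorithms: when the number of samples reaches hundreds of millions, the matrix Z or X has hundreds of millions of rows, and computing the pseudoinverse of such a matrix on every weight update is not realistic.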

The core idea of incremental learning is to reuse the result of the previous computation together with the newly added data, so that the updated weights are obtained with only a small amount of extra computation.

For example, suppose the initial model turns out to fit poorly and more enhancement nodes are needed to reduce the loss. We append a column a to the matrix A to represent the new enhancement node, giving $[A|a]$. Computing the new weight matrix requires $[A|a]^{-1}$, so the problem becomes one of finding the generalized inverse of a partitioned matrix. Once $[A|a]^{-1}$ is available, the updated weights are $W_{new} = [A|a]^{-1}Y$. In the resulting closed form, $W_{n+1}$ is expressed in terms of the previous weight matrix $W_n$, which effectively reduces the cost of updating the weights.
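As a rough illustration of the column-append step, the MATLAB sketch below uses the standard update for the Moore-Penrose pseudoinverse of a partitioned matrix (Greville's method), written for a single new column. The variable names (`d_vec`, `resid`, `b_row`) and the toy sizes are assumptions; the demo script itself adds enhancement nodes in batches (for example `m=500` per step), for which the same idea applies block-wise.

```matlab
% Incremental update of the output weights after appending one column a to A.
rng(3);
n = 50; k = 6; c = 2;
A  = rand(n, k);                  % current [Z | H]
Y  = rand(n, c);                  % targets
Ap = pinv(A);                     % pseudoinverse kept from the previous step
W  = Ap * Y;                      % current output weights

a     = tanh(rand(n, 1) - 0.5);   % output of one newly added enhancement node
d_vec = Ap * a;
resid = a - A*d_vec;              % part of a outside the column space of A
if norm(resid) > 1e-10
    b_row = pinv(resid);          % equals resid'/(resid'*resid) for a nonzero column
else
    b_row = (d_vec'*Ap) / (1 + d_vec'*d_vec);
end
Ap_new = [Ap - d_vec*b_row; b_row];         % pseudoinverse of [A | a]
W_new  = [W - d_vec*(b_row*Y); b_row*Y];    % new weights, reusing the old W

err = norm(W_new - pinv([A, a])*Y, 'fro');
fprintf('difference from full recomputation: %g\n', err);
```

The update touches only matrix-vector products with the stored `Ap` and `W`, so no pseudoinverse of the enlarged matrix has to be computed from scratch; the final `pinv([A, a])` call is there only to check the result.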

Source code

```matlab
%% Demo for the BLS models, including the proposed incremental learning algorithms

%% Load the MNIST dataset
clear; warning off all; format compact;
load mnist;

%% Normalize the samples and reset the label data train_y and test_y as N*C matrices
train_x = double(train_x/255);
train_y = double(train_y);
% test_x = double(train_x/255);
% test_y = double(train_y);
test_x = double(test_x/255);
test_y = double(test_y);
train_y = (train_y-1)*2+1;   % maps label entries 0/1 to -1/+1
test_y  = (test_y-1)*2+1;
assert(isfloat(train_x), 'train_x must be a float');
assert(all(train_x(:)>=0) && all(train_x(:)<=1), 'all data in train_x must be in [0:1]');
assert(isfloat(test_x), 'test_x must be a float');
assert(all(test_x(:)>=0) && all(test_x(:)<=1), 'all data in test_x must be in [0:1]');

disp('Press any key to run the one shot BLS demo');
pause

%% Broad learning system with one-shot structure
C = 2^-30; s = .8;   % the l2 regularization parameter and the shrinkage scale of the enhancement nodes
N11 = 10;            % feature nodes per window
N2 = 10;             % number of windows of feature nodes
N33 = 11000;         % number of enhancement nodes
epochs = 10;         % number of epochs
train_err = zeros(1,epochs); test_err = zeros(1,epochs);
train_time = zeros(1,epochs); test_time = zeros(1,epochs);
% rand('state',67797325)   % 12000; the random seed recommended by the reference HELM [10].
N1 = N11; N3 = N33;
for j = 1:epochs
    [TrainingAccuracy,TestingAccuracy,Training_time,Testing_time] = bls_train(train_x,train_y,test_x,test_y,s,C,N1,N2,N3);
    train_err(j) = TrainingAccuracy * 100;
    test_err(j) = TestingAccuracy * 100;
    train_time(j) = Training_time;
    test_time(j) = Testing_time;
end
save(['mnistresultoneshot' num2str(N3)], 'train_err', 'test_err', 'train_time', 'test_time');

disp('Press any key to run the one shot BLS demo with BP algorithm');
pause

%% Broad learning system with one-shot structure and fine tuning under the BP algorithm
C = 2^-30; s = .8;   % the l2 regularization parameter and the shrinkage scale of the enhancement nodes
N11 = 10;            % feature nodes per window
N2 = 10;             % number of windows of feature nodes
N33 = 5000;          % number of enhancement nodes
epochs = 1;          % number of epochs
train_err = zeros(1,epochs); test_err = zeros(1,epochs);
train_time = zeros(1,epochs); test_time = zeros(1,epochs);
% rand('state',67797325)   % 12000; the random seed recommended by the reference HELM [10].
N1 = N11; N3 = N33;
for j = 1:epochs
    [TrainingAccuracy,TestingAccuracy,Training_time,Testing_time] = bls_train_bp(train_x,train_y,test_x,test_y,s,C,N1,N2,N3);
    train_err(j) = TrainingAccuracy * 100;
    test_err(j) = TestingAccuracy * 100;
    train_time(j) = Training_time;
    test_time(j) = Testing_time;
end
save(['mnistresultbp' num2str(N3)], 'train_err', 'test_err', 'train_time', 'test_time');

disp('Press any key to run the BLS demo for increment of m enhancement nodes');
pause

%% Broad learning system with increment of m enhancement nodes
C = 2^-30; s = .8;   % the l2 regularization parameter and the shrinkage scale of the enhancement nodes
N11 = 10;            % feature nodes per window
N2 = 10;             % number of windows of feature nodes
N33 = 9000;          % number of enhancement nodes
m = 500;             % number of enhancement nodes in each incremental learning
l = 5;               % steps of incremental learning
epochs = 1;          % number of epochs
% rand('state',67797325)   % 12000; the random seed recommended by the reference HELM [10].
N1 = N11; N3 = N33;
[train_err,test_err,train_time,test_time,Testing_time,Training_time] = bls_train_enhance(train_x,train_y,test_x,test_y,s,C,N1,N2,N3,epochs,m,l);
save('mnistresultenhance', 'train_err', 'test_err', 'train_time', 'test_time', 'Testing_time', 'Training_time');

disp('Press any key to run the BLS demo for increment of m2+m3 enhancement nodes and m1 feature nodes');
pause

%% Broad learning system with increment of m2+m3 enhancement nodes and m1 feature nodes
C = 2^-30;     % the regularization parameter for sparse regularization
s = .8;        % the shrinkage parameter for enhancement nodes
N11 = 10;      % feature nodes per window
N2 = 6;        % number of windows of feature nodes
N33 = 3000;    % number of enhancement nodes
epochs = 1;    % number of epochs
m1 = 10;       % number of feature nodes per increment step
m2 = 750;      % number of enhancement nodes related to the incremental feature nodes per increment step
m3 = 1250;     % number of enhancement nodes in each incremental learning
l = 5;         % steps of incremental learning
train_err_t = zeros(epochs,l); test_err_t = zeros(epochs,l);
train_time_t = zeros(epochs,l); test_time_t = zeros(epochs,l);
Testing_time_t = zeros(epochs,1); Training_time_t = zeros(epochs,1);
% rand('state',67797325)   % 12000; the random seed recommended by the reference HELM [10].
N1 = N11; N3 = N33;
for i = 1:epochs
    [train_err,test_err,train_time,test_time,Testing_time,Training_time] = bls_train_enhancefeature(train_x,train_y,test_x,test_y,s,C,N1,N2,N3,m1,m2,m3,l);
    train_err_t(i,:) = train_err; test_err_t(i,:) = test_err;
    train_time_t(i,:) = train_time; test_time_t(i,:) = test_time;
    Testing_time_t(i) = Testing_time; Training_time_t(i) = Training_time;
end
save('mnistresultenhancefeature', 'train_err_t', 'test_err_t', 'train_time_t', 'test_time_t', 'Testing_time_t', 'Training_time_t');

disp('Press any key to run the BLS demo for increment of m input patterns');
pause

%% Broad learning system with increment of m input patterns
train_xf = train_x; train_yf = train_y;
train_x = train_xf(1:10000,:); train_y = train_yf(1:10000,:);   % the selected input patterns of initial incremental learning
C = 2^-30;     % the regularization parameter for sparse regularization
s = .8;        % the shrinkage parameter for enhancement nodes
N11 = 10;      % feature nodes per window
N2 = 10;       % number of windows of feature nodes
N33 = 5000;    % number of enhancement nodes
epochs = 1;    % number of epochs
m = 10000;     % number of added input patterns per increment step
l = 6;         % steps of incremental learning
train_err_t = zeros(epochs,l); test_err_t = zeros(epochs,l);
train_time_t = zeros(epochs,l); test_time_t = zeros(epochs,l);
Testing_time_t = zeros(epochs,1); Training_time_t = zeros(epochs,1);
% rand('state',67797325)   % 12000; the random seed recommended by the reference HELM [10].
N1 = N11; N3 = N33;
for i = 1:epochs
    [train_err,test_err,train_time,test_time,Testing_time,Training_time] = bls_train_input(train_x,train_y,train_xf,train_yf,test_x,test_y,s,C,N1,N2,N3,m,l);
    train_err_t(i,:) = train_err; test_err_t(i,:) = test_err;
    train_time_t(i,:) = train_time; test_time_t(i,:) = test_time;
    Testing_time_t(i) = Testing_time; Training_time_t(i) = Training_time;
end
save('mnistresultinput', 'train_err_t', 'test_err_t', 'train_time_t', 'test_time_t', 'Testing_time_t', 'Training_time_t');

disp('Press any key to run the BLS demo for increment of m input patterns and m2 enhancement nodes');
pause

%% Broad learning system with increment of m input patterns and m2 enhancement nodes
% train_xf = train_x; train_yf = train_y;
% train_x = train_xf(1:10000,:); train_y = train_yf(1:10000,:);   % the selected input patterns of initial incremental learning
C = 2^-30;     % the regularization parameter for sparse regularization
s = .8;        % the shrinkage parameter for enhancement nodes
N11 = 10;      % feature nodes per window
N2 = 10;       % number of windows of feature nodes
N33 = 3000;    % number of enhancement nodes
epochs = 1;    % number of epochs
m = 10000;     % number of added input patterns per incremental step
m2 = 1600;     % number of added enhancement nodes per incremental step
l = 6;         % steps of incremental learning
train_err_t = zeros(epochs,l); test_err_t = zeros(epochs,l);
train_time_t = zeros(epochs,l); test_time_t = zeros(epochs,l);
Testing_time_t = zeros(epochs,1); Training_time_t = zeros(epochs,1);
% rand('state',67797325)   % 12000; the random seed recommended by the reference HELM [10].
N1 = N11; N3 = N33;
for i = 1:epochs
    [train_err,test_err,train_time,test_time,Testing_time,Training_time] = bls_train_inputenhance(train_x,train_y,train_xf,train_yf,test_x,test_y,s,C,N1,N2,N3,m,m2,l);
    train_err_t(i,:) = train_err; test_err_t(i,:) = test_err;
    train_time_t(i,:) = train_time; test_time_t(i,:) = test_time;
    Testing_time_t(i) = Testing_time; Training_time_t(i) = Training_time;
end
save('mnistresultinputenhance', 'train_err_t', 'test_err_t', 'train_time_t', 'test_time_t', 'Testing_time_t', 'Training_time_t');
```

Results

```
BLSdemoMNIST
Press any key to run the one shot BLS demo
Feature nodes in window 1.000000: Max Val of Output 197.231923 Min Val -132.445934
Feature nodes in window 2.000000: Max Val of Output 162.050448 Min Val -259.157923
Feature nodes in window 3.000000: Max Val of Output 158.825012 Min Val -170.848908
Feature nodes in window 4.000000: Max Val of Output 183.610345 Min Val -176.640724
Feature nodes in window 5.000000: Max Val of Output 156.930629 Min Val -246.324992
Feature nodes in window 6.000000: Max Val of Output 205.564476 Min Val -224.889567
Feature nodes in window 7.000000: Max Val of Output 190.490079 Min Val -201.207669
Feature nodes in window 8.000000: Max Val of Output 222.445795 Min Val -212.138630
Feature nodes in window 9.000000: Max Val of Output 139.036012 Min Val -169.134295
Feature nodes in window 10.000000: Max Val of Output 142.961186 Min Val -160.903292
Enhancement nodes: Max Val of Output 3.420353 Min Val -0.244599
Training has been finished!
The Total Training Time is : 102.494 seconds
Training Accuracy is : 99.7367 %
Testing has been finished!
The Total Testing Time is : 5.7862 seconds
Testing Accuracy is : 98.61 %
Feature nodes in window 1.000000: Max Val of Output 193.033289 Min Val -151.255824
Feature nodes in window 2.000000: Max Val of Output 168.607872 Min Val -179.982576
Feature nodes in window 3.000000: Max Val of Output 151.063291 Min Val -229.175817
Feature nodes in window 4.000000: Max Val of Output 176.036863 Min Val -184.911995
Feature nodes in window 5.000000: Max Val of Output 206.601918 Min Val -158.703191
Feature nodes in window 6.000000: Max Val of Output 151.741127 Min Val -168.885708
Feature nodes in window 7.000000: Max Val of Output 170.957269 Min Val -207.027683
Feature nodes in window 8.000000: Max Val of Output 181.140414 Min Val -239.784371
Feature nodes in window 9.000000: Max Val of Output 150.326291 Min Val -199.228402
Feature nodes in window 10.000000: Max Val of Output 199.252684 Min Val -158.362546
Enhancement nodes: Max Val of Output 3.472201 Min Val -0.215642
Training has been finished!
The Total Training Time is : 67.9411 seconds
Training Accuracy is : 99.7367 %
Testing has been finished!
The Total Testing Time is : 7.4485 seconds
Testing Accuracy is : 98.52 %
Feature nodes in window 1.000000: Max Val of Output 209.865969 Min Val -182.405300
Feature nodes in window 2.000000: Max Val of Output 235.302850 Min Val -189.549668
Feature nodes in window 3.000000: Max Val of Output 187.654419 Min Val -151.607187
Feature nodes in window 4.000000: Max Val of Output 221.993407 Min Val -216.096033
Feature nodes in window 5.000000: Max Val of Output 155.948920 Min Val -204.342383
Feature nodes in window 6.000000: Max Val of Output 141.583633 Min Val -174.913595
Feature nodes in window 7.000000: Max Val of Output 163.356243 Min Val -188.587996
Feature nodes in window 8.000000: Max Val of Output 161.750612 Min Val -180.693640
Feature nodes in window 9.000000: Max Val of Output 165.778937 Min Val -181.464787
Feature nodes in window 10.000000: Max Val of Output 224.677511 Min Val -134.572391
Enhancement nodes: Max Val of Output 3.606442 Min Val -0.236093
Training has been finished!
The Total Training Time is : 87.4557 seconds
Training Accuracy is : 99.7283 %
Testing has been finished!
The Total Testing Time is : 10.3998 seconds
Testing Accuracy is : 98.61 %
Feature nodes in window 1.000000: Max Val of Output 224.958358 Min Val -170.193686
Feature nodes in window 2.000000: Max Val of Output 194.514557 Min Val -208.327173
Feature nodes in window 3.000000: Max Val of Output 221.825322 Min Val -149.311265
Feature nodes in window 4.000000: Max Val of Output 164.107288 Min Val -158.172390
Feature nodes in window 5.000000: Max Val of Output 175.199592 Min Val -205.682766
Feature nodes in window 6.000000: Max Val of Output 148.039458 Min Val -232.191557
Feature nodes in window 7.000000: Max Val of Output 180.215697 Min Val -164.596921
Feature nodes in window 8.000000: Max Val of Output 204.296698 Min Val -261.752318
Feature nodes in window 9.000000: Max Val of Output 148.888232 Min Val -175.420035
Feature nodes in window 10.000000: Max Val of Output 158.381113 Min Val -186.385498
Enhancement nodes: Max Val of Output 3.426611 Min Val -0.213306
Training has been finished!
The Total Training Time is : 70.7262 seconds
Training Accuracy is : 99.7417 %
Testing has been finished!
The Total Testing Time is : 7.8665 seconds
Testing Accuracy is : 98.62 %
Feature nodes in window 1.000000: Max Val of Output 187.042762 Min Val -151.085976
Feature nodes in window 2.000000: Max Val of Output 143.741841 Min Val -220.929141
Feature nodes in window 3.000000: Max Val of Output 169.951028 Min Val -212.490180
Feature nodes in window 4.000000: Max Val of Output 289.859616 Min Val -130.221157
Feature nodes in window 5.000000: Max Val of Output 175.216899 Min Val -161.939318
Feature nodes in window 6.000000: Max Val of Output 184.618817 Min Val -193.127798
Feature nodes in window 7.000000: Max Val of Output 244.702315 Min Val -134.325021
Feature nodes in window 8.000000: Max Val of Output 189.362845 Min Val -192.633648
Feature nodes in window 9.000000: Max Val of Output 279.149738 Min Val -139.633519
Feature nodes in window 10.000000: Max Val of Output 114.273544 Min Val -227.540166
Enhancement nodes: Max Val of Output 2.799741 Min Val -0.223121
Training has been finished!
The Total Training Time is : 64.4458 seconds
Training Accuracy is : 99.7233 %
Testing has been finished!
The Total Testing Time is : 3.1583 seconds
Testing Accuracy is : 98.61 %
Feature nodes in window 1.000000: Max Val of Output 197.550232 Min Val -144.378822
Feature nodes in window 2.000000: Max Val of Output 185.717489 Min Val -199.374493
Feature nodes in window 3.000000: Max Val of Output 147.551743 Min Val -186.059951
Feature nodes in window 4.000000: Max Val of Output 191.530521 Min Val -147.093382
Feature nodes in window 5.000000: Max Val of Output 138.906715 Min Val -167.454957
Feature nodes in window 6.000000: Max Val of Output 188.726521 Min Val -170.718024
Feature nodes in window 7.000000: Max Val of Output 164.679873 Min Val -191.313601
Feature nodes in window 8.000000: Max Val of Output 176.794643 Min Val -198.186120
Feature nodes in window 9.000000: Max Val of Output 212.549570 Min Val -151.211118
Feature nodes in window 10.000000: Max Val of Output 189.429728 Min Val -268.050392
Enhancement nodes: Max Val of Output 3.458946 Min Val -0.234552
Training has been finished!
The Total Training Time is : 98.2127 seconds
Training Accuracy is : 99.7083 %
Testing has been finished!
The Total Testing Time is : 4.0624 seconds
Testing Accuracy is : 98.52 %
Feature nodes in window 1.000000: Max Val of Output 169.947671 Min Val -230.259097
Feature nodes in window 2.000000: Max Val of Output 187.996530 Min Val -164.891535
Feature nodes in window 3.000000: Max Val of Output 174.947416 Min Val -247.660674
Feature nodes in window 4.000000: Max Val of Output 167.589510 Min Val -182.023083
Feature nodes in window 5.000000: Max Val of Output 165.654639 Min Val -180.222647
Feature nodes in window 6.000000: Max Val of Output 140.067802 Min Val -238.472102
Feature nodes in window 7.000000: Max Val of Output 150.675923 Min Val -163.925895
Feature nodes in window 8.000000: Max Val of Output 196.565904 Min Val -201.551648
Feature nodes in window 9.000000: Max Val of Output 160.964453 Min Val -202.823595
Feature nodes in window 10.000000: Max Val of Output 200.572165 Min Val -156.066579
Enhancement nodes: Max Val of Output 3.474922 Min Val -0.223896
Training has been finished!
The Total Training Time is : 87.7135 seconds
Training Accuracy is : 99.7417 %
Testing has been finished!
The Total Testing Time is : 2.732 seconds
Testing Accuracy is : 98.64 %
Feature nodes in window 1.000000: Max Val of Output 127.903963 Min Val -213.316681
Feature nodes in window 2.000000: Max Val of Output 155.038787 Min Val -180.186374
Feature nodes in window 3.000000: Max Val of Output 202.938832 Min Val -208.113174
Feature nodes in window 4.000000: Max Val of Output 158.992307 Min Val -172.330486
Feature nodes in window 5.000000: Max Val of Output 149.274163 Min Val -239.594803
Feature nodes in window 6.000000: Max Val of Output 254.333166 Min Val -151.058499
Feature nodes in window 7.000000: Max Val of Output 146.588936 Min Val -216.985141
Feature nodes in window 8.000000: Max Val of Output 142.499936 Min Val -233.436812
Feature nodes in window 9.000000: Max Val of Output 193.282726 Min Val -191.544201
Feature nodes in window 10.000000: Max Val of Output 141.050001 Min Val -182.792208
Enhancement nodes: Max Val of Output 3.542802 Min Val -0.234569
Training has been finished!
The Total Training Time is : 72.4605 seconds
Training Accuracy is : 99.745 %
Testing has been finished!
The Total Testing Time is : 3.1958 seconds
Testing Accuracy is : 98.64 %
Feature nodes in window 1.000000: Max Val of Output 218.772784 Min Val -208.236765
Feature nodes in window 2.000000: Max Val of Output 310.514595 Min Val -129.830654
Feature nodes in window 3.000000: Max Val of Output 241.129595 Min Val -172.617081
Feature nodes in window 4.000000: Max Val of Output 260.665208 Min Val -160.102947
Feature nodes in window 5.000000: Max Val of Output 155.496253 Min Val -205.477988
Feature nodes in window 6.000000: Max Val of Output 185.787754 Min Val -178.261461
Feature nodes in window 7.000000: Max Val of Output 261.771560 Min Val -156.336839
Feature nodes in window 8.000000: Max Val of Output 192.373735 Min Val -162.358270
Feature nodes in window 9.000000: Max Val of Output 229.965693 Min Val -156.619508
Feature nodes in window 10.000000: Max Val of Output 123.569253 Min Val -250.158478
Enhancement nodes: Max Val of Output 3.423224 Min Val -0.232924
Training has been finished!
The Total Training Time is : 74.1174 seconds
Training Accuracy is : 99.7467 %
Testing has been finished!
The Total Testing Time is : 7.2923 seconds
Testing Accuracy is : 98.61 %
Feature nodes in window 1.000000: Max Val of Output 217.388485 Min Val -152.120232
Feature nodes in window 2.000000: Max Val of Output 256.180746 Min Val -183.626129
Feature nodes in window 3.000000: Max Val of Output 157.092001 Min Val -181.354268
Feature nodes in window 4.000000: Max Val of Output 207.950323 Min Val -166.509437
Feature nodes in window 5.000000: Max Val of Output 155.502495 Min Val -247.480722
Feature nodes in window 6.000000: Max Val of Output 167.192962 Min Val -195.591846
Feature nodes in window 7.000000: Max Val of Output 127.299207 Min Val -244.663429
Feature nodes in window 8.000000: Max Val of Output 181.659436 Min Val -189.241315
Feature nodes in window 9.000000: Max Val of Output 151.453113 Min Val -212.202012
Feature nodes in window 10.000000: Max Val of Output 241.829813 Min Val -188.288168
Enhancement nodes: Max Val of Output 3.330709 Min Val -0.215613
Training has been finished!
The Total Training Time is : 65.475 seconds
Training Accuracy is : 99.7133 %
Testing has been finished!
The Total Testing Time is : 3.2262 seconds
Testing Accuracy is : 98.47 %
Press any key to run the one shot BLS demo with BP algorithm
```
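To read the ten one-shot runs at a glance, the log above can be condensed into a few summary statistics. The short MATLAB sketch below simply copies the accuracy and training-time values from the printed output into vectors (the variable names are illustrative) and reports their mean and range.

```matlab
% Summary of the ten one-shot runs; values copied from the log above.
test_acc   = [98.61 98.52 98.61 98.62 98.61 98.52 98.64 98.64 98.61 98.47];
train_acc  = [99.7367 99.7367 99.7283 99.7417 99.7233 99.7083 99.7417 99.745 99.7467 99.7133];
train_time = [102.494 67.9411 87.4557 70.7262 64.4458 98.2127 87.7135 72.4605 74.1174 65.475];

fprintf('testing accuracy : mean %.3f %%, min %.2f %%, max %.2f %%\n', ...
        mean(test_acc), min(test_acc), max(test_acc));
fprintf('training accuracy: mean %.4f %%\n', mean(train_acc));
fprintf('training time    : mean %.2f s, min %.2f s, max %.2f s\n', ...
        mean(train_time), min(train_time), max(train_time));
```

Across these runs the testing accuracy stays between 98.47 % and 98.64 %, with a mean of 98.585 %, while the training time varies between roughly 64 and 102 seconds.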