PyTorch Study Notes 9: Loss Functions and Backpropagation

目录

1.损失函数

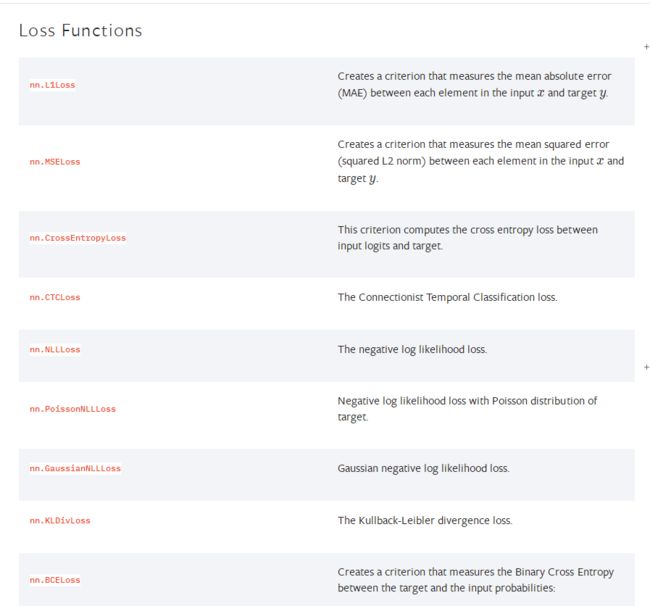

2.PyTorch中的损失函数

2.1nn.L1Loss

2.2nn.MSELoss

2.3nn.CrossEntropyLoss

3.将交叉熵损失函数用到上节的神经网络结构中

4.优化器

正文

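1. Loss functions

What a loss function does:

1. It measures the gap between the actual output and the target.

2. It provides the basis for updating the model so that its output improves (backpropagation).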
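The two roles can be sketched with a tiny example. The one-parameter "model" `prediction = w * x` and all the numbers below are made up for illustration; they are not from the original notes.

```python
import torch
from torch import nn

# Hypothetical one-parameter model: prediction = w * x
w = torch.tensor(2.0, requires_grad=True)
x = torch.tensor([1.0, 2.0, 3.0])
target = torch.tensor([3.0, 6.0, 9.0])

loss_fn = nn.L1Loss()
prediction = w * x                  # [2, 4, 6]
loss = loss_fn(prediction, target)  # role 1: mean(|[1, 2, 3]|) = 2.0
loss.backward()                     # role 2: compute d(loss)/dw

print(loss.item())    # 2.0
print(w.grad.item())  # -2.0, so increasing w would reduce the loss
```

The negative gradient says that raising `w` shrinks the gap, which is exactly the information an optimizer uses later in these notes.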

2. Loss functions in PyTorch

2.1 nn.L1Loss

import torch
from torch.nn import L1Loss

# Both tensors must be floating point
Input = torch.tensor([1, 2, 3], dtype=torch.float32)
target = torch.tensor([1, 2, 5], dtype=torch.float32)

loss = L1Loss()
# Mean absolute error: (|1-1| + |2-2| + |3-5|) / 3
output = loss(Input, target)
print(output)

Note that the inputs must be floating-point tensors.
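A minimal sketch of the float requirement and of the `reduction` argument of `L1Loss`. The variable names (`pred`, `loss_none`, `int_pred`) are chosen here for illustration; `reduction="none"` is a third documented option that returns the per-element losses.

```python
import torch
from torch.nn import L1Loss

pred = torch.tensor([1, 2, 3], dtype=torch.float32)
target = torch.tensor([1, 2, 5], dtype=torch.float32)

loss_mean = L1Loss(reduction="mean")(pred, target)  # (0 + 0 + 2) / 3
loss_sum = L1Loss(reduction="sum")(pred, target)    # 0 + 0 + 2
loss_none = L1Loss(reduction="none")(pred, target)  # per-element: [0, 0, 2]
print(loss_mean, loss_sum, loss_none)

# A tensor built from Python ints defaults to int64;
# .float() converts it to float32 before it is passed to the loss.
int_pred = torch.tensor([1, 2, 3])
print(int_pred.dtype, int_pred.float().dtype)
```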

With the default reduction="mean", print(output) shows:

tensor(0.6667)

With reduction="sum", that is, loss = L1Loss(reduction="sum"), it shows:

tensor(2.)

2.2 nn.MSELoss

import torch
from torch import nn

Input = torch.tensor([1, 2, 3], dtype=torch.float32)
target = torch.tensor([1, 2, 5], dtype=torch.float32)

loss_mse = nn.MSELoss()
# Mean squared error with the default reduction="mean"
output_mse = loss_mse(Input, target)
print(output_mse)

Output:

tensor(1.3333)

This is the mean of the squared differences: (0 + 0 + 2^2) / 3 ≈ 1.3333.

2.3 nn.CrossEntropyLoss

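Cross-entropy is used for multi-class classification.

Suppose we have a three-class problem: deciding whether an image shows a person, a dog, or a cat. In the loss formula, x corresponds to the network output ([0.1, 0.2, 0.3]) and class corresponds to the target (1); the term -x[class] is the negated element of x at index class.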
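For reference, the formula being described is presumably the unweighted, single-sample form given in the PyTorch documentation for `nn.CrossEntropyLoss`. It is written out below together with the arithmetic for this example; the intermediate values are rounded to four decimal places.

```latex
\mathrm{loss}(x, \mathit{class})
  = -x[\mathit{class}] + \log\Bigl(\sum_{j} \exp(x[j])\Bigr)

% With x = [0.1, 0.2, 0.3] and class = 1 (log is the natural logarithm):
\mathrm{loss}
  = -0.2 + \ln\bigl(e^{0.1} + e^{0.2} + e^{0.3}\bigr)
  \approx -0.2 + \ln(3.6764)
  \approx -0.2 + 1.3019
  = 1.1019
```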

Let's verify this with some code:

import torch
from torch import nn

x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])
# Reshape from (3,) to (1, 3): one sample, three classes
x = torch.reshape(x, (1, 3))

loss_cross = nn.CrossEntropyLoss()
result_cross = loss_cross(x, y)
print(result_cross)

Note that this loss function expects a batched input of shape (N, C), that is, batch size and number of classes, which is why reshape is used.

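Output:

tensor(1.1019)

Working it out on a calculator gives the same result, as expected. Note that log here is actually the natural logarithm ln.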

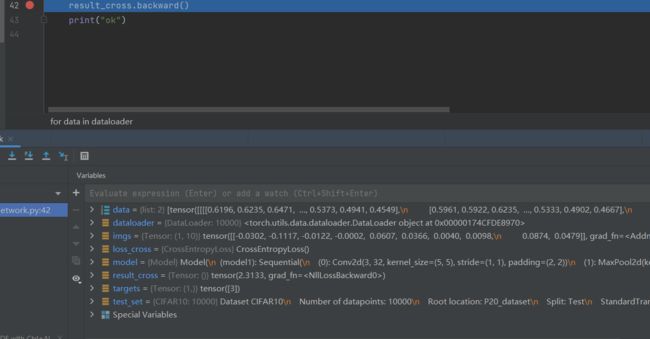
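The calculator check can also be reproduced in code. This is a small sketch that applies -x[class] + ln(sum of exp(x[j])) by hand, using `torch.logsumexp` for the second term, and compares it with `nn.CrossEntropyLoss`; the names `manual` and `builtin` are introduced here for illustration.

```python
import torch
from torch import nn

x = torch.tensor([[0.1, 0.2, 0.3]])  # shape (N=1, C=3)
y = torch.tensor([1])                # target class index

# Hand-written version: -x[class] + ln(sum_j exp(x[j]))
manual = -x[0, y[0]] + torch.logsumexp(x[0], dim=0)

# Built-in version
builtin = nn.CrossEntropyLoss()(x, y)

print(manual, builtin)  # both are approximately 1.1019
```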

3. Applying the cross-entropy loss to the network from the previous section

import torch
import torchvision.datasets
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

test_set = torchvision.datasets.CIFAR10(root="P20_dataset", train=False,
                                        transform=torchvision.transforms.ToTensor(),
                                        download=True)
dataloader = DataLoader(test_set, batch_size=1)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


model = Model()
for data in dataloader:
    imgs, targets = data
    print(imgs)
    # (1, 3, 32, 32) -> (1, 10)
    imgs = model(imgs)
    # After the model, imgs is a 2-D (1, 10) tensor; the values are not
    # probabilities but the raw outputs of the last linear layer
    print(imgs)
    print(targets)

Inspecting imgs and targets shows that the network works. The output is as follows (only the first sample is shown):

Files already downloaded and verified

tensor([[[[0.6196, 0.6235, 0.6471, ..., 0.5373, 0.4941, 0.4549],

[0.5961, 0.5922, 0.6235, ..., 0.5333, 0.4902, 0.4667],

[0.5922, 0.5922, 0.6196, ..., 0.5451, 0.5098, 0.4706],

...,

[0.2667, 0.1647, 0.1216, ..., 0.1490, 0.0510, 0.1569],

[0.2392, 0.1922, 0.1373, ..., 0.1020, 0.1137, 0.0784],

[0.2118, 0.2196, 0.1765, ..., 0.0941, 0.1333, 0.0824]],

[[0.4392, 0.4353, 0.4549, ..., 0.3725, 0.3569, 0.3333],

[0.4392, 0.4314, 0.4471, ..., 0.3725, 0.3569, 0.3451],

[0.4314, 0.4275, 0.4353, ..., 0.3843, 0.3725, 0.3490],

...,

[0.4863, 0.3922, 0.3451, ..., 0.3804, 0.2510, 0.3333],

[0.4549, 0.4000, 0.3333, ..., 0.3216, 0.3216, 0.2510],

[0.4196, 0.4118, 0.3490, ..., 0.3020, 0.3294, 0.2627]],

[[0.1922, 0.1843, 0.2000, ..., 0.1412, 0.1412, 0.1294],

[0.2000, 0.1569, 0.1765, ..., 0.1216, 0.1255, 0.1333],

[0.1843, 0.1294, 0.1412, ..., 0.1333, 0.1333, 0.1294],

...,

[0.6941, 0.5804, 0.5373, ..., 0.5725, 0.4235, 0.4980],

[0.6588, 0.5804, 0.5176, ..., 0.5098, 0.4941, 0.4196],

[0.6275, 0.5843, 0.5176, ..., 0.4863, 0.5059, 0.4314]]]])

tensor([[ 0.0778, 0.0686, -0.0873, -0.0305, -0.0285, -0.0514, -0.0745, 0.1134,

0.0639, -0.0471]], grad_fn=<...>)

tensor([3])

......

Now introduce the loss function:

import torchvision.datasets
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

test_set = torchvision.datasets.CIFAR10(root="P20_dataset", train=False,
                                        transform=torchvision.transforms.ToTensor(),
                                        download=True)
dataloader = DataLoader(test_set, batch_size=1)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss_cross = nn.CrossEntropyLoss()
model = Model()
for data in dataloader:
    imgs, targets = data
    imgs = model(imgs)
    # imgs now has shape (1, 10), which is what loss_cross expects
    result_cross = loss_cross(imgs, targets)
    print(result_cross)

Each printed value is the error between the network's output and the true label.

Files already downloaded and verified

tensor(2.2437, grad_fn=<...>)

tensor(2.2852, grad_fn=<...>)

tensor(2.2734, grad_fn=<...>)

tensor(2.3660, grad_fn=<...>)

tensor(2.2655, grad_fn=<...>)

tensor(2.2731, grad_fn=<...>)

......

Adding the following right after the line result_cross = loss_cross(imgs, targets) in the code above performs backpropagation.

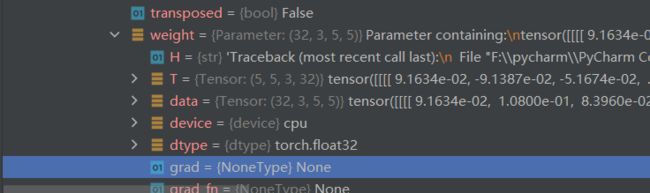

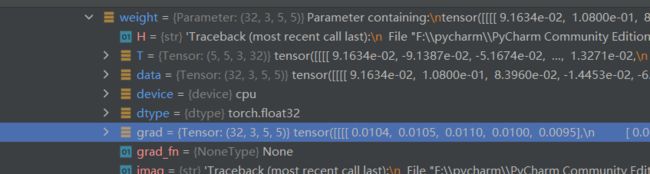

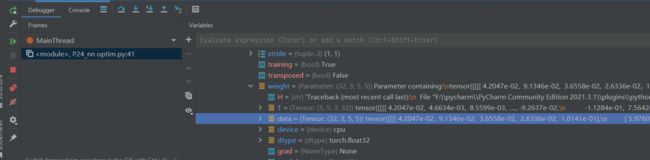

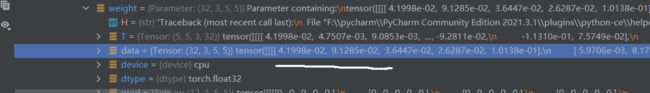

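result_cross.backward()

Set a breakpoint on this line.

In the debugger's variable view, open model -> model1 -> Protected Attributes -> _modules -> "0" -> weight -> grad.

Step to the next line: grad now holds values, which shows that backpropagation has run.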
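The same check can be done without a debugger. The sketch below uses a much smaller stand-in network and a random 8x8 "image" instead of the CIFAR10 model above, purely so that it runs quickly without downloading anything; those choices are assumptions made for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Small stand-in network (not the CIFAR10 model from these notes)
model = nn.Sequential(
    nn.Conv2d(3, 4, 3, padding=1),
    nn.Flatten(),
    nn.Linear(4 * 8 * 8, 10),
)
imgs = torch.rand(1, 3, 8, 8)  # random stand-in for one image
targets = torch.tensor([3])

first_conv = model[0]
grad_before = first_conv.weight.grad
print(grad_before)  # None: no backward pass has run yet

loss = nn.CrossEntropyLoss()(model(imgs), targets)
loss.backward()

# After backward(), grad is a tensor with the same shape as the weight
print(first_conv.weight.grad.shape)
```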

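4. Optimizers

Calling backward on the loss runs backpropagation and yields a gradient for every parameter that needs adjusting. An optimizer then uses those gradients to update the parameters so that the error decreases.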
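As a rough illustration of what "updating the parameters" means, the sketch below checks that one step of plain `torch.optim.SGD` (no momentum, no weight decay) equals `w - lr * grad`. The toy loss `(w ** 2).sum()` and the numbers are assumptions chosen for illustration.

```python
import torch

w = torch.tensor([1.0, -2.0], requires_grad=True)
optim = torch.optim.SGD([w], lr=0.1)

loss = (w ** 2).sum()  # toy loss; its gradient is 2 * w
loss.backward()        # w.grad is now [2.0, -4.0]

# What plain SGD should produce: w - lr * grad
expected = w.detach() - 0.1 * w.grad

optim.step()           # the optimizer applies the update in place
print(w.detach(), expected)
```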

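4.1 Official documentation

How to use an optimizer:

Construct the optimizer (that is, choose the optimization algorithm).

Call its step method to update the parameters, for example the weights of the convolution kernels.

Example: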

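for input, target in dataset:
    optimizer.zero_grad()
    output = model(input)
    loss = loss_fn(output, target)
    loss.backward()
    optimizer.step()

The second line, optimizer.zero_grad(), clears the gradients left over from the previous backward call. Without it the gradients accumulate across iterations and training goes wrong.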
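The accumulation can be seen in a small sketch. The single parameter `w` and the toy loss `3 * w` are assumptions for illustration; the point is only that a second `backward()` adds to the existing gradient unless `zero_grad()` is called first.

```python
import torch

w = torch.tensor(1.0, requires_grad=True)
optim = torch.optim.SGD([w], lr=0.1)

(3 * w).backward()
first = w.grad.clone()        # gradient of 3 * w is 3

(3 * w).backward()            # no zero_grad() in between
accumulated = w.grad.clone()  # 3 + 3 = 6: the gradients were summed

optim.zero_grad()             # reset the gradient of every managed parameter
(3 * w).backward()
after_reset = w.grad.clone()  # back to 3

print(first, accumulated, after_reset)
```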

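4.2 Using an optimizer (SGD, stochastic gradient descent)

Reuse the code from section 3 to watch how the convolution kernel weights change under the optimizer.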

import torch
import torchvision.datasets
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

test_set = torchvision.datasets.CIFAR10(root="P20_dataset", train=False,
                                        transform=torchvision.transforms.ToTensor(),
                                        download=True)
dataloader = DataLoader(test_set, batch_size=1)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss_cross = nn.CrossEntropyLoss()
model = Model()
# SGD over all model parameters with learning rate 0.01
optim = torch.optim.SGD(model.parameters(), lr=0.01)
for data in dataloader:
    imgs, targets = data
    outputs = model(imgs)
    result_cross = loss_cross(outputs, targets)
    optim.zero_grad()          # clear gradients from the previous step
    result_cross.backward()    # compute new gradients
    optim.step()               # update the parameters

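Inspect the data of weight in the debugger.

As the loop runs, the values in data change (in this run they were seen getting smaller). However, each iteration of this loop takes a different image, so the effect on the loss is hard to see. Wrapping this loop in an outer loop makes it much clearer that the loss is decreasing.

Change the loop above to: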

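for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = model(imgs)
        result_cross = loss_cross(outputs, targets)
        optim.zero_grad()
        result_cross.backward()
        optim.step()
        # Accumulate the loss over the whole pass through the dataset
        running_loss += result_cross
    print(running_loss)

This iterates over the dataset 20 times, resetting running_loss to 0 at the start of each pass. After every pass over the dataset, running_loss is smaller than before. Because the dataset is fairly large, I stopped the run after two passes of the outer loop.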

Files already downloaded and verified

tensor(18652.5039, grad_fn=<...>)

tensor(16108.1016, grad_fn=<...>)

......
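The printed totals carry a grad_fn because running_loss accumulates the loss tensor itself. A common variation, sketched below, adds `loss.item()` instead so that the total is a plain Python float and holds no reference to the computation graph. To keep the sketch fast and self-contained it uses random synthetic data and a single `nn.Linear` layer in place of CIFAR10 and the model above; those stand-ins, the batch size, and the learning rate are all assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Synthetic stand-in for the dataset: 32 samples, 20 features, 10 classes
inputs = torch.rand(32, 20)
labels = torch.randint(0, 10, (32,))

model = nn.Linear(20, 10)  # stand-in for the CNN in these notes
loss_fn = nn.CrossEntropyLoss()
optim = torch.optim.SGD(model.parameters(), lr=0.1)

epoch_losses = []
for epoch in range(5):
    running_loss = 0.0
    # Mini-batches of 8 samples each
    for x, y in zip(inputs.split(8), labels.split(8)):
        optim.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optim.step()
        running_loss += loss.item()  # plain float, no grad_fn attached
    epoch_losses.append(running_loss)
    print(epoch, running_loss)
```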