[PyTorch in Practice] Image Classification and Recognition: Handwritten Digit Recognition (MNIST) and General Object Recognition (CIFAR-10)

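Contents

- Image Classification and Recognition

- (1) Hands-on: CNN-Based Handwritten Digit Recognition (Dataset: MNIST)

- (2) Hands-on: CNN-Based Image Classification (Dataset: CIFAR-10)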

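Image Classification and Recognition

Differences between classification, recognition, and detection

- Classification: assign the object in an image to one of several different categories.

- Example: CIFAR classification.

- Recognition: tell apart individual objects that belong to the same category.

- Examples: face recognition, iris recognition, fingerprint recognition.

- Detection: locate where an object is in the image.

- Examples: face detection, pedestrian detection, vehicle detection, traffic-sign detection, video surveillance.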

(1) Hands-on: CNN-Based Handwritten Digit Recognition (Dataset: MNIST)

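############################################
# Main steps:
# (1) Download the data with torchvision's built-in mnist module.
# (2) Preprocess the data with torchvision.transforms and build a data iterator with torch.utils.data.DataLoader.
# (3) Visualize the raw data.
# (4) Build the neural network model with the nn toolbox.
# (5) Instantiate the model and define the loss function and optimizer.
# (6) Train the model.
# (7) Visualize the results.
############################################
# (1) MNIST is a classic machine-learning dataset made up of four files: training images, training labels, test images, and test labels. It contains 60,000 training samples and 10,000 test samples; each sample is a 28 x 28 pixel grayscale image of a handwritten digit.
# (2) The downloaded files cannot be opened by simply unpacking them or with an image viewer, because they are not in any standard image format. They store raw bytes, so a program is needed to read them.
############################################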
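As note (2) above says, the raw MNIST files are byte streams rather than images. The following is a minimal sketch of reading such a file by hand, assuming the IDX layout used for MNIST: a big-endian header (two zero bytes, a type code, the number of dimensions, then one 4-byte size per dimension) followed by unsigned bytes. The function `parse_idx` and the tiny in-memory `sample` are made up for illustration; the real files are gzip-compressed and would have to be decompressed first.

```python
import struct


def parse_idx(raw: bytes):
    """Parse an IDX byte string into (dims, flat list of unsigned bytes)."""
    # Header: two zero bytes, a type code (0x08 = unsigned byte), number of dimensions.
    zero, dtype, ndim = struct.unpack(">HBB", raw[:4])
    if zero != 0 or dtype != 0x08:
        raise ValueError("not an unsigned-byte IDX file")
    # Each dimension size is a 4-byte big-endian unsigned integer.
    dims = struct.unpack(">" + "I" * ndim, raw[4:4 + 4 * ndim])
    data = list(raw[4 + 4 * ndim:])
    expected = 1
    for d in dims:
        expected *= d
    if len(data) != expected:
        raise ValueError("payload size does not match header")
    return dims, data


# Hypothetical stand-in for an image file: 2 images of 2 x 2 pixels.
# (A real MNIST image file has dims (60000, 28, 28) or (10000, 28, 28).)
sample = struct.pack(">HBB", 0, 0x08, 3) + struct.pack(">III", 2, 2, 2) + bytes(range(8))
dims, pixels = parse_idx(sample)
print(dims, pixels)
```

In the tutorial itself this parsing is done by `mnist.MNIST`, so the sketch is only meant to show what "stored as bytes" means in practice.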

import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn.functional as F
import torch.optim as optim
import torch.nn as nn
from torchvision.datasets import mnist  # Built-in MNIST dataset
import torchvision.transforms as transforms  # Image preprocessing module
from torch.utils.data import DataLoader
import os
os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'  # Works around "OMP: Error #15: Initializing libiomp5md.dll"

############################################
# (1) Define the hyperparameters
train_batch_size = 64
test_batch_size = 128
num_epoches = 20
lr = 0.01  # Learning rate
momentum = 0.5

############################################
# (2) Download the data and preprocess it
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize([0.5], [0.5])])
# 1. transforms.Compose() chains several transforms together and applies them in list order.
# 2. transforms.Normalize(mean, std) standardizes each channel with the given mean and standard deviation: output = (input - mean) / std.
#    One channel: [0.5], [0.5]; three channels: [m1, m2, m3], [s1, s2, s3]
train_dataset = mnist.MNIST('./pytorch_knowledge', train=True, transform=transform, download=True)
test_dataset = mnist.MNIST('./pytorch_knowledge', train=False, transform=transform)
# download controls whether the dataset is downloaded; set it to False if MNIST is already in the directory.
train_loader = DataLoader(train_dataset, batch_size=train_batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=test_batch_size, shuffle=False)
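To make the effect of `Normalize([0.5], [0.5])` concrete: `ToTensor()` scales pixel values to the range [0, 1], and the normalization step then computes `(x - mean) / std` per channel, which maps that range to [-1, 1]. The plain-Python sketch below reproduces only the arithmetic; the helpers `normalize` and `unnormalize` are made up for illustration and are not torchvision functions.

```python
def normalize(x, mean=0.5, std=0.5):
    """Same arithmetic as the per-channel normalization: (x - mean) / std."""
    return (x - mean) / std


def unnormalize(y, mean=0.5, std=0.5):
    """Inverse mapping, back to the [0, 1] range expected for display."""
    return y * std + mean


pixels = [0.0, 0.25, 0.5, 1.0]              # values as produced by ToTensor()
normalized = [normalize(p) for p in pixels]
restored = [unnormalize(v) for v in normalized]
print(normalized)  # [-1.0, -0.5, 0.0, 1.0]
print(restored)    # [0.0, 0.25, 0.5, 1.0]
```

With mean 0.5 and std 0.5, the inverse `y * 0.5 + 0.5` is the same expression as the `img / 2 + 0.5` used by the `imshow` helper in the CIFAR-10 section.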

############################################
# (3) Visualize the raw data
examples = enumerate(test_loader)
batch_idx, (example_data, example_targets) = next(examples)
fig = plt.figure()
for i in range(6):
    plt.subplot(2, 3, i + 1)
    plt.tight_layout()
    plt.imshow(example_data[i][0], cmap='gray', interpolation='none')
    plt.title('Ground Truth: {}'.format(example_targets[i].item()))  # .item() shows 7 rather than tensor(7)
    plt.xticks([])
    plt.yticks([])
plt.show()

############################################
# (4) Build the network model
class Net(nn.Module):
    # Uses nn.Sequential to group layers together.
    # Note: this model is a fully connected network (Linear + BatchNorm1d layers); it contains no convolution layers.
    def __init__(self, in_dim, n_hidden_1, n_hidden_2, out_dim):
        super(Net, self).__init__()
        self.layer1 = nn.Sequential(nn.Linear(in_dim, n_hidden_1), nn.BatchNorm1d(n_hidden_1))
        self.layer2 = nn.Sequential(nn.Linear(n_hidden_1, n_hidden_2), nn.BatchNorm1d(n_hidden_2))
        self.layer3 = nn.Sequential(nn.Linear(n_hidden_2, out_dim))

    def forward(self, x):
        x = F.relu(self.layer1(x))
        x = F.relu(self.layer2(x))
        x = self.layer3(x)  # Raw class scores (logits)
        return x

if __name__ == '__main__':
    ############################################
    # (5) Use the GPU if one is available; otherwise fall back to the CPU
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
    # Instantiate the network: 28 * 28 input pixels, two hidden layers, 10 output classes
    model = Net(28 * 28, 300, 100, 10)
    model.to(device)
    # Define the loss function and optimizer
    optimizer = optim.SGD(model.parameters(), lr=lr, momentum=momentum)
    criterion = nn.CrossEntropyLoss()

    ############################################
    # (6) Train the model
    losses = []
    acces = []
    eval_losses = []
    eval_acces = []
    for epoch in range(num_epoches):
        # Decay the learning rate by a factor of 10 every 5 epochs.
        # Note that epoch 0 also satisfies this condition, so training effectively starts at lr * 0.1.
        if epoch % 5 == 0:
            optimizer.param_groups[0]['lr'] *= 0.1
        # Training set #######################################
        train_loss = 0
        train_acc = 0
        # Switch the model to training mode
        model.train()
        for img, label in train_loader:
            img = img.to(device)
            label = label.to(device)
            img = img.view(img.size(0), -1)  # Flatten each image to a 784-element vector
            out = model(img)  # Forward pass
            loss = criterion(out, label)  # Compute the loss
            optimizer.zero_grad()  # Clear old gradients
            loss.backward()  # Backward pass
            optimizer.step()  # Update the parameters
            # Accumulate the loss
            train_loss += loss.item()
            # Accumulate the classification accuracy
            _, pred = out.max(1)  # Class with the highest score
            num_correct = (pred == label).sum().item()  # Correct predictions in this batch
            acc = num_correct / img.shape[0]
            train_acc += acc
        train_loss_temp = train_loss / len(train_loader)  # Mean training loss for this epoch
        train_acc_temp = train_acc / len(train_loader)  # Mean training accuracy for this epoch
        losses.append(train_loss_temp)
        acces.append(train_acc_temp)

        # Test set #######################################
        eval_loss = 0
        eval_acc = 0
        # Switch the model to evaluation mode
        model.eval()
        with torch.no_grad():  # No gradients are needed for evaluation
            for img, label in test_loader:
                img = img.to(device)
                label = label.to(device)
                img = img.view(img.size(0), -1)
                out = model(img)  # Forward pass
                loss = criterion(out, label)  # Compute the loss
                # Accumulate the loss
                eval_loss += loss.item()
                # Accumulate the classification accuracy
                _, pred = out.max(1)  # Class with the highest score
                num_correct = (pred == label).sum().item()
                acc = num_correct / img.shape[0]
                eval_acc += acc
        # Use the test-set totals here (not the training totals)
        eval_loss_temp = eval_loss / len(test_loader)  # Mean test loss for this epoch
        eval_acc_temp = eval_acc / len(test_loader)  # Mean test accuracy for this epoch
        eval_losses.append(eval_loss_temp)
        eval_acces.append(eval_acc_temp)
        print('epoch:{}, Train_loss:{:.4f}, Train_Acc:{:.4f}, Test_loss:{:.4f}, Test_Acc:{:.4f}'
              .format(epoch, train_loss_temp, train_acc_temp, eval_loss_temp, eval_acc_temp))

    # (7) Visualize the results
    plt.title('Train Loss')
    plt.plot(np.arange(len(losses)), losses)
    plt.legend(['train loss'], loc='upper right')
    plt.xlabel('Epoch')  # x-axis label (one loss value is recorded per epoch)
    plt.ylabel('Loss')  # y-axis label
    plt.ylim((0, 1.2))  # y-axis display range: plt.ylim(y_min, y_max)
    plt.show()

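# Note 1: model.eval() switches the model to evaluation mode: Batch Normalization uses its stored running statistics instead of per-batch statistics, and Dropout is disabled.

# Note 2: model.train() switches the model to training mode: Batch Normalization normalizes with per-batch statistics and updates its running statistics, and Dropout is active.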
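The difference between the two modes mentioned in the notes above can be illustrated without PyTorch. Below is a minimal pure-Python sketch of a single-feature batch normalization layer, modelled on the documented behaviour of `nn.BatchNorm1d` (batch statistics in training mode, running statistics in evaluation mode, default momentum 0.1). `TinyBatchNorm` is a made-up illustrative class, not a PyTorch API, and it omits the learnable scale and shift.

```python
class TinyBatchNorm:
    """Single-feature batch norm without the learnable scale and shift."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum = momentum
        self.eps = eps
        self.running_mean = 0.0
        self.running_var = 1.0
        self.training = True

    def __call__(self, batch):
        if self.training:
            n = len(batch)
            mean = sum(batch) / n
            var = sum((x - mean) ** 2 for x in batch) / n  # biased variance, used to normalize
            unbiased = var * n / (n - 1)                   # unbiased variance, used for the running estimate
            # Running statistics are an exponential moving average of the batch statistics.
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * unbiased
        else:
            # Evaluation mode: use the stored statistics and leave them unchanged.
            mean, var = self.running_mean, self.running_var
        return [(x - mean) / (var + self.eps) ** 0.5 for x in batch]


bn = TinyBatchNorm()
batch = [1.0, 2.0, 3.0, 4.0]

train_out = bn(batch)   # training mode: normalized with this batch's own mean and variance
bn.training = False     # the flag that model.eval() sets on every submodule
eval_out = bn(batch)    # evaluation mode: normalized with the running statistics
print(train_out)
print(eval_out)
```

The same input gives different outputs in the two modes, which is why forgetting `model.eval()` before testing can change the reported accuracy for a network that contains BatchNorm layers.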

(2) Hands-on: CNN-Based Image Classification (Dataset: CIFAR-10)

Related reading: CIFAR-10 dataset: introduction, download, and detailed usage guide

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
import numpy as np
import os
os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'  # Works around "OMP: Error #15: Initializing libiomp5md.dll"

###################################################################
def imshow(img):
    """Display an image tensor."""
    img = img / 2 + 0.5  # Undo Normalize(0.5, 0.5): back to the [0, 1] range
    npimg = img.numpy()
    plt.imshow(np.transpose(npimg, (1, 2, 0)))  # (C, H, W) -> (H, W, C) for matplotlib
    plt.show()


class CNNNet(nn.Module):
    """Model definition: two convolution + pooling stages followed by two fully connected layers."""

    def __init__(self):
        super(CNNNet, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5, stride=1)
        self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(in_channels=16, out_channels=36, kernel_size=3, stride=1)
        self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.fc1 = nn.Linear(1296, 128)  # 1296 = 36 channels * 6 * 6
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.pool1(F.relu(self.conv1(x)))
        x = self.pool2(F.relu(self.conv2(x)))
        x = x.view(-1, 36 * 6 * 6)  # Flatten the feature maps
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax itself,
        # so no ReLU is applied after the final layer.
        x = self.fc2(F.relu(self.fc1(x)))
        return x

if __name__ == '__main__':
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")  # Use the GPU if available; otherwise use the CPU.
    net = CNNNet()  # Instantiate the model
    net = net.to(device)  # Move the model to the selected device
    ###################################################################
    # (1) Download the data, preprocess it, and build the iterators
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
    trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=16, shuffle=True, num_workers=2)
    testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)
    testloader = torch.utils.data.DataLoader(testset, batch_size=16, shuffle=False, num_workers=2)
    classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
    ###################################################################

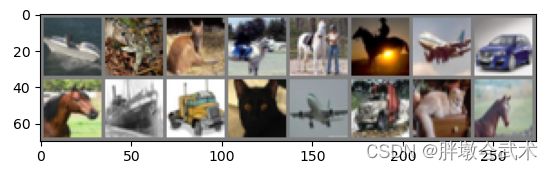

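    # (2) Fetch one random batch of training data
    dataiter = iter(trainloader)
    images, labels = next(dataiter)  # The built-in next() works across PyTorch versions; dataiter.next() fails on newer releases
    imshow(torchvision.utils.make_grid(images))  # Display the images
    print(' '.join('%5s' % classes[labels[j]] for j in range(len(labels))))  # Print the labels
    print("net has {} parameters in total".format(sum(x.numel() for x in net.parameters())))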

    ###################################################################
    # (3) Train the model
    criterion = nn.CrossEntropyLoss()  # Cross-entropy loss
    optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)  # Optimizer
    first_four = nn.Sequential(*list(net.children())[:4])  # Example: take the model's first four layers (not used in the training below)
    for epoch in range(10):
        running_loss = 0.0
        for i, data in enumerate(trainloader, 0):
            # Get a batch of training data
            inputs, labels = data
            inputs, labels = inputs.to(device), labels.to(device)
            optimizer.zero_grad()  # Clear the parameter gradients
            outputs = net(inputs)  # Forward pass
            loss = criterion(outputs, labels)  # Compute the loss
            loss.backward()  # Backward pass
            optimizer.step()  # Update the parameters
            # Report the loss
            running_loss += loss.item()
            if i % 2000 == 1999:  # Print every 2000 mini-batches; with 50000 / 16 = 3125 batches per epoch, this prints once per epoch
                print('[%d, %5d] loss: %.3f' % (epoch + 1, i + 1, running_loss / 2000))
                running_loss = 0.0
    print('Finished Training')

    ###################################################################
    # (4) Evaluate the model
    correct = 0
    total = 0
    with torch.no_grad():
        for data in testloader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)
            outputs = net(images)  # Forward pass
            _, predicted = torch.max(outputs, 1)  # Index of the highest score is the predicted class
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy of the network on the 10000 test images: %d %%' % (100 * correct / total))
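The input size `1296` of `fc1` follows from the layer shapes in `CNNNet`: a convolution or pooling layer maps a side length n to floor((n + 2p - k) / s) + 1, where k is the kernel size, s the stride, and p the padding (0 here). The sketch below traces a 32 x 32 CIFAR-10 image through the layers and also counts the parameters that the script prints. The helper `out_size` is made up for illustration.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


side = 32                                   # CIFAR-10 images are 32 x 32
side = out_size(side, kernel=5, stride=1)   # conv1 -> 28
side = out_size(side, kernel=2, stride=2)   # pool1 -> 14
side = out_size(side, kernel=3, stride=1)   # conv2 -> 12
side = out_size(side, kernel=2, stride=2)   # pool2 -> 6
flat_features = 36 * side * side            # 36 output channels from conv2
print(side, flat_features)                  # 6 1296

# Parameter count: weights plus biases for each layer.
conv1_params = 3 * 16 * 5 * 5 + 16
conv2_params = 16 * 36 * 3 * 3 + 36
fc1_params = flat_features * 128 + 128
fc2_params = 128 * 10 + 10
total_params = conv1_params + conv2_params + fc1_params + fc2_params
print(total_params)                         # 173742
```

Almost all of the parameters sit in `fc1`, which is typical for small networks that flatten feature maps into a fully connected layer.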