小布助手对话短文本语义匹配阅读源代码1--build_vocab.py understand

小布助手对话短文本语义匹配

对于大佬这段代码的解读

首先进入build_vocab.py之中,查看形成词表的过程

关键代码

counts = [3,5,3,3,5,5]

接下来调用词频形成新的vocab.txt的词表过程

(由于数据是脱敏的,这里考虑词语出现的频率,即词频)

for ch in childPath:

print('file_name = ')

print(modelPath+ch+'vocab.txt')

r"""

nezha-base-count3/pretrain/nezha_model/vocab.txt:词频:3,词表大小:9448

nezha-base-count3/finetuning/models/vocab.txt:词频:3,词表大小:9448

nezha-base-count5/pretrain/nezha_model/vocab.txt:词频:5,词表大小:6930

nezha-base-count5/finetuning/models/vocab.txt:词频:5,词表大小:6930

bert-base-count3/pretrain/bert_model/vocab.txt:词频:3,词表大小:9448

bert-base-count3/finetuning/models/vocab.txt:词频:3,词表大小:9448

bert-base-count3-len100/finetuning/models/vocab.txt:词频:3,词表大小:9448

bert-base-count5/pretrain/bert_model/vocab.txt:词频:5,词表大小:6930

bert-base-count5-len32/finetuning/models/vocab.txt:词频:5,词表大小:6930

"""

with open(modelPath+ch+'vocab.txt', "w", encoding="utf-8") as f:

for i in vocab:

f.write(str(i)+'\n')

接下来进入train_bert.py之中读取预训练的相应代码

self.bert = BertModel(config, add_pooling_layer=False)

self.cls = BertOnlyMLMHead(config)

进入到BertOnlyMLMHead类别之中去查看

self.predictions = BertLMPredictionHead(config)

进入到BertLMPredictionHead类别之中进行查看

self.transform = BertPredictionHeadTransform(config)

self.decoder = nn.Linear(config.hidden_size, config.vocab_size, bias=False)

self.bias = nn.Parameter(torch.zeros(config.vocab_size))

self.decoder.bias = self.bias

接下来进入BertPredictionHeadTransform类别之中去查看

self.dense = nn.Linear(config.hidden_size, config.hidden_size)

if isinstance(config.hidden_act, str):

self.transform_act_fn = ACT2FN[config.hidden_act]

else:

self.transform_act_fn = config.hidden_act

self.LayerNorm = nn.LayerNorm(config.hidden_size, eps=config.layer_norm_eps)

最后计算对应的损失内容

masked_lm_loss = None

if labels is not None:

loss_fct = CrossEntropyLoss() # -100 index = padding token

masked_lm_loss = loss_fct(prediction_scores.view(-1, self.config.vocab_size), labels.view(-1))

#判断是否单词预测准确,计算对应的交叉熵损失函数

if not return_dict:

output = (prediction_scores,) + outputs[2:]

return ((masked_lm_loss,) + output) if masked_lm_loss is not None else output

return MaskedLMOutput(

loss=masked_lm_loss,

logits=prediction_scores,

hidden_states=outputs.hidden_states,

attentions=outputs.attentions,

)

!!!注意!!!这里标志的时候labels的列表那一栏一定要用对应的-100填充,而不是用0来填充,否则这里交叉熵会偏向于0,参数不能够很好地打乱

因为在计算交叉熵的时候,如果predict的这个位置有vocab_size的概率,而label这个位置的标记为0,那么这个位置就会计算相应的交叉熵,也就是说如果labels中的padding都为0的时候,相当于后面padding的位置都被[MASK]掉了并且真实概率为0,这种情况下训练多了,自然模型的参数就会偏向同一个位置,因为你预测的标签大多数都得为0

这里的return_dict的标志一直为True,所以中间的if not return_dict并没有调用,最后返回的为MaskedLMOutput的类别

return MaskedLMOutput(

loss=masked_lm_loss,

logits=prediction_scores,

hidden_states=outputs.hidden_states,

attentions=outputs.attentions,

)

这里输出的MaskedLMOutput的内容为

MaskedLMOutput(loss=tensor(15.9516, device='cuda:0', grad_fn=), logits=tensor([

[[ -7.1032, -7.7221, -7.8289, ..., -5.4624, -4.8262, -1.8701],

[ -6.6322, -7.9446, -7.6102, ..., -7.7900, -6.9102, -0.4106],

[ -7.4549, -8.6645, -8.5466, ..., -6.1190, -5.7303, -2.0438],

...,

[ -8.5308, -9.6921, -9.5116, ..., -6.1869, -6.7780, -2.4076],

[ -8.2630, -9.3654, -9.2944, ..., -6.7684, -6.5533, -2.4583],

[ -8.1570, -9.1377, -9.1000, ..., -7.6866, -7.1135, -1.3787]],

[[ -6.6938, -7.0423, -6.4993, ..., -2.5347, -4.2420, 1.0630],

[ -6.9207, -6.9863, -6.6222, ..., -3.4255, -3.8394, 2.3977],

[ -6.3949, -6.4493, -6.1290, ..., -2.4942, -3.9657, 3.1857],

...,

[ -5.1594, -5.3287, -5.1231, ..., -2.8701, -3.4257, 0.3638],

[ -5.8926, -5.8620, -5.7480, ..., -3.9318, -5.5555, -0.8947],

[ -4.8589, -5.0712, -4.8379, ..., -2.0654, -3.1606, 0.2632]],

[[ -6.5105, -6.5952, -6.9593, ..., -4.0284, -1.6125, -0.7875],

[ -6.0690, -5.9327, -6.2466, ..., -3.7733, -1.3420, -1.5750],

[ -5.9906, -6.2979, -6.4472, ..., -4.0264, 0.3995, -2.1036],

...,

[ -6.9289, -6.9648, -7.4615, ..., -4.7760, -2.1715, -3.0456],

[ -6.4378, -6.4278, -6.8050, ..., -4.9064, -1.3761, -0.4627],

[ -6.0452, -6.0653, -6.4155, ..., -4.0300, -0.8617, -0.9327]],

...,

[[ -7.6371, -8.5441, -8.1590, ..., -5.5289, -8.8313, -0.4131],

[ -7.6919, -8.7561, -8.4187, ..., -6.8730, -8.5900, -0.8731],

[ -6.6849, -7.7773, -7.3579, ..., -7.2328, -6.1711, -0.1909],

...,

[ -7.7695, -8.7595, -8.4814, ..., -8.3588, -9.8023, 0.8142],

[ -6.7915, -7.4320, -7.0011, ..., -5.2655, -7.0732, 0.3049],

[ -6.2993, -6.9163, -6.5946, ..., -7.1879, -4.9885, -0.6570]],

[[ -5.8317, -6.1604, -6.1133, ..., -4.5175, -3.4055, 3.5319],

[ -6.3563, -6.5795, -6.2000, ..., -6.1266, -5.9040, 3.8659],

[ -6.3519, -6.9847, -6.5742, ..., -5.6252, -1.6596, 3.5224],

...,

[ -7.1194, -7.0762, -7.5309, ..., -3.8539, -6.0422, 0.5517],

[ -4.0919, -4.7526, -4.2898, ..., -3.8815, -3.4095, 2.5926],

[ -4.8606, -5.0404, -4.9578, ..., -4.2013, -3.6956, 1.2091]],

[[-10.3326, -10.0173, -10.1646, ..., -6.9618, -4.9550, 1.3689],

[-10.0917, -9.6132, -9.9763, ..., -7.9266, -5.4265, 0.2729],

[-10.2127, -10.1853, -10.4537, ..., -9.1625, -5.5202, -1.0849],

...,

[-12.2502, -11.6368, -12.1717, ..., -10.3624, -8.7308, 0.8698],

[-13.9685, -13.0956, -13.7665, ..., -11.6488, -7.1361, -0.2751],

[-10.7794, -10.0167, -10.4134, ..., -8.3896, -6.0833, 0.0164]]],

device='cuda:0', grad_fn=), hidden_states=None, attentions=None)

MaskedLMOutput就是重新定义的存储输出数据的一个类别,进入MaskedLMOutput之中查看定义

class MaskedLMOutput(ModelOutput):

loss: Optional[torch.FloatTensor] = None

logits: torch.FloatTensor = None

hidden_states: Optional[Tuple[torch.FloatTensor]] = None

attentions: Optional[Tuple[torch.FloatTensor]] = None

最后面对应的网络层结构为

(cls): BertOnlyMLMHead(

(predictions): BertLMPredictionHead(

(transform): BertPredictionHeadTransform(

(dense): Linear(in_features=768, out_features=768, bias=True)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

)

(decoder): Linear(in_features=768, out_features=21128, bias=True)

)

)

经过

model.resize_token_embeddings(len(tokenizer))

重新定义输出网络层的维度之后,网络结构为

(cls): BertOnlyMLMHead(

(predictions): BertLMPredictionHead(

(transform): BertPredictionHeadTransform(

(dense): Linear(in_features=768, out_features=768, bias=True)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

)

(decoder): Linear(in_features=768, out_features=9448, bias=True)

)

)

接下来分析对于数据的定义

train_MLM_data=MLM_Data(train_data,maxlen,tokenizer)

batch_size = 10

dl=blockShuffleDataLoader(train_MLM_data,None,key=lambda x:len(x[0])+len(x[1]),shuffle=False

,batch_size=batch_size,collate_fn=train_MLM_data.collate)

这里面对于数据集合MLM_Data的调用

先调用blockShuffleDataLoader之中的__iter__函数

def __iter__(self):

self.dataset.data=blockShuffle(self.dataset.data,self.batch_size,self.sortBsNum,self.key)

#print('self.dataset.data = ')

#print(self.dataset.data[0:10])

if self.num_workers == 0:

return _SingleProcessDataLoaderIter(self)

else:

return _MultiProcessingDataLoaderIter(self)

调用两次blockShuffleDataLoader中的__iter__函数???两次???不知道为什么调用两次???

然后先调用__getitem__函数batch_size次,将每一个数据取出来

def __getitem__(self, item):

print('MLM_Data __getitem__')

#self.tk.cls_token_id = 2,[UNK]

#self.tk.sep_token_id = 3,[SEP]

text1,text2,_=self.data[item]#预处理,mask等操作

if random.random()>0.5:

text1,text2=text2,text1#交换位置

text1,text2=truncate(text1,text2,self.maxLen)

text1_ids,text2_ids = self.tk.convert_tokens_to_ids(text1),self.tk.convert_tokens_to_ids(text2)

text1_ids, out1_ids = self.random_mask(text1_ids)#添加mask预测

text2_ids, out2_ids = self.random_mask(text2_ids)

input_ids = [self.tk.cls_token_id] + text1_ids + [self.tk.sep_token_id] + text2_ids + [self.tk.sep_token_id]#拼接

token_type_ids=[0]*(len(text1_ids)+2)+[1]*(len(text2_ids)+1)

labels = [-100] + out1_ids + [-100] + out2_ids + [-100]

assert len(input_ids)==len(token_type_ids)==len(labels)

return {'input_ids':input_ids,'token_type_ids':token_type_ids,'labels':labels}

最后再调用collate函数,将一个批次的数据统一处理

@classmethod

def collate(cls,batch):

print('MLM_Data collate')

#collate_fn参数实现batch的输出内容

input_ids=[i['input_ids'] for i in batch]

token_type_ids=[i['token_type_ids'] for i in batch]

labels=[i['labels'] for i in batch]

input_ids=paddingList(input_ids,0,returnTensor=True)

token_type_ids=paddingList(token_type_ids,0,returnTensor=True)

labels=paddingList(labels,-100,returnTensor=True)

#!!!注意labels padding方法

attention_mask=(input_ids!=0)

return {'input_ids':input_ids,'token_type_ids':token_type_ids

,'attention_mask':attention_mask,'labels':labels}

中间调用两次blockShuffleDataLoader中的__iter__函数的原因需要进入Trainer类之中去查看

trainer = Trainer(

model=model,

args=training_args,

train_dataLoader=dl,

prediction_loss_only=True

)

但是在调用blockShuffleDataLoader的过程中,我们发现,第一次经历了blockShuffle函数操作之后

print('origin self.dataset = ')

print(self.dataset.data[0:10])

self.dataset.data=blockShuffle(self.dataset.data,self.batch_size,self.sortBsNum,self.key)

print('after deal self.dataset.data = ')

print(self.dataset.data[0:10])

输出内容

origin self.dataset =

[

[['12', '253', '32', '39', '9', '1162', '533'], ['28', '12', '13', '74', '75'], -1],

[['2601', '4610', '8', '9', '629'], ['5931', '2601', '4610', '202', '629'], -1],

[['304', '7311', '304', '1095', '66'], ['304', '464', '1231'], -1],

[['457', '59', '1584', '163', '462', '12', '19', '7376'], ['12', '421', '39', '9', '28', '9', '1758', '76'], -1],

[['3004', '279', '4', '11'], ['3004', '12', '13', '14', '279', '4', '11'], -1],

[['29', '1596', '1645', '4000', '12'], ['29', '10', '459', '2552', '13007', '12'], -1],

[['12', '71', '10', '3267', '76'], ['12', '1746', '940', '13', '462', '247', '76'], -1],

[['126', '168', '16', '12', '518', '163'], ['12', '518', '163', '126', '168', '16'], -1],

[['12', '1794', '23', '25', '247'], ['12', '898', '19', '433', '434', '23', '3535'], -1],

[['19710', '72', '29'], ['72', '29', '3241'], -1]

]

data =

after deal self.dataset.data =

[

[['781', '335', '1277', '45', '358', '47', '440', '1259', '48', '47', '46'], ['538', '538', '439', '538', '47', '1277', '1277', '1277', '1277', '6442', '6442', '358', '48', '48', '48', '50', '50', '50', '1263', '1263', '1263', '1263', '781', '1263', '781', '781', '781', '781', '47', '47', '47', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '538', '47', '538', '47', '538', '47', '538', '1277', '47', '538', '538', '47', '538', '47', '47', '376', '376', '376', '376', '1948'], -1],

[['2152', '2152', '16389', '2152', '16389', '2152', '29', '10', '360', '12', '275', '11', '29', '10', '360', '12', '275', '11', '29', '10', '360', '12', '275', '11', '29', '10', '360', '12', '275', '11', '29', '10', '360', '12', '275', '29', '10', '360', '12', '275', '29', '10', '360', '12', '275', '11', '29', '10', '360', '12', '275', '11', '29', '10', '360', '12', '275', '11', '29', '10', '360', '12', '275', '11'], ['29', '360', '12', '275', '66'], -1],

[['66', '1141', '675', '5608', '12', '1812', '1376', '217', '23', '161', '217', '161', '11', '10', '23', '29', '10', '23', '28', '23', '471', '2074', '2075', '29', '254', '1045', '23', '217', '127', '52', '11', '453', '80', '1041', '76', '107', '4942', '2745', '8300', '172', '6', '161', '29', '29', '274', '161', '127', '678', '29', '161', '217', '23', '8301'], ['447', '217', '23', '2805', '1227', '19', '66'], '0'],

[['29', '106', '1698', '350', '415', '29', '2658', '1600', '1652', '11', '5', '2235', '48', '50', '50', '48', '426', '415', '2504', '811', '2008', '11', '415', '29', '251', '485', '127', '2023', '426', '415', '29', '762', '1652', '11', '5', '2235', '8301', '19', '415', '29', '106', '304', '2008', '19', '28', '2235', '140', '370', '853', '442', '415'], ['29', '106', '647', '350', '29', '426', '19', '1094', '354'], '0'],

[['28', '12', '124', '29', '19', '415', '29', '19', '426', '28', '247', '200', '2286', '19', '433', '434', '1522', '379', '426', '4580', '11', '1233', '83', '140', '370', '56', '57', '32', '227', '398', '592', '19', '23', '4798', '4799', '4800', '19', '189', '6', '156', '253', '10', '13', '79', '11', '29', '1475', '8', '9', '313', '267'], ['29', '19', '426', '32', '800', '2675', '744', '1276'], '0'],

[['12', '126', '415', '29', '19', '243', '5', '2536', '736', '476', '573', '32', '2707', '253', '80', '11', '415', '29', '692', '11', '350', '2707', '415', '1213', '333', '19', '23', '1141', '107', '606', '4693', '4002', '11', '226', '941', '440', '920', '921', '415', '1006', '647', '2126', '8', '9', '773', '33'], ['692', '1650', '1675', '1495', '1213', '333', '29', '19', '10', '4249', '831'], '0'],

[['29', '613', '82', '1475', '23', '127', '1272', '59', '168', '14158', '468', '533', '6', '1022', '1559', '698', '13', '14159', '14160', '1016', '29', '595', '82', '1475', '23', '606', '724', '29', '762', '10', '674', '442', '29', '320', '12', '14161', '1430', '11', '28', '12', '23', '192', '522', '1326'], ['274', '360', '12', '161', '19', '467', '82', '1475', '23', '14162', '59', '11'], '0'],

[['1248', '127', '6', '12', '431', '432', '161', '176', '29', '133', '230', '300', '72', '17291', '2022', '751', '217', '32', '921', '243', '12', '1104', '1513', '1290', '574', '804', '19', '120', '121', '751', '239', '406', '176', '12', '133', '11', '12', '243', '134', '1059', '19', '433', '434', '12', '227', '11', '12', '161', '12'], ['29', '23', '161', '1006', '12', '176', '29'], '0'],

[['127', '344', '925', '960', '9715', '818', '3052'], ['12', '126', '415', '29', '19', '140', '370', '243', '14120', '573', '32', '920', '921', '253', '80', '11', '415', '29', '692', '11', '5', '130', '2707', '415', '1213', '333', '19', '23', '1141', '107', '127', '6', '5808', '2796', '4002', '11', '226', '941', '440', '920', '921', '415', '1698', '2126', '8', '9', '773', '33'], '0'],

[['28', '12', '217', '23', '29', '19', '415', '29', '19', '28', '247', '200', '2286', '19', '433', '434', '2022', '1675', '270', '11', '431', '23', '83', '56', '57', '754', '398', '277', '23', '11772', '130', '804', '19', '1317', '156', '253', '10', '13', '79', '11', '29', '1475', '8', '9', '313', '267'], ['29', '411', '662', '524', '525', '12', '19', '59'], '0']]

第二次调用的过程中,整个数据并没有发生变化,因此这里的数据处理操作就只体现在第一次调用__iter__的函数之中

这里的__iter__通过修改MLM_Data中的data属性,由于在random_mask函数和__getitem__函数中都是以data为基础的,所以修改MLM_Data(Dataset)中的data属性即可改变对应的data内容

预训练由于没有明确的指标,所以一般没有测试集(训练集合当测试集只会越训练越好)

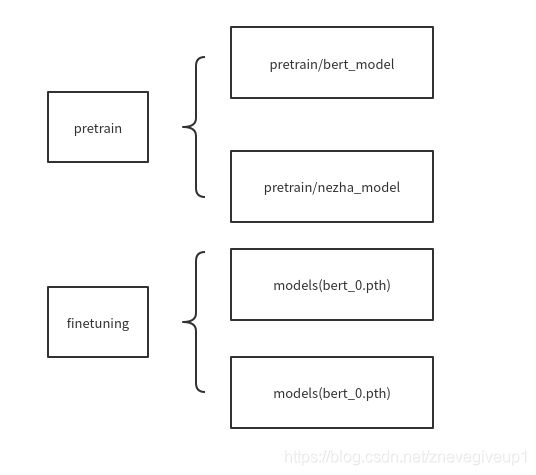

预训练完成之后存下来的源代码文件内容

这里的排序操作内容

这里的排序操作内容

def blockShuffle(data:list,bs:int,sortBsNum,key):

#假设输入的bs=10

random.shuffle(data)#先打乱,random.shuffle(data)用于将一个列表中的元素打乱

tail=len(data)%bs#计算碎片长度

tail=[] if tail==0 else data[-tail:]

data=data[:len(data)-len(tail)]

assert len(data)%bs==0#剩下的一定能被bs整除

#sortBsNum = None

sortBsNum=len(data)//bs if sortBsNum is None else sortBsNum#为None就是整体排序

data=splitList(data,sortBsNum*bs)

data=[sorted(i,key=key,reverse=True) for i in data]#每个大块进行降排序

data=unionList(data)

data=splitList(data,bs)#最后,按bs分块

random.shuffle(data)#块间打乱

data=unionList(data)+tail

return data