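Mastering KITTI 3D Object Detection: Using the KITTI Evaluation Tool (evaluate)

I recently needed the KITTI dataset for an experiment and found that the online tutorials on using its evaluation tool lack detail, so I am recording the steps here.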

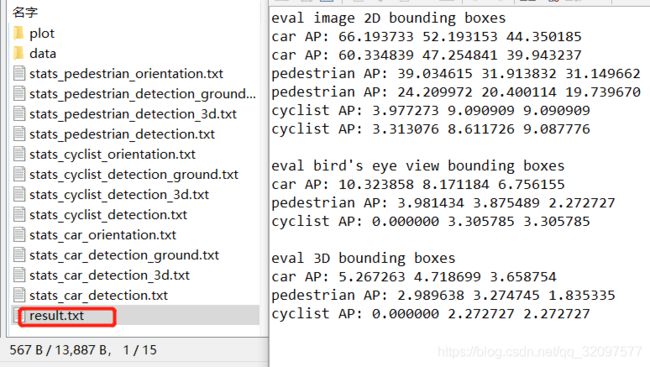

To make the results easier to read, the modified code is attached at the end of this post.

1. Source of the KITTI evaluation tool

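- matlab (2D/3D box visualization and demo files)

- mapping

- cpp (the main evaluation tool)

- readme.txt (documentation)

For easier invocation and display, replace the evaluate_object.cpp file in the cpp folder with the code at the end of this post.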

2. Compilation

(1) Building with CMake

Enter the cpp directory and run the following commands in order:

cmake .

make

(2) Building with GCC (g++)

g++ -O3 -DNDEBUG -o evaluate_object evaluate_object.cpp

3. Running the program

(1) Prepare the label and results folders with the following directory layout:

- label
  - 00XXXX.txt
- results
  - data
    - 00XXXX.txt

- data

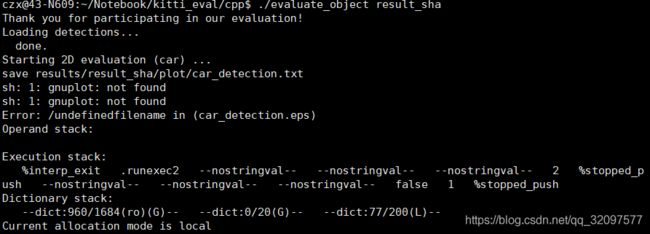

(2) Evaluation

./evaluate_object label results

Here label and results are the paths to the label folder and the results folder, respectively.
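Before calling the tool, it can also help to confirm that each line of a results file parses. The sketch below assumes the 16-field line format described in the KITTI object devkit readme (type, truncated, occluded, alpha, 2D bbox, dimensions, location, rotation_y, score). The struct Detection and the function parseDetectionLine are hypothetical names used for illustration and are not part of evaluate_object.cpp.

```cpp
#include <array>
#include <optional>
#include <sstream>
#include <string>

// Hypothetical container for one line of a results file.
// Field order is assumed from the KITTI object devkit readme.
struct Detection {
    std::string type;                  // e.g. "Car", "Pedestrian", "Cyclist"
    double truncated = 0.0;
    double occluded = 0.0;
    double alpha = 0.0;                // observation angle
    std::array<double, 4> bbox{};      // left, top, right, bottom (pixels)
    std::array<double, 3> dimensions{};// height, width, length (meters)
    std::array<double, 3> location{};  // x, y, z in camera coordinates
    double rotation_y = 0.0;
    double score = 0.0;                // detection confidence
};

// Parses one whitespace-separated line; returns std::nullopt if any of
// the 16 expected fields is missing or not numeric where a number is expected.
std::optional<Detection> parseDetectionLine(const std::string& line) {
    std::istringstream in(line);
    Detection d;
    if (!(in >> d.type >> d.truncated >> d.occluded >> d.alpha)) {
        return std::nullopt;
    }
    for (double& v : d.bbox) {
        if (!(in >> v)) return std::nullopt;
    }
    for (double& v : d.dimensions) {
        if (!(in >> v)) return std::nullopt;
    }
    for (double& v : d.location) {
        if (!(in >> v)) return std::nullopt;
    }
    if (!(in >> d.rotation_y >> d.score)) {
        return std::nullopt;
    }
    return d;
}
```

Running this over every line of every file in results/data is a cheap way to catch a missing score column or a malformed row before the evaluation starts. It requires C++17 (g++ -std=c++17) because of std::optional.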

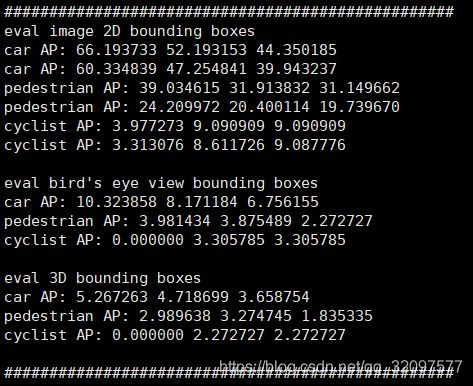

4. Experimental results

Appendix: the modified C++ program

#include