Notes--Installing MMDeploy on Nvidia Jetson with Anaconda, and API testing

Contents

Preface

1--Install Anaconda

2--Create the conda environment and install PyTorch

3--Configure environment dependencies

4--Install mmdeploy

5--Example tests

6--References

Preface

These notes are best read alongside the official documentation.

1--Install Anaconda

① Download Anaconda

mkdir -p ~/mmdeploy_test && cd ~/mmdeploy_test # create and enter a new working folder

# download the conda installer (Miniforge, aarch64 build)

wget "https://github.com/conda-forge/miniforge/releases/latest/download/Miniforge-pypy3-Linux-aarch64.sh"

bash Miniforge-pypy3-Linux-aarch64.sh # run the installer

source ~/.bashrc # update PATH

② Set up package mirrors in China

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/peterjc123/

conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/

conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/

conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/peterjc123/

conda config --set show_channel_urls yes

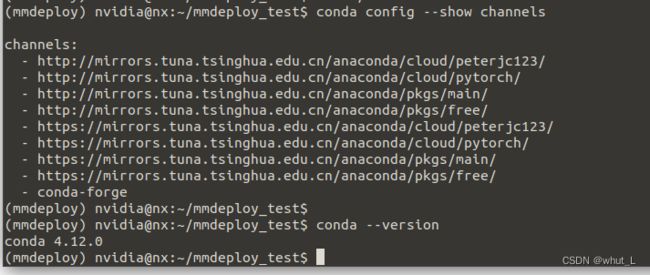
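The `conda config --add` commands above can also be collapsed into one step that writes the channel list directly. A minimal sketch, assuming the https URLs are enough (the http duplicates are left out) and that overwriting `~/.condarc` is acceptable; `write_tuna_condarc` is a hypothetical helper name. The order reflects that `conda config --add` prepends each channel, and `defaults` is the entry conda normally adds when it first creates the channel list.

```bash
#!/usr/bin/env bash
# Sketch: write the TUNA mirror channels in one step.
# Assumptions: https URLs only; the target file may be overwritten.
write_tuna_condarc() {
  local target="${1:-$HOME/.condarc}"   # defaults to the per-user config
  local base="https://mirrors.tuna.tsinghua.edu.cn/anaconda"
  # `conda config --add` prepends, so the channel added last comes first.
  cat > "$target" <<EOF
channels:
  - ${base}/cloud/peterjc123/
  - ${base}/cloud/pytorch/
  - ${base}/pkgs/main/
  - ${base}/pkgs/free/
  - defaults
show_channel_urls: true
EOF
}
```

Run `write_tuna_condarc` (or pass another path as the first argument), then confirm the result with `conda config --show channels` as in step ③.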

③ Test

conda config --show channels # list the configured channels

conda --version # check the version

2--Create the conda environment and install PyTorch

① Create the mmdeploy environment with Python 3.6

# create the mmdeploy environment

conda create -n mmdeploy python=3.6 -y

conda activate mmdeploy

Python 3.6 or 3.8 is recommended, because those versions match the PyTorch wheels NVIDIA provides for Jetson.
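Because an NVIDIA wheel only installs into a matching interpreter (the `cp36` in `torch-1.10.0-cp36-cp36m-linux_aarch64.whl`), it can help to compare the wheel's Python tag with the active environment before running pip. A minimal sketch; the function names are hypothetical helpers, and `PYTHON` defaults to `python`, which is the mmdeploy env's interpreter once the env is activated.

```bash
#!/usr/bin/env bash
# Sketch: confirm a wheel was built for the active interpreter.
# Wheel names follow {dist}-{version}[-{build}]-{python}-{abi}-{platform}.whl,
# so the Python tag is the third hyphen-separated field from the end.
wheel_python_tag() {
  basename "$1" .whl | awk -F- '{ print $(NF-2) }'
}

# Tag of the interpreter on PATH (the activated conda env), e.g. cp36.
current_python_tag() {
  "${PYTHON:-python}" -c 'import sys; print("cp%d%d" % sys.version_info[:2])'
}

wheel_matches_python() {
  local want have
  want=$(wheel_python_tag "$1")
  have=$(current_python_tag)
  if [ "$want" != "$have" ]; then
    echo "wheel is built for $want but the active Python is $have" >&2
    return 1
  fi
}
```

For example: `wheel_matches_python torch-1.10.0-cp36-cp36m-linux_aarch64.whl && pip3 install torch-1.10.0-cp36-cp36m-linux_aarch64.whl`.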

② Download the PyTorch wheel

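If you are in mainland China without a VPN, download the PyTorch wheel from NVIDIA's PyTorch for Jetson page on another machine rather than fetching it directly on the board.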

# download the PyTorch wheel on a Windows PC or a server

# then copy it to the Jetson over sftp

# this replaces the command: wget https://nvidia.box.com/shared/static/fjtbno0vpo676a25cgvuqc1wty0fkkg6.whl -O torch-1.10.0-cp36-cp36m-linux_aarch64.whl

③ Install PyTorch

# install dependency libraries

sudo apt-get install libopenblas-base libopenmpi-dev libomp-dev

pip3 install Cython numpy

# install the PyTorch wheel

pip3 install torch-1.10.0-cp36-cp36m-linux_aarch64.whl

④ Set the OpenBLAS environment variable

# add the variable to ~/.bashrc

vim ~/.bashrc

export OPENBLAS_CORETYPE=ARMV8 # add this line

:wq # save and exit
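Editing in vim works, but when the setup is scripted or repeated, appending lines blindly leaves duplicates in `~/.bashrc`. A sketch of a hypothetical `append_once` helper that appends a line only if that exact line is missing (the file defaults to `~/.bashrc`):

```bash
#!/usr/bin/env bash
# Sketch: append a line to a file only when it is not already present.
# append_once is a hypothetical helper name, not an existing command.
append_once() {
  local line="$1" file="${2:-$HOME/.bashrc}"
  touch "$file"
  # If the file does not end with a newline, add one so the new line
  # is not glued onto the last existing line.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For this step: `append_once 'export OPENBLAS_CORETYPE=ARMV8'`, then reload with `source ~/.bashrc`. The same pattern fits the CUDA/TensorRT exports and `PPLCV_DIR` in section 3.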

source ~/.bashrc

⑤ Install torchvision (this step may stall or take a long time)
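The torchvision tag has to match the installed PyTorch (v0.11.1, used here, pairs with torch 1.10). A sketch that reads the torch version from the active env and looks up the tag; the table only covers a few releases and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: pick the torchvision tag that matches the installed PyTorch.
# Only a few known pairs are listed; extend the table for other releases.
torchvision_tag_for() {
  case "$1" in
    1.10.*) echo "v0.11.1" ;;
    1.9.*)  echo "v0.10.0" ;;
    1.8.*)  echo "v0.9.0" ;;
    *) echo "no torchvision tag known for torch $1" >&2; return 1 ;;
  esac
}

# Version of PyTorch installed in the active env, e.g. 1.10.0.
installed_torch_version() {
  "${PYTHON:-python}" -c 'import torch; print(torch.__version__)'
}
```

With `TV_TAG=$(torchvision_tag_for "$(installed_torch_version)")`, the commands `git checkout tags/$TV_TAG -b $TV_TAG` and `export BUILD_VERSION=${TV_TAG#v}` reproduce the hard-coded v0.11.1 / 0.11.1 values for torch 1.10.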

# install torchvision

sudo apt-get install libjpeg-dev zlib1g-dev libpython3-dev libavcodec-dev libavformat-dev libswscale-dev -y

git clone https://github.com/pytorch/vision torchvision

cd torchvision

git checkout tags/v0.11.1 -b v0.11.1

export BUILD_VERSION=0.11.1

pip install -e .

3--Configure environment dependencies

① Configure CMake

## update CMake

# uninstall the currently installed version

sudo apt-get purge cmake

sudo snap remove cmake

# install the new version

export CMAKE_VER=3.23.1

export ARCH=aarch64

wget https://github.com/Kitware/CMake/releases/download/v${CMAKE_VER}/cmake-${CMAKE_VER}-linux-${ARCH}.sh

chmod +x cmake-${CMAKE_VER}-linux-${ARCH}.sh

sudo ./cmake-${CMAKE_VER}-linux-${ARCH}.sh --prefix=/usr --skip-license

cmake --version

② Configure TensorRT

# copy the system TensorRT Python bindings into the mmdeploy environment

cp -r /usr/lib/python3.6/dist-packages/tensorrt* /home/nvidia/miniforge-pypy3/envs/mmdeploy/lib/python3.6/site-packages/

conda deactivate

conda activate mmdeploy

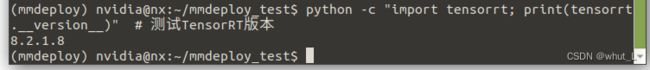

python -c "import tensorrt; print(tensorrt.__version__)" # check the TensorRT version
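The `cp` command above hard-codes both the Miniforge install path and Python 3.6. A sketch that derives both from the activated environment instead; it assumes JetPack puts the TensorRT bindings under `/usr/lib/pythonX.Y/dist-packages` for the same Python version X.Y as the env, and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: copy the system TensorRT bindings into the active env's
# site-packages without hard-coding paths or the Python version.
py_version() {   # e.g. "3.6"
  "${PYTHON:-python}" -c 'import sys; print("%d.%d" % sys.version_info[:2])'
}

py_site_packages() {   # site-packages directory of the active env
  "${PYTHON:-python}" -c 'import sysconfig; print(sysconfig.get_paths()["purelib"])'
}

copy_system_tensorrt() {
  local src="/usr/lib/python$(py_version)/dist-packages"
  if ! ls "$src"/tensorrt* >/dev/null 2>&1; then
    echo "no TensorRT Python bindings found in $src" >&2
    return 1
  fi
  cp -r "$src"/tensorrt* "$(py_site_packages)/"
}
```

With `mmdeploy` activated, run `copy_system_tensorrt`, re-activate the env, and repeat the version check.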

# set the paths by adding these lines to ~/.bashrc (e.g. with vim ~/.bashrc)

export TENSORRT_DIR=/usr/include/aarch64-linux-gnu

export PATH=$PATH:/usr/local/cuda/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda/lib64

③ Install mmcv-full (compilation may stall or take a long time)
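One possible cause of the stalls mentioned here is running out of memory when too many compile jobs run in parallel. A sketch that sizes the job count from `MemTotal`; the 2 GiB-per-job ratio is an assumption rather than a measured value, and `jobs_for_mem` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: choose a parallel compile job count from the board's RAM.
# Assumption: roughly 2 GiB per compile job; adjust for your board.
jobs_for_mem() {
  # MemTotal is reported in kB; an argument overrides it (handy for testing).
  local kb="${1:-$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)}"
  local jobs=$(( kb / 2097152 ))   # 2 GiB = 2 * 1024 * 1024 kB
  if [ "$jobs" -lt 1 ]; then jobs=1; fi
  echo "$jobs"
}
```

For example `MAX_JOBS=$(jobs_for_mem) MMCV_WITH_OPS=1 pip install -e .`. `MAX_JOBS` is read by PyTorch's C++/CUDA extension builder, which mmcv-full's ops are built with, when it compiles through ninja; without ninja the sources are typically compiled one at a time anyway.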

# install mmcv-full

sudo apt-get install -y libssl-dev

git clone https://github.com/open-mmlab/mmcv.git

cd mmcv

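git checkout tags/v1.4.0 -b v1.4.0 # check out the v1.4.0 release tag (plain `git checkout -b v1.4.0` only branches from the current HEAD)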

MMCV_WITH_OPS=1 pip install -e .

④ Install onnx

pip install onnx

⑤ Install h5py

sudo apt-get install -y pkg-config libhdf5-100 libhdf5-dev # install dependencies

pip install versioned-hdf5 # installs h5py as a dependency

⑥ Install spdlog

sudo apt-get install -y libspdlog-dev

⑦ Install ppl.cv

git clone https://github.com/openppl-public/ppl.cv.git # download

cd ppl.cv

export PPLCV_DIR=$(pwd)

echo -e '\n# set environment variable for ppl.cv' >> ~/.bashrc # persist PPLCV_DIR in ~/.bashrc

echo "export PPLCV_DIR=$(pwd)" >> ~/.bashrc

git checkout tags/v0.6.2 -b v0.6.2

./build.sh cuda

⑧ Install mmdetection
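This step has no commands in the notes, but section 5 expects an mmdetection checkout at `/home/nvidia/mmdeploy_test/mmdetection`. A minimal sketch of the missing step, assuming `~/mmdeploy_test` is the working folder and an editable install like the ones used for torchvision and mmcv; `install_mmdetection` is a hypothetical helper. The current mmdetection default branch targets newer mmcv releases, so passing a 2.x release tag that you have checked against mmcv-full 1.4.0 is advisable.

```bash
#!/usr/bin/env bash
# Sketch: clone mmdetection (if not already present) and install it.
# Assumptions: default location ~/mmdeploy_test/mmdetection; the optional
# tag is one you have verified to work with mmcv-full 1.4.0.
install_mmdetection() {
  local dest="${1:-$HOME/mmdeploy_test/mmdetection}"
  local tag="${2:-}"
  if [ ! -d "$dest/.git" ]; then
    git clone https://github.com/open-mmlab/mmdetection.git "$dest" || return 1
  fi
  if [ -n "$tag" ]; then
    git -C "$dest" checkout "tags/$tag" -b "$tag" || return 1
  fi
  # Editable install, as done for torchvision and mmcv above.
  (cd "$dest" && pip install -v -e .)
}
```

Call `install_mmdetection` for the default location, or `install_mmdetection <dir> <tag>` to pin a release.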

4--Install mmdeploy

① Download mmdeploy

git clone --recursive https://github.com/open-mmlab/mmdeploy.git

cd mmdeploy

export MMDEPLOY_DIR=$(pwd)

② Build the TensorRT custom operators
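The builds in this section rely on variables exported earlier: `TENSORRT_DIR` (section 3, step ②), `PPLCV_DIR` (section 3, step ⑦) and `MMDEPLOY_DIR` (step ①). `MMDEPLOY_DIR` is only exported in the current shell, so after opening a new terminal it is unset. A sketch of a quick check before running cmake; `require_vars` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: fail early if any of the named environment variables is empty.
require_vars() {
  local name value missing=0
  for name in "$@"; do
    case "$name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*)
        echo "not a valid variable name: '$name'" >&2
        missing=1
        continue
        ;;
    esac
    eval "value=\${$name:-}"   # indirect lookup of the variable named $name
    if [ -z "$value" ]; then
      echo "$name is not set; re-export it or source ~/.bashrc" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example `require_vars TENSORRT_DIR MMDEPLOY_DIR` before this cmake call, and `require_vars PPLCV_DIR` before the SDK build.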

# build the TensorRT custom operators

mkdir -p build && cd build

cmake .. -DMMDEPLOY_TARGET_BACKENDS="trt"

make -j$(nproc)

③ Install mmdeploy and its dependencies

# install MMDeploy and its dependencies

cd ${MMDEPLOY_DIR}

pip install -v -e .

④ Build the C++ SDK

mkdir -p build && cd build

cmake .. \
-DMMDEPLOY_BUILD_SDK=ON \
-DMMDEPLOY_BUILD_SDK_PYTHON_API=ON \
-DMMDEPLOY_TARGET_DEVICES="cuda;cpu" \
-DMMDEPLOY_TARGET_BACKENDS="trt" \
-DMMDEPLOY_CODEBASES=all \
-Dpplcv_DIR=${PPLCV_DIR}/cuda-build/install/lib/cmake/ppl

make -j$(nproc) && make install

⑤ Build the SDK demos (six .cpp files)

# build the SDK demos

cd ${MMDEPLOY_DIR}/build/install/example

mkdir -p build && cd build

cmake .. -DMMDeploy_DIR=${MMDEPLOY_DIR}/build/install/lib/cmake/MMDeploy

make -j$(nproc)

5--Example tests

① Mask R-CNN: model conversion via the Python API

## Mask R-CNN example

# convert the model with the Python API: PyTorch → ONNX → TensorRT engine (paths are relative to ${MMDEPLOY_DIR})

cd ${MMDEPLOY_DIR}

python tools/deploy.py \
configs/mmdet/instance-seg/instance-seg_tensorrt_dynamic-320x320-1344x1344.py \
/home/nvidia/mmdeploy_test/mmdetection/configs/mask_rcnn/mask_rcnn_x101_64x4d_fpn_mstrain-poly_3x_coco.py \
checkpoints/mask_rcnn_x101_64x4d_fpn_mstrain-poly_3x_coco_20210526_120447-c376f129.pth \
maskrcnn_file/demo.jpg --work-dir work_dirs/maskrcnn/ \
--device cuda --show --dump-info

The model config /home/nvidia/mmdeploy_test/mmdetection/configs/mask_rcnn/mask_rcnn_x101_64x4d_fpn_mstrain-poly_3x_coco.py is part of the mmdetection source tree, so mmdetection must be cloned with git first.

② C++ inference example
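The demo takes the work directory written by the conversion step as its model path. A sketch that checks the directory looks complete before running the demo; the file names (`deploy.json` and `pipeline.json` from `--dump-info`, `end2end.engine` from the TensorRT backend) are assumptions based on what MMDeploy 0.x typically writes, so adjust the list if your version differs. `check_sdk_model` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: verify the converted model directory before running the SDK demo.
# Assumed file names; adjust to what your MMDeploy version produces.
check_sdk_model() {
  local dir="${1%/}" f missing=0
  for f in deploy.json pipeline.json end2end.engine; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, run `check_sdk_model /home/nvidia/mmdeploy_test/mmdeploy/work_dirs/maskrcnn/` and start `./object_detection` only if it succeeds.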

# test the C++ example

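# run the instance segmentation demo from ${MMDEPLOY_DIR}/build/install/example/build (arg 1: cuda for GPU acceleration; arg 2: path to the converted model; arg 3: path to the test image)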

./object_detection cuda /home/nvidia/mmdeploy_test/mmdeploy/work_dirs/maskrcnn/ /home/nvidia/mmdeploy_test/mmdeploy/maskrcnn_file/demo.jpg

6--References

Official MMDeploy installation documentation