Machine Learning - Sklearn - 11 (Support Vector Machine SVM - SVC real-data case study: predicting whether it will rain tomorrow)

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

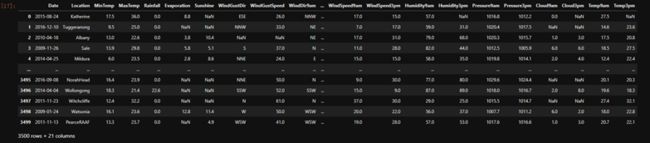

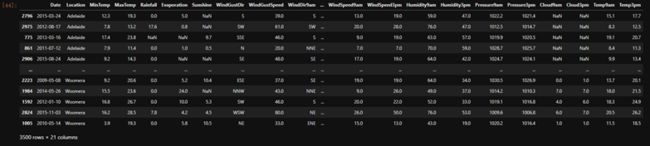

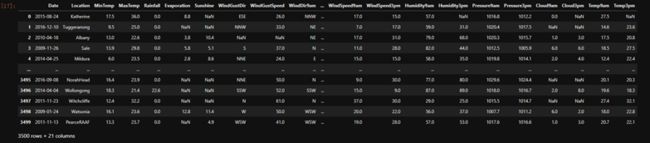

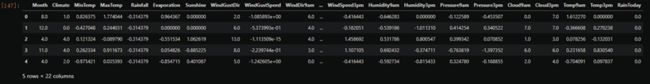

weather = pd.read_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\weatherAUS5000.csv",index_col=0)

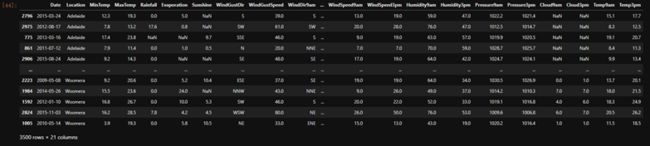

weather.head()

X = weather.iloc[:,:-1]

Y = weather.iloc[:,-1]

X.shape

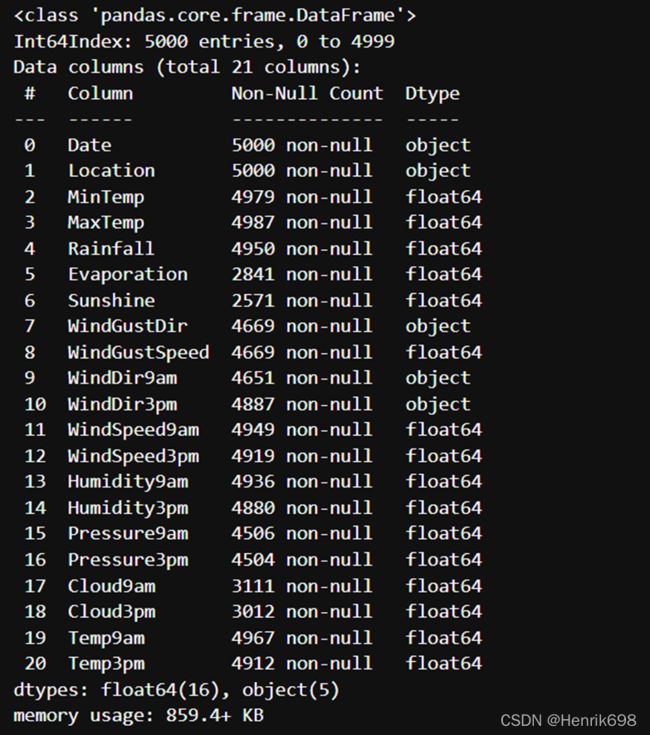

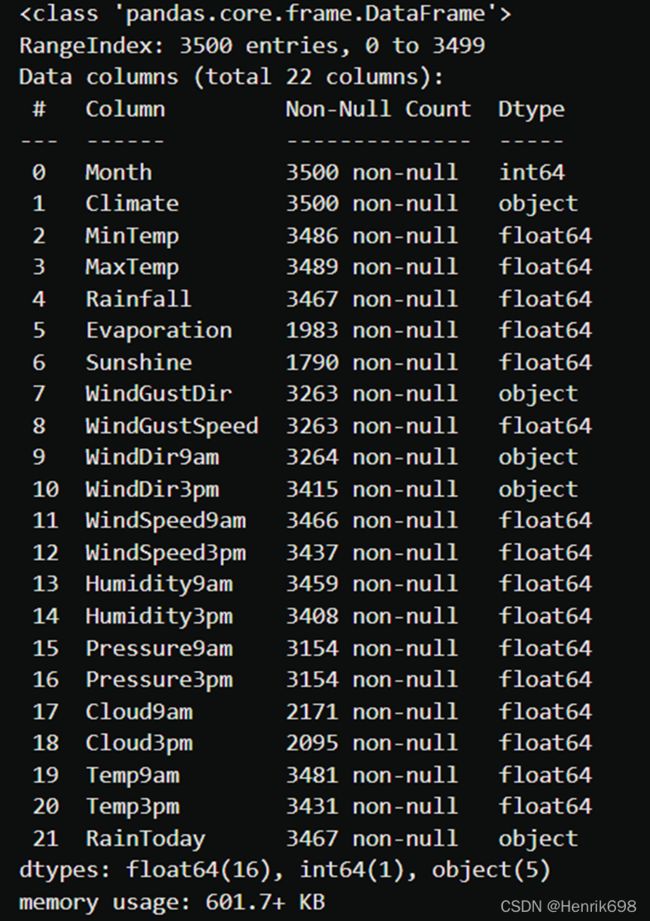

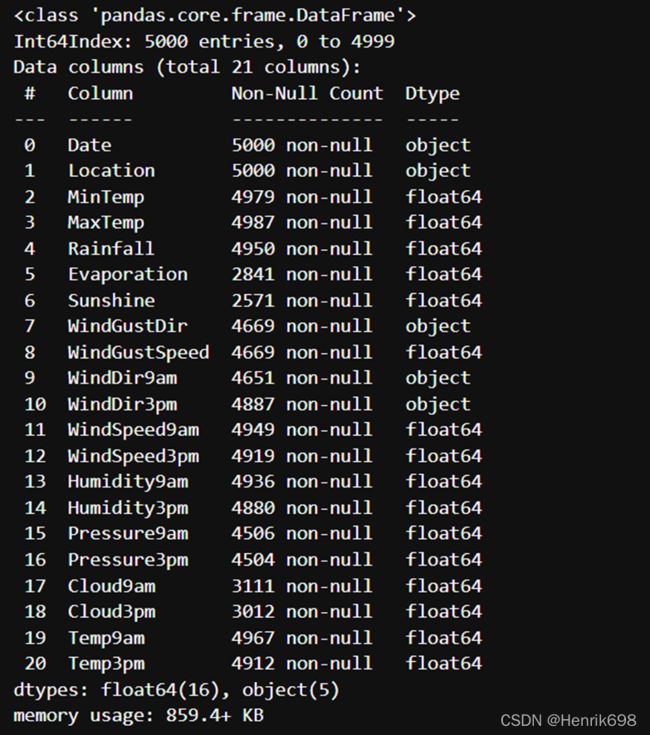

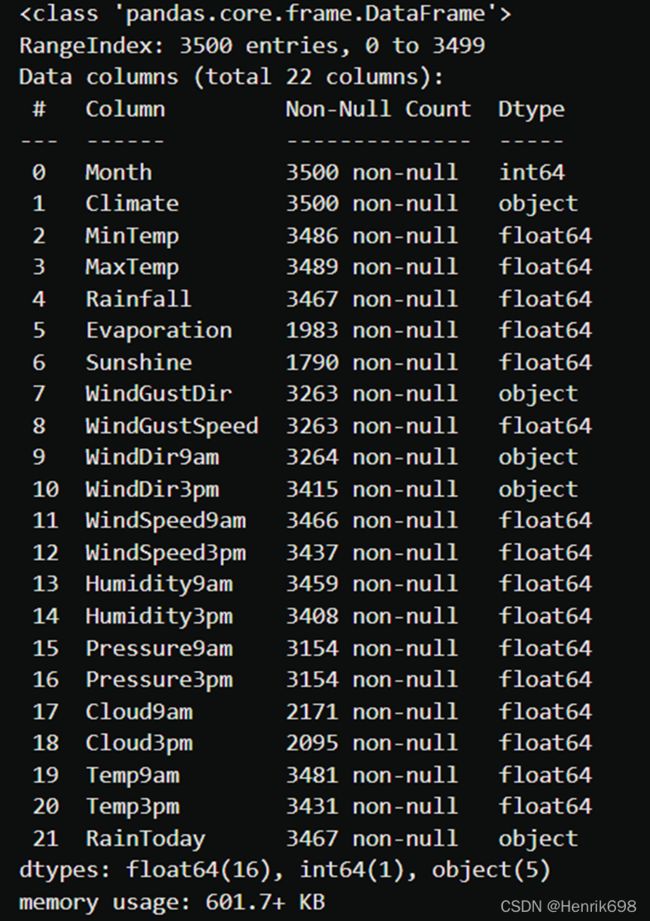

X.info()

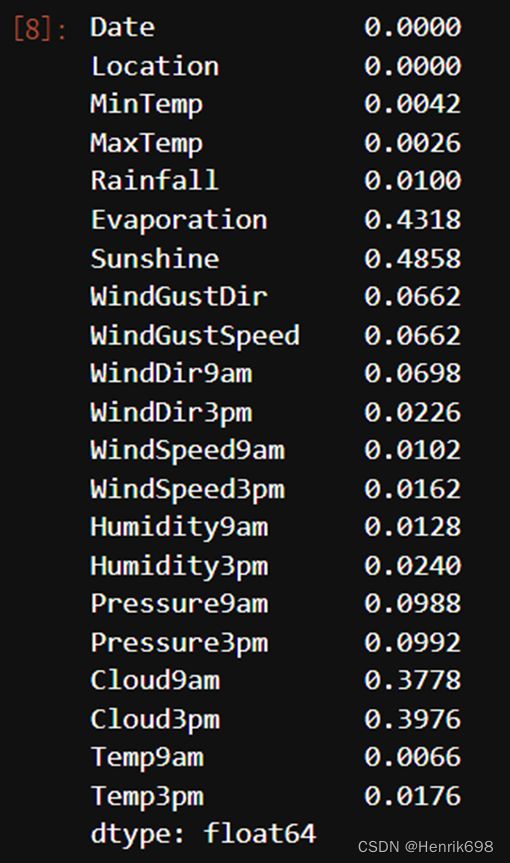

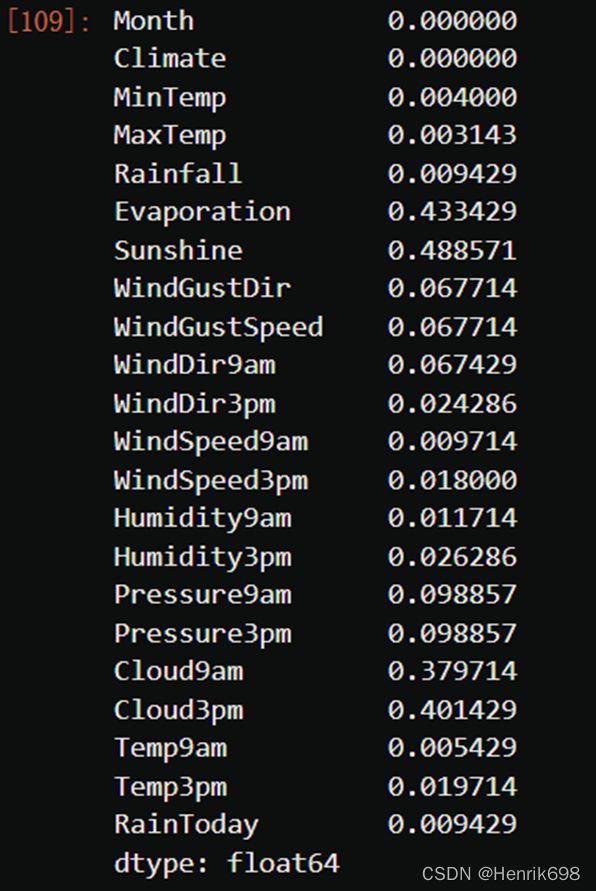

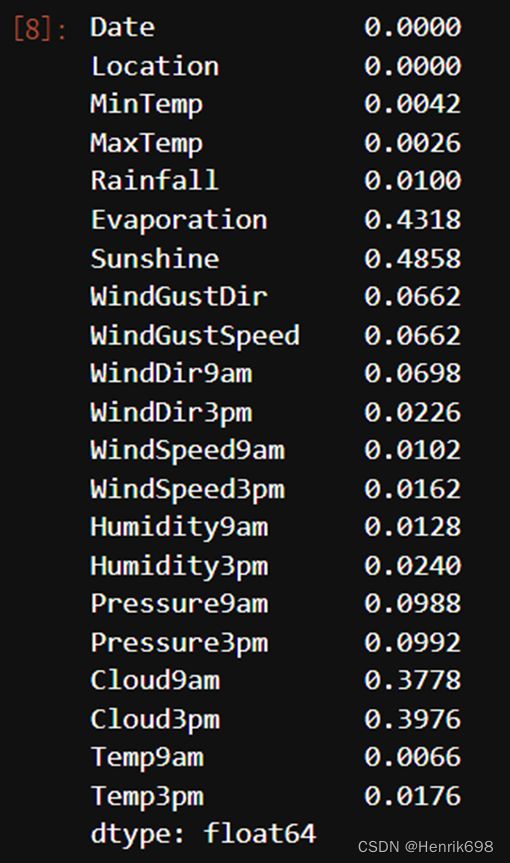

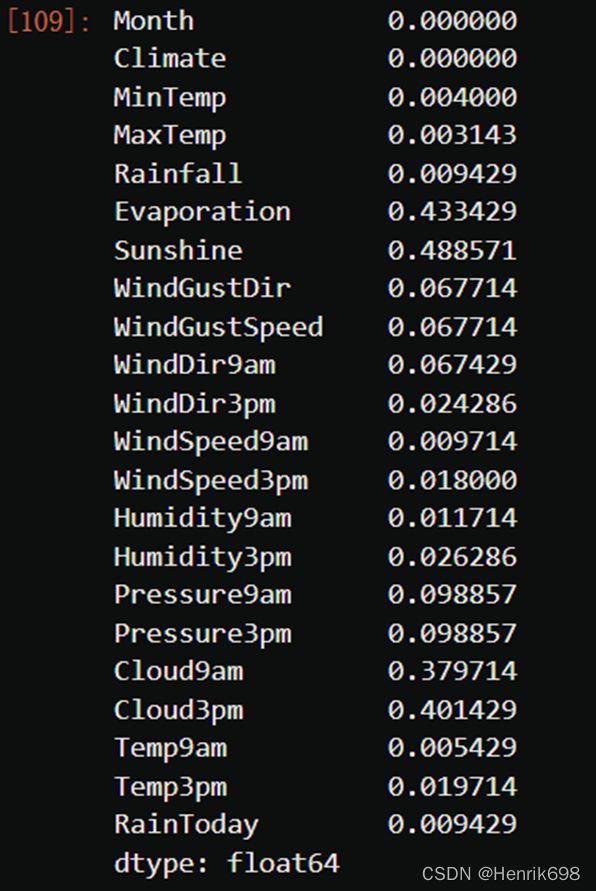

X.isnull().mean()

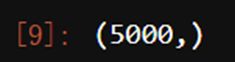

Y.shape

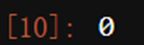

Y.isnull().sum()

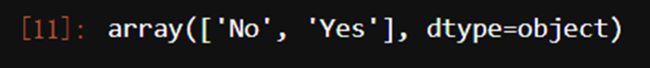

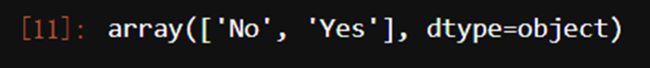

np.unique(Y)

Xtrain, Xtest, Ytrain, Ytest = train_test_split(X,Y,test_size=0.3,random_state=420)

Xtrain.head()

for i in [Xtrain, Xtest, Ytrain, Ytest]:
    i.index = range(i.shape[0])

Xtrain

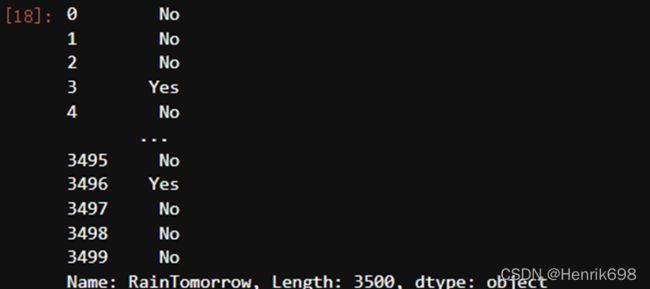

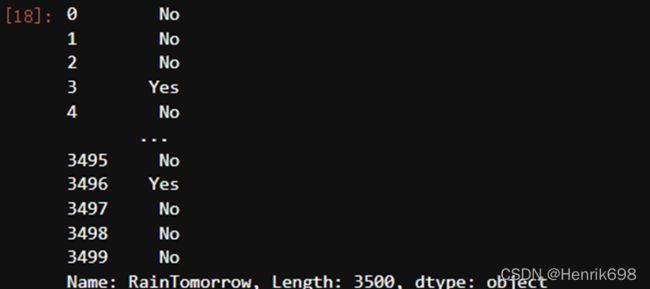

Ytrain

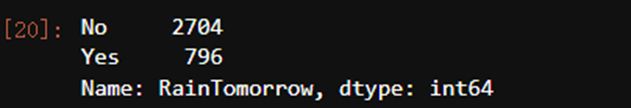

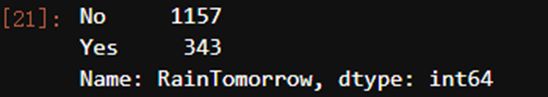

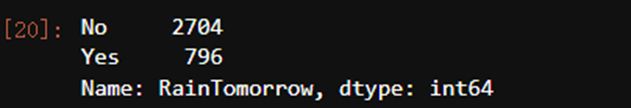

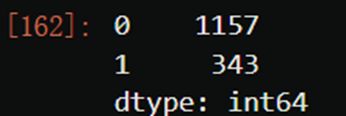

Ytrain.value_counts()

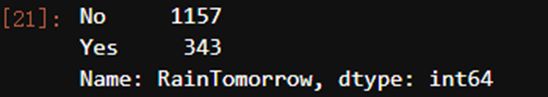

Ytest.value_counts()

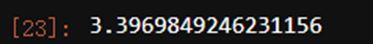

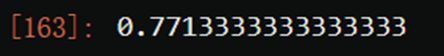

Ytrain.value_counts().iloc[0]/Ytrain.value_counts().iloc[1]

from sklearn.preprocessing import LabelEncoder

encoder = LabelEncoder().fit(Ytrain)

Ytrain = pd.DataFrame(encoder.transform(Ytrain))

Ytest = pd.DataFrame(encoder.transform(Ytest))

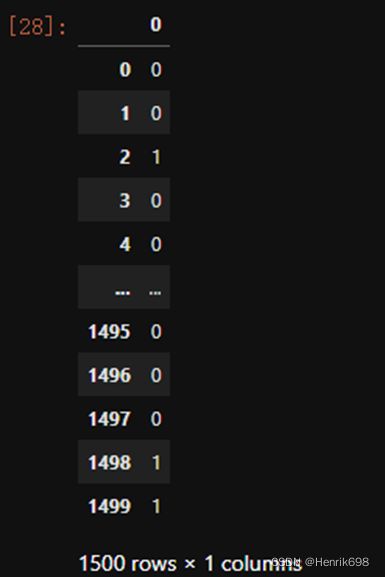

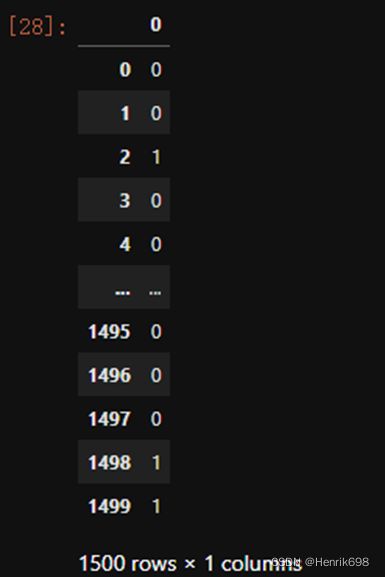

Ytrain

Ytest
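The label-encoding step above can be checked on a toy column. The sketch below uses a made-up `labels` series (an assumption, not the weather data) to show that `LabelEncoder` sorts the classes, so "No" maps to 0 and "Yes" maps to 1, and that `inverse_transform` recovers the original strings.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Toy labels: a hypothetical stand-in for the last column of the weather data
labels = pd.Series(["No", "Yes", "No", "No", "Yes"])

encoder = LabelEncoder().fit(labels)
encoded = encoder.transform(labels)

print(encoder.classes_)                    # classes in sorted order
print(encoded)                             # integer codes
print(encoder.inverse_transform(encoded))  # back to the original strings
```

Because the positive class ("Yes") ends up as 1, `recall_score` with its default `pos_label=1` measures recall on rainy days.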

Ytrain.to_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\Ytrain.csv")

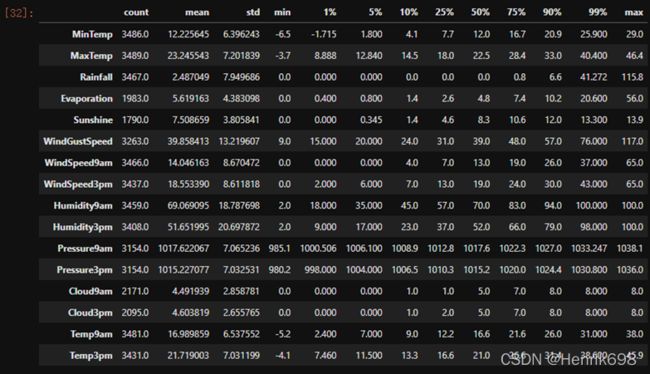

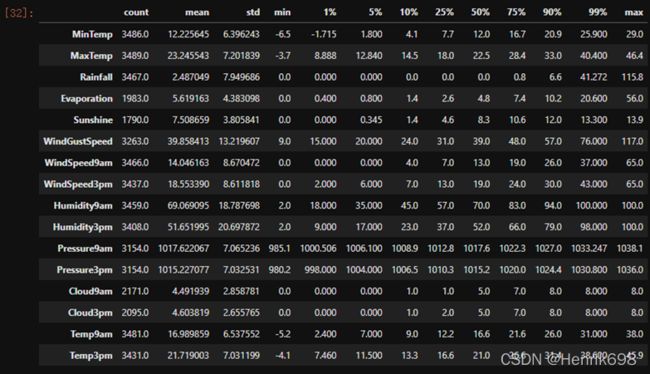

Xtrain.describe([0.01,0.05,0.1,0.25,0.5,0.75,0.9,0.99]).T

Xtest.describe([0.01,0.05,0.1,0.25,0.5,0.75,0.9,0.99]).T

"""

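For those who downloaded the data from Kaggle, and those who insist on using the full dataset (about 150,000 rows): if you find outliers, first check how often each outlier occurs.

If an outlier appears only once, it is most likely an input error; just delete it.

If an outlier appears many times, talk to the business staff, because it may be a special encoding. If it turns out to be a human error, keeping the outliers serves no purpose, and as long as they are not too numerous they can all be deleted.

If outliers make up more than 10% of your total data, do not delete them lightly. Consider replacing them with a value that is neither anomalous nor disruptive, for example 0, or treat them as missing values and fill them with the mean or the mode.

'''

# The cell below contains the outlier-handling code:

# First check the original data shape

Xtrain.shape

Xtest.shape

# Check whether the outliers are numerous or few

Xtrain.loc[Xtrain.loc[:,"Cloud9am"] == 9,"Cloud9am"]

Xtest.loc[Xtest.loc[:,"Cloud9am"] == 9,"Cloud9am"]

Xtest.loc[Xtest.loc[:,"Cloud3pm"] == 9,"Cloud3pm"]

# Only a few, so we delete them

# Note: when deleting rows from the feature matrix, the matching labels must be deleted too; feature rows and label rows must correspond one-to-one

Xtrain = Xtrain.drop(index = 71737)

Ytrain = Ytrain.drop(index = 71737)

# After deleting, check the shape again to confirm the deletion is correct

Xtrain.shape

Xtest = Xtest.drop(index = [19646,29632])

Ytest = Ytest.drop(index = [19646,29632])

Xtest.shape

# After any row deletion, always remember to reset the index

for i in [Xtrain, Xtest, Ytrain, Ytest]:
    i.index = range(i.shape[0])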

Xtrain.head()

Xtest.head()

'''

"""

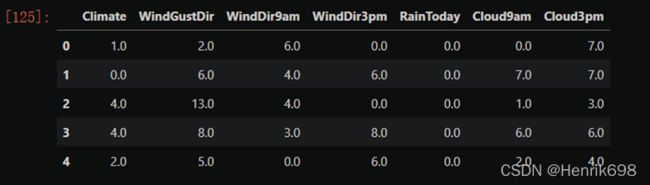
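The outlier guidance in the note above can be sketched as a small helper. This is only an illustration under stated assumptions: the function name `handle_outliers`, its parameters, and the toy `Cloud9am` values are hypothetical, and the 10% cut-off is the one mentioned in the note.

```python
import pandas as pd

def handle_outliers(df, column, is_outlier, max_share=0.10):
    """Drop rare outliers; replace frequent ones with the column mode.

    `is_outlier` maps a Series to a boolean mask (hypothetical interface).
    `max_share` is the 10% threshold taken from the note above.
    """
    mask = is_outlier(df[column])
    share = mask.mean()
    if share == 0:
        return df.copy()
    if share <= max_share:
        # Few outliers: delete the rows and restore a clean 0..n-1 index
        return df.loc[~mask].reset_index(drop=True)
    # Many outliers: treat them as missing and fill with the mode
    out = df.copy()
    mode = out.loc[~mask, column].mode().iloc[0]
    out.loc[mask, column] = mode
    return out

# Toy data: the value 9 is treated as the outlier, as in the commented-out cell
toy = pd.DataFrame({"Cloud9am": [1, 7, 9, 3, 8, 0, 2, 4, 5, 6, 7, 7]})
cleaned = handle_outliers(toy, "Cloud9am", lambda s: s == 9)
print(cleaned.shape)
```

When rows are dropped from a feature matrix this way, the same rows would also have to be dropped from the labels so that both stay aligned.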

Xtrain.head()

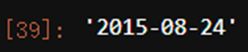

Xtrain.iloc[0,0]

type(Xtrain.iloc[0,0])

Xtrain.iloc[:,0].value_counts()

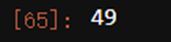

Xtrain.iloc[:,0].value_counts().count()

Xtrainc = Xtrain.copy()

Xtrainc.sort_values(by="Location")

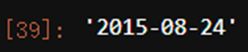

Xtrain.loc[Xtrain.iloc[:,0] == '2015-08-24',:]

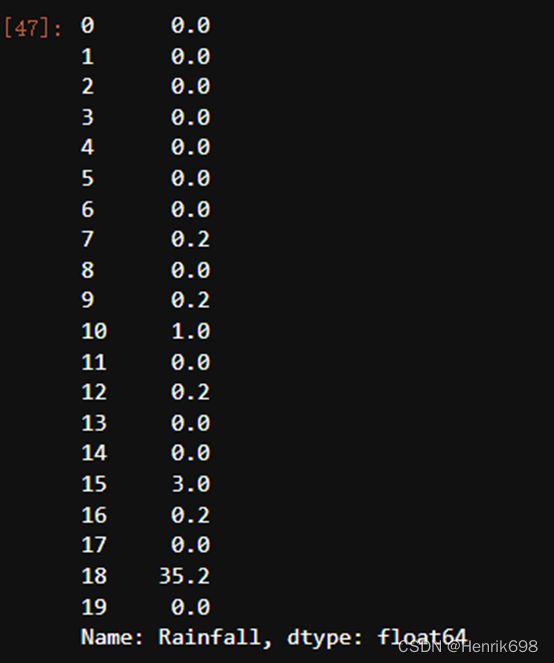

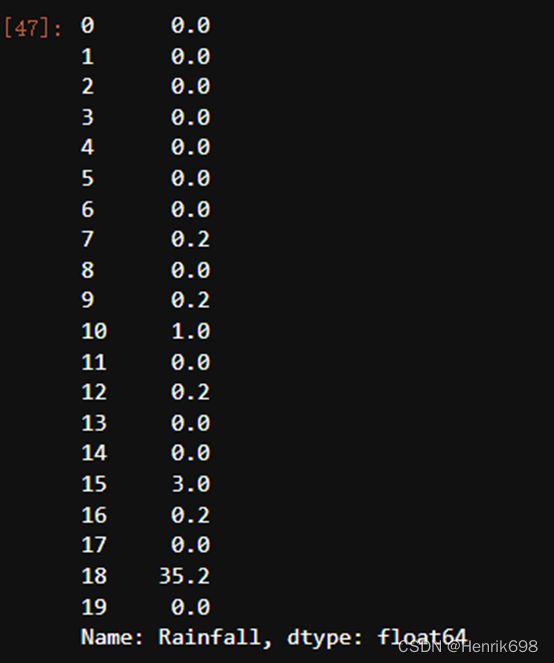

Xtrain["Rainfall"].head(20)

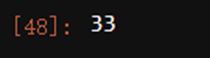

Xtrain["Rainfall"].isnull().sum()

Xtrain.loc[Xtrain["Rainfall"] >= 1,"RainToday"] = "Yes"

Xtrain.loc[Xtrain["Rainfall"] < 1,"RainToday"] = "No"

Xtrain.loc[Xtrain["Rainfall"].isnull(),"RainToday"] = np.nan

Xtest.loc[Xtest["Rainfall"] >= 1,"RainToday"] = "Yes"

Xtest.loc[Xtest["Rainfall"] < 1,"RainToday"] = "No"

Xtest.loc[Xtest["Rainfall"].isnull(),"RainToday"] = np.nan
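NaN never compares equal to anything, including itself, so rows with missing rainfall are found with `isnull()` rather than with `== np.nan`. A minimal sketch on a made-up `rain` frame (an assumption, not the weather data):

```python
import numpy as np
import pandas as pd

rain = pd.DataFrame({"Rainfall": [0.0, 2.4, np.nan, 0.6]})

# An equality test against NaN never matches, even for the missing row
eq_mask = rain["Rainfall"] == np.nan
# isnull() is the reliable way to locate missing values
null_mask = rain["Rainfall"].isnull()

rain.loc[rain["Rainfall"] >= 1, "RainToday"] = "Yes"
rain.loc[rain["Rainfall"] < 1, "RainToday"] = "No"
rain.loc[null_mask, "RainToday"] = np.nan

print(eq_mask.sum(), null_mask.sum())
print(rain)
```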

Xtrain.head()

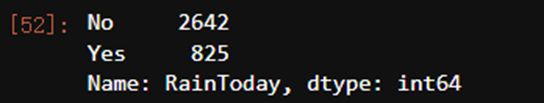

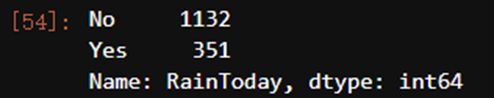

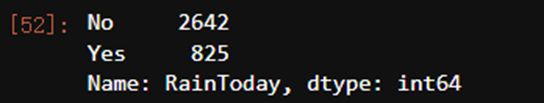

Xtrain.loc[:,'RainToday'].value_counts()

Xtest.head()

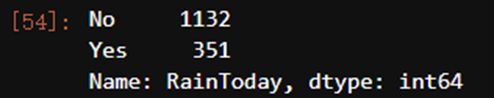

Xtest.loc[:,'RainToday'].value_counts()

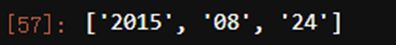

Xtrain.loc[0,"Date"].split("-")

int(Xtrain.loc[0,"Date"].split("-")[1])

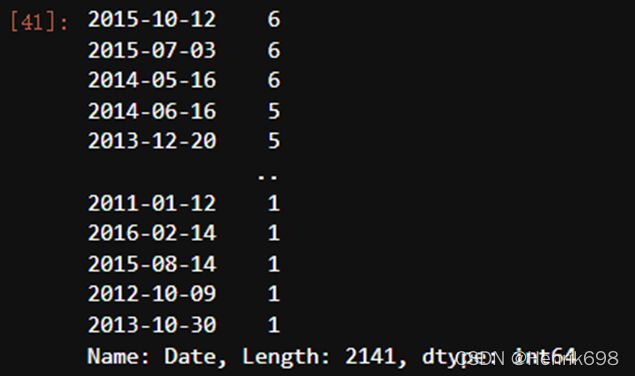

Xtrain["Date"] = Xtrain["Date"].apply(lambda x:int(x.split("-")[1]))

Xtrain.head()

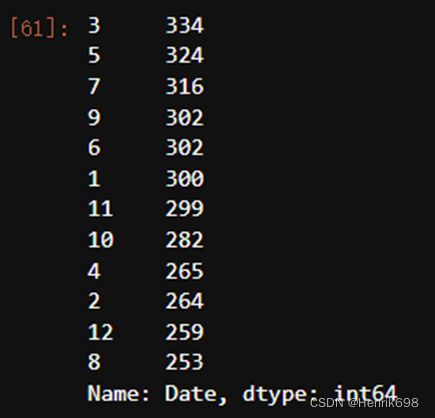

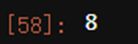

Xtrain.loc[:,'Date'].value_counts()

Xtrain = Xtrain.rename(columns={"Date":"Month"})

Xtrain.head()

Xtest["Date"] = Xtest["Date"].apply(lambda x:int(x.split("-")[1]))

Xtest = Xtest.rename(columns={"Date":"Month"})

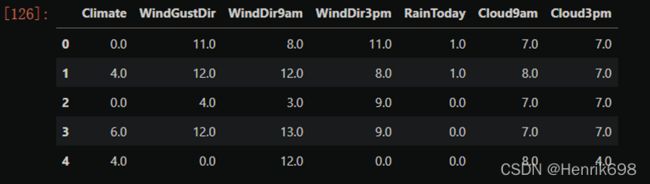

Xtest.head()
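As an alternative to splitting the date string by hand, the month can also be read from a parsed datetime. The sketch below assumes the dates follow the `YYYY-MM-DD` pattern seen above; the `dates` series is made up for illustration.

```python
import pandas as pd

dates = pd.Series(["2015-08-24", "2016-12-31", "2012-01-05"], name="Date")

# Same result as int(x.split("-")[1]), but parsed as real dates,
# so a malformed date raises an error instead of passing silently
month = pd.to_datetime(dates, format="%Y-%m-%d").dt.month.rename("Month")
print(month.tolist())
```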

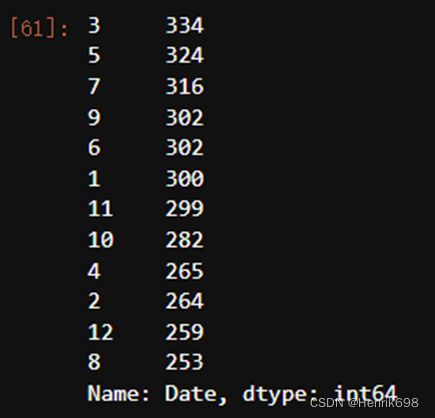

Xtrain.loc[:,'Location'].value_counts().count()

import time

from selenium import webdriver

import pandas as pd

import numpy as np

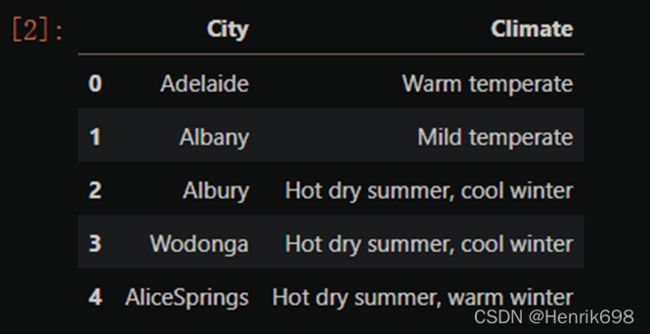

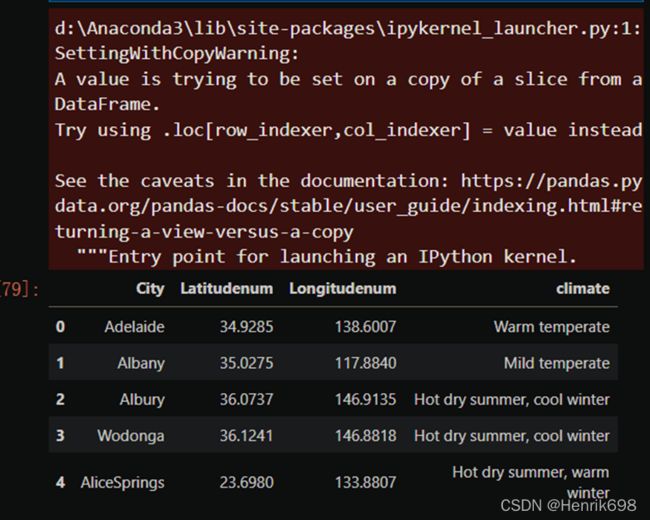

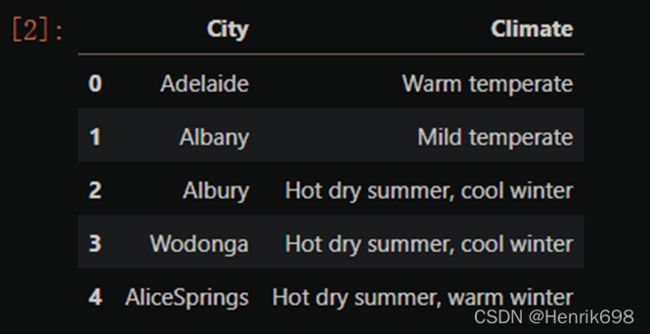

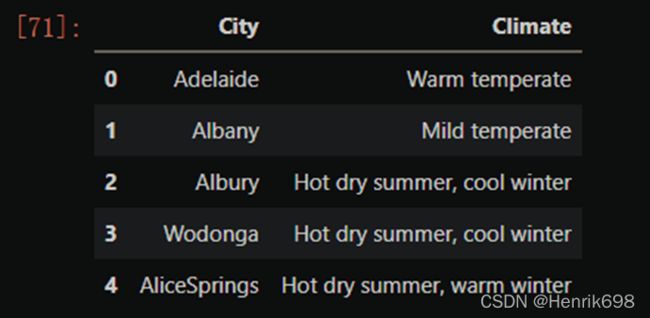

city = pd.read_csv(r'D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\Cityclimate.csv')

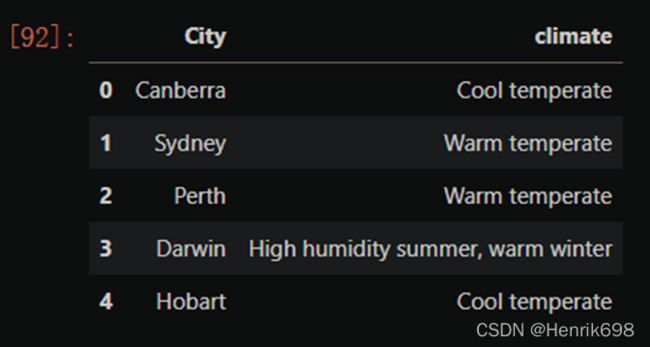

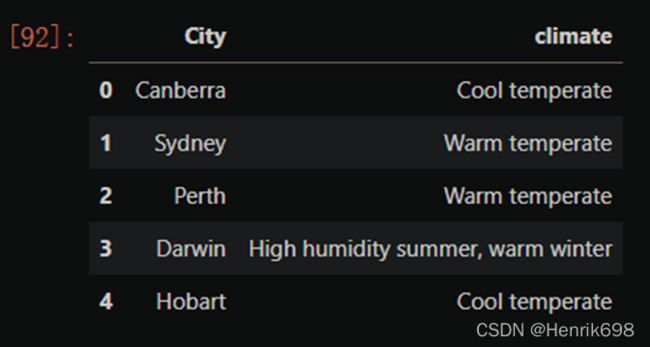

city.head()

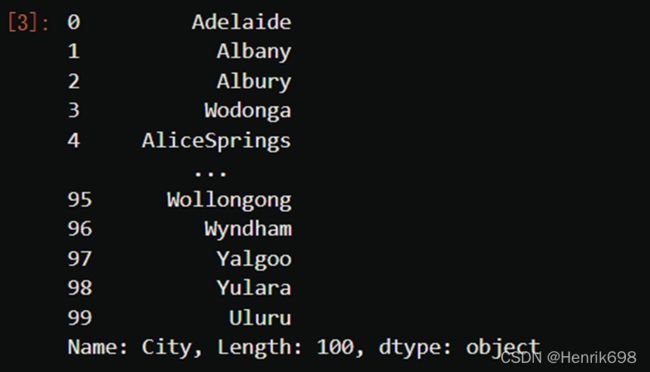

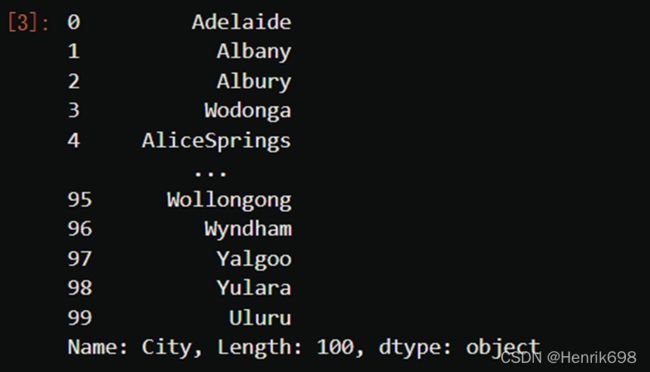

cityname = city.iloc[:,0]

cityname

df =pd.DataFrame(index = cityname.index)

driver = webdriver.Chrome()

time0 = time.time()

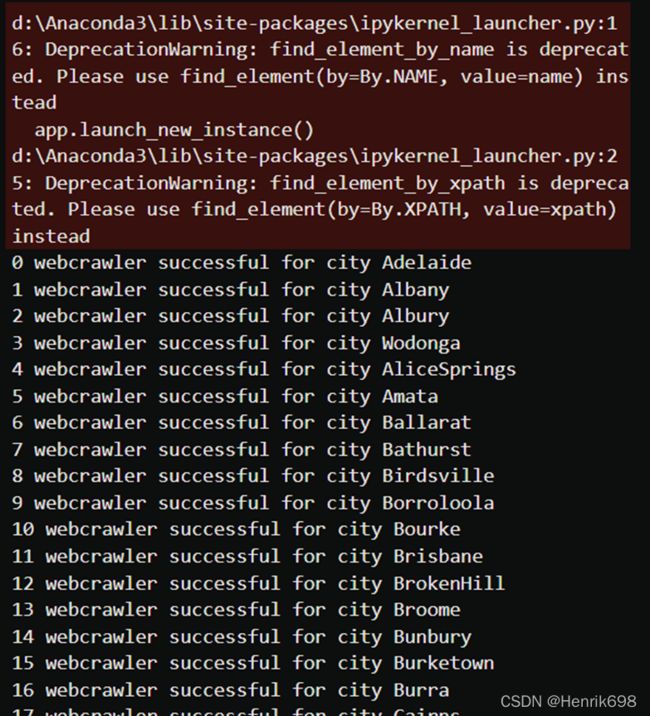

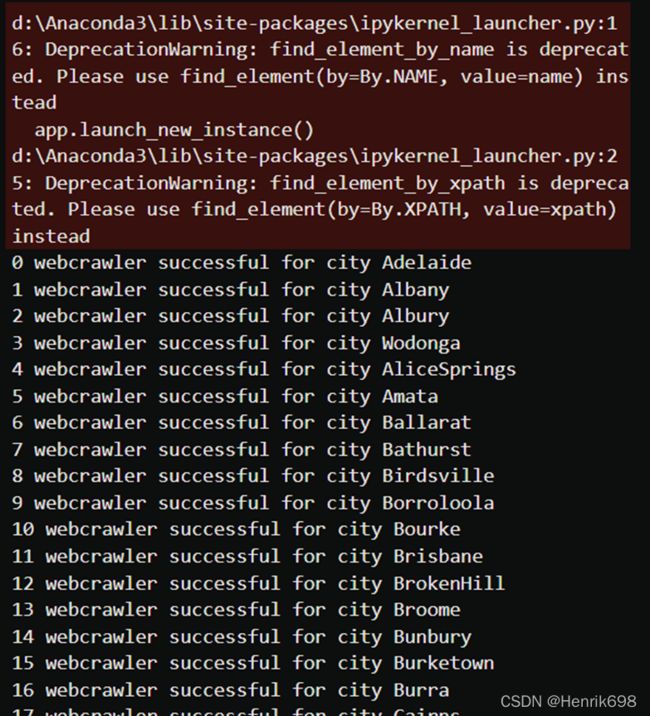

from selenium.webdriver.common.by import By

# Selenium 4 removed find_element_by_name / find_element_by_xpath;
# find_element(By.NAME, ...) and find_element(By.XPATH, ...) replace them
for num, city in enumerate(cityname):
    driver.get('https://www.google.co.uk/webhp?hl=en&sa=X&ved=0ahUKEwimtcX24cTfAhUJE7wKHVkWB5AQPAgH')
    time.sleep(1)
    search_box = driver.find_element(By.NAME, 'q')
    search_box.send_keys("%s Australia Latitude and longitude" % (city))
    search_box.submit()
    result = driver.find_element(By.XPATH, '//div[@class="Z0LcW"]').text
    # Split the answer text on spaces: latitude, direction, longitude, direction
    resultsplit = result.split(" ")
    df.loc[num,"City"] = city
    df.loc[num,"Latitude"] = resultsplit[0]
    df.loc[num,'Longitude'] = resultsplit[2]
    df.loc[num,"Latitudedir"] = resultsplit[1]
    df.loc[num,"Longitudedir"] = resultsplit[3]
    print("%i webcrawler successful for city %s" %(num, city))
    time.sleep(1)

driver.quit()

print(time.time()-time0)

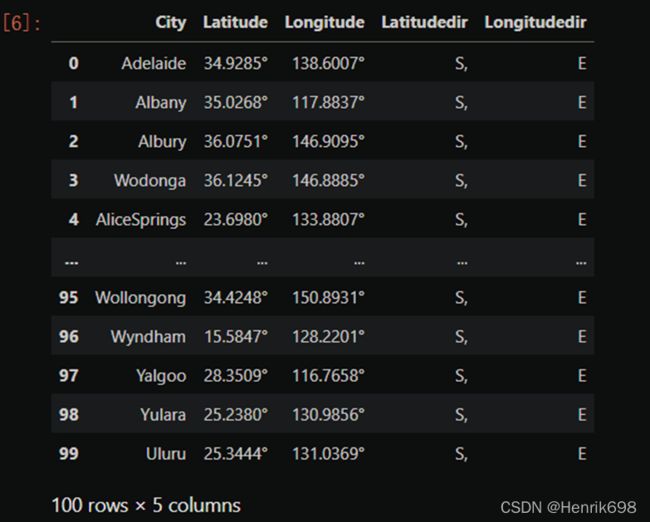

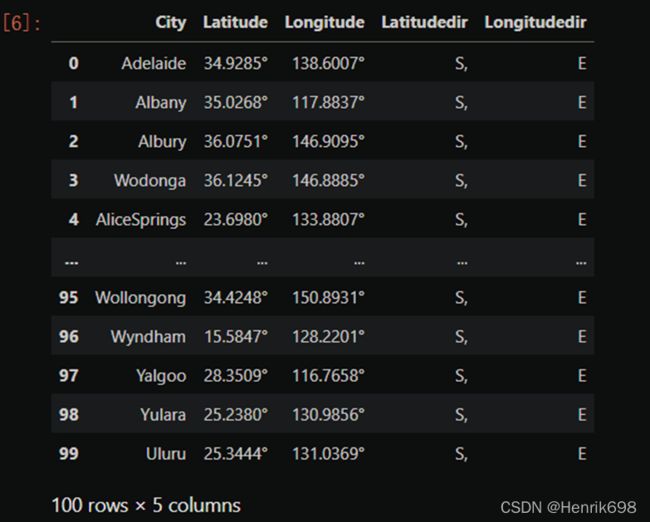

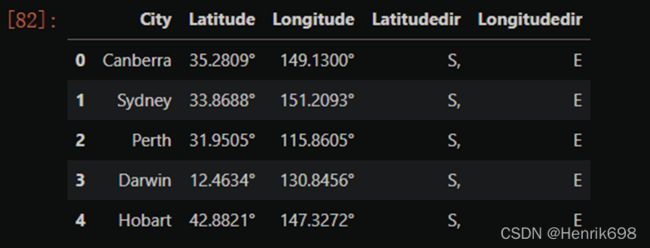

df

df.to_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\csv\cityll.csv")

cityll = pd.read_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\cityll.csv",index_col=0)

city_climate = pd.read_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\Cityclimate.csv")

cityll.head()

city_climate.head()

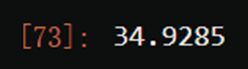

float(cityll.loc[0,'Latitude'][:-1])

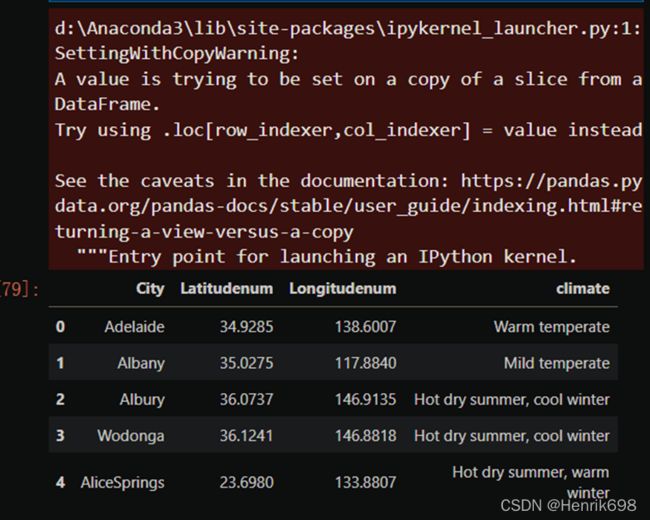

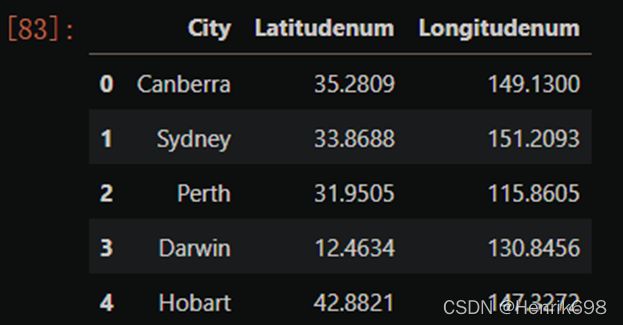

cityll["Latitudenum"] = cityll["Latitude"].apply(lambda x:float(x[:-1]))

cityll["Longitudenum"] = cityll["Longitude"].apply(lambda x:float(x[:-1]))

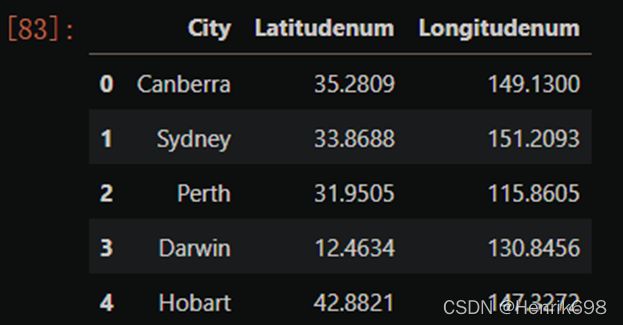

cityll.head()

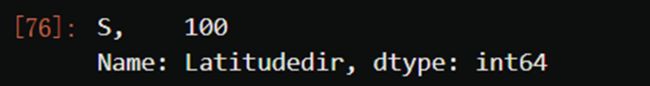

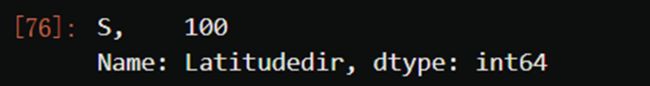

cityll.loc[:,"Latitudedir"].value_counts()

citylld = cityll.iloc[:,[0,5,6]].copy()

citylld

citylld["climate"] = city_climate.iloc[:,-1]

citylld.head()

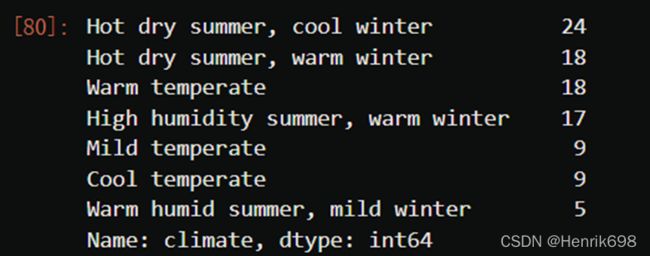

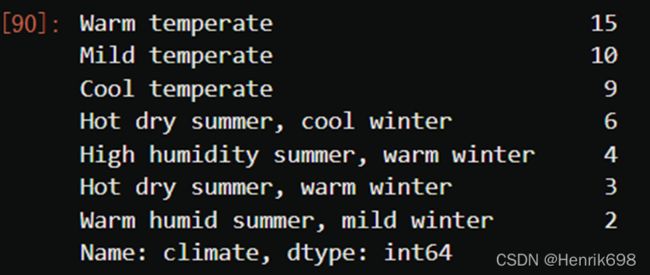

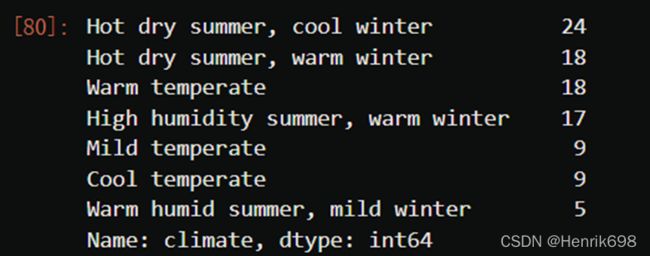

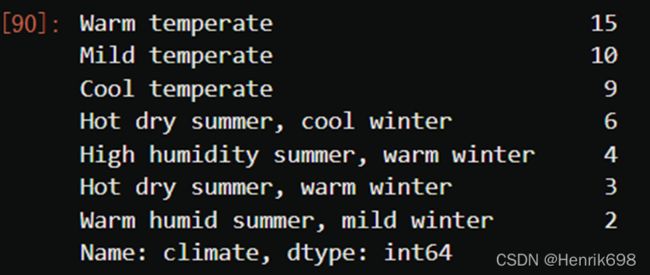

citylld.loc[:,"climate"].value_counts()

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

weather = pd.read_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\weatherAUS5000.csv",index_col=0)

X = weather.iloc[:,:-1]

Y = weather.iloc[:,-1]

Xtrain, Xtest, Ytrain, Ytest = train_test_split(X,Y,test_size=0.3,random_state=420)

for i in [Xtrain, Xtest, Ytrain, Ytest]:
    i.index = range(i.shape[0])

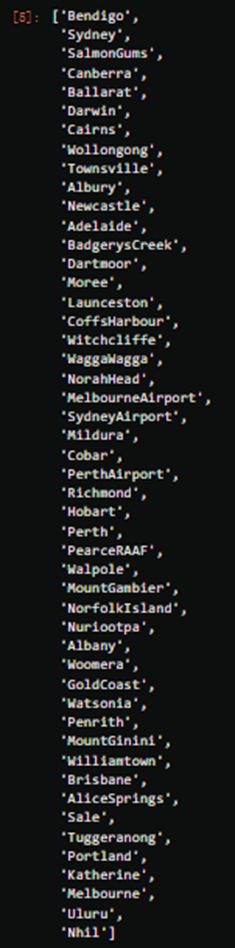

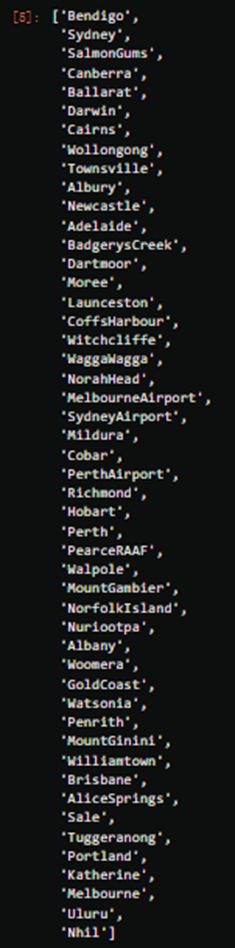

cityname = Xtrain.iloc[:,1].value_counts().index.tolist()

cityname

import time

from selenium import webdriver

df = pd.DataFrame(index=range(len(cityname)))

driver = webdriver.Chrome()

time0 = time.time()

from selenium.webdriver.common.by import By

# Selenium 4 removed find_element_by_name / find_element_by_xpath;
# find_element(By.NAME, ...) and find_element(By.XPATH, ...) replace them
for num, city in enumerate(cityname):
    driver.get('https://www.google.co.uk/webhp?hl=en&sa=X&ved=0ahUKEwimtcX24cTfAhUJE7wKHVkWB5AQPAgH')
    time.sleep(0.5)
    search_box = driver.find_element(By.NAME, 'q')
    search_box.send_keys('%s Australia Latitude and longitude' % (city))
    search_box.submit()
    result = driver.find_element(By.XPATH, '//div[@class="Z0LcW"]').text
    # Split the answer text on spaces: latitude, direction, longitude, direction
    resultsplit = result.split(" ")
    df.loc[num,"City"] = city
    df.loc[num,"Latitude"] = resultsplit[0]
    df.loc[num,"Longitude"] = resultsplit[2]
    df.loc[num,"Latitudedir"] = resultsplit[1]
    df.loc[num,"Longitudedir"] = resultsplit[3]
    print("%i webcrawler successful for city %s" % (num,city))
    time.sleep(1)

driver.quit()

print(time.time() - time0)

df

df.to_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\csv\samplecity.csv")

samplecity = pd.read_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\samplecity.csv",index_col=0)

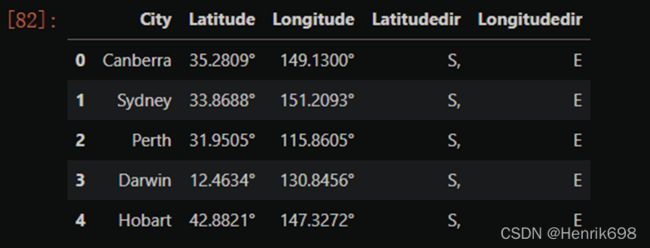

samplecity.head()

samplecity["Latitudenum"] = samplecity["Latitude"].apply(lambda x:float(x[:-1]))

samplecity["Longitudenum"] = samplecity["Longitude"].apply(lambda x:float(x[:-1]))

samplecityd = samplecity.iloc[:,[0,5,6]].copy()

samplecityd.head()

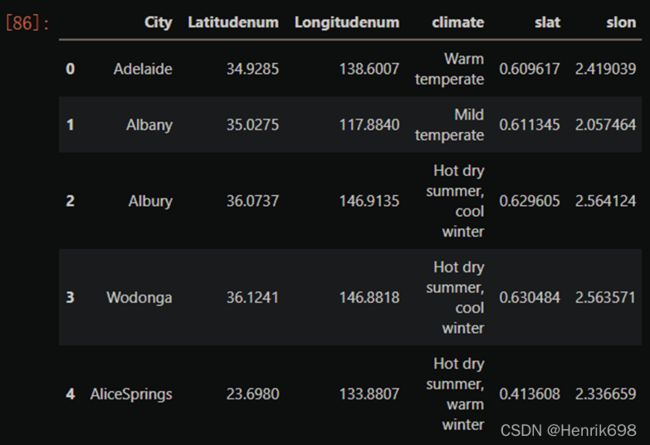

from math import radians, sin, cos, acos

citylld.loc[:,"slat"] = citylld.iloc[:,1].apply(lambda x : radians(x))

citylld.loc[:,"slon"] = citylld.iloc[:,2].apply(lambda x : radians(x))

samplecityd.loc[:,"elat"] = samplecityd.iloc[:,1].apply(lambda x : radians(x))

samplecityd.loc[:,"elon"] = samplecityd.iloc[:,2].apply(lambda x : radians(x))

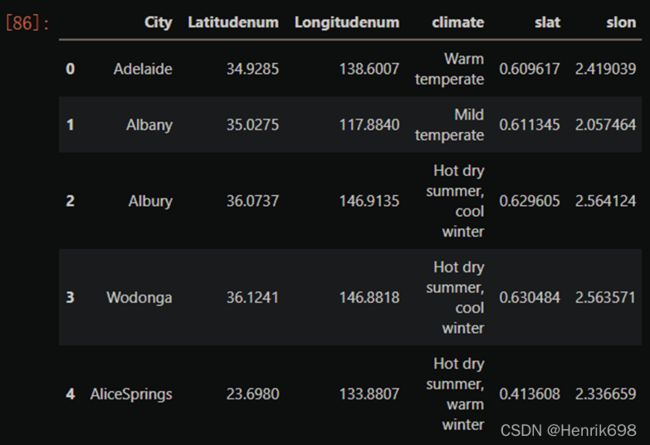

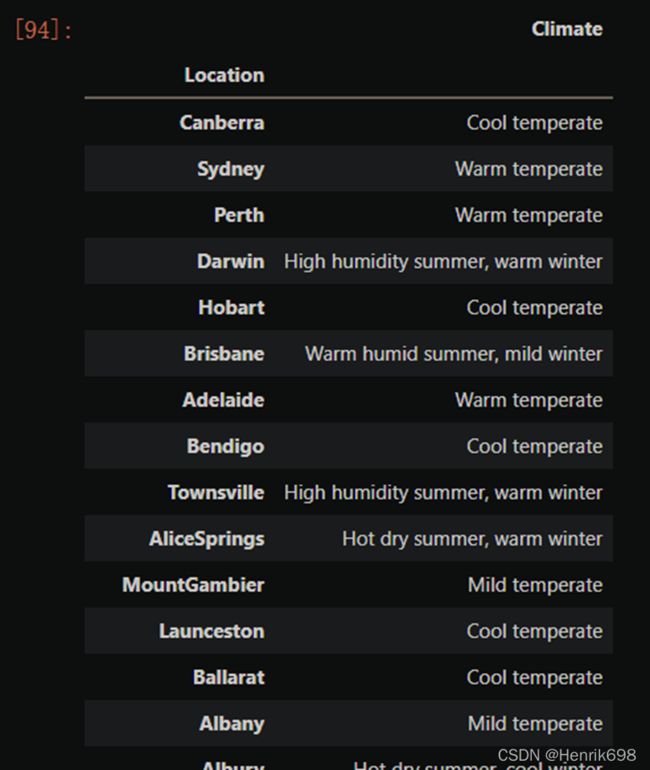

citylld.head()

samplecityd.head()

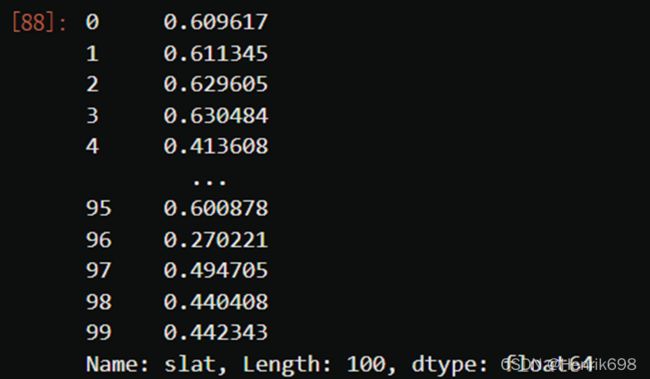

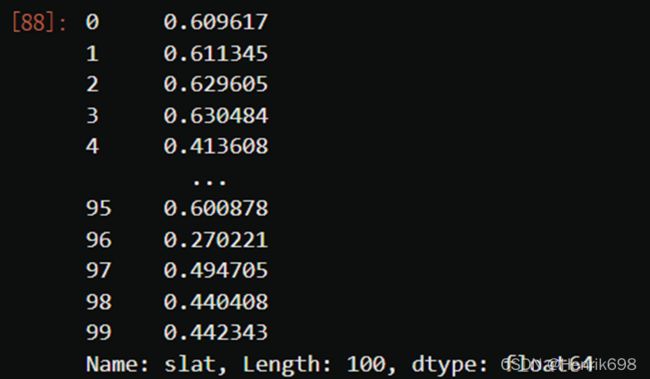

slat = citylld.loc[:,"slat"]

slat

import sys

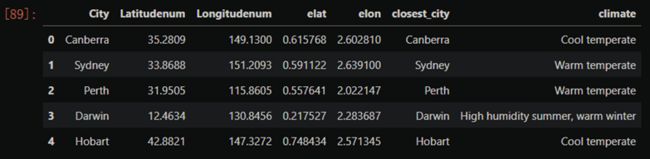

for i in range(samplecityd.shape[0]):
    slat = citylld.loc[:,"slat"]
    slon = citylld.loc[:,"slon"]
    elat = samplecityd.loc[i,"elat"]
    elon = samplecityd.loc[i,"elon"]
    # Great-circle distance in km (spherical law of cosines, Earth radius 6371.01 km)
    dist = 6371.01 * np.arccos(np.sin(slat)*np.sin(elat) +
                               np.cos(slat)*np.cos(elat)*np.cos(slon.values - elon))
    # Index of the nearest reference city
    city_index = np.argsort(dist)[0]
    samplecityd.loc[i,"closest_city"] = citylld.loc[city_index,"City"]
    samplecityd.loc[i,"climate"] = citylld.loc[city_index,"climate"]
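The nearest-city lookup in the loop above can also be written without a Python loop by broadcasting all pairs at once. The following is a sketch under assumptions: the helper `nearest_city`, the column names `lat`/`lon`, and the approximate coordinates are all made up for illustration; only the distance formula and the radius 6371.01 km come from the loop.

```python
import numpy as np
import pandas as pd

EARTH_RADIUS_KM = 6371.01  # same radius as in the loop above

def nearest_city(stations, samples):
    """Return, for each sample row, the position and distance of the nearest station.

    Both frames need "lat" and "lon" columns in degrees (hypothetical names).
    Distances use the spherical law of cosines.
    """
    slat = np.radians(stations["lat"].to_numpy())[None, :]
    slon = np.radians(stations["lon"].to_numpy())[None, :]
    elat = np.radians(samples["lat"].to_numpy())[:, None]
    elon = np.radians(samples["lon"].to_numpy())[:, None]
    cos_angle = (np.sin(slat) * np.sin(elat)
                 + np.cos(slat) * np.cos(elat) * np.cos(slon - elon))
    # Clip guards against values slightly outside [-1, 1] from rounding
    dist = EARTH_RADIUS_KM * np.arccos(np.clip(cos_angle, -1.0, 1.0))
    return dist.argmin(axis=1), dist.min(axis=1)

# Approximate coordinates, for illustration only
stations = pd.DataFrame({"City": ["Sydney", "Perth"],
                         "lat": [-33.87, -31.95], "lon": [151.21, 115.86]})
samples = pd.DataFrame({"City": ["Canberra", "Albany"],
                        "lat": [-35.28, -35.02], "lon": [149.13, 117.88]})

idx, km = nearest_city(stations, samples)
samples["closest_city"] = stations["City"].to_numpy()[idx]
print(samples[["City", "closest_city"]])
```

The distance matrix has one row per sample city and one column per reference city, so `argmin(axis=1)` picks the closest reference city for every sample in a single call.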

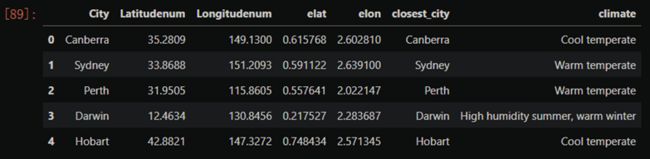

samplecityd.head(5)

samplecityd["climate"].value_counts()

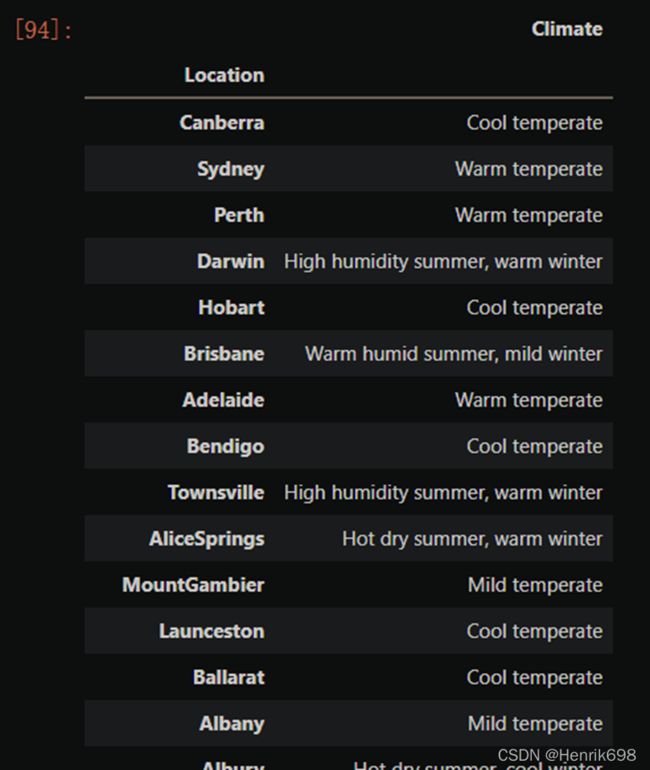

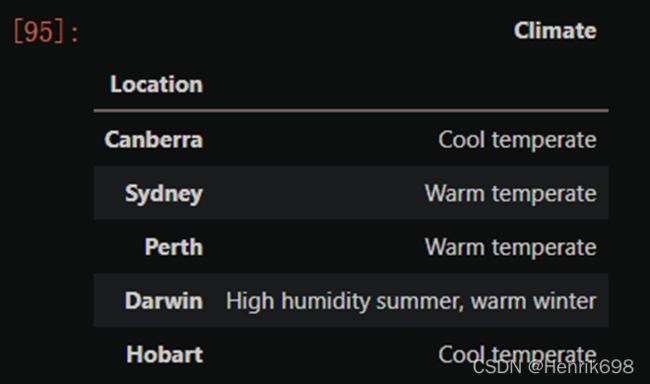

locafinal = samplecityd.iloc[:,[0,-1]]

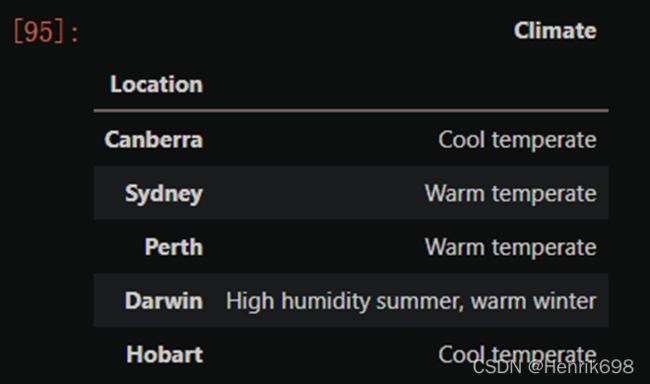

locafinal.head()

locafinal.columns = ["Location","Climate"]

locafinal.head()

locafinal = locafinal.set_index(keys="Location")

locafinal

locafinal.to_csv(r"D:\whole_development_of_the_stack_study\RS_Algorithm_Course\Practical\machine_learning\08_09_10_11SVM_01\samplelocation.csv")

locafinal.head()

Xtrain.head()

import re

Xtrain["Location"] = Xtrain["Location"].map(locafinal.iloc[:,0])

Xtrain.head()

Xtrain["Location"] = Xtrain["Location"].apply(lambda x:re.sub(",","",x.strip()))

Xtrain.head()

Xtest["Location"] = Xtest["Location"].map(locafinal.iloc[:,0]).apply(lambda x:re.sub(",","",x.strip()))

Xtrain = Xtrain.rename(columns={"Location":"Climate"})

Xtest = Xtest.rename(columns={"Location":"Climate"})

Xtrain.head()

Xtest.head()

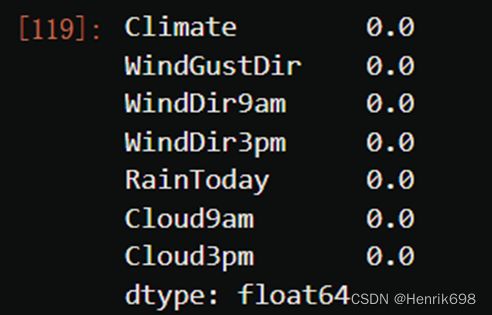

Xtrain.isnull().mean()

Xtrain.info()

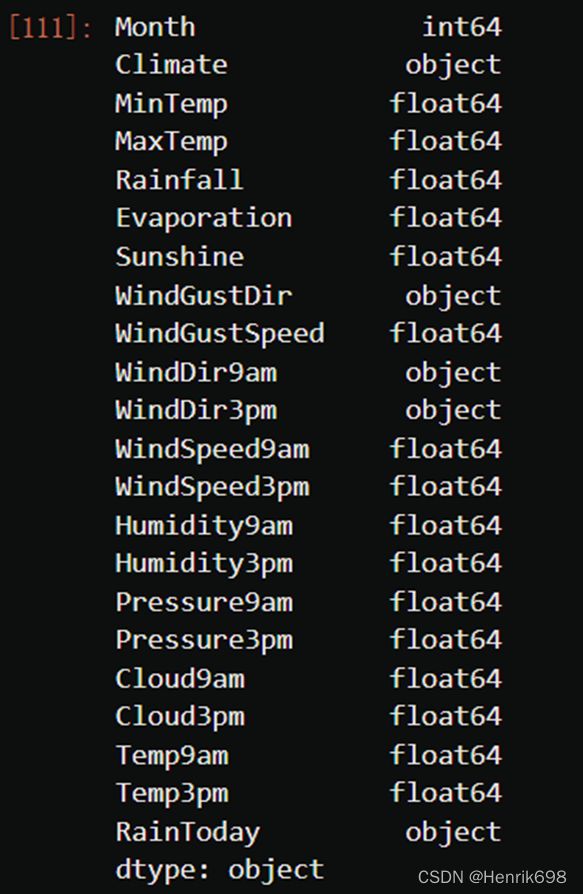

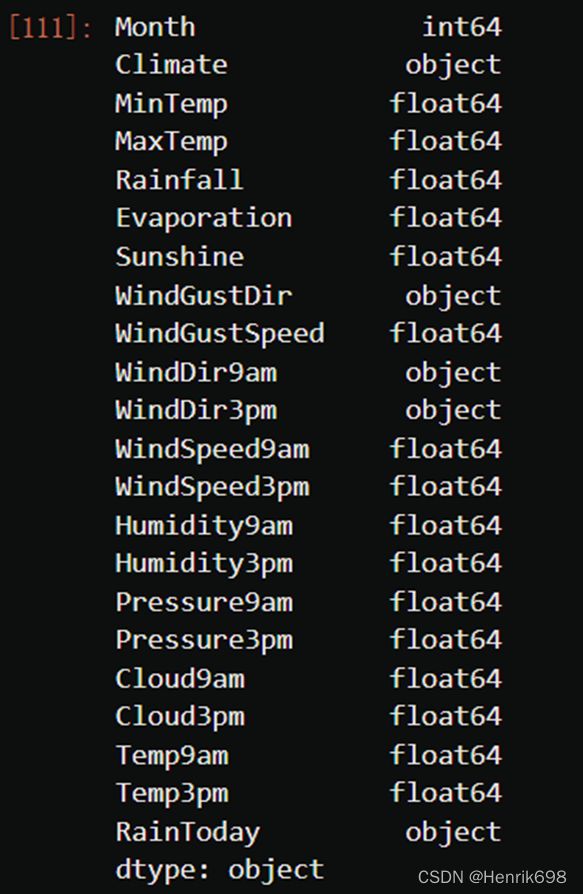

Xtrain.dtypes

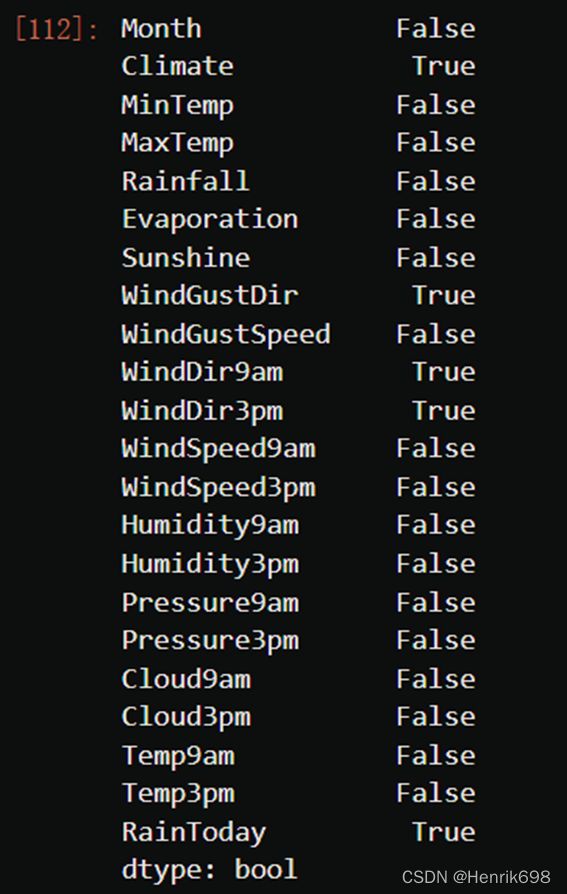

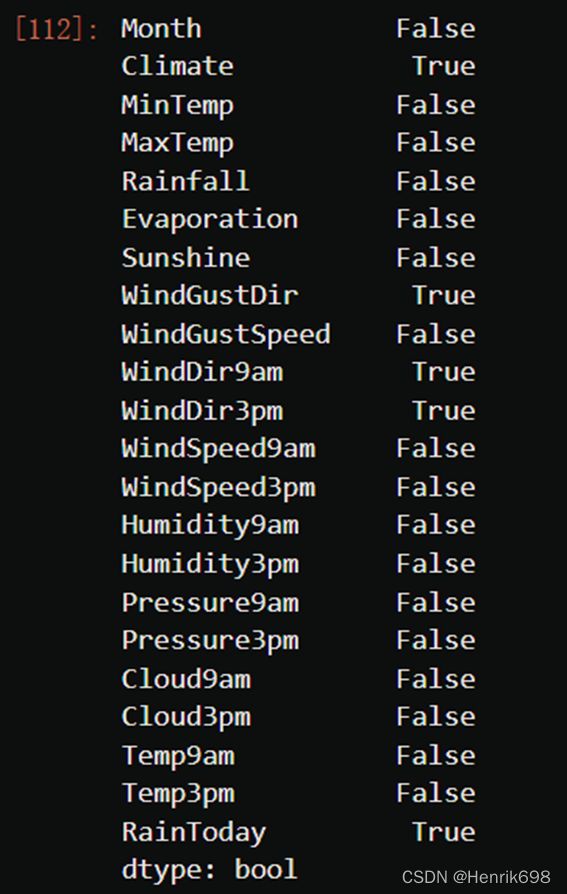

Xtrain.dtypes == 'object'

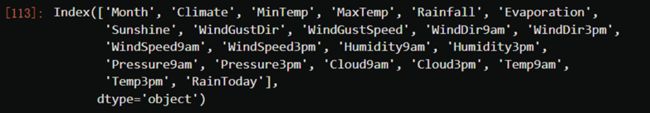

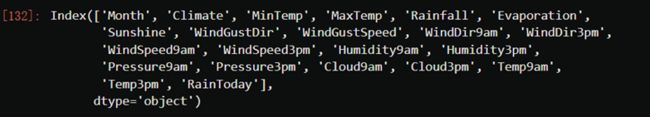

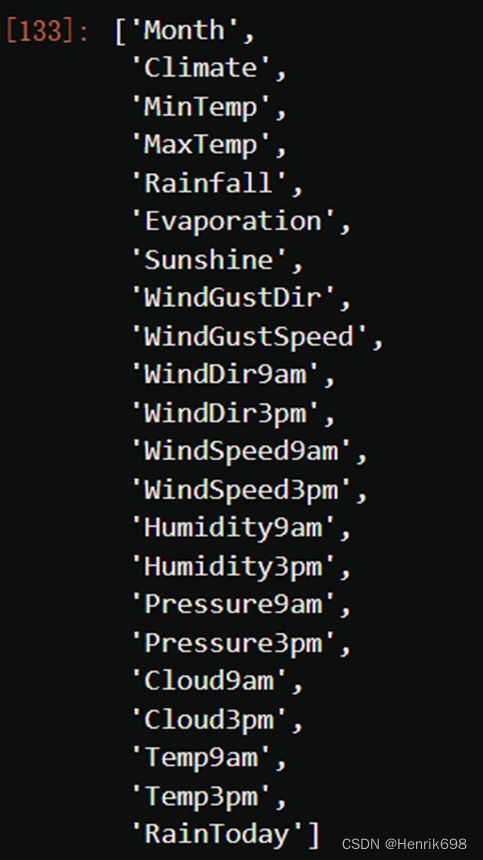

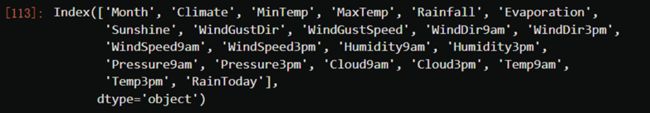

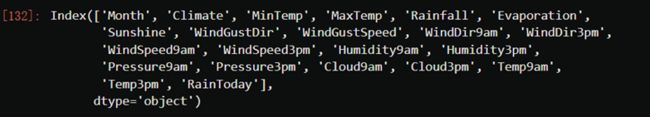

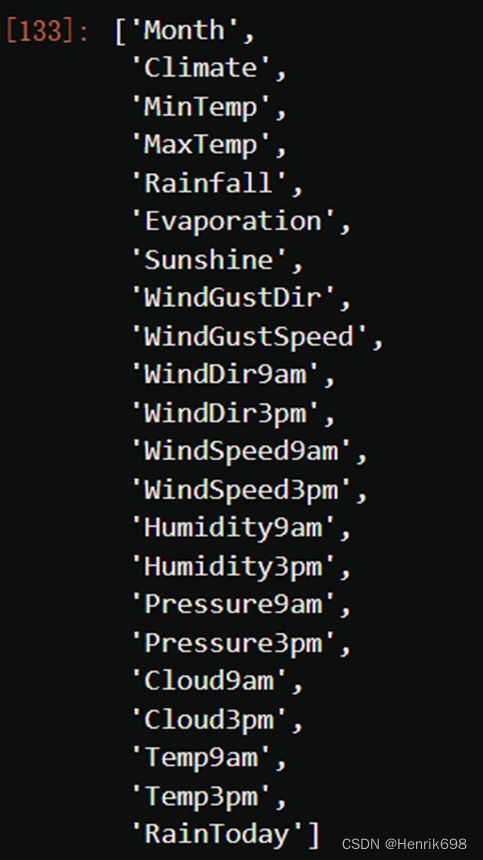

Xtrain.columns

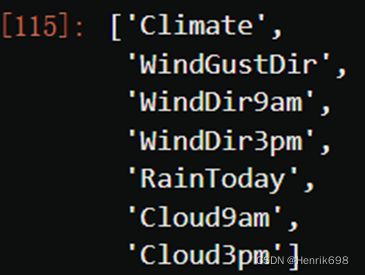

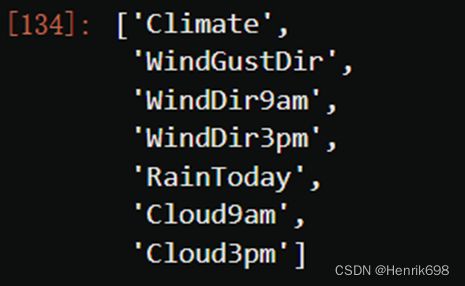

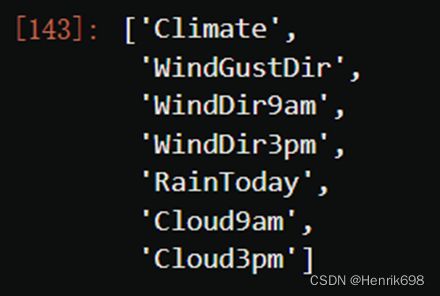

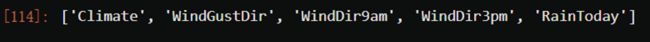

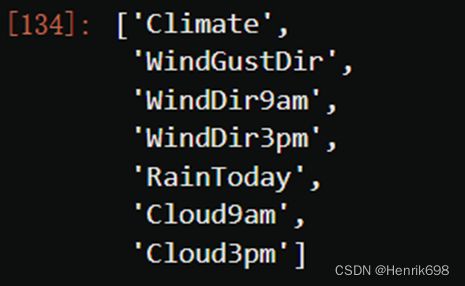

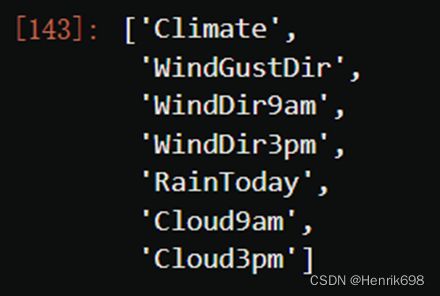

cate = Xtrain.columns[Xtrain.dtypes == "object"].tolist()

cate

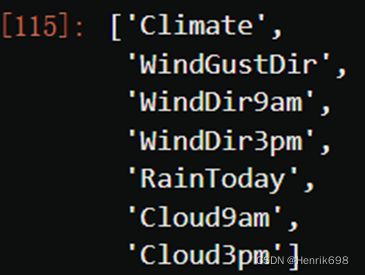

cloud = ["Cloud9am","Cloud3pm"]

cate = cate + cloud

cate

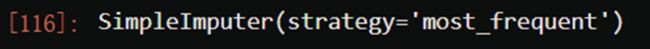

from sklearn.impute import SimpleImputer

si = SimpleImputer(missing_values=np.nan, strategy="most_frequent")

si.fit(Xtrain.loc[:,cate])

Xtrain.loc[:,cate] = si.transform(Xtrain.loc[:,cate])

Xtest.loc[:,cate] = si.transform(Xtest.loc[:,cate])

Xtrain.head()

Xtest.head()

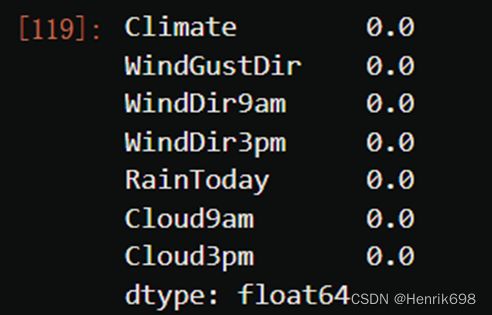

Xtrain.loc[:,cate].isnull().mean()

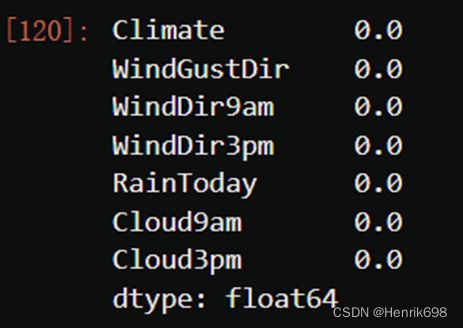

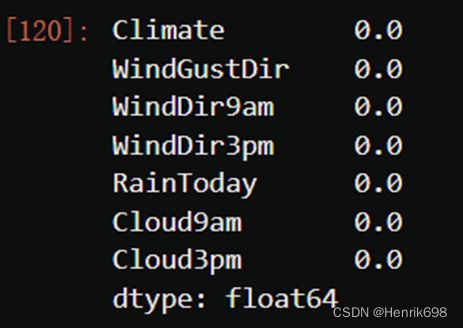

Xtest.loc[:,cate].isnull().mean()

(5) Handle categorical variables: encode the categorical variables

from sklearn.preprocessing import OrdinalEncoder

oe = OrdinalEncoder()

oe = oe.fit(Xtrain.loc[:,cate])

Xtrain.loc[:,cate] = oe.transform(Xtrain.loc[:,cate])

Xtest.loc[:,cate] = oe.transform(Xtest.loc[:,cate])

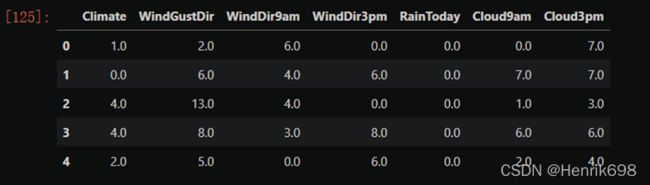

Xtrain.loc[:,cate].head()

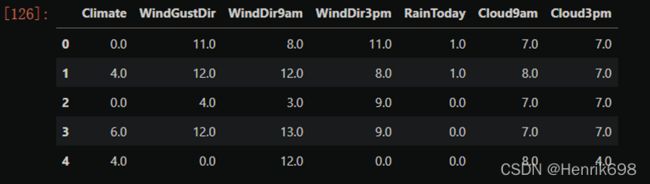

Xtest.loc[:,cate].head()

Xtrain.head()
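One thing to watch with this encoding step: an `OrdinalEncoder` fitted on the training set raises an error by default if the test set contains a category it has never seen. A hedged sketch of one way around this, assuming scikit-learn 0.24 or later and using a made-up `WindDir` column:

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

train = pd.DataFrame({"WindDir": ["N", "S", "E", "S"]})
test = pd.DataFrame({"WindDir": ["S", "W"]})  # "W" never appears in train

# handle_unknown / unknown_value are available from scikit-learn 0.24
oe = OrdinalEncoder(handle_unknown="use_encoded_value", unknown_value=-1)
oe.fit(train)

train_codes = oe.transform(train).ravel()
test_codes = oe.transform(test).ravel()

print(oe.categories_)  # categories learned from the training data, sorted
print(train_codes)
print(test_codes)      # the unseen "W" is encoded as -1
```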

(6) Handle continuous variables: impute missing values

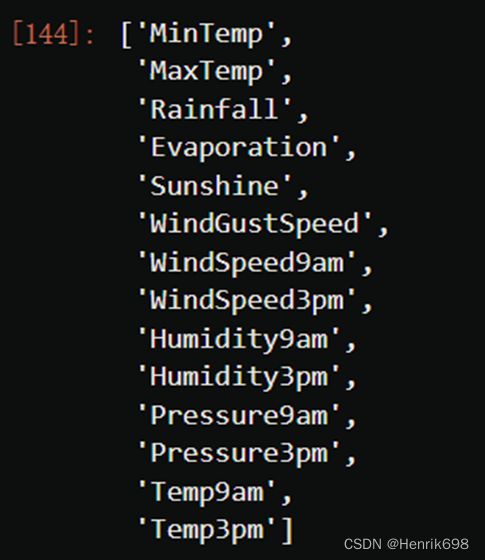

Xtrain.columns

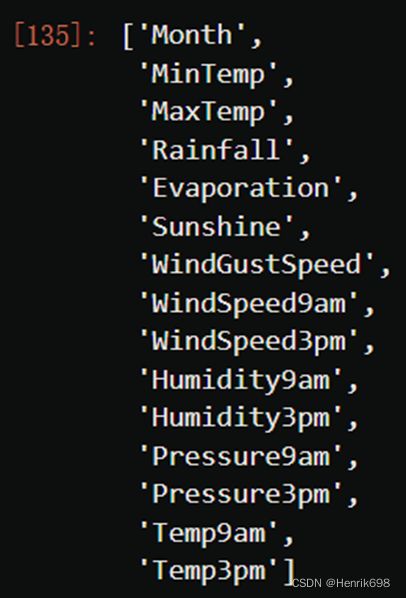

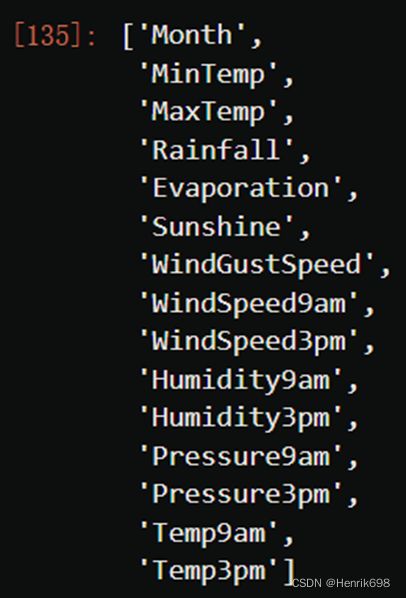

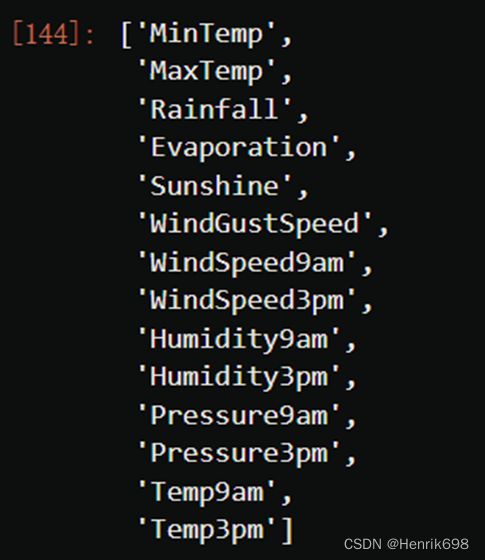

col = Xtrain.columns.tolist()

col

cate

for i in cate:
    col.remove(i)

col

impmean = SimpleImputer(missing_values=np.nan,strategy = "mean")

impmean = impmean.fit(Xtrain.loc[:,col])

Xtrain.loc[:,col] = impmean.transform(Xtrain.loc[:,col])

Xtest.loc[:,col] = impmean.transform(Xtest.loc[:,col])

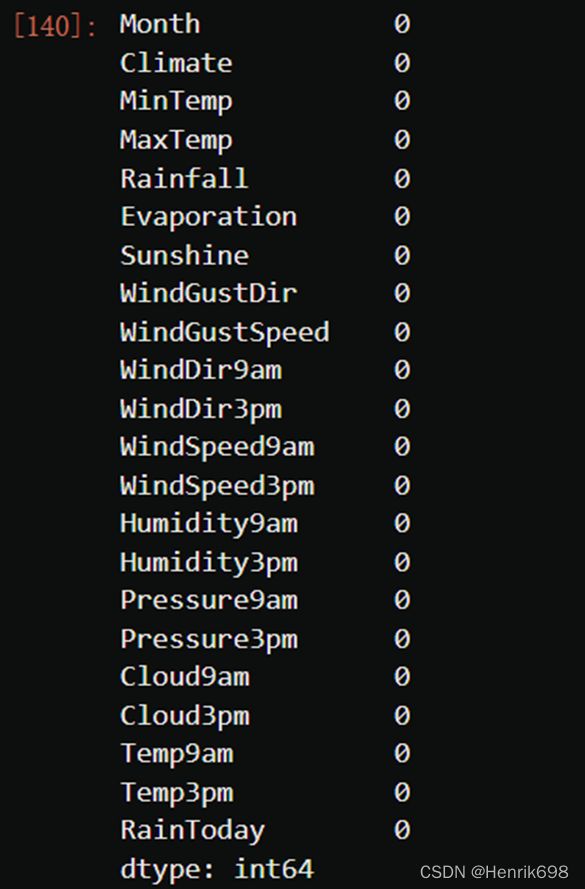

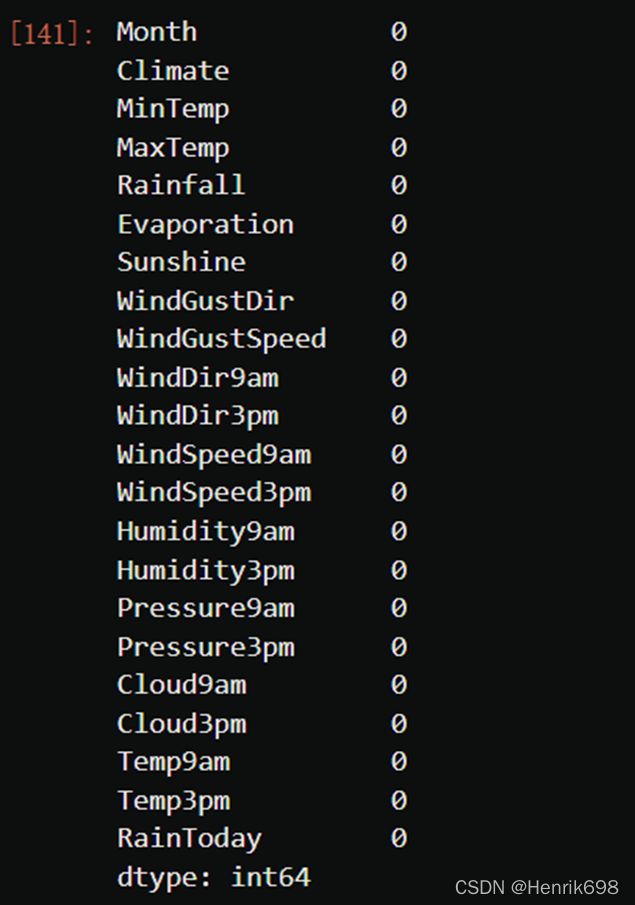

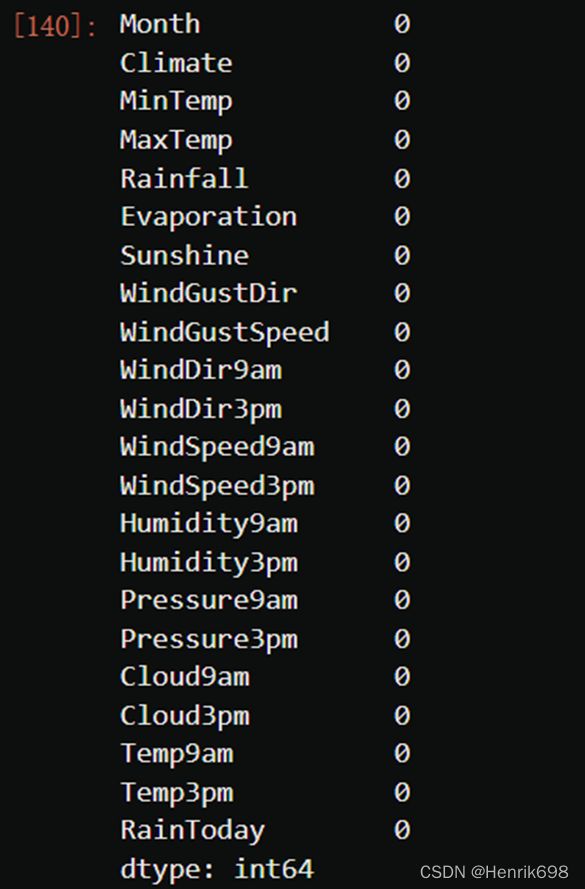

Xtrain.isnull().sum()

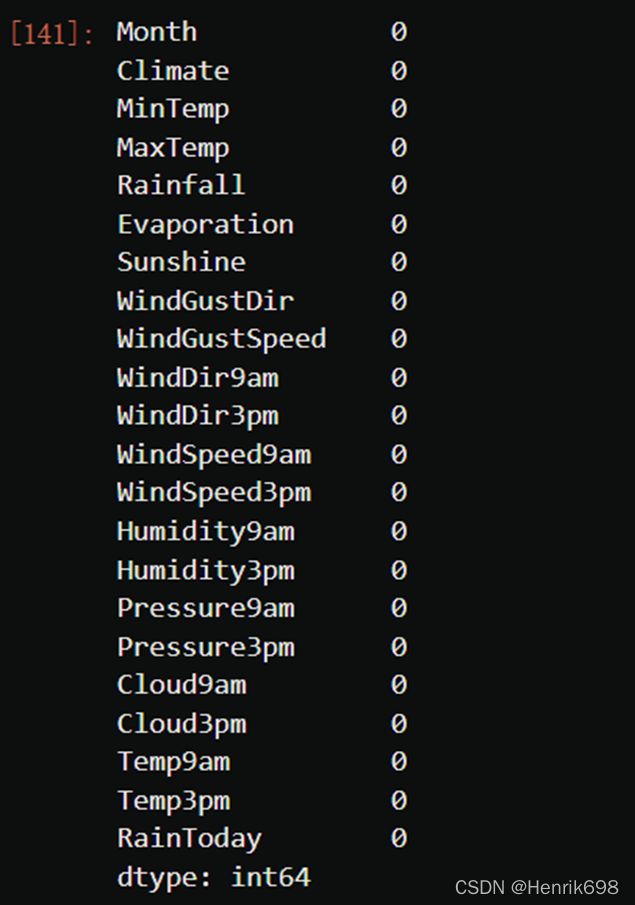

Xtest.isnull().sum()
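The imputers above are fitted on the training set only and then applied to both sets, so the test set is filled with statistics learned from the training data. A minimal sketch with a made-up `Temp` column shows the effect:

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

train = pd.DataFrame({"Temp": [10.0, 20.0, np.nan, 30.0]})
test = pd.DataFrame({"Temp": [np.nan, 50.0]})

# Fit on the training data only; the learned mean is stored in statistics_
imp = SimpleImputer(missing_values=np.nan, strategy="mean").fit(train)

train_filled = imp.transform(train)
test_filled = imp.transform(test)  # the gap is filled with the training mean

print(imp.statistics_)
print(test_filled.ravel())
```

Fitting on the training set alone keeps information from the test set out of the preprocessing, which is the same reason the scaler and encoder are fitted on `Xtrain` only.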

cate

col.remove("Month")

col

from sklearn.preprocessing import StandardScaler

ss = StandardScaler()

ss = ss.fit(Xtrain.loc[:,col])

Xtrain.loc[:,col] = ss.transform(Xtrain.loc[:,col])

Xtest.loc[:,col] = ss.transform(Xtest.loc[:,col])

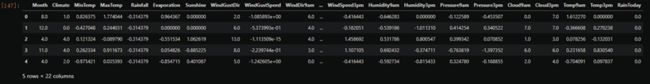

Xtrain.head()

Xtest.head()

from time import time

import datetime

from sklearn.svm import SVC

from sklearn.model_selection import cross_val_score

from sklearn.metrics import roc_auc_score, recall_score

Ytrain = Ytrain.iloc[:,0].ravel()

Ytest = Ytest.iloc[:,0].ravel()

times = time()

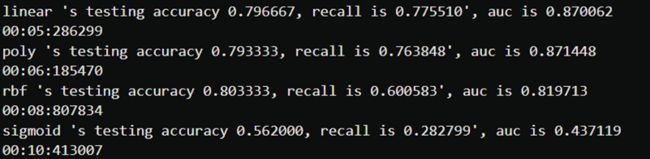

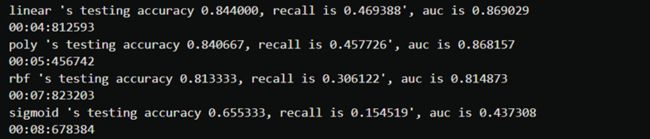

for kernel in ["linear","poly","rbf","sigmoid"]:

clf = SVC(kernel = kernel

,gamma="auto"

,degree = 1

,cache_size = 10000

).fit(Xtrain, Ytrain)

result = clf.predict(Xtest)

score = clf.score(Xtest,Ytest)

recall = recall_score(Ytest, result)

auc = roc_auc_score(Ytest,clf.decision_function(Xtest))

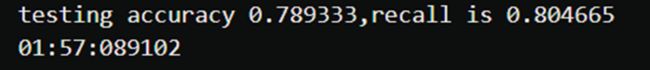

print("%s 's testing accuracy %f, recall is %f', auc is %f" %(kernel,score,recall,auc))

print(datetime.datetime.fromtimestamp(time()-times).strftime("%M:%S:%f"))

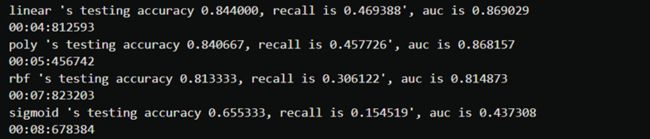

5. Model tuning

(1) Pursuing the highest recall

times = time()

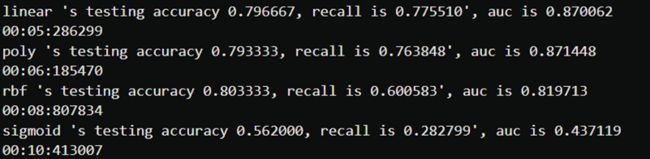

for kernel in ["linear","poly","rbf","sigmoid"]:
    clf = SVC(kernel = kernel
              ,gamma="auto"
              ,degree = 1
              ,cache_size = 10000
              ,class_weight = "balanced"
             ).fit(Xtrain, Ytrain)
    result = clf.predict(Xtest)
    score = clf.score(Xtest,Ytest)
    recall = recall_score(Ytest, result)
    auc = roc_auc_score(Ytest,clf.decision_function(Xtest))
    print("%s's testing accuracy %f, recall is %f, auc is %f" %(kernel,score,recall,auc))
    print(datetime.datetime.fromtimestamp(time()-times).strftime("%M:%S:%f"))

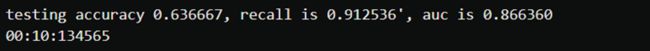
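The effect of `class_weight="balanced"` can be tried out on a small synthetic problem before running it on the full weather data. The sketch below is only an illustration: the data comes from `make_classification` with roughly 10% positives (an assumption, not the weather data), and it simply prints accuracy and recall with and without class weighting so the two can be compared.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic imbalanced data, about 10% positives
X, y = make_classification(n_samples=600, n_features=6, weights=[0.9, 0.1],
                           random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3,
                                          stratify=y, random_state=0)

results = {}
for weight in [None, "balanced"]:
    clf = SVC(kernel="linear", class_weight=weight).fit(X_tr, y_tr)
    pred = clf.predict(X_te)
    # Store (accuracy, recall on the minority class) for each setting
    results[str(weight)] = (clf.score(X_te, y_te), recall_score(y_te, pred))

for name, (acc, rec) in results.items():
    print("class_weight=%s accuracy %.3f recall %.3f" % (name, acc, rec))
```

With `"balanced"`, misclassifying a minority sample is penalized more heavily in proportion to how rare that class is, which is why recall is the metric to watch in this comparison.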

times = time()

clf = SVC(kernel = "linear"

,gamma="auto"

,cache_size = 10000

,class_weight = {1:10}

).fit(Xtrain, Ytrain)

result = clf.predict(Xtest)

score = clf.score(Xtest,Ytest)

recall = recall_score(Ytest, result)

auc = roc_auc_score(Ytest,clf.decision_function(Xtest))

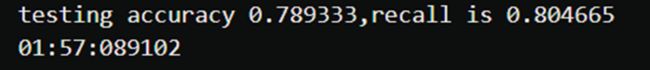

print("testing accuracy %f, recall is %f, auc is %f" %(score,recall,auc))

print(datetime.datetime.fromtimestamp(time()-times).strftime("%M:%S:%f"))

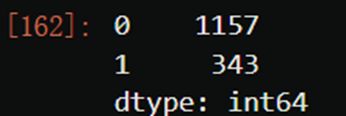

valuec = pd.Series(Ytest).value_counts()

valuec

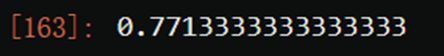

valuec[0]/valuec.sum()

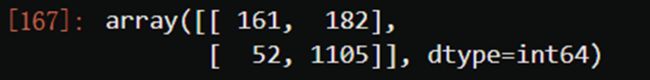

from sklearn.metrics import confusion_matrix as CM

clf = SVC(kernel = "linear"

,gamma="auto"

,cache_size = 5000

).fit(Xtrain, Ytrain)

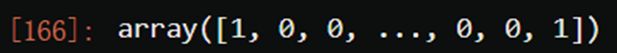

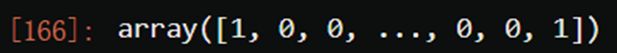

result = clf.predict(Xtest)

result

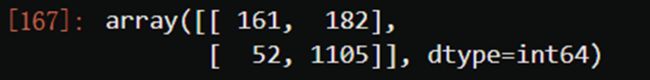

cm = CM(Ytest,result,labels=(1,0))

cm

specificity = cm[1,1]/cm[1,:].sum()

specificity
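The indexing into the confusion matrix above depends on the label order. A small worked example with hand-made labels (an assumption, not model output) shows which cell is which when `labels` lists the positive class first:

```python
import numpy as np
from sklearn.metrics import confusion_matrix, recall_score

y_true = np.array([1, 1, 1, 0, 0, 0, 0, 0])
y_pred = np.array([1, 0, 1, 0, 0, 1, 0, 0])

# labels=[1, 0] puts the positive class first:
# row 0 = actual 1, row 1 = actual 0; column 0 = predicted 1, column 1 = predicted 0
cm = confusion_matrix(y_true, y_pred, labels=[1, 0])

recall = cm[0, 0] / cm[0, :].sum()       # TP / (TP + FN)
specificity = cm[1, 1] / cm[1, :].sum()  # TN / (TN + FP)

print(cm)
print(recall, specificity)
```

Here 2 of the 3 actual positives and 4 of the 5 actual negatives are predicted correctly, so recall is 2/3 and specificity is 4/5.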

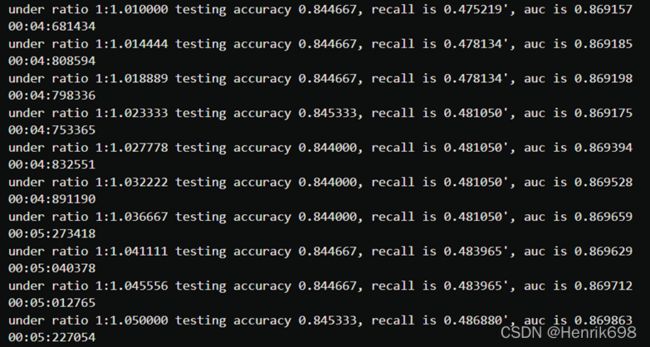

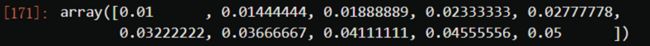

irange = np.linspace(0.01,0.05,10)

irange

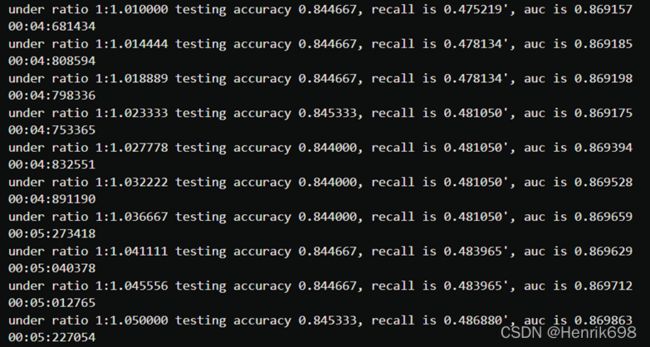

for i in irange:
    times = time()
    clf = SVC(kernel = "linear"
              ,gamma="auto"
              ,cache_size = 10000
              ,class_weight = {1:1+i}
             ).fit(Xtrain, Ytrain)
    result = clf.predict(Xtest)
    score = clf.score(Xtest,Ytest)
    recall = recall_score(Ytest, result)
    auc = roc_auc_score(Ytest,clf.decision_function(Xtest))
    print("under ratio 1:%f testing accuracy %f, recall is %f, auc is %f" %(1+i,score,recall,auc))
    print(datetime.datetime.fromtimestamp(time()-times).strftime("%M:%S:%f"))

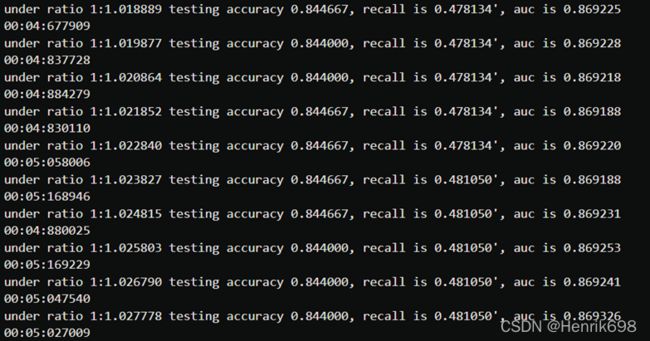

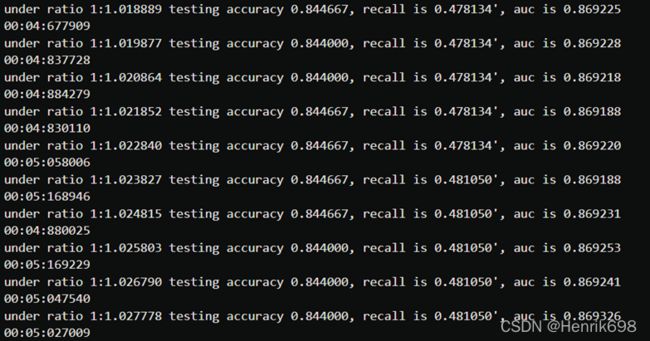

irange_ = np.linspace(0.018889,0.027778,10)

for i in irange_:
    times = time()
    clf = SVC(kernel = "linear"
              ,gamma="auto"
              ,cache_size = 10000
              ,class_weight = {1:1+i}
             ).fit(Xtrain, Ytrain)
    result = clf.predict(Xtest)
    score = clf.score(Xtest,Ytest)
    recall = recall_score(Ytest, result)
    auc = roc_auc_score(Ytest,clf.decision_function(Xtest))
    print("under ratio 1:%f testing accuracy %f, recall is %f, auc is %f" %(1+i,score,recall,auc))
    print(datetime.datetime.fromtimestamp(time()-times).strftime("%M:%S:%f"))

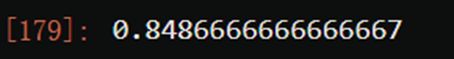

from sklearn.linear_model import LogisticRegression as LR

logclf = LR(solver="liblinear").fit(Xtrain, Ytrain)

logclf.score(Xtest,Ytest)

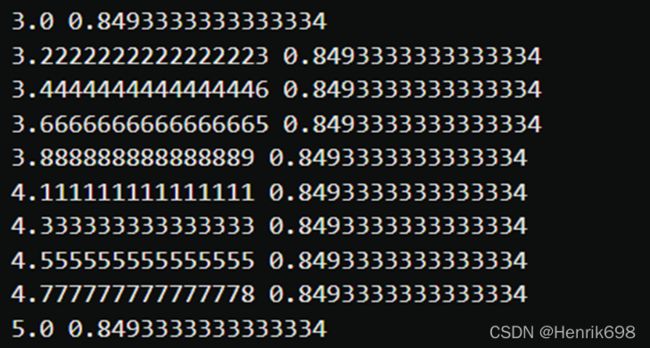

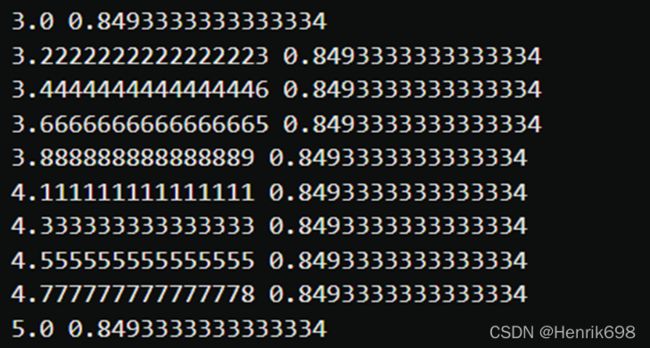

C_range = np.linspace(3,5,10)

for C in C_range:
    logclf = LR(solver="liblinear",C=C).fit(Xtrain, Ytrain)
    print(C,logclf.score(Xtest,Ytest))

import matplotlib.pyplot as plt

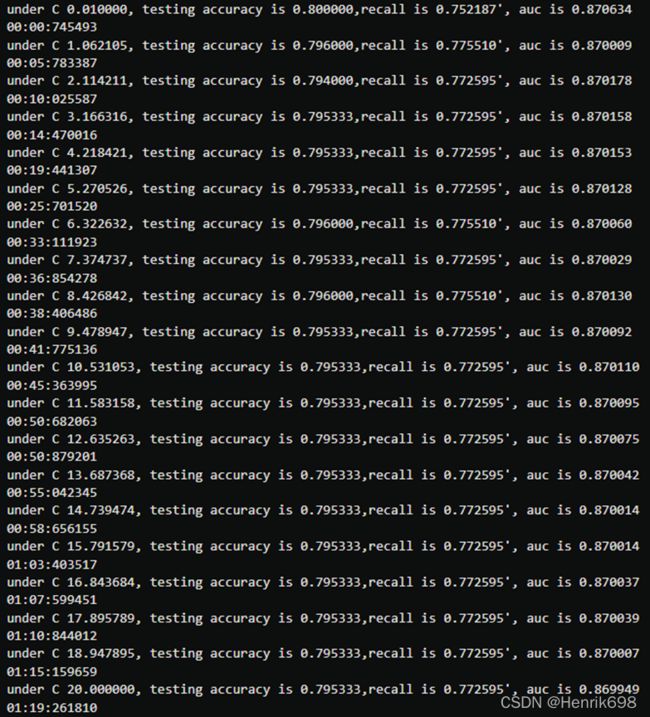

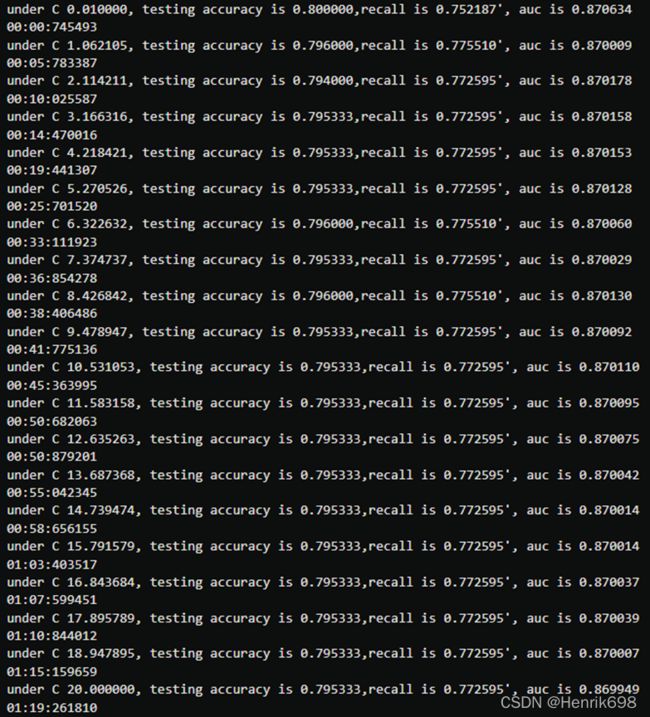

C_range = np.linspace(0.01,20,20)

recallall = []

aucall = []

scoreall = []

for C in C_range:
    times = time()
    clf = SVC(kernel = "linear",C=C,cache_size = 20000
              ,class_weight = "balanced"
             ).fit(Xtrain, Ytrain)
    result = clf.predict(Xtest)
    score = clf.score(Xtest,Ytest)
    recall = recall_score(Ytest, result)
    auc = roc_auc_score(Ytest,clf.decision_function(Xtest))
    recallall.append(recall)
    aucall.append(auc)
    scoreall.append(score)
    print("under C %f, testing accuracy is %f, recall is %f, auc is %f" %(C,score,recall,auc))
    print(datetime.datetime.fromtimestamp(time()-times).strftime("%M:%S:%f"))

print(max(aucall),C_range[aucall.index(max(aucall))])

plt.figure()

plt.plot(C_range,recallall,c="red",label="recall")

plt.plot(C_range,aucall,c="black",label="auc")

plt.plot(C_range,scoreall,c="orange",label="accuracy")

plt.legend()

plt.show()

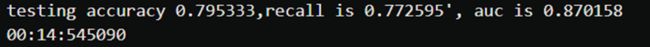

times = time()

clf = SVC(kernel = "linear",C=3.1663157894736838,cache_size = 5000

,class_weight = "balanced"

).fit(Xtrain, Ytrain)

result = clf.predict(Xtest)

score = clf.score(Xtest,Ytest)

recall = recall_score(Ytest, result)

auc = roc_auc_score(Ytest,clf.decision_function(Xtest))

print("testing accuracy %f, recall is %f, auc is %f" % (score,recall,auc))

print(datetime.datetime.fromtimestamp(time()-times).strftime("%M:%S:%f"))

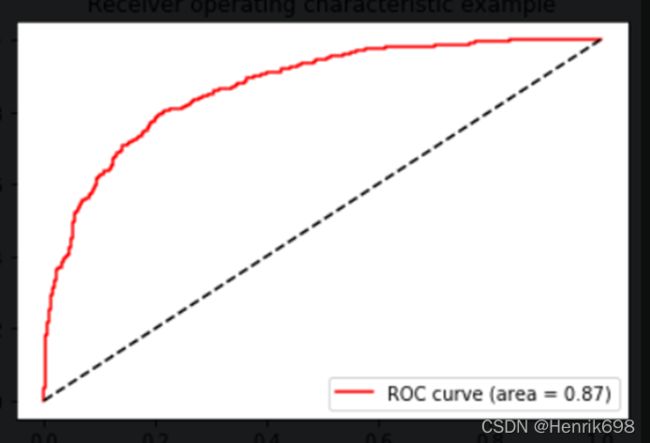

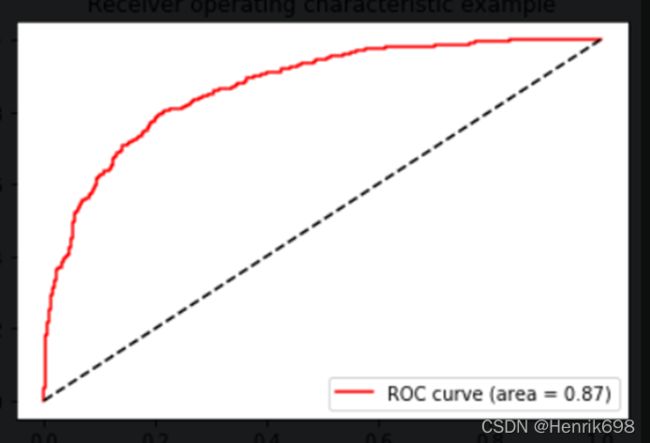

from sklearn.metrics import roc_curve as ROC

import matplotlib.pyplot as plt

FPR, Recall, thresholds = ROC(Ytest,clf.decision_function(Xtest),pos_label=1)

area = roc_auc_score(Ytest,clf.decision_function(Xtest))

plt.figure()

plt.plot(FPR, Recall, color='red',

label='ROC curve (area = %0.2f)' % area)

plt.plot([0, 1], [0, 1], color='black', linestyle='--')

plt.xlim([-0.05, 1.05])

plt.ylim([-0.05, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('Recall')

plt.title('Receiver operating characteristic example')

plt.legend(loc="lower right")

plt.show()

maxindex = (Recall - FPR).tolist().index(max(Recall - FPR))

thresholds[maxindex]
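Picking the threshold where `Recall - FPR` is largest corresponds to maximizing Youden's J statistic, that is, the point on the ROC curve farthest above the diagonal. The sketch below works through it on a handful of made-up decision values (an assumption, not output from the model above) and then applies the chosen threshold.

```python
import numpy as np
from sklearn.metrics import roc_curve

y_true = np.array([0, 0, 0, 0, 1, 0, 1, 1])
# Made-up decision_function values, one per sample
scores = np.array([-2.0, -1.5, -1.0, -0.5, -0.2, 0.3, 0.8, 1.5])

fpr, recall, thresholds = roc_curve(y_true, scores, pos_label=1)

# Youden's J = recall - FPR; take the threshold where it peaks
best = np.argmax(recall - fpr)
best_threshold = thresholds[best]

# Samples scoring at or above the threshold are predicted positive
y_pred = (scores >= best_threshold).astype(int)
print(best_threshold, y_pred)
```

In this toy case the threshold lands on the lowest-scoring positive sample, which captures all three positives at the cost of one false positive.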

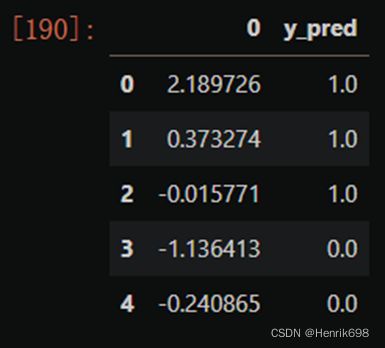

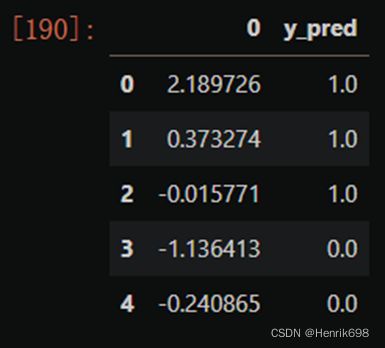

from sklearn.metrics import accuracy_score as AC

times = time()

clf = SVC(kernel = "linear",C=3.1663157894736838,cache_size = 5000

,class_weight = "balanced"

).fit(Xtrain, Ytrain)

prob = pd.DataFrame(clf.decision_function(Xtest))

prob.loc[prob.iloc[:,0] >= thresholds[maxindex],"y_pred"]=1

prob.loc[prob.iloc[:,0] < thresholds[maxindex],"y_pred"]=0

prob.head()

prob.loc[:,"y_pred"].isnull().sum()

score = AC(Ytest,prob.loc[:,"y_pred"].values)

recall = recall_score(Ytest, prob.loc[:,"y_pred"])

print("testing accuracy %f,recall is %f" % (score,recall))

print(datetime.datetime.fromtimestamp(time()-times).strftime("%M:%S:%f"))
