The train pipeline for point cloud processing

LoadPointsFromFile

Before entering train_pipeline, results contains the following data:

(Figure: contents of results before train_pipeline; image not available)

LoadPointsFromFile reads the point data from the raw point cloud file, converts it into an mmdet3d.core.points.lidar_points.LiDARPoints object, and adds a new key-value pair to results to hold the loaded points.

(Figure: results after LoadPointsFromFile; image not available)
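Below is a minimal sketch of the reading step. It assumes the raw file is a flat array of float32 values with load_dim values per point (5 for nuScenes lidar .bin files). load_points_from_bin is a hypothetical helper, not the mmdet3d API, and the real transform additionally wraps the array in LiDARPoints.

```python
import os
import tempfile

import numpy as np


def load_points_from_bin(path, load_dim=5, use_dim=(0, 1, 2, 3)):
    """Hypothetical helper: read a flat float32 .bin file into an (N, len(use_dim)) array."""
    raw = np.fromfile(path, dtype=np.float32)  # flat buffer of N * load_dim floats
    points = raw.reshape(-1, load_dim)         # one row per point
    return points[:, list(use_dim)]            # keep only the requested columns


# Write three fake points with 5 values each, then read them back.
fake = np.arange(15, dtype=np.float32).reshape(3, 5)
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.bin")
    fake.tofile(path)
    pts = load_points_from_bin(path)
print(pts.shape)  # (3, 4)
```

The reshape only works because every point has the same fixed number of values. A wrong load_dim therefore silently scrambles the columns rather than raising an error, unless the total count is not divisible by it.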

LoadPointsFromMultiSweeps

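This transform loads multi-sweep point cloud data. My understanding is that a single frame is too sparse, so several sweeps are merged in to increase the number of points: before this step there were 34752 points, and after it there were 267766. When remove_close is enabled, it also removes points that lie close to the origin.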
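The close-point removal can be sketched in numpy as follows. As far as I can tell, mmdet3d's _remove_close drops points whose |x| and |y| are both below a radius (default 1.0 m), which is a square around the sensor where returns likely come from the ego vehicle itself. remove_close below is an illustrative re-implementation under that assumption, not the library function.

```python
import numpy as np


def remove_close(points, radius=1.0):
    """Illustrative re-implementation: drop points inside the square |x| < radius and |y| < radius."""
    x_close = np.abs(points[:, 0]) < radius
    y_close = np.abs(points[:, 1]) < radius
    keep = ~(x_close & y_close)  # a point is removed only if it is close in both x and y
    return points[keep]


sweep = np.array([
    [0.2, -0.5, 0.0, 1.0, 0.0],   # inside the 1 m square -> removed
    [0.2, 3.0, 0.0, 1.0, 0.0],    # close in x but far in y -> kept
    [10.0, 10.0, 1.0, 1.0, 0.0],  # far away -> kept
], dtype=np.float32)
filtered = remove_close(sweep)
print(filtered.shape)  # (2, 5)
```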

Core code:

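```python
# Core of LoadPointsFromMultiSweeps.__call__ (excerpt)
points = results['points']
points.tensor[:, 4] = 0  # the current (key) frame has zero time lag
sweep_points_list = [points]
ts = results['timestamp']  # key-frame timestamp in seconds
# choices holds the indices of the sweeps selected for this sample (selection code omitted)
for idx in choices:
    sweep = results['sweeps'][idx]
    points_sweep = self._load_points(sweep['data_path'])
    points_sweep = np.copy(points_sweep).reshape(-1, self.load_dim)
    if self.remove_close:
        # Remove points close to the origin of the lidar frame
        # (the sweep's own frame, since this runs before the transform below)
        points_sweep = self._remove_close(points_sweep)
    sweep_ts = sweep['timestamp'] / 1e6  # microseconds -> seconds
    # My understanding: this aligns the sweep's point coordinates with the current frame
    points_sweep[:, :3] = points_sweep[:, :3] @ sweep[
        'sensor2lidar_rotation'].T
    points_sweep[:, :3] += sweep['sensor2lidar_translation']
    # Column 4 stores the time lag between the current frame and this sweep
    points_sweep[:, 4] = ts - sweep_ts
    points_sweep = points.new_point(points_sweep)
    sweep_points_list.append(points_sweep)

# Merge the current frame and all selected sweeps into one point cloud
points = points.cat(sweep_points_list)
points = points[:, self.use_dim]
results['points'] = points
```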

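The loop aligns each sweep's points with the current frame. sensor2lidar_rotation (R) and sensor2lidar_translation (t) transform a point from the sweep's lidar frame into the current key frame's lidar frame. In the nuScenes info files, they are precomputed by chaining the transforms sweep lidar → sweep ego → global → key-frame ego → key-frame lidar. Each point is one row of the array, so applying p' = R p + t to all points at once is written as points @ R.T + t.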

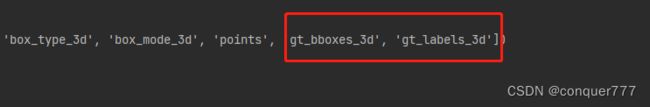
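In the loop, each sweep's xyz coordinates are transformed as points @ R.T + t, with R = sensor2lidar_rotation and t = sensor2lidar_translation. Because every point is a row vector, this should equal applying R p + t to each point p individually. The numpy check below confirms that and also fills the time-lag column. align_sweep is a hypothetical helper mirroring the loop body, and R, t, the timestamps and the points are made-up values, not real nuScenes calibration.

```python
import numpy as np


def align_sweep(points_sweep, rotation, translation, key_ts, sweep_ts):
    """Hypothetical helper: move sweep points into the key frame and store the time lag in column 4."""
    aligned = points_sweep.copy()
    aligned[:, :3] = aligned[:, :3] @ rotation.T + translation  # row-vector form of R @ p + t
    aligned[:, 4] = key_ts - sweep_ts  # time lag in seconds
    return aligned


theta = np.pi / 6
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta), np.cos(theta), 0.0],
              [0.0, 0.0, 1.0]])
t = np.array([1.0, -2.0, 0.5])
sweep = np.random.default_rng(0).normal(size=(4, 5))  # 4 fake points, 5 columns

aligned = align_sweep(sweep, R, t, key_ts=1.50, sweep_ts=1.45)

# Transform each point separately as a column vector and compare.
per_point = np.stack([R @ p[:3] + t for p in sweep])
print(np.allclose(aligned[:, :3], per_point))  # True
```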

LoadAnnotations3D

Reads the ground-truth box and label information from results (results['ann_info']) and adds two new key-value pairs to results: gt_bboxes_3d and gt_labels_3d.

```python
def _load_bboxes_3d(self, results):
    results['gt_bboxes_3d'] = results['ann_info']['gt_bboxes_3d']
    results['bbox3d_fields'].append('gt_bboxes_3d')
    return results

def _load_labels_3d(self, results):
    results['gt_labels_3d'] = results['ann_info']['gt_labels_3d']
    return results
```
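One detail worth noting is bbox3d_fields. It lists which keys hold 3D boxes, and later transforms such as GlobalRotScaleTrans loop over it to find every box they must transform. Below is a dict-only sketch: load_annotations_3d is a hypothetical stand-in for the two methods above, and plain lists stand in for LiDARInstance3DBoxes. It assumes bbox3d_fields starts as an empty list, which is how the dataset's pre_pipeline initializes it.

```python
def load_annotations_3d(results):
    """Hypothetical dict-only version of _load_bboxes_3d + _load_labels_3d."""
    results['gt_bboxes_3d'] = results['ann_info']['gt_bboxes_3d']
    results['bbox3d_fields'].append('gt_bboxes_3d')  # register the key for later box transforms
    results['gt_labels_3d'] = results['ann_info']['gt_labels_3d']
    return results


results = {
    'ann_info': {
        # one box with 7 values (center, size, yaw); a plain list stands in for LiDARInstance3DBoxes
        'gt_bboxes_3d': [[1.0, 2.0, 0.0, 4.0, 1.8, 1.5, 0.3]],
        'gt_labels_3d': [0],
    },
    'bbox3d_fields': [],  # starts empty before the pipeline runs
}
results = load_annotations_3d(results)

# A later transform only needs bbox3d_fields to find every box it should touch.
for key in results['bbox3d_fields']:
    print(key, results[key])
```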

GlobalRotScaleTrans

First, both gt_bbox and the points in the point cloud are rotated:

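```python
# GlobalRotScaleTrans._rot_bbox_points (excerpt)
rotation = self.rot_range
noise_rotation = np.random.uniform(rotation[0], rotation[1])
for key in input_dict['bbox3d_fields']:
    # Implemented by calling the rotate method of LiDARInstance3DBoxes in mmdet3d
    points, rot_mat_T = input_dict[key].rotate(
        noise_rotation, input_dict['points'])
    input_dict['points'] = points
    # Also record the rotation matrix in the data
    input_dict['pcd_rotation'] = rot_mat_T
```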

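```python
# Implementation of LiDARInstance3DBoxes.rotate (excerpt)
def rotate(self, angle, points=None):
    if not isinstance(angle, torch.Tensor):
        angle = self.tensor.new_tensor(angle)
    rot_sin = torch.sin(angle)
    rot_cos = torch.cos(angle)
    # Build the rotation matrix; it multiplies row vectors from the right (x @ rot_mat_T)
    rot_mat_T = self.tensor.new_tensor([[rot_cos, -rot_sin, 0],
                                        [rot_sin, rot_cos, 0],
                                        [0, 0, 1]])
    # Rotate the gt box centers
    self.tensor[:, :3] = self.tensor[:, :3] @ rot_mat_T
    # Rotate the gt headings (yaw)
    self.tensor[:, 6] += angle

    # Rotate the points of the point cloud by the same rotation
    if points is not None:
        if isinstance(points, torch.Tensor):
            points[:, :3] = points[:, :3] @ rot_mat_T
        elif isinstance(points, np.ndarray):
            rot_mat_T = rot_mat_T.numpy()
            points[:, :3] = np.dot(points[:, :3], rot_mat_T)
        elif isinstance(points, BasePoints):
            # clockwise
            points.rotate(-angle)
        else:
            raise ValueError
        return points, rot_mat_T
```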
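To check that boxes and points stay consistent, the numpy sketch below builds the same matrix as the excerpt. It applies that matrix both to a box center and to a point placed exactly at that center. rotate_boxes_and_points is a hypothetical helper, and the box is a plain (N, 7) array (center, size, yaw) rather than LiDARInstance3DBoxes. Because rows are multiplied on the right by this matrix, a positive angle turns them clockwise when viewed from above, which matches the "clockwise" comment in the excerpt.

```python
import numpy as np


def rotate_boxes_and_points(boxes, points, angle):
    """Hypothetical helper mirroring the excerpt: rotate box centers, yaw and points by one angle."""
    c, s = np.cos(angle), np.sin(angle)
    rot_mat_T = np.array([[c, -s, 0.0],
                          [s, c, 0.0],
                          [0.0, 0.0, 1.0]])
    boxes = boxes.copy()
    points = points.copy()
    boxes[:, :3] = boxes[:, :3] @ rot_mat_T    # rotate box centers (row vectors)
    boxes[:, 6] += angle                       # shift headings by the same angle
    points[:, :3] = points[:, :3] @ rot_mat_T  # rotate points with the same matrix
    return boxes, points, rot_mat_T


boxes = np.array([[1.0, 0.0, 0.0, 4.0, 1.8, 1.5, 0.0]])
points = np.array([[1.0, 0.0, 0.0, 0.7]])  # x, y, z, intensity; sits at the box center

new_boxes, new_points, rot_mat_T = rotate_boxes_and_points(boxes, points, np.pi / 2)
# The center moves from (1, 0, 0) to (0, -1, 0), i.e. clockwise seen from above.
print(np.allclose(new_points[0, :3], new_boxes[0, :3]))  # True: the point stayed at the center
```

Since the matrix is orthogonal, a rotation recorded in pcd_rotation can be undone by multiplying with its transpose.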

Next, gt_bbox and the points are scaled:

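```python
scale = input_dict['pcd_scale_factor']
points = input_dict['points']
# Multiply the coordinates of every point in the point cloud by scale
points.scale(scale)
# BasePoints.scale (base_points.py) is implemented as:
#     self.tensor[:, :3] *= scale_factor
input_dict['points'] = points

for key in input_dict['bbox3d_fields']:
    # Scale both the center coordinates and the size (length, width, height) of each gt box
    input_dict[key].scale(scale)
    # BaseInstance3DBoxes.scale (base_box3d.py) is implemented as:
    #     self.tensor[:, :6] *= scale_factor   # center and size
    #     self.tensor[:, 7:] *= scale_factor   # extra columns such as velocity; yaw (column 6) is skipped
```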

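Because every point is multiplied by scale_factor, every point's coordinates change, and the objects formed by those points change size accordingly. This can be understood as resizing the whole scene captured by the point cloud.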
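A numpy sketch of the same scaling on plain arrays follows. It assumes a 9-column box layout: center (3), size (3), yaw, then two velocity values as in nuScenes. scale_boxes_and_points is a hypothetical helper. The sketch shows that a point lying at a box center stays at the center after scaling, that velocity scales with the scene, and that the heading is unchanged.

```python
import numpy as np


def scale_boxes_and_points(boxes, points, scale_factor):
    """Hypothetical helper following BasePoints.scale and BaseInstance3DBoxes.scale."""
    boxes = boxes.copy()
    points = points.copy()
    points[:, :3] *= scale_factor  # point coordinates only; intensity is untouched
    boxes[:, :6] *= scale_factor   # box center (3) and size (3)
    boxes[:, 7:] *= scale_factor   # extra columns such as velocity; yaw (column 6) is skipped
    return boxes, points


# one box with velocity (vx, vy) appended: 9 columns in total
boxes = np.array([[2.0, 1.0, 0.5, 4.0, 1.8, 1.5, 0.3, 1.0, -0.5]])
points = np.array([[2.0, 1.0, 0.5, 0.7]])  # x, y, z, intensity; sits at the box center
new_boxes, new_points = scale_boxes_and_points(boxes, points, 1.05)
print(np.allclose(new_points[0, :3], new_boxes[0, :3]))  # True
```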

Finally, gt_bbox and the points are translated (trans stands for translation, i.e. a shift):

```python
translation_std = np.array(self.translation_std, dtype=np.float32)
# One random offset (x, y, z), drawn from a zero-mean normal distribution per axis
trans_factor = np.random.normal(scale=translation_std, size=3).T
input_dict['points'].translate(trans_factor)
# Record the translation vector
input_dict['pcd_trans'] = trans_factor
for key in input_dict['bbox3d_fields']:
    input_dict[key].translate(trans_factor)
```

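In essence, trans_factor is added to the center coordinates of every gt box and to the coordinates of every point, and an extra key-value pair (pcd_trans) records this translation vector.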
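A numpy sketch of the translation step on plain arrays follows. random_translate is a hypothetical helper, and it uses a seeded numpy Generator instead of the global np.random.normal so the demo is reproducible. The .T in the original has no effect on a 1-D array, so it is dropped here. The sketch also shows why recording the offset is useful: subtracting it restores the original coordinates.

```python
import numpy as np


def random_translate(boxes, points, translation_std, rng):
    """Hypothetical helper: shift box centers and points by one shared random offset."""
    translation_std = np.array(translation_std, dtype=np.float32)
    trans_factor = rng.normal(scale=translation_std, size=3)  # zero-mean offset per axis
    boxes = boxes.copy()
    points = points.copy()
    boxes[:, :3] += trans_factor   # only the centers move; size and yaw are unchanged
    points[:, :3] += trans_factor
    return boxes, points, trans_factor


rng = np.random.default_rng(42)
boxes = np.array([[2.0, 1.0, 0.5, 4.0, 1.8, 1.5, 0.3]])
points = np.array([[2.0, 1.0, 0.5, 0.7], [5.0, -3.0, 0.0, 0.2]])
new_boxes, new_points, pcd_trans = random_translate(boxes, points, [0.2, 0.2, 0.2], rng)

# Because the offset is recorded (like input_dict['pcd_trans']), the shift can be undone exactly.
restored = new_points[:, :3] - pcd_trans
print(np.allclose(restored, points[:, :3]))  # True
```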