MapReduce Framework Internals in Hadoop: OutputFormat Data Output, Implementation Classes, and a Hands-On Custom OutputFormat Example

文章目录

- 13.MapReduce框架原理

-

- 13.4 OutputFormat数据输出

-

- 13.4.1 OutputFormat接口实现类

- 13.4.2 自定义OutputFormat案例实操

-

- 13.4.2.1 需求

-

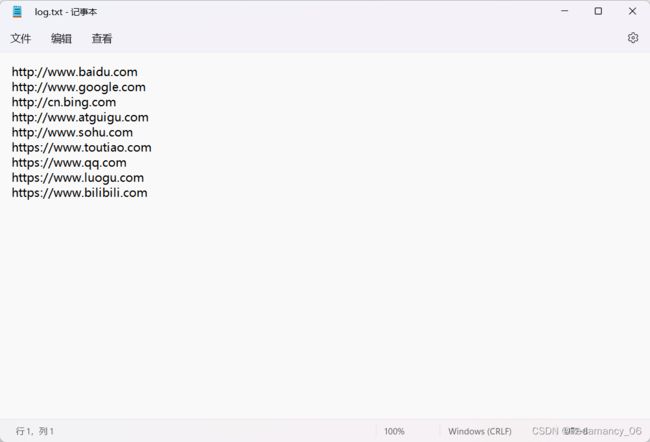

- 13.4.2.1.1 输入数据

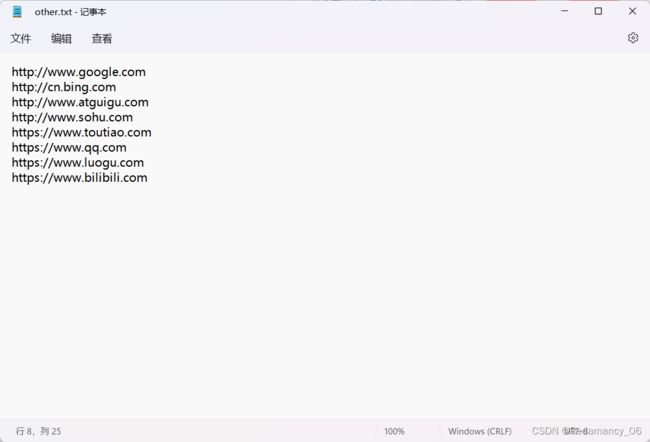

- 13.4.2.1.2 期望输出数据

- 13.4.2.2 需求分析

-

- 13.4.2.2.1 需求

- 13.4.2.2.2 输入数据

- 13.4.2.2.3 输出数据

- 13.4.2.2.4 自定义一个OutputFormat类

- 13.4.2.2.5 驱动类Driver

- 13.4.2.3 案例实操

-

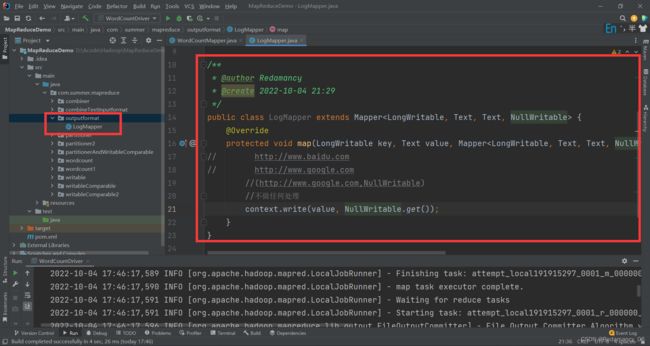

- 13.4.2.3.1 编写 LogMapper 类

- 13.4.2.3.2 编写 LogReducer 类

- 13.4.2.3.3 自定义一个 LogOutputFormat 类

- 13.4.2.3.4 编写 LogRecordWriter 类

- 13.4.2.3.5编写 LogDriver 类

13. MapReduce Framework Internals

13.4 OutputFormat Data Output

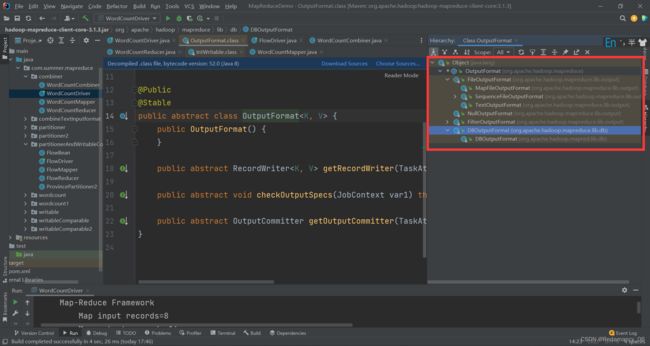

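13.4.1 OutputFormat Implementation Classes

OutputFormat is the base class for MapReduce output: every class that writes MapReduce output extends it. (In the org.apache.hadoop.mapreduce API used in this article it is an abstract class; in the older org.apache.hadoop.mapred API it is an interface.) The most common implementations are introduced below.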

1. OutputFormat implementation classes

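2. Default output format: TextOutputFormat

3. Custom OutputFormat

3.1 Use cases

For example, writing output to storage systems such as MySQL, HBase, or Elasticsearch.

3.2 Steps to define a custom OutputFormat

➢ Define a class that extends FileOutputFormat.

➢ Implement a RecordWriter, overriding write(), the method that actually outputs the data.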
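The two steps above can be pictured with a small plain-Java model that has no Hadoop dependency. It is only a sketch of the call order, assuming the usual task lifecycle: the framework asks the output format for one writer, calls write() once per record, and calls close() once at the end. The names OutputLifecycleSketch, MiniOutputFormat, MiniRecordWriter, runTask, and loggingFormat are hypothetical and exist only for this illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class OutputLifecycleSketch {
    // Hypothetical stand-in for a RecordWriter: receives records one at a time.
    interface MiniRecordWriter {
        void write(String key);
        void close();
    }

    // Hypothetical stand-in for an OutputFormat: its only job is to hand out a writer.
    interface MiniOutputFormat {
        MiniRecordWriter getRecordWriter();
    }

    // Mimics what a task does with its output format.
    static void runTask(MiniOutputFormat format, List<String> records) {
        MiniRecordWriter writer = format.getRecordWriter(); // once per task
        try {
            for (String record : records) {
                writer.write(record);                        // once per record
            }
        } finally {
            writer.close();                                  // once, at the end
        }
    }

    // A format whose writer appends every call it receives to the given list.
    static MiniOutputFormat loggingFormat(List<String> log) {
        return () -> new MiniRecordWriter() {
            @Override public void write(String key) { log.add("write:" + key); }
            @Override public void close() { log.add("close"); }
        };
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        runTask(loggingFormat(log), Arrays.asList("a", "b"));
        System.out.println(log); // prints [write:a, write:b, close]
    }
}
```

In this picture, step one of the list corresponds to supplying getRecordWriter(), and step two to supplying the writer whose write() decides where each record goes.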

13.4.2 Custom OutputFormat: Hands-On Example

13.4.2.1 Requirements

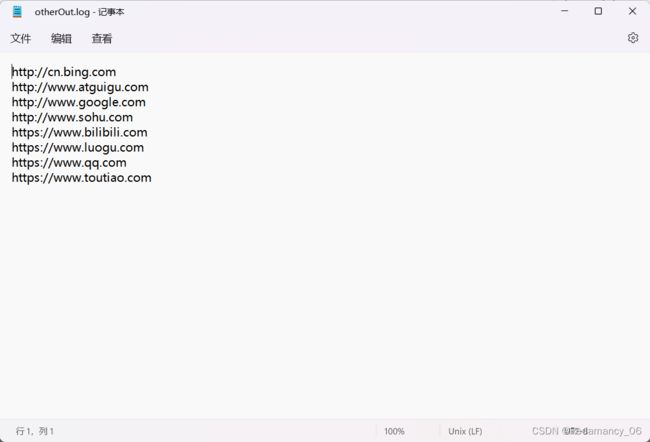

Filter the input log: write lines for sites containing baidu to e:/baidu.log, and lines for all other sites to e:/other.log.

13.4.2.1.1 Input Data

http://www.baidu.com

http://www.google.com

http://cn.bing.com

http://www.atguigu.com

http://www.sohu.com

https://www.toutiao.com

https://www.qq.com

https://www.luogu.com

https://www.bilibili.com

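13.4.2.1.2 Expected Output Data

13.4.2.2 Requirements Analysis

13.4.2.2.1 Requirement

Filter the input log: write lines for sites containing baidu to e:/baidu.log, and lines for all other sites to e:/other.log.

13.4.2.2.2 Input Data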

http://www.baidu.com

http://www.google.com

http://cn.bing.com

http://www.atguigu.com

http://www.sohu.com

http://www.sina.com

http://www.sin2a.com

http://www.sin2desa.com

http://www.sindsafa.com

13.4.2.2.3 Output Data

http://www.baidu.com

http://www.google.com

http://cn.bing.com

http://www.atguigu.com

http://www.sohu.com

https://www.toutiao.com

https://www.qq.com

https://www.luogu.com

https://www.bilibili.com

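13.4.2.2.4 Defining a Custom OutputFormat Class

Create a class LogRecordWriter that extends RecordWriter:

(a) Create output streams for the two files: baiduOut and otherOut.

(b) If a record contains baidu, write it to baiduOut; otherwise, write it to otherOut.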
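Steps (a) and (b) can be tried out without a cluster. The following is a minimal sketch that uses in-memory streams in place of the two files; TwoStreamRouter and its methods are hypothetical names for illustration, not part of Hadoop or of the case-study code.

```java
import java.io.ByteArrayOutputStream;
import java.io.OutputStream;
import java.io.PrintStream;

final class TwoStreamRouter {
    private final PrintStream baiduOut;
    private final PrintStream otherOut;

    // Step (a): hold one output stream per destination.
    TwoStreamRouter(OutputStream baiduTarget, OutputStream otherTarget) {
        this.baiduOut = new PrintStream(baiduTarget);
        this.otherOut = new PrintStream(otherTarget);
    }

    // Step (b): pick the stream by checking whether the line contains "baidu".
    void write(String line) {
        PrintStream target = line.contains("baidu") ? baiduOut : otherOut;
        target.print(line + "\n");
        target.flush();
    }

    // Release both streams once all lines have been written.
    void close() {
        baiduOut.close();
        otherOut.close();
    }

    public static void main(String[] args) {
        ByteArrayOutputStream baidu = new ByteArrayOutputStream();
        ByteArrayOutputStream other = new ByteArrayOutputStream();
        TwoStreamRouter router = new TwoStreamRouter(baidu, other);
        router.write("http://www.baidu.com");
        router.write("http://www.google.com");
        router.write("http://cn.bing.com");
        router.close();
        System.out.print("baidu stream:\n" + baidu);
        System.out.print("other stream:\n" + other);
    }
}
```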

13.4.2.2.5 The Driver Class

    // Register the custom output format component with the job
    job.setOutputFormatClass(LogOutputFormat.class);

13.4.2.3 Implementation

13.4.2.3.1 Writing the LogMapper Class

    package com.summer.mapreduce.outputformat;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    /**
     * @author Redamancy
     * @create 2022-10-04 21:29
     */
    public class LogMapper extends Mapper<LongWritable, Text, Text, NullWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Input lines look like:
            //   http://www.baidu.com
            //   http://www.google.com
            // Emit each line unchanged as the key, e.g. (http://www.google.com, NullWritable)
            context.write(value, NullWritable.get());
        }
    }

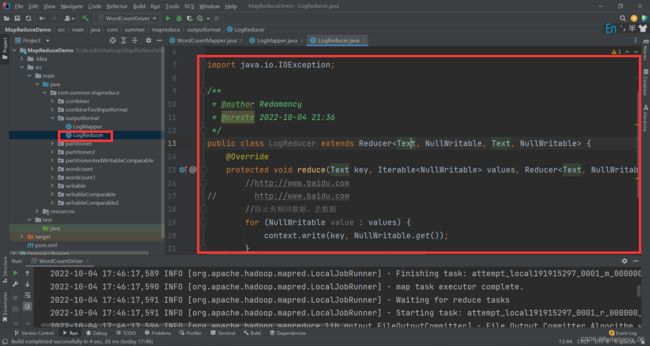

13.4.2.3.2 Writing the LogReducer Class

    package com.summer.mapreduce.outputformat;

    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    /**
     * @author Redamancy
     * @create 2022-10-04 21:36
     */
    public class LogReducer extends Reducer<Text, NullWritable, Text, NullWritable> {

        @Override
        protected void reduce(Text key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
            // The same line may occur more than once in the input, e.g.
            //   http://www.baidu.com
            //   http://www.baidu.com
            // Write the key once per value so that duplicate lines are not lost
            for (NullWritable value : values) {
                context.write(key, NullWritable.get());
            }
        }
    }
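Why the loop matters: identical lines arrive at the reducer as a single key with several values, so writing the key only once would collapse duplicates. The following plain-Java sketch imitates that grouping with a TreeMap (an assumption made for illustration; the real grouping is done by the framework's shuffle) and compares the two emission strategies. DuplicateLinesSketch and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class DuplicateLinesSketch {
    // Groups identical lines under one key and counts how often each occurred.
    static Map<String, Integer> group(List<String> lines) {
        Map<String, Integer> grouped = new TreeMap<>();
        for (String line : lines) {
            grouped.merge(line, 1, Integer::sum);
        }
        return grouped;
    }

    // Emits each key once, no matter how many values it has: duplicates vanish.
    static List<String> emitOncePerKey(List<String> lines) {
        return new ArrayList<>(group(lines).keySet());
    }

    // Emits each key once per value, as the loop in reduce() does.
    static List<String> emitOncePerValue(List<String> lines) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, Integer> entry : group(lines).entrySet()) {
            for (int i = 0; i < entry.getValue(); i++) {
                out.add(entry.getKey());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "http://www.baidu.com", "http://www.qq.com", "http://www.baidu.com");
        System.out.println(emitOncePerKey(lines));   // prints [http://www.baidu.com, http://www.qq.com]
        System.out.println(emitOncePerValue(lines)); // prints [http://www.baidu.com, http://www.baidu.com, http://www.qq.com]
    }
}
```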

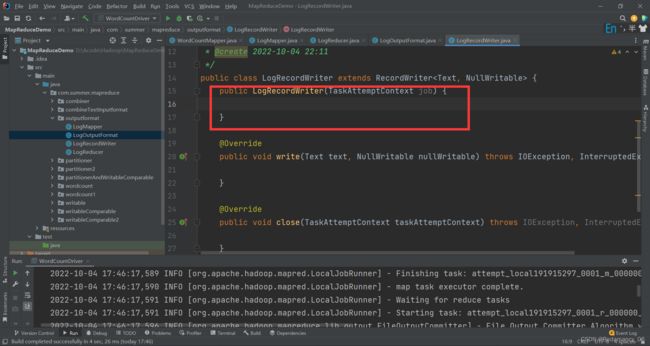

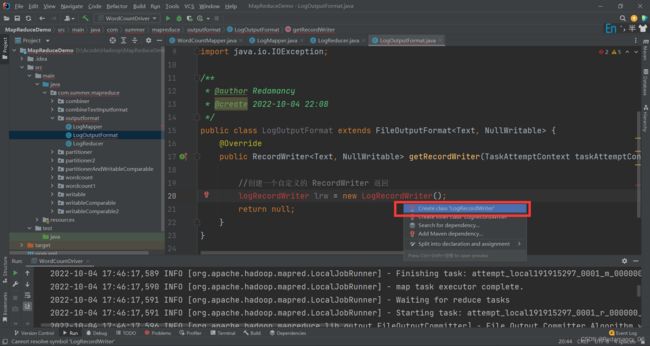

13.4.2.3.3 Defining the LogOutputFormat Class

Create a new Java file named LogOutputFormat. At this step the IDE reports an error because a class it refers to does not exist yet; create that class to resolve it.

The class is created successfully.

The writer has to be tied to the current task, so job is passed in here. This also produces an error at first; create whatever the IDE reports as missing.

    package com.summer.mapreduce.outputformat;

    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.RecordWriter;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    /**
     * @author Redamancy
     * @create 2022-10-04 22:08
     */
    public class LogOutputFormat extends FileOutputFormat<Text, NullWritable> {

        @Override
        public RecordWriter<Text, NullWritable> getRecordWriter(TaskAttemptContext job) throws IOException, InterruptedException {
            // Create the custom RecordWriter and return it
            return new LogRecordWriter(job);
        }
    }

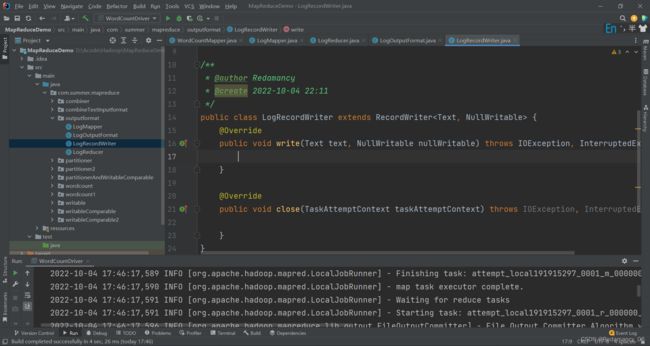

13.4.2.3.4 Writing the LogRecordWriter Class

    package com.summer.mapreduce.outputformat;

    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.RecordWriter;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;

    import java.io.IOException;

    /**
     * @author Redamancy
     * @create 2022-10-04 22:11
     */
    public class LogRecordWriter extends RecordWriter<Text, NullWritable> {

        private FSDataOutputStream otherOut;
        private FSDataOutputStream baiduOut;

        // Opens the two output streams. The IOException is propagated instead of
        // being printed and swallowed, so a failed open does not surface later
        // as a NullPointerException in write().
        public LogRecordWriter(TaskAttemptContext job) throws IOException {
            // Get the file system object
            FileSystem fs = FileSystem.get(job.getConfiguration());

            // Use it to create two output streams, one per target file
            baiduOut = fs.create(new Path("D:\\Acode\\Hadoop\\output\\baiduOut.log"));
            otherOut = fs.create(new Path("D:\\Acode\\Hadoop\\output\\otherOut.log"));
        }

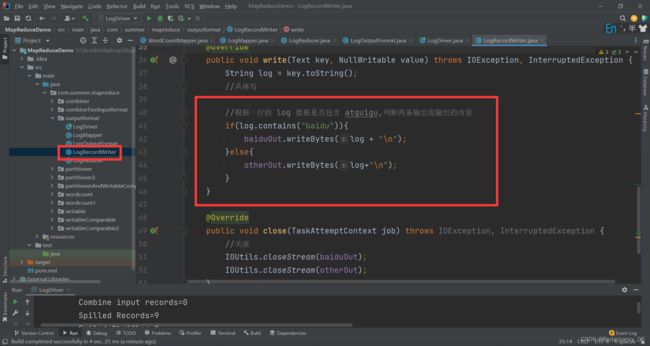

        @Override
        public void write(Text key, NullWritable value) throws IOException, InterruptedException {
            String log = key.toString();

            // Choose the output stream by whether the log line contains "baidu"
            if (log.contains("baidu")) {
                baiduOut.writeBytes(log + "\n");
            } else {
                otherOut.writeBytes(log + "\n");
            }
        }

        @Override
        public void close(TaskAttemptContext job) throws IOException, InterruptedException {
            // Close both streams
            IOUtils.closeStream(baiduOut);
            IOUtils.closeStream(otherOut);
        }
    }
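One caveat about writeBytes, sketched under the assumption that log lines might someday contain non-ASCII text: FSDataOutputStream extends java.io.DataOutputStream, whose writeBytes(String) writes only the low-order byte of each character. That is lossless for the ASCII URLs in this example but not for, say, Chinese characters. The class WriteBytesSketch below is hypothetical and uses an in-memory stream to show the difference from writing explicit UTF-8 bytes.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

final class WriteBytesSketch {
    // Encodes a line with DataOutputStream.writeBytes, which keeps only the
    // low-order byte of every char.
    static byte[] viaWriteBytes(String line) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeBytes(line);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Encodes a line by converting it to UTF-8 bytes first, then writing them.
    static byte[] viaUtf8(String line) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.write(line.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) {
        String nonAscii = "\u65e5\u5fd7"; // two Chinese characters
        System.out.println(viaWriteBytes(nonAscii).length); // prints 2
        System.out.println(viaUtf8(nonAscii).length);       // prints 6
    }
}
```

If non-ASCII lines are expected, replacing writeBytes(log + "\n") with write((log + "\n").getBytes(StandardCharsets.UTF_8)) would be one way to avoid the loss.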

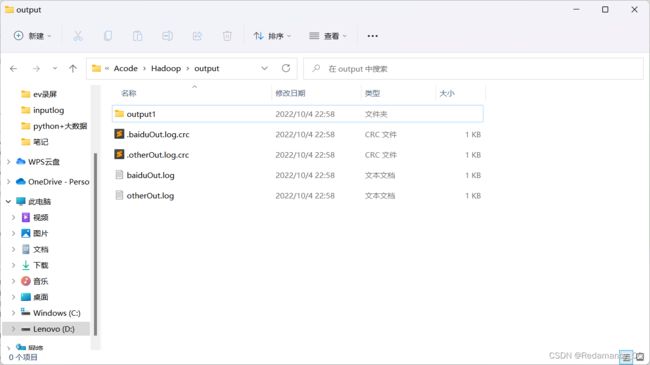

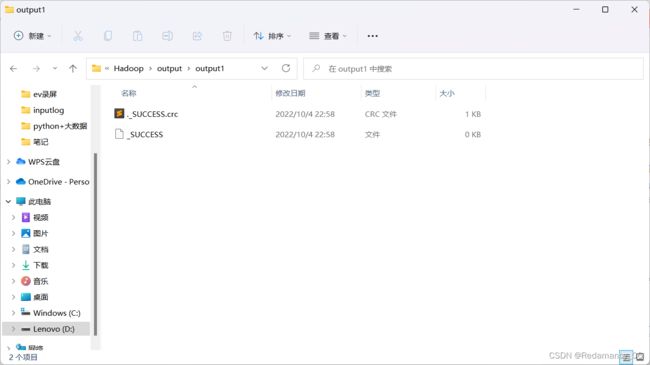

13.4.2.3.5 Writing the LogDriver Class

    package com.summer.mapreduce.outputformat;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    /**
     * @author Redamancy
     * @create 2022-10-04 22:41
     */
    public class LogDriver {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);

            job.setJarByClass(LogDriver.class);
            job.setMapperClass(LogMapper.class);
            job.setReducerClass(LogReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(NullWritable.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(NullWritable.class);

            // Register the custom OutputFormat
            job.setOutputFormatClass(LogOutputFormat.class);

            FileInputFormat.setInputPaths(job, new Path("D:\\Acode\\Hadoop\\input\\inputlog"));

            // Although the OutputFormat is custom, it extends FileOutputFormat,
            // which still writes a _SUCCESS marker file, so an output directory
            // must be specified here as well
            FileOutputFormat.setOutputPath(job, new Path("D:\\Acode\\Hadoop\\output\\output1"));

            boolean b = job.waitForCompletion(true);
            System.exit(b ? 0 : 1);
        }
    }