03 - Hands-On with Typical ZooKeeper Use Cases

1. ZooKeeper use case 1: distributed locks

1.1 Unfair lock

1.2 Fair lock

1) How it works

2) Avoiding ghost nodes

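A ghost node arises when a client creates a node successfully but never receives the server's response, so the client does not know that the node already exists. The client then tries to create a node again, and the node left over from the first attempt becomes a ghost node.

Solution: with ZooKeeper, the way to avoid this is to prefix the node name with a UUID at creation time. When the client goes to create the node, it first looks for a node carrying this UUID and does not create another one if it finds it.

Solution in Curator: use protection mode (withProtection()) to avoid ghost nodes.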

org.apache.curator.framework.recipes.locks.StandardLockInternalsDriver#createsTheLock

@Override

public String createsTheLock(CuratorFramework client, String path, byte[] lockNodeBytes) throws Exception

{

String ourPath;

if ( lockNodeBytes != null )

{

ourPath = client.create().creatingParentContainersIfNeeded().withProtection().withMode(CreateMode.EPHEMERAL_SEQUENTIAL).forPath(path, lockNodeBytes);

}

else

{

ourPath = client.create().creatingParentContainersIfNeeded().withProtection().withMode(CreateMode.EPHEMERAL_SEQUENTIAL).forPath(path);

}

return ourPath;

}

org.apache.curator.framework.imps.CreateBuilderImpl#forPath(java.lang.String, byte[])

org.apache.curator.framework.imps.CreateBuilderImpl#protectedPathInForeground

org.apache.curator.framework.imps.CreateBuilderImpl#pathInForeground

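On failure the call is retried: org.apache.curator.RetryLoop#callWithRetry

The lock node name is prefixed with a UUID. If the server does not respond, the client retries and first checks whether a node with that prefix already exists, which prevents ghost nodes from being created: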

org.apache.curator.framework.imps.CreateBuilderImpl#findProtectedNodeInForeground

org.apache.curator.framework.imps.CreateBuilderImpl#findNode

static String findNode(final List<String> children, final String path, final String protectedId)

{

final String protectedPrefix = ProtectedUtils.getProtectedPrefix(protectedId);

String foundNode = Iterables.find

(

children,

new Predicate<String>()

{

@Override

public boolean apply(String node)

{

return node.startsWith(protectedPrefix);

}

},

null

);

if ( foundNode != null )

{

foundNode = ZKPaths.makePath(path, foundNode);

}

return foundNode;

}

Inspect the nodes in ZooKeeper to see the prefix:

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 15] ls /cachePreHeat_leader

[_c_8033b1b4-1a1e-479b-97e7-d63eafa858ac-lock-0000000001, _c_f6c7522b-e184-484e-b480-2bc54be365f5-lock-0000000002]
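The retry-with-prefix behavior described in this section can be tried out without a real ZooKeeper. The following is only a minimal in-memory sketch, not Curator's implementation: `FakeServer`, `createProtected`, and the `dropNextResponse` flag are hypothetical names introduced for illustration, and the node naming merely imitates the `_c_<uuid>-lock-<sequence>` pattern shown above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;

/** In-memory sketch of protected creation; all names here are hypothetical, not Curator APIs. */
final class ProtectedCreateSketch {

    /** Stand-in for a ZooKeeper parent node that hands out sequence numbers. */
    static final class FakeServer {
        final List<String> children = new ArrayList<>();
        private int nextSeq = 0;
        boolean dropNextResponse = false; // simulates a reply lost on the network

        /** Creates a sequential child; returns null when the response is "lost". */
        String createSequential(String namePrefix) {
            String name = namePrefix + String.format("%010d", nextSeq++);
            children.add(name); // the node exists on the server either way
            if (dropNextResponse) {
                dropNextResponse = false;
                return null;
            }
            return name;
        }
    }

    /** Creates a node tagged with protectedId, searching for an earlier copy before each retry. */
    static String createProtected(FakeServer server, String protectedId, int maxAttempts) {
        String prefix = "_c_" + protectedId + "-";
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            if (attempt > 0) {
                // On retry, look for a node we may have created without hearing back.
                for (String child : server.children) {
                    if (child.startsWith(prefix)) {
                        return child;
                    }
                }
            }
            String created = server.createSequential(prefix + "lock-");
            if (created != null) {
                return created;
            }
        }
        throw new IllegalStateException("could not create node after " + maxAttempts + " attempts");
    }

    public static void main(String[] args) {
        FakeServer server = new FakeServer();
        server.dropNextResponse = true; // the first create succeeds but its reply is lost
        String node = createProtected(server, UUID.randomUUID().toString(), 3);
        System.out.println("our node: " + node + ", children on server: " + server.children.size());
    }
}
```

Because the retry finds the node by its prefix, the simulated server ends up with a single child even though the first response was lost. Without the prefix search, the retry would create a second node and the first would be a ghost.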

3) Demo

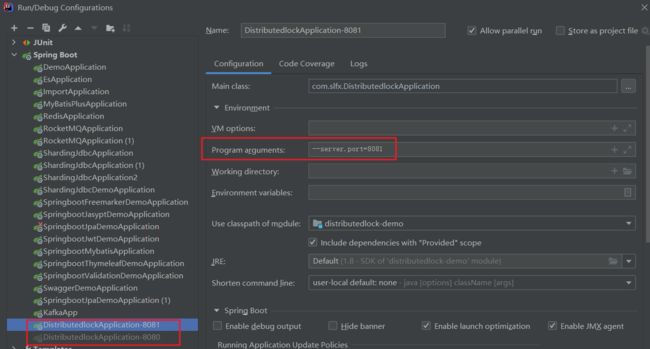

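Goal: use a clustered inventory-deduction service to show what a distributed lock does.

a) Preparation

nginx path (nginx load-balances requests across the service instances):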

F:\webService\nginx-1.13.12-swagger

nginx.conf

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

upstream zookeeperlock{

server localhost:8080;

server localhost:8081;

}

server {

listen 80;

server_name localhost;

location / {

root html;

index index.html index.htm;

proxy_pass http://zookeeperlock/;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

}

@Transactional

public void reduceStock(Integer id){

// 1. Read the current stock

Product product = productMapper.getProduct(id);

// Simulate time-consuming business processing

sleep(500); // other business logic

if (product.getStock() <=0 ) {

throw new RuntimeException("out of stock");

}

// 2. Deduct stock

int i = productMapper.deductStock(id);

if (i==1){

Order order = new Order();

order.setUserId(UUID.randomUUID().toString());

order.setPid(id);

orderMapper.insert(order);

}else{

throw new RuntimeException("deduct stock fail, retry.");

}

}

InterProcessMutex lock = new InterProcessMutex(client, lockPath);

if ( lock.acquire(maxWait, waitUnit) )

{

try

{

// do some work inside of the critical section here

}

finally

{

lock.release();

}

}

Change the code to use a ZooKeeper-based distributed lock.

Add the dependencies:

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-recipes</artifactId>

<version>5.0.0</version>

<exclusions>

<exclusion>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>3.5.8</version>

</dependency>

Configuration:

@Configuration

public class CuratorCfg {

@Bean(initMethod = "start")

public CuratorFramework curatorFramework(){

RetryPolicy retryPolicy = new ExponentialBackoffRetry(1000, 3);

CuratorFramework client = CuratorFrameworkFactory.newClient("192.168.75.131:2181,192.168.75.131:2182,192.168.75.131:2183,192.168.75.131:2184", retryPolicy);

return client;

}

}

Implementation:

@GetMapping("/stock/deduct")

public Object reduceStock(Integer id) throws Exception {

InterProcessMutex interProcessMutex = new InterProcessMutex(curatorFramework, "/product_" + id);

// Acquire outside the try block: if acquire() throws, the lock is not held and must not be released

interProcessMutex.acquire();

try {

orderService.reduceStock(id);

} finally {

// Always release a lock that was acquired, even if the business logic throws

interProcessMutex.release();

}

return "ok:" + port;

}

Retest: the data is now correct.

Inspect the node information in ZooKeeper:

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 7] ls /product_1

[_c_0b7cee67-16d0-45a5-b1b5-a03504dafbb5-lock-0000000028, _c_157f2d69-705d-4ce5-9cdf-68bdf38a2a68-lock-0000000045, _c_1f2eeb6e-fc46-42fa-98ec-f9108d89bf46-lock-0000000041, _c_243442b6-afe8-4d4f-8edf-e1448b26444e-lock-0000000035, _c_262f2603-2417-4961-8180-bd30f7978b30-lock-0000000029, _c_272b6137-13a1-4b8f-af80-575355202519-lock-0000000049, _c_2a9a2f79-48e2-40b3-be5b-57301fcba73b-lock-0000000034, _c_2fc8f3e5-aba8-497c-b4a1-1712b61c0d45-lock-0000000037, _c_3a8c89dd-ecb4-4f11-aeb6-8ddd76420537-lock-0000000047, _c_3b513bd2-0f68-4a9e-91b1-9a46d941675a-lock-0000000040, _c_3e8aa208-8421-417d-918f-150716c76b90-lock-0000000025, _c_46cf8f3d-3cee-40b4-b72b-b7f14e5b5112-lock-0000000036, _c_4fee07d1-03d3-4922-b8a2-f5887a8f081c-lock-0000000046, _c_6999941b-89ed-4f9e-a90c-4f02a335ed43-lock-0000000038, _c_75b2f7f4-c7d9-4ecb-a172-cc625571c3e7-lock-0000000048, _c_8e7ddaae-3ebb-48bf-9c21-e3a5a3e13e3f-lock-0000000039, _c_a5054002-8c20-4f37-9ed5-682005db1703-lock-0000000030, _c_a7db80ba-6786-4644-b219-9a28f7004aa9-lock-0000000031, _c_d0c3aa6c-e2db-4efe-87dd-87d7232057d1-lock-0000000033, _c_d75bf5b6-74ad-4b56-a0f7-540edd31ced5-lock-0000000042, _c_d76942c3-1955-477f-a845-d7c79627f2e8-lock-0000000027, _c_d90fbb3f-733b-4119-bff7-3f59a236ae07-lock-0000000026, _c_d9fffe53-99a5-44eb-b32f-93f16092205d-lock-0000000043, _c_db0355fd-06ab-46b3-8438-fa58e0fa068f-lock-0000000044, _c_eee8daf9-3471-46cb-a45a-2ebe8c56db27-lock-0000000032]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 8] ls /product_1

[_c_157f2d69-705d-4ce5-9cdf-68bdf38a2a68-lock-0000000045, _c_1f2eeb6e-fc46-42fa-98ec-f9108d89bf46-lock-0000000041, _c_243442b6-afe8-4d4f-8edf-e1448b26444e-lock-0000000035, _c_272b6137-13a1-4b8f-af80-575355202519-lock-0000000049, _c_2a9a2f79-48e2-40b3-be5b-57301fcba73b-lock-0000000034, _c_2fc8f3e5-aba8-497c-b4a1-1712b61c0d45-lock-0000000037, _c_3a8c89dd-ecb4-4f11-aeb6-8ddd76420537-lock-0000000047, _c_3b513bd2-0f68-4a9e-91b1-9a46d941675a-lock-0000000040, _c_46cf8f3d-3cee-40b4-b72b-b7f14e5b5112-lock-0000000036, _c_4fee07d1-03d3-4922-b8a2-f5887a8f081c-lock-0000000046, _c_6999941b-89ed-4f9e-a90c-4f02a335ed43-lock-0000000038, _c_75b2f7f4-c7d9-4ecb-a172-cc625571c3e7-lock-0000000048, _c_8e7ddaae-3ebb-48bf-9c21-e3a5a3e13e3f-lock-0000000039, _c_a5054002-8c20-4f37-9ed5-682005db1703-lock-0000000030, _c_a7db80ba-6786-4644-b219-9a28f7004aa9-lock-0000000031, _c_d0c3aa6c-e2db-4efe-87dd-87d7232057d1-lock-0000000033, _c_d75bf5b6-74ad-4b56-a0f7-540edd31ced5-lock-0000000042, _c_d9fffe53-99a5-44eb-b32f-93f16092205d-lock-0000000043, _c_db0355fd-06ab-46b3-8438-fa58e0fa068f-lock-0000000044, _c_eee8daf9-3471-46cb-a45a-2ebe8c56db27-lock-0000000032]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 9] ls /product_1

[_c_157f2d69-705d-4ce5-9cdf-68bdf38a2a68-lock-0000000045, _c_272b6137-13a1-4b8f-af80-575355202519-lock-0000000049, _c_3a8c89dd-ecb4-4f11-aeb6-8ddd76420537-lock-0000000047, _c_4fee07d1-03d3-4922-b8a2-f5887a8f081c-lock-0000000046, _c_75b2f7f4-c7d9-4ecb-a172-cc625571c3e7-lock-0000000048]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 10] ls /product_1

[_c_272b6137-13a1-4b8f-af80-575355202519-lock-0000000049, _c_3a8c89dd-ecb4-4f11-aeb6-8ddd76420537-lock-0000000047, _c_75b2f7f4-c7d9-4ecb-a172-cc625571c3e7-lock-0000000048]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 11] ls /product_1

[_c_272b6137-13a1-4b8f-af80-575355202519-lock-0000000049, _c_75b2f7f4-c7d9-4ecb-a172-cc625571c3e7-lock-0000000048]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 12] ls /product_1

[_c_272b6137-13a1-4b8f-af80-575355202519-lock-0000000049]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 13] ls /product_1

[]

4) How Curator acquires the lock

Acquiring the lock: org.apache.curator.framework.recipes.locks.InterProcessMutex#acquire() calls org.apache.curator.framework.recipes.locks.InterProcessMutex#internalLock

private boolean internalLock(long time, TimeUnit unit) throws Exception

{

/*

Note on concurrency: a given lockData instance

can be only acted on by a single thread so locking isn't necessary

*/

Thread currentThread = Thread.currentThread();

LockData lockData = threadData.get(currentThread);

if ( lockData != null )

{

// re-entering: the lock is reentrant

lockData.lockCount.incrementAndGet();

return true;

}

String lockPath = internals.attemptLock(time, unit, getLockNodeBytes());

if ( lockPath != null )

{

LockData newLockData = new LockData(currentThread, lockPath);

threadData.put(currentThread, newLockData);

return true;

}

return false;

}

org.apache.curator.framework.recipes.locks.LockInternals#attemptLock

String attemptLock(long time, TimeUnit unit, byte[] lockNodeBytes) throws Exception

{

final long startMillis = System.currentTimeMillis();

final Long millisToWait = (unit != null) ? unit.toMillis(time) : null;

final byte[] localLockNodeBytes = (revocable.get() != null) ? new byte[0] : lockNodeBytes;

int retryCount = 0;

String ourPath = null;

boolean hasTheLock = false;

boolean isDone = false;

while ( !isDone )

{

isDone = true;

try

{

ourPath = driver.createsTheLock(client, path, localLockNodeBytes);

hasTheLock = internalLockLoop(startMillis, millisToWait, ourPath);

}

catch ( KeeperException.NoNodeException e )

{

// gets thrown by StandardLockInternalsDriver when it can't find the lock node

// this can happen when the session expires, etc. So, if the retry allows, just try it all again

if ( client.getZookeeperClient().getRetryPolicy().allowRetry(retryCount++, System.currentTimeMillis() - startMillis, RetryLoop.getDefaultRetrySleeper()) )

{

isDone = false;

}

else

{

throw e;

}

}

}

if ( hasTheLock )

{

return ourPath;

}

return null;

}

org.apache.curator.framework.recipes.locks.StandardLockInternalsDriver#createsTheLock

@Override

public String createsTheLock(CuratorFramework client, String path, byte[] lockNodeBytes) throws Exception

{

String ourPath;

if ( lockNodeBytes != null )

{

ourPath = client.create().creatingParentContainersIfNeeded().withProtection().withMode(CreateMode.EPHEMERAL_SEQUENTIAL).forPath(path, lockNodeBytes);

}

else

{

ourPath = client.create().creatingParentContainersIfNeeded().withProtection().withMode(CreateMode.EPHEMERAL_SEQUENTIAL).forPath(path);

}

return ourPath;

}

org.apache.curator.framework.recipes.locks.LockInternals#internalLockLoop

private boolean internalLockLoop(long startMillis, Long millisToWait, String ourPath) throws Exception

{

boolean haveTheLock = false;

boolean doDelete = false;

try

{

if ( revocable.get() != null )

{

client.getData().usingWatcher(revocableWatcher).forPath(ourPath);

}

while ( (client.getState() == CuratorFrameworkState.STARTED) && !haveTheLock )

{

List<String> children = getSortedChildren();

String sequenceNodeName = ourPath.substring(basePath.length() + 1); // +1 to include the slash

PredicateResults predicateResults = driver.getsTheLock(client, children, sequenceNodeName, maxLeases);

if ( predicateResults.getsTheLock() )

{

haveTheLock = true;

}

else

{

String previousSequencePath = basePath + "/" + predicateResults.getPathToWatch();

synchronized(this)

{

try

{

// use getData() instead of exists() to avoid leaving unneeded watchers which is a type of resource leak

client.getData().usingWatcher(watcher).forPath(previousSequencePath);

if ( millisToWait != null )

{

millisToWait -= (System.currentTimeMillis() - startMillis);

startMillis = System.currentTimeMillis();

if ( millisToWait <= 0 )

{

doDelete = true; // timed out - delete our node

break;

}

wait(millisToWait);

}

else

{

wait();

}

}

catch ( KeeperException.NoNodeException e )

{

// it has been deleted (i.e. lock released). Try to acquire again

}

}

}

}

}

catch ( Exception e )

{

ThreadUtils.checkInterrupted(e);

doDelete = true;

throw e;

}

finally

{

if ( doDelete )

{

deleteOurPath(ourPath);

}

}

return haveTheLock;

}

org.apache.curator.framework.recipes.locks.StandardLockInternalsDriver#getsTheLock

@Override

public PredicateResults getsTheLock(CuratorFramework client, List<String> children, String sequenceNodeName, int maxLeases) throws Exception

{

int ourIndex = children.indexOf(sequenceNodeName);

validateOurIndex(sequenceNodeName, ourIndex);

boolean getsTheLock = ourIndex < maxLeases;

String pathToWatch = getsTheLock ? null : children.get(ourIndex - maxLeases);

return new PredicateResults(pathToWatch, getsTheLock);

}
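The rule in getsTheLock (the node with the lowest sequence number holds the lock, and every other node watches the one just ahead of it) can be sketched in plain Java. This is a simplified illustration under stated assumptions, not Curator's code: `FairLockSketch`, `sequenceOf`, and `nodeToWatch` are hypothetical names, and the sequence number is assumed to be the suffix after the last `-` in the node name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Sketch of "lowest sequence wins, others watch their predecessor". Names are hypothetical. */
final class FairLockSketch {

    /** Extracts the numeric suffix that ZooKeeper appends to sequential nodes. */
    static long sequenceOf(String nodeName) {
        return Long.parseLong(nodeName.substring(nodeName.lastIndexOf('-') + 1));
    }

    /**
     * Returns null if ourNode holds the lock, otherwise the name of the node to watch.
     * With maxLeases = 1 this is the node immediately ahead of ours.
     */
    static String nodeToWatch(List<String> children, String ourNode, int maxLeases) {
        List<String> sorted = new ArrayList<>(children);
        sorted.sort(Comparator.comparingLong(FairLockSketch::sequenceOf));
        int ourIndex = sorted.indexOf(ourNode);
        if (ourIndex < 0) {
            throw new IllegalStateException("our node is gone: " + ourNode);
        }
        return (ourIndex < maxLeases) ? null : sorted.get(ourIndex - maxLeases);
    }

    public static void main(String[] args) {
        // The child list is unordered, just as ZooKeeper returns it.
        List<String> children = Arrays.asList(
                "_c_b-lock-0000000002", "_c_c-lock-0000000001", "_c_a-lock-0000000003");
        for (String child : children) {
            String watch = nodeToWatch(children, child, 1);
            System.out.println(child + (watch == null ? " holds the lock" : " watches " + watch));
        }
    }
}
```

Watching only the immediate predecessor is what keeps the lock fair and avoids a thundering herd: when a node is deleted, only the one waiter behind it is woken up.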

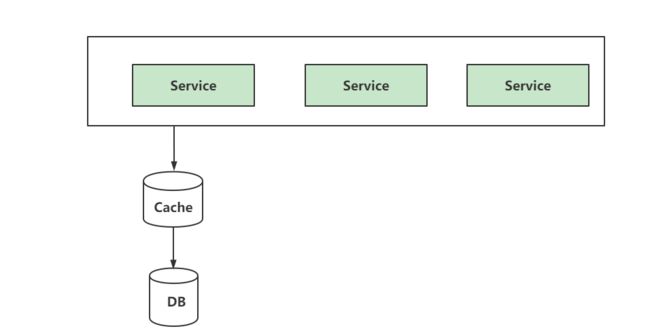

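1.3 Shared lock

Both locking approaches above are mutual-exclusion locks: only one request can hold the lock at a time. Under heavy concurrency, performance drops sharply because every request has to take the lock. But does every request really need it? No. If the data is not being modified, reads do not need to exclude each other.

Suppose, however, that a write request arrives while a read is still in progress. The write must not proceed while someone is reading, or the data would be incorrect; it can run only after all earlier read locks have been released. Read requests therefore need a marker (a read lock) that tells writers the data cannot be modified right now. Likewise, if a write is already in progress, a second write must not run, because that would also leave the data inconsistent. So every write request takes a write lock, which prevents concurrent writes to the shared data.

1) Scenarios where reads and writes become inconsistent

2) How ZooKeeper implements shared locks

3) Read-write locks with Curator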

org.apache.curator.framework.recipes.locks.InterProcessReadWriteLock

public class InterProcessReadWriteLock

{

private final InterProcessMutex readMutex;

private final InterProcessMutex writeMutex;

// must be the same length. LockInternals depends on it

private static final String READ_LOCK_NAME = "__READ__";

private static final String WRITE_LOCK_NAME = "__WRIT__";

public InterProcessReadWriteLock(CuratorFramework client, String basePath, byte[] lockData)

{

lockData = (lockData == null) ? null : Arrays.copyOf(lockData, lockData.length);

writeMutex = new InternalInterProcessMutex

(

client,

basePath,

WRITE_LOCK_NAME,

lockData,

1,

new SortingLockInternalsDriver()

{

@Override

public PredicateResults getsTheLock(CuratorFramework client, List<String> children, String sequenceNodeName, int maxLeases) throws Exception

{

return super.getsTheLock(client, children, sequenceNodeName, maxLeases);

}

}

);

readMutex = new InternalInterProcessMutex

(

client,

basePath,

READ_LOCK_NAME,

lockData,

Integer.MAX_VALUE,

new SortingLockInternalsDriver()

{

@Override

public PredicateResults getsTheLock(CuratorFramework client, List<String> children, String sequenceNodeName, int maxLeases) throws Exception

{

return readLockPredicate(children, sequenceNodeName);

}

}

);

}

The write lock is acquired with the same logic as the distributed lock described earlier.

The read lock uses different logic: org.apache.curator.framework.recipes.locks.InterProcessReadWriteLock#readLockPredicate

private PredicateResults readLockPredicate(List<String> children, String sequenceNodeName) throws Exception

{

if ( writeMutex.isOwnedByCurrentThread() )

{

return new PredicateResults(null, true);

}

int index = 0;

int firstWriteIndex = Integer.MAX_VALUE;

int ourIndex = -1;

for ( String node : children )

{

if ( node.contains(WRITE_LOCK_NAME) )

{

firstWriteIndex = Math.min(index, firstWriteIndex);

}

else if ( node.startsWith(sequenceNodeName) )

{

ourIndex = index;

break;

}

++index;

}

StandardLockInternalsDriver.validateOurIndex(sequenceNodeName, ourIndex);

boolean getsTheLock = (ourIndex < firstWriteIndex);

String pathToWatch = getsTheLock ? null : children.get(firstWriteIndex);

return new PredicateResults(pathToWatch, getsTheLock);

}
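To make the read-lock rule concrete, here is a small sketch of the same decision in plain Java: a reader gets the lock unless a write node sorts ahead of it, in which case it waits on the first such write node. It is an approximation for illustration, not the Curator source; `ReadLockSketch` and `writerToWaitFor` are hypothetical names, the reentrancy check for a thread that already owns the write lock is left out, and the child list is assumed to be sorted by sequence number already.

```java
import java.util.Arrays;
import java.util.List;

/** Sketch of the read-lock rule: a reader waits only if a write node sorts ahead of it. */
final class ReadLockSketch {
    static final String WRITE_MARK = "__WRIT__";

    /** children must already be sorted by sequence. Returns null when the read lock is granted. */
    static String writerToWaitFor(List<String> children, String ourNode) {
        int firstWriteIndex = -1;
        for (int i = 0; i < children.size(); i++) {
            String node = children.get(i);
            if (node.equals(ourNode)) {
                // Reached our own node: we are blocked only if a writer was seen earlier.
                return (firstWriteIndex < 0) ? null : children.get(firstWriteIndex);
            }
            if (firstWriteIndex < 0 && node.contains(WRITE_MARK)) {
                firstWriteIndex = i;
            }
        }
        throw new IllegalStateException("our node is gone: " + ourNode);
    }

    public static void main(String[] args) {
        List<String> children = Arrays.asList(
                "_c_a-__READ__0000000001",
                "_c_b-__WRIT__0000000002",
                "_c_c-__READ__0000000003");
        for (String child : children) {
            if (child.contains(WRITE_MARK)) {
                continue; // writers follow the ordinary mutex rule instead
            }
            String writer = writerToWaitFor(children, child);
            System.out.println(child + (writer == null ? " can read now" : " waits for " + writer));
        }
    }
}
```

In this example the first reader proceeds immediately, while the reader queued behind the writer has to wait for it. Readers with no writer ahead of them never block each other.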

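1.4 Differences between Redis distributed locks and ZooKeeper distributed locks

- Reliability: with Redis in cluster or sentinel mode, a lock can be lost when the master node goes down, and the same lock may then be created on another node.

- Performance: ZooKeeper has to write to more than half of its servers, so its performance is somewhat lower.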

2. ZooKeeper use case 2: leader election

2.1 Demo

LeaderSelectorListener listener = new LeaderSelectorListenerAdapter()

{

public void takeLeadership(CuratorFramework client) throws Exception

{

// this callback will get called when you are the leader

// do whatever leader work you need to and only exit

// this method when you want to relinquish leadership

}

};

LeaderSelector selector = new LeaderSelector(client, path, listener);

selector.autoRequeue(); // not required, but this is behavior that you will probably expect

selector.start();

Java code:

package com.slfx.zookeeper.leaderselector;

import org.apache.curator.RetryPolicy;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.CuratorFrameworkFactory;

import org.apache.curator.framework.recipes.leader.LeaderSelector;

import org.apache.curator.framework.recipes.leader.LeaderSelectorListener;

import org.apache.curator.framework.recipes.leader.LeaderSelectorListenerAdapter;

import org.apache.curator.retry.ExponentialBackoffRetry;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.TimeUnit;

public class LeaderSelectorDemo {

private static final String CONNECT_STR = "192.168.75.131:2181,192.168.75.131:2182,192.168.75.131:2183,192.168.75.131:2184";

private static RetryPolicy retryPolicy = new ExponentialBackoffRetry(5 * 1000, 10);

private static CuratorFramework curatorFramework;

private static CountDownLatch countDownLatch = new CountDownLatch(1);

public static void main(String[] args) throws InterruptedException {

String appName = System.getProperty("appName");

CuratorFramework curatorFramework = CuratorFrameworkFactory.newClient(CONNECT_STR, retryPolicy);

LeaderSelectorDemo.curatorFramework = curatorFramework;

curatorFramework.start();

LeaderSelectorListener listener = new LeaderSelectorListenerAdapter() {

public void takeLeadership(CuratorFramework client) throws Exception {

// Only the instance that wins the election runs this business logic

System.out.println(" I' m leader now . i'm , " + appName);

TimeUnit.SECONDS.sleep(15);

}

};

LeaderSelector selector = new LeaderSelector(curatorFramework, "/cachePreHeat_leader", listener);

selector.autoRequeue(); // not required, but this is behavior that you will probably expect

selector.start();

countDownLatch.await();

}

}

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 14] ls /

[cachePreHeat_leader, zookeeper]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 16] ls /cachePreHeat_leader

[_c_68286420-6d29-4e8d-b979-142a0102899e-lock-0000000010, _c_da1a051d-528f-49f0-a644-753ef24b9ad3-lock-0000000009, _c_f0c49142-3531-46c9-b3a4-970d0a5c828c-lock-0000000008]

2.2 How it works

org.apache.curator.framework.recipes.leader.LeaderSelector#internalRequeue

private synchronized boolean internalRequeue()

{

if ( !isQueued && (state.get() == State.STARTED) )

{

isQueued = true;

Future<Void> task = executorService.submit(new Callable<Void>()

{

@Override

public Void call() throws Exception

{

try

{

doWorkLoop();

}

finally

{

clearIsQueued();

if ( autoRequeue.get() )

{

internalRequeue();

}

}

return null;

}

});

ourTask.set(task);

return true;

}

return false;

}
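The requeue pattern in internalRequeue (run one round of work, clear the queued flag in finally, then queue again if requested) can be reproduced with a plain ExecutorService. The sketch below is only an analogy under stated assumptions: `RequeueSketch` and its members are hypothetical names, "leadership" is reduced to incrementing a counter, and a fixed number of terms stands in for the autoRequeue flag so that the example terminates.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Sketch of a task that re-queues itself after each run; names are hypothetical, not Curator's. */
final class RequeueSketch {
    private final ExecutorService executor = Executors.newSingleThreadExecutor();
    private final AtomicInteger termsServed = new AtomicInteger();
    private final CountDownLatch finished;
    private final int maxTerms;
    private boolean queued = false;

    RequeueSketch(int maxTerms) {
        if (maxTerms < 1) {
            throw new IllegalArgumentException("maxTerms must be at least 1");
        }
        this.maxTerms = maxTerms;
        this.finished = new CountDownLatch(maxTerms);
    }

    /** Queues one run unless one is already queued; mirrors the isQueued guard. */
    synchronized boolean requeue() {
        if (queued || executor.isShutdown()) {
            return false;
        }
        queued = true;
        executor.execute(() -> {
            try {
                termsServed.incrementAndGet(); // stand-in for one term of leadership
            } finally {
                clearQueued();
                boolean again = termsServed.get() < maxTerms; // stand-in for the autoRequeue flag
                finished.countDown();
                if (again) {
                    requeue();
                }
            }
        });
        return true;
    }

    private synchronized void clearQueued() {
        queued = false;
    }

    /** Starts the cycle, waits for all terms to finish, and returns how many were served. */
    int run() {
        requeue();
        try {
            finished.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
        }
        executor.shutdown();
        return termsServed.get();
    }

    public static void main(String[] args) {
        System.out.println("terms served: " + new RequeueSketch(3).run());
    }
}
```

Re-queuing from the finally block is the important part: the instance rejoins the election whether its term ended normally or with an exception.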

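3. Service registry in practice

3.1 Service registry scenario analysis

3.2 Hands-on with the service registry

The Spring Cloud ecosystem also provides a ZooKeeper-based service registry implementation, in a project called Spring Cloud Zookeeper.

Reference: https://cloud.spring.io/spring-cloud-zookeeper/reference/html/

Project overview: to keep things simple, two services are used for the walkthrough; the same approach extends to real projects.

- user-center: user service

- product-center: product service

Result: the user service calls the product service with client-side load balancing, and product service instances join and leave the cluster automatically.

1) Project setup

Create the product-center and user-center services.

2) Configure ZooKeeper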

a) user-center service

application.properties

spring.application.name=user-center

# ZooKeeper connection string

spring.cloud.zookeeper.connect-string=192.168.75.131:2181,192.168.75.131:2182,192.168.75.131:2183,192.168.75.131:2184

Code: configure RestTemplate to support load balancing

package com.slfx.usercenter;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.cloud.client.loadbalancer.LoadBalanced;

import org.springframework.context.annotation.Bean;

import org.springframework.web.client.RestTemplate;

@SpringBootApplication

public class UserCenterApplication {

public static void main(String[] args) {

SpringApplication.run(UserCenterApplication.class, args);

}

@Bean

@LoadBalanced

public RestTemplate restTemplate() {

RestTemplate restTemplate = new RestTemplate();

return restTemplate;

}

}

Write a test class, TestController. Spring Cloud lets Feign, Spring RestTemplate, and WebClient address a service by its logical name instead of a concrete URL.

package com.slfx.usercenter;

import org.springframework.cloud.client.ServiceInstance;

import org.springframework.cloud.client.loadbalancer.LoadBalancerClient;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

import org.springframework.web.client.RestTemplate;

import javax.annotation.Resource;

@RestController

public class TestController {

@Resource

private RestTemplate restTemplate;

@Resource

private LoadBalancerClient loadBalancerClient;

@GetMapping("/test")

public String test() {

return this.restTemplate.getForObject("http://product-center/getInfo", String.class);

}

@GetMapping("/lb")

public String getLb() {

ServiceInstance choose = loadBalancerClient.choose("product-center");

String serviceId = choose.getServiceId();

int port = choose.getPort();

return serviceId + " : " + port;

}

}
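To give a feel for what client-side load balancing does once the instance list has been read from the registry, here is a minimal round-robin sketch. It is not Spring Cloud's implementation, and the strategy actually used depends on the load balancer and version in play; `RoundRobinSketch` and `choose` are hypothetical names, and the instance addresses are plain strings for simplicity.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Minimal round-robin chooser over a list of instances; a sketch, not Spring Cloud's balancer. */
final class RoundRobinSketch {
    private final AtomicInteger position = new AtomicInteger();

    /** Picks the next instance in turn; floorMod keeps the index valid if the counter overflows. */
    String choose(List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalStateException("no instances registered");
        }
        int index = Math.floorMod(position.getAndIncrement(), instances.size());
        return instances.get(index);
    }

    public static void main(String[] args) {
        // Hypothetical addresses of two product-center instances.
        List<String> instances = Arrays.asList("localhost:9001", "localhost:9002");
        RoundRobinSketch balancer = new RoundRobinSketch();
        for (int i = 0; i < 4; i++) {
            System.out.println("request " + i + " -> " + balancer.choose(instances));
        }
    }
}
```

The instance list is passed in on every call rather than cached, so an instance that has joined or left the registry is picked up on the next request.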

b) product-center service

application.properties

spring.application.name=product-center

# ZooKeeper connection string

spring.cloud.zookeeper.connect-string=192.168.75.131:2181,192.168.75.131:2182,192.168.75.131:2183,192.168.75.131:2184

# Register this service with ZooKeeper

spring.cloud.zookeeper.discovery.register=true

spring.cloud.zookeeper.session-timeout=30000

Main class, which handles a getInfo request

package com.slfx.productcenter;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

@SpringBootApplication

@RestController

public class ProductCenterApplication {

@Value("${server.port}")

private String port;

@Value( "${spring.application.name}" )

private String name;

@GetMapping("/getInfo")

public String getServerPortAndName(){

return this.name +" : "+ this.port;

}

public static void main(String[] args) {

SpringApplication.run(ProductCenterApplication.class, args);

}

}

3) Test and verify

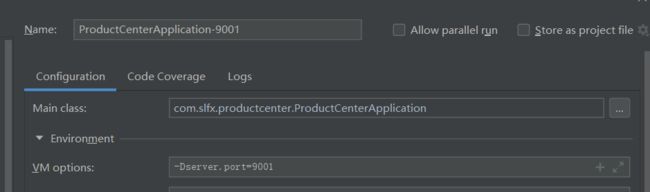

Start two product-center instances.

Configure IDEA to start multiple instances, using different ports to tell the applications apart.

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 26] ls /services

[a, product-center, user-center]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 27] ls /services/product-center

[60f989c9-fa4f-4b51-b254-30ce373dc25e, fd3abef0-e8cf-4892-8d0c-fc4a2f3adb2d]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 28] ls /services/user-center

[32d8e6b6-0c27-40f8-9454-9c253334c51f]

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 29] get /services/user-center/32d8e6b6-0c27-40f8-9454-9c253334c51f

{"name":"user-center","id":"32d8e6b6-0c27-40f8-9454-9c253334c51f","address":"DESKTOP-4MEEC6F","port":8080,"sslPort":null,"payload":{"@class":"org.springframework.cloud.zookeeper.discovery.ZookeeperInstance","id":"application-1","name":"user-center","metadata":{"instance_status":"UP"}},"registrationTimeUTC":1650099943035,"serviceType":"DYNAMIC","uriSpec":{"parts":[{"value":"scheme","variable":true},{"value":"://","variable":false},{"value":"address","variable":true},{"value":":","variable":false},{"value":"port","variable":true}]}}

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 30] get /services/product-center/60f989c9-fa4f-4b51-b254-30ce373dc25e

{"name":"product-center","id":"60f989c9-fa4f-4b51-b254-30ce373dc25e","address":"DESKTOP-4MEEC6F","port":9002,"sslPort":null,"payload":{"@class":"org.springframework.cloud.zookeeper.discovery.ZookeeperInstance","id":"application-1","name":"product-center","metadata":{"instance_status":"UP"}},"registrationTimeUTC":1650099921496,"serviceType":"DYNAMIC","uriSpec":{"parts":[{"value":"scheme","variable":true},{"value":"://","variable":false},{"value":"address","variable":true},{"value":":","variable":false},{"value":"port","variable":true}]}}

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 31] get /services/product-center/fd3abef0-e8cf-4892-8d0c-fc4a2f3adb2d

{"name":"product-center","id":"fd3abef0-e8cf-4892-8d0c-fc4a2f3adb2d","address":"DESKTOP-4MEEC6F","port":9001,"sslPort":null,"payload":{"@class":"org.springframework.cloud.zookeeper.discovery.ZookeeperInstance","id":"application-1","name":"product-center","metadata":{"instance_status":"UP"}},"registrationTimeUTC":1650100200714,"serviceType":"DYNAMIC","uriSpec":{"parts":[{"value":"scheme","variable":true},{"value":"://","variable":false},{"value":"address","variable":true},{"value":":","variable":false},{"value":"port","variable":true}]}}

[zk: localhost:2181,localhost:2182,localhost:2183,localhost:2184(CONNECTED) 32]

{

"name": "user-center",

"id": "32d8e6b6-0c27-40f8-9454-9c253334c51f",

"address": "DESKTOP-4MEEC6F",

"port": 8080,

"sslPort": null,

"payload": {

"@class": "org.springframework.cloud.zookeeper.discovery.ZookeeperInstance",

"id": "application-1",

"name": "user-center",

"metadata": {

"instance_status": "UP"

}

},

"registrationTimeUTC": 1650099943035,

"serviceType": "DYNAMIC",

"uriSpec": {

"parts": [{

"value": "scheme",

"variable": true

}, {

"value": "://",

"variable": false

}, {

"value": "address",

"variable": true

}, {

"value": ":",

"variable": false

}, {

"value": "port",

"variable": true

}]

}

}
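The uriSpec in the registration data is a template: parts marked variable are replaced with the instance's own values, and the remaining parts are literals. The sketch below shows one way such a template could be expanded into an address. `UriSpecSketch`, `Part`, and `build` are hypothetical names rather than the Curator or Spring Cloud API, and the scheme value `http` is an assumption based on `sslPort` being null.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Sketch of expanding a uriSpec into an address; class and method names are hypothetical. */
final class UriSpecSketch {

    /** One entry of "parts": either a literal or the name of a variable to substitute. */
    static final class Part {
        final String value;
        final boolean variable;

        Part(String value, boolean variable) {
            this.value = value;
            this.variable = variable;
        }
    }

    /** Concatenates literals as-is and replaces variable parts with their values. */
    static String build(List<Part> parts, Map<String, String> variables) {
        StringBuilder uri = new StringBuilder();
        for (Part part : parts) {
            if (part.variable) {
                String resolved = variables.get(part.value);
                if (resolved == null) {
                    throw new IllegalArgumentException("no value for variable: " + part.value);
                }
                uri.append(resolved);
            } else {
                uri.append(part.value);
            }
        }
        return uri.toString();
    }

    /** The five parts from the registration data above. */
    static List<Part> partsFromExample() {
        List<Part> parts = new ArrayList<>();
        parts.add(new Part("scheme", true));
        parts.add(new Part("://", false));
        parts.add(new Part("address", true));
        parts.add(new Part(":", false));
        parts.add(new Part("port", true));
        return parts;
    }

    public static void main(String[] args) {
        Map<String, String> variables = new HashMap<>();
        variables.put("scheme", "http"); // assumption: plain HTTP, since sslPort is null
        variables.put("address", "DESKTOP-4MEEC6F");
        variables.put("port", "8080");
        System.out.println(build(partsFromExample(), variables));
    }
}
```

Storing a template instead of a finished URL lets every instance of a service share the same spec while still resolving to its own address and port.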

Access test: http://localhost:8080/test
