Setting up a deepo deep learning environment on Ubuntu 18.04 (CUDA + cuDNN + nvidia-docker + deepo): a very detailed walkthrough, including every error I ran into and every fix I tried

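0 Getting to know the machine

0 References

The main overall reference is this article

1. Installing CUDA and cuDNN

2. Installing CUDA and cuDNN

3. Installing CUDA and cuDNN

4. Installing CUDA and cuDNN

1. Installing nvidia-docker2

2. Installing nvidia-docker2

Using deepo for a deep learning environment (official English docs)

Using deepo for a deep learning environment (Chinese translation)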

1 GPU information

root@master:/home/hqc# ubuntu-drivers devices

== /sys/devices/pci0000:00/0000:00:01.0/0000:01:00.0 ==

modalias : pci:v000010DEd00001B06sv000010DEsd0000120Fbc03sc00i00

vendor : NVIDIA Corporation

model : GP102 [GeForce GTX 1080 Ti]

driver : nvidia-driver-460-server - distro non-free

driver : nvidia-driver-450-server - distro non-free

driver : nvidia-driver-390 - distro non-free

driver : nvidia-driver-418-server - distro non-free

driver : nvidia-driver-470 - distro non-free

driver : nvidia-driver-470-server - distro non-free

driver : nvidia-driver-460 - distro non-free

driver : nvidia-driver-495 - distro non-free recommended

driver : xserver-xorg-video-nouveau - distro free builtin

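The output recommends driver 495, which is the version already installed on this machine (nvidia-smi later reports 495.44), so the driver does not need to be reinstalled.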
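
To avoid reading the list by eye, the recommended driver can be picked out of the `ubuntu-drivers devices` output programmatically. The following is only a minimal sketch: the function name `recommended_driver` is my own, and it assumes the output keeps the `driver : <package> - <flags>` layout shown above.

```python
import re


def recommended_driver(ubuntu_drivers_output: str):
    """Return the driver package flagged 'recommended', or None if there is none."""
    for line in ubuntu_drivers_output.splitlines():
        # Expected layout: "driver : nvidia-driver-495 - distro non-free recommended"
        match = re.match(r"\s*driver\s*:\s*(\S+)\s+-\s+(.*)$", line)
        if match and "recommended" in match.group(2).split():
            return match.group(1)
    return None


sample = """\
driver : nvidia-driver-470 - distro non-free
driver : nvidia-driver-495 - distro non-free recommended
driver : xserver-xorg-video-nouveau - distro free builtin
"""
print(recommended_driver(sample))  # nvidia-driver-495
```

In practice the text would come from running `ubuntu-drivers devices` and capturing its standard output.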

2 Check whether CUDA/cuDNN is installed

root@master:/home/hqc# cat /usr/local/cuda/version.txt

cat: /usr/local/cuda/version.txt: No such file or directory

root@master:/home/hqc# nvcc -V

Command 'nvcc' not found, but can be installed with:

apt install nvidia-cuda-toolkit

Neither is installed; see this blog post
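
The same two checks (is there a CUDA directory, is `nvcc` on the PATH) can be scripted. This is a rough sketch using only the standard library; the function name `cuda_toolkit_status` and the default path `/usr/local/cuda` are assumptions taken from the commands above.

```python
import os
import shutil


def cuda_toolkit_status(cuda_home: str = "/usr/local/cuda"):
    """Report whether nvcc is on the PATH and whether the CUDA directory exists."""
    return {
        # shutil.which returns the full path to nvcc, or None if it is not found.
        "nvcc_on_path": shutil.which("nvcc"),
        "cuda_home_exists": os.path.isdir(cuda_home),
    }


print(cuda_toolkit_status())
```

On the machine above, before the installation, both entries would be expected to come back empty or false.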

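3 A note on CUDA and cuDNN

The deepo image already bundles CUDA and cuDNN and comes with most deep learning frameworks preconfigured.

So why install CUDA and cuDNN on the host as well?

Because they are needed for local development, or for checking locally that an existing deep learning program actually runs.

1 Installing CUDA

1 Download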

root@master:/home/hqc# wget https://developer.download.nvidia.com/compute/cuda/11.5.1/local_installers/cuda_11.5.1_495.29.05_linux.run

2 Run the installer

root@master:/home/hqc# sudo sh cuda_11.5.1_495.29.05_linux.run

Choose continue.

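Why this prompt appears: my guess was that a CUDA 11.0 image had been pulled while verifying nvidia-docker2. A more likely trigger is that the installer detected the driver already installed on the host.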

Type accept.

Note: do not install the driver a second time.

How: move the cursor to the Driver entry and press Enter to uncheck it, then choose Install.

3 Success

root@master:/home/hqc# sudo sh cuda_11.5.1_495.29.05_linux.run

===========

= Summary =

===========

Driver: Not Selected

Toolkit: Installed in /usr/local/cuda-11.5/

Samples: Installed in /root/, but missing recommended libraries

Please make sure that

- PATH includes /usr/local/cuda-11.5/bin

- LD_LIBRARY_PATH includes /usr/local/cuda-11.5/lib64, or, add /usr/local/cuda-11.5/lib64 to /etc/ld.so.conf and run ldconfig as root

To uninstall the CUDA Toolkit, run cuda-uninstaller in /usr/local/cuda-11.5/bin

***WARNING: Incomplete installation! This installation did not install the CUDA Driver. A driver of version at least 495.00 is required for CUDA 11.5 functionality to work.

To install the driver using this installer, run the following command, replacing <CudaInstaller> with the name of this run file:

sudo <CudaInstaller>.run --silent --driver

Logfile is /var/log/cuda-installer.log

Seeing this summary means the toolkit was installed successfully.

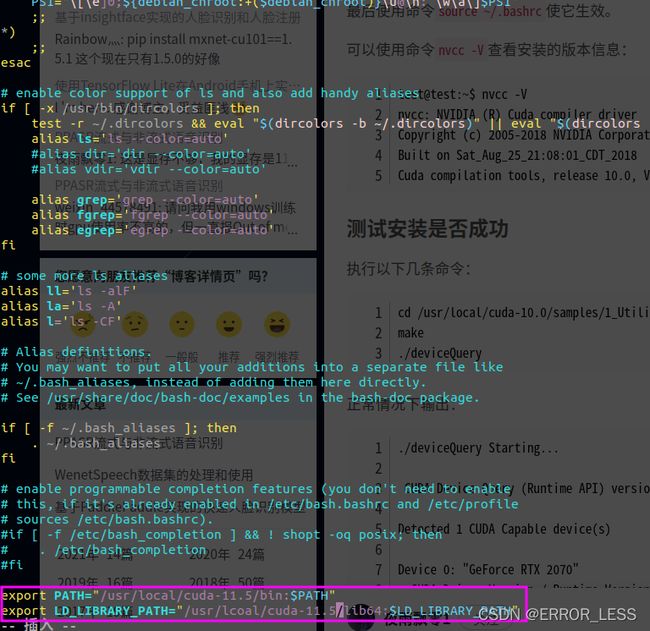

4 Configuration

root@master:/home/hqc# vi ~/.bashrc

# Append these two lines to the end of the file

export PATH="/usr/local/cuda-11.5/bin:$PATH"

export LD_LIBRARY_PATH="/usr/local/cuda-11.5/lib64:$LD_LIBRARY_PATH"

# Source the file so the changes take effect

root@master:/home/hqc# source ~/.bashrc
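
A quick way to confirm that the two exports took effect is to look for the directories in the environment. The sketch below assumes the CUDA 11.5 paths used above; `missing_cuda_entries` is a hypothetical helper name, and `:` is hard-coded as the separator because this is a Linux setup.

```python
import os

CUDA_BIN = "/usr/local/cuda-11.5/bin"
CUDA_LIB = "/usr/local/cuda-11.5/lib64"


def missing_cuda_entries(env):
    """Return the (variable, directory) pairs that are not configured yet."""
    wanted = {"PATH": CUDA_BIN, "LD_LIBRARY_PATH": CUDA_LIB}
    missing = []
    for var, directory in wanted.items():
        # Split the colon-separated list and look for an exact match.
        entries = env.get(var, "").split(":")
        if directory not in entries:
            missing.append((var, directory))
    return missing


# An empty list means both variables already contain the CUDA directories.
print(missing_cuda_entries(os.environ))
```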

5 Verification

root@master:/home/hqc# cd /usr/local/cuda-11.5/samples/1_Utilities/deviceQuery

root@master:/usr/local/cuda-11.5/samples/1_Utilities/deviceQuery# sudo make

/usr/local/cuda/bin/nvcc -ccbin g++ -I../../common/inc -m64 --threads 0 --std=c++11 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_37,code=sm_37 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_52,code=sm_52 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 -gencode arch=compute_70,code=sm_70 -gencode arch=compute_75,code=sm_75 -gencode arch=compute_80,code=sm_80 -gencode arch=compute_86,code=sm_86 -gencode arch=compute_86,code=compute_86 -o deviceQuery.o -c deviceQuery.cpp

nvcc warning : The 'compute_35', 'compute_37', 'compute_50', 'sm_35', 'sm_37' and 'sm_50' architectures are deprecated, and may be removed in a future release (Use -Wno-deprecated-gpu-targets to suppress warning).

/usr/local/cuda/bin/nvcc -ccbin g++ -m64 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_37,code=sm_37 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_52,code=sm_52 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 -gencode arch=compute_70,code=sm_70 -gencode arch=compute_75,code=sm_75 -gencode arch=compute_80,code=sm_80 -gencode arch=compute_86,code=sm_86 -gencode arch=compute_86,code=compute_86 -o deviceQuery deviceQuery.o

nvcc warning : The 'compute_35', 'compute_37', 'compute_50', 'sm_35', 'sm_37' and 'sm_50' architectures are deprecated, and may be removed in a future release (Use -Wno-deprecated-gpu-targets to suppress warning).

mkdir -p ../../bin/x86_64/linux/release

cp deviceQuery ../../bin/x86_64/linux/release

root@master:/usr/local/cuda-11.5/samples/1_Utilities/deviceQuery# ./deviceQuery

./deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "NVIDIA GeForce GTX 1080 Ti"

CUDA Driver Version / Runtime Version 11.5 / 11.5

CUDA Capability Major/Minor version number: 6.1

Total amount of global memory: 11178 MBytes (11721506816 bytes)

(028) Multiprocessors, (128) CUDA Cores/MP: 3584 CUDA Cores

GPU Max Clock rate: 1582 MHz (1.58 GHz)

Memory Clock rate: 5505 Mhz

Memory Bus Width: 352-bit

L2 Cache Size: 2883584 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 98304 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 2048

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 1 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 11.5, CUDA Runtime Version = 11.5, NumDevs = 1

Result = PASS

Only when Result = PASS appears at the end is the installation truly confirmed.
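
If this check ever needs to be automated, the two facts that matter in the deviceQuery output are the `Result = PASS` line and the driver/runtime version pair. A small sketch, assuming the output format shown above (the function name `parse_device_query` is mine):

```python
import re


def parse_device_query(output: str):
    """Extract the pass flag and the driver/runtime versions from deviceQuery output."""
    versions = re.search(
        r"CUDA Driver Version / Runtime Version\s+([\d.]+)\s*/\s*([\d.]+)", output
    )
    return {
        "passed": "Result = PASS" in output,
        "driver_version": versions.group(1) if versions else None,
        "runtime_version": versions.group(2) if versions else None,
    }


sample = """\
CUDA Driver Version / Runtime Version 11.5 / 11.5
Result = PASS
"""
print(parse_device_query(sample))
```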

6 Check the version

root@master:/usr/local/cuda-11.5/samples/1_Utilities/deviceQuery# nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2021 NVIDIA Corporation

Built on Thu_Nov_18_09:45:30_PST_2021

Cuda compilation tools, release 11.5, V11.5.119

Build cuda_11.5.r11.5/compiler.30672275_0
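
For scripts that need the toolkit version, the release number can be pulled out of the `nvcc -V` text. This is a sketch that assumes the `release X.Y, VX.Y.Z` wording shown above; `nvcc_release` is a made-up helper name.

```python
import re


def nvcc_release(nvcc_output: str):
    """Return (release, full_version) parsed from `nvcc -V` output, or None."""
    match = re.search(r"release\s+(\d+\.\d+),\s+V(\d+(?:\.\d+)+)", nvcc_output)
    return (match.group(1), match.group(2)) if match else None


sample = "Cuda compilation tools, release 11.5, V11.5.119"
print(nvcc_release(sample))  # ('11.5', '11.5.119')
```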


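2 Installing cuDNN

Download from the official website

You have to register for an account before you can log in, and registration may throw a few errors. After logging in there is still a form to fill in, which is tedious; I just filled it in arbitrarily. The site is also slow.

1 Download

Downloading cuDNN Library for Linux is enough; I installed cuDNN v8.3.0.

The download is very slow, so be patient.

2 Install

# Go to the directory containing the downloaded archive and list its contents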

root@master:/home/hqc# cd 下载

root@master:/home/hqc/下载# ls

Anaconda3-5.3.1-Linux-x86_64.sh iwlwifi-cc-46.3cfab8da.0

cudnn-11.5-linux-x64-v8.3.0.98.tgz iwlwifi-cc-46.3cfab8da.0.tgz

# Extract the archive

root@master:/home/hqc/下载# tar -zxvf cudnn-11.5-linux-x64-v8.3.0.98.tgz

cuda/include/cudnn.h

cuda/include/cudnn_adv_infer.h

cuda/include/cudnn_adv_infer_v8.h

cuda/include/cudnn_adv_train.h

cuda/include/cudnn_adv_train_v8.h

cuda/include/cudnn_backend.h

cuda/include/cudnn_backend_v8.h

cuda/include/cudnn_cnn_infer.h

cuda/include/cudnn_cnn_infer_v8.h

...

# Copy the extracted cuda files into the CUDA installation directory

root@master:/home/hqc/下载# cp cuda/lib64/* /usr/local/cuda-11.5/lib64/

root@master:/home/hqc/下载# cp cuda/include/* /usr/local/cuda-11.5/include/

root@master:/home/hqc/下载# cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2

# No output at all

# Tried a different way of copying; still no output

root@master:/home/hqc/下载# sudo cp cuda/include/cudnn.h /usr/local/cuda/include/

root@master:/home/hqc/下载# sudo cp cuda/lib64/libcudnn* /usr/local/cuda/lib64/

root@master:/home/hqc/下载# sudo chmod a+r /usr/local/cuda/include/cudnn.h

root@master:/home/hqc/下载# sudo chmod a+r /usr/local/cuda/lib64/libcudnn*

root@master:/home/hqc/下载# cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2

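The cuDNN version check prints nothing: see the comments on the referenced post

Not solved yet. ---- Update: solved.

Cause: in recent cuDNN releases the version macros live in cudnn_version.h, not in cudnn.h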

root@master:/home/hqc/下载# cat /usr/local/cuda/include/cudnn_version.h | grep CUDNN_MAJOR -A 2

3 Verification

root@master:/home/hqc/下载# cat /usr/local/cuda/include/cudnn_version.h | grep CUDNN_MAJOR -A 2

#define CUDNN_MAJOR 8

#define CUDNN_MINOR 3

#define CUDNN_PATCHLEVEL 0

--

#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)

#endif /* CUDNN_VERSION_H */

# This means the version is cuDNN 8.3.0

Switching to cudnn_version.h is all it takes, because recent releases keep the version information there rather than in cudnn.h.
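
The grep above can also be done in a few lines of Python, which is handy when the version is needed as a single string. This is a minimal sketch that parses the three macros from the header text; the function name `cudnn_version` is my own, and the header location `/usr/local/cuda/include/cudnn_version.h` is the one used in this walkthrough.

```python
import re


def cudnn_version(header_text: str):
    """Build 'major.minor.patch' from the #define lines in cudnn_version.h."""
    parts = {}
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"^\s*#define\s+{name}\s+(\d+)", header_text, re.MULTILINE)
        if match is None:
            # The macro is missing, e.g. when an older cudnn.h layout is passed in.
            return None
        parts[name] = int(match.group(1))
    return "{CUDNN_MAJOR}.{CUDNN_MINOR}.{CUDNN_PATCHLEVEL}".format(**parts)


sample = """\
#define CUDNN_MAJOR 8
#define CUDNN_MINOR 3
#define CUDNN_PATCHLEVEL 0
#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)
"""
print(cudnn_version(sample))  # 8.3.0
```

To use it on a real install, read the header file into a string first and pass that string in.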

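3 Installing nvidia-docker2

Follow the official installation guide

The official site points out that CUDA does not need to be installed on the host; only the driver is required.

So I decided to install nvidia-docker while CUDA and cuDNN were still downloading.

The exact commands for installing nvidia-docker2:

1 Add the repository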

root@master:/home/hqc# distribution=$(. /etc/os-release;echo $ID$VERSION_ID) \

> && curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add - \

> && curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

OK

deb https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/$(ARCH) /

#deb https://nvidia.github.io/libnvidia-container/experimental/ubuntu18.04/$(ARCH) /

deb https://nvidia.github.io/nvidia-container-runtime/stable/ubuntu18.04/$(ARCH) /

#deb https://nvidia.github.io/nvidia-container-runtime/experimental/ubuntu18.04/$(ARCH) /

deb https://nvidia.github.io/nvidia-docker/ubuntu18.04/$(ARCH) /

2 Update the package index

root@master:/home/hqc# sudo apt-get update

3 Install

root@master:/home/hqc# sudo apt-get install -y nvidia-docker2

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

chromium-codecs-ffmpeg-extra lib32gcc1 libc6-i386 libopencore-amrnb0 libopencore-amrwb0

linux-hwe-5.4-headers-5.4.0-42

Use 'sudo apt autoremove' to remove them.

The following additional packages will be installed:

libnvidia-container-tools libnvidia-container1 nvidia-container-toolkit

The following NEW packages will be installed:

libnvidia-container-tools libnvidia-container1 nvidia-container-toolkit nvidia-docker2

0 upgraded, 4 newly installed, 0 to remove and 123 not upgraded.

Need to get 1,075 kB of archives.

After this operation, 4,747 kB of additional disk space will be used.

Get:1 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 libnvidia-container1 1.7.0-1 [69.5 kB]

Get:2 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 libnvidia-container-tools 1.7.0-1 [22.7 kB]

Get:3 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 nvidia-container-toolkit 1.7.0-1 [977 kB]

Get:4 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 nvidia-docker2 2.8.0-1 [5,528 B]

Fetched 1,075 kB in 6s (170 kB/s)

Selecting previously unselected package libnvidia-container1:amd64.

(Reading database ... 221226 files and directories currently installed.)

Preparing to unpack .../libnvidia-container1_1.7.0-1_amd64.deb ...

Unpacking libnvidia-container1:amd64 (1.7.0-1) ...

Selecting previously unselected package libnvidia-container-tools.

Preparing to unpack .../libnvidia-container-tools_1.7.0-1_amd64.deb ...

Unpacking libnvidia-container-tools (1.7.0-1) ...

Selecting previously unselected package nvidia-container-toolkit.

Preparing to unpack .../nvidia-container-toolkit_1.7.0-1_amd64.deb ...

Unpacking nvidia-container-toolkit (1.7.0-1) ...

Selecting previously unselected package nvidia-docker2.

Preparing to unpack .../nvidia-docker2_2.8.0-1_all.deb ...

Unpacking nvidia-docker2 (2.8.0-1) ...

Setting up libnvidia-container1:amd64 (1.7.0-1) ...

Setting up libnvidia-container-tools (1.7.0-1) ...

Setting up nvidia-container-toolkit (1.7.0-1) ...

Setting up nvidia-docker2 (2.8.0-1) ...

Configuration file '/etc/docker/daemon.json'

==> File on system created by you or by a script.

==> File also in package provided by package maintainer.

What would you like to do about it ? Your options are:

Y or I : install the package maintainer's version

N or O : keep your currently-installed version

D : show the differences between the versions

Z : start a shell to examine the situation

The default action is to keep your current version.

*** daemon.json (Y/I/N/O/D/Z) [default=N] ? N

Processing triggers for libc-bin (2.27-3ubuntu1.2) ...

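# I chose N here: keep the currently installed version (the default). In hindsight, the maintainer's version (kept alongside as daemon.json.dpkg-dist) is the one that registers the nvidia runtime, so keeping the old file is a likely cause of the "Unknown runtime specified nvidia" error that shows up later.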

4 Restart Docker

root@master:/home/hqc# sudo systemctl restart docker

5 Verification

root@master:/home/hqc# sudo docker run --rm --gpus all nvidia/cuda:11.0-base nvidia-smi

Unable to find image 'nvidia/cuda:11.0-base' locally

11.0-base: Pulling from nvidia/cuda

54ee1f796a1e: Pull complete

f7bfea53ad12: Pull complete

46d371e02073: Pull complete

b66c17bbf772: Pull complete

3642f1a6dfb3: Pull complete

e5ce55b8b4b9: Pull complete

155bc0332b0a: Pull complete

Digest: sha256:774ca3d612de15213102c2dbbba55df44dc5cf9870ca2be6c6e9c627fa63d67a

Status: Downloaded newer image for nvidia/cuda:11.0-base

Wed Dec 15 08:38:22 2021

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 495.44 Driver Version: 495.44 CUDA Version: 11.5 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... Off | 00000000:01:00.0 Off | N/A |

| 23% 30C P8 8W / 250W | 148MiB / 11178MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+

# "Unable to find image 'nvidia/cuda:11.0-base' locally" only means the image has to be pulled first

This output means the installation succeeded!
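
The header row of the nvidia-smi table carries the two numbers worth checking from inside a container: the driver version and the highest CUDA version that driver supports. A small sketch for extracting them, assuming the header format shown above (`smi_versions` is a hypothetical name):

```python
import re


def smi_versions(smi_output: str):
    """Return (driver_version, cuda_version) from nvidia-smi output, or None."""
    match = re.search(
        r"Driver Version:\s*([\d.]+)\s+CUDA Version:\s*([\d.]+)", smi_output
    )
    return match.groups() if match else None


sample = "| NVIDIA-SMI 495.44 Driver Version: 495.44 CUDA Version: 11.5 |"
print(smi_versions(sample))  # ('495.44', '11.5')
```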

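4 Pulling the deepo image to set up the deep learning environment

Official reference

1 Pull the full deepo image

An error occurred: the pull would not complete. Possibly the deepo image is too large and Docker's default storage directory, /var/lib/docker, does not have enough space.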

root@master:/home/hqc# docker pull ufoym/deepo

Using default tag: latest

latest: Pulling from ufoym/deepo

6e0aa5e7af40: Pull complete

d47239a868b3: Pull complete

49cbb10cca85: Pull complete

4450dd082e0f: Pull complete

b4bc5dc4c4f3: Pull complete

5353957e2ca6: Pull complete

f91e05a16062: Pull complete

7a841761f52f: Pull complete

698198ce2872: Pull complete

05a2da03249e: Downloading [==================================================>] 804.9MB/804.9MB

b1761864f72a: Download complete

29479e68065f: Download complete

3b8001916a15: Downloading [==================================================>] 3.871GB/3.871GB

latest: Pulling from ufoym/deepo

6e0aa5e7af40: Downloading [====> ] 2.489MB/26.71MB

d47239a868b3: Download complete

49cbb10cca85: Download complete

4450dd082e0f: Download complete

b4bc5dc4c4f3: Downloading [============================================> ] 7.625MB/8.486MB

5353957e2ca6: Download complete

f91e05a16062: Download complete

7a841761f52f: Downloading [> ] 537.5kB/664.6MB

698198ce2872: Waiting

05a2da03249e: Waiting

b1761864f72a: Waiting

29479e68065f: Waiting

3b8001916a15: Waiting

error pulling image configuration: read tcp 172.27.228.135:60664->104.18.125.25:443: read: connection reset by peer

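# The pull failed

2 Fixing the problem

Approach: migrate the existing Docker data in full and make the new location Docker's default storage directory

# Check disk usage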

root@master:/home/hqc# df -h

Filesystem Size Used Avail Use% Mounted on

udev 32G 0 32G 0% /dev

tmpfs 6.3G 2.1M 6.3G 1% /run

/dev/nvme0n1p6 29G 1.7G 25G 7% /

/dev/nvme0n1p10 94G 18G 72G 20% /usr

tmpfs 32G 0 32G 0% /dev/shm

tmpfs 5.0M 4.0K 5.0M 1% /run/lock

tmpfs 32G 0 32G 0% /sys/fs/cgroup

/dev/nvme0n1p9 9.4G 37M 8.8G 1% /tmp

/dev/nvme0n1p7 946M 176M 706M 20% /boot

/dev/nvme0n1p8 47G 13G 32G 29% /home

/dev/nvme0n1p11 9.4G 6.6G 2.4G 74% /var

/dev/loop0 640K 640K 0 100% /snap/gnome-logs/106

/dev/nvme0n1p1 96M 32M 65M 33% /boot/efi

/dev/loop1 2.7M 2.7M 0 100% /snap/gnome-system-monitor/174

/dev/loop2 56M 56M 0 100% /snap/core18/2246

/dev/loop4 768K 768K 0 100% /snap/gnome-characters/761

/dev/loop3 219M 219M 0 100% /snap/gnome-3-34-1804/77

/dev/loop5 248M 248M 0 100% /snap/gnome-3-38-2004/87

/dev/loop7 128K 128K 0 100% /snap/bare/5

/dev/loop6 43M 43M 0 100% /snap/snapd/14066

/dev/loop8 384K 384K 0 100% /snap/gnome-characters/550

/dev/loop9 2.5M 2.5M 0 100% /snap/gnome-calculator/748

/dev/loop10 1.0M 1.0M 0 100% /snap/gnome-logs/100

/dev/loop11 63M 63M 0 100% /snap/gtk-common-themes/1506

/dev/loop12 62M 62M 0 100% /snap/core20/1242

/dev/loop13 219M 219M 0 100% /snap/gnome-3-34-1804/72

/dev/loop14 33M 33M 0 100% /snap/snapd/13640

/dev/loop15 2.5M 2.5M 0 100% /snap/gnome-calculator/884

/dev/loop16 56M 56M 0 100% /snap/core18/2253

/dev/loop17 62M 62M 0 100% /snap/core20/1270

/dev/loop18 2.3M 2.3M 0 100% /snap/gnome-system-monitor/148

/dev/loop19 522M 522M 0 100% /snap/pycharm-community/261

/dev/loop20 66M 66M 0 100% /snap/gtk-common-themes/1519

/dev/loop21 243M 243M 0 100% /snap/gnome-3-38-2004/76

tmpfs 6.3G 16K 6.3G 1% /run/user/121

tmpfs 6.3G 72K 6.3G 1% /run/user/1000

# /usr has the most free space, so I decided to use it as Docker's storage directory
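
Instead of scanning the df table by eye, the candidate mount points can be compared directly. The sketch below uses `shutil.disk_usage` from the standard library; the helper names and the list of candidate paths are my own choices for illustration.

```python
import shutil


def free_space_gib(paths):
    """Map each existing path to its free space in GiB."""
    report = {}
    for path in paths:
        try:
            report[path] = shutil.disk_usage(path).free / 2**30
        except OSError:
            # Skip paths that do not exist on this machine.
            continue
    return report


def roomiest(paths):
    """Return the path with the most free space, or None if none exist."""
    report = free_space_gib(paths)
    return max(report, key=report.get) if report else None


print(roomiest(["/", "/usr", "/home", "/var"]))
```

On the machine above this would be expected to point at /usr, matching the 72G shown by df, although the exact numbers depend on when it is run.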

For the detailed steps, see this document

# Stop Docker

root@master:/home/hqc# service docker stop

# or

root@master:/home/hqc# systemctl stop docker.service

# Create a new directory under /usr to hold the images

root@master:/home/hqc# mkdir -p /usr/hqc/docker_root

# Copy everything from the original directory into it

root@master:/home/hqc# cp -R /var/lib/docker/* /usr/hqc/docker_root

# Edit daemon.json under /etc/docker

root@master:/home/hqc# cd /etc/docker

root@master:/etc/docker# ls

daemon.json daemon.json.dpkg-dist key.json

root@master:/etc/docker# vim daemon.json

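# Add the "data-root" line and mind the trailing comma. JSON does not allow comments, so keep this note out of the file itself.

{

"data-root": "/usr/hqc/docker_root",

"registry-mirrors": ["https://zwir0uyv.mirror.aliyuncs.com"]

}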

# Reload the systemd configuration

root@master:/etc/docker# systemctl daemon-reload

# Start Docker again

root@master:/etc/docker# systemctl start docker.service

# Check the storage directory again

root@master:/etc/docker# docker info |grep "Docker Root Dir"

WARNING: No swap limit support

Docker Root Dir: /usr/hqc/docker_root

# The directory was changed successfully
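
Editing daemon.json by hand makes it easy to drop a comma or leave a comment in the file. One way around that is to let a JSON library do the edit. This is a sketch under the assumption that the file already holds valid JSON (or is empty); `with_data_root` is a hypothetical helper and nothing here touches /etc/docker directly.

```python
import json


def with_data_root(daemon_json_text: str, data_root: str) -> str:
    """Return daemon.json content with "data-root" set, keeping existing keys."""
    config = json.loads(daemon_json_text) if daemon_json_text.strip() else {}
    config["data-root"] = data_root
    # json.dumps always produces strict JSON: no comments, no trailing commas.
    return json.dumps(config, indent=2) + "\n"


existing = '{"registry-mirrors": ["https://zwir0uyv.mirror.aliyuncs.com"]}'
print(with_data_root(existing, "/usr/hqc/docker_root"))
```

The returned text would still have to be written to /etc/docker/daemon.json as root, followed by the restart steps shown above.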

3 Pull deepo again: this time it succeeds

root@master:/home/hqc# docker pull ufoym/deepo

Using default tag: latest

latest: Pulling from ufoym/deepo

6e0aa5e7af40: Pull complete

d47239a868b3: Pull complete

49cbb10cca85: Pull complete

4450dd082e0f: Pull complete

b4bc5dc4c4f3: Pull complete

5353957e2ca6: Pull complete

f91e05a16062: Pull complete

7a841761f52f: Pull complete

698198ce2872: Pull complete

05a2da03249e: Pull complete

b1761864f72a: Pull complete

29479e68065f: Pull complete

3b8001916a15: Pull complete

Digest: sha256:79473e5e182257ce0aff172670d32d09204c48785c5b5daff1830dad83f4a548

Status: Downloaded newer image for ufoym/deepo:latest

docker.io/ufoym/deepo:latest

# Download succeeded!

4 Verifying the environment

Chinese translation of the official docs

root@master:/home/hqc# nvidia-docker run --rm ufoym/deepo nvidia-smi

docker: Error response from daemon: Unknown runtime specified nvidia.

See 'docker run --help'.

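5 Fixing the problem

1 Option 1

See the referenced fix (it did not solve the problem)

2 Option 2

First use nvidia-docker image ls to check whether nvidia-docker was installed successfully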

root@master:/etc/docker# nvidia-docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cn-beijing.aliyuncs.com/hqc-k8s/ali-array-plus v2.5 7f4f56bbf3c8 3 days ago 928MB

json-plus v1.2 fab5150c7c5e 5 days ago 928MB

python latest f48ea80eae5a 4 weeks ago 917MB

nginx latest ea335eea17ab 4 weeks ago 141MB

quay.io/coreos/flannel v0.15.1 e6ea68648f0c 4 weeks ago 69.5MB

rancher/mirrored-flannelcni-flannel-cni-plugin v1.0.0 cd5235cd7dc2 7 weeks ago 9.03MB

hello-world latest feb5d9fea6a5 2 months ago 13.3kB

registry.cn-beijing.aliyuncs.com/hqc-k8s/hello-world v1.0 feb5d9fea6a5 2 months ago 13.3kB

ufoym/deepo latest f07b2fdc30b2 6 months ago 14.3GB

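# Same result as docker images, so it is probably installed

Next, try sudo docker run --rm --gpus all nvidia/cuda:11.5 nvidia-smi, that is, replace 11.0-base with 11.5. My guess was that, since the host now has CUDA 11.5, the 11.0-base image had been removed along the way and nvidia-docker had stopped working as a result.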

root@master:/etc/docker# sudo docker run --rm --gpus all nvidia/cuda:11.5 nvidia-smi

Unable to find image 'nvidia/cuda:11.5' locally

docker: Error response from daemon: manifest for nvidia/cuda:11.5 not found: manifest unknown: manifest unknown.

See 'docker run --help'.

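Failed: as the manifest unknown error shows, the registry has no image tagged nvidia/cuda:11.5.

3 Option 3

Some blog posts run sudo docker run --runtime=nvidia --rm nvidia/cuda nvidia-smi instead, so that is worth a try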

root@master:/etc/docker# sudo docker run --runtime=nvidia --rm nvidia/cuda nvidia-smi

docker: Error response from daemon: Unknown runtime specified nvidia.

See 'docker run --help'.

# No luck

4 Option 4

Alternatively, try editing /etc/docker/daemon.json

and adding the following to it

{

# "registry-mirrors": ["https://f1z25q5p.mirror.aliyuncs.com"],

# The line above is the Alibaba Cloud Docker registry mirror configured earlier

"runtimes": {

"nvidia": {

"path": "nvidia-container-runtime",

"runtimeArgs": []

}

}

}

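However, this approach seemed to come from CentOS instructions, so it might not be right here

Sure enough, it did not work when I tried it: Docker would not start. Note that daemon.json must be strict JSON, so if the # lines shown above are left in the file, that alone is enough to stop the daemon from starting.

5 Option 5

Follow the official instructions

On Ubuntu, run sudo apt-get install nvidia-container-runtime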

root@master:/etc/docker# sudo apt-get install nvidia-container-runtime

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

chromium-codecs-ffmpeg-extra lib32gcc1 libc6-i386 libopencore-amrnb0 libopencore-amrwb0 linux-hwe-5.4-headers-5.4.0-42

Use 'sudo apt autoremove' to remove them.

The following NEW packages will be installed:

nvidia-container-runtime

0 upgraded, 1 newly installed, 0 to remove and 123 not upgraded.

Need to get 4,984 B of archives.

After this operation, 21.5 kB of additional disk space will be used.

Get:1 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 nvidia-container-runtime 3.7.0-1 [4,984 B]

Fetched 4,984 B in 1s (5,456 B/s)

Selecting previously unselected package nvidia-container-runtime.

(Reading database ... 221251 files and directories currently installed.)

Preparing to unpack .../nvidia-container-runtime_3.7.0-1_all.deb ...

Unpacking nvidia-container-runtime (3.7.0-1) ...

Setting up nvidia-container-runtime (3.7.0-1) ...

# Verify: still not working

root@master:/etc/docker# nvidia-docker run --rm ufoym/deepo nvidia-smi

docker: Error response from daemon: Unknown runtime specified nvidia.

See 'docker run --help'.

6 Option 6 (the one that finally worked)

Follow the approach from the official docs

root@master:/home/hqc# tee /etc/systemd/system/docker.service.d/override.conf <<EOF

> [Service]

> ExecStart=

> ExecStart=/usr/bin/dockerd --host=fd:// --add-runtime=nvidia=/usr/bin/nvidia-container-runtime

> EOF

[Service]

ExecStart=

ExecStart=/usr/bin/dockerd --host=fd:// --add-runtime=nvidia=/usr/bin/nvidia-container-runtime

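# How to enter it: type the first line and press Enter, then paste each following line in turn, pressing Enter after each one

# Reload systemd so the new drop-in file takes effect

root@master:/home/hqc# sudo systemctl daemon-reload

# Restart the Docker service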

root@master:/home/hqc# sudo systemctl restart docker

# Verify

root@master:/home/hqc# nvidia-docker run --rm ufoym/deepo nvidia-smi

Fri Dec 17 02:59:02 2021

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 495.44 Driver Version: 495.44 CUDA Version: 11.5 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... Off | 00000000:01:00.0 Off | N/A |

| 23% 28C P8 9W / 250W | 11MiB / 11178MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+

# Success!
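
Typing a heredoc line by line is error-prone, so here is a sketch that builds the same drop-in content as a string. The function name `render_override` is made up for illustration; the content mirrors the override.conf shown above, and writing it to /etc/systemd/system/docker.service.d/override.conf would still need root and an existing directory.

```python
def render_override(runtime_path: str = "/usr/bin/nvidia-container-runtime") -> str:
    """Render the systemd drop-in that registers the nvidia runtime with dockerd."""
    lines = [
        "[Service]",
        # An empty ExecStart= clears the unit's original command before it is redefined.
        "ExecStart=",
        f"ExecStart=/usr/bin/dockerd --host=fd:// --add-runtime=nvidia={runtime_path}",
    ]
    return "\n".join(lines) + "\n"


print(render_override(), end="")
```

After writing the file, the daemon-reload and restart steps above still apply.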

6 Verify once more

# Run the check again and confirm that Deepo can use the GPU inside a Docker container

root@master:/home/hqc# nvidia-docker run --rm ufoym/deepo nvidia-smi

Fri Dec 17 03:04:18 2021

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 495.44 Driver Version: 495.44 CUDA Version: 11.5 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... Off | 00000000:01:00.0 Off | N/A |

| 23% 28C P8 8W / 250W | 11MiB / 11178MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+

# Start an interactive shell in a container; this container is not removed automatically when you exit.

root@master:/home/hqc# nvidia-docker run -it ufoym/deepo bash

# Start the Python interpreter

root@2e0a741f5da1:/# python

Python 3.6.9 (default, Jan 26 2021, 15:33:00)

[GCC 8.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow

2021-12-17 03:06:21.636087: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /usr/local/lib:/usr/local/nvidia/lib:/usr/local/nvidia/lib64

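# This looks like an error but is a warning: TensorFlow could not load libcudart.so.11.0 from the container's library path, which suggests it cannot use the GPU in this image. The torch build below is tagged cu101, so the image appears to ship CUDA 10.1 rather than 11.0.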

2021-12-17 03:06:21.636112: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

>>> print(tensorflow.__name__, tensorflow.__version__)

tensorflow 2.5.0

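# At least the TensorFlow version prints normally

# A later import tensorflow in the same session no longer printed these messages, presumably because Python reuses the already-loaded module, so the import-time messages appear only once

# Now check the torch environment as well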

>>> import torch

>>> print(torch.__name__, torch.__version__)

torch 1.9.0.dev20210415+cu101

# No problems here
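
Importing a framework and printing its version only shows that it is installed, not that it can see the GPU. A more direct check is sketched below. It relies on `torch.cuda.is_available()` and `tf.config.list_physical_devices("GPU")`; the wrapper `gpu_report` is my own name, and it skips any framework that is not installed.

```python
import importlib.util


def gpu_report():
    """Report, per framework, whether it is installed and whether it sees a GPU."""
    report = {}
    if importlib.util.find_spec("torch") is None:
        report["torch"] = "not installed"
    else:
        import torch
        report["torch"] = {
            "version": torch.__version__,
            "cuda_available": torch.cuda.is_available(),
        }
    if importlib.util.find_spec("tensorflow") is None:
        report["tensorflow"] = "not installed"
    else:
        import tensorflow as tf
        report["tensorflow"] = {
            "version": tf.__version__,
            # An empty list means TensorFlow will fall back to the CPU.
            "gpus": [d.name for d in tf.config.list_physical_devices("GPU")],
        }
    return report


print(gpu_report())
```

Run inside the deepo container, this would show whether the libcudart warning above really leaves TensorFlow without a GPU.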

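All in all, it finally works!

5 Summary

Looking back over the whole process, CUDA and cuDNN on the host do not seem to be required for this setup. Given the slow downloads and the large files, installing them locally is optional.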