Loading files into HBase through Kafka, and mapping the HBase tables into Hive

As before, start by defining a parent trait with one abstract method:

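```scala
package kafkatohbase

import org.apache.hadoop.hbase.client.Put
import org.apache.kafka.clients.consumer.ConsumerRecords

// Parent trait: each topic-specific implementation turns one polled batch
// of Kafka records into the HBase Puts for its table.
trait DataHandler {
  def transform(topic: String, records: ConsumerRecords[String, String]): Array[Put]
}
```

This time five message queues (Kafka topics) are read, so there are five sub-traits, one per topic.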

```scala
package kafkatohbase

import org.apache.hadoop.hbase.client.Put
import org.apache.hadoop.hbase.util.Bytes
import org.apache.kafka.clients.consumer.ConsumerRecords

import scala.collection.JavaConverters._ // scala.jdk.CollectionConverters._ on Scala 2.13

trait EventsAttendeesImpl extends DataHandler {
  override def transform(topic: String, records: ConsumerRecords[String, String]): Array[Put] = {
    // Bytes.toBytes encodes as UTF-8 regardless of the platform default charset
    val cf = Bytes.toBytes("base")
    // records(topic) covers every partition of the topic, not only partition 0
    records.records(topic).asScala.map { rec =>
      // -1 keeps trailing empty fields instead of dropping them
      val info = rec.value().split(",", -1)
      // row key = user_id + friend_id + attend_type
      val put = new Put(Bytes.toBytes(info(0) + info(1) + info(2)))
      put.addColumn(cf, Bytes.toBytes("user_id"), Bytes.toBytes(info(0)))
      put.addColumn(cf, Bytes.toBytes("friend_id"), Bytes.toBytes(info(1)))
      put.addColumn(cf, Bytes.toBytes("attend_type"), Bytes.toBytes(info(2)))
      put
    }.toArray
  }
}
```

```scala
package kafkatohbase

import org.apache.hadoop.hbase.client.Put
import org.apache.hadoop.hbase.util.Bytes
import org.apache.kafka.clients.consumer.ConsumerRecords

import scala.collection.JavaConverters._ // scala.jdk.CollectionConverters._ on Scala 2.13

trait EventsImpl extends DataHandler {
  // event_id,user_id,start_time,city,state,zip,country,lat,lng,<remaining columns>
  private val eventColumns = Array("event_id", "user_id", "start_time", "city",
    "state", "zip", "country", "lat", "lng")

  override def transform(topic: String, records: ConsumerRecords[String, String]): Array[Put] = {
    val cf = Bytes.toBytes("base")
    records.records(topic).asScala.map { rec =>
      val info = rec.value().split(",", -1)
      // row key = event_id + user_id
      val put = new Put(Bytes.toBytes(info(0) + info(1)))
      // the first 9 fields each get their own column
      eventColumns.zipWithIndex.foreach { case (col, i) =>
        put.addColumn(cf, Bytes.toBytes(col), Bytes.toBytes(info(i)))
      }
      // every field after lng is joined with spaces into the "other" column
      val other = info.drop(eventColumns.length).mkString(" ").trim
      put.addColumn(cf, Bytes.toBytes("other"), Bytes.toBytes(other))
      put
    }.toArray
  }
}
```
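Why `split(",", -1)` matters here: Java's `String.split` with the default limit drops trailing empty strings, so an event line whose last column (`lng`) is empty yields only 8 fields and `info(8)` throws. The sketch below reproduces only the parsing step of `EventsImpl` in plain Scala, without Kafka or HBase; `SplitDemo` and `parseEvent` are hypothetical names used for illustration.

```scala
object SplitDemo {
  // Same parsing as EventsImpl: fields 0..8 are the named columns
  // (event_id ... lng), everything after them is joined into "other".
  def parseEvent(line: String): (Array[String], String) = {
    val info = line.split(",", -1) // -1 keeps trailing empty fields
    val named = info.take(9)
    val other = info.drop(9).mkString(" ").trim
    (named, other)
  }

  def main(args: Array[String]): Unit = {
    val noLng = "e1,u1,2012-10-02,c,s,z,co,1.0," // lng is empty
    println(noLng.split(",").length)     // 8: the trailing empty field is dropped
    println(noLng.split(",", -1).length) // 9
    val (named, other) = parseEvent("e1,u1,t,c,s,z,co,1.0,2.0,x,y")
    println(named.mkString("|") + " / other=" + other)
  }
}
```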

```scala
package kafkatohbase

import org.apache.hadoop.hbase.client.Put
import org.apache.hadoop.hbase.util.Bytes
import org.apache.kafka.clients.consumer.ConsumerRecords

import scala.collection.JavaConverters._ // scala.jdk.CollectionConverters._ on Scala 2.13

trait TrainImpl extends DataHandler {
  override def transform(topic: String, records: ConsumerRecords[String, String]): Array[Put] = {
    val cf = Bytes.toBytes("base")
    records.records(topic).asScala.toArray
      .map { rec =>
        // user_id,event_id,invited,timestamp,interested,not_interested
        val info = rec.value().split(",", -1)
        (info(0), info(1), info(2), info(3), info(4), info(5))
      }
      // sort by the timestamp string so that, for a repeated user_id+event_id,
      // the later record comes later in the batch
      .sortBy(_._4)
      .map { case (userId, eventId, invited, ts, interested, notInterested) =>
        // row key = user_id + event_id
        val put = new Put(Bytes.toBytes(userId + eventId))
        put.addColumn(cf, Bytes.toBytes("user_id"), Bytes.toBytes(userId))
        put.addColumn(cf, Bytes.toBytes("event_id"), Bytes.toBytes(eventId))
        put.addColumn(cf, Bytes.toBytes("invited"), Bytes.toBytes(invited))
        put.addColumn(cf, Bytes.toBytes("timestamp"), Bytes.toBytes(ts))
        put.addColumn(cf, Bytes.toBytes("interested"), Bytes.toBytes(interested))
        put.addColumn(cf, Bytes.toBytes("not_interested"), Bytes.toBytes(notInterested))
        put
      }
  }
}
```
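`TrainImpl` sorts each batch by the timestamp string so that, for a repeated `user_id`+`event_id`, the later record comes later in the batch; keeping the newest value then relies on the order in which HBase applies the puts of one batch. A different approach, sketched below, is to keep only the newest row per key on the client before building any `Put`. It assumes all timestamps share one fixed format, so string order matches time order; `LatestPerKey` is a hypothetical helper, not part of the original code.

```scala
object LatestPerKey {
  // (user_id, event_id, invited, timestamp, interested, not_interested)
  type TrainRow = (String, String, String, String, String, String)

  // Keep the row with the largest timestamp string for each user_id+event_id.
  def latest(rows: Seq[TrainRow]): Seq[TrainRow] =
    rows
      .groupBy(r => (r._1, r._2))
      .values
      .map(_.maxBy(_._4))
      .toSeq
      .sortBy(r => (r._1, r._2)) // deterministic output order

  def main(args: Array[String]): Unit = {
    val rows = Seq(
      ("u1", "e1", "0", "2012-10-02 15:53:05", "0", "0"),
      ("u1", "e1", "0", "2012-10-03 10:00:00", "1", "0"),
      ("u2", "e1", "0", "2012-10-01 09:00:00", "0", "1")
    )
    latest(rows).foreach(println)
  }
}
```

Inside `TrainImpl`, a step like this would take the place of `sortBy(_._4)`.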

```scala
package kafkatohbase

import org.apache.hadoop.hbase.client.Put
import org.apache.hadoop.hbase.util.Bytes
import org.apache.kafka.clients.consumer.ConsumerRecords

import scala.collection.JavaConverters._ // scala.jdk.CollectionConverters._ on Scala 2.13

trait UserFriendImpl extends DataHandler {
  override def transform(topic: String, records: ConsumerRecords[String, String]): Array[Put] = {
    val cf = Bytes.toBytes("base")
    records.records(topic).asScala.map { rec =>
      val info = rec.value().split(",", -1)
      // row key = user_id + friend_id
      val put = new Put(Bytes.toBytes(info(0) + info(1)))
      put.addColumn(cf, Bytes.toBytes("user_id"), Bytes.toBytes(info(0)))
      put.addColumn(cf, Bytes.toBytes("friend_id"), Bytes.toBytes(info(1)))
      put
    }.toArray
  }
}
```

```scala
package kafkatohbase

import org.apache.hadoop.hbase.client.Put
import org.apache.hadoop.hbase.util.Bytes
import org.apache.kafka.clients.consumer.ConsumerRecords

import scala.collection.JavaConverters._ // scala.jdk.CollectionConverters._ on Scala 2.13

trait UserImpl extends DataHandler {
  // user_id,locale,birthyear,gender,joinedAt,location,timezone
  private val userColumns = Array("user_id", "locale", "birthyear", "gender",
    "joinedAt", "location", "timezone")

  override def transform(topic: String, records: ConsumerRecords[String, String]): Array[Put] = {
    val cf = Bytes.toBytes("base")
    records.records(topic).asScala.map { rec =>
      val info = rec.value().split(",", -1)
      // row key = user_id
      val put = new Put(Bytes.toBytes(info(0)))
      userColumns.zipWithIndex.foreach { case (col, i) =>
        put.addColumn(cf, Bytes.toBytes(col), Bytes.toBytes(info(i)))
      }
      put
    }.toArray
  }
}
```
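All five `*Impl` traits follow one pattern: split the line, build a row key from some leading fields, and write each field to `base:<column>`. The plain-Scala sketch below expresses that pattern as data instead of code. `TopicSpec` and `rowKeyAndCells` are hypothetical names, and the sketch returns `(column, value)` pairs rather than HBase `Put`s so it runs without an HBase dependency. Filling missing fields with an empty string is an assumption of this sketch; the original traits throw an exception instead.

```scala
// Column list and row-key field positions for one topic.
final case class TopicSpec(columns: Seq[String], keyFields: Seq[Int])

object TopicSpecs {
  val users = TopicSpec(
    Seq("user_id", "locale", "birthyear", "gender", "joinedAt", "location", "timezone"),
    keyFields = Seq(0))
  val friends = TopicSpec(Seq("user_id", "friend_id"), keyFields = Seq(0, 1))

  // Returns the row key and the (column, value) pairs for one CSV line.
  def rowKeyAndCells(spec: TopicSpec, line: String): (String, Seq[(String, String)]) = {
    val info = line.split(",", -1)
    // missing trailing fields become "" (assumption of this sketch)
    def field(i: Int): String = if (i < info.length) info(i) else ""
    val key = spec.keyFields.map(field).mkString
    val cells = spec.columns.indices.map(i => spec.columns(i) -> field(i))
    (key, cells)
  }

  def main(args: Array[String]): Unit = {
    println(rowKeyAndCells(friends, "u1,u2"))
    println(rowKeyAndCells(users, "1,en_US,1990,male"))
  }
}
```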

Next, implement the reading logic:

package kafkatohbase

import java.time.Duration

import java.util

import com.fenbi.mydh.streamhandler.Params

import org.apache.hadoop.hbase.{HBaseConfiguration, TableName}

import org.apache.hadoop.hbase.client.{ConnectionFactory, Put}

import org.apache.kafka.clients.consumer.{ConsumerRecord, ConsumerRecords, KafkaConsumer}

class ReadKafkaToHbase {

self:DataHandler=>

def job(ip:String,group:String,topic:String,hbaseTable:String)={

val hbaseconf=HBaseConfiguration.create()

hbaseconf.set("hbase.zookeeper.quorum",ip+":2181")

//读取kafka消息

val conn= ConnectionFactory.createConnection(hbaseconf)

val param=new Params

param.IP=ip+":9092"

param.GROUPID=group

val consumer=new KafkaConsumer[String,String](param.getReadKafkaParam)

consumer.subscribe(util.Arrays.asList(topic))

while (true){

val rds:ConsumerRecords[String,String]=consumer.poll(Duration.ofSeconds(5))

//转换数据获得Array[Put]

val puts:Array[Put]=transform(topic,rds)

//将scalaArray【put}转为java ArrayList

val lst=new util.ArrayList[Put]()

puts.foreach(p=>lst.add(p))

val table=conn.getTable(TableName.valueOf(hbaseTable))

//将数据发送到hbase

table.put(lst)

println("..........................................")

}

}

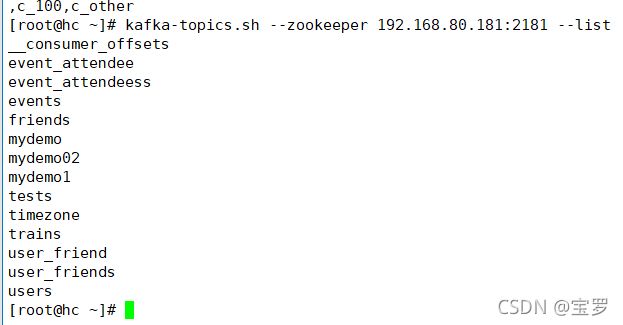

}注意我这里的5个文件已经读取到kafka中了

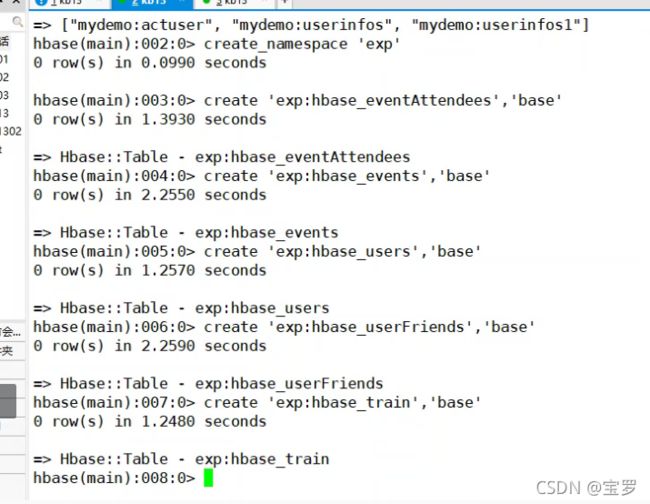
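`ReadKafkaToHbase` declares `self: DataHandler =>`, a self-type: the class may call `transform` without implementing it, and it can only be instantiated with a concrete handler mixed in, as in `new ReadKafkaToHbase() with EventsImpl`. The stripped-down sketch below shows the same pattern without Kafka or HBase; `Handler`, `Job`, `UpperImpl` and `ReverseImpl` are illustrative names only.

```scala
object SelfTypeDemo {
  // Plays the role of DataHandler
  trait Handler {
    def transform(line: String): String
  }

  // Plays the role of ReadKafkaToHbase: it uses transform but does not
  // define it. `new Job` on its own does not compile because of the self-type.
  class Job {
    self: Handler =>
    def run(lines: Seq[String]): Seq[String] = lines.map(transform)
  }

  // Two interchangeable implementations, like EventsImpl or UserImpl
  trait UpperImpl extends Handler {
    override def transform(line: String): String = line.toUpperCase
  }
  trait ReverseImpl extends Handler {
    override def transform(line: String): String = line.reverse
  }

  def main(args: Array[String]): Unit = {
    println((new Job with UpperImpl).run(Seq("abc", "kafka")))
    println((new Job with ReverseImpl).run(Seq("abc", "kafka")))
  }
}
```

Choosing the implementation at the `new` site is what lets a single `job` method serve all five topics.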

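Then start HBase. Hive is needed later as well, so start it at the same time.

Open the HBase shell with `hbase shell`.

First, create a namespace:

```
create_namespace 'exp'
```

Then create the five tables the jobs write to, each with the column family `base` (the table names are the ones passed to `job` in `Test1` below).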
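The `create` commands for the five tables are not listed here. Assuming each table needs only the `base` column family (the only family the `*Impl` traits write to) and uses the names passed to `job(...)` in `Test1`, this small Scala helper prints the HBase shell statements to paste into `hbase shell`; `CreateTables` is a hypothetical name.

```scala
object CreateTables {
  // Table names as passed to job(...) in Test1
  val tables = Seq("exp:hbase_eventAttendees", "exp:hbase_events", "exp:hbase_users",
    "exp:hbase_userFriend", "exp:hbase_train")

  // HBase shell syntax: create '<namespace>:<table>', '<column family>'
  def createStatement(table: String, family: String = "base"): String =
    s"create '$table', '$family'"

  def main(args: Array[String]): Unit =
    tables.map(createStatement(_)).foreach(println)
}
```

For example, `createStatement("exp:hbase_events")` returns `create 'exp:hbase_events', 'base'`.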

Then go back to IDEA and write a test object:

```scala
package kafkatohbase

object Test1 {
  def main(args: Array[String]): Unit = {
    // job() polls forever, so load one table at a time:
    // keep exactly one of the calls below uncommented.
    (new ReadKafkaToHbase() with EventsAttendeesImpl)
      .job("192.168.80.181", "ea08", "event_attendee", "exp:hbase_eventAttendees") //11245008

    // (new ReadKafkaToHbase() with EventsImpl)
    //   .job("192.168.80.181", "et05", "events", "exp:hbase_events") //3137972

    // (new ReadKafkaToHbase() with UserImpl)
    //   .job("192.168.80.181", "us01", "users", "exp:hbase_users") //38209

    // (new ReadKafkaToHbase() with UserFriendImpl)
    //   .job("192.168.80.181", "uff07", "friends", "exp:hbase_userFriend") //30386387

    // (new ReadKafkaToHbase() with TrainImpl)
    //   .job("192.168.80.181", "tr01", "trains", "exp:hbase_train") //15220
  }
}
```

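It is best to load one table at a time and check that its data is correct. HBase identifies rows by row key, so records with the same row key overwrite each other instead of creating duplicates.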
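HBase stores one row per row key, and a put on an existing row key overwrites the same columns (older cell versions aside), which is why replaying the same Kafka messages does not add rows. The in-memory model below illustrates this and deliberately ignores cell versions and timestamps. It also shows a side effect of the row keys built in these traits, which concatenate fields with no separator: `"1" + "23"` and `"12" + "3"` collapse into one row. `RowKeyOverwrite` is hypothetical.

```scala
object RowKeyOverwrite {
  type Row = Map[String, String] // column -> value

  // Apply (rowKey, columns) puts in order; a repeated row key merges into
  // and overwrites the existing row instead of adding a new one.
  def applyPuts(puts: Seq[(String, Row)]): Map[String, Row] =
    puts.foldLeft(Map.empty[String, Row]) { case (table, (key, cols)) =>
      table.updated(key, table.getOrElse(key, Map.empty[String, String]) ++ cols)
    }

  def main(args: Array[String]): Unit = {
    val batch = Seq(("u1", "u2"), ("u1", "u3")).map { case (u, f) =>
      (u + f, Map("user_id" -> u, "friend_id" -> f))
    }
    // reading the same messages twice still gives 2 rows
    println(applyPuts(batch ++ batch).size)
    // different (user_id, friend_id) pairs, same concatenated key
    val clash = Seq(("1" + "23", Map("user_id" -> "1")), ("12" + "3", Map("user_id" -> "12")))
    println(applyPuts(clash).size)
  }
}
```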

Once everything is loaded, open Zeppelin and create a notebook of your own. Mine is named hbasetest.

```sql
-- create the database and switch to it
create database hbasetest;
use hbasetest;

-- Table 1: mapped onto exp:hbase_events
create external table hbasetest.hbase_events(
  id string,
  event_id string,
  user_id string,
  start_time string,
  city string,
  state string,
  zip string,
  country string,
  lat string,
  lng string,
  other string
)
row format serde 'org.apache.hadoop.hive.hbase.HBaseSerDe'
stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
with serdeproperties(
  "hbase.columns.mapping"=":key,base:event_id,base:user_id,base:start_time,base:city,base:state,base:zip,base:country,base:lat,base:lng,base:other"
)
tblproperties(
  "hbase.table.name"="exp:hbase_events"
);

-- Table 2: mapped onto exp:hbase_eventAttendees
create external table hbasetest.hbase_eventAttendees(
  id string,
  user_id string,
  friend_id string,
  attend_type string
)
row format serde 'org.apache.hadoop.hive.hbase.HBaseSerDe'
stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
with serdeproperties(
  "hbase.columns.mapping"=":key,base:user_id,base:friend_id,base:attend_type"
)
tblproperties(
  "hbase.table.name"="exp:hbase_eventAttendees"
);

-- check that the HBase data is visible from Hive
select * from hbasetest.hbase_events limit 10;
```

If rows come back, the mapping works and you are done.
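Each Hive column needs exactly one entry in `hbase.columns.mapping`, in the same order, with the first column (`id`) taking `:key`; Hive's HBase SerDe refuses a mapping whose entry count differs from the column count. Only two of the five tables are mapped above. The hypothetical Scala helper below builds the mapping string from a column list, assuming the `base` family throughout; it reproduces both mapping strings above and could be reused for the other three tables.

```scala
object HiveMapping {
  // ":key" for the row key, then "<family>:<column>" for every other column.
  def columnsMapping(columns: Seq[String], family: String = "base"): String =
    (":key" +: columns.map(c => s"$family:$c")).mkString(",")

  def main(args: Array[String]): Unit = {
    println(columnsMapping(Seq("user_id", "friend_id", "attend_type")))
    // columns written by UserImpl, for an exp:hbase_users mapping
    println(columnsMapping(Seq("user_id", "locale", "birthyear", "gender",
      "joinedAt", "location", "timezone")))
  }
}
```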