深度之眼 (Deep Eye) PyTorch Framework Training Camp, Cohort 4: Learning Rate Scheduling Strategies in PyTorch

Table of Contents

- Learning Rate Scheduling Strategies
  - 1. Why the learning rate needs adjusting
  - 2. Six learning rate scheduling strategies in `Pytorch`
    - (1) Base class `_LRScheduler`
    - (2) Six learning rate schedulers
      - <1> `lr_scheduler.StepLR`
      - <2> `lr_scheduler.MultiStepLR`
      - <3> `lr_scheduler.ExponentialLR`
      - <4> `lr_scheduler.CosineAnnealingLR`
      - <5> `lr_scheduler.ReduceLROnPlateau`
      - <6> `LambdaLR`
  - 3. Summary

Learning Rate Scheduling Strategies

1. Why the Learning Rate Needs Adjusting

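In gradient descent, the update rule is

$$w_{i+1}=w_{i}-LR \times g\left(w_{i}\right)$$

where $g(w_i)$ is the gradient at $w_i$. The learning rate $LR$ controls the step size. If it is never adjusted, training may fail to reach the optimum ($LR$ too large) or converge slowly ($LR$ too small), so adjusting the learning rate during training is well worth doing. A common pattern is to start with a relatively large learning rate and reduce it gradually as training progresses. When to reduce it and by how much is exactly what a learning rate scheduling strategy decides.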
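To make the trade-off concrete, here is a small plain-Python sketch (not from the original course) that applies the update rule above to the toy function $f(w)=w^2$, whose gradient is $g(w)=2w$. The helper name `run_gd` and the three learning rates are illustrative assumptions. Each step multiplies $w$ by $(1 - 2 \cdot LR)$, so the step size alone decides whether $w$ explodes, crawls, or converges quickly.

```python
def run_gd(lr, w0=1.0, steps=20):
    """Apply w_{i+1} = w_i - lr * g(w_i) to f(w) = w**2, where g(w) = 2*w."""
    w = w0
    for _ in range(steps):
        grad = 2 * w          # g(w_i) for f(w) = w^2
        w = w - lr * grad     # gradient-descent update
    return w

# Each step multiplies w by (1 - 2*lr)
too_large = run_gd(lr=1.1)    # |1 - 2.2| = 1.2 > 1: |w| grows, no convergence
too_small = run_gd(lr=0.01)   # factor 0.98: w shrinks only slowly
moderate = run_gd(lr=0.4)     # factor 0.2: w shrinks quickly toward the optimum 0

print(f"lr=1.1  -> w = {too_large:.4f}")
print(f"lr=0.01 -> w = {too_small:.4f}")
print(f"lr=0.4  -> w = {moderate:.2e}")
```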

2. Six Learning Rate Scheduling Strategies in PyTorch

(1) Base class `_LRScheduler`

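The schedulers below build on the base class `_LRScheduler` (renamed `LRScheduler` in PyTorch 2.0; note that in PyTorch 1.x `ReduceLROnPlateau` is an exception and does not subclass it).

- Main attributes:
  - `optimizer`: the associated optimizer
  - `last_epoch`: the epoch counter
  - `base_lrs`: the initial learning rate of each parameter group
- Main methods:
  - `step()`: update the learning rate for the next epoch
  - `get_lr()`: virtual method that computes the learning rate for the next epoch; to read the learning rate currently in use, newer versions provide `get_last_lr()`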
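The interplay between `step()`, `last_epoch`, `base_lrs` and `get_lr()` can be sketched with a simplified, hypothetical re-implementation in plain Python. `ToyScheduler`, `ToyStepScheduler` and the dict-based `param_groups` are stand-ins invented for illustration; this is not PyTorch's actual source, which also handles checkpointing, warnings and other details.

```python
class ToyScheduler:
    """Hypothetical, simplified stand-in for the _LRScheduler base class."""

    def __init__(self, param_groups, last_epoch=-1):
        self.param_groups = param_groups                  # plays the role of optimizer.param_groups
        self.base_lrs = [g["lr"] for g in param_groups]   # remember the initial learning rates
        self.last_epoch = last_epoch
        self.step()                                       # one step on construction, so last_epoch becomes 0

    def get_lr(self):
        raise NotImplementedError                         # "virtual": each subclass supplies its own rule

    def step(self):
        self.last_epoch += 1                              # advance the epoch counter
        for group, lr in zip(self.param_groups, self.get_lr()):
            group["lr"] = lr                              # write the new LR back into the optimizer


class ToyStepScheduler(ToyScheduler):
    """StepLR-like rule: multiply by gamma every step_size epochs."""

    def __init__(self, param_groups, step_size, gamma=0.1, last_epoch=-1):
        self.step_size = step_size                        # set before super().__init__ calls step()
        self.gamma = gamma
        super().__init__(param_groups, last_epoch)

    def get_lr(self):
        factor = self.gamma ** (self.last_epoch // self.step_size)
        return [base_lr * factor for base_lr in self.base_lrs]


groups = [{"lr": 0.1}]
sched = ToyStepScheduler(groups, step_size=50)
for epoch in range(60):
    sched.step()
print(sched.last_epoch, groups[0]["lr"])
```

The design point the sketch mirrors: the base class owns the bookkeeping (counter, initial rates, writing into the optimizer), while each subclass only answers "what should the learning rate be at `last_epoch`?".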

(2) Six learning rate schedulers

<1> lr_scheduler.StepLR

```python
torch.optim.lr_scheduler.StepLR(optimizer,
                                step_size,
                                gamma=0.1,
                                last_epoch=-1)
```

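- Function: decay the learning rate at fixed intervals.
- Main parameters:
  - `step_size`: the interval between adjustments. With 30, the learning rate is set to $lr \cdot gamma$ at steps 30, 60, 90, ... (one step is one call to `scheduler.step()`, usually once per epoch).
  - `gamma`: the multiplicative factor (default 0.1, i.e. the learning rate drops by a factor of 10).
  - `last_epoch`: the index of the last epoch; the scheduler uses it to decide whether the learning rate should change. When `last_epoch` reaches a multiple of the interval, the learning rate is adjusted. With the default -1, the learning rate starts from its initial value.
- Update rule: $lr = lr \cdot gamma$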

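Example:

```python
import torch
import torch.optim as optim
import matplotlib.pyplot as plt

torch.manual_seed(1)

LR = 0.1
iteration = 10
max_epoch = 200

# Toy setup (assumed; not shown in the original snippet): one weight pulled toward 0
weights = torch.randn((1), requires_grad=True)
target = torch.zeros((1))
optimizer = optim.SGD([weights], lr=LR, momentum=0.9)

scheduler_lr = optim.lr_scheduler.StepLR(optimizer, step_size=50, gamma=0.1)  # set the LR decay policy

lr_list, epoch_list = list(), list()
for epoch in range(max_epoch):
    # get_last_lr() returns the LR currently in use; calling get_lr() here is
    # discouraged in newer PyTorch versions and can give wrong values at decay epochs
    lr_list.append(scheduler_lr.get_last_lr())
    epoch_list.append(epoch)

    for i in range(iteration):
        loss = torch.pow((weights - target), 2)
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()

    scheduler_lr.step()  # update the LR once per epoch

plt.plot(epoch_list, lr_list, label="Step LR Scheduler")
plt.xlabel("Epoch")
plt.ylabel("Learning rate")
plt.legend()
plt.show()
```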

As the resulting curve shows, every time the epoch reaches a multiple of 50, the learning rate $LR$ drops to 0.1× its previous value.
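Because the decay happens in discrete jumps, StepLR's learning rate at any epoch can also be written in closed form as $base\_lr \cdot gamma^{\lfloor epoch / step\_size \rfloor}$. The helper below (the name `step_lr` is hypothetical) is a plain-Python sketch of that formula using the example's values, handy for checking what the plot should show.

```python
def step_lr(epoch, base_lr=0.1, step_size=50, gamma=0.1):
    """Closed-form StepLR: gamma is applied once per completed step_size interval."""
    return base_lr * gamma ** (epoch // step_size)

for epoch in (0, 49, 50, 99, 100, 150):
    print(epoch, step_lr(epoch))
```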

<2> lr_scheduler.MultiStepLR

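- Function: adjust the learning rate at user-specified milestones rather than at fixed intervals. This suits later-stage tuning: after inspecting the loss curve, you can choose the adjustment points for each experiment.
- Main parameters:
  - `milestones`: a list of the epochs at which to adjust (must be increasing)
  - `gamma`: the multiplicative factor
  - `last_epoch`: the index of the last epoch, used to decide whether the learning rate should change
- Example: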

```python
import torch
import torch.optim as optim
import matplotlib.pyplot as plt

LR = 0.1
iteration = 10
max_epoch = 200

# Toy setup (assumed; not shown in the original snippet)
weights = torch.randn((1), requires_grad=True)
target = torch.zeros((1))
optimizer = optim.SGD([weights], lr=LR, momentum=0.9)

milestones = [50, 125, 160]
scheduler_lr = optim.lr_scheduler.MultiStepLR(optimizer, milestones=milestones, gamma=0.1)

lr_list, epoch_list = list(), list()
for epoch in range(max_epoch):
    lr_list.append(scheduler_lr.get_last_lr())  # LR currently in use
    epoch_list.append(epoch)

    for i in range(iteration):
        loss = torch.pow((weights - target), 2)
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()

    scheduler_lr.step()

plt.plot(epoch_list, lr_list, label="Multi Step LR Scheduler\nmilestones:{}".format(milestones))
plt.xlabel("Epoch")
plt.ylabel("Learning rate")
plt.legend()
plt.show()
```

Because the milestones are set to 50, 125 and 160, the plot shows the learning rate dropping to 0.1× its previous value at each of these epochs.

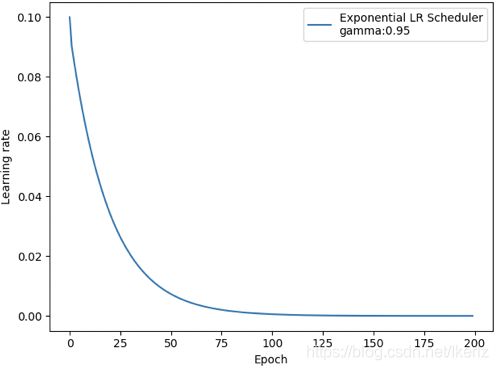
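The milestone behaviour also has a simple closed form: multiply the initial learning rate by `gamma` once for every milestone already reached. A plain-Python sketch with the example's milestones; `multistep_lr` is a hypothetical helper, and `bisect_right` counts how many milestones are less than or equal to the current epoch.

```python
from bisect import bisect_right

def multistep_lr(epoch, milestones=(50, 125, 160), base_lr=0.1, gamma=0.1):
    """Closed-form MultiStepLR: gamma is applied once per milestone already passed."""
    passed = bisect_right(sorted(milestones), epoch)  # number of milestones <= epoch
    return base_lr * gamma ** passed

for epoch in (0, 49, 50, 124, 125, 160, 199):
    print(epoch, multistep_lr(epoch))
```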

<3> lr_scheduler.ExponentialLR

```python
lr_scheduler.ExponentialLR(optimizer,
                           gamma,
                           last_epoch=-1)
```

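- Function: decay the learning rate exponentially.
- Main parameters:
  - `gamma`: the base of the exponent; the exponent is the epoch
- Update rule: $lr = base\_lr \cdot gamma^{epoch}$, which is the same as multiplying the learning rate by `gamma` once per epoch
- Example: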

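```python
import torch
import torch.optim as optim
import matplotlib.pyplot as plt

LR = 0.1
iteration = 10
max_epoch = 200

# Toy setup (assumed; not shown in the original snippet)
weights = torch.randn((1), requires_grad=True)
target = torch.zeros((1))
optimizer = optim.SGD([weights], lr=LR, momentum=0.9)

gamma = 0.95
scheduler_lr = optim.lr_scheduler.ExponentialLR(optimizer, gamma=gamma)

lr_list, epoch_list = list(), list()
for epoch in range(max_epoch):
    lr_list.append(scheduler_lr.get_last_lr())  # LR currently in use
    epoch_list.append(epoch)

    for i in range(iteration):
        loss = torch.pow((weights - target), 2)
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()

    scheduler_lr.step()

plt.plot(epoch_list, lr_list, label="Exponential LR Scheduler\ngamma:{}".format(gamma))
plt.xlabel("Epoch")
plt.ylabel("Learning rate")
plt.legend()
plt.show()
```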
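To check that multiplying by `gamma` every epoch and the closed form $base\_lr \cdot gamma^{epoch}$ describe the same curve, here is a plain-Python sketch using the example's `LR = 0.1` and `gamma = 0.95`. Both helper names are hypothetical; the recursive one mimics what happens on each `step()` when only this scheduler touches the learning rate.

```python
def exponential_lr_recursive(num_epochs, base_lr=0.1, gamma=0.95):
    """Multiply the LR by gamma once per epoch, recording the LR in use at each epoch."""
    lrs, lr = [], base_lr
    for _ in range(num_epochs):
        lrs.append(lr)
        lr *= gamma
    return lrs

def exponential_lr_closed(epoch, base_lr=0.1, gamma=0.95):
    """Closed form: base_lr * gamma ** epoch."""
    return base_lr * gamma ** epoch

recursive = exponential_lr_recursive(200)
closed = [exponential_lr_closed(e) for e in range(200)]
max_diff = max(abs(a - b) for a, b in zip(recursive, closed))
print(f"lr at epoch 50: {closed[50]:.6f}, max difference: {max_diff:.2e}")
```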

<4> lr_scheduler.CosineAnnealingLR

```python
lr_scheduler.CosineAnnealingLR(optimizer,
                               T_max,
                               eta_min=0,
                               last_epoch=-1)
```

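- Function: anneal the learning rate along a cosine curve. Because the cosine keeps going past its minimum, the learning rate periodically climbs back to its initial (maximum) value.
- Parameters:
  - `T_max`: the number of epochs for the learning rate to fall from its initial value to `eta_min`, i.e. half a cosine period; a full period (down and back up) therefore spans 2·`T_max` epochs
  - `eta_min`: the minimum learning rate reached within a period; defaults to 0
- Update rule:

  $$\eta_{t}=\eta_{\min}+\frac{1}{2}\left(\eta_{\max}-\eta_{\min}\right)\left(1+\cos\left(\frac{T_{cur}}{T_{\max}}\pi\right)\right)$$

  where $\eta_{\max}$ is the initial learning rate and $T_{cur}$ is the number of epochs elapsed (`last_epoch`).
- Example: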

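```python
import torch
import torch.optim as optim
import matplotlib.pyplot as plt

LR = 0.1
iteration = 10
max_epoch = 200

# Toy setup (assumed; not shown in the original snippet)
weights = torch.randn((1), requires_grad=True)
target = torch.zeros((1))
optimizer = optim.SGD([weights], lr=LR, momentum=0.9)

t_max = 50
scheduler_lr = optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=t_max, eta_min=0.)

lr_list, epoch_list = list(), list()
for epoch in range(max_epoch):
    lr_list.append(scheduler_lr.get_last_lr())  # LR currently in use
    epoch_list.append(epoch)

    for i in range(iteration):
        loss = torch.pow((weights - target), 2)
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()

    scheduler_lr.step()

plt.plot(epoch_list, lr_list, label="CosineAnnealingLR Scheduler\nT_max:{}".format(t_max))
plt.xlabel("Epoch")
plt.ylabel("Learning rate")
plt.legend()
plt.show()
```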
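Evaluating the update rule directly makes the period visible: the learning rate reaches `eta_min` at epoch `T_max` and returns to its initial value at 2·`T_max`. A plain-Python sketch with the example's values (`LR = 0.1`, `T_max = 50`, `eta_min = 0`); the helper name is hypothetical. PyTorch computes the curve with an equivalent recursive update, which matches this closed form as long as nothing else modifies the learning rate.

```python
import math

def cosine_annealing_lr(epoch, base_lr=0.1, eta_min=0.0, t_max=50):
    """eta_t = eta_min + 0.5 * (eta_max - eta_min) * (1 + cos(T_cur / T_max * pi)), with T_cur = epoch."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(epoch / t_max * math.pi))

for epoch in (0, 25, 50, 75, 100, 150):
    print(epoch, round(cosine_annealing_lr(epoch), 6))
```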

<5> lr_scheduler.ReduceLROnPlateau

```python
lr_scheduler.ReduceLROnPlateau(optimizer,
                               mode='min',
                               factor=0.1,
                               patience=10,
                               verbose=False,
                               threshold=0.0001,
                               threshold_mode='rel',
                               cooldown=0,
                               min_lr=0,
                               eps=1e-08)
```

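- Function: monitor a metric and reduce the learning rate when it stops improving (stops decreasing, or stops increasing). This is a very practical strategy: for example, reduce the learning rate when the validation loss no longer decreases, or monitor validation accuracy and reduce the learning rate when it no longer increases.
- Main parameters:
  - `mode`: `'min'` or `'max'`; in `'min'` mode the metric should decrease (e.g. a loss), in `'max'` mode it should increase (e.g. accuracy)
  - `factor`: the multiplicative factor, $new\_lr = lr \cdot factor$
  - `patience`: how many epochs without improvement to tolerate before reducing
  - `threshold` / `threshold_mode`: the minimum change that counts as an improvement, measured relative to the best value so far (`'rel'`) or as an absolute difference (`'abs'`)
  - `cooldown`: after a reduction, how many epochs to pause monitoring
  - `verbose`: whether to print a message on each reduction (deprecated in recent PyTorch releases)
  - `min_lr`: lower bound on the learning rate
  - `eps`: minimal decay applied to the learning rate; if the old and new learning rates differ by less than `eps`, the update is skipped
- Example: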

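```python
import torch
import torch.optim as optim

LR = 0.1
iteration = 10
max_epoch = 200

# Toy setup (assumed; not shown in the original snippet)
weights = torch.randn((1), requires_grad=True)
optimizer = optim.SGD([weights], lr=LR, momentum=0.9)

loss_value = 0.5
accuracy = 0.9  # with mode="max" you would pass an accuracy like this instead of a loss

factor = 0.1
mode = "min"
patience = 10
cooldown = 10
min_lr = 1e-4
verbose = True  # print a message on each reduction (may warn as deprecated in recent releases)

scheduler_lr = optim.lr_scheduler.ReduceLROnPlateau(optimizer, factor=factor, mode=mode, patience=patience,
                                                    cooldown=cooldown, min_lr=min_lr, verbose=verbose)

for epoch in range(max_epoch):
    for i in range(iteration):
        # train(...)
        optimizer.step()
        optimizer.zero_grad()

    # simulate a loss that improves once at epoch 5 and then plateaus
    if epoch == 5:
        loss_value = 0.4

    # unlike the other schedulers, step() takes the monitored metric
    scheduler_lr.step(loss_value)

# Printed output (exact formatting may vary by version):
# Epoch 17: reducing learning rate of group 0 to 1.0000e-02.
# Epoch 38: reducing learning rate of group 0 to 1.0000e-03.
# Epoch 59: reducing learning rate of group 0 to 1.0000e-04.
```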
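The epochs in that log follow from the patience and cooldown bookkeeping. The function below is a simplified, hypothetical re-implementation of that logic for `mode='min'` with the default relative threshold; it is not PyTorch's source, but with the example's settings it reproduces reductions at epochs 17, 38 and 59 (the scheduler's epoch counter starts at 1 on the first `step()` call, matching the log).

```python
def plateau_reductions(metrics, lr=0.1, factor=0.1, patience=10, cooldown=10,
                       min_lr=1e-4, threshold=1e-4, eps=1e-8):
    """Return (epoch, new_lr) for every reduction; assumes mode='min', threshold_mode='rel'."""
    best = float("inf")
    num_bad_epochs = 0
    cooldown_counter = 0
    events = []
    for epoch, current in enumerate(metrics, start=1):   # counter starts at 1, like the log
        if current < best * (1 - threshold):              # a meaningful relative improvement
            best = current
            num_bad_epochs = 0
        else:
            num_bad_epochs += 1
        if cooldown_counter > 0:                          # during cooldown, bad epochs are not counted
            cooldown_counter -= 1
            num_bad_epochs = 0
        if num_bad_epochs > patience:                     # patience exhausted: reduce the LR
            new_lr = max(lr * factor, min_lr)
            if lr - new_lr > eps:                         # skip negligible updates (e.g. already at min_lr)
                lr = new_lr
                events.append((epoch, lr))
            cooldown_counter = cooldown
            num_bad_epochs = 0
    return events

# Same simulated loss as the example: 0.5 for epochs 0-4, then 0.4 from epoch 5 on
losses = [0.5 if epoch < 5 else 0.4 for epoch in range(200)]
events = plateau_reductions(losses)
for epoch, lr in events:
    print(f"Epoch {epoch}: reducing learning rate to {lr:.4e}")
```

Reading the trace: the loss last improves on call 6, so the 11th non-improving call (more than `patience = 10`) triggers the first reduction at 17. Each reduction starts a 10-epoch cooldown followed by another 11 bad epochs, giving 38 and 59. After that the learning rate sits at `min_lr`, so further reductions are skipped.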

<6> LambdaLR

```python
torch.optim.lr_scheduler.LambdaLR(optimizer,
                                  lr_lambda,
                                  last_epoch=-1)
```

- Function: define a custom schedule.
- Main parameters:
  - `lr_lambda`: a function that computes a multiplicative factor for the learning rate, usually from the epoch (step) count; when there are multiple parameter groups, pass a list of functions, one per group
- Update rule: $lr = base\_lr \cdot lambda(self.last\_epoch)$
- Example:

```python
import torch
import torch.optim as optim
import matplotlib.pyplot as plt

LR = 0.1
iteration = 10
max_epoch = 200

lr_init = 0.1
weights_1 = torch.randn((6, 3, 5, 5))
weights_2 = torch.ones((5, 5))

# two parameter groups; each gets its own lambda below
optimizer = optim.SGD([
    {'params': [weights_1]},
    {'params': [weights_2]}], lr=lr_init)

lambda1 = lambda epoch: 0.1 ** (epoch // 20)  # step-style: x0.1 every 20 epochs
lambda2 = lambda epoch: 0.95 ** epoch         # exponential-style decay

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=[lambda1, lambda2])

lr_list, epoch_list = list(), list()
for epoch in range(max_epoch):
    for i in range(iteration):
        # train(...)
        optimizer.step()
        optimizer.zero_grad()

    scheduler.step()

    # get_last_lr() returns one learning rate per parameter group
    lr_list.append(scheduler.get_last_lr())
    epoch_list.append(epoch)
    print('epoch:{:5d}, lr:{}'.format(epoch, scheduler.get_last_lr()))

plt.plot(epoch_list, [i[0] for i in lr_list], label="lambda 1")
plt.plot(epoch_list, [i[1] for i in lr_list], label="lambda 2")
plt.xlabel("Epoch")
plt.ylabel("Learning Rate")
plt.title("LambdaLR")
plt.legend()
plt.show()
```
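Since LambdaLR simply multiplies each group's initial learning rate by whatever its function returns, the two curves can be previewed without an optimizer. A plain-Python sketch reusing `lambda1` and `lambda2` from the example; `lambda_lr` is a hypothetical helper. Note that the example records the learning rate after calling `scheduler.step()`, so its entry for loop epoch `e` corresponds to `last_epoch = e + 1`.

```python
lr_init = 0.1
lambda1 = lambda epoch: 0.1 ** (epoch // 20)   # divide by 10 every 20 epochs
lambda2 = lambda epoch: 0.95 ** epoch          # exponential decay

def lambda_lr(epoch, lr_lambda, base_lr=lr_init):
    """LambdaLR rule: lr = base_lr * lr_lambda(last_epoch)."""
    return base_lr * lr_lambda(epoch)

for epoch in (0, 1, 19, 20, 40):
    print(epoch, lambda_lr(epoch, lambda1), lambda_lr(epoch, lambda2))
```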

3. Summary

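PyTorch offers more schedulers than these (for example `CyclicLR` and `OneCycleLR`), but the six covered here fall into three categories: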

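- Ordered adjustment: the learning rate changes according to a fixed rule. This is the most commonly used category and includes step decay at equal intervals (Step), decay at user-chosen milestones (MultiStep), exponential decay (Exponential) and CosineAnnealing. With all four, the user controls exactly when the learning rate changes.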

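- Adaptive adjustment: the learning rate changes in response to how training is going, which is what ReduceLROnPlateau does. It monitors a metric, and when that metric stops changing appreciably, it takes this as the cue to adjust the learning rate; hence it is adaptive.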

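- Custom adjustment: LambdaLR provides a very flexible strategy. Different layers (parameter groups) can each get their own scheduling function, which is very useful for fine-tuning: you can give different layers not only different learning rates but also different learning rate schedules.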