Deploying Prometheus monitoring for the Flink Kubernetes Operator

Environment preparation

minikube

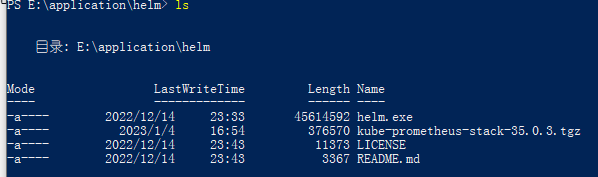

helm: download the release package for your platform directly from GitHub, which is faster.

Hands-on

Preparing the k8s cluster

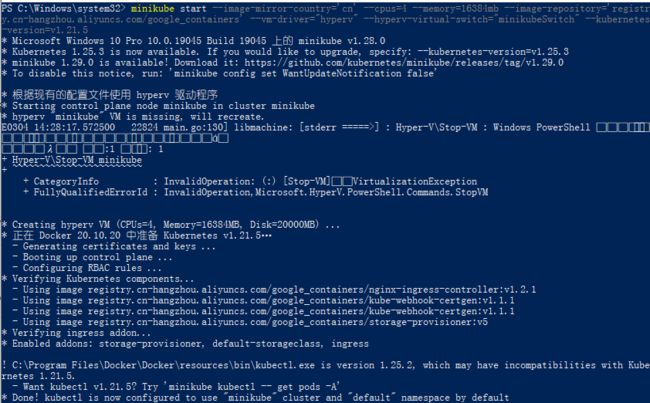

First, start a k8s cluster with the following command:

minikube start --image-mirror-country='cn' --cpus=4 --memory=16384mb --image-repository='registry.cn-hangzhou.aliyuncs.com/google_containers' --vm-driver="hyperv" --hyperv-virtual-switch="minikubeSwitch" --kubernetes-version=v1.21.5

As the figure above shows, the cluster was created successfully. Next, let's check the cluster's resources:
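The command used to inspect the cluster is not shown in the text. Below is a minimal sketch. It assumes `kubectl` is on PATH and that its current context is the minikube cluster, which `minikube start` normally switches to. The helper name `check_cluster` is hypothetical.

```shell
# Hypothetical helper: confirm the freshly started minikube cluster is healthy.
# Assumes kubectl's current context is the minikube cluster.
check_cluster() {
  minikube status                     # host, kubelet and apiserver should be Running
  kubectl get nodes -o wide           # the single node should be Ready
  kubectl get pods --all-namespaces   # kube-system pods should be Running
}
```

Run `check_cluster` once `minikube start` has returned.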

The cluster is ready. Now let's install the Flink Kubernetes Operator. The operator is installed with Helm, so Helm needs to be set up in advance, as follows:
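Helm is used here as a standalone binary downloaded from GitHub, and the install command later in this post calls it as `./helm`. It is worth checking that the binary runs before adding repositories. Below is a minimal sketch. `HELM` and `check_helm` are hypothetical names, and Helm 3 is assumed.

```shell
# Hypothetical helper: make sure the Helm binary works before using it.
# HELM defaults to ./helm (the binary unpacked from the GitHub release);
# set HELM=helm instead if Helm is on PATH.
HELM="${HELM:-./helm}"

check_helm() {
  "$HELM" version --short   # Helm 3 is assumed, e.g. v3.x.y
  "$HELM" repo list         # lists added repos; errors out if none are added yet
}
```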

First, add the repository to Helm:

helm repo add flink-operator-repo https://downloads.apache.org/flink/flink-kubernetes-operator-1.3.1/

Next, install it:

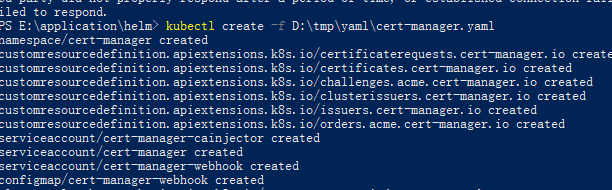

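First, install cert-manager, which the operator's webhook needs for its certificates. Because of network problems this file is hard to download from the cluster, so I downloaded it with a browser and installed it from the local copy. The file URL is:

https://github.com/jetstack/cert-manager/releases/download/v1.8.2/cert-manager.yaml

Next, install the Flink Kubernetes Operator: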
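The text does not show the command that installs cert-manager from the downloaded file. That step must complete before the operator install below. Below is a minimal sketch. It assumes the browser download was saved as `cert-manager.yaml` in the current directory (a hypothetical path) and uses the `cert-manager` namespace that this manifest creates. `install_cert_manager` is a hypothetical name.

```shell
# Hypothetical helper: install cert-manager v1.8.2 from the locally downloaded
# manifest, then block until its deployments are ready.
CERT_MANAGER_MANIFEST="${CERT_MANAGER_MANIFEST:-cert-manager.yaml}"

install_cert_manager() {
  kubectl create -f "$CERT_MANAGER_MANIFEST"
  # cert-manager, cert-manager-cainjector and cert-manager-webhook should be
  # Available before the operator chart tries to create its webhook certificate.
  kubectl -n cert-manager wait --for=condition=Available deployment --all --timeout=300s
}
```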

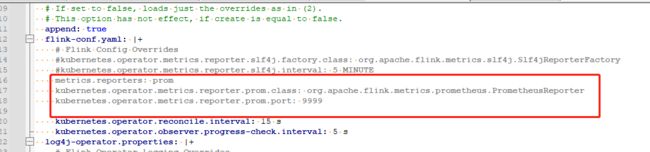

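./helm install flink-kubernetes-operator flink-operator-repo/flink-kubernetes-operator -f D:\tmp\yaml\operator-values.yaml

Note that I used a customized values file for the installation. Enabling Prometheus in Flink requires specifying the metrics port and the metrics reporter.

Also pay attention to the image version.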
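The customized `operator-values.yaml` itself is not shown. Below is a minimal sketch that writes one. The key names `image.repository`/`image.tag`, `metrics.port` and `defaultConfiguration.flink-conf.yaml` follow the flink-kubernetes-operator chart as we understand it, so verify them against the chart's own values.yaml for your version. The image tag and port 9999 are placeholders. The assumption is that `metrics.port` makes the chart add a container port named `metrics`, which is the port name the operator PodMonitor selects later in this post.

```shell
# Write a hypothetical operator-values.yaml enabling the Prometheus reporter
# for the operator's own metrics. Keys are assumptions to check against the
# chart's values.yaml; tag and port are placeholders.
cat > operator-values.yaml <<'EOF'
image:
  repository: ghcr.io/apache/flink-kubernetes-operator
  tag: "1.3.1"              # placeholder: use the tag matching your chart version

metrics:
  port: 9999                # assumed to become the container port named "metrics"

defaultConfiguration:
  create: true
  append: true              # append to, rather than replace, the chart defaults
  flink-conf.yaml: |+
    kubernetes.operator.metrics.reporter.prom.factory.class: org.apache.flink.metrics.prometheus.PrometheusReporterFactory
    kubernetes.operator.metrics.reporter.prom.port: 9999
EOF
```

Pass the resulting file to the install command with `-f`, as the `helm install` line above does.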

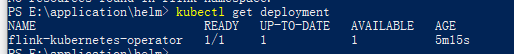

Check that the operator has been installed successfully.
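The verification commands appear only as a screenshot. Below is a minimal sketch. It assumes the release name `flink-kubernetes-operator` in the default namespace (as in the install command above), `./helm` as the binary, and the pod label `app.kubernetes.io/name=flink-kubernetes-operator` that the operator PodMonitor in this post also selects on. `check_operator` is a hypothetical name.

```shell
# Hypothetical helper: verify the operator release, pod and CRDs.
check_operator() {
  helm_bin="${HELM:-./helm}"
  "$helm_bin" list                  # STATUS of the release should read "deployed"
  kubectl get pods -l app.kubernetes.io/name=flink-kubernetes-operator
  kubectl get crd flinkdeployments.flink.apache.org flinksessionjobs.flink.apache.org
}
```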

Installing the Prometheus Operator

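First, clone the github.com/prometheus-operator/kube-prometheus project, because we need the deployment manifests it contains, and keep the manifests together in one directory. I used version 0.9. Next, we need to modify some of these manifests.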
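Below is a minimal sketch of fetching the manifests. It assumes the upstream branch for version 0.9 is named `release-0.9`. The files edited below, such as `prometheus-clusterRole.yaml`, live under its `manifests/` directory. `fetch_kube_prometheus` is a hypothetical name.

```shell
# Hypothetical helper: shallow-clone the kube-prometheus 0.9 manifests.
fetch_kube_prometheus() {
  git clone --depth 1 --branch release-0.9 \
    https://github.com/prometheus-operator/kube-prometheus.git
  # The ClusterRole edited in the next step:
  ls kube-prometheus/manifests/prometheus-clusterRole.yaml
}
```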

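Because the default ServiceAccount used by Prometheus needs to access resources in any namespace of the cluster, we have to grant it the corresponding cluster-wide permissions by modifying prometheus-clusterRole.yaml: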

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.29.1
  name: prometheus-k8s
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
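After applying the edited ClusterRole, `kubectl auth can-i` with `--as` impersonation can confirm that the Prometheus ServiceAccount is allowed to read pods in the namespace where Flink runs. Below is a minimal sketch. It assumes kube-prometheus' ServiceAccount `prometheus-k8s` in namespace `monitoring` and Flink workloads in `default`. Your own user must be allowed to impersonate, which the minikube admin user is. `check_prometheus_rbac` is a hypothetical name.

```shell
# Hypothetical helper: check what the Prometheus ServiceAccount may do in a
# namespace (default: "default"). Each check prints "yes" or "no".
check_prometheus_rbac() {
  ns="${1:-default}"
  sa="system:serviceaccount:monitoring:prometheus-k8s"
  for verb in get list watch; do
    for res in pods endpoints services; do
      printf '%s %s in %s: ' "$verb" "$res" "$ns"
      kubectl auth can-i "$verb" "$res" --namespace "$ns" --as "$sa"
    done
  done
}
```

Every line should print `yes` for the namespace holding the Flink pods. A `no` there means the PodMonitors below will not find any targets.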

Also, because we need to reach Prometheus and Grafana from outside the cluster, change their Services to type NodePort:

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 8.1.1
  name: grafana-service
  namespace: monitoring
spec:
  ports:
  - name: http
    port: 3000
    nodePort: 30001
    targetPort: http
  selector:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.29.1
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  ports:
  - name: web
    port: 9090
    nodePort: 30090
    targetPort: web
  selector:
    app: prometheus
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    prometheus: k8s
  sessionAffinity: ClientIP
  type: NodePort

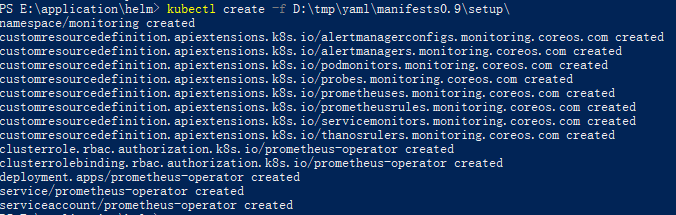

Next, deploy the Prometheus Operator.
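The deployment commands appear only as screenshots. Below is a minimal sketch following the quickstart in the kube-prometheus README for the 0.9 release. It creates the setup manifests (namespace, CRDs, operator) first, waits until the ServiceMonitor CRD is served, and then creates the rest of the stack. It assumes you run it from the root of the cloned and edited repository. `deploy_kube_prometheus` is a hypothetical name.

```shell
# Hypothetical helper following the kube-prometheus quickstart.
deploy_kube_prometheus() {
  kubectl create -f manifests/setup
  # The CRDs need a moment to become available before the rest can be created.
  until kubectl get servicemonitors --all-namespaces >/dev/null 2>&1; do
    echo "waiting for the ServiceMonitor CRD..."
    sleep 2
  done
  kubectl create -f manifests/
  kubectl -n monitoring get pods
}
```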

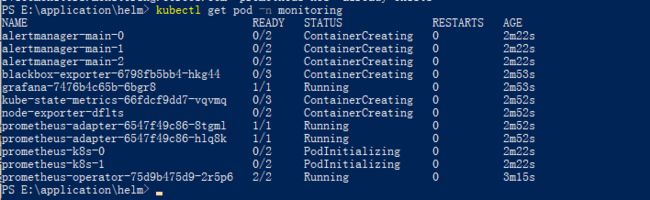

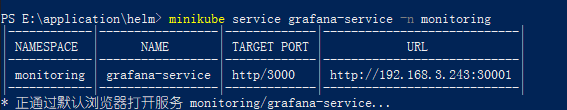

Check the Grafana and Prometheus Services to make sure they are working properly.
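Below is a minimal sketch for checking the Services and printing the URLs exposed through the NodePorts configured above (30001 for Grafana, 30090 for Prometheus). It assumes `minikube ip` returns an address reachable from your machine. `show_monitoring_urls` is a hypothetical name.

```shell
# Hypothetical helper: list the monitoring Services and print the NodePort URLs.
show_monitoring_urls() {
  kubectl -n monitoring get svc
  ip="$(minikube ip)"
  echo "Grafana:    http://${ip}:30001"
  echo "Prometheus: http://${ip}:30090"
}
```

kube-prometheus' Grafana starts with the default `admin`/`admin` login, and it asks you to change the password on first sign-in.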

Next, create a PodMonitor so that Prometheus collects the Flink Kubernetes Operator's metrics:

apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: flink-kubernetes-operator
  labels:
    release: prometheus
  namespace: monitoring
spec:
  namespaceSelector:
    matchNames:
    - default
  selector:
    matchLabels:
      app.kubernetes.io/name: flink-kubernetes-operator
  podMetricsEndpoints:
  - port: metrics

Next, create a job and monitor its metrics. The YAML file is as follows:

################################################################################
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
################################################################################

apiVersion: flink.apache.org/v1beta1
kind: FlinkDeployment
metadata:
  name: pod-template-example
  namespace: default
spec:
  image: flink:1.15
  flinkVersion: v1_15
  serviceAccount: flink
  ingress:
    template: "flink.k8s.io/{{namespace}}/{{name}}(/|$)(.*)"
    className: "nginx"
    annotations:
      nginx.ingress.kubernetes.io/rewrite-target: "/$2"
  flinkConfiguration:
    taskmanager.numberOfTaskSlots: "2"
    metrics.reporter.prom.class: org.apache.flink.metrics.prometheus.PrometheusReporter
    metrics.reporter.prom.port: 9249-9249
  podTemplate:
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-template
    spec:
      containers:
        # Do not change the main container name
        - name: flink-main-container
          volumeMounts:
            - mountPath: /opt/flink/log
              name: flink-logs
            - mountPath: /opt/flink/downloads
              name: downloads
          ports:
            - containerPort: 9249
              name: metric-1
              protocol: TCP
        # Sample sidecar container for log forwarding
        - name: fluentbit
          image: fluent/fluent-bit:1.9.6-debug
          command: [ 'sh','-c','/fluent-bit/bin/fluent-bit -i tail -p path=/flink-logs/*.log -p multiline.parser=java -o stdout' ]
          volumeMounts:
            - mountPath: /flink-logs
              name: flink-logs
      volumes:
        - name: flink-logs
          emptyDir: { }
        - name: downloads
          emptyDir: { }
  jobManager:
    resource:
      memory: "2048m"
      cpu: 0.5
    podTemplate:
      apiVersion: v1
      kind: Pod
      metadata:
        name: task-manager-pod-template
      spec:
        initContainers:
          # Sample init container for fetching remote artifacts
          - name: busybox
            image: busybox:latest
            volumeMounts:
              - mountPath: /opt/flink/downloads
                name: downloads
            command:
              - /bin/sh
              - -c
              - "wget -O /opt/flink/downloads/flink-examples-streaming.jar \
                https://repo1.maven.org/maven2/org/apache/flink/flink-examples-streaming_2.12/1.15.2/flink-examples-streaming_2.12-1.15.2.jar"
  taskManager:
    resource:
      memory: "2048m"
      cpu: 0.5
  job:
    jarURI: local:///opt/flink/downloads/flink-examples-streaming.jar
    entryClass: org.apache.flink.streaming.examples.statemachine.StateMachineExample
    parallelism: 2
---
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: flink-job-metrics
  labels:
    release: prometheus
  namespace: monitoring
spec:
  namespaceSelector:
    matchNames:
    - default
  selector:
    matchLabels:
      type: flink-native-kubernetes
  podMetricsEndpoints:
  - port: metric-1

The job's metrics are now being collected successfully.
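Below is a minimal sketch for confirming that the job is really being scraped. It lists the pods the PodMonitor selects, reads the reporter output straight from the JobManager's port 9249, and asks the Prometheus HTTP API how many `up` targets in `default` report 1. It assumes the FlinkDeployment `pod-template-example` above, the native-Kubernetes pod labels `app=<deployment name>` and `component=jobmanager`, and Prometheus on NodePort 30090. All function names are hypothetical.

```shell
# Pods matched by the PodMonitor selector type=flink-native-kubernetes.
show_flink_pods() {
  kubectl -n default get pods -l type=flink-native-kubernetes --show-labels
}

# Print a few reporter lines straight from the JobManager's port 9249.
peek_jobmanager_metrics() {
  pod="$(kubectl -n default get pods -l app=pod-template-example,component=jobmanager \
    -o jsonpath='{.items[0].metadata.name}')"
  kubectl -n default port-forward "$pod" 9249:9249 >/dev/null 2>&1 &
  pf_pid=$!
  sleep 3                                   # give port-forward time to bind
  curl -s http://localhost:9249/metrics | grep '^flink_' | head -n 5
  kill "$pf_pid"
}

# Count samples whose value is "1" in a Prometheus instant-query response (stdin).
count_up_targets() {
  grep -o ',"1"]' | wc -l | tr -d ' '
}

# Number of scrape targets in the default namespace that are currently up.
prom_up_default() {
  curl -sG "http://$(minikube ip):30090/api/v1/query" \
    --data-urlencode 'query=up{namespace="default"}' | count_up_targets
}
```

With the operator pod, the JobManager and the TaskManagers all scraped, `prom_up_default` should print a count that matches those pods.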

Now configure a dashboard in Grafana to view the metrics. For convenience, I imported a ready-made dashboard template.
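An imported template may use metric names that differ from what your setup actually exports. Listing the `flink_*` names Prometheus has ingested makes it easier to adapt panels. Below is a minimal sketch using the Prometheus `/api/v1/label/__name__/values` endpoint. It assumes Prometheus on NodePort 30090, and the function names are hypothetical.

```shell
# Extract the distinct flink_* metric names from a label-values JSON response (stdin).
extract_flink_names() {
  grep -o '"flink_[^"]*"' | tr -d '"' | sort -u
}

# List the flink_* metric names Prometheus currently knows about.
list_flink_metric_names() {
  curl -s "http://$(minikube ip):30090/api/v1/label/__name__/values" | extract_flink_names
}
```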

The metrics are now visible.

Summary

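When deploying the Prometheus Operator, deploy it from the manifests in the cloned repository. Installing it with Helm is hard to get working because of network issues.

The Prometheus Operator's ServiceAccount must be granted the corresponding permissions. Otherwise Prometheus cannot collect the metrics reported by the job or by the Flink Kubernetes Operator.

In the Flink Kubernetes Operator's values file, pay attention to the image version and make sure Prometheus reporting is enabled.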