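01 Kubernetes Binary Deployment

Kubernetes Binary Deployment Guide: Cluster Part

Table of Contents

- Kubernetes Binary Deployment Guide: Cluster Part
  - 1. System Planning
    - 1.1 Component Distribution
    - 1.2 Deployment Topology
    - 1.3 System Environment
  - 2. Initializing the System Environment
    - 2.1 Upgrade the Kernel
    - 2.2 Kernel Tuning
    - 2.3 Enable the IPVS Kernel Modules
    - 2.4 Install Common Tools
    - 2.5 Install the NTP Time Service
    - 2.6 Disable Swap
    - 2.7 Disable the Firewall and SELinux
    - 2.8 Set Up Passwordless SSH Between Cluster Nodes
  - 3. Deploying the Container Runtime
    - 3.1 Deploy Docker
    - 3.2 Check the Docker Environment
  - 4. Deploying Kubernetes
    - 4.1 Prepare the Cluster Root Certificate
      - 4.1.1 Install cfssl
      - 4.1.2 Create the JSON Config for the CA Certificate Signing Request (CSR)
      - 4.1.3 Generate the CA Certificate, Private Key, and CSR
      - 4.1.4 Create the Signing Config Based on the Root Certificate
      - 4.1.6 Create the metrics-server Certificate
        - 4.1.6.1 Create the CSR Used by metrics-server
        - 4.1.6.2 Generate the metrics-server Certificate and Private Key
        - 4.1.6.3 Distribute the Certificates
      - 4.1.7 Create the Node kubeconfig Files
        - 4.1.7.1 Create the TLS Bootstrapping Token, kubelet kubeconfig, and kube-proxy kubeconfig
        - 4.1.7.2 Generate the Token and kubeconfig Files
        - 4.1.7.3 Distribute the kubeconfig Files
    - 4.2 Deploy the etcd Cluster
      - 4.2.1 Basic Setup
      - 4.2.2 Distribute the Certificates
      - 4.2.3 Write the etcd Configuration Script etcd-server-startup.sh
      - 4.2.4 Run etcd-server-startup.sh to Generate the Configuration
      - 4.2.4 Check etcd01
      - 4.2.5 Distribute etcd-server-startup.sh to k8smaster02 and k8smaster03
      - 4.2.6 Start etcd02 and Check Its Status
      - 4.2.7 Start etcd03 and Check Its Status
      - 4.2.8 Check the etcd Cluster Status
    - 4.3 Deploy the API Server
      - 4.3.1 Download and Extract the Kubernetes Binary Package
      - 4.3.4 Configure and Run the Master Components
        - 4.3.4.1 Create the kube-apiserver Configuration Script
        - 4.3.4.2 Create the kube-controller-manager Configuration Script
        - 4.3.4.3 Create the kube-scheduler Configuration Script
      - 4.3.5 Copy the Executables
      - 4.3.7 Set Permissions and Distribute to k8smaster02 and k8smaster03
      - 4.3.8 Run the Configuration Scripts
        - 4.3.8.1 Run on k8smaster01
        - 4.3.8.2 Run on k8smaster02
        - 4.3.8.3 Run on k8smaster03
      - 4.3.9 Verify Cluster Health
    - 4.4 Deploy kubelet
      - 4.4.1 Configure Automatic kubelet Certificate Renewal and Create the Node Authorization User
        - 4.4.1.1 Create the `Node` Authorization User `kubelet-bootstrap`
        - 4.4.1.2 Create ClusterRoles That Auto-Approve the Relevant CSRs
        - 4.4.1.3 Auto-Approve the First CSR Submitted by the kubelet-bootstrap User During TLS Bootstrapping
        - 4.4.1.4 Auto-Approve CSRs from the system:nodes Group Renewing the kubelet Client Certificate Used to Talk to the API Server
        - 4.4.1.5 Auto-Approve CSRs from the system:nodes Group Renewing the Certificate for the kubelet API Port 10250
      - 4.4.2 Prepare the Base Image on Each Node
      - 4.4.3 Create the `kubelet` Configuration Script
      - 4.4.4 Enable `kubelet` on the Master Nodes
      - 4.4.5 Enable `kubelet` on the Worker Nodes
    - 4.5 Deploy kube-proxy
      - 4.5.6 Create the `kube-proxy` Configuration Script
      - 4.5.7 Start `kube-proxy` on the Master Nodes
      - 4.5.8 Start `kube-proxy` on the Worker Nodes
    - 4.6 Check the Status
    - 4.7 Other Settings
      - 4.7.1 Add a Taint to the Master Nodes
      - 4.7.2 Command Completion
  - 5. Deploying the Network Components
    - 5.1 Deploy Calico in BGP Mode
      - 5.1.1 Modify Parts of the Configuration
      - 5.1.2 Apply the Calico Manifest
    - 5.2 Deploy CoreDNS
      - 5.2.1 Edit the CoreDNS Configuration
      - 5.2.2 Apply the CoreDNS Deployment
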
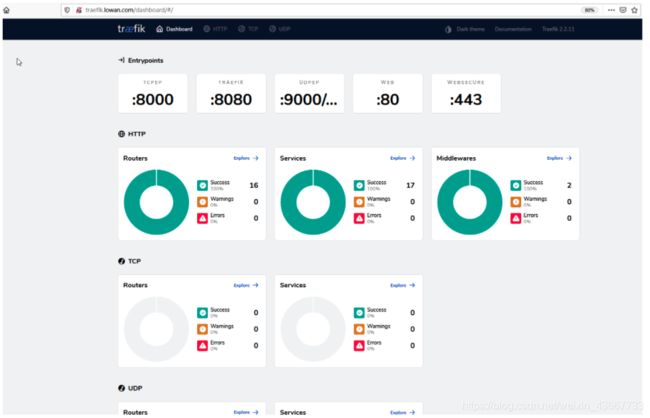

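  - 6. Deploying Traefik
    - 6.1 Edit the Traefik Resource Files
      - 6.1.1 Edit the Service
      - 6.1.2 Edit the RBAC Rules
      - 6.1.3 Edit ingress-traefik
      - 6.1.4 Edit the Deployment
    - 6.2 Apply the Configuration Files
    - 6.3 Check the Status
    - 6.4 Add DNS Records and the Reverse Proxy
    - 6.6 Test the Traefik Dashboard
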
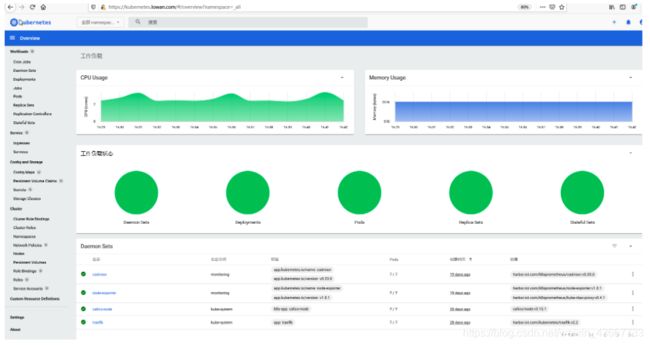

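  - 7. Deploying the Kubernetes Dashboard
    - 7.1 Download the Dashboard YAML File
    - 7.2 Create the Dashboard Namespace and Storage
    - 7.3 Apply the recommended Manifest
    - 7.4 Create Authentication
      - 7.4.1 Create the Login Credentials and Get the Token
      - 7.4.2 Create a Self-Signed Certificate
      - 7.4.3 Create a Secret That References the Certificate Files
      - 7.4.4 Create the Dashboard IngressRoute
      - 7.4.5 Configure the Nginx Reverse Proxy
        - 7.4.5.1 Distribute the pem and key Files to Nginx
        - 7.4.5.2 Modify nginx.conf
    - 7.5 Configure DNS Resolution
    - 7.6 Test Logging In
    - 7.7 Configure Dashboard Access Control
      - 7.7.1 Create the ServiceAccount, ClusterRole, and ClusterRoleBinding
  - Further references: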

Author: MappleZF

Version: 1.0.0

1. System Planning

1.1 Component Distribution

| Hostname | IP | Components |
|---|---|---|
| lbvip.host.com | 192.168.13.100 | Virtual IP (VIP), provided by PCS + HAProxy |
| k8smaster01.host.com | 192.168.13.101 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy, docker, calico, IPVS; Ceph: mon, mgr, mds, osd |
| k8smaster02.host.com | 192.168.13.102 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy, docker, calico, IPVS; Ceph: mon, mgr, mds, osd |
| k8smaster03.host.com | 192.168.13.103 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy, docker, calico, IPVS; Ceph: mon, mgr, mds |
| k8sworker01.host.com | 192.168.13.105 | kubelet, kube-proxy, docker, calico, IPVS; Ceph: osd |
| k8sworker02.host.com | 192.168.13.106 | kubelet, kube-proxy, docker, calico, IPVS; Ceph: osd |
| k8sworker03.host.com | 192.168.13.107 | kubelet, kube-proxy, docker, calico, IPVS; Ceph: osd |
| k8sworker04.host.com | 192.168.13.108 | kubelet, kube-proxy, docker, calico, IPVS; Ceph: osd |
| k8sworker05.host.com | 192.168.13.109 | kubelet, kube-proxy, docker, calico, IPVS; Ceph: osd |
| lb01.host.com | 192.168.13.97 | NFS, PCS, pacemaker, corosync, HAProxy, nginx |
| lb02.host.com | 192.168.13.98 | harbor, PCS, pacemaker, corosync, HAProxy, nginx |
| lb03.host.com | 192.168.13.99 | DNS, PCS, pacemaker, corosync, HAProxy, nginx |
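Later steps address these machines by hostname (for example `ssh-copy-id k8smaster01` and `scp ... k8smaster02:`), so every host must be able to resolve the names. If the DNS service on lb03 does not serve them yet, a minimal sketch like the one below can append the entries to a hosts file. It assumes you want both the FQDN and the short name on each line; the function name `add_host_entries` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP FQDN shortname" lines for the hosts in the
# component table to a hosts file, skipping lines that are already present.
add_host_entries() {
  local hosts_file="$1"
  local entries=(
    "192.168.13.100 lbvip.host.com"
    "192.168.13.101 k8smaster01.host.com"
    "192.168.13.102 k8smaster02.host.com"
    "192.168.13.103 k8smaster03.host.com"
    "192.168.13.105 k8sworker01.host.com"
    "192.168.13.106 k8sworker02.host.com"
    "192.168.13.107 k8sworker03.host.com"
    "192.168.13.108 k8sworker04.host.com"
    "192.168.13.109 k8sworker05.host.com"
    "192.168.13.97 lb01.host.com"
    "192.168.13.98 lb02.host.com"
    "192.168.13.99 lb03.host.com"
  )
  local entry ip fqdn line
  for entry in "${entries[@]}"; do
    ip=${entry%% *}                  # text before the first space
    fqdn=${entry#* }                 # text after the first space
    line="$ip $fqdn ${fqdn%%.*}"     # also add the short hostname
    # -x: match the whole line, -F: fixed string; append only when missing
    grep -qxF "$line" "$hosts_file" 2>/dev/null || echo "$line" >> "$hosts_file"
  done
}
```

Run `add_host_entries /etc/hosts` as root on each host; because existing lines are skipped, running it a second time adds nothing.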

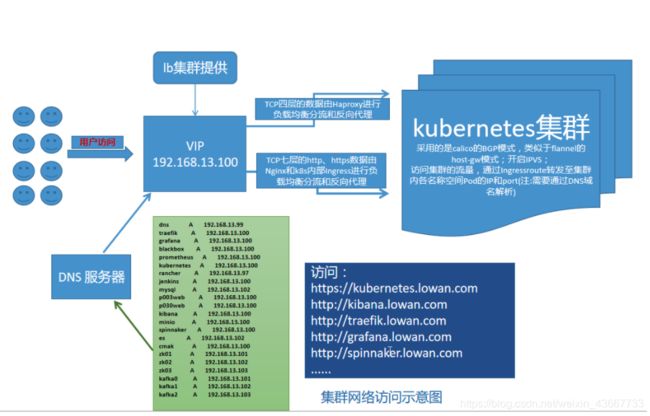

1.2 Deployment Topology

Cluster network access diagram

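1.3 System Environment

Base OS: CentOS Linux release 7.8.2003 (Core), kernel 5.7.10-1.el7.elrepo.x86_64

Kubernetes version: v1.19.0

Backend storage: Ceph cluster + NFS (hybrid)

Network links: Kubernetes service network + Ceph storage data network

Network: Calico in BGP mode (similar to flannel's host-gw mode), plus IPVS

Load balancing and reverse proxying:

HAProxy handles layer-4 TCP load balancing and reverse proxying for traffic coming from outside the Kubernetes cluster;

Nginx handles layer-7 HTTP and HTTPS load balancing and reverse proxying for traffic coming from outside the Kubernetes cluster;

Traefik handles load balancing and reverse proxying for traffic inside the Kubernetes cluster.

Note: under very heavy traffic, consider placing an LVS load-balancing cluster or a hardware load balancer in front of HAProxy to distribute traffic to other Kubernetes clusters.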

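2. Initializing the System Environment

Run the following initialization on all hosts; the steps are essentially the same on each.

2.1 Upgrade the Kernel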

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum makecache && yum update -y

rpm -Uvh https://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

yum --enablerepo=elrepo-kernel install kernel-ml -y

grubby --default-kernel

grub2-set-default 0

grub2-mkconfig -o /boot/grub2/grub.cfg

awk -F\' '$1=="menuentry " {print i++ " : " $2}' /boot/grub2/grub.cfg

reboot

yum update -y && yum makecache

Note: if the kernel upgrade fails, disable Secure Boot in the BIOS.

2.2 Kernel Tuning

cat >> /etc/sysctl.d/k8s.conf << EOF

net.ipv4.tcp_fin_timeout = 10

net.ipv4.ip_forward = 1

net.ipv4.tcp_tw_reuse = 1

# net.ipv4.tcp_tw_recycle was removed in Linux 4.12; this guide runs a 5.x kernel, so the key is omitted

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_timestamps = 1

net.ipv4.tcp_keepalive_time = 600

net.ipv4.ip_local_port_range = 4000 65000

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_max_tw_buckets = 1048576

net.ipv4.route.gc_timeout = 100

net.ipv4.tcp_syn_retries = 1

net.ipv4.tcp_synack_retries = 1

net.core.somaxconn = 32768

net.core.netdev_max_backlog = 16384

net.core.rmem_default=262144

net.core.wmem_default=262144

net.core.rmem_max=16777216

net.core.wmem_max=16777216

net.core.optmem_max=16777216

net.netfilter.nf_conntrack_max=2097152

net.nf_conntrack_max=2097152

net.netfilter.nf_conntrack_tcp_timeout_fin_wait=30

net.netfilter.nf_conntrack_tcp_timeout_time_wait=30

net.netfilter.nf_conntrack_tcp_timeout_close_wait=15

net.netfilter.nf_conntrack_tcp_timeout_established=300

net.ipv4.tcp_max_orphans = 524288

fs.file-max=2097152

fs.nr_open=2097152

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

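# The net.bridge.* keys only exist once br_netfilter is loaded, and the conntrack keys only once nf_conntrack is loaded

modprobe br_netfilter

modprobe nf_conntrack

sysctl -p /etc/sysctl.d/k8s.conf

sysctl --system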

cat >> /etc/systemd/system.conf << EOF

DefaultLimitNOFILE=1048576

DefaultLimitNPROC=1048576

EOF

cat /etc/systemd/system.conf

cat >> /etc/security/limits.conf << EOF

* soft nofile 1048576

* hard nofile 1048576

* soft nproc 1048576

* hard nproc 1048576

EOF

cat /etc/security/limits.conf

reboot

After the reboot, it is best to check again that the settings took effect.
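When a key in the file does not exist in the running kernel, `sysctl` prints a "cannot stat /proc/sys/..." error for it (net.ipv4.tcp_tw_recycle, for example, was removed in Linux 4.12, and the net.bridge.* keys appear only after br_netfilter is loaded). Each sysctl key maps to a path under /proc/sys with the dots replaced by slashes, so a minimal sketch can list unsupported keys before the file is applied. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking a sysctl.d file against the running kernel.

# Convert a sysctl key to its /proc/sys path,
# e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
sysctl_key_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Print every key in a sysctl file that the running kernel does not expose.
check_sysctl_file() {
  local file="$1" line key
  while IFS= read -r line || [ -n "$line" ]; do
    line="${line%%#*}"                 # drop comments
    [[ "$line" == *=* ]] || continue   # skip blank or malformed lines
    key="${line%%=*}"                  # text before the first '='
    key="${key//[[:space:]]/}"         # strip spaces around the key
    [ -e "$(sysctl_key_path "$key")" ] || echo "unsupported: $key"
  done < "$file"
}
```

For example, `check_sysctl_file /etc/sysctl.d/k8s.conf` prints nothing when every key is available.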

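2.3 Enable the IPVS Kernel Modules

# Create the module-loading script /etc/sysconfig/modules/ipvs.modules, which lists the kernel modules to load automatically. Its contents are as follows:

Enable this on every node.

# Create the script

vim /etc/sysconfig/modules/ipvs.modules

# Script contents: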

#!/bin/bash

ipvs_modules_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"

for i in $(ls $ipvs_modules_dir | grep -o "^[^.]*");

do

/sbin/modinfo -F filename $i &> /dev/null

if [ $? -eq 0 ]; then

/sbin/modprobe $i

fi

done

# Make the script executable and load the modules into the running system:

chmod +x /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules

# Check that the modules are loaded

lsmod | grep ip_vs
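kube-proxy's IPVS mode is commonly documented as needing ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh and nf_conntrack (on kernels 4.19 and later nf_conntrack replaces nf_conntrack_ipv4). Instead of reading the `lsmod` output by eye, a small sketch can report which of these are missing. The module list is an assumption based on that common requirement, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `lsmod` output on stdin and print each required
# module that is not loaded. The module list below is an assumption.
missing_ipvs_modules() {
  local required=(ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack)
  local loaded mod
  # The first column of lsmod output is the module name; skip the header line
  loaded=$(awk 'NR > 1 { print $1 }')
  for mod in "${required[@]}"; do
    grep -qx "$mod" <<< "$loaded" || echo "$mod"
  done
}
```

Usage: `lsmod | missing_ipvs_modules` prints nothing when all required modules are loaded.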

2.4 Install Common Tools

yum install -y nfs-utils curl yum-utils device-mapper-persistent-data lvm2 net-tools

yum install -y conntrack-tools wget sysstat vim-enhanced bash-completion psmisc traceroute iproute* tree

yum install -y libseccomp libtool-ltdl ipvsadm ipset

2.5 Install the NTP Time Service

vim /etc/chrony.conf

Change the server entries to:

server 192.168.20.4 iburst

server time4.aliyun.com iburst

systemctl enable chronyd

systemctl start chronyd

chronyc sources

2.6 Disable Swap

swapoff -a

Then edit /etc/fstab (for example with vim) and comment out the swap line, so that swap stays off after a reboot.
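`swapoff -a` only lasts until the next reboot, which is why the fstab entry must be commented out too. A non-interactive alternative to editing with vim is sketched below. It assumes a swap entry is any uncommented line whose third field (the filesystem type) is `swap`; the function name is hypothetical, and a `.bak` copy of the original file is kept.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# A line is treated as swap when its third field (filesystem type) is "swap".
comment_out_swap() {
  local fstab="$1"
  cp -p "$fstab" "$fstab.bak"     # keep a backup of the original
  # Rewrite the original file in place (same inode), reading from the backup
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Usage: `comment_out_swap /etc/fstab`, then `grep swap /etc/fstab` to confirm the entry now starts with `#`.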

2.7 Disable the Firewall and SELinux

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

2.8 Set Up Passwordless SSH Between Cluster Nodes

ssh-keygen

ssh-copy-id -i ~/.ssh/id_rsa.pub k8smaster01

... (remaining hosts omitted)
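The remaining hosts follow the same pattern, so a loop over the Kubernetes node names from the table in 1.1 saves typing. This sketch assumes the short names resolve (for example through /etc/hosts) and that each host accepts a root password once; setting `DRY_RUN=1` only prints the commands. The function name and the `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the local public key to every Kubernetes node.
# With DRY_RUN=1 the ssh-copy-id commands are printed instead of executed.
distribute_ssh_key() {
  local hosts=(k8smaster01 k8smaster02 k8smaster03
               k8sworker01 k8sworker02 k8sworker03 k8sworker04 k8sworker05)
  local pubkey="${HOME}/.ssh/id_rsa.pub" host
  for host in "${hosts[@]}"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh-copy-id -i $pubkey $host"
    else
      ssh-copy-id -i "$pubkey" "$host"
    fi
  done
}
```

Run `DRY_RUN=1 distribute_ssh_key` first to review the list, then `distribute_ssh_key` on each node after `ssh-keygen` has created the key pair.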

3. Deploying the Container Runtime

3.1 Deploy Docker

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

yum -y install docker-ce

mkdir -p /data/docker /etc/docker

Edit /usr/lib/systemd/system/docker.service and add the following line right after the line "ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock":

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

cat > /etc/docker/daemon.json <<EOF
{
"data-root": "/data/docker",
"storage-driver": "overlay2",
"registry-mirrors": ["https://kk0rm6dj.mirror.aliyuncs.com","https://registry.docker-cn.com"],
"insecure-registries": ["harbor.iot.com"],
"bip": "10.244.101.1/24",
"exec-opts": ["native.cgroupdriver=systemd"],
"live-restore": true
}
EOF

Note: "data-root" is the current name of the deprecated "graph" option; both set Docker's root directory, here /data/docker.

Note: for the Alibaba Cloud registry mirror (https://kk0rm6dj.mirror.aliyuncs.com above), it is best to apply for your own accelerator address; it is free.

systemctl enable docker

systemctl daemon-reload

systemctl restart docker

Note: change the bip address according to each host's IP.
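In the daemon.json above, k8smaster01 (192.168.13.101) uses `"bip": "10.244.101.1/24"`, so the third octet of the docker0 subnet matches the last octet of the host IP. Assuming that is the convention the note refers to, a sketch that derives the value for any host looks like this (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the docker0 bridge address ("bip") from the host IP,
# following the 192.168.13.101 -> 10.244.101.1/24 pattern used in daemon.json.
bip_for_ip() {
  local ip="$1"
  local last_octet="${ip##*.}"     # text after the last dot
  printf '10.244.%s.1/24\n' "$last_octet"
}
```

For example, `bip_for_ip 192.168.13.105` gives `10.244.105.1/24` for k8sworker01. Deriving the value from the IP keeps each host's bridge subnet unique without a separate lookup table.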

3.2 Check the Docker Environment

[root@k8smaster01:/root]# docker info

Client:

Debug Mode: false

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 19.03.12

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: systemd

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 7ad184331fa3e55e52b890ea95e65ba581ae3429

runc version: dc9208a3303feef5b3839f4323d9beb36df0a9dd

init version: fec3683

Security Options:

seccomp

Profile: default

Kernel Version: 5.8.6-2.el7.elrepo.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 20

Total Memory: 30.84GiB

Name: k8smaster01.host.com

ID: FZAG:XAY6:AYKI:7SUR:62AP:ANDH:JM33:RG5O:Q2XJ:NHGJ:ROAO:6LEM

Docker Root Dir: /data/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

harbor.iot.com

registry.lowaniot.com

127.0.0.0/8

Registry Mirrors:

https://*********.mirror.aliyuncs.com/

https://registry.docker-cn.com/

Live Restore Enabled: true

4. Deploying Kubernetes

4.1 Prepare the Cluster Root Certificate

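Certificates used by each Kubernetes component:

etcd: ca.pem, etcd-key.pem, etcd.pem (etcd uses the same set of certificates for serving clients and for peer communication between members);

kube-apiserver: ca.pem, ca-key.pem, kube-apiserver-key.pem, kube-apiserver.pem;

kubelet: ca.pem, ca-key.pem;

kube-proxy: ca.pem, kube-proxy-key.pem, kube-proxy.pem;

kubectl: ca.pem, admin-key.pem, admin.pem;

kube-controller-manager: ca-key.pem, ca.pem, kube-controller-manager.pem, kube-controller-manager-key.pem;

kube-scheduler: ca-key.pem, ca.pem, kube-scheduler-key.pem, kube-scheduler.pem;

Note: in this deployment, etcd actually uses server.pem and server-key.pem (see etcd.yml in 4.2.3) instead of separate etcd certificates.

Install the certificate tool cfssl and generate the certificates.

About the cfssl tools:

cfssl: the main tool for issuing certificates

cfssl-json: turns the JSON output of cfssl into certificate files (upstream it is called cfssljson; it is renamed to cfssl-json below)

cfssl-certinfo: shows certificate information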

4.1.1 Install cfssl

// Create a directory for the SSL certificates

[root@k8smaster01:/opt/src]# mkdir /data/ssl -p

// Download the certificate tools

[root@k8smaster01:/opt/src]# wget -c https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@k8smaster01:/opt/src]# wget -c https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@k8smaster01:/opt/src]# wget -c https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

// Make them executable and list them

[root@k8smaster01:/opt/src]# chmod +x cfssl-certinfo_linux-amd64 cfssljson_linux-amd64 cfssl_linux-amd64

[root@k8smaster01:/opt/src]# ll

total 18808

-rwxr-xr-x. 1 root root 6595195 Mar 30 2016 cfssl-certinfo_linux-amd64

-rwxr-xr-x. 1 root root 2277873 Mar 30 2016 cfssljson_linux-amd64

-rwxr-xr-x. 1 root root 10376657 Mar 30 2016 cfssl_linux-amd64

// Move them to /usr/local/bin

[root@k8smaster01:/opt/src]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@k8smaster01:/opt/src]# mv cfssljson_linux-amd64 /usr/local/bin/cfssl-json

[root@k8smaster01:/opt/src]# mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

// Go to the certificate directory

[root@k8smaster01:/opt/src]# cd /data/ssl/

PS: to inspect a certificate, run cfssl-certinfo -cert <certificate file>

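4.1.2 Create the JSON Config for the CA Certificate Signing Request (CSR)

A self-signed PKI has a root CA certificate (it can be issued by a trusted authority or self-signed). The CSR fields:

CN (Common Name): browsers use this field to verify whether a website is legitimate, so it usually holds a domain name and is very important. For Kubernetes client certificates, kube-apiserver takes the CN as the user name and O as the group.

C: country

ST: state/province

L: locality/city

O: organization/company name

OU: organizational unit, e.g. a department

[root@k8smaster01:/data/ssl]#

cat > /data/ssl/ca-csr.json <<EOF
{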

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

],

"ca": {

"expiry": "175200h"

}

}

EOF

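4.1.3 Generate the CA Certificate, Private Key, and CSR

This produces the files needed to run the CA: ca-key.pem (private key) and ca.pem (certificate). It also produces ca.csr (the certificate signing request), which can be used for cross-signing or re-signing.

[root@k8smaster01:/data/ssl]# cfssl gencert -initca ca-csr.json | cfssl-json -bare ca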

2020/09/09 17:53:11 [INFO] generating a new CA key and certificate from CSR

2020/09/09 17:53:11 [INFO] generate received request

2020/09/09 17:53:11 [INFO] received CSR

2020/09/09 17:53:11 [INFO] generating key: rsa-2048

2020/09/09 17:53:11 [INFO] encoded CSR

2020/09/09 17:53:11 [INFO] signed certificate with serial number 633952028496783804989414964353657856875757870668

[root@k8smaster01:/data/ssl]# ls

ca.csr ca-csr.json ca-key.pem ca.pem

[root@k8smaster01:/data/ssl]# ll

total 16

-rw-r--r-- 1 root root 1001 Sep 9 17:53 ca.csr

-rw-r--r-- 1 root root 307 Sep 9 17:52 ca-csr.json

-rw------- 1 root root 1679 Sep 9 17:53 ca-key.pem

-rw-r--r-- 1 root root 1359 Sep 9 17:53 ca.pem

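4.1.4 Create the Signing Config Based on the Root Certificate

Explanation:

ca-config.json: can define multiple profiles, each with its own expiry, usages, and other parameters; a specific profile is selected later when signing a certificate;

signing: the signed certificate may be used for signing (the CA=TRUE flag in ca.pem itself comes from cfssl gencert -initca);

server auth: the signed certificate can be used as a server certificate, which clients verify against this CA;

client auth: the signed certificate can be used as a client certificate, which servers verify against this CA;

expiry: the certificate lifetime. 175200h is 7300 days, i.e. 20 years (not 10); reduce it as appropriate, for example to 87600h for 10 years.

[root@k8smaster01:/data/ssl]#

cat > /data/ssl/ca-config.json <<EOF
{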

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"kubernetes": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF
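cfssl expresses expiry in hours, so it helps to convert before picking a value: 175200h / 24 = 7300 days, and 7300 / 365 = 20 years (ignoring leap days). The sketch below does that conversion and shows how to read the real expiry back from a generated certificate; the function names are hypothetical and the second one assumes openssl is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for reasoning about certificate lifetimes.

# Convert a cfssl expiry in hours (e.g. 175200) to whole 365-day years.
hours_to_years() {
  echo $(( $1 / 24 / 365 ))
}

# Print the notAfter date of a PEM certificate (requires openssl).
cert_not_after() {
  openssl x509 -in "$1" -noout -enddate
}
```

Usage: `hours_to_years 175200` prints 20; `cert_not_after /data/ssl/ca.pem` prints a single line starting with `notAfter=`.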

[root@k8smaster01:/data/ssl]#

cat > server-csr.json <<EOF
{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.13.100",

"192.168.13.101",

"192.168.13.102",

"192.168.13.103",

"192.168.13.104",

"192.168.13.105",

"192.168.13.106",

"192.168.13.107",

"192.168.13.108",

"192.168.13.109",

"192.168.13.110",

"10.10.0.1",

"lbvip.host.com",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@k8smaster01:/data/ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssl-json -bare server

2020/09/09 18:03:25 [INFO] generate received request

2020/09/09 18:03:25 [INFO] received CSR

2020/09/09 18:03:25 [INFO] generating key: rsa-2048

2020/09/09 18:03:25 [INFO] encoded CSR

2020/09/09 18:03:25 [INFO] signed certificate with serial number 520236944854379069364132464186673377003193743849

2020/09/09 18:03:25 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8smaster01:/data/ssl]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem server.csr server-csr.json server-key.pem server.pem
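Because etcd (and typically kube-apiserver) presents server.pem, every address clients use to reach those services must appear in the certificate's Subject Alternative Names, or TLS verification fails. A sketch that checks the SANs from the text output of `openssl x509 -text` read on stdin; the function name is hypothetical, and the names passed to it are examples taken from server-csr.json.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `openssl x509 -noout -text` output on stdin and print
# every expected DNS name or IPv4 address missing from the SAN list.
missing_sans() {
  local sans expected
  # The SAN values sit on the line after the "Subject Alternative Name" header;
  # split them on commas into one "DNS:x" or "IP Address:y" entry per line.
  sans=$(grep -A1 'Subject Alternative Name' | tail -n 1 | tr ',' '\n' \
           | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
  for expected in "$@"; do
    if [[ "$expected" =~ ^[0-9.]+$ ]]; then
      grep -qxF "IP Address:$expected" <<< "$sans" || echo "$expected"
    else
      grep -qxF "DNS:$expected" <<< "$sans" || echo "$expected"
    fi
  done
}
```

Usage: `openssl x509 -in /data/ssl/server.pem -noout -text | missing_sans 192.168.13.100 192.168.13.101 10.10.0.1 lbvip.host.com kubernetes.default.svc.cluster.local` prints nothing when all of them are present. Matching whole entries (rather than substrings) avoids treating 192.168.13.10 as covered by 192.168.13.100.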

[root@k8smaster01:/data/ssl]#

cat > admin-csr.json <<EOF
{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

[root@k8smaster01:/data/ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssl-json -bare admin

2020/09/09 18:08:34 [INFO] generate received request

2020/09/09 18:08:34 [INFO] received CSR

2020/09/09 18:08:34 [INFO] generating key: rsa-2048

2020/09/09 18:08:34 [INFO] encoded CSR

2020/09/09 18:08:34 [INFO] signed certificate with serial number 662043138212200112498221957779202406541973930898

2020/09/09 18:08:34 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8smaster01:/data/ssl]# ls

admin.csr admin-csr.json admin-key.pem admin.pem ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem server.csr server-csr.json server-key.pem server.pem

[root@k8smaster01:/data/ssl]#

cat > kube-proxy-csr.json <<EOF
{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@k8smaster01:/data/ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssl-json -bare kube-proxy

2020/09/09 18:11:10 [INFO] generate received request

2020/09/09 18:11:10 [INFO] received CSR

2020/09/09 18:11:10 [INFO] generating key: rsa-2048

2020/09/09 18:11:10 [INFO] encoded CSR

2020/09/09 18:11:10 [INFO] signed certificate with serial number 721336273441573081460131627771975553540104946357

2020/09/09 18:11:10 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8smaster01:/data/ssl]# ls

admin.csr admin-key.pem ca-config.json ca-csr.json ca.pem kube-proxy-csr.json kube-proxy.pem server-csr.json server.pem

admin-csr.json admin.pem ca.csr ca-key.pem kube-proxy.csr kube-proxy-key.pem server.csr server-key.pem

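4.1.6 Create the metrics-server Certificate

4.1.6.1 Create the CSR Used by metrics-server

# Note: "CN" must be exactly "system:metrics-server", because this name is used when granting permissions later; otherwise requests are rejected as forbidden anonymous access

[root@k8smaster01:/data/ssl]#

cat > metrics-server-csr.json <<EOF
{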

"CN": "system:metrics-server",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "system"

}

]

}

EOF

4.1.6.2 Generate the metrics-server Certificate and Private Key

[root@k8smaster01:/data/ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes metrics-server-csr.json | cfssl-json -bare metrics-server

2020/09/09 18:33:24 [INFO] generate received request

2020/09/09 18:33:24 [INFO] received CSR

2020/09/09 18:33:24 [INFO] generating key: rsa-2048

2020/09/09 18:33:24 [INFO] encoded CSR

2020/09/09 18:33:24 [INFO] signed certificate with serial number 611188048814430694712004119513191220396672578396

2020/09/09 18:33:24 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8smaster01:/data/ssl]# ls

admin.csr admin.pem ca-csr.json kube-proxy.csr kube-proxy.pem metrics-server-key.pem server-csr.json

admin-csr.json ca-config.json ca-key.pem kube-proxy-csr.json metrics-server.csr metrics-server.pem server-key.pem

admin-key.pem ca.csr ca.pem kube-proxy-key.pem metrics-server-csr.json server.csr server.pem

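4.1.6.3 Distribute the Certificates

Note: /opt/kubernetes/ssl is only created in 4.2.1. If it does not exist yet on a host, create it there first with mkdir -p /opt/kubernetes/{bin,cfg,ssl}.

[root@k8smaster01:/data/ssl]# cp metrics-server-key.pem metrics-server.pem /opt/kubernetes/ssl/

[root@k8smaster01:/data/ssl]# scp metrics-server-key.pem metrics-server.pem k8smaster02:/opt/kubernetes/ssl/

[root@k8smaster01:/data/ssl]# scp metrics-server-key.pem metrics-server.pem k8smaster03:/opt/kubernetes/ssl/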

4.1.7 Create the Node kubeconfig Files

4.1.7.1 Create the TLS Bootstrapping Token, kubelet kubeconfig, and kube-proxy kubeconfig

Note: the script below calls kubectl, which comes with the Kubernetes binary package downloaded in 4.3.1; make sure kubectl is on the PATH before running it.

[root@k8smaster01:/data/ssl]# vim kubeconfig.sh

# Create the TLS Bootstrapping Token
export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

#----------------------
# Create the kubelet bootstrapping kubeconfig
export KUBE_APISERVER="https://lbvip.host.com:7443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=./ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Use the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------
# Create the kube-proxy kubeconfig
kubectl config set-cluster kubernetes \
  --certificate-authority=./ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=./kube-proxy.pem \
  --client-key=./kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
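token.csv uses the static token file layout `token,user,uid,"group"`, which kube-apiserver reads through its --token-auth-file flag (presumably configured in 4.3). The token is 16 random bytes printed as 32 hex characters. A sketch that generates a token with the same pipeline as kubeconfig.sh and sanity-checks a token.csv line before the apiserver uses it; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around the TLS bootstrapping token.

# Same pipeline as kubeconfig.sh: 16 random bytes -> 32 lowercase hex characters.
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' '
}

# Succeed only for a token.csv line of the form: token,user,uid,"group"
valid_token_line() {
  local re='^[0-9a-f]{32},[^,]+,[0-9]+,"[^"]+"$'
  [[ "$1" =~ $re ]]
}
```

Usage: `valid_token_line "$(head -n 1 /data/ssl/token.csv)" && echo ok`.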

4.1.7.2 Generate the Token and kubeconfig Files

[root@k8smaster01:/data/ssl]# bash kubeconfig.sh

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".

[root@k8smaster01:/data/ssl]# ls

admin.csr admin.pem ca.csr ca.pem kube-proxy-csr.json kube-proxy.pem metrics-server-key.pem server-csr.json token.csv

admin-csr.json bootstrap.kubeconfig ca-csr.json kubeconfig.sh kube-proxy-key.pem metrics-server.csr metrics-server.pem server-key.pem

admin-key.pem ca-config.json ca-key.pem kube-proxy.csr kube-proxy.kubeconfig metrics-server-csr.json server.csr server.pem

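4.1.7.3 Distribute the kubeconfig Files

Note: /opt/kubernetes/cfg is only created in 4.2.1; create it first on any host where it does not exist yet.

[root@k8smaster01:/data/ssl]# cp *kubeconfig /opt/kubernetes/cfg

[root@k8smaster01:/data/ssl]# scp *kubeconfig k8smaster02:/opt/kubernetes/cfg

[root@k8smaster01:/data/ssl]# scp *kubeconfig k8smaster03:/opt/kubernetes/cfg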

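4.2 Deploy the etcd Cluster

Run the following on k8smaster01, then copy the executables to k8smaster02 and k8smaster03.

Summary: etcd runs on the three master nodes for high availability. An etcd cluster elects its leader with the Raft algorithm, and because Raft needs the votes of a majority of members to make decisions, an odd number of members is recommended; 3, 5, or 7 members are typical.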
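The odd-number recommendation follows from the majority rule: a cluster of n members needs a quorum of floor(n/2)+1 votes, so it survives n minus that many failures. A 4-member cluster tolerates only one failure, the same as 3 members, so even sizes add cost without adding fault tolerance. A small sketch of the arithmetic:

```shell
#!/usr/bin/env bash
# Raft majority arithmetic for an etcd cluster of n members.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while the cluster still has a quorum.
etcd_fault_tolerance() {
  echo $(( $1 - $1 / 2 - 1 ))
}

for n in 1 2 3 4 5 6 7; do
  printf 'members=%d quorum=%d tolerates=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```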

Binary downloads: https://github.com/etcd-io/etcd/releases

4.2.1 Basic Setup

On k8smaster01:

# Create the etcd data directory

[root@k8smaster01:/root]# mkdir -p /data/etcd/

# Create the etcd log directory

[root@k8smaster01:/root]# mkdir -p /data/logs/etcd-server

# Create the Kubernetes cluster configuration directories

[root@k8smaster01:/root]# mkdir -p /opt/kubernetes/{bin,cfg,ssl}

# Download the etcd binary package and put the executables in /opt/kubernetes/bin/

[root@k8smaster01:/root]# cd /opt/src/

[root@k8smaster01:/opt/src]# wget -c https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

[root@k8smaster01:/opt/src]# tar -xzf etcd-v3.4.13-linux-amd64.tar.gz -C /data/etcd

[root@k8smaster01:/opt/src]# cd /data/etcd/etcd-v3.4.13-linux-amd64/

[root@k8smaster01:/data/etcd/etcd-v3.4.13-linux-amd64]# cp -a etcd etcdctl /opt/kubernetes/bin/

[root@k8smaster01:/data/etcd/etcd-v3.4.13-linux-amd64]# cd

# Add /opt/kubernetes/bin to PATH

[root@k8smaster01:/root]# echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile

[root@k8smaster01:/root]# source /etc/profile

On k8smaster02 and k8smaster03:

mkdir -p /data/etcd/ /data/logs/etcd-server

mkdir -p /opt/kubernetes/{bin,cfg,ssl}

echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile

source /etc/profile

On each worker node:

mkdir -p /data/worker

mkdir -p /opt/kubernetes/{bin,cfg,ssl}

echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile

source /etc/profile

4.2.2 Distribute the Certificates

# Go to the cluster certificate directory

[root@k8smaster01:/root]# cd /data/ssl/

[root@k8smaster01:/data/ssl]# ls

admin.csr admin-key.pem ca-config.json ca-csr.json ca.pem kube-proxy-csr.json kube-proxy.pem server-csr.json server.pem

admin-csr.json admin.pem ca.csr ca-key.pem kube-proxy.csr kube-proxy-key.pem server.csr server-key.pem

# Copy the certificates to /opt/kubernetes/ssl/ on k8smaster01

[root@k8smaster01:/data/ssl]# cp ca*pem server*pem /opt/kubernetes/ssl/

[root@k8smaster01:/data/ssl]# cd /opt/kubernetes/ssl/ && ls

ca-key.pem ca.pem server-key.pem server.pem

Of these, ca-key.pem and server-key.pem are private keys and should have mode 600.

[root@k8smaster01:/opt/kubernetes/ssl]# cd ..

# Copy the etcd executables and certificates to k8smaster02 and k8smaster03

# Also copy ca.pem to each worker node

[root@k8smaster01:/opt/kubernetes]# scp -r /opt/kubernetes/* k8smaster02:/opt/kubernetes/

[root@k8smaster01:/opt/kubernetes]# scp -r /opt/kubernetes/* k8smaster03:/opt/kubernetes/
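cfssl already writes the private keys as 0600 (see the `ll` output in 4.1.3), but copies made with other tools or a different umask may end up more permissive. A sketch that lists key files whose mode is not exactly 600, assuming the `*-key.pem` naming used throughout this guide (function name hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical check: print *-key.pem files under a directory whose mode is not exactly 0600.
loose_key_files() {
  local dir="$1"
  # -perm 600 without a prefix means "mode is exactly 600"
  find "$dir" -type f -name '*-key.pem' ! -perm 600 -print
}
```

Usage: run `loose_key_files /opt/kubernetes/ssl` on each master and fix any file it prints with `chmod 600`.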

4.2.3 Write the etcd Configuration Script etcd-server-startup.sh

On k8smaster01:

[root@k8smaster01:/opt/kubernetes]# cd /data/etcd/

[root@k8smaster01:/data/etcd]# vim etcd-server-startup.sh

#!/bin/bash
# Usage: etcd-server-startup.sh <name> <ip> <initial-cluster>
ETCD_NAME=${1:-"etcd01"}
ETCD_IP=${2:-"127.0.0.1"}
# initial-cluster lists peer URLs, so the default uses the peer port 2380
ETCD_CLUSTER=${3:-"etcd01=https://127.0.0.1:2380"}

cat <<EOF >/opt/kubernetes/cfg/etcd.yml
name: ${ETCD_NAME}
data-dir: /var/lib/etcd/default.etcd
listen-peer-urls: https://${ETCD_IP}:2380
listen-client-urls: https://${ETCD_IP}:2379,https://127.0.0.1:2379
advertise-client-urls: https://${ETCD_IP}:2379
initial-advertise-peer-urls: https://${ETCD_IP}:2380
initial-cluster: ${ETCD_CLUSTER}
initial-cluster-token: etcd-cluster
initial-cluster-state: new
client-transport-security:
  cert-file: /opt/kubernetes/ssl/server.pem
  key-file: /opt/kubernetes/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/kubernetes/ssl/ca.pem
  auto-tls: false
peer-transport-security:
  cert-file: /opt/kubernetes/ssl/server.pem
  key-file: /opt/kubernetes/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/kubernetes/ssl/ca.pem
  auto-tls: false
debug: false
logger: zap
log-outputs: [stderr]
EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
Documentation=https://github.com/etcd-io/etcd
Conflicts=etcd.service
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
LimitNOFILE=65536
Restart=on-failure
RestartSec=5s
TimeoutStartSec=0
ExecStart=/opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable etcd
systemctl restart etcd

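4.2.4 Run etcd-server-startup.sh to Generate the Configuration

[root@k8smaster01:/data/etcd]# chmod +x etcd-server-startup.sh

[root@k8smaster01:/data/etcd]# ./etcd-server-startup.sh etcd01 192.168.13.101 etcd01=https://192.168.13.101:2380,etcd02=https://192.168.13.102:2380,etcd03=https://192.168.13.103:2380

Note: the three etcd members talk to each other (peer traffic) on port 2380; clients reach etcd on port 2379.

Note: at this point the script appears to hang in systemctl restart etcd, even though the etcd process is already running. This is expected: the unit uses Type=notify, and etcd only reports itself ready to systemd once a majority of members is up, so the status becomes normal after etcd02 and etcd03 have started.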
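The third argument of etcd-server-startup.sh becomes etcd's initial-cluster value: a comma-separated list of name=peer-URL pairs that must be identical on all three members and must use the peer port 2380, not the client port 2379. A sketch that builds the string from name=IP pairs, so it cannot drift between hosts; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the etcd initial-cluster string from "name=ip" pairs,
# using the peer port 2380 as in etcd.yml's initial-advertise-peer-urls.
etcd_initial_cluster() {
  local pair out=""
  for pair in "$@"; do
    # ${out:+,} inserts a comma separator only after the first entry
    out+="${out:+,}${pair%%=*}=https://${pair#*=}:2380"
  done
  printf '%s\n' "$out"
}
```

For example, on k8smaster01: `./etcd-server-startup.sh etcd01 192.168.13.101 "$(etcd_initial_cluster etcd01=192.168.13.101 etcd02=192.168.13.102 etcd03=192.168.13.103)"` produces the same command line as above.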

4.2.4 Check etcd01

[root@k8smaster01:/data/etcd]# netstat -ntplu | grep etcd

tcp 0 0 192.168.13.101:2379 0.0.0.0:* LISTEN 25927/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 25927/etcd

tcp 0 0 192.168.13.101:2380 0.0.0.0:* LISTEN 25927/etcd

[root@k8smaster01:/data/etcd]# ps -ef | grep etcd

root 25878 6156 0 15:33 pts/3 00:00:00 /bin/bash ./etcd-server-startup.sh etcd01 192.168.13.101 etcd01=https://192.168.13.101:2380,etcd02=https://192.168.13.102:2380,etcd03=https://192.168.13.103:2380

root 25921 25878 0 15:33 pts/3 00:00:00 systemctl restart etcd

root 25927 1 3 15:33 ? 00:00:16 /opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

root 25999 3705 0 15:41 pts/0 00:00:00 grep --color=auto etcd

4.2.5 Distribute etcd-server-startup.sh to k8smaster02 and k8smaster03

[root@k8smaster01:/data/etcd]# scp /data/etcd/etcd-server-startup.sh k8smaster02:/data/etcd/

[root@k8smaster01:/data/etcd]# scp /data/etcd/etcd-server-startup.sh k8smaster03:/data/etcd/

4.2.6 Start etcd02 and Check Its Status

[root@k8smaster02:/root]# cd /data/etcd

[root@k8smaster02:/data/etcd]# ./etcd-server-startup.sh etcd02 192.168.13.102 etcd01=https://192.168.13.101:2380,etcd02=https://192.168.13.102:2380,etcd03=https://192.168.13.103:2380

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

[root@k8smaster02:/data/etcd]# systemctl status etcd -l

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-09-09 12:48:49 CST; 35s ago

Docs: https://github.com/etcd-io/etcd

Main PID: 24630 (etcd)

Tasks: 29

Memory: 17.6M

CGroup: /system.slice/etcd.service

└─24630 /opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

Sep 09 12:48:54 k8smaster02.host.com etcd[24630]: {"level":"warn","ts":"2020-09-09T12:48:54.257+0800","caller":"rafthttp/probing_status.go:70","msg":"prober detected unhealthy status","round-tripper-name":"ROUND_TRIPPER_SNAPSHOT","remote-peer-id":"8ebc349f826f93fe","rtt":"0s","error":"dial tcp 192.168.13.103:2380: connect: connection refused"}

Sep 09 12:48:55 k8smaster02.host.com etcd[24630]: {"level":"info","ts":"2020-09-09T12:48:55.144+0800","caller":"rafthttp/stream.go:250","msg":"set message encoder","from":"a1f9be88a19d2f3c","to":"a1f9be88a19d2f3c","stream-type":"stream MsgApp v2"}

Sep 09 12:48:55 k8smaster02.host.com etcd[24630]: {"level":"info","ts":"2020-09-09T12:48:55.144+0800","caller":"rafthttp/peer_status.go:51","msg":"peer became active","peer-id":"8ebc349f826f93fe"}

Sep 09 12:48:55 k8smaster02.host.com etcd[24630]: {"level":"warn","ts":"2020-09-09T12:48:55.144+0800","caller":"rafthttp/stream.go:277","msg":"established TCP streaming connection with remote peer","stream-writer-type":"stream MsgApp v2","local-member-id":"a1f9be88a19d2f3c","remote-peer-id":"8ebc349f826f93fe"}

Sep 09 12:48:55 k8smaster02.host.com etcd[24630]: {"level":"info","ts":"2020-09-09T12:48:55.144+0800","caller":"rafthttp/stream.go:250","msg":"set message encoder","from":"a1f9be88a19d2f3c","to":"a1f9be88a19d2f3c","stream-type":"stream Message"}

Sep 09 12:48:55 k8smaster02.host.com etcd[24630]: {"level":"warn","ts":"2020-09-09T12:48:55.144+0800","caller":"rafthttp/stream.go:277","msg":"established TCP streaming connection with remote peer","stream-writer-type":"stream Message","local-member-id":"a1f9be88a19d2f3c","remote-peer-id":"8ebc349f826f93fe"}

Sep 09 12:48:55 k8smaster02.host.com etcd[24630]: {"level":"info","ts":"2020-09-09T12:48:55.159+0800","caller":"rafthttp/stream.go:425","msg":"established TCP streaming connection with remote peer","stream-reader-type":"stream MsgApp v2","local-member-id":"a1f9be88a19d2f3c","remote-peer-id":"8ebc349f826f93fe"}

Sep 09 12:48:55 k8smaster02.host.com etcd[24630]: {"level":"info","ts":"2020-09-09T12:48:55.159+0800","caller":"rafthttp/stream.go:425","msg":"established TCP streaming connection with remote peer","stream-reader-type":"stream Message","local-member-id":"a1f9be88a19d2f3c","remote-peer-id":"8ebc349f826f93fe"}

Sep 09 12:48:57 k8smaster02.host.com etcd[24630]: {"level":"info","ts":"2020-09-09T12:48:57.598+0800","caller":"membership/cluster.go:546","msg":"updated cluster version","cluster-id":"6204defa6d59332e","local-member-id":"a1f9be88a19d2f3c","from":"3.0","from":"3.4"}

Sep 09 12:48:57 k8smaster02.host.com etcd[24630]: {"level":"info","ts":"2020-09-09T12:48:57.598+0800","caller":"api/capability.go:76","msg":"enabled capabilities for version","cluster-version":"3.4"}

[root@k8smaster02:/data/etcd]# netstat -ntplu | grep etcd

tcp 0 0 192.168.13.102:2379 0.0.0.0:* LISTEN 24630/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 24630/etcd

tcp 0 0 192.168.13.102:2380 0.0.0.0:* LISTEN 24630/etcd

[root@k8smaster02:/data/etcd]# ps -ef | grep etcd

root 24630 1 1 12:48 ? 00:00:03 /opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

root 24675 2602 0 12:52 pts/0 00:00:00 grep --color=auto etcd

4.2.7 启动etcd03并检查运行状态

[[email protected]:/root]# cd /data/etcd/

[[email protected]:/data/etcd]# ./etcd-server-startup.sh etcd03 192.168.13.103 etcd01=https://192.168.13.101:2380,etcd02=https://192.168.13.102:2380,etcd03=https://192.168.13.103:2380

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

[root@k8smaster03.host.com:/data/etcd]# systemctl status etcd -l

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-09-09 12:48:55 CST; 7s ago

Docs: https://github.com/etcd-io/etcd

Main PID: 1322 (etcd)

Tasks: 20

Memory: 27.7M

CGroup: /system.slice/etcd.service

└─1322 /opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

Sep 09 12:48:55 k8smaster03.host.com systemd[1]: Started Etcd Server.

Sep 09 12:48:55 k8smaster03.host.com etcd[1322]: {"level":"info","ts":"2020-09-09T12:48:55.190+0800","caller":"embed/serve.go:139","msg":"serving client traffic insecurely; this is strongly discouraged!","address":"192.168.13.103:2379"}

Sep 09 12:48:55 k8smaster03.host.com etcd[1322]: {"level":"info","ts":"2020-09-09T12:48:55.190+0800","caller":"embed/serve.go:139","msg":"serving client traffic insecurely; this is strongly discouraged!","address":"127.0.0.1:2379"}

Sep 09 12:48:55 k8smaster03.host.com etcd[1322]: {"level":"info","ts":"2020-09-09T12:48:55.192+0800","caller":"rafthttp/stream.go:250","msg":"set message encoder","from":"8ebc349f826f93fe","to":"8ebc349f826f93fe","stream-type":"stream Message"}

Sep 09 12:48:55 k8smaster03.host.com etcd[1322]: {"level":"warn","ts":"2020-09-09T12:48:55.192+0800","caller":"rafthttp/stream.go:277","msg":"established TCP streaming connection with remote peer","stream-writer-type":"stream Message","local-member-id":"8ebc349f826f93fe","remote-peer-id":"b255a2baf0f6222c"}

Sep 09 12:48:55 k8smaster03.host.com etcd[1322]: {"level":"info","ts":"2020-09-09T12:48:55.192+0800","caller":"rafthttp/stream.go:250","msg":"set message encoder","from":"8ebc349f826f93fe","to":"8ebc349f826f93fe","stream-type":"stream MsgApp v2"}

Sep 09 12:48:55 k8smaster03.host.com etcd[1322]: {"level":"warn","ts":"2020-09-09T12:48:55.192+0800","caller":"rafthttp/stream.go:277","msg":"established TCP streaming connection with remote peer","stream-writer-type":"stream MsgApp v2","local-member-id":"8ebc349f826f93fe","remote-peer-id":"b255a2baf0f6222c"}

Sep 09 12:48:55 k8smaster03.host.com etcd[1322]: {"level":"info","ts":"2020-09-09T12:48:55.193+0800","caller":"etcdserver/server.go:716","msg":"initialized peer connections; fast-forwarding election ticks","local-member-id":"8ebc349f826f93fe","forward-ticks":8,"forward-duration":"800ms","election-ticks":10,"election-timeout":"1s","active-remote-members":2}

Sep 09 12:48:57 k8smaster03.host.com etcd[1322]: {"level":"info","ts":"2020-09-09T12:48:57.597+0800","caller":"membership/cluster.go:546","msg":"updated cluster version","cluster-id":"6204defa6d59332e","local-member-id":"8ebc349f826f93fe","from":"3.0","from":"3.4"}

Sep 09 12:48:57 k8smaster03.host.com etcd[1322]: {"level":"info","ts":"2020-09-09T12:48:57.598+0800","caller":"api/capability.go:76","msg":"enabled capabilities for version","cluster-version":"3.4"}

[root@k8smaster03.host.com:/data/etcd]# netstat -ntplu | grep etcd

tcp 0 0 192.168.13.103:2379 0.0.0.0:* LISTEN 1322/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 1322/etcd

tcp 0 0 192.168.13.103:2380 0.0.0.0:* LISTEN 1322/etcd

[root@k8smaster03.host.com:/data/etcd]# ps -ef | grep etcd

root 1322 1 1 12:48 ? 00:00:04 /opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

root 1353 6455 0 12:53 pts/0 00:00:00 grep --color=auto etcd

4.2.8 Check etcd cluster status

[root@k8smaster01.host.com:/root]# ETCDCTL_API=3 etcdctl --write-out=table \
--cacert=/opt/kubernetes/ssl/ca.pem --cert=/opt/kubernetes/ssl/server.pem --key=/opt/kubernetes/ssl/server-key.pem \
--endpoints=https://192.168.13.101:2379,https://192.168.13.102:2379,https://192.168.13.103:2379 endpoint health

+-----------------------------+--------+-------------+-------+
|          ENDPOINT           | HEALTH |    TOOK     | ERROR |
+-----------------------------+--------+-------------+-------+
| https://192.168.13.103:2379 | true   | 20.491966ms |       |
| https://192.168.13.102:2379 | true   | 22.203277ms |       |
| https://192.168.13.101:2379 | true   | 24.576499ms |       |
+-----------------------------+--------+-------------+-------+

[root@k8smaster01.host.com:/data/etcd]# ETCDCTL_API=3 etcdctl --write-out=table \
--cacert=/opt/kubernetes/ssl/ca.pem --cert=/opt/kubernetes/ssl/server.pem \
--key=/opt/kubernetes/ssl/server-key.pem \
--endpoints=https://192.168.13.101:2379,https://192.168.13.102:2379,https://192.168.13.103:2379 member list

+------------------+---------+--------+-----------------------------+-----------------------------+------------+
|        ID        | STATUS  |  NAME  |         PEER ADDRS          |        CLIENT ADDRS         | IS LEARNER |
+------------------+---------+--------+-----------------------------+-----------------------------+------------+
| 968cc9007004cb   | started | etcd03 | https://192.168.13.103:2380 | https://192.168.13.103:2379 | false      |
| 437d840672a51376 | started | etcd02 | https://192.168.13.102:2380 | https://192.168.13.102:2379 | false      |
| fef4dd15ed09253e | started | etcd01 | https://192.168.13.101:2380 | https://192.168.13.101:2379 | false      |
+------------------+---------+--------+-----------------------------+-----------------------------+------------+
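Typing the three endpoints and the certificate flags by hand for every check is error-prone. Below is a minimal sketch of a hypothetical helper that is not part of the original setup. It builds the `--endpoints` list from the member IPs and wraps `etcdctl` with the certificate paths used above. It assumes `etcdctl` is in `PATH` and that the client port is 2379.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not from the original document).

# etcd_endpoints PORT IP... -> prints "https://IP1:PORT,https://IP2:PORT,..."
etcd_endpoints() {
  local port="$1"; shift
  local out="" ip
  for ip in "$@"; do
    # ${out:+,} expands to "," only once out is non-empty, so there is no leading comma
    out+="${out:+,}https://${ip}:${port}"
  done
  printf '%s\n' "$out"
}

# etcd_check SUBCOMMAND... -> runs etcdctl (v3 API) against all three members
etcd_check() {
  local endpoints
  endpoints="$(etcd_endpoints 2379 192.168.13.101 192.168.13.102 192.168.13.103)"
  ETCDCTL_API=3 etcdctl --write-out=table \
    --cacert=/opt/kubernetes/ssl/ca.pem \
    --cert=/opt/kubernetes/ssl/server.pem \
    --key=/opt/kubernetes/ssl/server-key.pem \
    --endpoints="${endpoints}" "$@"
}
```

After sourcing the file, `etcd_check endpoint health` and `etcd_check member list` reproduce the two commands above. `etcd_check endpoint status` additionally shows which member is currently the leader.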

4.3 Deploy APISERVER

4.3.1 Download and unpack the Kubernetes binary package

[root@k8smaster01.host.com:/opt/src]# wget -c https://dl.k8s.io/v1.19.0/kubernetes-server-linux-amd64.tar.gz

[root@k8smaster01.host.com:/data]# mkdir -p /data/package

[root@k8smaster01.host.com:/opt/src]# tar -xf kubernetes-server-linux-amd64.tar.gz -C /data/package/

Note: kubernetes-src.tar.gz in the unpacked package is the Go source tarball and can be deleted.

The *.tar and *.docker_tag files under server/bin are Docker image files and can also be deleted.

[root@k8smaster01.host.com:/opt/src]# cd /data/package/ && cd kubernetes/

[root@k8smaster01.host.com:/data/package/kubernetes]# rm -rf kubernetes-src.tar.gz

[root@k8smaster01.host.com:/data/package/kubernetes]# cd server/bin

[root@k8smaster01.host.com:/data/package/kubernetes/server/bin]# rm -rf *.tar

[root@k8smaster01.host.com:/data/package/kubernetes/server/bin]# rm -rf *.docker_tag

[root@k8smaster01.host.com:/data/package/kubernetes/server/bin]# ls

apiextensions-apiserver kubeadm kube-aggregator kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler mounter

[root@k8smaster01.host.com:/data/package/kubernetes/server/bin]# cp -a kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kubeadm /opt/kubernetes/bin
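Before moving on, it can help to confirm that the six binaries actually landed in /opt/kubernetes/bin and are executable. The sketch below assumes the directory layout used in this document. The function names are illustrative and do not come from any tool.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not from the original document).

# missing_binaries DIR NAME... -> prints each NAME that is absent or not executable in DIR
missing_binaries() {
  local dir="$1"; shift
  local name
  for name in "$@"; do
    [[ -x "${dir}/${name}" ]] || printf '%s\n' "$name"
  done
}

# check_master_binaries [DIR] -> fails if any master binary is missing, else prints the version
check_master_binaries() {
  local dir="${1:-/opt/kubernetes/bin}"
  local missing
  missing="$(missing_binaries "$dir" kube-apiserver kube-controller-manager \
    kube-scheduler kubectl kubelet kubeadm)"
  if [[ -n "$missing" ]]; then
    echo "missing in ${dir}: ${missing//$'\n'/ }" >&2
    return 1
  fi
  # For the package downloaded above this should report v1.19.0
  "${dir}/kube-apiserver" --version
}
```

Run `check_master_binaries` on each master once the binaries have been copied (see 4.3.5). It prints the missing names and returns non-zero if anything is absent.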

4.3.4 Configure and run the master components

Perform these steps on k8smaster01, k8smaster02 and k8smaster03.

# Create /data/master to hold the master configuration scripts

mkdir -p /data/master

4.3.4.1 Create the kube-apiserver configuration script

[root@k8smaster01.host.com:/data]# cd /data/master/

[root@k8smaster01.host.com:/data/master]# vim apiserver.sh

#!/bin/bash
MASTER_ADDRESS=${1:-"192.168.13.101"}
ETCD_SERVERS=${2:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--etcd-servers=${ETCD_SERVERS} \\
--bind-address=0.0.0.0 \\
--secure-port=6443 \\
--advertise-address=${MASTER_ADDRESS} \\
--allow-privileged=true \\
--service-cluster-ip-range=10.10.0.0/16 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--kubelet-https=true \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=5000-65000 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/kubernetes/ssl/ca.pem \\
--etcd-certfile=/opt/kubernetes/ssl/server.pem \\
--etcd-keyfile=/opt/kubernetes/ssl/server-key.pem \\
--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--requestheader-extra-headers-prefix=X-Remote-Extra- \\
--requestheader-group-headers=X-Remote-Group \\
--requestheader-username-headers=X-Remote-User \\
--proxy-client-cert-file=/opt/kubernetes/ssl/metrics-server.pem \\
--proxy-client-key-file=/opt/kubernetes/ssl/metrics-server-key.pem \\
--runtime-config=api/all=true \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-truncate-enabled=true \\
--audit-log-path=/var/log/kubernetes/k8s-audit.log"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
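apiserver.sh takes two positional arguments: the node's own IP and the comma-separated etcd list. If one is missing, the script silently falls back to its defaults. The sketch below is a hedged pre-flight check built from hypothetical helpers that are not in the original script. It rejects a call where the etcd list ends up in the first argument. It also rejects plain-`http` etcd endpoints, since etcd here is reached with the TLS flags shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not from the original document).

# is_ipv4 ADDR -> success if ADDR is a dotted-quad IPv4 address
is_ipv4() {
  local ip="$1" octet
  local -a octets
  [[ "$ip" =~ ^[0-9]{1,3}(\.[0-9]{1,3}){3}$ ]] || return 1
  IFS=. read -r -a octets <<< "$ip"
  for octet in "${octets[@]}"; do
    (( 10#$octet <= 255 )) || return 1   # 10# avoids octal parsing of e.g. "08"
  done
  return 0
}

# validate_apiserver_args MASTER_ADDRESS ETCD_SERVERS
validate_apiserver_args() {
  local addr="$1" servers="$2" url
  local -a urls
  if ! is_ipv4 "$addr"; then
    echo "MASTER_ADDRESS must be an IPv4 address, got: ${addr}" >&2
    return 1
  fi
  if [[ -z "$servers" ]]; then
    echo "ETCD_SERVERS is empty" >&2
    return 1
  fi
  IFS=, read -r -a urls <<< "$servers"
  for url in "${urls[@]}"; do
    # etcd is reached over TLS (--etcd-cafile/--etcd-certfile/--etcd-keyfile)
    if [[ ! "$url" =~ ^https://[^/]+:2379$ ]]; then
      echo "expected https://IP:2379, got: ${url}" >&2
      return 1
    fi
  done
  return 0
}
```

One way to use it is to paste the two functions near the top of apiserver.sh. Then call `validate_apiserver_args "$MASTER_ADDRESS" "$ETCD_SERVERS" || exit 1` right after the variables are set.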

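4.3.4.2 Create the kube-controller-manager configuration script

1. The Kubernetes controller manager is a daemon that watches the shared state of the cluster through the apiserver. It makes changes that try to move the current state toward the desired state.

2. kube-controller-manager is stateful: it modifies cluster state. If the instances on several masters were all active at the same time, they would conflict and the state could become inconsistent. For that reason, only one kube-controller-manager instance is active at a time and the others stand by. Kubernetes elects the leader with a lease lock; for kube-controller-manager this is enabled with the startup flag "--leader-elect=true".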

[root@k8smaster01.host.com:/data/master]# vim controller-manager.sh

#!/bin/bash
MASTER_ADDRESS=${1:-"127.0.0.1"}

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\
--v=2 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect=true \\
--bind-address=0.0.0.0 \\
--service-cluster-ip-range=10.10.0.0/16 \\
--cluster-name=kubernetes \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s \\
--feature-gates=RotateKubeletServerCertificate=true \\
--feature-gates=RotateKubeletClientCertificate=true \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/16 \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager

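4.3.4.3 Create the kube-scheduler configuration script

Summary:

1. kube-scheduler runs as a component on the master nodes. Its main job is to take the pods that kube-apiserver reports as not yet scheduled and run them through a series of scheduling algorithms to find the most suitable node. It completes the scheduling by writing a Binding object, which names the pod and the chosen node, back to kube-apiserver.

2. Like kube-controller-manager, kube-scheduler uses leader election for high availability. After startup, the instances compete to elect one leader while the others block. When the leader becomes unavailable, the remaining instances elect a new leader, which keeps the service available.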

[root@k8smaster01.host.com:/data/master]# vim scheduler.sh

#!/bin/bash
MASTER_ADDRESS=${1:-"127.0.0.1"}

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \\
--v=2 \\
--master=${MASTER_ADDRESS}:8080 \\
--address=0.0.0.0 \\
--leader-elect"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler

4.3.5 Copy the binaries

Copy the binaries to /opt/kubernetes/bin/ on k8smaster02 and k8smaster03:

[root@k8smaster01.host.com:/data/package/kubernetes/server/bin]# scp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kubeadm k8smaster02:/opt/kubernetes/bin

[root@k8smaster01.host.com:/data/package/kubernetes/server/bin]# scp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kubeadm k8smaster03:/opt/kubernetes/bin

4.3.7 Make the scripts executable and distribute them to master02 and master03

[root@k8smaster01.host.com:/data/master]# chmod +x *.sh

[root@k8smaster01.host.com:/data/master]# scp apiserver.sh controller-manager.sh scheduler.sh k8smaster02:/data/master/

[root@k8smaster01.host.com:/data/master]# scp apiserver.sh controller-manager.sh scheduler.sh k8smaster03:/data/master/

4.3.8 Run the configuration scripts

4.3.8.1 Run on k8smaster01

[root@k8smaster01.host.com:/data/master]# ./apiserver.sh 192.168.13.101 https://192.168.13.101:2379,https://192.168.13.102:2379,https://192.168.13.103:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@k8smaster01.host.com:/data/master]# ./controller-manager.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@k8smaster01.host.com:/data/master]# ./scheduler.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

Check the status of the three services:

[root@k8smaster01.host.com:/data/master]# ps -ef | grep kube

root 8918 1 0 01:11 ? 00:02:36 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=2 --config=/opt/kubernetes/cfg/kube-proxy-config.yml

root 11688 1 2 12:48 ? 00:00:48 /opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

root 18680 1 2 11:17 ? 00:02:35 /opt/kubernetes/bin/kubelet --logtostderr=true --v=2 --hostname-override=192.168.13.101 --anonymous-auth=false --cgroup-driver=systemd --cluster-domain=cluster.local. --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice --client-ca-file=/opt/kubernetes/ssl/ca.pem --tls-cert-file=/opt/kubernetes/ssl/kubelet.pem --tls-private-key-file=/opt/kubernetes/ssl/kubelet-key.pem --image-gc-high-threshold=85 --image-gc-low-threshold=80 --kubeconfig=/opt/kubrnetes/cfg/kubelet.kubeconfig --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

root 20978 1 7 13:21 ? 00:00:27 /opt/kubernetes/bin/kube-apiserver --logtostderr=false --v=2 --apiserver-count=3 --authorization-mode=RBAC --client-ca-file=/opt/kubernetes/ssl/ca.pem --requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota --etcd-cafile=/opt/kubernetes/ssl/ca.pem --etcd-certfile=/opt/kubernetes/ssl/client.pem --etcd-keyfile=/optkubernetes/ssl/client-key.pem --etcd-servers=http://192.168.13.101:2379,http://192.168.13.102:2379,http://192.168.13.103:2379 --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --service-cluster-ip-range=10.10.0.0/16 --service-node-port-range=30000-50000 --target-ram-mb=1024 --kubelet-client-certificate=/opt/kubernetes/ssl/client.pem --kubelet-client-key=/opt/kubernetes/ssl/client-key.pem --log-dir=/var/log/kubernetes --tls-cert-file=/opt/kubernetes/ssl/apiserver.pem --tls-private-key-file=/opt/kubernetes/ssl/apiserver-key.pem

root 21830 1 2 13:24 ? 00:00:05 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=2 --master=http://127.0.0.1:8080 --leader-elect=true --service-cluster-ip-range=10.10.0.0/16 --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --cluster-cidr=10.244.0.0/16 --root-ca-file=/opt/kubernetes/ssl/ca.pem

root 22669 1 2 13:26 ? 00:00:01 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=2 --master=http://127.0.0.1:8080 --leader-elect

root 22937 18481 0 13:27 pts/0 00:00:00 grep --color=auto kube

[root@k8smaster01.host.com:/data/master]# netstat -ntlp | grep kube-

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 20978/kube-apiserve

tcp6 0 0 :::10251 :::* LISTEN 22669/kube-schedule

tcp6 0 0 :::6443 :::* LISTEN 20978/kube-apiserve

tcp6 0 0 :::10252 :::* LISTEN 21830/kube-controll

tcp6 0 0 :::10257 :::* LISTEN 21830/kube-controll

tcp6 0 0 :::10259 :::* LISTEN 22669/kube-schedule

[root@k8smaster01.host.com:/data/master]# systemctl status kube-apiserver kube-scheduler kube-controller-manager | grep active

Active: active (running) since Wed 2020-09-09 13:21:27 CST; 7min ago

Active: active (running) since Wed 2020-09-09 13:26:57 CST; 1min 57s ago

Active: active (running) since Wed 2020-09-09 13:24:08 CST; 4min 47s ago
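The netstat output above can also be checked mechanically. The sketch below assumes the v1.19 default ports for this layout: 6443 and 8080 for kube-apiserver, 10251/10259 for kube-scheduler, and 10252/10257 for kube-controller-manager. It reads `netstat -ntlp` or `ss -ntlp` output on stdin. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not from the original document).

# missing_ports PORT... < netstat/ss output -> prints each expected port with no LISTEN line
missing_ports() {
  local listening port
  # Column 4 is the local address in both `netstat -ntlp` and `ss -ntlp`;
  # the port is whatever follows the last ":" (works for 0.0.0.0:x, :::x and [::]:x).
  listening="$(awk '/LISTEN/ { n = split($4, a, ":"); print a[n] }')"
  for port in "$@"; do
    grep -qx "$port" <<< "$listening" || printf '%s\n' "$port"
  done
}
```

`netstat -ntlp | missing_ports 6443 8080 10251 10252 10257 10259` prints nothing when every port is listening. Any port it does print is worth a look with `journalctl -u <service>`.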

4.3.8.2 Run on k8smaster02

[root@k8smaster02.host.com:/data/master]# ./apiserver.sh 192.168.13.102 https://192.168.13.101:2379,https://192.168.13.102:2379,https://192.168.13.103:2379

[root@k8smaster02.host.com:/data/master]# ./controller-manager.sh 127.0.0.1

[root@k8smaster02.host.com:/data/master]# ./scheduler.sh 127.0.0.1

[root@k8smaster02.host.com:/data/master]# ps -ef | grep kube

root 24630 1 1 12:48 ? 00:00:43 /opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

root 25199 1 8 13:23 ? 00:00:31 /opt/kubernetes/bin/kube-apiserver --logtostderr=false --v=2 --apiserver-count=3 --authorization-mode=RBAC --client-ca-file=/opt/kubernetes/ssl/ca.pem --requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota --etcd-cafile=/opt/kubernetes/ssl/ca.pem --etcd-certfile=/opt/kubernetes/ssl/client.pem --etcd-keyfile=/optkubernetes/ssl/client-key.pem --etcd-servers=http://192.168.13.101:2379,http://192.168.13.102:2379,http://192.168.13.103:2379 --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --service-cluster-ip-range=10.10.0.0/16 --service-node-port-range=30000-50000 --target-ram-mb=1024 --kubelet-client-certificate=/opt/kubernetes/ssl/client.pem --kubelet-client-key=/opt/kubernetes/ssl/client-key.pem --log-dir=/var/log/kubernetes --tls-cert-file=/opt/kubernetes/ssl/apiserver.pem --tls-private-key-file=/opt/kubernetes/ssl/apiserver-key.pem

root 25301 1 0 13:24 ? 00:00:02 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=2 --master=http://127.0.0.1:8080 --leader-elect=true --service-cluster-ip-range=10.10.0.0/16 --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --cluster-cidr=10.244.0.0/16 --root-ca-file=/opt/kubernetes/ssl/ca.pem

root 25392 1 2 13:27 ? 00:00:03 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=2 --master=http://127.0.0.1:8080 --leader-elect

root 25429 2602 0 13:29 pts/0 00:00:00 grep --color=auto kube

[root@k8smaster02.host.com:/data/master]# netstat -ntlp | grep kube-

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 25199/kube-apiserve

tcp6 0 0 :::10251 :::* LISTEN 25392/kube-schedule

tcp6 0 0 :::6443 :::* LISTEN 25199/kube-apiserve

tcp6 0 0 :::10252 :::* LISTEN 25301/kube-controll

tcp6 0 0 :::10257 :::* LISTEN 25301/kube-controll

tcp6 0 0 :::10259 :::* LISTEN 25392/kube-schedule

[root@k8smaster02.host.com:/data/master]# systemctl status kube-apiserver kube-scheduler kube-controller-manager | grep active

Active: active (running) since Wed 2020-09-09 13:23:19 CST; 6min ago

Active: active (running) since Wed 2020-09-09 13:27:18 CST; 2min 26s ago

Active: active (running) since Wed 2020-09-09 13:24:37 CST; 5min ago

4.3.8.3 Run on k8smaster03

[root@k8smaster03.host.com:/data/master]# ./apiserver.sh 192.168.13.103 https://192.168.13.101:2379,https://192.168.13.102:2379,https://192.168.13.103:2379

[root@k8smaster03.host.com:/data/master]# ./controller-manager.sh 127.0.0.1

[root@k8smaster03.host.com:/data/master]# ./scheduler.sh 127.0.0.1

[root@k8smaster03.host.com:/data/master]# ps -ef | grep kube

root 1322 1 1 12:48 ? 00:00:38 /opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

root 1561 1 4 13:25 ? 00:00:12 /opt/kubernetes/bin/kube-apiserver --logtostderr=false --v=2 --apiserver-count=3 --authorization-mode=RBAC --client-ca-file=/opt/kubernetes/ssl/ca.pem --requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota --etcd-cafile=/opt/kubernetes/ssl/ca.pem --etcd-certfile=/opt/kubernetes/ssl/client.pem --etcd-keyfile=/optkubernetes/ssl/client-key.pem --etcd-servers=http://192.168.13.101:2379,http://192.168.13.102:2379,http://192.168.13.103:2379 --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --service-cluster-ip-range=10.10.0.0/16 --service-node-port-range=30000-50000 --target-ram-mb=1024 --kubelet-client-certificate=/opt/kubernetes/ssl/client.pem --kubelet-client-key=/opt/kubernetes/ssl/client-key.pem --log-dir=/var/log/kubernetes --tls-cert-file=/opt/kubernetes/ssl/apiserver.pem --tls-private-key-file=/opt/kubernetes/ssl/apiserver-key.pem

root 1633 1 0 13:26 ? 00:00:00 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=2 --master=http://127.0.0.1:8080 --leader-elect=true --service-cluster-ip-range=10.10.0.0/16 --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --cluster-cidr=10.244.0.0/16 --root-ca-file=/opt/kubernetes/ssl/ca.pem

root 1708 1 0 13:27 ? 00:00:00 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=2 --master=http://127.0.0.1:8080 --leader-elect

root 1739 6455 0 13:30 pts/0 00:00:00 grep --color=auto kube

[root@k8smaster03.host.com:/data/master]# netstat -ntlp | grep kube-

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 1561/kube-apiserver

tcp6 0 0 :::10251 :::* LISTEN 1708/kube-scheduler

tcp6 0 0 :::6443 :::* LISTEN 1561/kube-apiserver

tcp6 0 0 :::10252 :::* LISTEN 1633/kube-controlle

tcp6 0 0 :::10257 :::* LISTEN 1633/kube-controlle

tcp6 0 0 :::10259 :::* LISTEN 1708/kube-scheduler

[root@k8smaster03.host.com:/data/master]# systemctl status kube-apiserver kube-scheduler kube-controller-manager | grep active

Active: active (running) since Wed 2020-09-09 13:25:49 CST; 4min 30s ago

Active: active (running) since Wed 2020-09-09 13:27:33 CST; 2min 47s ago

Active: active (running) since Wed 2020-09-09 13:26:17 CST; 4min 2s ago

4.3.9 Verify cluster health

[root@k8smaster01.host.com:/data/master]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}
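`kubectl get cs` only shows that each component answers its health check. It does not show which master currently holds the leader lock described in 4.3.4.2 and 4.3.4.3. The sketch below assumes the v1.19 lock types `endpoints` or `endpointsleases`. Both keep the leader record in the `control-plane.alpha.kubernetes.io/leader` annotation of the component's Endpoints object in kube-system. With a pure `leases` lock, check `kubectl -n kube-system get lease` instead. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not from the original document).

# leader_host RECORD_JSON -> hostname part of holderIdentity, which has the form "<host>_<uuid>"
leader_host() {
  sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p' <<< "$1" | sed 's/_[^_]*$//'
}

# show_leader COMPONENT -> e.g. "kube-scheduler leader: k8smaster01.host.com"
show_leader() {
  local component="$1" record
  record="$(kubectl -n kube-system get endpoints "$component" \
    -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}')"
  printf '%s leader: %s\n' "$component" "$(leader_host "$record")"
}
```

`show_leader kube-controller-manager` and `show_leader kube-scheduler` should each name one master. Stopping that master's service should move the lock to another master within roughly the lease duration.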

4.4 Deploy kubelet

4.4.1 Configure automatic kubelet certificate renewal and create the node authorization user

4.4.1.1 Create the node authorization user kubelet-bootstrap

[root@k8smaster01.host.com:/data/master]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  creationTimestamp: null
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: kubelet-bootstrap

4.4.1.2 Create the ClusterRole used to auto-approve the related CSRs

# Create the certificate-rotation ClusterRole manifest

[root@k8smaster01.host.com:/data/master]# vim tls-instructs-csr.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]

# Apply it

[root@k8smaster01.host.com:/data/master]# kubectl apply -f tls-instructs-csr.yaml

clusterrole.rbac.authorization.k8s.io/system:certificates.k8s.io:certificatesigningrequests:selfnodeserver created

4.4.1.3 Auto-approve the CSR that user kubelet-bootstrap submits when it first requests a certificate via TLS bootstrapping

[root@k8smaster01.host.com:/data/master]# kubectl create clusterrolebinding node-client-auto-approve-csr --clusterrole=system:certificates.k8s.io:certificatesigningrequests:nodeclient --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/node-client-auto-approve-csr created

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  creationTimestamp: null
  name: node-client-auto-approve-csr
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: kubelet-bootstrap


4.4.1.4 Auto-approve CSRs from the system:nodes group that renew the kubelet's own certificate for talking to the apiserver

[root@k8smaster01.host.com:/data/master]# kubectl create clusterrolebinding node-client-auto-renew-crt --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeclient --group=system:nodes

clusterrolebinding.rbac.authorization.k8s.io/node-client-auto-renew-crt created

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  creationTimestamp: null
  name: node-client-auto-renew-crt
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes

4.4.1.5 Auto-approve CSRs from the system:nodes group that renew the certificate for the kubelet API on port 10250

[root@k8smaster01.host.com:/data/master]# kubectl create clusterrolebinding node-server-auto-renew-crt --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeserver --group=system:nodes

clusterrolebinding.rbac.authorization.k8s.io/node-server-auto-renew-crt created

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  creationTimestamp: null
  name: node-server-auto-renew-crt
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
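If a node's kubelet never receives its certificate, `kubectl get csr` shows the request stuck in Pending. The sketch below lists and manually approves such requests. It assumes the v1.19 `kubectl get csr` layout, where the condition is the last column, and the helper names are hypothetical. Note that the built-in approver in kube-controller-manager is generally understood to auto-approve only kubelet client certificates. Serving-certificate CSRs (signer `kubernetes.io/kubelet-serving`) usually need this kind of manual approval or a separate approver.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not from the original document).

# pending_csrs < `kubectl get csr` output -> prints the names of CSRs whose condition is Pending
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# approve_pending_csrs -> approves every Pending CSR (review the list first!)
approve_pending_csrs() {
  # xargs -r (GNU) skips running kubectl when nothing is pending
  kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
}
```

Running `kubectl get csr | pending_csrs` first shows what `approve_pending_csrs` would approve.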

4.4.2 Prepare the base (pause) image on every node

docker pull registry.cn-hangzhou.aliyuncs.com/mapplezf/google_containers-pause-amd64:3.2

or

docker pull harbor.iot.com/kubernetes/google_containers-pause-amd64:3.2

or

docker pull registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

or

docker pull registry.aliyuncs.com/google_containers/pause
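Any one of the mirrors above may be unreachable from a given node, so the alternatives can be tried in order. The sketch below uses a hypothetical helper that stops at the first successful pull and prints the image that worked.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not from the original document).

# pull_first IMAGE... -> pulls the first image that succeeds and prints its reference
pull_first() {
  local image
  for image in "$@"; do
    if docker pull "$image" >/dev/null 2>&1; then
      printf '%s\n' "$image"
      return 0
    fi
  done
  echo "none of the images could be pulled" >&2
  return 1
}
```

kubelet only uses the exact reference given in `--pod-infra-container-image`. If a different mirror succeeded, retag its image under that reference, for example: `img=$(pull_first registry.cn-hangzhou.aliyuncs.com/mapplezf/google_containers-pause-amd64:3.2 harbor.iot.com/kubernetes/google_containers-pause-amd64:3.2) && docker tag "$img" registry.cn-hangzhou.aliyuncs.com/mapplezf/google_containers-pause-amd64:3.2`.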

4.4.3 Create the kubelet configuration script

[root@k8smaster01.host.com:/data/master]# vim kubelet.sh

#!/bin/bash
DNS_SERVER_IP=${1:-"10.10.0.2"}
HOSTNAME=${2:-"`hostname`"}
CLUSTERDOMAIN=${3:-"cluster.local"}

cat <<EOF >/opt/kubernetes/cfg/kubelet.conf
KUBELET_OPTS=" --logtostderr=true \\
--v=2 \\
--hostname-override=${HOSTNAME} \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--network-plugin=cni \\
--cni-conf-dir=/etc/cni/net.d \\
--cni-bin-dir=/opt/cni/bin \\
--cgroup-driver=systemd \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/mapplezf/google_containers-pause-amd64:3.2"
EOF

# Alternative pause image: harbor.iot.com/kubernetes/google_containers-pause-amd64:3.2

cat <<EOF >/opt/kubernetes/cfg/kubelet-config.yml
kind: KubeletConfiguration # object kind
apiVersion: kubelet.config.k8s.io/v1beta1 # API version
address: 0.0.0.0 # listen address
port: 10250 # kubelet API port
readOnlyPort: 10255 # read-only port exposed by kubelet (no authentication)
cgroupDriver: systemd # must match the driver shown by "docker info" and --cgroup-driver in kubelet.conf
clusterDNS:
  - ${DNS_SERVER_IP}
clusterDomain: ${CLUSTERDOMAIN} # cluster domain
failSwapOn: false # do not refuse to start when swap is enabled
# Authentication
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
# Authorization
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
# Node resource reservation (hard eviction thresholds)
evictionHard:
  imagefs.available: 15%
  memory.available: 1G
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
# Image garbage-collection policy
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
# Certificate rotation
rotateCertificates: true # rotate the kubelet client certificate
featureGates:
  RotateKubeletServerCertificate: true
  RotateKubeletClientCertificate: true
maxOpenFiles: 1000000
maxPods: 110
EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
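kubelet and Docker must agree on the cgroup driver. kubelet.sh sets it in two places: `--cgroup-driver` in kubelet.conf and `cgroupDriver` in kubelet-config.yml. A command-line flag overrides the config file, so the check below compares both against `docker info`. It is a sketch with hypothetical helper names, assuming the file paths used in this document.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not from the original document).

# config_cgroup_driver FILE -> value of cgroupDriver in a KubeletConfiguration YAML file
config_cgroup_driver() {
  awk '$1 == "cgroupDriver:" { print $2; exit }' "$1"
}

# flag_cgroup_driver FILE -> value of --cgroup-driver in kubelet.conf (empty if absent)
flag_cgroup_driver() {
  sed -n 's/.*--cgroup-driver=\([a-z]*\).*/\1/p' "$1" | head -n 1
}

# check_cgroup_driver -> success only if every configured value matches Docker's driver
check_cgroup_driver() {
  local want flag conf
  want="$(docker info --format '{{.CgroupDriver}}')"
  flag="$(flag_cgroup_driver /opt/kubernetes/cfg/kubelet.conf)"
  conf="$(config_cgroup_driver /opt/kubernetes/cfg/kubelet-config.yml)"
  echo "docker=${want} flag=${flag:-unset} config=${conf:-unset}"
  [[ -z "$flag" || "$flag" == "$want" ]] && [[ -z "$conf" || "$conf" == "$want" ]]
}
```

`check_cgroup_driver` returns non-zero when either value disagrees with Docker. In that case kubelet typically fails to start, and `journalctl -u kubelet` shows a cgroup-driver mismatch.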

4.4.4 Enable kubelet on the master nodes

On k8smaster01:

[root@k8smaster01.host.com:/data/master]# chmod +x kubelet.sh

[root@k8smaster01.host.com:/data/master]# ./kubelet.sh 10.10.0.2 k8smaster01.host.com cluster.local.

[root@k8smaster01.host.com:/data/master]# systemctl status kubelet

[root@k8smaster01.host.com:/data/master]# scp kubelet.sh k8smaster02:/data/master/

[root@k8smaster01.host.com:/data/master]# scp kubelet.sh k8smaster03:/data/master/

On k8smaster02:

[root@k8smaster02.host.com:/data/master]# ./kubelet.sh 10.10.0.2 k8smaster02.host.com cluster.local.

[root@k8smaster02.host.com:/data/master]# systemctl status kubelet

On k8smaster03:

[root@k8smaster03.host.com:/data/master]# ./kubelet.sh 10.10.0.2 k8smaster03.host.com cluster.local.

[root@k8smaster03.host.com:/data/master]# systemctl status kubelet

4.4.5 Enable kubelet on the worker nodes

Gather and distribute the deployment files:

[root@k8smaster01.host.com:/data/master]# scp kubelet.sh k8sworker01:/data/worker/

[root@k8smaster01.host.com:/data/master]# scp kubelet.sh k8sworker02:/data/worker/

[root@k8smaster01.host.com:/data/master]# scp kubelet.sh k8sworker03:/data/worker/

[root@k8smaster01.host.com:/data/master]# scp kubelet.sh k8sworker04:/data/worker/

[root@k8smaster01.host.com:/opt/kubernetes/cfg]# scp kubelet.kubeconfig bootstrap.kubeconfig k8sworker01:/opt/kubernetes/cfg/

[root@k8smaster01.host.com:/opt/kubernetes/cfg]# scp kubelet.kubeconfig bootstrap.kubeconfig k8sworker02:/opt/kubernetes/cfg/

[root@k8smaster01.host.com:/opt/kubernetes/cfg]# scp kubelet.kubeconfig bootstrap.kubeconfig k8sworker03:/opt/kubernetes/cfg/

[root@k8smaster01.host.com:/opt/kubernetes/cfg]# scp kubelet.kubeconfig bootstrap.kubeconfig k8sworker04:/opt/kubernetes/cfg/

[root@k8smaster01.host.com:/opt/kubernetes/ssl]# scp ca.pem k8sworker01:/opt/kubernetes/ssl/

[root@k8smaster01.host.com:/opt/kubernetes/ssl]# scp ca.pem k8sworker02:/opt/kubernetes/ssl/

[root@k8smaster01.host.com:/opt/kubernetes/ssl]# scp ca.pem k8sworker03:/opt/kubernetes/ssl/

[root@k8smaster01.host.com:/opt/kubernetes/ssl]# scp ca.pem k8sworker04:/opt/kubernetes/ssl/

Note: only kubelet and kube-proxy are actually required. kubectl and kubeadm are distributed here only for later convenience, which is generally not recommended.

[root@k8smaster01.host.com:/opt/kubernetes/bin]# scp kubelet kube-proxy kubectl kubeadm k8sworker01:/opt/kubernetes/bin/

[root@k8smaster01.host.com:/opt/kubernetes/bin]# scp kubelet kube-proxy kubectl kubeadm k8sworker02:/opt/kubernetes/bin/

[root@k8smaster01.host.com:/opt/kubernetes/bin]# scp kubelet kube-proxy kubectl kubeadm k8sworker03:/opt/kubernetes/bin/

[root@k8smaster01.host.com:/opt/kubernetes/bin]# scp kubelet kube-proxy kubectl kubeadm k8sworker04:/opt/kubernetes/bin/
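The per-worker scp commands above can be collapsed into one loop, so each file reaches every worker exactly once. The sketch below uses the worker names and paths from this section. The `ssh ... mkdir -p` step is an addition that creates the target directories in case they do not exist yet. Set `DRY_RUN=1` to print the commands instead of running them. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not from the original document).

WORKERS=(k8sworker01 k8sworker02 k8sworker03 k8sworker04)

# run CMD... -> prints the command when DRY_RUN=1, otherwise executes it
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# distribute_to_workers -> copies the kubelet files, CA and binaries to every worker
distribute_to_workers() {
  local w
  for w in "${WORKERS[@]}"; do
    run ssh "$w" mkdir -p /data/worker /opt/kubernetes/cfg /opt/kubernetes/ssl /opt/kubernetes/bin
    run scp /data/master/kubelet.sh "${w}:/data/worker/"
    run scp /opt/kubernetes/cfg/kubelet.kubeconfig /opt/kubernetes/cfg/bootstrap.kubeconfig "${w}:/opt/kubernetes/cfg/"
    run scp /opt/kubernetes/ssl/ca.pem "${w}:/opt/kubernetes/ssl/"
    run scp /opt/kubernetes/bin/kubelet /opt/kubernetes/bin/kube-proxy "${w}:/opt/kubernetes/bin/"
  done
}
```

`DRY_RUN=1 distribute_to_workers` prints the commands for review, and running it without `DRY_RUN` executes them. kubectl and kubeadm are deliberately left out, per the note above.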

On k8sworker01:

[root@k8sworker01.host.com:/data/worker]# ./kubelet.sh 10.10.0.2 k8sworker01.host.com cluster.local.

[root@k8sworker01.host.com:/data/worker]# systemctl status kubelet

On k8sworker02:

[root@k8sworker02.host.com:/data/worker]# ./kubelet.sh 10.10.0.2 k8sworker02.host.com cluster.local.

[root@k8sworker02.host.com:/data/worker]# systemctl status kubelet

On k8sworker03:

[root@k8sworker03.host.com:/data/worker]# ./kubelet.sh 10.10.0.2 k8sworker03.host.com cluster.local.

[root@k8sworker03.host.com:/data/worker]# systemctl status kubelet

On k8sworker04:

[root@k8sworker04.host.com:/data/worker]# ./kubelet.sh 10.10.0.2 k8sworker04.host.com cluster.local.

[root@k8sworker04.host.com:/data/worker]# systemctl status kubelet

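4.5 Deploy kube-proxy

4.5.6 Create the kube-proxy configuration script

Summary:

kube-proxy implements Services. Specifically, it handles access to Services from pods inside the cluster and from outside the cluster through NodePorts.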

[[email protected]:/data/master]# vim proxy.sh

#!/bin/bash
HOSTNAME=${1:-"`hostname`"}

cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=true \\
--v=2 \\
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
EOF

cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0 # listen address
metricsBindAddress: 0.0.0.0:10249 # metrics endpoint; monitoring tools scrape metrics from here
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig # kubeconfig file to read
hostnameOverride: ${HOSTNAME} # node name registered in k8s; must be unique
clusterCIDR: 10.10.0.0/16 # kube-proxy treats clusterCIDR as the Pod IP range
#mode: iptables # use iptables mode
mode: ipvs # use ipvs mode
ipvs:
  scheduler: "nq" # never-queue scheduling
iptables:
  masqueradeAll: true
EOF

cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
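Because proxy.sh writes YAML through a heredoc, a lost indent or a stray space (for example `" nq"` instead of `"nq"`) is easy to miss. The following is a rough, hedged check of the rendered file: it greps a few keys rather than parsing YAML, and the expected values (ipvs, nq, the node name) simply mirror the choices in proxy.sh.

```bash
#!/bin/bash
# Sketch: sanity-check the kube-proxy config rendered by proxy.sh.
# It only greps a few keys; it is not a YAML parser. The expected values
# (mode ipvs, scheduler "nq") mirror proxy.sh and are assumptions here.
check_proxy_config() {
  local cfg=${1:-/opt/kubernetes/cfg/kube-proxy-config.yml}
  local host=${2:-$(hostname)}
  local ok=0
  [ -f "$cfg" ] || { echo "missing: $cfg"; return 1; }
  # mode must be ipvs (the commented-out "#mode: iptables" line is ignored)
  grep -Eq '^mode:[[:space:]]*ipvs' "$cfg" || { echo "mode is not ipvs"; ok=1; }
  # scheduler must be indented under ipvs: and carry no stray spaces
  grep -Eq '^[[:space:]]+scheduler:[[:space:]]*"nq"' "$cfg" || { echo "unexpected ipvs scheduler"; ok=1; }
  # hostnameOverride must match the node name passed to proxy.sh
  grep -Eq "^hostnameOverride:[[:space:]]*${host}([[:space:]]|\$)" "$cfg" || { echo "hostnameOverride != ${host}"; ok=1; }
  return $ok
}
```

For example, after `./proxy.sh k8sworker01.host.com`, run `check_proxy_config /opt/kubernetes/cfg/kube-proxy-config.yml k8sworker01.host.com`.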

4.5.7 Start kube-proxy on the master nodes

On k8smaster01:

[[email protected]:/root]# chmod +x /data/master/proxy.sh

[[email protected]:/data/master]# scp /data/package/kubernetes/server/bin/kube-proxy k8smaster02:/opt/kubernetes/bin

[[email protected]:/data/master]# scp /data/package/kubernetes/server/bin/kube-proxy k8smaster03:/opt/kubernetes/bin

[[email protected]:/opt/kubernetes/cfg]# scp /opt/kubernetes/cfg/kube-proxy.kubeconfig k8smaster02:/opt/kubernetes/cfg/

[[email protected]:/opt/kubernetes/cfg]# scp /opt/kubernetes/cfg/kube-proxy.kubeconfig k8smaster03:/opt/kubernetes/cfg/

[[email protected]:/root]# cp /data/package/kubernetes/server/bin/kube-proxy /opt/kubernetes/bin/

[[email protected]:/root]# cd /data/master

[[email protected]:/data/master]# scp proxy.sh k8smaster02:/data/master/

[[email protected]:/data/master]# scp proxy.sh k8smaster03:/data/master/

[[email protected]:/data/master]# ./proxy.sh k8smaster01.host.com

[[email protected]:/data/master]# systemctl status kube-proxy.service

On k8smaster02:

[[email protected]:/data/master]# ./proxy.sh k8smaster02.host.com

[[email protected]:/data/master]# systemctl status kube-proxy.service

On k8smaster03:

[[email protected]:/data/master]# ./proxy.sh k8smaster03.host.com

[[email protected]:/data/master]# systemctl status kube-proxy.service

Check that the services have started:

systemctl status kubelet kube-proxy | grep active

[[email protected]:/data/master]# netstat -ntpl | egrep "kubelet|kube-proxy"

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 2490/kubelet

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 30250/kube-proxy

tcp 0 0 127.0.0.1:9943 0.0.0.0:* LISTEN 2490/kubelet

tcp6 0 0 :::10250 :::* LISTEN 2490/kubelet

tcp6 0 0 :::10255 :::* LISTEN 2490/kubelet

tcp6 0 0 :::10256 :::* LISTEN 30250/kube-proxy

[[email protected]:/data/master]# netstat -ntpl | egrep "kubelet|kube-proxy"

tcp 0 0 127.0.0.1:15495 0.0.0.0:* LISTEN 13678/kubelet

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 13678/kubelet

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 12346/kube-proxy

tcp6 0 0 :::10250 :::* LISTEN 13678/kubelet

tcp6 0 0 :::10255 :::* LISTEN 13678/kubelet

tcp6 0 0 :::10256 :::* LISTEN 12346/kube-proxy

[[email protected]:/data/master]# netstat -ntpl | egrep "kubelet|kube-proxy"

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 6342/kubelet

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 6220/kube-proxy

tcp 0 0 127.0.0.1:26793 0.0.0.0:* LISTEN 6342/kubelet

tcp6 0 0 :::10250 :::* LISTEN 6342/kubelet

tcp6 0 0 :::10255 :::* LISTEN 6342/kubelet

tcp6 0 0 :::10256 :::* LISTEN 6220/kube-proxy
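Reading three netstat listings by eye is error-prone. The sketch below reads `netstat -ntpl` text on stdin and reports any expected port that is missing. The port list is taken from the output above (kubelet: 10248 healthz, 10250 API, 10255 read-only; kube-proxy: 10249 metrics, 10256 healthz); treating all five as mandatory is an assumption.

```bash
#!/bin/bash
# Sketch: confirm kubelet and kube-proxy listen on the ports seen above.
# Reads `netstat -ntpl` output on stdin; returns non-zero if any is missing.
check_node_ports() {
  local input missing=0 entry port proc
  input=$(cat)
  for entry in 10248:kubelet 10250:kubelet 10255:kubelet 10249:kube-proxy 10256:kube-proxy; do
    port=${entry%%:*}
    proc=${entry#*:}
    # match ":<port> " in the address column and "/<proc>" at the end of the line
    if ! printf '%s\n' "$input" | grep -E ":${port}[[:space:]]" | grep -Eq "/${proc}[[:space:]]*\$"; then
      echo "not listening: ${proc} on ${port}"
      missing=1
    fi
  done
  return $missing
}
```

Usage on each node: `netstat -ntpl | check_node_ports`.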

4.5.8 Start kube-proxy on the worker nodes

Gather and distribute the files needed for deployment:

[[email protected]:/opt/kubernetes/cfg]# scp kube-proxy.kubeconfig k8sworker01:/opt/kubernetes/cfg/

[[email protected]:/opt/kubernetes/cfg]# scp kube-proxy.kubeconfig k8sworker02:/opt/kubernetes/cfg/

[[email protected]:/opt/kubernetes/cfg]# scp kube-proxy.kubeconfig k8sworker03:/opt/kubernetes/cfg/

[[email protected]:/opt/kubernetes/cfg]# scp kube-proxy.kubeconfig k8sworker04:/opt/kubernetes/cfg/

[[email protected]:/data/master]# scp proxy.sh k8sworker01:/data/worker

[[email protected]:/data/master]# scp proxy.sh k8sworker02:/data/worker

[[email protected]:/data/master]# scp proxy.sh k8sworker03:/data/worker

[[email protected]:/data/master]# scp proxy.sh k8sworker04:/data/worker

On k8sworker01:

[[email protected]:/data/worker]# ./proxy.sh k8sworker01.host.com

[[email protected]:/data/worker]# systemctl status kube-proxy.service

On k8sworker02:

[[email protected]:/data/worker]# ./proxy.sh k8sworker02.host.com

[[email protected]:/data/worker]# systemctl status kube-proxy.service

On k8sworker03:

[[email protected]:/data/worker]# ./proxy.sh k8sworker03.host.com

[[email protected]:/data/worker]# systemctl status kube-proxy.service

On k8sworker04:

[[email protected]:/data/worker]# ./proxy.sh k8sworker04.host.com

[[email protected]:/data/worker]# systemctl status kube-proxy.service

4.6 Check status

[[email protected]:/data/yaml]# kubectl get nodes

NAME                   STATUS   ROLES    AGE    VERSION
k8smaster01.host.com   Ready    <none>   165m   v1.19.0
k8smaster02.host.com   Ready    <none>   164m   v1.19.0
k8smaster03.host.com   Ready    <none>   164m   v1.19.0
k8sworker01.host.com   Ready    <none>   15m    v1.19.0
k8sworker02.host.com   Ready    <none>   14m    v1.19.0
k8sworker03.host.com   Ready    <none>   12m    v1.19.0
k8sworker04.host.com   Ready    <none>   12m    v1.19.0

[[email protected]:/data/master]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-f5c7c 3m33s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

csr-hhn6n 9m14s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

csr-hqnfv 3m23s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued
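With seven nodes, it helps to filter for problems instead of scanning the tables. The sketch below consumes `kubectl get nodes --no-headers` and `kubectl get csr --no-headers` output on stdin; the column positions (STATUS second, CONDITION last) follow the listings above, and the function names are hypothetical.

```bash
#!/bin/bash
# Sketch: print nodes that are not Ready and CSRs still Pending.
# Input: `kubectl get nodes --no-headers` / `kubectl get csr --no-headers`.
not_ready_nodes() {
  # STATUS is column 2; "Ready,SchedulingDisabled" still counts as Ready
  awk '$2 != "Ready" && $2 !~ /^Ready,/ { print $1; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}

pending_csrs() {
  # CONDITION is the last column of the CSR listing
  awk '$NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes && echo "all nodes Ready"`, and `kubectl get csr --no-headers | pending_csrs` to list CSRs that were not auto-approved.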

4.7 Other supplementary settings

4.7.1 Add a taint to the master nodes

[[email protected]:/root]# kubectl describe node | grep -i taint

Taints: <none>
Taints: <none>
Taints: <none>
Taints: <none>
Taints: <none>
Taints: <none>
Taints: <none>

[[email protected]:/root]# kubectl describe node k8smaster01 | grep -i taint

Taints: <none>

[[email protected]:/root]# kubectl taint nodes k8smaster01.host.com node-role.kubernetes.io/master:NoSchedule

node/k8smaster01.host.com tainted

[[email protected]:/root]# kubectl taint nodes k8smaster02.host.com node-role.kubernetes.io/master:NoSchedule

node/k8smaster02.host.com tainted

[[email protected]:/root]# kubectl taint nodes k8smaster03.host.com node-role.kubernetes.io/master:NoSchedule

node/k8smaster03.host.com tainted

[[email protected]:/root]# kubectl describe node | grep -i taint

Taints: node-role.kubernetes.io/master:NoSchedule

Taints: node-role.kubernetes.io/master:NoSchedule

Taints: node-role.kubernetes.io/master:NoSchedule

Taints: <none>
Taints: <none>
Taints: <none>
Taints: <none>
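The three taint commands can be folded into one loop. `--overwrite` is a standard `kubectl taint` flag that lets a re-run succeed instead of failing on an existing taint. The master list and the `KUBECTL` override (set it to `echo` for a dry run) are assumptions of this sketch.

```bash
#!/bin/bash
# Sketch: apply the NoSchedule taint to all masters in one loop.
# Assumptions: the master names below, and a KUBECTL variable that can be
# set to "echo" to preview the commands.
MASTERS=(k8smaster01.host.com k8smaster02.host.com k8smaster03.host.com)
KUBECTL=${KUBECTL:-kubectl}

taint_masters() {
  local node
  for node in "${MASTERS[@]}"; do
    # key:effect form (no value), same taint as the manual commands
    $KUBECTL taint nodes "$node" node-role.kubernetes.io/master:NoSchedule --overwrite
  done
}
```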

4.7.2 Command completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc
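Completion only persists if the `source` line lives in a file that interactive bash shells read, and appending it blindly on every run duplicates the line. The sketch below appends it only once. Defaulting to `~/.bashrc` is an assumption; kubectl's bash completion also depends on the bash-completion package being installed.

```bash
#!/bin/bash
# Sketch: persist kubectl completion without duplicating the line.
# Target file defaults to ~/.bashrc (assumption); needs bash-completion.
enable_kubectl_completion() {
  local rc=${1:-$HOME/.bashrc}
  local line='source <(kubectl completion bash)'
  touch "$rc"
  # -x: whole-line match, -F: fixed string (no regex interpretation)
  grep -qxF "$line" "$rc" || echo "$line" >> "$rc"
}
```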

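五、Deploy the network component

5.1 Deploy Calico networking (BGP mode)

Calico official site: https://docs.projectcalico.org/about/about-calico

5.1.1 Modify part of the configuration

For the full manifest, see the attachment or download it from the official site.

The relevant section starts at roughly line 3500 of the file.

Pay particular attention to the places marked "####下面需要修改" ("#### modify below").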
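In a manifest several thousand lines long, the marked places are easiest to find with grep. This sketch prints each "####下面需要修改" marker with its line number and the line that follows it; the default file name `calico.yaml` is an assumption.

```bash
#!/bin/bash
# Sketch: list the "####下面需要修改" markers in the Calico manifest.
# The default file name calico.yaml is an assumption.
show_calico_markers() {
  local manifest=${1:-calico.yaml}
  # -n: line numbers, -A1: also show the line after each marker, -F: fixed string
  grep -n -A1 -F '####下面需要修改' "$manifest"
}
```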

---
# Source: calico/templates/calico-node.yaml
# This manifest installs the calico-node container, as well
# as the CNI plugins and network config on
# each master and worker node in a Kubernetes cluster.
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
    spec:
      nodeSelector:
        kubernetes.io/os: linux
      hostNetwork: true
      tolerations:
        # Make sure calico-node gets scheduled on all nodes.
        - effect: NoSchedule
          operator: Exists
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
      serviceAccountName: calico-node
      # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
      # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
      terminationGracePeriodSeconds: 0
      priorityClassName: system-node-critical
      initContainers:
        # This container performs upgrade from host-local IPAM to calico-ipam.
        # It can be deleted if this is a fresh installation, or if you have already
        # upgraded to use calico-ipam.
        - name: upgrade-ipam
          image: calico/cni:v3.16.1
          command: ["/opt/cni/bin/calico-ipam", "-upgrade"]
          envFrom:
          - configMapRef: