PyTorch for Beginners Series: torch.nn API (2)


Convolution Layers

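| Module | Description |
|---|---|
| nn.Conv1d | Applies a 1D convolution over an input signal composed of several input planes. |
| nn.Conv2d | Applies a 2D convolution over an input signal composed of several input planes. |
| nn.Conv3d | Applies a 3D convolution over an input signal composed of several input planes. |
| nn.ConvTranspose1d | Applies a 1D transposed convolution operator over an input image composed of several input planes. |
| nn.ConvTranspose2d | Applies a 2D transposed convolution operator over an input image composed of several input planes. |
| nn.ConvTranspose3d | Applies a 3D transposed convolution operator over an input image composed of several input planes. |
| nn.LazyConv1d | A torch.nn.Conv1d module with lazy initialization of the in_channels argument of Conv1d, which is inferred from input.size(1). |
| nn.LazyConv2d | A torch.nn.Conv2d module with lazy initialization of the in_channels argument of Conv2d, which is inferred from input.size(1). |
| nn.LazyConv3d | A torch.nn.Conv3d module with lazy initialization of the in_channels argument of Conv3d, which is inferred from input.size(1). |
| nn.LazyConvTranspose1d | A torch.nn.ConvTranspose1d module with lazy initialization of the in_channels argument of ConvTranspose1d, which is inferred from input.size(1). |
| nn.LazyConvTranspose2d | A torch.nn.ConvTranspose2d module with lazy initialization of the in_channels argument of ConvTranspose2d, which is inferred from input.size(1). |
| nn.LazyConvTranspose3d | A torch.nn.ConvTranspose3d module with lazy initialization of the in_channels argument of ConvTranspose3d, which is inferred from input.size(1). |
| nn.Unfold | Extracts sliding local blocks from a batched input tensor. |
| nn.Fold | Combines an array of sliding local blocks into a large containing tensor. |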

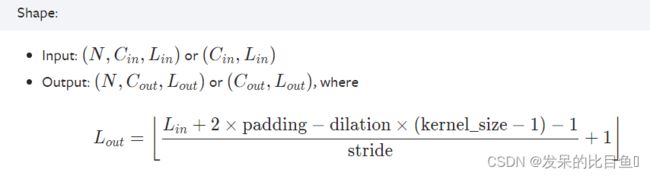

nn.Conv1d

Custom implementation

def myconv1d(infeat, convkernel, padding=0, stride=1):
    # Note: padding and stride are accepted but not applied in this simplified version.
    b, c, h = len(infeat), len(infeat[0]), len(infeat[0][0])
    out_c, in_c, lenk = len(convkernel), len(convkernel[0]), len(convkernel[0][0])
    # No grouped convolution, so c == in_c
    res = [[[0] * (h - lenk + 1) for _ in range(out_c)] for _ in range(b)]
    # Final output shape: b * out_c * (h - lenk + 1)
    for i in range(b):
        # Batch samples are processed serially for now
        for j in range(out_c):
            # Compute the result for each output channel (one set of kernels each)
            for m in range(c):
                for n in range(h - lenk + 1):
                    # Compute the value at each output position
                    ans = 0
                    for k in range(lenk):
                        ans += infeat[i][m][n + k] * convkernel[j][m][k]
                    res[i][j][n] += ans
    return res

# My convolution
infeat = [[[1, 2, 3, 4], [1, 2, 4, 3]]]
convkernel = [[[0, 1, 2], [0, 2, 1]], [[1, 0, 2], [1, 2, 0]], [[2, 0, 1], [2, 1, 0]]]
outfeat = myconv1d(infeat, convkernel)
print(outfeat)  # [[[16, 22], [12, 20], [9, 16]]]
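The `padding` and `stride` arguments of `myconv1d` are declared but never used. The sketch below shows one way they could be applied in the same pure-Python style. The function name `conv1d_padded_strided` is made up for this illustration, zero padding is assumed, and groups, dilation, and bias are left out.

```python
def conv1d_padded_strided(infeat, convkernel, padding=0, stride=1):
    """Pure-Python 1D convolution (cross-correlation) with zero padding and stride.

    infeat:     nested list of shape (batch, in_channels, length)
    convkernel: nested list of shape (out_channels, in_channels, kernel_length)
    """
    out = []
    for sample in infeat:
        # Zero-pad every input channel on both sides.
        padded = [[0] * padding + list(ch) + [0] * padding for ch in sample]
        length = len(padded[0])
        sample_out = []
        for kernel in convkernel:
            lenk = len(kernel[0])
            out_len = (length - lenk) // stride + 1
            row = []
            for n in range(out_len):
                start = n * stride  # the window moves by `stride` each step
                # Sum over input channels and kernel taps.
                row.append(sum(
                    padded[m][start + k] * kernel[m][k]
                    for m in range(len(kernel))
                    for k in range(lenk)
                ))
            sample_out.append(row)
        out.append(sample_out)
    return out


infeat = [[[1, 2, 3, 4], [1, 2, 4, 3]]]
convkernel = [[[0, 1, 2], [0, 2, 1]], [[1, 0, 2], [1, 2, 0]], [[2, 0, 1], [2, 1, 0]]]

print(conv1d_padded_strided(infeat, convkernel))                       # [[[16, 22], [12, 20], [9, 16]]]
print(conv1d_padded_strided(infeat, convkernel, padding=1, stride=2))  # [[[9, 22], [6, 20], [3, 16]]]
```

With the default arguments it gives the same result as `myconv1d`, so the two can be compared directly.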

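Official example

>>> import torch
>>> from torch import nn
>>> m = nn.Conv1d(16, 33, 3, stride=2)
>>> input = torch.randn(20, 16, 50)
>>> output = m(input)

For an explanation of how the output of a dilated conv1d network is computed, see:

https://blog.csdn.net/znevegiveup1/article/details/121067573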
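The output length of `nn.Conv1d`, including the dilated case, follows the formula given in the PyTorch documentation: L_out = floor((L_in + 2 * padding - dilation * (kernel_size - 1) - 1) / stride) + 1. The helper below is only a sketch for checking shapes by hand; its name `conv_out_len` is not part of PyTorch.

```python
def conv_out_len(l_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of a convolution along one dimension (hypothetical helper)."""
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# nn.Conv1d(16, 33, 3, stride=2) applied to an input of length 50
print(conv_out_len(50, kernel_size=3, stride=2))              # 24
# Same layer with dilation=2: the kernel now spans 5 input positions
print(conv_out_len(50, kernel_size=3, stride=2, dilation=2))  # 23
```

For the official example above this gives an output of shape (20, 33, 24). The same formula applies to each spatial dimension of `nn.Conv2d` and `nn.Conv3d`.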

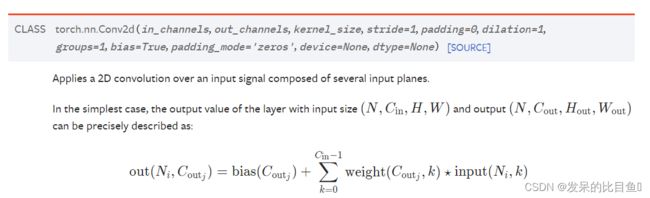

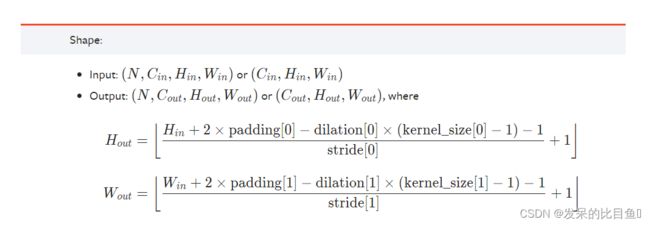

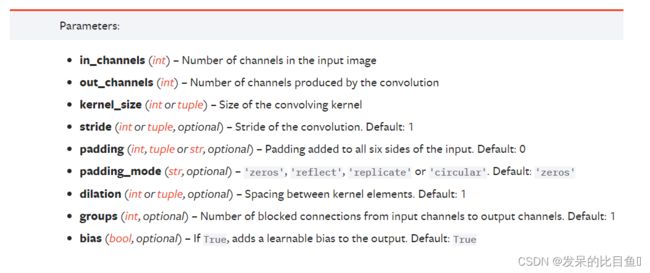

nn.Conv2d

>>> # With square kernels and equal stride
>>> m = nn.Conv2d(16, 33, 3, stride=2)
>>> # non-square kernels and unequal stride and with padding
>>> m = nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2))
>>> # non-square kernels and unequal stride and with padding and dilation
>>> m = nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2), dilation=(3, 1))
>>> input = torch.randn(20, 16, 50, 100)
>>> output = m(input)
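The official snippet overwrites `m` twice, so only the last configuration is actually run. As a small sketch, the three layers can be kept side by side to compare their output shapes. The dictionary keys are just labels chosen here.

```python
import torch
from torch import nn

x = torch.randn(20, 16, 50, 100)

layers = {
    "square kernel, stride 2": nn.Conv2d(16, 33, 3, stride=2),
    "non-square kernel, padding": nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2)),
    "padding and dilation": nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2), dilation=(3, 1)),
}

# Run the same input through each layer and record the output shape.
shapes = {name: tuple(layer(x).shape) for name, layer in layers.items()}
for name, shape in shapes.items():
    print(name, shape)
# square kernel, stride 2 (20, 33, 24, 49)
# non-square kernel, padding (20, 33, 28, 100)
# padding and dilation (20, 33, 26, 100)
```

Height and width are computed independently, each with its own kernel size, stride, padding, and dilation.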

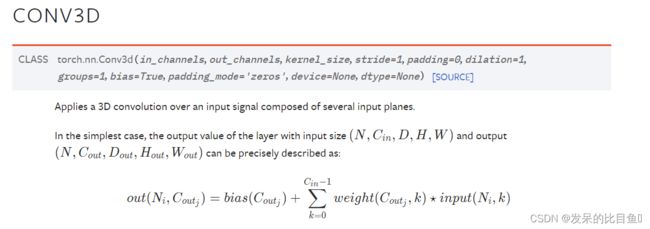

nn.Conv3d

>>> # With square kernels and equal stride
>>> m = nn.Conv3d(16, 33, 3, stride=2)
>>> # non-square kernels and unequal stride and with padding
>>> m = nn.Conv3d(16, 33, (3, 5, 2), stride=(2, 1, 1), padding=(4, 2, 0))
>>> input = torch.randn(20, 16, 10, 50, 100)
>>> output = m(input)

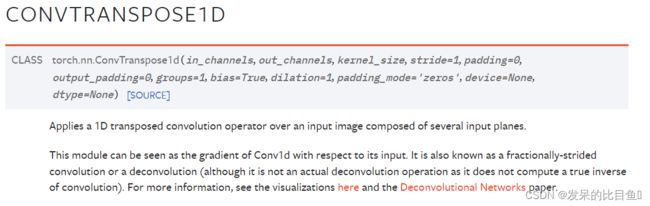

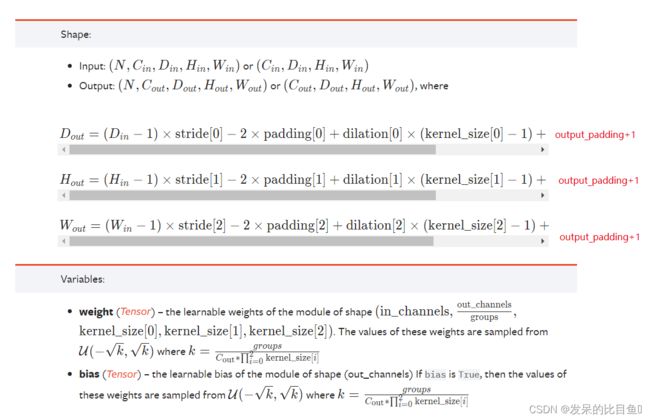

nn.ConvTranspose1d

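Applies a 1D transposed convolution operator over an input image composed of several input planes.

Transposed convolution, also known as deconvolution, is often described as the reverse of convolution, but it only restores the size of the image, not its pixel values. In convolutional neural networks it is a form of up-sampling, commonly used to increase image resolution.

My own understanding of transposed convolution is that it turns a small matrix into a large one; in technical terms, up-sampling.

This is exactly the opposite direction from ordinary convolution, which shrinks a large matrix into a small one.

For the underlying principle, see: https://www.freesion.com/article/85561537096/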

import torch
from torch import nn
import torch.nn.functional as F

conv1 = nn.Conv1d(1, 2, 3, padding=1)
conv2 = nn.Conv1d(in_channels=2, out_channels=4, kernel_size=3, padding=1)
# Transposed convolution
dconv1 = nn.ConvTranspose1d(4, 1, kernel_size=3, stride=2, padding=1, output_padding=1)

x = torch.randn(16, 1, 8)
print(x.size())   # torch.Size([16, 1, 8])
x1 = conv1(x)
x2 = conv2(x1)
print(x2.size())  # torch.Size([16, 4, 8])
x3 = dconv1(x2)
print(x3.size())  # torch.Size([16, 1, 16])
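The length produced by `dconv1` in the code above can be worked out from the formula in the PyTorch documentation: L_out = (L_in - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + output_padding + 1. The sketch below checks that formula against the layer itself; the helper name `conv_transpose_out_len` is made up for this example.

```python
import torch
from torch import nn


def conv_transpose_out_len(l_in, kernel_size, stride=1, padding=0,
                           output_padding=0, dilation=1):
    """Output length of a transposed convolution along one dimension (hypothetical helper)."""
    return ((l_in - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


dconv = nn.ConvTranspose1d(4, 1, kernel_size=3, stride=2, padding=1, output_padding=1)
x = torch.randn(16, 4, 8)
y = dconv(x)

expected = conv_transpose_out_len(8, kernel_size=3, stride=2, padding=1, output_padding=1)
print(y.shape, expected)  # torch.Size([16, 1, 16]) 16
```

With stride 2 the length doubles from 8 to 16, which is the up-sampling effect described earlier.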

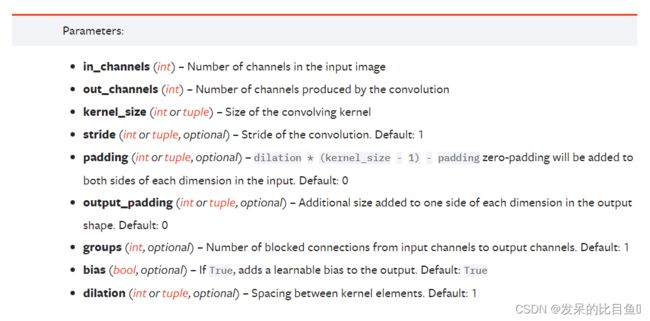

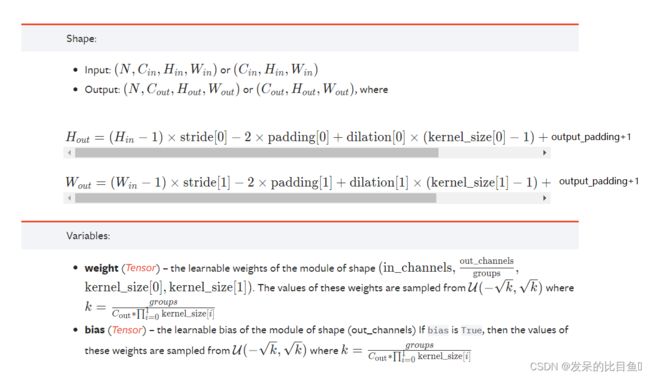

nn.ConvTranspose2d

>>> # With square kernels and equal stride
>>> m = nn.ConvTranspose2d(16, 33, 3, stride=2)
>>> # non-square kernels and unequal stride and with padding
>>> m = nn.ConvTranspose2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2))
>>> input = torch.randn(20, 16, 50, 100)
>>> output = m(input)
>>> # exact output size can be also specified as an argument
>>> input = torch.randn(1, 16, 12, 12)
>>> downsample = nn.Conv2d(16, 16, 3, stride=2, padding=1)
>>> upsample = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1)
>>> h = downsample(input)
>>> h.size()
torch.Size([1, 16, 6, 6])
>>> output = upsample(h, output_size=input.size())
>>> output.size()
torch.Size([1, 16, 12, 12])
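The `output_size` argument in the example above is needed because with stride 2 more than one input size maps to the same down-sampled size. A minimal sketch of that ambiguity, reusing the same layer settings:

```python
import torch
from torch import nn

downsample = nn.Conv2d(16, 16, 3, stride=2, padding=1)
upsample = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1)

# Both an 11x11 and a 12x12 input are reduced to 6x6 by the strided convolution.
print(downsample(torch.randn(1, 16, 11, 11)).shape)  # torch.Size([1, 16, 6, 6])
print(downsample(torch.randn(1, 16, 12, 12)).shape)  # torch.Size([1, 16, 6, 6])

h = torch.randn(1, 16, 6, 6)
default_out = upsample(h)                        # without output_size the smaller size is produced
sized_out = upsample(h, output_size=(12, 12))    # output_size selects the larger one
print(default_out.shape)  # torch.Size([1, 16, 11, 11])
print(sized_out.shape)    # torch.Size([1, 16, 12, 12])
```

Passing `output_size` has the same effect on the shape as setting `output_padding=1` for this configuration.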

nn.ConvTranspose3d

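Applies a 3D transposed convolution operator over an input image composed of several input planes. The transposed convolution operator multiplies each input value element-wise by a learnable kernel and sums over the outputs from all input feature planes.

>>> # With square kernels and equal stride
>>> m = nn.ConvTranspose3d(16, 33, 3, stride=2)
>>> # non-square kernels and unequal stride and with padding
>>> m = nn.ConvTranspose3d(16, 33, (3, 5, 2), stride=(2, 1, 1), padding=(0, 4, 2))
>>> input = torch.randn(20, 16, 10, 50, 100)
>>> output = m(input)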

nn.LazyConv1d

LazyConv1d is essentially Conv1d with lazy initialization: the in_channels argument of Conv1d is not given up front but is inferred from input.size(1).
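A short sketch of what lazy initialization looks like in practice. The layer sizes are arbitrary choices for illustration; `has_uninitialized_params()` is the method lazy modules provide to report whether their parameters still lack a shape.

```python
import torch
from torch import nn

# No in_channels argument: only out_channels and kernel_size are given.
m = nn.LazyConv1d(out_channels=33, kernel_size=3, stride=2)
was_uninitialized = m.has_uninitialized_params()
print(was_uninitialized)  # True: the weight has no shape yet

x = torch.randn(20, 16, 50)
y = m(x)  # the first forward pass infers in_channels from x.size(1)

print(m.in_channels)   # 16
print(m.weight.shape)  # torch.Size([33, 16, 3])
print(y.shape)         # torch.Size([20, 33, 24])
```

After the first call the layer behaves like an ordinary `nn.Conv1d(16, 33, 3, stride=2)`.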

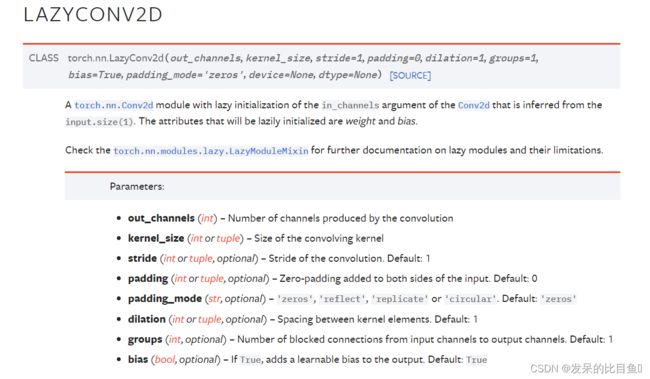

nn.LazyConv2d

nn.LazyConv3d

Similar to nn.LazyConv1d.

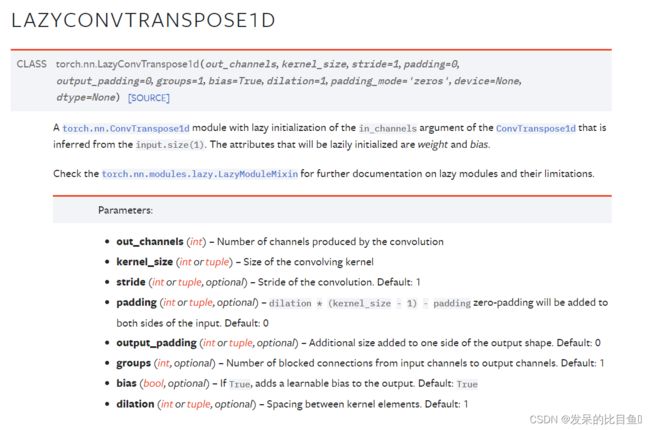

nn.LazyConvTranspose1d

torch.nn.LazyConvTranspose1d is essentially ConvTranspose1d with lazy initialization: the in_channels argument of ConvTranspose1d is inferred from input.size(1).
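The same idea can be sketched for the transposed variant. The sizes below are arbitrary. Note that the weight of a transposed convolution is laid out as (in_channels, out_channels, kernel_size), so the inferred value appears in the first dimension.

```python
import torch
from torch import nn

m = nn.LazyConvTranspose1d(out_channels=8, kernel_size=3, stride=2)

x = torch.randn(4, 16, 10)
y = m(x)  # in_channels is inferred from x.size(1) on the first call

print(m.in_channels)   # 16
print(m.weight.shape)  # torch.Size([16, 8, 3])
print(y.shape)         # torch.Size([4, 8, 21])
```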

nn.LazyConvTranspose2d

nn.LazyConvTranspose3d

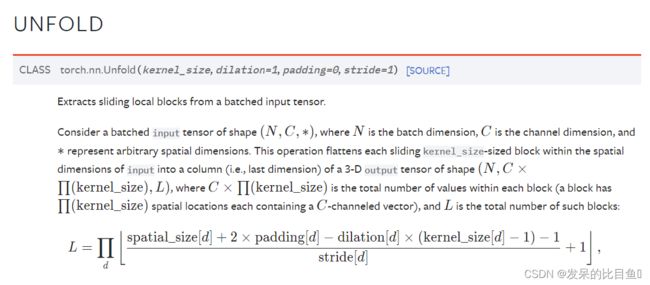

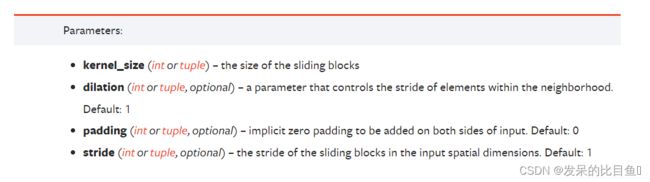

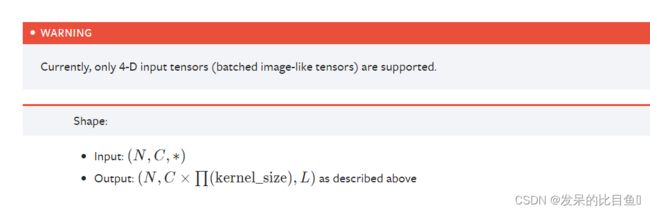

nn.Unfold

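Reference: https://blog.csdn.net/qq_37937847/article/details/115663343

Convolution is used all the time in image processing, but sometimes we only need the sliding-window step that cuts an image into patches, without multiplying the patch values by a convolution kernel. That is when nn.Unfold() is useful: it extracts sliding local blocks from a batch of images, that is, the windows a convolution kernel would be applied to.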

import torch.nn as nn
import torch

batches_img = torch.rand(1, 2, 4, 4)  # Simulated image data (bs, 2, 4, 4) with C = 2 channels
print("batches_img:\n", batches_img)
print("batches_img shape:\n", batches_img.shape)

nn_Unfold = nn.Unfold(kernel_size=(2, 2), dilation=1, padding=0, stride=2)
patche_img = nn_Unfold(batches_img)
print("patche_img.shape:", patche_img.shape)  # torch.Size([1, 8, 4])
print("patch_img:\n", patche_img)
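The output of `nn.Unfold` has shape (N, C * kernel_height * kernel_width, L), where L is the number of windows. The sketch below uses a tensor with known values instead of random data so that the layout of one column is easy to read; the variable names are chosen for this example.

```python
import torch
from torch import nn

# Channel 0 holds 0..15 and channel 1 holds 16..31, each as a 4x4 grid.
x = torch.arange(32, dtype=torch.float32).reshape(1, 2, 4, 4)

unfold = nn.Unfold(kernel_size=(2, 2), stride=2)
patches = unfold(x)
print(patches.shape)  # torch.Size([1, 8, 4]): 2 channels * 2 * 2 values, 4 windows

# Column 0 is the top-left 2x2 window, flattened channel by channel.
first_window = x[0, :, 0:2, 0:2].reshape(-1)
print(torch.equal(patches[0, :, 0], first_window))  # True
print(patches[0, :, 0].tolist())  # [0.0, 1.0, 4.0, 5.0, 16.0, 17.0, 20.0, 21.0]
```

Each column is therefore one patch, which is exactly what a convolution kernel would be multiplied with at that position.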

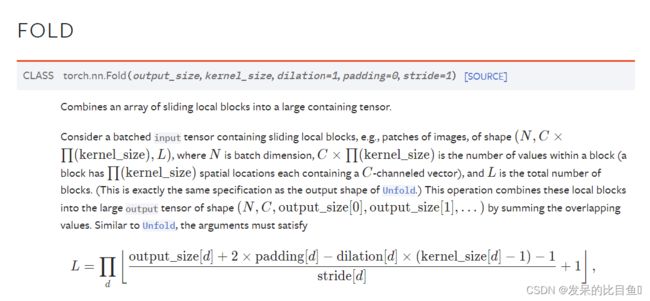

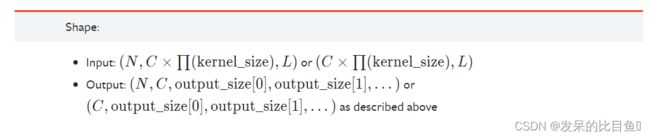

nn.Fold

import torch.nn as nn
import torch

batches_img = torch.rand(1, 2, 4, 4)  # Simulated image data (bs, 2, 4, 4) with C = 2 channels
print("batches_img:\n", batches_img)
print("batches_img shape:\n", batches_img.shape)

nn_Unfold = nn.Unfold(kernel_size=(2, 2), dilation=1, padding=0, stride=2)
patche_img = nn_Unfold(batches_img)
print("patche_img.shape:", patche_img.shape)
print("patch_img:\n", patche_img)

# Fold the patches back into a (1, 2, 4, 4) tensor
fold = torch.nn.Fold(output_size=(4, 4), kernel_size=(2, 2), stride=2)
inputs_restore = fold(patche_img)
print(inputs_restore)
print(inputs_restore.size())  # torch.Size([1, 2, 4, 4])
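In the example above the windows do not overlap, so `nn.Fold` restores the original tensor exactly. When windows overlap, `nn.Fold` sums the overlapping values instead. A minimal sketch of both cases, using the divisor trick described in the PyTorch documentation for `nn.Fold`:

```python
import torch
from torch import nn

x = torch.rand(1, 2, 4, 4)

# Non-overlapping 2x2 windows (stride 2): Fold restores the input exactly.
unfold = nn.Unfold(kernel_size=2, stride=2)
fold = nn.Fold(output_size=(4, 4), kernel_size=2, stride=2)
print(torch.allclose(fold(unfold(x)), x))  # True

# Overlapping windows (stride 1): Fold adds up every window that covers a pixel.
unfold_ov = nn.Unfold(kernel_size=2, stride=1)
fold_ov = nn.Fold(output_size=(4, 4), kernel_size=2, stride=1)
divisor = fold_ov(unfold_ov(torch.ones_like(x)))  # number of windows covering each pixel
print(divisor[0, 0])  # corners are 1, edges 2, interior 4
print(torch.allclose(fold_ov(unfold_ov(x)), divisor * x))  # True
```

Dividing the folded result by `divisor` recovers the original values in the overlapping case.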