Seatunnel Source Code Analysis (2): Loading the Configuration File

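Requirements

Our company uses Seatunnel and plans to integrate it into an internal platform that offers a visual interface.

This gives rise to the following requirements:

- Start a Seatunnel application by passing parameters through a web API

- Customize logging and collect metrics; so far these include the application's inbound and outbound traffic, its start time, and its end time

- After a job finishes, automatically collect its logs from YARN by applicationId (collecting them by hand is tedious, and the logs disappear after a while)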

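Materials

- Seatunnel: 2.0.5

Version 2 has not been officially released yet, so you have to download the source and build it yourself.

Clone the official source from GitHub and open it locally in IntelliJ IDEA.

GitHub: https://github.com/apache/incubator-seatunnel

Official site: http://seatunnel.incubator.apache.org/

In the Terminal panel at the bottom of IDEA, build with Maven: mvn clean install -Dmaven.test.skip=true

The build takes a little over ten minutes. When it finishes, the packaged *.tar.gz installation archive is in the target directory of the seatunnel-dist module.

- Spark: 2.4.8

- Hadoop: 2.7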

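Series index

Seatunnel Source Code Analysis (1): Starting the Application

Seatunnel Source Code Analysis (2): Loading the Configuration File

Seatunnel Source Code Analysis (3): Loading Plugins

Seatunnel Source Code Analysis (4): Launching the Spark/Flink Program

Seatunnel Source Code Analysis (5): Changing the Startup Logo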

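Overview

This chapter reads the source code to explain how Seatunnel loads and parses its configuration file.

It then modifies the source so that the configuration is loaded from an in-memory Map instead of from a file.

Parsing and wrapping the command-line arguments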

public class SeatunnelSpark {

public static void main(String[] args) throws Exception {

//sparkconf =

// --conf "spark.app.name=SeaTunnel"

//--conf "spark.executor.memory=1g"

//--conf "spark.executor.cores=1"

//--conf "spark.executor.instances=2"

//JarDepOpts =

//FilesDepOpts =

//assemblyJarName = /opt/seatunnel/lib/seatunnel-core-spark.jar

//CMD_ARGUMENTS =

//--master yarn

//--deploy-mode client

//--config example/spark.batch.conf

CommandLineArgs sparkArgs = CommandLineUtils.parseSparkArgs(args);

Seatunnel.run(sparkArgs, SPARK);

}

}
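
The article does not show the inside of CommandLineUtils, so the following is only a minimal stdlib sketch of what this step produces: the `--name value` pairs from CMD_ARGUMENTS collected into a lookup structure. The class name SimpleArgs and its parse method are hypothetical and are not part of Seatunnel.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, NOT Seatunnel's CommandLineUtils:
// collects "--name value" pairs into an ordered map.
final class SimpleArgs {
    private SimpleArgs() {}

    static Map<String, String> parse(String[] args) {
        Map<String, String> options = new LinkedHashMap<>();
        for (int i = 0; i < args.length; i++) {
            String arg = args[i];
            if (!arg.startsWith("--")) {
                throw new IllegalArgumentException("Unexpected argument: " + arg);
            }
            if (i + 1 >= args.length) {
                throw new IllegalArgumentException("Missing value for " + arg);
            }
            // Strip the leading "--" and consume the next token as the value.
            options.put(arg.substring(2), args[++i]);
        }
        return options;
    }

    public static void main(String[] argv) {
        String[] args = {"--master", "yarn", "--deploy-mode", "client",
                         "--config", "example/spark.batch.conf"};
        // The "config" entry is the path that later reaches ConfigBuilder.
        System.out.println(parse(args).get("config"));
    }
}
```

The only value that matters for the rest of this chapter is the one under `config`, which is the path handed to ConfigBuilder.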

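Creating the configuration class

The value of the --config command-line argument is parsed, wrapped, and passed along until the ConfigBuilder class uses it to load the configuration file.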

public class Seatunnel {

private static final Logger LOGGER = LoggerFactory.getLogger(Seatunnel.class);

public static void run(CommandLineArgs commandLineArgs, Engine engine) throws Exception {

String configFilePath = getConfigFilePath(commandLineArgs, engine);

LOGGER.info("Seatunnel.run.self.configFilePath:" + configFilePath);

...

entryPoint(configFilePath, engine);

...

}

private static void entryPoint(String configFile, Engine engine) throws Exception {

ConfigBuilder configBuilder = new ConfigBuilder(configFile, engine);

...

}

...

}

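Look at the ConfigBuilder constructor: configFile is the path of the configuration file passed in through --config, and engine is Spark or Flink, as determined by the launch script and the entry-point class.

- load(): loads, parses, and wraps the configuration file

- createEnv(): creates the execution environment object for Spark/Flink

- new ConfigPackage(engine.getEngine()): builds the package paths that may be needed, following the package naming convention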

public class ConfigBuilder {

private static final Logger LOGGER = LoggerFactory.getLogger(ConfigBuilder.class);

private static final String PLUGIN_NAME_KEY = "plugin_name";

private final String configFile;

private final Engine engine;

private ConfigPackage configPackage;

private final Config config;

private boolean streaming;

private Config envConfig;

private final RuntimeEnv env;

public ConfigBuilder(String configFile, Engine engine) {

this.configFile = configFile;

this.engine = engine;

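// Load and parse the configuration file from its path

this.config = load();

// Create the matching execution environment from the configuration

this.env = createEnv();

// Build the package paths for the chosen engine, following the package naming convention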

this.configPackage = new ConfigPackage(engine.getEngine());

}

}

Loading and parsing the configuration file: load()

Given configFile (the path of the .conf file), load() ultimately produces a Config object.

public class ConfigBuilder {

private Config load() {

...

// config/example/spark.batch.conf

LOGGER.info("Loading config file: {}", configFile);

Config config = ConfigFactory

.parseFile(new File(configFile))

.resolve(ConfigResolveOptions.defaults().setAllowUnresolved(true))

.resolveWith(ConfigFactory.systemProperties(),

ConfigResolveOptions.defaults().setAllowUnresolved(true));

ConfigRenderOptions options = ConfigRenderOptions.concise().setFormatted(true);

// Parsing finished

LOGGER.info("parsed config file: {}", config.root().render(options));

// Add a custom log line

LOGGER.info("self.ConfigBuilder.load: " + config.toString());

return config;

}

}

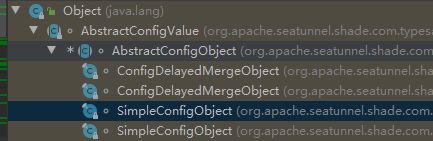
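
The resolve(...) and resolveWith(ConfigFactory.systemProperties(), ...) calls in load() deal with ${...} substitutions, and setAllowUnresolved(true) lets placeholders that cannot be resolved stay in place instead of raising an error. The lookup order can be sketched roughly as below. This is a simplified single-pass illustration using plain string maps, not the library's algorithm: it ignores nested paths and HOCON quoting rules, and the class SubstitutionSketch and its parameters are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, simplified model of substitution resolution.
// Lookup order: the config itself, then environment variables, then Java properties.
final class SubstitutionSketch {
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    private SubstitutionSketch() {}

    static Map<String, String> resolve(Map<String, String> config,
                                       Map<String, String> env,
                                       Map<String, String> props) {
        Map<String, String> resolved = new LinkedHashMap<>();
        for (Map.Entry<String, String> entry : config.entrySet()) {
            Matcher m = PLACEHOLDER.matcher(entry.getValue());
            StringBuffer sb = new StringBuffer();
            while (m.find()) {
                String value = lookup(m.group(1), config, env, props);
                // "Allow unresolved": keep the placeholder text when nothing matches.
                String replacement = value != null ? value : m.group(0);
                m.appendReplacement(sb, Matcher.quoteReplacement(replacement));
            }
            m.appendTail(sb);
            resolved.put(entry.getKey(), sb.toString());
        }
        return resolved;
    }

    private static String lookup(String name,
                                 Map<String, String> config,
                                 Map<String, String> env,
                                 Map<String, String> props) {
        if (config.containsKey(name)) {
            return config.get(name);
        }
        if (env.containsKey(name)) {
            return env.get(name);
        }
        return props.get(name); // null when the name is unknown everywhere
    }
}
```

Passing the environment and the properties in as maps keeps the sketch testable; in a real run they would correspond to System.getenv() and System.getProperties().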

Config is an interface; the implementation used here is SimpleConfig.

public interface Config extends ConfigMergeable {

...

}

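SimpleConfig has only one key field, AbstractConfigObject object.

A quick look through the class shows that almost all of its methods operate on this object field.

SimpleConfig also provides a toString method, so we can call toString on a SimpleConfig instance and see what it prints.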

final class SimpleConfig implements Config, MergeableValue, Serializable {

private static final long serialVersionUID = 1L;

private final AbstractConfigObject object;

...

public String toString() {

return "Config(" + this.object.toString() + ")";

}

}

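The printed output shows that the concrete type of object is SimpleConfigObject.

The object wraps the actual contents of the configuration file.

AbstractConfigObject:

It is an abstract class that extends AbstractConfigValue and has two implementations, ConfigDelayedMergeObject and SimpleConfigObject.

The log output above shows that the SimpleConfigObject subclass is the one used here.

The parent class AbstractConfigValue has a toString method that outputs the configuration content through render().

render() formats and writes out the configuration content recursively; we will not expand on it here.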

abstract class AbstractConfigValue implements ConfigValue, MergeableValue {

...

public String toString() {

StringBuilder sb = new StringBuilder();

this.render(sb, 0, true, (String)null, ConfigRenderOptions.concise());

return this.getClass().getSimpleName() + "(" + sb.toString() + ")";

}

...

}
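
To make the recursive rendering idea concrete, here is a minimal sketch that walks nested Map/List values and writes a concise JSON-like string. It is not the library's render() implementation: the class RenderSketch is hypothetical, and it skips string escaping and formatting options.

```java
import java.util.List;
import java.util.Map;

// Hypothetical sketch of recursive rendering; NOT AbstractConfigValue.render().
final class RenderSketch {
    private RenderSketch() {}

    static String render(Object value) {
        StringBuilder sb = new StringBuilder();
        render(value, sb);
        return sb.toString();
    }

    private static void render(Object value, StringBuilder sb) {
        if (value instanceof Map) {
            // Objects: render each key, then recurse into its value.
            sb.append('{');
            boolean first = true;
            for (Map.Entry<?, ?> e : ((Map<?, ?>) value).entrySet()) {
                if (!first) {
                    sb.append(',');
                }
                first = false;
                sb.append('"').append(e.getKey()).append("\":");
                render(e.getValue(), sb);
            }
            sb.append('}');
        } else if (value instanceof List) {
            // Lists: recurse into each element.
            sb.append('[');
            boolean first = true;
            for (Object item : (List<?>) value) {
                if (!first) {
                    sb.append(',');
                }
                first = false;
                render(item, sb);
            }
            sb.append(']');
        } else if (value instanceof String) {
            sb.append('"').append(value).append('"'); // no escaping in this sketch
        } else {
            sb.append(value); // numbers, booleans, null
        }
    }
}
```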

The SimpleConfigObject subclass

final class SimpleConfigObject extends AbstractConfigObject implements Serializable {

private static final long serialVersionUID = 2L;

private final Map<String, AbstractConfigValue> value;

private final boolean resolved;

private final boolean ignoresFallbacks;

private static final String EMPTY_NAME = "empty config";

private static final SimpleConfigObject emptyInstance = empty(SimpleConfigOrigin.newSimple("empty config"));

...

}

Back in the load() method above, ConfigFactory calls parseFile to produce the Config object.

Config config = ConfigFactory

.parseFile(new File(configFile))

.resolve(ConfigResolveOptions.defaults().setAllowUnresolved(true))

.resolveWith(ConfigFactory.systemProperties(),

ConfigResolveOptions.defaults().setAllowUnresolved(true));

ConfigFactory

The ConfigFactory class offers many ways to create a Config.

newFile(file, options) produces a Parseable object.

parse() produces a ConfigObject.

toConfig() finally produces a Config object.

public final class ConfigFactory {

...

public static Config parseFile(File file) {

return parseFile(file, ConfigParseOptions.defaults());

}

public static Config parseFile(File file, ConfigParseOptions options) {

return Parseable.newFile(file, options).parse().toConfig();

}

public static Config parseResources(Class<?> klass, String resource)

public static Config parseResourcesAnySyntax(Class<?> klass, String resourceBasename)

public static Config parseString(String s)

public static Config parseMap(Map<String, ? extends Object> values)

...

}

Parseable:

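Step into parse() to enter the Parseable class; following the calls downward leads to the rawParseValue method.

That method has two parsing paths, one through ConfigDocument and one for Properties; we will not dig any deeper here.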

public abstract class Parseable implements ConfigParseable {

...

private AbstractConfigValue rawParseValue(Reader reader, ConfigOrigin origin, ConfigParseOptions finalOptions) throws IOException {

if (finalOptions.getSyntax() == ConfigSyntax.PROPERTIES) {

return PropertiesParser.parse(reader, origin);

} else {

Iterator<Token> tokens = Tokenizer.tokenize(origin, reader, finalOptions.getSyntax());

ConfigNodeRoot document = ConfigDocumentParser.parse(tokens, origin, finalOptions);

return ConfigParser.parse(document, origin, finalOptions, this.includeContext());

}

}

...

}
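
For the PROPERTIES branch, the general idea of turning flat dotted keys into a nested structure can be sketched with java.util.Properties. This is an illustration of the idea only, not the library's PropertiesParser: the class PropertiesSketch is hypothetical, all values stay strings, and conflicting keys such as `a` and `a.b` are not handled.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

// Hypothetical sketch: flat "a.b.c=value" properties become nested maps.
final class PropertiesSketch {
    private PropertiesSketch() {}

    @SuppressWarnings("unchecked")
    static Map<String, Object> parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Map<String, Object> root = new TreeMap<>();
        for (String key : props.stringPropertyNames()) {
            String[] path = key.split("\\.");
            Map<String, Object> node = root;
            // Walk (and create) one nested map per path segment except the last.
            for (int i = 0; i < path.length - 1; i++) {
                Object child = node.get(path[i]);
                if (!(child instanceof Map)) {
                    child = new TreeMap<String, Object>();
                    node.put(path[i], child);
                }
                node = (Map<String, Object>) child;
            }
            node.put(path[path.length - 1], props.getProperty(key));
        }
        return root;
    }
}
```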

Other operations in ConfigBuilder

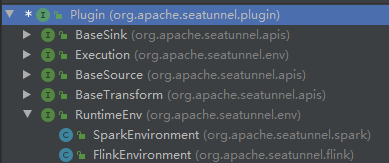

- createEnv

Creates the execution environment for Spark/Flink, such as Spark's SparkSession and StreamingContext.

private RuntimeEnv createEnv() {

envConfig = config.getConfig("env");

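// Decide whether this is streaming mode by checking whether the source plugin name ends with "stream"

streaming = checkIsStreaming();

RuntimeEnv env = null;

// Create the wrapper class for the Spark or Flink execution environment, based on the engine flag passed in

// At this point the actual Spark/Flink execution environment has not been created yet

// Spark: SparkSession/StreamingContext

// Flink: StreamExecutionEnvironment/StreamTableEnvironment/ExecutionEnvironment/BatchTableEnvironment, etc.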

switch (engine) {

case SPARK:

env = new SparkEnvironment();

break;

case FLINK:

env = new FlinkEnvironment();

break;

default:

throw new IllegalArgumentException("Engine: " + engine + " is not supported");

}

env.setConfig(envConfig);

// Create the Spark/Flink contexts, sessions, and so on

env.prepare(streaming);

return env;

}

private boolean checkIsStreaming() {

List<? extends Config> sourceConfigList = config.getConfigList(PluginType.SOURCE.getType());

// Decide whether this is streaming mode by checking whether the first source plugin's name ends with "stream"

return sourceConfigList.get(0).getString(PLUGIN_NAME_KEY).toLowerCase().endsWith("stream");

}

}
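
Note that checkIsStreaming() looks only at the first source and would fail with an index error if the source list were empty. The rule can be sketched on plain collections as follows, with explicit guards added. The class StreamingCheckSketch is hypothetical, and the guards are an addition that the original method does not have.

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical sketch of the "name ends with stream" rule, with added guards.
final class StreamingCheckSketch {
    static final String PLUGIN_NAME_KEY = "plugin_name";

    private StreamingCheckSketch() {}

    static boolean isStreaming(List<Map<String, Object>> sources) {
        if (sources == null || sources.isEmpty()) {
            throw new IllegalArgumentException("At least one source plugin is required");
        }
        // Only the first source decides the mode, as in the original method.
        Object name = sources.get(0).get(PLUGIN_NAME_KEY);
        if (name == null) {
            throw new IllegalArgumentException("First source has no " + PLUGIN_NAME_KEY);
        }
        return name.toString().toLowerCase(Locale.ROOT).endsWith("stream");
    }
}
```

With this rule a first source named `Fake` selects batch mode, while any name ending in `stream` (case-insensitive) selects streaming mode.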

SparkEnvironment

// Spark execution environment

// The streaming context is created whether or not the job is a streaming job

public class SparkEnvironment implements RuntimeEnv {

...

@Override

public void prepare(Boolean prepareEnv) {

SparkConf sparkConf = createSparkConf();

this.sparkSession = SparkSession.builder().config(sparkConf).getOrCreate();

createStreamingContext();

}

private SparkConf createSparkConf() {

SparkConf sparkConf = new SparkConf();

this.config.entrySet().forEach(entry -> sparkConf.set(entry.getKey(), String.valueOf(entry.getValue().unwrapped())));

return sparkConf;

}

private void createStreamingContext() {

SparkConf conf = this.sparkSession.sparkContext().getConf();

long duration = conf.getLong("spark.stream.batchDuration", DEFAULT_SPARK_STREAMING_DURATION);

if (this.streamingContext == null) {

this.streamingContext = new StreamingContext(sparkSession.sparkContext(), Seconds.apply(duration));

}

}

...

}

FlinkEnvironment

// The Flink engine creates the environment that matches the mode (streaming or batch)

public class FlinkEnvironment implements RuntimeEnv {

...

@Override

public void prepare(Boolean isStreaming) {

this.isStreaming = isStreaming;

if (isStreaming) {

createStreamEnvironment();

createStreamTableEnvironment();

} else {

createExecutionEnvironment();

createBatchTableEnvironment();

}

if (config.hasPath("job.name")) {

jobName = config.getString("job.name");

}

}

...

}

- ConfigPackage

ConfigPackage:

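Following the package naming convention, it uses the engine name (spark/flink) to build the package and class paths that may be referenced later.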

public class ConfigPackage {

...

public ConfigPackage(String engine) {

this.packagePrefix = "org.apache.seatunnel." + engine;

this.upperEngine = engine.substring(0, 1).toUpperCase() + engine.substring(1);

this.sourcePackage = packagePrefix + ".source";

this.transformPackage = packagePrefix + ".transform";

this.sinkPackage = packagePrefix + ".sink";

this.envPackage = packagePrefix + ".env";

this.baseSourceClass = packagePrefix + ".Base" + upperEngine + "Source";

this.baseTransformClass = packagePrefix + ".Base" + upperEngine + "Transform";

this.baseSinkClass = packagePrefix + ".Base" + upperEngine + "Sink";

}

...

}
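
To see what these concatenations produce, the sketch below repeats two of them as standalone functions. The class PackageNamesSketch is hypothetical; the string patterns are copied from the constructor above.

```java
// Hypothetical standalone version of two ConfigPackage concatenations.
final class PackageNamesSketch {
    private static final String PREFIX = "org.apache.seatunnel.";

    private PackageNamesSketch() {}

    // "spark" -> "Spark"
    static String upperFirst(String engine) {
        return engine.substring(0, 1).toUpperCase() + engine.substring(1);
    }

    static String sourcePackage(String engine) {
        return PREFIX + engine + ".source";
    }

    static String baseSourceClass(String engine) {
        return PREFIX + engine + ".Base" + upperFirst(engine) + "Source";
    }

    public static void main(String[] args) {
        System.out.println(sourcePackage("spark"));   // org.apache.seatunnel.spark.source
        System.out.println(baseSourceClass("spark")); // org.apache.seatunnel.spark.BaseSparkSource
    }
}
```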

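Test: bypassing the configuration file and specifying the configuration in code

Tracing the load() method above shows that ConfigFactory offers other entry points besides parseFile.

Here we try parseMap, which parses configuration data held in memory.

Modify the source, rebuild the project, and test placing the contents of the configuration file directly in memory for parsing.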

public class ConfigBuilder {

...

private Config load() {

if (configFile.isEmpty()) {

throw new ConfigRuntimeException("Please specify config file");

}

// config/example/spark.batch.conf

LOGGER.info("Loading config file: {}", configFile);

// variables substitution / variables resolution order:

// config file --> system environment --> java properties

// Config config = ConfigFactory

// .parseFile(new File(configFile))

// .resolve(ConfigResolveOptions.defaults().setAllowUnresolved(true))

// .resolveWith(ConfigFactory.systemProperties(),

// ConfigResolveOptions.defaults().setAllowUnresolved(true));

Map<String, Object> configMap = new HashMap<>();

Map<String, Object> envMap = new HashMap<>();

envMap.put("spark.app.name", "SeaTunnel");

envMap.put("spark.executor.cores", 1);

envMap.put("spark.executor.instances", 2);

envMap.put("spark.executor.memory", "1g");

List<Map<String, Object>> sourceList = new ArrayList<>();

Map<String, Object> sourceMap = new HashMap<>();

sourceMap.put("plugin_name", "Fake");

sourceMap.put("result_table_name", "my_conf_map");

sourceList.add(sourceMap);

List<Map<String, Object>> sinkList = new ArrayList<>();

Map<String, Object> sinkMap = new HashMap<>();

sinkMap.put("plugin_name", "Console");

sinkList.add(sinkMap);

configMap.put("env", envMap);

configMap.put("source", sourceList);

configMap.put("sink", sinkList);

configMap.put("transform", new ArrayList<>());

LOGGER.info("Custom configuration...");

Config config = ConfigFactory

.parseMap(configMap)

.resolve(ConfigResolveOptions.defaults().setAllowUnresolved(true))

.resolveWith(ConfigFactory.systemProperties(),

ConfigResolveOptions.defaults().setAllowUnresolved(true));

ConfigRenderOptions options = ConfigRenderOptions.concise().setFormatted(true);

// conf -> json config

LOGGER.info("parsed config file: {}", config.root().render(options));

LOGGER.info("self.ConfigBuilder.load: " + config.toString());

return config;

}

...

}

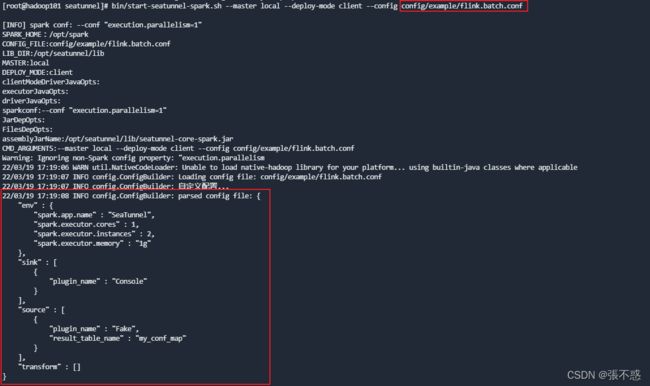
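
The hard-coded map above is only a test. For the web API requirement, the same env/source/transform/sink structure could be assembled from request parameters and then handed to ConfigFactory.parseMap. A minimal sketch of such a builder follows; the class ConfigMapSketch and its methods are hypothetical, and the sketch stops at building the Map so that it needs nothing beyond the JDK.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical builder for the Map layout used in the modified load().
final class ConfigMapSketch {
    private ConfigMapSketch() {}

    // One plugin entry: its options plus the mandatory "plugin_name" key.
    static Map<String, Object> plugin(String pluginName, Map<String, Object> options) {
        Map<String, Object> plugin = new LinkedHashMap<String, Object>(options);
        plugin.put("plugin_name", pluginName);
        return plugin;
    }

    // Root map with the four top-level sections; inputs are copied defensively.
    static Map<String, Object> build(Map<String, Object> env,
                                     List<Map<String, Object>> sources,
                                     List<Map<String, Object>> transforms,
                                     List<Map<String, Object>> sinks) {
        Map<String, Object> root = new LinkedHashMap<String, Object>();
        root.put("env", new LinkedHashMap<String, Object>(env));
        root.put("source", new ArrayList<Map<String, Object>>(sources));
        root.put("transform", new ArrayList<Map<String, Object>>(transforms));
        root.put("sink", new ArrayList<Map<String, Object>>(sinks));
        return root;
    }
}
```

The returned map has the same shape as configMap in the modified load(), so it could replace the hard-coded block there.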

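Verifying the source change

Unpack the newly built package on the server and replace the original lib directory with the new one.

Pass any configuration file that differs from the one used before, then check the configuration printed in the logs to confirm that the configuration specified in code was loaded.

bin/start-seatunnel-spark.sh --master local --deploy-mode client --config config/example/flink.batch.conf

As the output shows, even though the official Flink example configuration file was passed in, the configuration actually used is the one we specified in code.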

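Summary

Walking through the source above gives a rough picture of how Seatunnel loads its configuration file.

The test also confirms that the ConfigFactory used by Seatunnel can build the configuration from an in-memory data structure (parseMap) instead of a file.

A natural next step is to test launching a Seatunnel application through a Java API.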