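Kubernetes Study Notes

I. Introduction to Kubernetes

Kubernetes (K8s) automates the deployment, scaling, and management of containerized applications. In essence, Kubernetes is a cluster of servers: it runs specific programs on every node of the cluster to manage the containers on that node. Its goal is to automate resource management, and its main features are:

1. Features

Self-healing: if a container crashes, Kubernetes quickly starts a new container to replace it;

Elastic scaling: the number of running service instances can be increased or decreased automatically according to traffic;

Service discovery: a service can automatically discover the services it depends on;

Load balancing: if several instances of a service are running, requests are automatically load-balanced across them;

Version rollback: if a newly released version turns out to be faulty, you can immediately roll back to the previous version;

Storage orchestration: storage volumes can be created automatically according to the containers' own needs.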

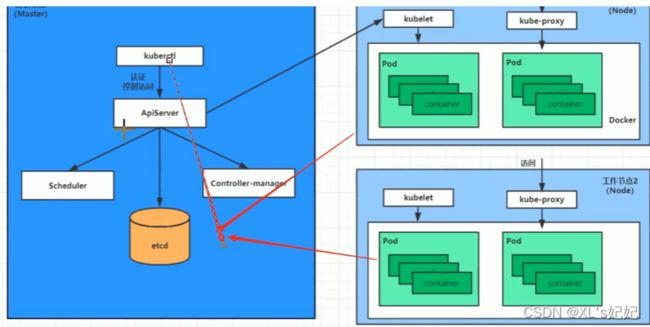

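2. Kubernetes components

A Kubernetes cluster consists mainly of control nodes (master) and worker nodes (node), with different components installed on each kind of node.

master: the control plane of the cluster, responsible for cluster decisions (management)

ApiServer: the single entry point for resource operations; it receives user commands and provides authentication, authorization, API registration and discovery, and similar mechanisms

Scheduler: schedules cluster resources, placing Pods onto suitable nodes according to predefined scheduling policies

ControllerManager: maintains the cluster state, e.g. deployment arrangements, failure detection, auto-scaling, rolling updates, etc.

Etcd: stores information about all resource objects in the cluster

node: the data plane of the cluster, providing the runtime environment for containers

Kubelet: maintains the container lifecycle, i.e. creates, updates, and destroys containers by controlling Docker

KubeProxy: provides service discovery and load balancing inside the cluster

Docker: performs the various container operations on the node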

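The following walks through deploying an nginx service to show how the Kubernetes components call one another:

1. First, note that once the Kubernetes environment has started, both the master and the nodes store their own information in the etcd database.

2. A request to install an nginx service is first sent to the apiserver component on the master node.

3. The apiserver calls the scheduler component to decide which node the service should be installed on. The scheduler reads the information of every node from etcd, selects one according to its algorithm, and reports the result back to the apiserver.

4. The apiserver calls the controller-manager to have the selected node install the nginx service.

5. When the kubelet receives the instruction, it notifies Docker, which then starts an nginx pod. A pod is the smallest unit of operation in Kubernetes; containers must run inside a pod.

6. The nginx service is now running. To access it, kube-proxy provides proxied access to the pod, so users can reach the nginx service in the cluster.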
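On a cluster built with kubeadm (as in the installation chapter), most of the components in this walkthrough can be observed directly. The sketch below assumes kubeadm's default layout, in which kube-apiserver, kube-scheduler, kube-controller-manager, etcd and kube-proxy run as pods in the `kube-system` namespace, while kubelet runs as an ordinary systemd service on every machine. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for inspecting the components described above.
# Assumes a kubeadm-built cluster and a working kubectl configuration.

# Control-plane components and kube-proxy run as pods in kube-system;
# -o wide adds the node each pod was scheduled onto.
show_component_pods() {
  kubectl get pods -n kube-system -o wide
}

# kubelet is not a pod: it is a systemd service on each master/node.
show_kubelet_status() {
  systemctl status kubelet --no-pager
}
```

Run `show_component_pods` on the master once the cluster is up; the control-plane pods are static pods whose names end with the node name, such as `kube-apiserver-master` and `etcd-master`.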

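3. Kubernetes concepts

Master: the cluster control node; every cluster needs at least one master node to manage and control it;

Node: a workload node; the master assigns containers to these worker nodes, and Docker on each node runs the containers;

Pod: the smallest unit of control in Kubernetes; containers always run inside pods, and a pod can hold one or more containers;

Controller: manages pods, e.g. starting pods, stopping pods, scaling the number of pods, and so on;

Service: the unified entry point through which pods provide services externally; one Service can front multiple pods of the same kind;

Label: used to categorize pods; pods of the same kind carry the same label;

Namespace: used to isolate the runtime environments of pods.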
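Labels and namespaces from the list above appear directly as kubectl options: `-l` selects pods by label and `-n` restricts a query to one namespace. A minimal sketch; the helper name is hypothetical, and the example label `app=nginx` is the one `kubectl create deployment nginx` attaches to its pods (it shows up in the pod YAML in chapter V).

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the pods carrying a label within one namespace.
#   $1  label selector, e.g. app=nginx
#   $2  namespace (defaults to "default")
pods_by_label() {
  local selector="$1" ns="${2:-default}"
  kubectl get pods -l "$selector" -n "$ns"
}
```

For example, `pods_by_label app=nginx` lists the nginx pods in `default`, and `pods_by_label app=nginx dev` looks in the `dev` namespace instead.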

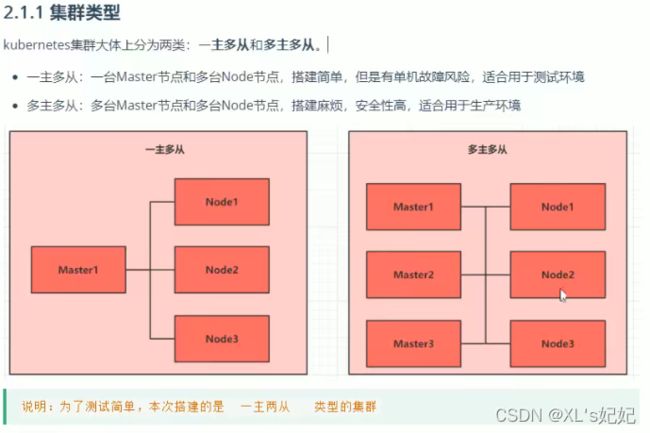

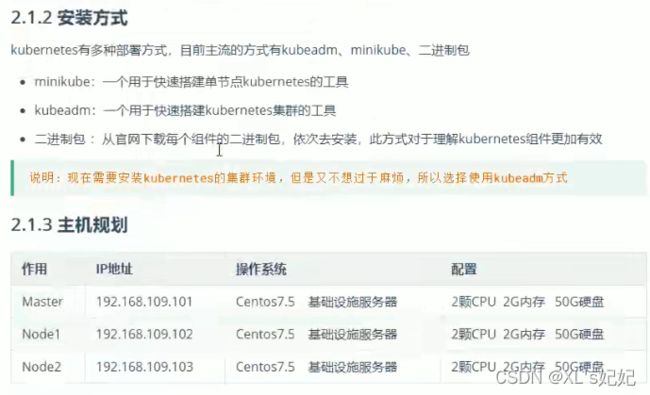

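II. Environment planning

III. Operating system installation and environment initialization

1. Operating system installation

Install CentOS 7.5 or later on three identical servers and configure their IP addresses (installation steps omitted).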

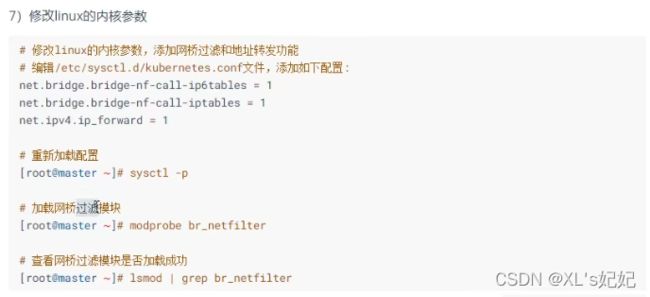

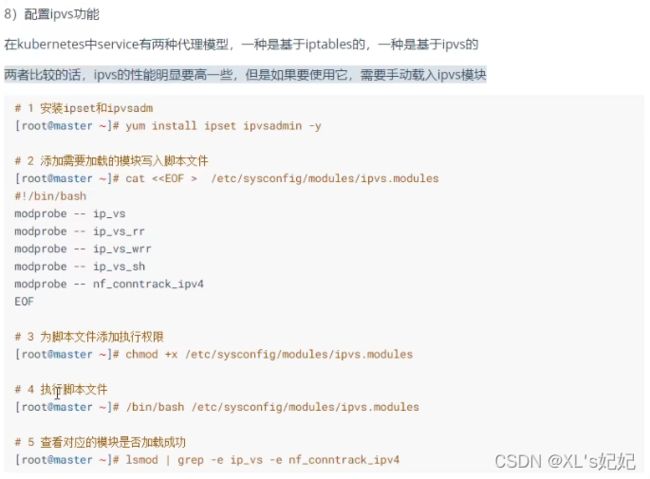

2. Environment initialization

IV. Installing components

1. Install Docker (on all three servers)

Uninstall any existing Docker:

Kill the running Docker containers:

docker kill $(docker ps -q)

Remove all Docker containers:

docker rm $(docker ps -a -q)

Remove all Docker images:

docker rmi $(docker images -q)

Stop the Docker service:

systemctl stop docker

Delete the Docker storage directories:

rm -rf /etc/docker

rm -rf /run/docker

rm -rf /var/lib/dockershim

rm -rf /var/lib/docker

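Then run the "Delete the Docker storage directories" step above once more.

With this preparation done, the last step is to remove the Docker packages themselves.

Check which Docker packages are installed: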

[root@localhost ~]# yum list installed | grep docker

containerd.io.x86_64 1.2.13-3.2.el7 @docker-ce-stable

docker-ce.x86_64 3:19.03.12-3.el7 @docker-ce-stable

docker-ce-cli.x86_64 1:19.03.12-3.el7 @docker-ce-stable

Uninstall these packages:

[root@localhost ~]# yum remove containerd.io.x86_64 docker-ce.x86_64 docker-ce-cli.x86_64
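The container and image removal steps above can be collected into one function. This is a sketch, not the author's script: the `$(...)` form passes docker an empty argument list when there is nothing to remove, and `docker kill` also complains about containers that are already stopped, so the version below kills only running containers and skips empty lists. The function name is hypothetical; the directory removal and `yum remove` steps are deliberately left out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove all Docker containers and images.
# Each step is skipped when there is nothing to act on.
# $ids is left unquoted on purpose so several IDs become several arguments.
remove_docker_objects() {
  local ids
  ids=$(docker ps -q)                    # running containers only
  if [ -n "$ids" ]; then docker kill $ids; fi
  ids=$(docker ps -a -q)                 # all containers, including stopped ones
  if [ -n "$ids" ]; then docker rm $ids; fi
  ids=$(docker images -q | sort -u)      # image IDs, duplicates removed
  if [ -n "$ids" ]; then docker rmi $ids; fi
}
```

After `remove_docker_objects`, continue with `systemctl stop docker`, the directory removal and `yum remove` as described above.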

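Install Docker

(1) Install the required package (yum-utils provides yum-config-manager)

yum install -y yum-utils

(2) Set up the repository (add only one of the two)

# Official repository (hosted overseas)
yum-config-manager \
--add-repo \
https://download.docker.com/linux/centos/docker-ce.repo

# Aliyun Docker repository (mirror in China)
yum-config-manager \
--add-repo \
https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo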

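(3) Update the yum package index

yum makecache fast

(4) List the docker-ce versions available in the configured repository

yum list docker-ce --showduplicates

(5) Install a specific version of docker-ce

# --setopt=obsoletes=0 must be specified; otherwise yum automatically installs a newer version

yum install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7 -y

(6) Add a configuration file

By default Docker uses cgroupfs as its cgroup driver, while Kubernetes recommends using systemd instead of cgroupfs.

[root@node1 ~]# mkdir -p /etc/docker
[root@node1 ~]# cat <<EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://kn0t2bca.mirror.aliyuncs.com"]
}
EOF

(7) Start Docker and enable it at boot

[root@node1 ~]# systemctl restart docker
[root@node1 ~]# systemctl enable docker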
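The configuration-file and restart steps above can be scripted as below. This is a sketch: the target directory is a parameter so the function can first be tried against a temporary directory, and the quoted `'EOF'` keeps the shell from expanding anything inside the JSON. The mirror URL is copied from the notes and looks like a personal Aliyun accelerator address, so you would normally substitute your own. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Write the Docker daemon configuration used in these notes.
#   $1  target directory (defaults to /etc/docker)
write_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://kn0t2bca.mirror.aliyuncs.com"]
}
EOF
}

# Print Docker's active cgroup driver line from "docker info".
show_cgroup_driver() {
  docker info 2>/dev/null | grep -i 'cgroup driver'
}
```

After `write_daemon_json` and `systemctl restart docker`, `show_cgroup_driver` should report `Cgroup Driver: systemd`.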

2. Install the Kubernetes components (on all three servers)

# The official Kubernetes package repository is hosted overseas and is slow, so switch to a mirror in China
# Edit /etc/yum.repos.d/kubernetes.repo and add the following configuration

[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
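Instead of editing the file by hand, the repository definition above can be written with a heredoc. A minimal sketch; the function name is hypothetical and the directory is a parameter so it can be tested against a temporary directory. Note that the second `gpgkey` URL is a continuation line and therefore has to start with whitespace.

```shell
#!/usr/bin/env bash
# Write the Aliyun Kubernetes yum repository definition from the notes.
#   $1  target directory (defaults to /etc/yum.repos.d)
write_k8s_repo() {
  local dir="${1:-/etc/yum.repos.d}"
  cat > "$dir/kubernetes.repo" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

After writing the file, `yum makecache fast` followed by `yum list kubeadm --showduplicates` should show whether version 1.17.4-0 is available from the mirror.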

# Install kubeadm, kubelet and kubectl

[root@master ~]# yum install --setopt=obsoletes=0 kubeadm-1.17.4-0 kubelet-1.17.4-0 kubectl-1.17.4-0 -y

# Configure the kubelet cgroup driver

# Edit /etc/sysconfig/kubelet and add the following configuration

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"
KUBE_PROXY_MODE="ipvs"

# Enable kubelet at boot

[root@master ~]# systemctl enable kubelet

3. Prepare the cluster images

# Before installing the Kubernetes cluster, the images it needs must be prepared in advance; they can be listed with:

[root@master ~]# kubeadm config images list

# Download the images

# These images are hosted in the Kubernetes registry, which cannot be reached here for network reasons; the following is a workaround

images=(
  kube-apiserver:v1.17.4
  kube-controller-manager:v1.17.4
  kube-scheduler:v1.17.4
  kube-proxy:v1.17.4
  pause:3.1
  etcd:3.4.3-0
  coredns:1.6.5
)

# Pull each image from the Aliyun mirror, retag it with the k8s.gcr.io name kubeadm expects, then remove the mirror tag
for imageName in "${images[@]}" ; do
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
  docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
  docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
done
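A variant of the loop above with a dry-run switch, so the 21 docker commands can be reviewed before anything is pulled. The `DRY_RUN` variable and the `run` / `pull_and_retag` names are hypothetical additions; the image list and registry names are copied from the notes.

```shell
#!/usr/bin/env bash
# Pull the control-plane images from the Aliyun mirror and retag them with
# the k8s.gcr.io names kubeadm v1.17.4 expects.
# Set DRY_RUN=1 to print the docker commands instead of running them.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
images=(
  kube-apiserver:v1.17.4
  kube-controller-manager:v1.17.4
  kube-scheduler:v1.17.4
  kube-proxy:v1.17.4
  pause:3.1
  etcd:3.4.3-0
  coredns:1.6.5
)

# Execute a command, or only echo it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

pull_and_retag() {
  local name
  for name in "${images[@]}"; do
    run docker pull "$MIRROR/$name"
    run docker tag "$MIRROR/$name" "k8s.gcr.io/$name"
    run docker rmi "$MIRROR/$name"
  done
}
```

`DRY_RUN=1 pull_and_retag` prints the plan; `pull_and_retag` on its own performs it. Afterwards, `docker images` should list the seven `k8s.gcr.io/...` images.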

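4. Cluster initialization

Next, initialize the cluster and join the nodes to it.

The following operations only need to be run on the master node.

# Create the cluster

[root@master ~]# kubeadm init \
--kubernetes-version=v1.17.4 \
--pod-network-cidr=10.244.0.0/16 \
--service-cidr=10.96.0.0/12 \
--apiserver-advertise-address=192.168.29.152

# The following output means the master was initialized successfully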

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.29.152:6443 --token emd0va.l8sljwm2261q0f2n \

--discovery-token-ca-cert-hash sha256:c27ccaeedaed571477786127f66818bd3f965d64880c722f79a9a33bebbe7567

As prompted, create the required files on the master server:

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
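The `kubeadm join` command printed by `kubeadm init` contains a bootstrap token that expires (after 24 hours by default). If a node is added later, a fresh join command can be printed on the master. The wrapper names below are hypothetical; `kubeadm token create --print-join-command` and `kubeadm token list` are standard kubeadm subcommands.

```shell
#!/usr/bin/env bash
# Run on the master.
# Print a ready-to-use "kubeadm join ..." line with a newly created token.
print_join_command() {
  kubeadm token create --print-join-command
}

# Show existing bootstrap tokens and when they expire.
list_join_tokens() {
  kubeadm token list
}
```

Copy the line printed by `print_join_command` and run it as root on the new node.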

As prompted, add the nodes by running the join command on each node server:

[root@node1 docker]# kubeadm join 192.168.29.152:6443 --token emd0va.l8sljwm2261q0f2n \

> --discovery-token-ca-cert-hash sha256:c27ccaeedaed571477786127f66818bd3f965d64880c722f79a9a33bebbe7567

W0210 17:32:42.398924 3731 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

After adding the nodes, check on the master whether they joined successfully:

# No network plugin has been installed yet, so the status is NotReady

[root@master docker]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 11m v1.17.4

node1 NotReady <none> 2m3s v1.17.4

node2 NotReady <none> 52s v1.17.4

5. Install the Weave network plugin

[root@master ~]# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

serviceaccount/weave-net configured

clusterrole.rbac.authorization.k8s.io/weave-net configured

clusterrolebinding.rbac.authorization.k8s.io/weave-net configured

role.rbac.authorization.k8s.io/weave-net configured

rolebinding.rbac.authorization.k8s.io/weave-net configured

daemonset.apps/weave-net configured

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 3d21h v1.17.4

node1 Ready <none> 3d21h v1.17.4

node2 Ready <none> 3d21h v1.17.4
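Nodes usually need a little time to become Ready after the network plugin is applied. Instead of re-running `kubectl get nodes` by hand, `kubectl wait` can block until every node reports the Ready condition. A minimal sketch; the helper name and its default timeout are assumptions.

```shell
#!/usr/bin/env bash
# Block until all nodes report condition Ready, or give up after a timeout.
#   $1  timeout (defaults to 300s)
wait_nodes_ready() {
  local timeout="${1:-300s}"
  kubectl wait --for=condition=Ready nodes --all --timeout="$timeout"
}
```

`wait_nodes_ready` exits with status 0 once all nodes are Ready and with a non-zero status if the timeout is reached first, which makes it usable in setup scripts.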

6. Deploy an nginx service

# Create an nginx deployment

[root@master ~]# kubectl create deployment nginx --image=nginx:1.14-alpine

deployment.apps/nginx created

# Expose port 80 as a NodePort service

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

# View the pods and services

[root@master ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-6867cdf567-488c7 1/1 Running 0 3m26s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d22h

service/nginx NodePort 10.103.62.75 <none> 80:30104/TCP 85s

[root@master ~]#
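In the `PORT(S)` column, `80:30104/TCP` means the service's port 80 is published on NodePort 30104 of every node, so nginx can be reached at any node IP on that port (for example `http://192.168.29.152:30104`). A small sketch for obtaining that port: one function parses the column text, the other asks the API server with a JSONPath query. All helper names are hypothetical, and `curl` is assumed to be installed.

```shell
#!/usr/bin/env bash
# Extract the NodePort from a PORT(S) value such as "80:30104/TCP".
nodeport_from_ports() {
  local rest="${1#*:}"   # strip "80:"  -> "30104/TCP"
  echo "${rest%%/*}"     # strip "/TCP" -> "30104"
}

# Ask the API server for the first NodePort of a service (default: nginx).
get_nodeport() {
  kubectl get svc "${1:-nginx}" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Fetch the nginx welcome page through a node IP and the NodePort.
#   $1  node IP, e.g. 192.168.29.152
curl_nginx() {
  curl -s "http://$1:$(get_nodeport nginx)/"
}
```

`curl_nginx 192.168.29.152` should return the HTML of the nginx welcome page.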

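V. Resource management

1. Introduction to resource management

In Kubernetes, everything is abstracted as a resource, and users manage Kubernetes by operating on these resources.

Kubernetes is essentially a cluster system on which users can deploy all kinds of services. Deploying a service really means running containers in the Kubernetes cluster and running the specified programs inside those containers.

The smallest management unit in Kubernetes is the pod, not the container, so containers can only be placed inside pods. In addition, Kubernetes usually does not manage pods directly but through pod controllers.

Once a pod provides a service, the next question is how to access that service; Kubernetes provides the Service resource for this.

And if the data of a program in a pod needs to be persisted, Kubernetes also offers various kinds of storage.

2. Introduction to YAML

YAML is a markup language similar to XML and JSON. It is data-centric rather than markup-centric, so its syntax is fairly simple, and it bills itself as "a human-friendly data serialization language".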

# For example, in XML:

<heima>
    <age>15</age>
    <address>chengdu</address>
</heima>

# And in YAML:

heima:
  age: 15
  address: chengdu

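YAML syntax is simple; the main rules are:

- Case sensitive

- Indentation expresses hierarchy

- Tabs are not allowed for indentation; only spaces

- The number of indentation spaces does not matter, as long as elements at the same level are left-aligned

- "#" marks a comment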
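The "spaces only" rule is easy to break by accident in an editor. A hypothetical grep-based check that reports lines whose indentation contains a tab; it is a quick lint under that single rule, not a YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical lint: report lines whose indentation contains a tab
# (YAML allows only spaces for indentation).
# Prints offending lines with line numbers; returns 1 if any were found.
check_no_tab_indent() {
  if grep -n $'^[[:space:]]*\t' "$1"; then
    return 1
  fi
  return 0
}
```

For example, `check_no_tab_indent nginxpod.yaml` prints nothing and succeeds when the file is indented with spaces only.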

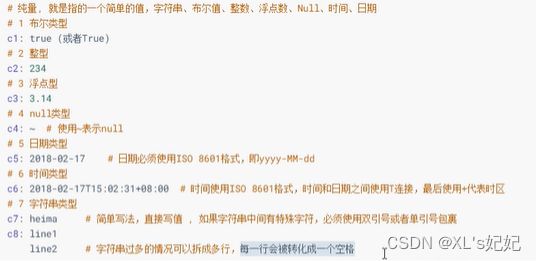

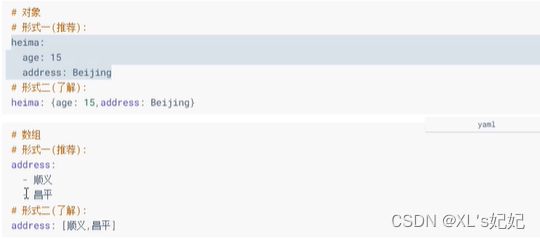

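YAML supports the following data types:

- Scalars: single, indivisible values

- Objects: collections of key-value pairs, also called mappings/hashes/dictionaries

- Arrays: ordered sequences of values, also called sequences/lists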

2.2 Data types

Tips:

1. When writing YAML, always put a space after the colon ":"

2. To put multiple YAML documents in one file, separate them with a "---" line
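Tip 1 matters because `age:15` (no space) is read as the single string `age:15` rather than as the key `age` with value 15. A rough, hypothetical grep-based check for this mistake is sketched below; it only looks for a plain key at the start of a line followed directly by a non-space character, so it is a heuristic rather than a YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical lint: find "key:value" lines that lack the space after ":".
# Heuristic only: checks a plain key at the start of a line (after indentation).
# Prints offending lines with line numbers; returns 1 if any were found.
check_colon_space() {
  if grep -nE '^[[:space:]]*[A-Za-z0-9_-]+:[^[:space:]]' "$1"; then
    return 1
  fi
  return 0
}
```

Lines such as `image: nginx:1.17.1` pass, because the first colon after the key is followed by a space.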

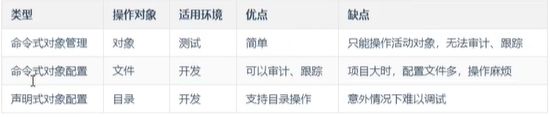

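3. Ways to manage resources

Imperative object management: operate on Kubernetes resources directly with commands

kubectl run nginx-pod --image=nginx:1.17.1 --port=80

Imperative object configuration: operate on Kubernetes resources with a command plus a configuration file

kubectl create/patch -f nginx-pod.yaml

Declarative object configuration: operate on Kubernetes resources with the apply command plus a configuration file

kubectl apply -f nginx-pod.yaml

3.1 Imperative object management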

[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-6867cdf567-488c7 1/1 Running 0 68m

[root@master ~]# kubectl get pod nginx-6867cdf567-488c7

NAME READY STATUS RESTARTS AGE

nginx-6867cdf567-488c7 1/1 Running 0 69m

[root@master ~]# kubectl get pod nginx-6867cdf567-488c7 -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-6867cdf567-488c7 1/1 Running 0 70m 10.244.2.2 node2

# Show the pod in YAML format

[root@master ~]# kubectl get pod nginx-6867cdf567-488c7 -o yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: "2022-02-14T07:20:30Z"
  generateName: nginx-6867cdf567-
  labels:
    app: nginx
    pod-template-hash: 6867cdf567
  name: nginx-6867cdf567-488c7
  namespace: default
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: nginx-6867cdf567
    uid: 197f3060-dbd2-4f7a-b62e-d6b102f50244
  resourceVersion: "12197"
  selfLink: /api/v1/namespaces/default/pods/nginx-6867cdf567-488c7
  uid: fadabb6a-cb62-4913-87ff-87a075fdb7c0
spec:
  containers:
  - image: nginx:1.14-alpine
    imagePullPolicy: IfNotPresent
    name: nginx
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-dvg6g
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: node2
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-dvg6g
    secret:
      defaultMode: 420
      secretName: default-token-dvg6g
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2022-02-14T07:20:30Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2022-02-14T07:21:18Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2022-02-14T07:21:18Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2022-02-14T07:20:30Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://c7e751c527b277c805ebbfc9bca72c9638eb93aefbb7155bd68c86
    image: nginx:1.14-alpine
    imageID: docker-pullable://nginx@sha256:485b610fefec7ff6c463ced9623314a04ed6
    lastState: {}
    name: nginx
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2022-02-14T07:21:16Z"
  hostIP: 192.168.29.134
  phase: Running
  podIP: 10.244.2.2
  podIPs:
  - ip: 10.244.2.2
  qosClass: BestEffort
  startTime: "2022-02-14T07:20:30Z"

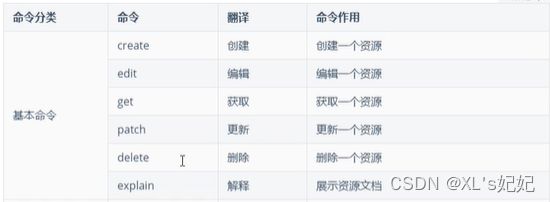

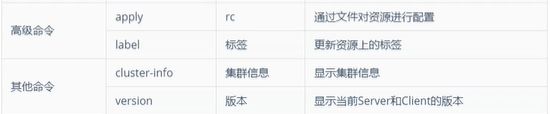

Commonly used commands:

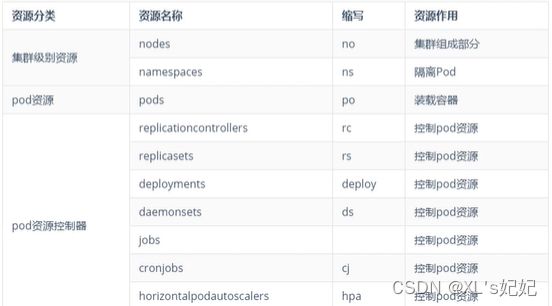

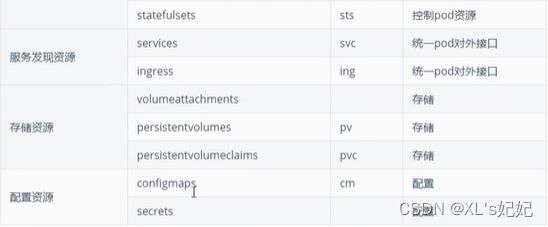

Resource types:

Everything in Kubernetes is abstracted as a resource; the available resources can be listed with:

kubectl api-resources
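`kubectl api-resources` also accepts a `--namespaced` filter, which separates resources that live inside a namespace (pods, services, ...) from cluster-wide ones (nodes, namespaces, ...). Its SHORTNAMES column explains abbreviations such as `ns` used below. A minimal sketch; the wrapper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list only namespaced resources (default) or only
# cluster-scoped ones.
#   $1  true (default) or false
list_resources() {
  kubectl api-resources --namespaced="${1:-true}"
}
```

`list_resources false` should include `nodes` and `namespaces`, which is why those two are never queried with `-n`.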

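Frequently used resources include the following:

The following creates and deletes a namespace and a pod as a simple demonstration of these commands: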

# Create a namespace

[root@master ~]# kubectl create namespace dev

namespace/dev created

# List namespaces

[root@master ~]# kubectl get ns

NAME STATUS AGE

default Active 3d23h

dev Active 31s

kube-node-lease Active 3d23h

kube-public Active 3d23h

kube-system Active 3d23h

# Run nginx in the dev namespace

[root@master ~]# kubectl run pod --image=nginx:1.17.1 -n dev

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/pod created

# List pods; by default only the default namespace is shown

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-6867cdf567-488c7 1/1 Running 0 100m

# List the pods in a specific namespace

[root@master ~]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

pod-cbb995bbf-zz4kh 0/1 ContainerCreating 0 33s

# Show the details and creation events of pod pod-cbb995bbf-zz4kh

[root@master ~]# kubectl describe pods pod-cbb995bbf-zz4kh -n dev

Name: pod-cbb995bbf-zz4kh

Namespace: dev

Priority: 0

Node: node1/192.168.29.137

Start Time: Mon, 14 Feb 2022 17:01:12 +0800

Labels: pod-template-hash=cbb995bbf

run=pod

Annotations: <none>

Status: Running

IP: 10.244.1.4

IPs:

IP: 10.244.1.4

Controlled By: ReplicaSet/pod-cbb995bbf

Containers:

pod:

Container ID: docker://2f4c95123cc37c166c3b6fe56c145eff7103b1e0a2a557f2583539aa369a5c2f

Image: nginx:1.17.1

Image ID: docker-pullable://nginx@sha256:b4b9b3eee194703fc2fa8afa5b7510c77ae70cfba567af1376a573a967c03dbb

Port: <none>

Host Port: <none>

State: Running

Started: Mon, 14 Feb 2022 17:04:38 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-gbfm9 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-gbfm9:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-gbfm9

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 4m45s default-scheduler Successfully assigned dev/pod-cbb995bbf-zz4kh to node1

Normal Pulling 4m41s kubelet, node1 Pulling image "nginx:1.17.1"

Normal Pulled 80s kubelet, node1 Successfully pulled image "nginx:1.17.1"

Normal Created 79s kubelet, node1 Created container pod

Normal Started 79s kubelet, node1 Started container pod

# Delete the pod

[root@master ~]# kubectl delete pods pod-cbb995bbf-zz4kh -n dev

pod "pod-cbb995bbf-zz4kh" deleted

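# Deleting only the pod does not make it go away: kubectl run created a Deployment here, so its ReplicaSet ("Controlled By" above) automatically creates a replacement pod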

[root@master ~]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

pod-cbb995bbf-grfwx 0/1 ContainerCreating 0 19s

# Delete the namespace (this also deletes everything in it)

[root@master ~]# kubectl delete ns dev

namespace "dev" deleted

# List namespaces

[root@master ~]# kubectl get ns

NAME STATUS AGE

default Active 3d23h

kube-node-lease Active 3d23h

kube-public Active 3d23h

kube-system Active 3d23h
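The pod in this demo came back after being deleted because `kubectl run` in v1.17 created a Deployment (`deployment.apps/pod created`), and its ReplicaSet (`Controlled By: ReplicaSet/pod-cbb995bbf`) replaces missing pods. The deprecation message itself points to `--generator=run-pod/v1` for creating a standalone pod instead. A sketch for finding a pod's owner and removing the controller rather than the pod; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Print "Kind/name" of the controller that owns a pod.
#   $1  pod name   $2  namespace (default: default)
pod_owner() {
  kubectl get pod "$1" -n "${2:-default}" \
    -o jsonpath='{.metadata.ownerReferences[0].kind}/{.metadata.ownerReferences[0].name}'
}

# Delete a Deployment so that its pods are not recreated.
#   $1  deployment name   $2  namespace (default: default)
delete_deployment() {
  kubectl delete deployment "$1" -n "${2:-default}"
}
```

In the demo, `pod_owner pod-cbb995bbf-grfwx dev` would be expected to print `ReplicaSet/pod-cbb995bbf`, and `delete_deployment pod dev` removes the Deployment together with its ReplicaSet and pods without deleting the namespace.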

3.2 Imperative object configuration

First, write a YAML configuration file:

[root@master kubernetes]# cat nginxpod.yaml
# Create a namespace called dev
apiVersion: v1
kind: Namespace
metadata:
  name: dev
---
# Create a pod that belongs to the dev namespace
apiVersion: v1
kind: Pod
metadata:
  name: nginxpod
  namespace: dev
# Run a container from the nginx image
spec:
  containers:
  - name: nginx-containers
    image: nginx:1.17.1

[root@master kubernetes]# kubectl create -f nginxpod.yaml

namespace/dev created

pod/nginxpod created

[root@master kubernetes]# kubectl get ns dev

NAME STATUS AGE

dev Active 16s

[root@master kubernetes]# kubectl get pod -n dev

NAME READY STATUS RESTARTS AGE

nginxpod 1/1 Running 0 28s

[root@master kubernetes]# kubectl delete -f nginxpod.yaml

namespace "dev" deleted

pod "nginxpod" deleted

3.3 声明式对象配置

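Declarative object configuration is very similar to imperative object configuration, but it uses only one command: apply.

# Apply the prepared YAML file once; it creates the corresponding resources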

[root@master kubernetes]# kubectl apply -f nginxpod.yaml

namespace/dev created

pod/nginxpod created

[root@master kubernetes]# kubectl get ns dev

NAME STATUS AGE

dev Active 7s

[root@master kubernetes]# kubectl get pod -n dev

NAME READY STATUS RESTARTS AGE

nginxpod 1/1 Running 0 19s

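# If the resources already exist, applying the YAML file again updates them. Here the image in nginxpod.yaml had been changed to nginx:1.17.2 before the second apply, so the pod is reported as "configured" and the events below show the container being restarted with the new image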

[root@master kubernetes]# kubectl apply -f nginxpod.yaml

namespace/dev unchanged

pod/nginxpod configured

[root@master kubernetes]# kubectl describe pod nginxpod

Error from server (NotFound): pods "nginxpod" not found

[root@master kubernetes]# kubectl describe pod nginxpod -n dev

Name: nginxpod

Namespace: dev

Priority: 0

Node: node1/192.168.29.137

Start Time: Fri, 04 Mar 2022 15:30:43 +0800

Labels: <none>

Annotations: kubectl.kubernetes.io/last-applied-configuration:

(omitted)...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m34s default-scheduler Successfully assigned dev/nginxpod to node1

Normal Pulled 2m33s kubelet, node1 Container image "nginx:1.17.1" already present on machine

Normal Created 2m33s kubelet, node1 Created container nginx-containers

Normal Started 2m32s kubelet, node1 Started container nginx-containers

Normal Killing 71s kubelet, node1 Container nginx-containers definition changed, will be restarted

Normal Pulling 71s kubelet, node1 Pulling image "nginx:1.17.2"

[root@master kubernetes]#
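The update above comes from editing `nginxpod.yaml` (image `nginx:1.17.1` changed to `nginx:1.17.2`) and running `kubectl apply` again. A minimal sketch of that edit as a command, assuming GNU sed (as on CentOS) and a manifest where the container image is on its own `image:` line, as in `nginxpod.yaml`. The helper name is hypothetical. The container image is one of the few pod fields that can be changed on a running pod; most other pod spec fields cannot be updated in place.

```shell
#!/usr/bin/env bash
# Replace the value of every "image:" line in a manifest (GNU sed -i).
#   $1  manifest file   $2  new image, e.g. nginx:1.17.2
# Assumes "image:" starts the line after indentation (not "- image:").
set_manifest_image() {
  local file="$1" image="$2"
  sed -i "s|^\([[:space:]]*image:[[:space:]]*\).*|\1${image}|" "$file"
}
```

Usage: `set_manifest_image nginxpod.yaml nginx:1.17.2`, then `kubectl apply -f nginxpod.yaml`, which should report `pod/nginxpod configured`.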

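Extension: can kubectl be run on a node?

kubectl must be configured before it can run; its configuration lives in $HOME/.kube (the file $HOME/.kube/config). To run kubectl on a node, copy the .kube directory from the master to that node, i.e. run the following on the master:

scp -r $HOME/.kube node1:$HOME/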
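By default kubectl reads `$HOME/.kube/config`. If the copied file is kept somewhere else, the `KUBECONFIG` environment variable (or the `--kubeconfig` flag) can point kubectl at it. The wrapper below is a hypothetical convenience, and the path in the usage example is an assumption.

```shell
#!/usr/bin/env bash
# Run kubectl against a specific kubeconfig file.
#   $1  path to the kubeconfig; remaining arguments are passed to kubectl
kubectl_with() {
  local cfg="$1"
  shift
  KUBECONFIG="$cfg" kubectl "$@"
}
```

For example, on node1: `kubectl_with /root/.kube/config get nodes`.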

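Recommendation: how should the three methods be used?

Create or update resources: declarative object configuration, kubectl apply -f XXX.yaml

Delete resources: imperative object configuration, kubectl delete -f XXX.yaml

Query resources: imperative object management, kubectl get (describe) <resource name>

VI. Getting started in practice

1. Namespace

Namespace is a very important resource in Kubernetes. Its main purpose is to isolate resources between multiple environments or between multiple tenants.

By default, all pods in a Kubernetes cluster can access one another. However,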