Kubernetes CSI: Architecture Overview and Source Code Analysis

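Kubernetes ships with many built-in (in-tree) volume plugins, such as Ceph, NFS, and storage from third-party vendors. This makes the code base bloated, hard to maintain, and inflexible: for example, a fix for a storage bug has to be released together with Kubernetes itself. To decouple Kubernetes from storage systems, the CSI (Container Storage Interface) was abstracted out. It defines three gRPC services (Identity, Controller, and Node), and every CSI plugin must implement them. For details, see the CSI spec: https://github.com/container-storage-interface/spec/blob/master/spec.md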

CSI Architecture Overview

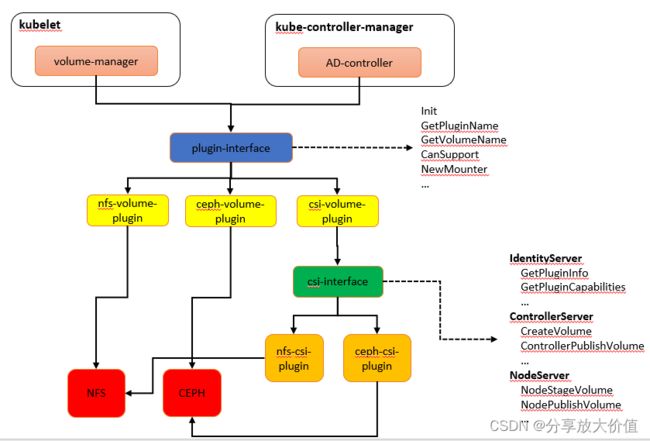

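A simplified diagram of CSI is shown here.

The volume-manager in kubelet and the ad-controller (attach/detach controller) in kube-controller-manager talk to the built-in volume plugins, such as nfs and ceph, through the plugin interface. csi is itself one of these built-in volume plugins, but it provides no storage of its own; it simply calls the CSI interface in turn to talk to third-party storage.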

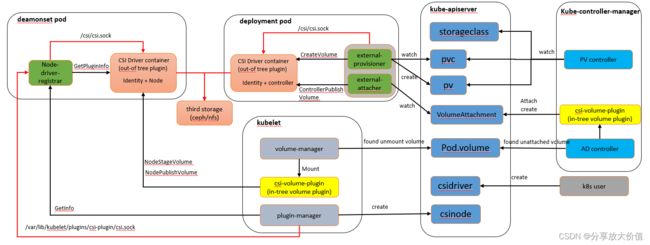

Next comes a more detailed view of the CSI architecture, including the Kubernetes objects involved and how they interact.

Deployment

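A plugin that implements the CSI interfaces cannot work on its own; it has to run together with the sidecar containers maintained by the Kubernetes community. The deployment looks like this: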

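The CSI plugin implements the Identity and Controller services and runs as one container, with external-provisioner and external-attacher as additional containers; together they are deployed as a Deployment.

The CSI plugin also implements the Identity and Node services and runs next to a Node-driver-registrar container; this pair is deployed as a DaemonSet.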

CSI plugin initialization

The focus here is on how the DaemonSet pod initializes and how the plugin-manager in kubelet discovers the CSI plugin.

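a. After the CSI plugin container starts, it creates and listens on /csi/csi.sock.

b. When the Node-driver-registrar container starts, it connects to the CSI plugin container through /csi/csi.sock and calls GetPluginInfo to obtain the plugin information.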

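c. After startup, Node-driver-registrar also creates and listens on its own registration socket in kubelet's plugin registration directory (/var/lib/kubelet/plugins_registry in current releases), and advertises the driver endpoint /var/lib/kubelet/plugins/csi-plugin/csi.sock to kubelet.

d. plugin-manager watches the registration directory. When it sees a new socket file, it connects to the Node-driver-registrar container through that file and calls GetInfo to obtain the plugin information.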

e. Based on the plugin information it obtained, plugin-manager creates (or updates) the CSINode object:

apiVersion: storage.k8s.io/v1
kind: CSINode
metadata:
  annotations:
    storage.alpha.kubernetes.io/migrated-plugins: kubernetes.io/aws-ebs,kubernetes.io/azure-disk,kubernetes.io/azure-file,kubernetes.io/cinder,kubernetes.io/gce-pd
  creationTimestamp: "2022-06-16T05:25:59Z"
  name: master
  ownerReferences:
  - apiVersion: v1
    kind: Node
    name: master
    uid: 5a93ff8c-7b53-404b-a055-56ddc2cc9262
  resourceVersion: "2174002"
  uid: e7649650-1b49-4a81-b3af-ea634dd78741
spec:
  drivers:
  - name: nfs.csi.k8s.io
    nodeID: master
    topologyKeys: null
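Registration steps d and e can be pictured with a small in-memory model. This is only a sketch: the types `PluginInfo`, `Registrar`, and `CSINode` below are hypothetical stand-ins, not the kubelet or sidecar types, and no real gRPC call is made.

```go
package main

import "fmt"

// PluginInfo models the data the registrar hands back from GetInfo.
// Hypothetical, simplified type; not the real registration API message.
type PluginInfo struct {
	Name     string // driver name, e.g. "nfs.csi.k8s.io"
	Endpoint string // path of the driver's own socket
}

// Registrar stands in for the Node-driver-registrar container.
type Registrar struct{ info PluginInfo }

// GetInfo returns the plugin information learned from the driver.
func (r Registrar) GetInfo() PluginInfo { return r.info }

// CSINode is a stripped-down model of the CSINode object of one node.
type CSINode struct {
	NodeName string
	Drivers  []string
}

// RegisterPlugin models steps d and e: query the registrar, then record the
// driver on the node's CSINode unless it is already listed.
func RegisterPlugin(node *CSINode, r Registrar) {
	info := r.GetInfo()
	for _, d := range node.Drivers {
		if d == info.Name {
			return // already registered, nothing to do
		}
	}
	node.Drivers = append(node.Drivers, info.Name)
}

func main() {
	node := &CSINode{NodeName: "master"}
	reg := Registrar{info: PluginInfo{Name: "nfs.csi.k8s.io", Endpoint: "/csi/csi.sock"}}
	RegisterPlugin(node, reg)
	RegisterPlugin(node, reg) // a repeated registration changes nothing
	fmt.Println(node.NodeName, node.Drivers)
}
```

The point of the model is that registration is idempotent: seeing the same socket twice leaves a single driver entry on the node.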

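Volume creation and mount flow

a. The user creates a PVC.

b. pv-controller sees the PVC add event and looks for a suitable PV to bind to it. For a dynamically provisioned CSI volume no suitable PV exists yet, so pv-controller sets the volume.kubernetes.io/storage-provisioner annotation on the PVC to provisionerName.

c. external-provisioner sees the PVC update event and checks whether the value of volume.kubernetes.io/storage-provisioner names itself. If so, it calls CreateVolume and then creates the PV.

d. pv-controller sees the PVC update and binds the PVC to the PV.

e. The user creates a pod whose volume references the PVC created earlier.

f. kube-scheduler sees the pod add event and schedules the pod onto a suitable node.

g. ad-controller notices that the pod's volume is not attached and calls the csi volume plugin to create a VolumeAttachment. If the CSI plugin does not support attach, no VolumeAttachment needs to be created. The nfs-csi plugin is an example: its CSIDriver object sets "Attach Required" to false.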

kubectl describe csiDriver nfs.csi.k8s.io
Name:         nfs.csi.k8s.io
...
API Version:  storage.k8s.io/v1
Kind:         CSIDriver
Spec:
  Attach Required:     false   //nfs does not need an attach operation
  Fs Group Policy:     File
  Pod Info On Mount:   false
  Requires Republish:  false
  Storage Capacity:    false
...

h. external-attacher sees the VolumeAttachment event and calls ControllerPublishVolume to perform the attach.

i. volume-manager notices that the volume is not mounted and calls the csi volume plugin to mount it.

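CSI source code analysis

This part is mostly code listings: it walks through the source for the whole path from creating a PVC to the volume being mounted.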

pv controller

When the pv controller detects an unbound PVC, it calls syncUnboundClaim.

// syncUnboundClaim is the main controller method to decide what to do with an

// unbound claim.

func (ctrl *PersistentVolumeController) syncUnboundClaim(claim *v1.PersistentVolumeClaim) error

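//The PVC does not set VolumeName: first try findBestMatchForClaim to find the best-matching PV. If none is found, check whether a StorageClassName is set,

//and if so start dynamic provisioning. This also shows that static provisioning has priority: even with a StorageClassName set, a matching existing PV is tried first.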

if claim.Spec.VolumeName == "" {

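//VolumeBindingMode only exists when a storageClassName is set. VolumeBindingWaitForFirstConsumer means the PV is created only once a pod actually references this PVC;

//the other mode, VolumeBindingImmediate, means the PV is created as soon as the pv controller sees the PVC.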

// User did not care which PV they get.

delayBinding, err := pvutil.IsDelayBindingMode(claim, ctrl.classLister)

volume, err := ctrl.volumes.findBestMatchForClaim(claim, delayBinding)

//No suitable PV matched: provision a PV dynamically according to the StorageClassName

if volume == nil {

switch {

case delayBinding && !pvutil.IsDelayBindingProvisioning(claim):

if err = ctrl.emitEventForUnboundDelayBindingClaim(claim); err != nil {

return err

}

//A StorageClassName is set: call provisionClaim to provision dynamically

case storagehelpers.GetPersistentVolumeClaimClass(claim) != "":

if err = ctrl.provisionClaim(claim); err != nil {

return err

}

return nil

default:

//No VolumeName, no suitable PV, and no StorageClassName: record an event

ctrl.eventRecorder.Event(claim, v1.EventTypeNormal, events.FailedBinding, "no persistent volumes available for this claim and no storage class is set")

}

//Update the PVC status to Pending

// Mark the claim as Pending and try to find a match in the next

// periodic syncClaim

if _, err = ctrl.updateClaimStatus(claim, v1.ClaimPending, nil); err != nil {

return err

}

return nil

} else /* pv != nil */ {

//A suitable PV matched: bind

ctrl.bind(volume, claim)

}

} else /* pvc.Spec.VolumeName != nil */ {

obj, found, err := ctrl.volumes.store.GetByKey(claim.Spec.VolumeName)

//The requested PV does not exist: update the PVC status to Pending

if !found {

ctrl.updateClaimStatus(claim, v1.ClaimPending, nil)

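} else {//The requested PV exists: static provisioning path

volume, ok := obj.(*v1.PersistentVolume)

//The requested PV is not claimed yet: check whether it satisfies the PVC, and bind if it does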

if volume.Spec.ClaimRef == nil {

checkVolumeSatisfyClaim(volume, claim)

ctrl.bind(volume, claim)

} else if pvutil.IsVolumeBoundToClaim(volume, claim) {

ctrl.bind(volume, claim)

} else {

//The PV is already claimed by another PVC

}

}

}
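The branching in syncUnboundClaim is easier to follow when reduced to a pure function. The sketch below is a simplification under stated assumptions: `ClaimState`, `Action`, and `Decide` are hypothetical names, the check that a requested PV might already be claimed by another PVC is left out, and nothing here calls the real controller.

```go
package main

import "fmt"

// Action labels the branch an unbound claim ends up in (hypothetical names).
type Action string

const (
	BindMatched     Action = "bind to best-matching PV"
	WaitForConsumer Action = "wait for first consumer"
	Provision       Action = "dynamic provisioning"
	StayPending     Action = "stay Pending: no PV and no storage class"
	BindNamedVolume Action = "bind to requested PV"
	WaitNamedVolume Action = "stay Pending: requested PV missing"
)

// ClaimState is a reduced view of the inputs syncUnboundClaim looks at.
type ClaimState struct {
	VolumeName        string // claim.Spec.VolumeName
	StorageClass      string // storage class name of the claim
	MatchFound        bool   // an existing PV matched the claim
	DelayBinding      bool   // class uses WaitForFirstConsumer
	NodeSelected      bool   // scheduler already picked a node
	NamedVolumeExists bool   // the PV named by VolumeName exists
}

// Decide mirrors the order of checks in the listing above.
func Decide(c ClaimState) Action {
	if c.VolumeName != "" {
		if c.NamedVolumeExists {
			return BindNamedVolume
		}
		return WaitNamedVolume
	}
	if c.MatchFound {
		return BindMatched // static provisioning wins over dynamic
	}
	switch {
	case c.DelayBinding && !c.NodeSelected:
		return WaitForConsumer
	case c.StorageClass != "":
		return Provision
	default:
		return StayPending
	}
}

func main() {
	fmt.Println(Decide(ClaimState{StorageClass: "nfs-csi"}))
	fmt.Println(Decide(ClaimState{StorageClass: "nfs-csi", MatchFound: true}))
	fmt.Println(Decide(ClaimState{}))
}
```

Note how a matching PV short-circuits everything else, which is the "static provisioning has priority" observation from the comments.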

func (ctrl *PersistentVolumeController) provisionClaim(claim *v1.PersistentVolumeClaim) error

//Look up the Provisioner from the PVC's StorageClass: for an in-tree provisioner the plugin is returned, for an out-of-tree one nil is returned

plugin, storageClass, err := ctrl.findProvisionablePlugin(claim)

if plugin == nil {

//Out-of-tree provisioning: set AnnBetaStorageProvisioner and AnnStorageProvisioner; external-provisioner takes over from there

_, err = ctrl.provisionClaimOperationExternal(claim, storageClass)

} else {

//In-tree provisioning

_, err = ctrl.provisionClaimOperation(claim, plugin, storageClass)

}

// findProvisionablePlugin finds a provisioner plugin for a given claim.

// It returns either the provisioning plugin or nil when an external

// provisioner is requested.

func (ctrl *PersistentVolumeController) findProvisionablePlugin(claim *v1.PersistentVolumeClaim) (vol.ProvisionableVolumePlugin, *storage.StorageClass, error) {

// provisionClaim() which leads here is never called with claimClass=="", we

// can save some checks.

claimClass := storagehelpers.GetPersistentVolumeClaimClass(claim)

class, err := ctrl.classLister.Get(claimClass)

...

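//Look up class.Provisioner among the in-tree volume plugins. The plugin is returned only if it is found and implements ProvisionableVolumePlugin;

//otherwise err is returned. The in-tree nfs plugin, for example, does not implement ProvisionableVolumePlugin.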

plugin, err := ctrl.volumePluginMgr.FindProvisionablePluginByName(class.Provisioner)

if err != nil {

//Not found, but the Provisioner name does not start with "kubernetes.io/": treat it as an external provisioner and do not return err

if !strings.HasPrefix(class.Provisioner, "kubernetes.io/") {

// External provisioner is requested, do not report error

return nil, class, nil

}

//No in-tree volume plugin found and not an external provisioner either: return err

return nil, class, err

}

//An in-tree volume plugin was found: return it

return plugin, class, nil

}
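The in-tree versus external decision made by findProvisionablePlugin comes down to a lookup followed by a prefix test. A minimal sketch follows, assuming a hypothetical `inTreeProvisionable` table in place of the real plugin manager; `ResolveProvisioner` is an illustrative name, not a Kubernetes function.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// errNoPlugin is returned for a "kubernetes.io/" name with no provisionable plugin.
var errNoPlugin = errors.New("no provisionable in-tree plugin found")

// inTreeProvisionable is a hypothetical stand-in for the plugin manager lookup.
var inTreeProvisionable = map[string]bool{
	"kubernetes.io/gce-pd": true,
}

// ResolveProvisioner reports whether the provisioner is handled in-tree.
// A nil error with inTree == false means an external provisioner is expected.
func ResolveProvisioner(name string) (inTree bool, err error) {
	if inTreeProvisionable[name] {
		return true, nil
	}
	if !strings.HasPrefix(name, "kubernetes.io/") {
		return false, nil // external provisioner requested, not an error
	}
	return false, errNoPlugin
}

func main() {
	for _, name := range []string{"kubernetes.io/gce-pd", "nfs.csi.k8s.io", "kubernetes.io/nfs"} {
		inTree, err := ResolveProvisioner(name)
		fmt.Printf("%s: inTree=%v err=%v\n", name, inTree, err)
	}
}
```

The third case corresponds to the in-tree nfs example in the comments: the name carries the reserved prefix, yet no provisionable plugin exists, so an error is the only sensible result.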

Out-of-tree provisioning only sets AnnBetaStorageProvisioner and AnnStorageProvisioner; external-provisioner handles the rest.

func (ctrl *PersistentVolumeController) provisionClaimOperationExternal(

claim *v1.PersistentVolumeClaim,

storageClass *storage.StorageClass) (string, error) {

provisionerName := storageClass.Provisioner

// Add provisioner annotation so external provisioners know when to start

newClaim, err := ctrl.setClaimProvisioner(claim, provisionerName)

claimClone := claim.DeepCopy()

// TODO: remove the beta storage provisioner anno after the deprecation period

//AnnBetaStorageProvisioner = "volume.beta.kubernetes.io/storage-provisioner"

//AnnStorageProvisioner = "volume.kubernetes.io/storage-provisioner"

metav1.SetMetaDataAnnotation(&claimClone.ObjectMeta, pvutil.AnnBetaStorageProvisioner, provisionerName)

metav1.SetMetaDataAnnotation(&claimClone.ObjectMeta, pvutil.AnnStorageProvisioner, provisionerName)

updateMigrationAnnotations(ctrl.csiMigratedPluginManager, ctrl.translator, claimClone.Annotations, true)

newClaim, err := ctrl.kubeClient.CoreV1().PersistentVolumeClaims(claim.Namespace).Update(context.TODO(), claimClone, metav1.UpdateOptions{})

...

}

In-tree provisioning

// provisionClaimOperation provisions a volume. This method is running in

// standalone goroutine and already has all necessary locks.

func (ctrl *PersistentVolumeController) provisionClaimOperation(

claim *v1.PersistentVolumeClaim,

plugin vol.ProvisionableVolumePlugin,

storageClass *storage.StorageClass) (string, error) {

provisionerName := storageClass.Provisioner

// Add provisioner annotation to be consistent with external provisioner workflow

newClaim, err := ctrl.setClaimProvisioner(claim, provisionerName)

claimClone := claim.DeepCopy()

// TODO: remove the beta storage provisioner anno after the deprecation period

//AnnBetaStorageProvisioner = "volume.beta.kubernetes.io/storage-provisioner"

//AnnStorageProvisioner = "volume.kubernetes.io/storage-provisioner"

metav1.SetMetaDataAnnotation(&claimClone.ObjectMeta, pvutil.AnnBetaStorageProvisioner, provisionerName)

metav1.SetMetaDataAnnotation(&claimClone.ObjectMeta, pvutil.AnnStorageProvisioner, provisionerName)

updateMigrationAnnotations(ctrl.csiMigratedPluginManager, ctrl.translator, claimClone.Annotations, true)

newClaim, err := ctrl.kubeClient.CoreV1().PersistentVolumeClaims(claim.Namespace).Update(context.TODO(), claimClone, metav1.UpdateOptions{})

claim = newClaim

options := vol.VolumeOptions{

PersistentVolumeReclaimPolicy: *storageClass.ReclaimPolicy,

MountOptions: storageClass.MountOptions,

CloudTags: &tags,

ClusterName: ctrl.clusterName,

PVName: pvName,

PVC: claim,

Parameters: storageClass.Parameters,

}

//Initialize the provisioner

// Provision the volume

provisioner, err := plugin.NewProvisioner(options)

//Call provisioner.Provision to create the volume in the storage backend and return the matching PV object

volume, err = provisioner.Provision(selectedNode, allowedTopologies)

// Create Kubernetes PV object for the volume.

if volume.Name == "" {

volume.Name = pvName

}

// Bind it to the claim

volume.Spec.ClaimRef = claimRef

volume.Status.Phase = v1.VolumeBound

volume.Spec.StorageClassName = claimClass

// Add AnnBoundByController (used in deleting the volume)

//AnnBoundByController = "pv.kubernetes.io/bound-by-controller"

metav1.SetMetaDataAnnotation(&volume.ObjectMeta, pvutil.AnnBoundByController, "yes")

//AnnDynamicallyProvisioned = "pv.kubernetes.io/provisioned-by"

metav1.SetMetaDataAnnotation(&volume.ObjectMeta, pvutil.AnnDynamicallyProvisioned, plugin.GetPluginName())

//Create the PV

ctrl.kubeClient.CoreV1().PersistentVolumes().Create(context.TODO(), volume, metav1.CreateOptions{})

}

external-provisioner

When external-provisioner sees a PVC event, it calls syncClaim.

//external-provisioner-master/vendor/sigs.k8s.io/sig-storage-lib-external-provisioner/v8/controller/controller.go

// syncClaim checks if the claim should have a volume provisioned for it and

// provisions one if so. Returns an error if the claim is to be requeued.

func (ctrl *ProvisionController) syncClaim(ctx context.Context, obj interface{}) error {

claim, ok := obj.(*v1.PersistentVolumeClaim)

//Check whether the PVC carries the annStorageProvisioner or annBetaStorageProvisioner annotation with the value ctrl.provisionerName.

//If it does, this PVC has to be handled by this provisioner.

should, err := ctrl.shouldProvision(ctx, claim)

if err != nil {

ctrl.updateProvisionStats(claim, err, time.Time{})

return err

} else if should {

status, err := ctrl.provisionClaimOperation(ctx, claim)

...

}

}

// shouldProvision returns whether a claim should have a volume provisioned for

// it, i.e. whether a Provision is "desired"

func (ctrl *ProvisionController) shouldProvision(ctx context.Context, claim *v1.PersistentVolumeClaim) (bool, error) {

//claim.Spec.VolumeName is set, so the claim is already bound to a PV and the provisioner need not create one: return false

if claim.Spec.VolumeName != "" {

return false, nil

}

if qualifier, ok := ctrl.provisioner.(Qualifier); ok {

if !qualifier.ShouldProvision(ctx, claim) {

return false, nil

}

}

provisioner, found := claim.Annotations[annStorageProvisioner]

if !found {

provisioner, found = claim.Annotations[annBetaStorageProvisioner]

}

if found {

if ctrl.knownProvisioner(provisioner) {

//Get the storage class name from the PVC

claimClass := util.GetPersistentVolumeClaimClass(claim)

//Get the StorageClass object named by the PVC

class, err := ctrl.getStorageClass(claimClass)

if err != nil {

return false, err

}

//If the StorageClass uses VolumeBindingWaitForFirstConsumer, the PV must not be created right away: return true only once a node has been selected, otherwise false

if class.VolumeBindingMode != nil && *class.VolumeBindingMode == storage.VolumeBindingWaitForFirstConsumer {

// When claim is in delay binding mode, annSelectedNode is

// required to provision volume.

// Though PV controller set annStorageProvisioner only when

// annSelectedNode is set, but provisioner may remove

// annSelectedNode to notify scheduler to reschedule again.

if selectedNode, ok := claim.Annotations[annSelectedNode]; ok && selectedNode != "" {

return true, nil

}

return false, nil

}

return true, nil

}

}

return false, nil

}
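The checks in shouldProvision can be condensed into a function over plain maps. This is a sketch, not the library code: `Claim` and `ShouldProvision` are hypothetical, the Qualifier hook and the additional provisioner names are omitted, and the `annSelectedNode` key is written from memory and should be treated as an assumption.

```go
package main

import "fmt"

const (
	annStorageProvisioner     = "volume.kubernetes.io/storage-provisioner"
	annBetaStorageProvisioner = "volume.beta.kubernetes.io/storage-provisioner"
	// Assumed key for the node chosen by the scheduler.
	annSelectedNode = "volume.kubernetes.io/selected-node"
)

// Claim is a reduced, hypothetical view of a PVC plus its storage class mode.
type Claim struct {
	VolumeName           string
	Annotations          map[string]string
	WaitForFirstConsumer bool // binding mode of the claim's storage class
}

// ShouldProvision reports whether the named provisioner should act on the claim.
func ShouldProvision(c Claim, provisionerName string) bool {
	if c.VolumeName != "" {
		return false // already bound to a PV
	}
	p, found := c.Annotations[annStorageProvisioner]
	if !found {
		p, found = c.Annotations[annBetaStorageProvisioner]
	}
	if !found || p != provisionerName {
		return false // not annotated for this provisioner
	}
	if c.WaitForFirstConsumer {
		// Delayed binding: provision only after a node has been selected.
		return c.Annotations[annSelectedNode] != ""
	}
	return true
}

func main() {
	c := Claim{Annotations: map[string]string{annStorageProvisioner: "nfs.csi.k8s.io"}}
	fmt.Println(ShouldProvision(c, "nfs.csi.k8s.io"))
	c.WaitForFirstConsumer = true
	fmt.Println(ShouldProvision(c, "nfs.csi.k8s.io"))
}
```

With Immediate binding the annotation alone is enough; with WaitForFirstConsumer the same claim is skipped until the selected-node annotation appears.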

func (p *csiProvisioner) ShouldProvision(ctx context.Context, claim *v1.PersistentVolumeClaim) bool {

provisioner, ok := claim.Annotations[annStorageProvisioner]

if !ok {

provisioner = claim.Annotations[annBetaStorageProvisioner]

}

migratedTo := claim.Annotations[annMigratedTo]

//Check whether the provisioner named on the PVC is this CSI driver

if provisioner != p.driverName && migratedTo != p.driverName {

// Non-migrated in-tree volume is requested.

return false

}

// Either CSI volume is requested or in-tree volume is migrated to CSI in PV controller

// and therefore PVC has CSI annotation.

//

// But before we start provisioning, check that we are (or can

// become) the owner if there are multiple provisioner instances.

// That we do this here is crucial because if we return false here,

// the claim will be ignored without logging an event for it.

// We don't want each provisioner instance to log events for the same

// claim unless they really need to do some work for it.

owned, err := p.checkNode(ctx, claim, nil, "should provision")

if err == nil {

if !owned {

return false

}

} else {

// This is unexpected. Here we can only log it and let

// a provisioning attempt start. If that still fails,

// a proper event will be created.

klog.V(2).Infof("trying to become an owner of PVC %s/%s in advance failed, will try again during provisioning: %s",

claim.Namespace, claim.Name, err)

}

// Start provisioning.

return true

}

// knownProvisioner checks if provisioner name has been

// configured to provision volumes for

func (ctrl *ProvisionController) knownProvisioner(provisioner string) bool {

if provisioner == ctrl.provisionerName {

return true

}

for _, p := range ctrl.additionalProvisionerNames {

if p == provisioner {

return true

}

}

return false

}

func (ctrl *ProvisionController) provisionClaimOperation(ctx context.Context, claim *v1.PersistentVolumeClaim) (ProvisioningState, error) {

volume, result, err := ctrl.provisioner.Provision(ctx, options)

// Set ClaimRef and the PV controller will bind and set annBoundByController for us

volume.Spec.ClaimRef = claimRef

//AnnDynamicallyProvisioned = "pv.kubernetes.io/provisioned-by"

metav1.SetMetaDataAnnotation(&volume.ObjectMeta, annDynamicallyProvisioned, class.Provisioner)

volume.Spec.StorageClassName = claimClass

ctrl.volumeStore.StoreVolume(claim, volume)

}

//external-provisioner-master/pkg/controller/controller.go

func (p *csiProvisioner) Provision(ctx context.Context, options controller.ProvisionOptions) (*v1.PersistentVolume, controller.ProvisioningState, error) {

provisioner, ok := claim.Annotations[annStorageProvisioner]

if !ok {

provisioner = claim.Annotations[annBetaStorageProvisioner]

}

if provisioner != p.driverName && claim.Annotations[annMigratedTo] != p.driverName {

// The storage provisioner annotation may not equal driver name but the

// PVC could have annotation "migrated-to" which is the new way to

// signal a PVC is migrated (k8s v1.17+)

return nil, controller.ProvisioningFinished, &controller.IgnoredError{

Reason: fmt.Sprintf("PVC annotated with external-provisioner name %s does not match provisioner driver name %s. This could mean the PVC is not migrated",

provisioner,

p.driverName),

}

}

...

//Call the CSI driver's CreateVolume to create the volume

rep, err := p.csiClient.CreateVolume(createCtx, req)

...

pv := &v1.PersistentVolume{

ObjectMeta: metav1.ObjectMeta{

Name: pvName,

},

Spec: v1.PersistentVolumeSpec{

AccessModes: options.PVC.Spec.AccessModes,

MountOptions: options.StorageClass.MountOptions,

Capacity: v1.ResourceList{

v1.ResourceName(v1.ResourceStorage): bytesToQuantity(respCap),

},

// TODO wait for CSI VolumeSource API

PersistentVolumeSource: v1.PersistentVolumeSource{

CSI: result.csiPVSource,

},

},

}

...

return pv, controller.ProvisioningFinished, nil

}

//external-provisioner-master/vendor/sigs.k8s.io/sig-storage-lib-external-provisioner/v8/controller/volume_store.go

func (b *backoffStore) StoreVolume(claim *v1.PersistentVolumeClaim, volume *v1.PersistentVolume) error {

// Try to create the PV object several times

b.client.CoreV1().PersistentVolumes().Create(context.Background(), volume, metav1.CreateOptions{})

...

}

After the provisioner has created the PV, the pv controller sees the PV add event and calls syncVolume.

func (ctrl *PersistentVolumeController) syncVolume(volume *v1.PersistentVolume)

if volume.Spec.ClaimRef == nil {

...

} else /* pv.Spec.ClaimRef != nil */ { //after creating the PV successfully, the provisioner has set pv.Spec.ClaimRef to the PVC

...

// Get the PVC by _name_

var claim *v1.PersistentVolumeClaim

claimName := claimrefToClaimKey(volume.Spec.ClaimRef)

obj, found, err := ctrl.claims.GetByKey(claimName)

claim, ok = obj.(*v1.PersistentVolumeClaim)

if claim == nil {

...

} else if claim.Spec.VolumeName == "" { //claim.Spec.VolumeName is still empty: add the claim to claimQueue to trigger syncClaim

ctrl.claimQueue.Add(claimToClaimKey(claim))

return nil

}

...

}

func (ctrl *PersistentVolumeController) syncClaim(claim *v1.PersistentVolumeClaim) error

if !metav1.HasAnnotation(claim.ObjectMeta, pvutil.AnnBindCompleted) {

//At this point the PVC is still Pending

return ctrl.syncUnboundClaim(claim)

} else {

return ctrl.syncBoundClaim(claim)

}

func (ctrl *PersistentVolumeController) syncUnboundClaim(claim *v1.PersistentVolumeClaim) error

//claim.Spec.VolumeName is still empty

if claim.Spec.VolumeName == "" {

//The provisioner has already created the PV, so this time the match succeeds

volume, err := ctrl.volumes.findBestMatchForClaim(claim, delayBinding)

if volume == nil {

...

} else /* pv != nil */ {

// Found a PV for this claim

// OBSERVATION: pvc is "Pending", pv is "Available"

claimKey := claimToClaimKey(claim)

klog.V(4).Infof("synchronizing unbound PersistentVolumeClaim[%s]: volume %q found: %s", claimKey, volume.Name, getVolumeStatusForLogging(volume))

//A PV matched: bind

ctrl.bind(volume, claim)

}

} else /* pvc.Spec.VolumeName != nil */ {

...

}

func (ctrl *PersistentVolumeController) bind(volume *v1.PersistentVolume, claim *v1.PersistentVolumeClaim) error {

updatedVolume, err = ctrl.bindVolumeToClaim(volume, claim)

volumeClone, dirty, err := pvutil.GetBindVolumeToClaim(volume, claim)

// Save the volume only if something was changed

if dirty {

return ctrl.updateBindVolumeToClaim(volumeClone, true)

}

volume = updatedVolume

updatedVolume, err = ctrl.updateVolumePhase(volume, v1.VolumeBound, "")

volumeClone := volume.DeepCopy()

volumeClone.Status.Phase = phase

volumeClone.Status.Message = message

newVol, err := ctrl.kubeClient.CoreV1().PersistentVolumes().UpdateStatus(context.TODO(), volumeClone, metav1.UpdateOptions{})

volume = updatedVolume

updatedClaim, err = ctrl.bindClaimToVolume(claim, volume)

dirty := false

// Check if the claim was already bound (either by controller or by user)

shouldBind := false

if volume.Name != claim.Spec.VolumeName {

shouldBind = true

}

// The claim from method args can be pointing to watcher cache. We must not

// modify these, therefore create a copy.

claimClone := claim.DeepCopy()

if shouldBind {

dirty = true

// Bind the claim to the volume

claimClone.Spec.VolumeName = volume.Name

//AnnBoundByController = "pv.kubernetes.io/bound-by-controller"

// Set AnnBoundByController if it is not set yet

if !metav1.HasAnnotation(claimClone.ObjectMeta, pvutil.AnnBoundByController) {

metav1.SetMetaDataAnnotation(&claimClone.ObjectMeta, pvutil.AnnBoundByController, "yes")

}

}

//AnnBindCompleted = "pv.kubernetes.io/bind-completed"

// Set AnnBindCompleted if it is not set yet

if !metav1.HasAnnotation(claimClone.ObjectMeta, pvutil.AnnBindCompleted) {

metav1.SetMetaDataAnnotation(&claimClone.ObjectMeta, pvutil.AnnBindCompleted, "yes")

dirty = true

}

if dirty {

klog.V(2).Infof("volume %q bound to claim %q", volume.Name, claimToClaimKey(claim))

newClaim, err := ctrl.kubeClient.CoreV1().PersistentVolumeClaims(claim.Namespace).Update(context.TODO(), claimClone, metav1.UpdateOptions{})

}

claim = updatedClaim

updatedClaim, err = ctrl.updateClaimStatus(claim, v1.ClaimBound, volume)

claim = updatedClaim

}

How kube-scheduler handles volumes during scheduling

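During scheduling, the kube-scheduler volumebinding plugin only checks volumes that use a PVC. Other volume types, such as cephfs, are not checked at scheduling time; they are only checked when kubelet actually calls ceph to set up the volume. In the PreFilter function, the plugin checks whether each PVC is bound: if the binding mode is Immediate and the PVC is still unbound, scheduling the pod fails, and the pod has to wait until the PVC is bound before scheduling can continue. In the Filter function, the plugin checks the node affinity of the PV bound to the PVC; if the node does not satisfy it, scheduling onto that node fails as well.

In the PreBind function, the plugin waits until all of the pod's PVCs are bound before moving on (the pv controller is responsible for moving a PVC to bound).

If the pod YAML references an unbound PVC, the volumebinding check fails. If the pod also sets nodeName, however, it bypasses kube-scheduler altogether, so it never fails in volumebinding; it fails instead in kubelet's getPVCExtractPV, once kubelet detects that the PVC is unbound.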
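The PreFilter rule described above can be sketched as a pure function. `Claim` and `PreFilter` here are hypothetical and much reduced; the real plugin works on PVC objects and scheduler status codes, and the reason string below is purely illustrative.

```go
package main

import "fmt"

// Claim is a hypothetical, reduced view of a PVC as the scheduler sees it.
type Claim struct {
	Name      string
	Bound     bool
	Immediate bool // true for Immediate mode, false for WaitForFirstConsumer
}

// PreFilter returns whether scheduling may continue and, if not, why.
// Only an unbound claim in Immediate mode blocks the pod; an unbound
// WaitForFirstConsumer claim is allowed through, because its PV is
// created after a node has been chosen.
func PreFilter(claims []Claim) (bool, string) {
	for _, c := range claims {
		if !c.Bound && c.Immediate {
			return false, "unbound immediate PVC " + c.Name
		}
	}
	return true, ""
}

func main() {
	ok, reason := PreFilter([]Claim{
		{Name: "data", Bound: true, Immediate: true},
		{Name: "cache", Bound: false, Immediate: false},
	})
	fmt.Println(ok, reason)

	ok, reason = PreFilter([]Claim{{Name: "logs", Bound: false, Immediate: true}})
	fmt.Println(ok, reason)
}
```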

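ad controller

The ad controller attaches the volumes required by pods that have been scheduled successfully, so ProbeAttachableVolumePlugins only includes plugins that provide an attach operation. For the csi plugin, attach merely creates a VolumeAttachment object; the real attach is carried out by the external CSI driver.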

// ProcessPodVolumes processes the volumes in the given pod and adds them to the

// desired state of the world if addVolumes is true, otherwise it removes them.

func ProcessPodVolumes(pod *v1.Pod, addVolumes bool, desiredStateOfWorld cache.DesiredStateOfWorld, volumePluginMgr *volume.VolumePluginMgr, pvcLister corelisters.PersistentVolumeClaimLister, pvLister corelisters.PersistentVolumeLister, csiMigratedPluginManager csimigration.PluginManager, csiTranslator csimigration.InTreeToCSITranslator) {

// Process volume spec for each volume defined in pod

//Iterate over the pod's volumes. If a volume references a PVC, get the PV from the PVC and then the PV spec, which says which plugin is used

for _, podVolume := range pod.Spec.Volumes {

volumeSpec, err := CreateVolumeSpec(podVolume, pod, nodeName, volumePluginMgr, pvcLister, pvLister, csiMigratedPluginManager, csiTranslator)

attachableVolumePlugin, err :=

volumePluginMgr.FindAttachablePluginBySpec(volumeSpec)

volumePlugin, err := pm.FindPluginBySpec(spec)

matches := []VolumePlugin{}

for _, v := range pm.plugins {

//For example, csiPlugin's CanSupport checks whether spec.PersistentVolume.Spec.CSI is nil; non-nil means the spec is supported

//The nfs plugin's CanSupport checks whether spec.PersistentVolume.Spec.NFS or spec.Volume.NFS is nil; non-nil means the spec is supported

if v.CanSupport(spec) {

matches = append(matches, v)

}

}

if len(matches) == 0 {

return nil, fmt.Errorf("no volume plugin matched")

}

if err != nil {

return nil, err

}

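//Check whether the plugin implements AttachableVolumePlugin, and if so whether CanAttach returns true

//csiPlugin does implement AttachableVolumePlugin, but whether CanAttach is true depends on the specific CSI driver; see the comment on CanAttach below

//The nfs plugin does not implement AttachableVolumePlugin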

if attachableVolumePlugin, ok := volumePlugin.(AttachableVolumePlugin); ok {

if canAttach, err := attachableVolumePlugin.CanAttach(spec); err != nil {

return nil, err

} else if canAttach {

return attachableVolumePlugin, nil

}

}

return nil, nil

//No plugin found: the plugin backing the PV provides no attach interface, so no attach operation is needed

if err != nil || attachableVolumePlugin == nil {

klog.V(10).Infof(

"Skipping volume %q for pod %q/%q: it does not implement attacher interface. err=%v",

podVolume.Name,

pod.Namespace,

pod.Name,

err)

continue

}

//Add the volume of an attach-capable plugin to the desired state, or remove it from the desired state

uniquePodName := util.GetUniquePodName(pod)

if addVolumes {

// Add volume to desired state of world

_, err := desiredStateOfWorld.AddPod(

uniquePodName, pod, volumeSpec, nodeName)

}

else {

// Remove volume from desired state of world

uniqueVolumeName, err := util.GetUniqueVolumeNameFromSpec(

attachableVolumePlugin, volumeSpec)

desiredStateOfWorld.DeletePod(

uniquePodName, uniqueVolumeName, nodeName)

}

}

//csiPlugin's CanSupport returns true as long as the PV specifies csi

func (p *csiPlugin) CanSupport(spec *volume.Spec) bool {

// TODO (vladimirvivien) CanSupport should also take into account

// the availability/registration of specified Driver in the volume source

if spec == nil {

return false

}

if utilfeature.DefaultFeatureGate.Enabled(features.CSIInlineVolume) {

return (spec.PersistentVolume != nil && spec.PersistentVolume.Spec.CSI != nil) ||

(spec.Volume != nil && spec.Volume.CSI != nil)

}

return spec.PersistentVolume != nil && spec.PersistentVolume.Spec.CSI != nil

}

//csiPlugin's CanAttach looks up the CSIDriver object by the Driver named in the PV; the CSIDriver states whether attach is required. For the nfs CSIDriver it is not.

func (p *csiPlugin) CanAttach(spec *volume.Spec) (bool, error) {

...

pvSrc, err := getCSISourceFromSpec(spec)

if err != nil {

return false, err

}

driverName := pvSrc.Driver

skipAttach, err := p.skipAttach(driverName)

return !skipAttach, nil

}

// skipAttach looks up CSIDriver object associated with driver name

// to determine if driver requires attachment volume operation

func (p *csiPlugin) skipAttach(driver string) (bool, error) {

...

csiDriver, err := p.csiDriverLister.Get(driver)

...

if csiDriver.Spec.AttachRequired != nil && *csiDriver.Spec.AttachRequired == false {

return true, nil

}

return false, nil

}
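The attach decision hinges on a pointer-to-bool field, which is easy to misread. The sketch below isolates it, assuming a hypothetical `CSIDriverSpec` and an in-memory `drivers` map instead of the real CSIDriver lister; an unknown driver is treated as requiring attach, which is an assumption made for this example.

```go
package main

import "fmt"

// CSIDriverSpec is a reduced, hypothetical model of CSIDriver.Spec.
type CSIDriverSpec struct {
	AttachRequired *bool // nil means "not set"
}

// CanAttach reports whether the attach step is needed for the given driver.
// Attach is skipped only when AttachRequired is explicitly set to false.
func CanAttach(drivers map[string]CSIDriverSpec, driver string) bool {
	spec, ok := drivers[driver]
	if !ok {
		return true // assumption: no CSIDriver object means attach is required
	}
	if spec.AttachRequired != nil && !*spec.AttachRequired {
		return false
	}
	return true
}

func main() {
	no := false
	drivers := map[string]CSIDriverSpec{
		"nfs.csi.k8s.io":    {AttachRequired: &no},
		"block.example.com": {}, // hypothetical driver with the field unset
	}
	fmt.Println(CanAttach(drivers, "nfs.csi.k8s.io"))
	fmt.Println(CanAttach(drivers, "block.example.com"))
}
```

Because the field is a pointer, "unset" and "false" are different states, and only the explicit false skips the VolumeAttachment.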

root@master:~/nfs/dy_out# kubectl describe csiDriver nfs.csi.k8s.io

Name: nfs.csi.k8s.io

Namespace:

Labels: <none>

Annotations: <none>

API Version: storage.k8s.io/v1

Kind: CSIDriver

Metadata:

Creation Timestamp: 2022-11-16T13:59:54Z

Managed Fields:

API Version: storage.k8s.io/v1

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.:

f:kubectl.kubernetes.io/last-applied-configuration:

f:spec:

f:attachRequired:

f:fsGroupPolicy:

f:podInfoOnMount:

f:requiresRepublish:

f:storageCapacity:

f:volumeLifecycleModes:

.:

v:"Persistent":

Manager: kubectl-client-side-apply

Operation: Update

Time: 2022-11-16T13:59:54Z

Resource Version: 1185243

UID: d756aba2-0fb5-429f-8e6e-eb2517b44fd7

Spec:

Attach Required: false //nfs does not need an attach operation

Fs Group Policy: File

Pod Info On Mount: false

Requires Republish: false

Storage Capacity: false

Volume Lifecycle Modes:

Persistent

Events: <none>

During the ad controller's reconcile, the plugin's attach interface is called:

reconcile -> attachDesiredVolumes -> AttachVolume

GenerateAttachVolumeFunc

attachableVolumePlugin, err := og.volumePluginMgr.FindAttachablePluginBySpec(volumeToAttach.VolumeSpec)

volumeAttacher, newAttacherErr := attachableVolumePlugin.NewAttacher()

// Execute attach

devicePath, attachErr := volumeAttacher.Attach(volumeToAttach.VolumeSpec, volumeToAttach.NodeName)

//For the csi plugin

func (p *csiPlugin) NewAttacher() (volume.Attacher, error) {

return p.newAttacherDetacher()

}

//Create the VolumeAttachment object

func (c *csiAttacher) Attach(spec *volume.Spec, nodeName types.NodeName) (string, error) {

if spec == nil {

klog.Error(log("attacher.Attach missing volume.Spec"))

return "", errors.New("missing spec")

}

pvSrc, err := getPVSourceFromSpec(spec)

if err != nil {

return "", errors.New(log("attacher.Attach failed to get CSIPersistentVolumeSource: %v", err))

}

node := string(nodeName)

attachID := getAttachmentName(pvSrc.VolumeHandle, pvSrc.Driver, node)

attachment, err := c.plugin.volumeAttachmentLister.Get(attachID)

if err != nil && !apierrors.IsNotFound(err) {

return "", errors.New(log("failed to get volume attachment from lister: %v", err))

}

if attachment == nil {

var vaSrc storage.VolumeAttachmentSource

if spec.InlineVolumeSpecForCSIMigration {

// inline PV scenario - use PV spec to populate VA source.

// The volume spec will be populated by CSI translation API

// for inline volumes. This allows fields required by the CSI

// attacher such as AccessMode and MountOptions (in addition to

// fields in the CSI persistent volume source) to be populated

// as part of CSI translation for inline volumes.

vaSrc = storage.VolumeAttachmentSource{

InlineVolumeSpec: &spec.PersistentVolume.Spec,

}

} else {

// regular PV scenario - use PV name to populate VA source

pvName := spec.PersistentVolume.GetName()

vaSrc = storage.VolumeAttachmentSource{

PersistentVolumeName: &pvName,

}

}

attachment := &storage.VolumeAttachment{

ObjectMeta: meta.ObjectMeta{

Name: attachID,

},

Spec: storage.VolumeAttachmentSpec{

NodeName: node,

Attacher: pvSrc.Driver,

Source: vaSrc,

},

}

_, err = c.k8s.StorageV1().VolumeAttachments().Create(context.TODO(), attachment, metav1.CreateOptions{})

}

// Attach and detach functionality is exclusive to the CSI plugin that runs in the AttachDetachController,

// and has access to a VolumeAttachment lister that can be polled for the current status.

if err := c.waitForVolumeAttachmentWithLister(pvSrc.VolumeHandle, attachID, c.watchTimeout); err != nil {

return "", err

}

klog.V(4).Info(log("attacher.Attach finished OK with VolumeAttachment object [%s]", attachID))

// Don't return attachID as a devicePath. We can reconstruct the attachID using getAttachmentName()

return "", nil

}
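Attach uses getAttachmentName to derive a deterministic object name from the volume handle, driver, and node, so a retry finds the same VolumeAttachment instead of creating a second one. The sketch below shows one way to build such a name with a SHA-256 digest; `attachmentName` is a hypothetical helper, and the exact scheme used upstream should be treated as an assumption.

```go
package main

import (
	"crypto/sha256"
	"fmt"
	"strings"
)

// attachmentName derives a stable name from the three identifying fields.
// Hypothetical helper modeled on the getAttachmentName call in the listing.
func attachmentName(volumeHandle, driver, node string) string {
	sum := sha256.Sum256([]byte(volumeHandle + driver + node))
	return fmt.Sprintf("csi-%x", sum[:])
}

func main() {
	a := attachmentName("vol-1", "nfs.csi.k8s.io", "master")
	b := attachmentName("vol-1", "nfs.csi.k8s.io", "master")
	c := attachmentName("vol-1", "nfs.csi.k8s.io", "worker-1")

	fmt.Println(strings.HasPrefix(a, "csi-"), len(a))
	fmt.Println(a == b) // same inputs give the same name
	fmt.Println(a == c) // a different node gives a different name
}
```

A hashed name also keeps the object name within length and character limits regardless of what the volume handle looks like.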

external-attacher

When external-attacher sees a VolumeAttachment, it calls ControllerPublishVolume to perform the attach.

// syncVA deals with one key off the queue. It returns false when it's time to quit.

func (ctrl *CSIAttachController) syncVA()

key, quit := ctrl.vaQueue.Get()

ctrl.handler.SyncNewOrUpdatedVolumeAttachment(va)

func (h *csiHandler) SyncNewOrUpdatedVolumeAttachment(va *storage.VolumeAttachment)

h.syncAttach(va)

va, metadata, err := h.csiAttach(va)

// We're not interested in `detached` return value, the controller will

// issue Detach to be sure the volume is really detached.

publishInfo, _, err := h.attacher.Attach(ctx, volumeHandle, readOnly, nodeID, volumeCapabilities, attributes, secrets)

func (a *attacher) Attach(ctx context.Context, volumeID string, readOnly bool, nodeID string, caps *csi.VolumeCapability, context, secrets map[string]string) (metadata map[string]string, detached bool, err error) {

req := csi.ControllerPublishVolumeRequest{

VolumeId: volumeID,

NodeId: nodeID,

VolumeCapability: caps,

Readonly: readOnly,

VolumeContext: context,

Secrets: secrets,

}

rsp, err := a.client.ControllerPublishVolume(ctx, &req)

if err != nil {

return nil, isFinalError(err), err

}

return rsp.PublishContext, false, nil

}

volumeManager mount

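The volumeManager in each node's kubelet periodically runs populatorLoop, which walks the pods on that node and adds their volumes to the desired state of the world; the reconciler then mounts every volume that is not mounted yet.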

func (dswp *desiredStateOfWorldPopulator) Run(sourcesReady config.SourcesReady, stopCh <-chan struct{}) {

// Wait for the completion of a loop that started after sources are all ready, then set hasAddedPods accordingly

klog.InfoS("Desired state populator starts to run")

wait.PollUntil(dswp.loopSleepDuration, func() (bool, error) {

done := sourcesReady.AllReady()

dswp.populatorLoop()

return done, nil

}, stopCh)

dswp.hasAddedPodsLock.Lock()

dswp.hasAddedPods = true

dswp.hasAddedPodsLock.Unlock()

wait.Until(dswp.populatorLoop, dswp.loopSleepDuration, stopCh)

}

func (dswp *desiredStateOfWorldPopulator) populatorLoop() {

dswp.findAndAddNewPods()

...

}

// Iterate through all pods and add to desired state of world if they don't

// exist but should

func (dswp *desiredStateOfWorldPopulator) findAndAddNewPods() {

...

processedVolumesForFSResize := sets.NewString()

for _, pod := range dswp.podManager.GetPods() {

if dswp.podStateProvider.ShouldPodContainersBeTerminating(pod.UID) {

// Do not (re)add volumes for pods that can't also be starting containers

continue

}

dswp.processPodVolumes(pod, mountedVolumesForPod, processedVolumesForFSResize)

}

}

// processPodVolumes processes the volumes in the given pod and adds them to the

// desired state of the world.

func (dswp *desiredStateOfWorldPopulator) processPodVolumes(

pod *v1.Pod,

mountedVolumesForPod map[volumetypes.UniquePodName]map[string]cache.MountedVolume,

processedVolumesForFSResize sets.String) {

// Process volume spec for each volume defined in pod

for _, podVolume := range pod.Spec.Volumes {

// Add volume to desired state of world

uniqueVolumeName, err := dswp.desiredStateOfWorld.AddPodToVolume(

uniquePodName, pod, volumeSpec, podVolume.Name, volumeGidValue)

}

}

The volumeManager's reconciler then compares the desired state of world with the actual state of world and carries out the required unmount and mount operations:

func (rc *reconciler) reconcile() {

// Unmounts are triggered before mounts so that a volume that was

// referenced by a pod that was deleted and is now referenced by another

// pod is unmounted from the first pod before being mounted to the new

// pod.

rc.unmountVolumes()

// Next we mount required volumes. This function could also trigger

// attach if kubelet is responsible for attaching volumes.

// If underlying PVC was resized while in-use then this function also handles volume

// resizing.

rc.mountAttachVolumes()

// Ensure devices that should be detached/unmounted are detached/unmounted.

rc.unmountDetachDevices()

}

func (rc *reconciler) mountAttachVolumes() {

// Ensure volumes that should be attached/mounted are attached/mounted.

for _, volumeToMount := range rc.desiredStateOfWorld.GetVolumesToMount() {

err := rc.operationExecutor.MountVolume(

rc.waitForAttachTimeout,

volumeToMount.VolumeToMount,

rc.actualStateOfWorld,

isRemount)

}

}
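
The desired-state versus actual-state pattern used by the populator and the reconciler can be sketched in plain Go. This is a minimal illustration under stated assumptions, not the kubelet implementation: the types `volumeKey` and `world` and the helper `sortedKeys` are hypothetical, and real mounts are replaced by map updates. It keeps the same ordering as `reconcile()`: unmounts run before mounts.

```go
package main

import (
	"fmt"
	"sort"
)

// volumeKey identifies one volume used by one pod (hypothetical type).
type volumeKey struct {
	Pod    string
	Volume string
}

// world is a simplified stand-in for the kubelet's state caches:
// a set of (pod, volume) pairs that are wanted or currently mounted.
type world map[volumeKey]bool

// sortedKeys returns the keys in a stable order so the result is deterministic.
func sortedKeys(w world) []volumeKey {
	keys := make([]volumeKey, 0, len(w))
	for k := range w {
		keys = append(keys, k)
	}
	sort.Slice(keys, func(i, j int) bool {
		if keys[i].Pod != keys[j].Pod {
			return keys[i].Pod < keys[j].Pod
		}
		return keys[i].Volume < keys[j].Volume
	})
	return keys
}

// reconcile drives actual toward desired. It unmounts everything that is
// mounted but no longer wanted, then mounts everything wanted but missing,
// and returns the operations in the order they were performed.
func reconcile(desired, actual world) []string {
	var ops []string
	for _, k := range sortedKeys(actual) {
		if !desired[k] {
			delete(actual, k)
			ops = append(ops, "unmount "+k.Pod+"/"+k.Volume)
		}
	}
	for _, k := range sortedKeys(desired) {
		if !actual[k] {
			actual[k] = true
			ops = append(ops, "mount "+k.Pod+"/"+k.Volume)
		}
	}
	return ops
}

func main() {
	desired := world{volumeKey{Pod: "pod-b", Volume: "data"}: true}
	actual := world{volumeKey{Pod: "pod-a", Volume: "data"}: true}
	for _, op := range reconcile(desired, actual) {
		fmt.Println(op)
	}
}
```

Calling `reconcile` a second time with the same arguments performs no operations, which is why the loop can safely run on a timer.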

MountVolume calls GenerateMountVolumeFunc, which builds the mount operation. The relevant excerpt from GenerateMountVolumeFunc:

volumeMounter, newMounterErr := volumePlugin.NewMounter(

volumeToMount.VolumeSpec,

volumeToMount.Pod,

volume.VolumeOptions{})

// get deviceMounter, if possible

deviceMountableVolumePlugin, _ := og.volumePluginMgr.FindDeviceMountablePluginBySpec(volumeToMount.VolumeSpec)

var volumeDeviceMounter volume.DeviceMounter

if deviceMountableVolumePlugin != nil {

volumeDeviceMounter, _ = deviceMountableVolumePlugin.NewDeviceMounter()

}

// Mount device to global mount path

err = volumeDeviceMounter.MountDevice(

volumeToMount.VolumeSpec,

devicePath,

deviceMountPath,

volume.DeviceMounterArgs{FsGroup: fsGroup},

)

// Execute mount: call the volume plugin's SetUp

mountErr := volumeMounter.SetUp(volume.MounterArgs{

FsUser: util.FsUserFrom(volumeToMount.Pod),

FsGroup: fsGroup,

DesiredSize: volumeToMount.DesiredSizeLimit,

FSGroupChangePolicy: fsGroupChangePolicy,

})

func (c *csiAttacher) MountDevice(spec *volume.Spec, devicePath string, deviceMountPath string, deviceMounterArgs volume.DeviceMounterArgs) error {

err = csi.NodeStageVolume(ctx,

csiSource.VolumeHandle,

publishContext,

deviceMountPath,

fsType,

accessMode,

nodeStageSecrets,

csiSource.VolumeAttributes,

mountOptions,

nodeStageFSGroupArg)

For an in-tree plugin such as nfs, SetUp mounts the directory directly:

// SetUp attaches the disk and bind mounts to the volume path.

func (nfsMounter *nfsMounter) SetUp(mounterArgs volume.MounterArgs) error {

return nfsMounter.SetUpAt(nfsMounter.GetPath(), mounterArgs)

}

For the csi plugin, which fronts out-of-tree drivers, SetUp delegates to SetUpAt, which in turn calls NodePublishVolume:

func (c *csiMountMgr) SetUp(mounterArgs volume.MounterArgs) error {

return c.SetUpAt(c.GetPath(), mounterArgs)

}

func (c *csiMountMgr) SetUpAt(dir string, mounterArgs volume.MounterArgs) error {

...

err = csi.NodePublishVolume(

ctx,

volumeHandle,

readOnly,

deviceMountPath,

dir,

accessMode,

publishContext,

volAttribs,

nodePublishSecrets,

fsType,

mountOptions,

nodePublishFSGroupArg,

)

...

klog.V(4).Infof(log("mounter.SetUp successfully requested NodePublish [%s]", dir))

return nil

}

// Call the CSI plugin's NodePublishVolume to perform the mount

func (c *csiDriverClient) NodePublishVolume(...)

nodeClient.NodePublishVolume(ctx, req)

// NodePublishVolume mounts the volume

func (ns *NodeServer) NodePublishVolume(ctx context.Context, req *csi.NodePublishVolumeRequest) (*csi.NodePublishVolumeResponse, error) {

ns.mounter.Mount(source, targetPath, "nfs", mountOptions)
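
The order of the two node-side calls above (MountDevice leading to NodeStageVolume, then SetUp leading to NodePublishVolume) can be sketched with a fake plugin. This is only an illustration: `fakeNode`, `newFakeNode`, and `mountVolume` are hypothetical names, the method signatures are heavily reduced compared with the real gRPC requests, and mounts are modelled as map entries. The `supportsStage` flag stands in for the STAGE_UNSTAGE_VOLUME node capability from the CSI spec; when a plugin advertises it, staging is expected to happen before publishing.

```go
package main

import (
	"errors"
	"fmt"
)

// fakeNode is a hypothetical in-memory stand-in for a CSI node plugin.
type fakeNode struct {
	supportsStage bool              // models the STAGE_UNSTAGE_VOLUME capability
	staged        map[string]string // volume ID -> staging (global) path
	published     map[string]string // pod target path -> volume ID
	calls         []string          // RPC names in the order they were received
}

func newFakeNode(supportsStage bool) *fakeNode {
	return &fakeNode{
		supportsStage: supportsStage,
		staged:        map[string]string{},
		published:     map[string]string{},
	}
}

// NodeStageVolume records that the volume is available at the staging path.
func (n *fakeNode) NodeStageVolume(volumeID, stagingPath string) error {
	n.calls = append(n.calls, "NodeStageVolume")
	n.staged[volumeID] = stagingPath
	return nil
}

// NodePublishVolume exposes the volume at the pod's target path. If the
// plugin supports staging, the volume must already be staged.
func (n *fakeNode) NodePublishVolume(volumeID, stagingPath, targetPath string) error {
	n.calls = append(n.calls, "NodePublishVolume")
	if n.supportsStage {
		if p, ok := n.staged[volumeID]; !ok || p != stagingPath {
			return errors.New("volume " + volumeID + " is not staged at " + stagingPath)
		}
	}
	n.published[targetPath] = volumeID
	return nil
}

// mountVolume mirrors the caller's order: stage (if supported), then publish.
func mountVolume(n *fakeNode, volumeID, stagingPath, targetPath string) error {
	if n.supportsStage {
		if err := n.NodeStageVolume(volumeID, stagingPath); err != nil {
			return fmt.Errorf("stage: %w", err)
		}
	}
	if err := n.NodePublishVolume(volumeID, stagingPath, targetPath); err != nil {
		return fmt.Errorf("publish: %w", err)
	}
	return nil
}

func main() {
	n := newFakeNode(true)
	err := mountVolume(n, "vol-1", "/staging/vol-1", "/pods/pod-a/vol-1")
	fmt.Println(n.calls, err)
}
```

A plugin without the staging capability receives only the publish call, which is the case `newFakeNode(false)` models.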

During syncPod, the kubelet waits for the pod's volumes to be attached and mounted before it starts the containers:

func (kl *Kubelet) syncPod(ctx context.Context, updateType kubetypes.SyncPodType, pod, mirrorPod *v1.Pod, podStatus *kubecontainer.PodStatus)

...

// Wait for volumes to attach/mount

kl.volumeManager.WaitForAttachAndMount(pod)

// Fetch the pull secrets for the pod

pullSecrets := kl.getPullSecretsForPod(pod)

// Create the pod: call the container runtime's SyncPod callback

result := kl.containerRuntime.SyncPod(pod, podStatus, pullSecrets, kl.backOff)

func (vm *volumeManager) WaitForAttachAndMount(pod *v1.Pod) error {

if pod == nil {

return nil

}

expectedVolumes := getExpectedVolumes(pod)

if len(expectedVolumes) == 0 {

// No volumes to verify

return nil

}

klog.V(3).InfoS("Waiting for volumes to attach and mount for pod", "pod", klog.KObj(pod))

uniquePodName := util.GetUniquePodName(pod)

// Some pods expect to have Setup called over and over again to update.

// Remount plugins for which this is true. (Atomically updating volumes,

// like Downward API, depend on this to update the contents of the volume).

vm.desiredStateOfWorldPopulator.ReprocessPod(uniquePodName)

err := wait.PollImmediate(

podAttachAndMountRetryInterval,

podAttachAndMountTimeout,

vm.verifyVolumesMountedFunc(uniquePodName, expectedVolumes))

if err != nil {

unmountedVolumes :=

vm.getUnmountedVolumes(uniquePodName, expectedVolumes)

// Also get unattached volumes for error message

unattachedVolumes :=

vm.getUnattachedVolumes(expectedVolumes)

if len(unmountedVolumes) == 0 {

return nil

}

return fmt.Errorf(

"unmounted volumes=%v, unattached volumes=%v: %s",

unmountedVolumes,

unattachedVolumes,

err)

}

klog.V(3).InfoS("All volumes are attached and mounted for pod", "pod", klog.KObj(pod))

return nil

}

// verifyVolumesMountedFunc returns a method that returns true when all expected

// volumes are mounted.

func (vm *volumeManager) verifyVolumesMountedFunc(podName types.UniquePodName, expectedVolumes []string) wait.ConditionFunc {

return func() (done bool, err error) {

if errs := vm.desiredStateOfWorld.PopPodErrors(podName); len(errs) > 0 {

return true, errors.New(strings.Join(errs, "; "))

}

return len(vm.getUnmountedVolumes(podName, expectedVolumes)) == 0, nil

}

}