Nacos注册中心集群数据一致性问题

Nacos集群数据一致性问题

- 一、集群架构

-

- 1.1 Master - Slave

- 1.2 Leader - Follower

- 二、Nacos注册中心集群架构

-

- 2.1 DistroConsistencyServiceImpl

-

- 2.1.1 init() 方法

- 2.1.2 load() 方法

- 2.1.3 Notifier 线程(事件监听)

- 2.1.4 put(String key, Record value) 方法

- 2.1.5 TaskDispatcher

- 2.1.6 DataSyncer

- 2.1.7 Nacos 分区一致性算法总结

- 2.2 RaftConsistencyServiceImpl

-

- 2.2.1 Raft算法

- 2.2.2 Nacos中Raft算法的具体实现

-

- 2.2.2.1 RaftPeerSet(Leader选举)

- 2.2.2.2 RaftCore

-

- 1. RaftCore init()

- 2. Notifier 线程(事件监听)

- 3. MasterElection 线程(投票选举)

- 4. HeartBeat 线程(心跳检测)

- 5. RaftCore.signalPublish()

- 2.2.3 Nacos Raft算法总结

文章系列

【一、Nacos-配置中心原理解析】

【二、Nacos-配置中心原理解析】

【三、Nacos注册中心集群数据一致性问题】

一、集群架构

1.1 Master - Slave

也就是我们说的主从复制,主机的数据更新后根据配置和策略,自动同步到备机的master/slave机制,当主节点宕机后,集群会根据某种分布式一致性协议(Raft、gossip协议、ZAB协议等)选举出新的Master节点。

Master负责读写,Slave只负责读。

在很多组件中都有使用这种思想,比如Mysql主从架构、Redis主从架构、kafka里面的数据副本机制等等。

常见的主从复制架构有:

1.2 Leader - Follower

这也是比较常见的集群架构,各自职责如下:

-

Leader:领导者,主要的工作任务有两项

- 事物请求的唯一调度和处理者,保证集群事物处理的顺序性

- 集群内部各服务器的调度者

-

Follower:跟随者,主要职责是

- 处理客户端非事物请求、转发事物请求给 Leader 服务器

- 参与事物请求的投票,如Zookeeper半数以上Follower通过才能通知 Leader commit数据

- 参与Leader选举的投票

在很多组件中都有使用这种思想,比如ZooKeeper集群等等。

二、Nacos注册中心集群架构

Nacos 注册中心集群架构采用了Leader - Follower模式,在Nacos Server服务启动过程中,会选举出一个Leader节点,如果在运行过程中,Leader节点宕机,服务会进入不可用状态,直到选举出新的Leader节点。

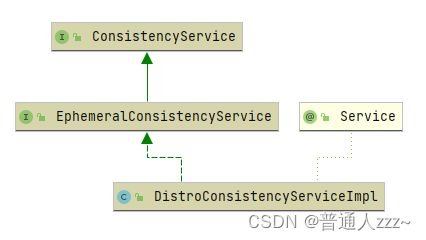

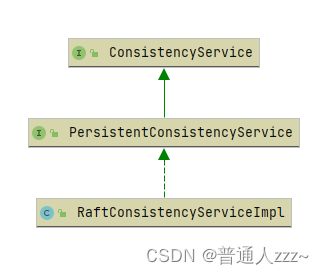

Nacos 提供了顶层的一致性服务类:ConsistencyService,所有的一致性算法实现,都必须实现 ConsistencyService 接口,Nacos内部根据临时节点和持久化节点,分别实现了对应两种一致性协议,整体类关系图如下:

其中,ConsistencyService 直接实现类或接口有三个:EphemeralConsistencyService、PersistentConsistencyService、DelegateConsistencyServiceImpl,其中 DelegateConsistencyServiceImpl采用了委派设计模式,内部存在EphemeralConsistencyService、PersistentConsistencyService两个成员变量,根据key判断,采用哪种一致性协议。

- EphemeralConsistencyService:临时节点一致性协议,临时数据与服务器同生共死,即只要会话仍然存在,临时数据就不会丢失。

- PersistentConsistencyService:持久化节点一致性协议,保证已发布数据的 CP 一致性的一致性协议。

DelegateConsistencyServiceImpl代码实现如下:

@Service("consistencyDelegate")

public class DelegateConsistencyServiceImpl implements ConsistencyService {

@Autowired

private PersistentConsistencyService persistentConsistencyService;

@Autowired

private EphemeralConsistencyService ephemeralConsistencyService;

@Override

public void put(String key, Record value) throws NacosException {

// 根据key判断,采用哪种一致性协议,然后调用其put方法

mapConsistencyService(key).put(key, value);

}

@Override

public void remove(String key) throws NacosException {

// 根据key判断,采用哪种一致性协议,然后调用其remove方法

mapConsistencyService(key).remove(key);

}

@Override

public Datum get(String key) throws NacosException {

// 根据key判断,采用哪种一致性协议,然后调用其get方法

return mapConsistencyService(key).get(key);

}

@Override

public void listen(String key, RecordListener listener) throws NacosException {

// this special key is listened by both:

if (KeyBuilder.SERVICE_META_KEY_PREFIX.equals(key)) {

persistentConsistencyService.listen(key, listener);

ephemeralConsistencyService.listen(key, listener);

return;

}

// 根据key判断,采用哪种一致性协议,然后调用其listen方法

mapConsistencyService(key).listen(key, listener);

}

@Override

public void unlisten(String key, RecordListener listener) throws NacosException {

// 根据key判断,采用哪种一致性协议,然后调用其unlisten方法

mapConsistencyService(key).unlisten(key, listener);

}

@Override

public boolean isAvailable() {

// 判断协议可用状态

return ephemeralConsistencyService.isAvailable() && persistentConsistencyService.isAvailable();

}

// 根据key判断,采用哪种一致性协议

private ConsistencyService mapConsistencyService(String key) {

// 判断key是否以 com.alibaba.nacos.naming.iplist.ephemeral. 开头,如果是,返回true

// 即 以 com.alibaba.nacos.naming.iplist.ephemeral. 开头的采用 ephemeralConsistencyService 一致性算法

// 否则采用 persistentConsistencyService 一致性算法

return KeyBuilder.matchEphemeralKey(key) ? ephemeralConsistencyService : persistentConsistencyService;

}

}

其中 KeyBuilder.matchEphemeralKey(key) 逻辑如下:

public class KeyBuilder {

public static boolean matchEphemeralKey(String key) {

// currently only instance list has ephemeral type:

// 判断key是否以 com.alibaba.nacos.naming.iplist.ephemeral. 开头,如果是,返回true

return matchEphemeralInstanceListKey(key);

}

public static boolean matchEphemeralInstanceListKey(String key) {

// 判断key是否以 com.alibaba.nacos.naming.iplist.ephemeral. 开头,如果是,返回true

return key.startsWith(INSTANCE_LIST_KEY_PREFIX + EPHEMERAL_KEY_PREFIX);

}

}

DelegateConsistencyServiceImpl 类的主要作用是:判断key是否以 com.alibaba.nacos.naming.iplist.ephemeral. 开头,如果是,采用 ephemeralConsistencyService 一致性算法,否则采用 persistentConsistencyService 一致性算法。

2.1 DistroConsistencyServiceImpl

DistroConsistencyServiceImpl 为 EphemeralConsistencyService 的唯一实现,如下:

DistroConsistencyServiceImpl 临时节点一致性协议采用一种分区的一致性协议算法,该算法的特点如下:

- 将数据划分为许多块,每个 Nacos 服务器节点只负责一个数据块。

- 每个数据块都由其负责的服务器生成、删除和同步。

- 每个 Nacos 服务器只处理总服务数据的一个子集的写入。

- 每个 Nacos 服务器都会接收到其他 Nacos 服务器的数据同步。

最终每个 Nacos 服务器最终都会有一套完整的数据。

分区 / 分片 / 分段 在很多中间件或底层源码都要体现,例如Java 7中的 ConcurrentHashMap中的 Segment、Redis集群架构的Hash槽、Kafka中的数据分片、RocketMa中的读写队列等等。

DistroConsistencyServiceImpl 的类结构比较简单,如下:

2.1.1 init() 方法

@org.springframework.stereotype.Service("distroConsistencyService")

public class DistroConsistencyServiceImpl implements EphemeralConsistencyService {

@PostConstruct

public void init() {

// 执行一个线程

GlobalExecutor.submit(new Runnable() {

@Override

public void run() {

try {

// 尝试加载所有服务中的数据,缓存到本地。

load();

} catch (Exception e) {

Loggers.DISTRO.error("load data failed.", e);

}

}

});

// 执行 Notifier线程

executor.submit(notifier);

}

}

2.1.2 load() 方法

尝试加载所有服务中的数据,缓存到本地。

@org.springframework.stereotype.Service("distroConsistencyService")

public class DistroConsistencyServiceImpl implements EphemeralConsistencyService {

public void load() throws Exception {

// 如果是单机模式

if (SystemUtils.STANDALONE_MODE) {

initialized = true;

return;

}

// size = 1 means only myself in the list, we need at least one another server alive:

// size = 1 表示列表中只有我自己,至少需要另一台服务器

while (serverListManager.getHealthyServers().size() <= 1) {

Thread.sleep(1000L);

Loggers.DISTRO.info("waiting server list init...");

}

// 遍历健康的服务列表

for (Server server : serverListManager.getHealthyServers()) {

if (NetUtils.localServer().equals(server.getKey())) {

continue;

}

if (Loggers.DISTRO.isDebugEnabled()) {

Loggers.DISTRO.debug("sync from " + server);

}

// try sync data from remote server:

// 尝试从远程服务器同步数据

if (syncAllDataFromRemote(server)) {

initialized = true;

return;

}

}

}

// 尝试从远程服务器同步数据

public boolean syncAllDataFromRemote(Server server) {

try {

// 获取Server中所有实例数据 server.getKey() -> ip:port

// 发送 /v1/ns/distro/datums 请求,获取所有实例数据

byte[] data = NamingProxy.getAllData(server.getKey());

// 处理数据

processData(data);

return true;

} catch (Exception e) {

Loggers.DISTRO.error("sync full data from " + server + " failed!", e);

return false;

}

}

// 处理数据

public void processData(byte[] data) throws Exception {

if (data.length > 0) {

// 解码

Map<String, Datum<Instances>> datumMap =

serializer.deserializeMap(data, Instances.class);

for (Map.Entry<String, Datum<Instances>> entry : datumMap.entrySet()) {

// 存储数据

dataStore.put(entry.getKey(), entry.getValue());

// 发送事件监听

if (!listeners.containsKey(entry.getKey())) {

// pretty sure the service not exist:

if (switchDomain.isDefaultInstanceEphemeral()) {

// create empty service

Loggers.DISTRO.info("creating service {}", entry.getKey());

Service service = new Service();

String serviceName = KeyBuilder.getServiceName(entry.getKey());

String namespaceId = KeyBuilder.getNamespace(entry.getKey());

service.setName(serviceName);

service.setNamespaceId(namespaceId);

service.setGroupName(Constants.DEFAULT_GROUP);

// now validate the service. if failed, exception will be thrown

service.setLastModifiedMillis(System.currentTimeMillis());

service.recalculateChecksum();

listeners.get(KeyBuilder.SERVICE_META_KEY_PREFIX).get(0)

.onChange(KeyBuilder.buildServiceMetaKey(namespaceId, serviceName), service);

}

}

}

// 发送事件监听

for (Map.Entry<String, Datum<Instances>> entry : datumMap.entrySet()) {

if (!listeners.containsKey(entry.getKey())) {

// Should not happen:

Loggers.DISTRO.warn("listener of {} not found.", entry.getKey());

continue;

}

try {

for (RecordListener listener : listeners.get(entry.getKey())) {

listener.onChange(entry.getKey(), entry.getValue().value);

}

} catch (Exception e) {

Loggers.DISTRO.error("[NACOS-DISTRO] error while execute listener of key: {}", entry.getKey(), e);

// 异常continue

continue;

}

// Update data store if listener executed successfully:

// 如果侦听器成功执行,则更新数据存储

dataStore.put(entry.getKey(), entry.getValue());

}

}

}

}

2.1.3 Notifier 线程(事件监听)

Notifier 为 DistroConsistencyServiceImpl 的一个内部类,用于实现事件监听机制,完成数据变更/删除通知所有Lisenter。

Notifier 采用了生产者消费者模式,通过 BlockingQueue 阻塞队列,完成生产 / 消费。

@org.springframework.stereotype.Service("distroConsistencyService")

public class DistroConsistencyServiceImpl implements EphemeralConsistencyService {

public class Notifier implements Runnable {

private ConcurrentHashMap<String, String> services = new ConcurrentHashMap<>(10 * 1024);

private BlockingQueue<Pair> tasks = new LinkedBlockingQueue<Pair>(1024 * 1024);

public void addTask(String datumKey, ApplyAction action) {

// 判断是否已经处理过当前 datumKey,并且 action == ApplyAction.CHANGE,避免重复处理

if (services.containsKey(datumKey) && action == ApplyAction.CHANGE) {

return;

}

// 添加

if (action == ApplyAction.CHANGE) {

services.put(datumKey, StringUtils.EMPTY);

}

// 生产者消费者模式:生产任务

tasks.add(Pair.with(datumKey, action));

}

public int getTaskSize() {

return tasks.size();

}

@Override

public void run() {

Loggers.DISTRO.info("distro notifier started");

// 死循环:生产者消费者模式,消费任务

while (true) {

try {

// 如果阻塞队列为空,阻塞

Pair pair = tasks.take();

if (pair == null) {

continue;

}

String datumKey = (String) pair.getValue0();

ApplyAction action = (ApplyAction) pair.getValue1();

// 移出当前datumKey

services.remove(datumKey);

int count = 0;

if (!listeners.containsKey(datumKey)) {

continue;

}

for (RecordListener listener : listeners.get(datumKey)) {

count++;

try {

// Change 事件

if (action == ApplyAction.CHANGE) {

listener.onChange(datumKey, dataStore.get(datumKey).value);

continue;

}

// Delete 事件

if (action == ApplyAction.DELETE) {

listener.onDelete(datumKey);

continue;

}

} catch (Throwable e) {

Loggers.DISTRO.error("[NACOS-DISTRO] error while notifying listener of key: {}", datumKey, e);

}

}

if (Loggers.DISTRO.isDebugEnabled()) {

Loggers.DISTRO.debug("[NACOS-DISTRO] datum change notified, key: {}, listener count: {}, action: {}",

datumKey, count, action.name());

}

} catch (Throwable e) {

Loggers.DISTRO.error("[NACOS-DISTRO] Error while handling notifying task", e);

}

}

}

}

}

2.1.4 put(String key, Record value) 方法

我们主要看一下其中的事务处理方法,以 put(String key, Record value) 方法为例,其他的(如remove(String key)) 类似,这里不做过多分析。

@org.springframework.stereotype.Service("distroConsistencyService")

public class DistroConsistencyServiceImpl implements EphemeralConsistencyService {

@Override

public void put(String key, Record value) throws NacosException {

onPut(key, value);

// 添加当前key 数据同步任务

taskDispatcher.addTask(key);

}

public void onPut(String key, Record value) {

// 判断是否为临时实例列表键,以 com.alibaba.nacos.naming.iplist.ephemeral. 开头

if (KeyBuilder.matchEphemeralInstanceListKey(key)) {

Datum<Instances> datum = new Datum<>();

datum.value = (Instances) value;

datum.key = key;

datum.timestamp.incrementAndGet();

// 缓存

dataStore.put(key, datum);

}

if (!listeners.containsKey(key)) {

return;

}

// 发送Change 事件

notifier.addTask(key, ApplyAction.CHANGE);

}

}

2.1.5 TaskDispatcher

数据同步任务调度器。

@Component

public class TaskDispatcher {

// 全局配置对象

@Autowired

private GlobalConfig partitionConfig;

// 数据同步器

@Autowired

private DataSyncer dataSyncer;

// 任务调度列表

private List<TaskScheduler> taskSchedulerList = new ArrayList<>();

// cpu核心数

private final int cpuCoreCount = Runtime.getRuntime().availableProcessors();

@PostConstruct

public void init() {

// 初始化 taskSchedulerList 任务调度列表

for (int i = 0; i < cpuCoreCount; i++) {

TaskScheduler taskScheduler = new TaskScheduler(i);

taskSchedulerList.add(taskScheduler);

GlobalExecutor.submitTaskDispatch(taskScheduler);

}

}

public void addTask(String key) {

// UtilsAndCommons.shakeUp(key, cpuCoreCount):一种散列算法,根据key获取一个均匀分布在 0~cpuCoreCount 之间的数字

// TaskScheduler.addTask 添加任务:生产者消费者模式,生成任务

taskSchedulerList.get(UtilsAndCommons.shakeUp(key, cpuCoreCount)).addTask(key);

}

public class TaskScheduler implements Runnable {

private int index;

private int dataSize = 0;

private long lastDispatchTime = 0L;

private BlockingQueue<String> queue = new LinkedBlockingQueue<>(128 * 1024);

public TaskScheduler(int index) {

this.index = index;

}

public void addTask(String key) {

queue.offer(key);

}

public int getIndex() {

return index;

}

@Override

public void run() {

List<String> keys = new ArrayList<>();

// 死循环,生产者消费者模式,消费任务

while (true) {

try {

// 获取key:带超时时间

String key = queue.poll(partitionConfig.getTaskDispatchPeriod(),

TimeUnit.MILLISECONDS);

if (Loggers.DISTRO.isDebugEnabled() && StringUtils.isNotBlank(key)) {

Loggers.DISTRO.debug("got key: {}", key);

}

if (dataSyncer.getServers() == null || dataSyncer.getServers().isEmpty()) {

continue;

}

if (StringUtils.isBlank(key)) {

continue;

}

if (dataSize == 0) {

keys = new ArrayList<>();

}

keys.add(key);

dataSize++;

// dataSize == 批量同步key || 当前时间 - 最后同步时间 > 任务调度周期

// dataSize == partitionConfig.getBatchSyncKeyCount():控制最大能同时处理的同步key数量

if (dataSize == partitionConfig.getBatchSyncKeyCount() ||

(System.currentTimeMillis() - lastDispatchTime) > partitionConfig.getTaskDispatchPeriod()) {

// 遍历所有服务

for (Server member : dataSyncer.getServers()) {

// 剔除当前服务

if (NetUtils.localServer().equals(member.getKey())) {

continue;

}

// 同步任务

SyncTask syncTask = new SyncTask();

syncTask.setKeys(keys);

syncTask.setTargetServer(member.getKey());

if (Loggers.DISTRO.isDebugEnabled() && StringUtils.isNotBlank(key)) {

Loggers.DISTRO.debug("add sync task: {}", JSON.toJSONString(syncTask));

}

// 提交数据同步任务

dataSyncer.submit(syncTask, 0);

}

// 更新最后同步时间

lastDispatchTime = System.currentTimeMillis();

// 重置dataSize

dataSize = 0;

}

} catch (Exception e) {

Loggers.DISTRO.error("dispatch sync task failed.", e);

}

}

}

}

}

2.1.6 DataSyncer

数据同步处理器。

@Component

@DependsOn("serverListManager")

public class DataSyncer {

@Autowired

private DataStore dataStore;

@Autowired

private GlobalConfig partitionConfig;

@Autowired

private Serializer serializer;

@Autowired

private DistroMapper distroMapper;

@Autowired

private ServerListManager serverListManager;

private Map<String, String> taskMap = new ConcurrentHashMap<>();

@PostConstruct

public void init() {

// 开启 定时同步

startTimedSync();

}

public void submit(SyncTask task, long delay) {

// If it's a new task:

// 判断是否为新的任务:重试次数 == 0

if (task.getRetryCount() == 0) {

Iterator<String> iterator = task.getKeys().iterator();

while (iterator.hasNext()) {

String key = iterator.next();

if (StringUtils.isNotBlank(taskMap.putIfAbsent(buildKey(key, task.getTargetServer()), key))) {

// associated key already exist:

if (Loggers.DISTRO.isDebugEnabled()) {

Loggers.DISTRO.debug("sync already in process, key: {}", key);

}

// 避免重复同步:去除重复请求

iterator.remove();

}

}

}

if (task.getKeys().isEmpty()) {

// all keys are removed:

return;

}

GlobalExecutor.submitDataSync(new Runnable() {

@Override

public void run() {

try {

if (getServers() == null || getServers().isEmpty()) {

Loggers.SRV_LOG.warn("try to sync data but server list is empty.");

return;

}

// 获取所有需要同步的key

List<String> keys = task.getKeys();

if (Loggers.DISTRO.isDebugEnabled()) {

Loggers.DISTRO.debug("sync keys: {}", keys);

}

// 根据key获取Datum

Map<String, Datum> datumMap = dataStore.batchGet(keys);

if (datumMap == null || datumMap.isEmpty()) {

// clear all flags of this task:

for (String key : task.getKeys()) {

taskMap.remove(buildKey(key, task.getTargetServer()));

}

return;

}

// 序列化

byte[] data = serializer.serialize(datumMap);

long timestamp = System.currentTimeMillis();

// 发送数据同步请求:/v1/ns/distro/datum

boolean success = NamingProxy.syncData(data, task.getTargetServer());

if (!success) {

SyncTask syncTask = new SyncTask();

syncTask.setKeys(task.getKeys());

syncTask.setRetryCount(task.getRetryCount() + 1);

syncTask.setLastExecuteTime(timestamp);

syncTask.setTargetServer(task.getTargetServer());

// 异常重试

retrySync(syncTask);

} else {

// clear all flags of this task:

for (String key : task.getKeys()) {

taskMap.remove(buildKey(key, task.getTargetServer()));

}

}

} catch (Exception e) {

Loggers.DISTRO.error("sync data failed.", e);

}

}

}, delay);

}

// 异常重试

public void retrySync(SyncTask syncTask) {

Server server = new Server();

server.setIp(syncTask.getTargetServer().split(":")[0]);

server.setServePort(Integer.parseInt(syncTask.getTargetServer().split(":")[1]));

if (!getServers().contains(server)) {

// if server is no longer in healthy server list, ignore this task:

return;

}

// TODO may choose other retry policy.

// 重试,默认 5s 后重试

submit(syncTask, partitionConfig.getSyncRetryDelay());

}

public void startTimedSync() {

GlobalExecutor.schedulePartitionDataTimedSync(new TimedSync());

}

// 定时同步线程

public class TimedSync implements Runnable {

@Override

public void run() {

try {

if (Loggers.DISTRO.isDebugEnabled()) {

Loggers.DISTRO.debug("server list is: {}", getServers());

}

// send local timestamps to other servers:

Map<String, String> keyChecksums = new HashMap<>(64);

for (String key : dataStore.keys()) {

if (!distroMapper.responsible(KeyBuilder.getServiceName(key))) {

continue;

}

// 添加 checksum

keyChecksums.put(key, dataStore.get(key).value.getChecksum());

}

if (keyChecksums.isEmpty()) {

return;

}

if (Loggers.DISTRO.isDebugEnabled()) {

Loggers.DISTRO.debug("sync checksums: {}", keyChecksums);

}

for (Server member : getServers()) {

// 剔除当前服务

if (NetUtils.localServer().equals(member.getKey())) {

continue;

}

// 同步 checksum,发送 /v1/ns/distro/checksum?source=NetUtils.localServer()

NamingProxy.syncCheckSums(keyChecksums, member.getKey());

}

} catch (Exception e) {

Loggers.DISTRO.error("timed sync task failed.", e);

}

}

}

public List<Server> getServers() {

return serverListManager.getHealthyServers();

}

public String buildKey(String key, String targetServer) {

return key + UtilsAndCommons.CACHE_KEY_SPLITER + targetServer;

}

}

2.1.7 Nacos 分区一致性算法总结

2.2 RaftConsistencyServiceImpl

RaftConsistencyServiceImpl 为 PersistentConsistencyService 的唯一实现,如下:

RaftConsistencyServiceImpl 持久化节点一致性协议采用 Raft算法 实现, 保证已发布数据的 CP 一致性的一致性协议。

2.2.1 Raft算法

Raft 算法主要作用:

- 服务启动时,选举出集群中的 Leader节点

- 崩溃恢复,Leader节点挂掉后,从所有 Follower 节点中选举出新的 Leader

- 数据一致性,Leader 节点处理事务请求,Follower 只处理非事务请求,如果接受到事务请求,将当前请求转发给Leader,由Leader处理后,同步给Follower节点。

Raft算法的Leader选举核心思想:先到先得,少数服从多数。

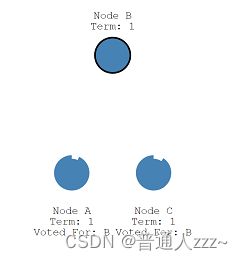

下面简单说明一下 Raft 算法在服务启动时,选举 Leader 的过程,其中 Node 代表节点,Term 代表选举周期。

周期的含义:服务每选举一轮,为一个周期

1.当所有服务启动后,每个服务会随机生成一个 Leader 选举开始倒计时(下图红色框框中的部分)。

2.当集群中存在倒计时为0的节点时,它会进入一个候选状态,例如下面的Node B节点。

3.候选节点会首先发起Leader投票(先到先得)

4.其他节点接收到投票消息后,会返回一个信息给候选节点。

5.当某个候选节点,收到Leader选举票数大于当前集群总节点的 1/2 时,它会变成 Leader 节点,其他节点变成 Follower 节点(少数服从多数)。

6. Leader节点需要每隔一段时间,向 Follower 发送一个心跳包,维持心跳。

7. Follower 节点会开启一个心跳倒计时,在倒计时时间内,如果收到心跳,返回给Leader,并重新开始倒计时;如果倒计时时间内,没有收到心跳,会主动向 Leade r节点发送请求,判断当前 Leader 是否宕机,如果宕机,开始新的 Leader 选举。

当集群中存在 Leader 节点宕机后,还是会重复上面 1-7 类似步骤,选举出新的 Leader 节点。

2.2.2 Nacos中Raft算法的具体实现

RaftConsistencyServiceImpl 持久化节点一致性协议采用 Raft算法 实现, 保证已发布数据的 CP 一致性的一致性协议。

核心逻辑如下:

@Service

public class RaftConsistencyServiceImpl implements PersistentConsistencyService {

/**

* Raft 核心实现

*/

@Autowired

private RaftCore raftCore;

/**

* Raft Leader选举核心实现

*/

@Autowired

private RaftPeerSet peers;

/**

* 开关

*/

@Autowired

private SwitchDomain switchDomain;

@Override

public void put(String key, Record value) throws NacosException {

try {

// 转发事务消息给Leader进行处理:服务发布

raftCore.signalPublish(key, value);

} catch (Exception e) {

Loggers.RAFT.error("Raft put failed.", e);

throw new NacosException(NacosException.SERVER_ERROR, "Raft put failed, key:" + key + ", value:" + value, e);

}

}

@Override

public void remove(String key) throws NacosException {

try {

// 判断要删除的key是否为匹配实例列表key(以com.alibaba.nacos.naming.iplist. 或 iplist. 开始)

// 如果是,并且当前节点不是Leader

if (KeyBuilder.matchInstanceListKey(key) && !raftCore.isLeader()) {

Datum datum = new Datum();

datum.key = key;

// 直接本地删除

raftCore.onDelete(datum.key, peers.getLeader());

// 删除对应所有监听

raftCore.unlistenAll(key);

return;

}

// 转发事务消息给Leader进行处理:服务剔除

raftCore.signalDelete(key);

// 删除对应所有监听

raftCore.unlistenAll(key);

} catch (Exception e) {

Loggers.RAFT.error("Raft remove failed.", e);

throw new NacosException(NacosException.SERVER_ERROR, "Raft remove failed, key:" + key, e);

}

}

@Override

public Datum get(String key) throws NacosException {

// get

return raftCore.getDatum(key);

}

@Override

public void listen(String key, RecordListener listener) throws NacosException {

// 给当前key添加listener

raftCore.listen(key, listener);

}

@Override

public void unlisten(String key, RecordListener listener) throws NacosException {

// 取消当前key的listener

raftCore.unlisten(key, listener);

}

@Override

public boolean isAvailable() {

// 是否可用

return raftCore.isInitialized() || ServerStatus.UP.name().equals(switchDomain.getOverriddenServerStatus());

}

public void onPut(Datum datum, RaftPeer source) throws NacosException {

try {

// 本地发布

raftCore.onPublish(datum, source);

} catch (Exception e) {

Loggers.RAFT.error("Raft onPut failed.", e);

throw new NacosException(NacosException.SERVER_ERROR, "Raft onPut failed, datum:" + datum + ", source: " + source, e);

}

}

public void onRemove(Datum datum, RaftPeer source) throws NacosException {

try {

// 本地剔除

raftCore.onDelete(datum.key, source);

} catch (Exception e) {

Loggers.RAFT.error("Raft onRemove failed.", e);

throw new NacosException(NacosException.SERVER_ERROR, "Raft onRemove failed, datum:" + datum + ", source: " + source, e);

}

}

}

可以看出,Raft的核心实现有两个类,一个是RaftCore,一个是RaftPeerSet,作用分别是:

- RaftPeerSet:Raft Leader选举核心实现

- RaftCore:Raft 核心实现

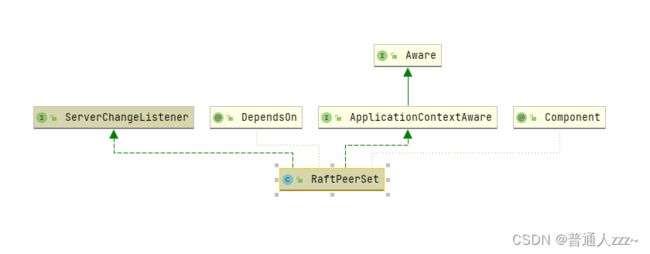

2.2.2.1 RaftPeerSet(Leader选举)

该类主要职责是进行Raft Leader选举,主要包括两个场景:

- 服务启动过程中 Leader 选举;

- Leader 出现宕机后,选举出新的 Leader;

- ServerChangeListener:监听服务列表变化

- @DependsOn(“serverListManager”):在进行注入的时候,先加载serverListManager后,再加载当前实例

- ApplicationContextAware:获取 ApplicationContext 对象

- @Component:注入到Spring IoC容器中

下面我们具体看一下代码实现。

@Component

@DependsOn("serverListManager")

public class RaftPeerSet implements ServerChangeListener, ApplicationContextAware {

// 服务器列表管理器

@Autowired

private ServerListManager serverListManager;

// Spring应用上下文

private ApplicationContext applicationContext;

// Raft选举本地Term

private AtomicLong localTerm = new AtomicLong(0L);

// 集群Leader

private RaftPeer leader = null;

// 集群中所有Leader、Follower对象集合

// key -> ip value -> Raft选举对象

private Map<String, RaftPeer> peers = new HashMap<>();

private Set<String> sites = new HashSet<>();

// 是否可读,port > 0

private boolean ready = false;

public RaftPeerSet() {

}

@Override

public void setApplicationContext(ApplicationContext applicationContext) throws BeansException {

this.applicationContext = applicationContext;

}

@PostConstruct

public void init() {

// 初始化:将当前Listener添加到 ServerListManager 中的列表监听,用于实时监听服务列表变化

serverListManager.listen(this);

}

// 获取Leader对象

public RaftPeer getLeader() {

// 是否为单机模式,通过 nacos.standalone 配置true/false

if (STANDALONE_MODE) {

// 返回当前对象

return local();

}

// 返回 Leader 对象

return leader;

}

public Set<String> allSites() {

return sites;

}

public boolean isReady() {

return ready;

}

// 移除服务

public void remove(List<String> servers) {

for (String server : servers) {

peers.remove(server);

}

}

// 修改服务

public RaftPeer update(RaftPeer peer) {

peers.put(peer.ip, peer);

return peer;

}

/**

* 是否为Leader

*/

public boolean isLeader(String ip) {

if (STANDALONE_MODE) {

// 单机模式,直接返回true

return true;

}

// Leader对象为空,直接返回false

if (leader == null) {

Loggers.RAFT.warn("[IS LEADER] no leader is available now!");

return false;

}

// 判断当前ip是否与Leader ip相等

return StringUtils.equals(leader.ip, ip);

}

// 获取包括当前服务的所有服务ip

public Set<String> allServersIncludeMyself() {

return peers.keySet();

}

// 获取派出当前服务的所有服务ip

public Set<String> allServersWithoutMySelf() {

Set<String> servers = new HashSet<String>(peers.keySet());

// exclude myself

servers.remove(local().ip);

return servers;

}

// 获取集群中所有服务对象

public Collection<RaftPeer> allPeers() {

return peers.values();

}

// 大小

public int size() {

return peers.size();

}

/**

* Leader选举:在进行服务启动时,Leader选举开始时间倒计时为0后,调用当前方法,向除当前本地服务所有服务发起投票(通过Http)

* 具体逻辑详看下面的 RaftCore MasterElection过程

*

* @param candidate 候选者(投票-投候选者)

* @return

*/

public RaftPeer decideLeader(RaftPeer candidate) {

peers.put(candidate.ip, candidate);

// 分拣袋:用于统计各个候选者投票次数,类似于生活中的“画正字”

SortedBag ips = new TreeBag();

// 最大票数

int maxApproveCount = 0;

// 最大票数拥有者

String maxApprovePeer = null;

for (RaftPeer peer : peers.values()) {

// 1.判断当前RaftPeer voteFor(当前RaftPeer的投票对象ip) 是否为空

if (StringUtils.isEmpty(peer.voteFor)) {

continue;

}

// 存在投票者

// 2. 添加投票

ips.add(peer.voteFor);

// 3. 更新最大票数、最大票数拥有者

if (ips.getCount(peer.voteFor) > maxApproveCount) {

maxApproveCount = ips.getCount(peer.voteFor);

maxApprovePeer = peer.voteFor;

}

}

// 判断最大票数是否大于 peers.size() / 2 + 1 ,即集群中节点个数的 二分之一 + 1

if (maxApproveCount >= majorityCount()) {

// 设置Leader

RaftPeer peer = peers.get(maxApprovePeer);

peer.state = RaftPeer.State.LEADER;

if (!Objects.equals(leader, peer)) {

// 设置Leader

leader = peer;

// 发送Leader选举完成事件

applicationContext.publishEvent(new LeaderElectFinishedEvent(this, leader));

Loggers.RAFT.info("{} has become the LEADER", leader.ip);

}

}

return leader;

}

/**

* 决定 or 设置Leader:candidate候选者-当前集群Leader对象

* @param candidate

* @return

*/

public RaftPeer makeLeader(RaftPeer candidate) {

if (!Objects.equals(leader, candidate)) {

leader = candidate;

// 发送决定出Leader事件

applicationContext.publishEvent(new MakeLeaderEvent(this, leader));

Loggers.RAFT.info("{} has become the LEADER, local: {}, leader: {}",

leader.ip, JSON.toJSONString(local()), JSON.toJSONString(leader));

}

for (final RaftPeer peer : peers.values()) {

Map<String, String> params = new HashMap<>(1);

if (!Objects.equals(peer, candidate) && peer.state == RaftPeer.State.LEADER) {

// 当前RaftPeer 不是Leader,并且当前RaftPeer 对象的状态为Leader状态,需要更新为Follower

try {

// url = /v1/ns/raft/peer

String url = RaftCore.buildURL(peer.ip, RaftCore.API_GET_PEER);

// 异步请求:发送 /v1/ns/raft/peer 判断当前对象是否为Leader对象

HttpClient.asyncHttpGet(url, null, params, new AsyncCompletionHandler<Integer>() {

@Override

public Integer onCompleted(Response response) throws Exception {

// 如果不是Leader对象,更新状态为Follower

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.error("[NACOS-RAFT] get peer failed: {}, peer: {}",

response.getResponseBody(), peer.ip);

peer.state = RaftPeer.State.FOLLOWER;

return 1;

}

// 更新结果状态

update(JSON.parseObject(response.getResponseBody(), RaftPeer.class));

return 0;

}

});

} catch (Exception e) {

peer.state = RaftPeer.State.FOLLOWER;

Loggers.RAFT.error("[NACOS-RAFT] error while getting peer from peer: {}", peer.ip);

}

}

}

// 更新结果状态

return update(candidate);

}

// 获取当前服务所属的RaftPeer对象

public RaftPeer local() {

RaftPeer peer = peers.get(NetUtils.localServer());

// peer为空,并且为单机模式,创建一个RaftPeer 并放入 peers 缓存中

if (peer == null && SystemUtils.STANDALONE_MODE) {

RaftPeer localPeer = new RaftPeer();

localPeer.ip = NetUtils.localServer();

localPeer.term.set(localTerm.get());

peers.put(localPeer.ip, localPeer);

return localPeer;

}

if (peer == null) {

throw new IllegalStateException("unable to find local peer: " + NetUtils.localServer() + ", all peers: "

+ Arrays.toString(peers.keySet().toArray()));

}

return peer;

}

// 根据ip获取RaftPeer 对象

public RaftPeer get(String server) {

return peers.get(server);

}

// Raft投票决策出Leader的值:集群节点的二分之一 + 1

public int majorityCount() {

return peers.size() / 2 + 1;

}

// 重置:重置Leader、所有voteFor

public void reset() {

// 重置Leader

leader = null;

// 重置所有voteFor

for (RaftPeer peer : peers.values()) {

peer.voteFor = null;

}

}

// 设置Raft选举过程中的Term(周期)

public void setTerm(long term) {

localTerm.set(term);

}

// 获取Raft选举过程中的Term(周期)

public long getTerm() {

return localTerm.get();

}

public boolean contains(RaftPeer remote) {

return peers.containsKey(remote.ip);

}

/**

* 监听处理集群服务节点变化

*

* @param latestMembers 最新的Server

*/

@Override

public void onChangeServerList(List<Server> latestMembers) {

Map<String, RaftPeer> tmpPeers = new HashMap<>(8);

for (Server member : latestMembers) {

if (peers.containsKey(member.getKey())) {

tmpPeers.put(member.getKey(), peers.get(member.getKey()));

continue;

}

RaftPeer raftPeer = new RaftPeer();

raftPeer.ip = member.getKey();

// first time meet the local server:

if (NetUtils.localServer().equals(member.getKey())) {

raftPeer.term.set(localTerm.get());

}

tmpPeers.put(member.getKey(), raftPeer);

}

// replace raft peer set:

peers = tmpPeers;

if (RunningConfig.getServerPort() > 0) {

ready = true;

}

Loggers.RAFT.info("raft peers changed: " + latestMembers);

}

@Override

public void onChangeHealthyServerList(List<Server> latestReachableMembers) {

}

}

2.2.2.2 RaftCore

Nacos集群中Raft算法的核心实现,包括Leader选举、事务转发Leader等功能。下面会讲解一下其中的核心逻辑。

1. RaftCore init()

@Component

public class RaftCore {

@PostConstruct

public void init() throws Exception {

Loggers.RAFT.info("initializing Raft sub-system");

// 执行一个 Notifier 线程:后面分析

executor.submit(notifier);

long start = System.currentTimeMillis();

// 加载基础数据

// user.home + /nacos/data/naming/data

raftStore.loadDatums(notifier, datums);

// 初始化当前节点Leader选举Term(周期)

setTerm(NumberUtils.toLong(raftStore.loadMeta().getProperty("term"), 0L));

Loggers.RAFT.info("cache loaded, datum count: {}, current term: {}", datums.size(), peers.getTerm());

// 等待 Notifier 线程执行完成

while (true) {

if (notifier.tasks.size() <= 0) {

break;

}

Thread.sleep(1000L);

}

// 初始化

initialized = true;

Loggers.RAFT.info("finish to load data from disk, cost: {} ms.", (System.currentTimeMillis() - start));

// 开启一个Leader选举线程:

// executorService.scheduleAtFixedRate(new MasterElection(), 0, 500L, TimeUnit.MILLISECONDS);

GlobalExecutor.registerMasterElection(new MasterElection());

// 开启一个心跳检查线程

// executorService.scheduleWithFixedDelay(runnable, 0, 500L, TimeUnit.MILLISECONDS);

GlobalExecutor.registerHeartbeat(new HeartBeat());

Loggers.RAFT.info("timer started: leader timeout ms: {}, heart-beat timeout ms: {}",

GlobalExecutor.LEADER_TIMEOUT_MS, GlobalExecutor.HEARTBEAT_INTERVAL_MS);

}

}

2. Notifier 线程(事件监听)

Notifier 为 RaftCore 的一个内部类,用于实现事件监听机制,完成数据变更/删除通知所有Lisenter。

Notifier 采用了生产者消费者模式,通过 BlockingQueue 阻塞队列,完成生产 / 消费。

public class RaftCore {

// 当前RaftCore 所有数据监听Listener

private volatile Map<String, List<RecordListener>> listeners = new ConcurrentHashMap<>();

public class Notifier implements Runnable {

private ConcurrentHashMap<String, String> services = new ConcurrentHashMap<>(10 * 1024);

private BlockingQueue<Pair> tasks = new LinkedBlockingQueue<>(1024 * 1024);

public void addTask(String datumKey, ApplyAction action) {

// 判断是否已经处理过当前 datumKey,并且 action == ApplyAction.CHANGE,避免重复处理

if (services.containsKey(datumKey) && action == ApplyAction.CHANGE) {

return;

}

// 添加

if (action == ApplyAction.CHANGE) {

services.put(datumKey, StringUtils.EMPTY);

}

Loggers.RAFT.info("add task {}", datumKey);

// 生产者消费者模式:生产任务

tasks.add(Pair.with(datumKey, action));

}

public int getTaskSize() {

return tasks.size();

}

@Override

public void run() {

Loggers.RAFT.info("raft notifier started");

// 死循环:生产者消费者模式,消费任务

while (true) {

try {

// 如果阻塞队列为空,阻塞

Pair pair = tasks.take();

if (pair == null) {

continue;

}

String datumKey = (String) pair.getValue0();

ApplyAction action = (ApplyAction) pair.getValue1();

// 移出当前datumKey

services.remove(datumKey);

Loggers.RAFT.info("remove task {}", datumKey);

int count = 0;

// listeners中存在key为:com.alibaba.nacos.naming.domains.meta.

if (listeners.containsKey(KeyBuilder.SERVICE_META_KEY_PREFIX)) {

// KeyBuilder.matchServiceMetaKey(datumKey):是否以 com.alibaba.nacos.naming.domains.meta. 或 meta. 开头

// !KeyBuilder.matchSwitchKey(datumKey):不以 00-00---000-NACOS_SWITCH_DOMAIN-000---00-00 结尾

if (KeyBuilder.matchServiceMetaKey(datumKey) && !KeyBuilder.matchSwitchKey(datumKey)) {

// listeners.get("com.alibaba.nacos.naming.domains.meta.")

for (RecordListener listener : listeners.get(KeyBuilder.SERVICE_META_KEY_PREFIX)) {

try {

// 数据变更事件

if (action == ApplyAction.CHANGE) {

listener.onChange(datumKey, getDatum(datumKey).value);

}

// 数据删除事件

if (action == ApplyAction.DELETE) {

listener.onDelete(datumKey);

}

} catch (Throwable e) {

Loggers.RAFT.error("[NACOS-RAFT] error while notifying listener of key: {}", datumKey, e);

}

}

}

}

if (!listeners.containsKey(datumKey)) {

// 不存在当前datumKey

continue;

}

// 遍历通知

for (RecordListener listener : listeners.get(datumKey)) {

count++;

try {

// 数据变更事件

if (action == ApplyAction.CHANGE) {

listener.onChange(datumKey, getDatum(datumKey).value);

continue;

}

// 数据删除事件

if (action == ApplyAction.DELETE) {

listener.onDelete(datumKey);

continue;

}

} catch (Throwable e) {

Loggers.RAFT.error("[NACOS-RAFT] error while notifying listener of key: {}", datumKey, e);

}

}

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("[NACOS-RAFT] datum change notified, key: {}, listener count: {}", datumKey, count);

}

} catch (Throwable e) {

Loggers.RAFT.error("[NACOS-RAFT] Error while handling notifying task", e);

}

}

}

}

}

3. MasterElection 线程(投票选举)

用于选举出Nacos集群中的Leader节点。

每隔 500L 执行一次。

public class RaftCore {

public class MasterElection implements Runnable {

@Override

public void run() {

try {

if (!peers.isReady()) {

return;

}

RaftPeer local = peers.local();

// local.leaderDueMs -= 500L;

local.leaderDueMs -= GlobalExecutor.TICK_PERIOD_MS;

// 判断当前节点Leader选举倒计时是否已经结束

if (local.leaderDueMs > 0) {

// 没有结束,等待下一个500L执行,再判断

return;

}

/***********当前节点Leader选举倒计时结束,开始选举Leader***********/

// reset timeout

// 重置 leaderDueMs = 15s - (0~5s),leaderDueMs 范围为 10s ~ 15s

local.resetLeaderDue();

// 重置 heartbeatDueMs 心跳倒计时时间:选举出Leader后,Leader 和 Follower 需要维持一个心跳

// 如果心跳超时,需要检查Leader是否宕机,如果宕机,开始新一轮Leader选举

local.resetHeartbeatDue();

// 发送投票:开始Leader选举

sendVote();

} catch (Exception e) {

Loggers.RAFT.warn("[RAFT] error while master election {}", e);

}

}

// 发送投票:开始Leader选举

public void sendVote() {

// 获取当前服务 RaftPeer 对象

RaftPeer local = peers.get(NetUtils.localServer());

Loggers.RAFT.info("leader timeout, start voting,leader: {}, term: {}",

JSON.toJSONString(getLeader()), local.term);

// 重置集群 leader=null

// 重置集群节点投票对象 RaftPeer.voteFor = null

peers.reset();

// 选举term(周期) +1

local.term.incrementAndGet();

// 先投自己

local.voteFor = local.ip;

// 标记自己为候选者(Candidate)状态

local.state = RaftPeer.State.CANDIDATE;

Map<String, String> params = new HashMap<>(1);

params.put("vote", JSON.toJSONString(local));

// 向除当前节点其他所有节点发送投票:投当前节点

for (final String server : peers.allServersWithoutMySelf()) {

// url = "http://" + ip + RunningConfig.getContextPath() + /v1/ns/raft/vote

final String url = buildURL(server, API_VOTE);

try {

// 异步请求

HttpClient.asyncHttpPost(url, null, params, new AsyncCompletionHandler<Integer>() {

@Override

public Integer onCompleted(Response response) throws Exception {

/*******处理/v1/ns/raft/vote 请求结果********/

// 异常直接return

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.error("NACOS-RAFT vote failed: {}, url: {}", response.getResponseBody(), url);

return 1;

}

// 获取投票结果

RaftPeer peer = JSON.parseObject(response.getResponseBody(), RaftPeer.class);

Loggers.RAFT.info("received approve from peer: {}", JSON.toJSONString(peer));

// 根据投票结果,进行Leader选举:方法详解参照 2.2.2.1 RaftPeerSet(Leader选举)

peers.decideLeader(peer);

return 0;

}

});

} catch (Exception e) {

Loggers.RAFT.warn("error while sending vote to server: {}", server);

}

}

}

}

}

在 MasterElection 线程中,会向其他节点发送一个投票请求(/v1/ns/raft/vote),服务收到请求后,最终会调用 RaftCore 中的 RaftPeer receivedVote(RaftPeer remote) 方法,将当前服务节点投票结果发送给请求节点,具体处理逻辑如下:

@Component

public class RaftCore {

/**

* 收到投票

* @param remote 远程 RaftPeer

* @return

*/

public RaftPeer receivedVote(RaftPeer remote) {

// 判断远程 RaftPeer 是否存在当前 peers中,不存在,抛异常

if (!peers.contains(remote)) {

throw new IllegalStateException("can not find peer: " + remote.ip);

}

// 获取当前服务节点RaftPeer

RaftPeer local = peers.get(NetUtils.localServer());

// 先比较选举周期term大小:以term大的为准

// 相对也选举自己

if (remote.term.get() <= local.term.get()) {

String msg = "received illegitimate vote" +

", voter-term:" + remote.term + ", votee-term:" + local.term;

Loggers.RAFT.info(msg);

if (StringUtils.isEmpty(local.voteFor)) {

local.voteFor = local.ip;

}

return local;

}

// 否则,接收远程RaftPeer投票

// 重置 leaderDueMs = 15s - (0~5s),leaderDueMs 范围为 10s ~ 15s

local.resetLeaderDue();

// 设置当前节点为 Follower

local.state = RaftPeer.State.FOLLOWER;

// 设置当前节点投票对象为 remote.ip

local.voteFor = remote.ip;

// 设置当前节点投票周期term为远程term

local.term.set(remote.term.get());

Loggers.RAFT.info("vote {} as leader, term: {}", remote.ip, remote.term);

return local;

}

}

4. HeartBeat 线程(心跳检测)

HeartBeat 用于Nacos集群中,Leader 节点发送心跳包给 Follower 节点,维持心跳。

@Component

public class RaftCore {

public class HeartBeat implements Runnable {

@Override

public void run() {

try {

if (!peers.isReady()) {

return;

}

// 获取当前服务RaftPeer

RaftPeer local = peers.local();

// local.heartbeatDueMs -= 500ms;

local.heartbeatDueMs -= GlobalExecutor.TICK_PERIOD_MS;

if (local.heartbeatDueMs > 0) {

return;

}

// 重置 heartbeatDueMs 心跳倒计时时间:选举出Leader后,Leader 和 Follower 需要维持一个心跳

// 如果心跳超时,需要检查Leader是否宕机,如果宕机,开始新一轮Leader选举

local.resetHeartbeatDue();

// 发送心跳包:Leader节点向其他Follower节点发送心跳包

sendBeat();

} catch (Exception e) {

Loggers.RAFT.warn("[RAFT] error while sending beat {}", e);

}

}

// 发送心跳包:Leader节点向其他Follower节点发送心跳包

public void sendBeat() throws IOException, InterruptedException {

RaftPeer local = peers.local();

// 只有当前节点为Leader节点,并且为集群模式,才需要向其他Follower节点发送心跳包

if (local.state != RaftPeer.State.LEADER && !STANDALONE_MODE) {

return;

}

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("[RAFT] send beat with {} keys.", datums.size());

}

// 重置当前服务集群选举倒计时 leaderDueMs:保证心跳正常,不再重新开始选举

// leaderDueMs = 15s - (0~5s),leaderDueMs 范围为 10s ~ 15s

local.resetLeaderDue();

// build data

// 心跳包

JSONObject packet = new JSONObject();

packet.put("peer", local);

JSONArray array = new JSONArray();

// 是否只发送心跳包:默认false

if (switchDomain.isSendBeatOnly()) {

Loggers.RAFT.info("[SEND-BEAT-ONLY] {}", String.valueOf(switchDomain.isSendBeatOnly()));

}

if (!switchDomain.isSendBeatOnly()) {

for (Datum datum : datums.values()) {

JSONObject element = new JSONObject();

if (KeyBuilder.matchServiceMetaKey(datum.key)) {

element.put("key", KeyBuilder.briefServiceMetaKey(datum.key));

} else if (KeyBuilder.matchInstanceListKey(datum.key)) {

element.put("key", KeyBuilder.briefInstanceListkey(datum.key));

}

element.put("timestamp", datum.timestamp);

array.add(element);

}

}

packet.put("datums", array);

// broadcast

Map<String, String> params = new HashMap<String, String>(1);

params.put("beat", JSON.toJSONString(packet));

String content = JSON.toJSONString(params);

ByteArrayOutputStream out = new ByteArrayOutputStream();

// 压缩

GZIPOutputStream gzip = new GZIPOutputStream(out);

gzip.write(content.getBytes(StandardCharsets.UTF_8));

gzip.close();

byte[] compressedBytes = out.toByteArray();

String compressedContent = new String(compressedBytes, StandardCharsets.UTF_8);

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("raw beat data size: {}, size of compressed data: {}",

content.length(), compressedContent.length());

}

for (final String server : peers.allServersWithoutMySelf()) {

try {

// url = "http://" + ip + RunningConfig.getContextPath() + /v1/ns/raft/beat

final String url = buildURL(server, API_BEAT);

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("send beat to server " + server);

}

// 异步发送

HttpClient.asyncHttpPostLarge(url, null, compressedBytes, new AsyncCompletionHandler<Integer>() {

@Override

public Integer onCompleted(Response response) throws Exception {

/*******处理/v1/ns/raft/beat 请求结果********/

// 异常直接return

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.error("NACOS-RAFT beat failed: {}, peer: {}",

response.getResponseBody(), server);

MetricsMonitor.getLeaderSendBeatFailedException().increment();

return 1;

}

// 更新RaftPeerSet peers;

peers.update(JSON.parseObject(response.getResponseBody(), RaftPeer.class));

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("receive beat response from: {}", url);

}

return 0;

}

@Override

public void onThrowable(Throwable t) {

Loggers.RAFT.error("NACOS-RAFT error while sending heart-beat to peer: {} {}", server, t);

MetricsMonitor.getLeaderSendBeatFailedException().increment();

}

});

} catch (Exception e) {

Loggers.RAFT.error("error while sending heart-beat to peer: {} {}", server, e);

MetricsMonitor.getLeaderSendBeatFailedException().increment();

}

}

}

}

}

在 HeartBeat 线程中,Leader节点会向其他Follower节点发送一个心跳包(/v1/ns/raft/vote),Follower收到心跳请求后,最终会调用 RaftCore 中的 RaftPeer receivedBeat(JSONObject beat) 方法,具体处理逻辑如下:

@Component

public class RaftCore {

/**

* 接收处理心跳包

* @param beat 心跳包

* @return 返回当前节点信息

* @throws Exception

*/

public RaftPeer receivedBeat(JSONObject beat) throws Exception {

final RaftPeer local = peers.local();

// step 1:获取Leader节点信息

final RaftPeer remote = new RaftPeer();

remote.ip = beat.getJSONObject("peer").getString("ip");

remote.state = RaftPeer.State.valueOf(beat.getJSONObject("peer").getString("state"));

remote.term.set(beat.getJSONObject("peer").getLongValue("term"));

remote.heartbeatDueMs = beat.getJSONObject("peer").getLongValue("heartbeatDueMs");

remote.leaderDueMs = beat.getJSONObject("peer").getLongValue("leaderDueMs");

remote.voteFor = beat.getJSONObject("peer").getString("voteFor");

// step 2:不是Leader节点的心跳包,直接抛异常

if (remote.state != RaftPeer.State.LEADER) {

Loggers.RAFT.info("[RAFT] invalid state from master, state: {}, remote peer: {}",

remote.state, JSON.toJSONString(remote));

throw new IllegalArgumentException("invalid state from master, state: " + remote.state);

}

// step 3:本地term > Leader中的term,抛异常

if (local.term.get() > remote.term.get()) {

Loggers.RAFT.info("[RAFT] out of date beat, beat-from-term: {}, beat-to-term: {}, remote peer: {}, and leaderDueMs: {}"

, remote.term.get(), local.term.get(), JSON.toJSONString(remote), local.leaderDueMs);

throw new IllegalArgumentException("out of date beat, beat-from-term: " + remote.term.get()

+ ", beat-to-term: " + local.term.get());

}

// step 4:本地节点不为 Follower 节点,先设置为 Follower

if (local.state != RaftPeer.State.FOLLOWER) {

Loggers.RAFT.info("[RAFT] make remote as leader, remote peer: {}", JSON.toJSONString(remote));

// mk follower

local.state = RaftPeer.State.FOLLOWER;

local.voteFor = remote.ip;

}

// 注意:step 3、step 4的作用是:

// 1. 修改Leader出现后,集群所有其他节点为当前Leader的Follower节点

// 2. 处理Raft 选举中,出现网络分区的请求,以Term大的为主。

final JSONArray beatDatums = beat.getJSONArray("datums");

// 重置

local.resetLeaderDue();

local.resetHeartbeatDue();

// 设置Leader

peers.makeLeader(remote);

Map<String, Integer> receivedKeysMap = new HashMap<>(datums.size());

for (Map.Entry<String, Datum> entry : datums.entrySet()) {

receivedKeysMap.put(entry.getKey(), 0);

}

// now check datums

List<String> batch = new ArrayList<>();

// 更新基本信息:缓存信息等

if (!switchDomain.isSendBeatOnly()) {

int processedCount = 0;

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("[RAFT] received beat with {} keys, RaftCore.datums' size is {}, remote server: {}, term: {}, local term: {}",

beatDatums.size(), datums.size(), remote.ip, remote.term, local.term);

}

for (Object object : beatDatums) {

processedCount = processedCount + 1;

JSONObject entry = (JSONObject) object;

String key = entry.getString("key");

final String datumKey;

if (KeyBuilder.matchServiceMetaKey(key)) {

datumKey = KeyBuilder.detailServiceMetaKey(key);

} else if (KeyBuilder.matchInstanceListKey(key)) {

datumKey = KeyBuilder.detailInstanceListkey(key);

} else {

// ignore corrupted key:

continue;

}

long timestamp = entry.getLong("timestamp");

receivedKeysMap.put(datumKey, 1);

try {

if (datums.containsKey(datumKey) && datums.get(datumKey).timestamp.get() >= timestamp && processedCount < beatDatums.size()) {

continue;

}

if (!(datums.containsKey(datumKey) && datums.get(datumKey).timestamp.get() >= timestamp)) {

batch.add(datumKey);

}

if (batch.size() < 50 && processedCount < beatDatums.size()) {

continue;

}

String keys = StringUtils.join(batch, ",");

if (batch.size() <= 0) {

continue;

}

Loggers.RAFT.info("get datums from leader: {}, batch size is {}, processedCount is {}, datums' size is {}, RaftCore.datums' size is {}"

, getLeader().ip, batch.size(), processedCount, beatDatums.size(), datums.size());

// update datum entry

String url = buildURL(remote.ip, API_GET) + "?keys=" + URLEncoder.encode(keys, "UTF-8");

HttpClient.asyncHttpGet(url, null, null, new AsyncCompletionHandler<Integer>() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

return 1;

}

List<JSONObject> datumList = JSON.parseObject(response.getResponseBody(), new TypeReference<List<JSONObject>>() {

});

for (JSONObject datumJson : datumList) {

OPERATE_LOCK.lock();

Datum newDatum = null;

try {

Datum oldDatum = getDatum(datumJson.getString("key"));

if (oldDatum != null && datumJson.getLongValue("timestamp") <= oldDatum.timestamp.get()) {

Loggers.RAFT.info("[NACOS-RAFT] timestamp is smaller than that of mine, key: {}, remote: {}, local: {}",

datumJson.getString("key"), datumJson.getLongValue("timestamp"), oldDatum.timestamp);

continue;

}

if (KeyBuilder.matchServiceMetaKey(datumJson.getString("key"))) {

Datum<Service> serviceDatum = new Datum<>();

serviceDatum.key = datumJson.getString("key");

serviceDatum.timestamp.set(datumJson.getLongValue("timestamp"));

serviceDatum.value =

JSON.parseObject(JSON.toJSONString(datumJson.getJSONObject("value")), Service.class);

newDatum = serviceDatum;

}

if (KeyBuilder.matchInstanceListKey(datumJson.getString("key"))) {

Datum<Instances> instancesDatum = new Datum<>();

instancesDatum.key = datumJson.getString("key");

instancesDatum.timestamp.set(datumJson.getLongValue("timestamp"));

instancesDatum.value =

JSON.parseObject(JSON.toJSONString(datumJson.getJSONObject("value")), Instances.class);

newDatum = instancesDatum;

}

if (newDatum == null || newDatum.value == null) {

Loggers.RAFT.error("receive null datum: {}", datumJson);

continue;

}

raftStore.write(newDatum);

datums.put(newDatum.key, newDatum);

notifier.addTask(newDatum.key, ApplyAction.CHANGE);

local.resetLeaderDue();

if (local.term.get() + 100 > remote.term.get()) {

getLeader().term.set(remote.term.get());

local.term.set(getLeader().term.get());

} else {

local.term.addAndGet(100);

}

raftStore.updateTerm(local.term.get());

Loggers.RAFT.info("data updated, key: {}, timestamp: {}, from {}, local term: {}",

newDatum.key, newDatum.timestamp, JSON.toJSONString(remote), local.term);

} catch (Throwable e) {

Loggers.RAFT.error("[RAFT-BEAT] failed to sync datum from leader, datum: {}", newDatum, e);

} finally {

OPERATE_LOCK.unlock();

}

}

TimeUnit.MILLISECONDS.sleep(200);

return 0;

}

});

batch.clear();

} catch (Exception e) {

Loggers.RAFT.error("[NACOS-RAFT] failed to handle beat entry, key: {}", datumKey);

}

}

// 获取需要剔除的Key

List<String> deadKeys = new ArrayList<>();

for (Map.Entry<String, Integer> entry : receivedKeysMap.entrySet()) {

if (entry.getValue() == 0) {

deadKeys.add(entry.getKey());

}

}

for (String deadKey : deadKeys) {

try {

// 剔除key

deleteDatum(deadKey);

} catch (Exception e) {

Loggers.RAFT.error("[NACOS-RAFT] failed to remove entry, key={} {}", deadKey, e);

}

}

}

return local;

}

}

5. RaftCore.signalPublish()

RaftCore 中的 signalPublish(String key, Record value) 方法,负责处理事务请求:

- 先判断当前节点是否为Leader节点

- 如果为Leader节点,直接处理(需集群节点半数处理完成,才算成功)

- 否则,转发给Leader节点,进行处理

@Component

public class RaftCore {

public void signalPublish(String key, Record value) throws Exception {

// 先判断当前节点是否为Leader节点

if (!isLeader()) {

// 转发给Leader节点,进行处理

JSONObject params = new JSONObject();

params.put("key", key);

params.put("value", value);

Map<String, String> parameters = new HashMap<>(1);

parameters.put("key", key);

// 转发事务类请求:向Leader发送 /v1/ns/raft/datum 请求

raftProxy.proxyPostLarge(getLeader().ip, API_PUB, params.toJSONString(), parameters);

return;

}

// Leader节点,直接处理

try {

OPERATE_LOCK.lock();

long start = System.currentTimeMillis();

final Datum datum = new Datum();

datum.key = key;

datum.value = value;

if (getDatum(key) == null) {

datum.timestamp.set(1L);

} else {

datum.timestamp.set(getDatum(key).timestamp.incrementAndGet());

}

JSONObject json = new JSONObject();

json.put("datum", datum);

json.put("source", peers.local());

// 先更新Leader节点一些本地信息

onPublish(datum, peers.local());

final String content = JSON.toJSONString(json);

// 半数通过,即事务请求处理成功,否则5s后抛异常

final CountDownLatch latch = new CountDownLatch(peers.majorityCount());

// 同步数据给Follower节点

for (final String server : peers.allServersIncludeMyself()) {

if (isLeader(server)) {

latch.countDown();

continue;

}

// url = /v1/ns/raft/datum/commit

final String url = buildURL(server, API_ON_PUB);

HttpClient.asyncHttpPostLarge(url, Arrays.asList("key=" + key), content, new AsyncCompletionHandler<Integer>() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.warn("[RAFT] failed to publish data to peer, datumId={}, peer={}, http code={}",

datum.key, server, response.getStatusCode());

return 1;

}

latch.countDown();

return 0;

}

@Override

public STATE onContentWriteCompleted() {

return STATE.CONTINUE;

}

});

}

// 最多等待5s,超时直接跑异常

if (!latch.await(UtilsAndCommons.RAFT_PUBLISH_TIMEOUT, TimeUnit.MILLISECONDS)) {

// only majority servers return success can we consider this update success

Loggers.RAFT.error("data publish failed, caused failed to notify majority, key={}", key);

throw new IllegalStateException("data publish failed, caused failed to notify majority, key=" + key);

}

long end = System.currentTimeMillis();

Loggers.RAFT.info("signalPublish cost {} ms, key: {}", (end - start), key);

} finally {

OPERATE_LOCK.unlock();

}

}

}