Exporting YOLOv5 as a Dynamic-Link Library (DLL): TensorRT Version, Called from C++

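This post continues the previous two articles (setting up the YOLOv5 + TensorRT environment, and testing YOLOv5 detection results from C++). Here the YOLOv5 source is wrapped into a dynamic-link library so that other platforms can call it. The approach follows another blogger's write-up.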

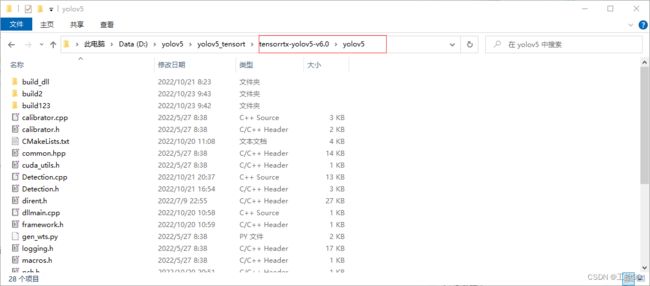

I. Creating the files

Create the following files in the tensorrtx/yolov5 folder and fill in the code shown for each.

1.dllmain.cpp

// dllmain.cpp : Defines the entry point for the DLL application.

#pragma once

#include "pch.h"

BOOL APIENTRY DllMain(HMODULE hModule,

DWORD ul_reason_for_call,

LPVOID lpReserved

)

{

switch (ul_reason_for_call)

{

case DLL_PROCESS_ATTACH:

case DLL_THREAD_ATTACH:

case DLL_THREAD_DETACH:

case DLL_PROCESS_DETACH:

break;

}

return TRUE;

}

2.framework.h

// framework.h

#pragma once

#define WIN32_LEAN_AND_MEAN // Exclude rarely used content from the Windows headers

// Windows header files

#include <windows.h>

3.pch.h

// pch.h

#pragma once

#ifndef PCH_H

#define PCH_H

// Add the headers you want to precompile here

#include "framework.h"

#include

4.Detection.h

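Pay attention to the places marked with # in my code: the class count and the label names on those two lines must be changed to match your own model. For example, if you use the official 80-class detection model, set the class count to 80 and fill in the corresponding labels.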
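As a minimal sketch, assuming a hypothetical three-class model, the two marked lines could look like the following. The names `kClassNum`, `kClasses`, and `class_label` are illustrative assumptions rather than identifiers from the original header; the `static_assert` is an optional safeguard that fails the build when the count and the label table disagree.

```cpp
#include <cassert>

// Hypothetical names: class count and label table for a 3-class model.
constexpr int kClassNum = 3;                                        // # number of classes
constexpr const char* kClasses[] = { "person", "bicycle", "car" };  // # label names

// Fail the build if the label table and the class count disagree.
static_assert(sizeof(kClasses) / sizeof(kClasses[0]) == kClassNum,
              "label table size must equal the class count");

// Look up a label, returning "unknown" for out-of-range ids.
inline const char* class_label(int class_id) {
    if (class_id < 0 || class_id >= kClassNum) return "unknown";
    return kClasses[class_id];
}
```

For the official 80-class model, the count would become 80 and the table would hold the 80 label strings in class-id order.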

//Detection.h

#pragma once

#include "pch.h"

#include "yololayer.h"

#include

5.Detection.cpp

//Detection.cpp

#pragma once

#include "pch.h"

#include "Detection.h"

using namespace std;

static const int INPUT_H = Yolo::INPUT_H;

static const int INPUT_W = Yolo::INPUT_W;

static const int OUTPUT_SIZE = Yolo::MAX_OUTPUT_BBOX_COUNT * sizeof(Yolo::Detection) / sizeof(float) + 1; // we assume the yololayer outputs no more than MAX_OUTPUT_BBOX_COUNT boxes that conf >= 0.1

const char* INPUT_BLOB_NAME = "data";

const char* OUTPUT_BLOB_NAME = "prob";

static Logger gLogger;

static int get_width(int x, float gw, int divisor = 8) {

//return math.ceil(x / divisor) * divisor

if (int(x * gw) % divisor == 0) {

return int(x * gw);

}

return (int(x * gw / divisor) + 1) * divisor;

}

static int get_depth(int x, float gd) {

if (x == 1) {

return 1;

}

else {

return round(x * gd) > 1 ? round(x * gd) : 1;

}

}
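As a quick check of the two helpers above, the sketch below repeats them in standalone form so they can be evaluated without TensorRT. The multipliers gd = 0.33 and gw = 0.50 are an assumption (they are the depth and width multiples usually listed for yolov5s); substitute the values from your own yolo_nets table.

```cpp
#include <cassert>
#include <cmath>

// Scale a channel count by gw and round up to a multiple of `divisor`.
static int get_width(int x, float gw, int divisor = 8) {
    int scaled = static_cast<int>(x * gw);
    if (scaled % divisor == 0) return scaled;
    return (static_cast<int>(x * gw / divisor) + 1) * divisor;
}

// Scale a block repeat count by gd, never going below 1.
static int get_depth(int x, float gd) {
    if (x == 1) return 1;
    int scaled = static_cast<int>(std::round(x * gd));
    return scaled > 1 ? scaled : 1;
}

// Assumed multipliers for the small model.
constexpr float kGd = 0.33f;
constexpr float kGw = 0.50f;
```

With these multipliers, get_width(64, kGw) gives 32, get_width(1024, kGw) gives 512, get_depth(3, kGd) gives 1, and get_depth(9, kGd) gives 3. A value that is not already a multiple of 8 is rounded up: get_width(100, kGw) gives 56.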

ICudaEngine* build_engine(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt, float& gd, float& gw, std::string& wts_name) {

INetworkDefinition* network = builder->createNetworkV2(0U);

// Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME

ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{ 3, INPUT_H, INPUT_W });

assert(data);

std::map<std::string, Weights> weightMap = loadWeights(wts_name);

/* ------ yolov5 backbone------ */

auto focus0 = focus(network, weightMap, *data, 3, get_width(64, gw), 3, "model.0");

auto conv1 = convBlock(network, weightMap, *focus0->getOutput(0), get_width(128, gw), 3, 2, 1, "model.1");

auto bottleneck_CSP2 = C3(network, weightMap, *conv1->getOutput(0), get_width(128, gw), get_width(128, gw), get_depth(3, gd), true, 1, 0.5, "model.2");

auto conv3 = convBlock(network, weightMap, *bottleneck_CSP2->getOutput(0), get_width(256, gw), 3, 2, 1, "model.3");

auto bottleneck_csp4 = C3(network, weightMap, *conv3->getOutput(0), get_width(256, gw), get_width(256, gw), get_depth(9, gd), true, 1, 0.5, "model.4");

auto conv5 = convBlock(network, weightMap, *bottleneck_csp4->getOutput(0), get_width(512, gw), 3, 2, 1, "model.5");

auto bottleneck_csp6 = C3(network, weightMap, *conv5->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(9, gd), true, 1, 0.5, "model.6");

auto conv7 = convBlock(network, weightMap, *bottleneck_csp6->getOutput(0), get_width(1024, gw), 3, 2, 1, "model.7");

auto spp8 = SPP(network, weightMap, *conv7->getOutput(0), get_width(1024, gw), get_width(1024, gw), 5, 9, 13, "model.8");

/* ------ yolov5 head ------ */

auto bottleneck_csp9 = C3(network, weightMap, *spp8->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.9");

auto conv10 = convBlock(network, weightMap, *bottleneck_csp9->getOutput(0), get_width(512, gw), 1, 1, 1, "model.10");

auto upsample11 = network->addResize(*conv10->getOutput(0));

assert(upsample11);

upsample11->setResizeMode(ResizeMode::kNEAREST);

upsample11->setOutputDimensions(bottleneck_csp6->getOutput(0)->getDimensions());

ITensor* inputTensors12[] = { upsample11->getOutput(0), bottleneck_csp6->getOutput(0) };

auto cat12 = network->addConcatenation(inputTensors12, 2);

auto bottleneck_csp13 = C3(network, weightMap, *cat12->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.13");

auto conv14 = convBlock(network, weightMap, *bottleneck_csp13->getOutput(0), get_width(256, gw), 1, 1, 1, "model.14");

auto upsample15 = network->addResize(*conv14->getOutput(0));

assert(upsample15);

upsample15->setResizeMode(ResizeMode::kNEAREST);

upsample15->setOutputDimensions(bottleneck_csp4->getOutput(0)->getDimensions());

ITensor* inputTensors16[] = { upsample15->getOutput(0), bottleneck_csp4->getOutput(0) };

auto cat16 = network->addConcatenation(inputTensors16, 2);

auto bottleneck_csp17 = C3(network, weightMap, *cat16->getOutput(0), get_width(512, gw), get_width(256, gw), get_depth(3, gd), false, 1, 0.5, "model.17");

// yolo layer 0

IConvolutionLayer* det0 = network->addConvolutionNd(*bottleneck_csp17->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.0.weight"], weightMap["model.24.m.0.bias"]);

auto conv18 = convBlock(network, weightMap, *bottleneck_csp17->getOutput(0), get_width(256, gw), 3, 2, 1, "model.18");

ITensor* inputTensors19[] = { conv18->getOutput(0), conv14->getOutput(0) };

auto cat19 = network->addConcatenation(inputTensors19, 2);

auto bottleneck_csp20 = C3(network, weightMap, *cat19->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.20");

//yolo layer 1

IConvolutionLayer* det1 = network->addConvolutionNd(*bottleneck_csp20->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.1.weight"], weightMap["model.24.m.1.bias"]);

auto conv21 = convBlock(network, weightMap, *bottleneck_csp20->getOutput(0), get_width(512, gw), 3, 2, 1, "model.21");

ITensor* inputTensors22[] = { conv21->getOutput(0), conv10->getOutput(0) };

auto cat22 = network->addConcatenation(inputTensors22, 2);

auto bottleneck_csp23 = C3(network, weightMap, *cat22->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.23");

IConvolutionLayer* det2 = network->addConvolutionNd(*bottleneck_csp23->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.2.weight"], weightMap["model.24.m.2.bias"]);

auto yolo = addYoLoLayer(network, weightMap, det0, det1, det2);

yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);

network->markOutput(*yolo->getOutput(0));

// Build engine

builder->setMaxBatchSize(maxBatchSize);

config->setMaxWorkspaceSize(16 * (1 << 20)); // 16MB

#if defined(USE_FP16)

config->setFlag(BuilderFlag::kFP16);

#elif defined(USE_INT8)

std::cout << "Your platform support int8: " << (builder->platformHasFastInt8() ? "true" : "false") << std::endl;

assert(builder->platformHasFastInt8());

config->setFlag(BuilderFlag::kINT8);

Int8EntropyCalibrator2* calibrator = new Int8EntropyCalibrator2(1, INPUT_W, INPUT_H, "./coco_calib/", "int8calib.table", INPUT_BLOB_NAME);

config->setInt8Calibrator(calibrator);

#endif

std::cout << "Building engine, please wait for a while..." << std::endl;

ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

std::cout << "Build engine successfully!" << std::endl;

// Don't need the network any more

network->destroy();

// Release host memory

for (auto& mem : weightMap)

{

free((void*)(mem.second.values));

}

return engine;

}

void APIToModel(unsigned int maxBatchSize, IHostMemory** modelStream, float& gd, float& gw, std::string& wts_name) {

// Create builder

IBuilder* builder = createInferBuilder(gLogger);

IBuilderConfig* config = builder->createBuilderConfig();

// Create model to populate the network, then set the outputs and create an engine

ICudaEngine* engine = build_engine(maxBatchSize, builder, config, DataType::kFLOAT, gd, gw, wts_name);

assert(engine != nullptr);

// Serialize the engine

(*modelStream) = engine->serialize();

// Close everything down

engine->destroy();

builder->destroy();

config->destroy();

}

inline void doInference(IExecutionContext& context, cudaStream_t& stream, void** buffers, float* input, float* output, int batchSize) {

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

CUDA_CHECK(cudaMemcpyAsync(buffers[0], input, batchSize * 3 * INPUT_H * INPUT_W * sizeof(float), cudaMemcpyHostToDevice, stream));

context.enqueue(batchSize, buffers, stream, nullptr);

CUDA_CHECK(cudaMemcpyAsync(output, buffers[1], batchSize * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);

}

void Detection::Initialize(const char* model_path, int num)

{

if (num < 0 || num>3) {

cout << "==================\n"

"0, yolov5s\n"

"1, yolov5m\n"

"2, yolov5l\n"

"3, yolov5x" << endl;

return;

}

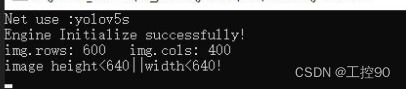

cout << "Net use :" << yolo_nets[num].netname << endl;

this->gd = yolo_nets[num].gd;

this->gw = yolo_nets[num].gw;

// Initialize the GPU engine

cudaSetDevice(DEVICE);

std::ifstream file(model_path, std::ios::binary);

if (!file.good()) {

std::cerr << "read " << model_path << " error!" << std::endl;

return;

}

file.seekg(0, file.end);

size = file.tellg(); // size of the serialized model in bytes

file.seekg(0, file.beg);

trtModelStream = new char[size]; // allocate a buffer of that size

assert(trtModelStream);

file.read(trtModelStream, size); // read the byte stream into trtModelStream

file.close();

// prepare input data ------NCHW---------------------

runtime = createInferRuntime(gLogger);

assert(runtime != nullptr);

engine = runtime->deserializeCudaEngine(trtModelStream, size);

assert(engine != nullptr);

context = engine->createExecutionContext();

assert(context != nullptr);

delete[] trtModelStream;

assert(engine->getNbBindings() == 2);

inputIndex = engine->getBindingIndex(INPUT_BLOB_NAME);

outputIndex = engine->getBindingIndex(OUTPUT_BLOB_NAME);

assert(inputIndex == 0);

assert(outputIndex == 1);

// Create GPU buffers on device

CUDA_CHECK(cudaMalloc(&buffers[inputIndex], BATCH_SIZE * 3 * INPUT_H * INPUT_W * sizeof(float)));

CUDA_CHECK(cudaMalloc(&buffers[outputIndex], BATCH_SIZE * OUTPUT_SIZE * sizeof(float)));

CUDA_CHECK(cudaStreamCreate(&stream));

std::cout << "Engine Initialize successfully!" << endl;

}
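Initialize loads the serialized engine into a buffer allocated with new[] and frees it manually. As an alternative sketch (not the author's code; the function name `read_binary_file` is hypothetical), the same file read can be written with std::vector so the buffer is released automatically and a failed read is reported as an empty result:

```cpp
#include <cassert>
#include <fstream>
#include <string>
#include <vector>

// Read a whole file into memory; returns an empty vector if it cannot be read.
std::vector<char> read_binary_file(const std::string& path) {
    // Open in binary mode, positioned at the end so tellg() reports the size.
    std::ifstream file(path, std::ios::binary | std::ios::ate);
    if (!file.good()) return {};
    const std::streamsize size = file.tellg();
    if (size <= 0) return {};
    std::vector<char> bytes(static_cast<std::size_t>(size));
    file.seekg(0, std::ios::beg);
    if (!file.read(bytes.data(), size)) return {};
    return bytes;
}
```

The resulting bytes.data() and bytes.size() could then be passed to deserializeCudaEngine in place of trtModelStream and size.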

void Detection::setClassNum(int num)

{

CLASS_NUM = num;

}

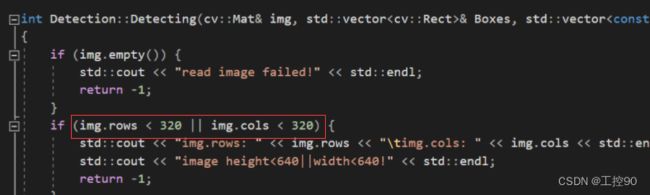

int Detection::Detecting(cv::Mat& img, std::vector<cv::Rect>& Boxes, std::vector<const char*>& ClassLables)

{

if (img.empty()) {

std::cout << "read image failed!" << std::endl;

return -1;

}

if (img.rows < 640 || img.cols < 640) {

std::cout << "img.rows: "<< img.rows <<"\timg.cols: "<< img.cols << std::endl;

std::cout << "image height<640||width<640!" << std::endl;

return -1;

}

cv::Mat pr_img = preprocess_img(img, INPUT_W, INPUT_H); // letterbox BGR to RGB

int i = 0;

for (int row = 0; row < INPUT_H; ++row) {

uchar* uc_pixel = pr_img.data + row * pr_img.step;

for (int col = 0; col < INPUT_W; ++col) {

data[i] = (float)uc_pixel[2] / 255.0;

data[i + INPUT_H * INPUT_W] = (float)uc_pixel[1] / 255.0;

data[i + 2 * INPUT_H * INPUT_W] = (float)uc_pixel[0] / 255.0;

uc_pixel += 3;

++i;

}

}

// Run inference

auto start = std::chrono::system_clock::now();

doInference(*context, stream, buffers, data, prob, BATCH_SIZE);

auto end = std::chrono::system_clock::now();

std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

std::vector<Yolo::Detection> batch_res;

nms(batch_res, &prob[0], CONF_THRESH, NMS_THRESH);

for (size_t j = 0; j < batch_res.size(); j++) {

cv::Rect r = get_rect(img, batch_res[j].bbox);

Boxes.push_back(r);

ClassLables.push_back(classes[(int)batch_res[j].class_id]);

cv::rectangle(img, r, cv::Scalar(0x27, 0xC1, 0x36), 2);

cv::putText(

img,

classes[(int)batch_res[j].class_id],

cv::Point(r.x, r.y - 2),

cv::FONT_HERSHEY_COMPLEX,

1.8,

cv::Scalar(0xFF, 0xFF, 0xFF),

2

);

}

return 0;

}
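The pixel loop in Detecting turns an interleaved 8-bit image into three planar float channels scaled to [0, 1]. The standalone sketch below shows the same indexing on a raw buffer, without OpenCV. It assumes the preprocessed image is still in OpenCV's default BGR order and that rows are tightly packed; the function name `bgr_hwc_to_rgb_chw` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert interleaved BGR bytes (H x W x 3) to planar RGB floats (3 x H x W).
std::vector<float> bgr_hwc_to_rgb_chw(const std::vector<std::uint8_t>& bgr,
                                      int height, int width) {
    assert(bgr.size() == static_cast<std::size_t>(height) * width * 3);
    const std::size_t plane = static_cast<std::size_t>(height) * width;
    std::vector<float> chw(3 * plane);
    for (std::size_t i = 0; i < plane; ++i) {
        const std::uint8_t* px = &bgr[i * 3];
        chw[i]             = px[2] / 255.0f;  // R plane
        chw[i + plane]     = px[1] / 255.0f;  // G plane
        chw[i + 2 * plane] = px[0] / 255.0f;  // B plane
    }
    return chw;
}
```

Each output plane holds height * width values, which is why the original loop writes at offsets i, i + INPUT_H * INPUT_W, and i + 2 * INPUT_H * INPUT_W.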

Detection::Detection() {}

Detection::~Detection()

{

// Release stream and buffers

cudaStreamDestroy(stream);

CUDA_CHECK(cudaFree(buffers[inputIndex]));

CUDA_CHECK(cudaFree(buffers[outputIndex]));

// Destroy the engine

context->destroy();

engine->destroy();

runtime->destroy();

}

Connect::Connect()

{}

Connect::~Connect()

{}

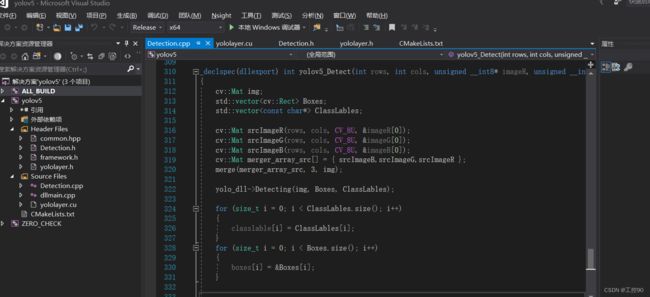

YOLOV5* Connect::Create_YOLOV5_Object()

{

return new Detection; // note: returns the concrete Detection through the YOLOV5 base pointer

}

void Connect::Delete_YOLOV5_Object(YOLOV5* _bp)

{

if (_bp)

delete _bp;

}
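Detection.cpp relies on an abstract YOLOV5 interface and a Connect factory declared in Detection.h. As a rough sketch of that pattern (not the original header: the YOLOV5_API macro, the YOLOV5_BUILD_DLL and YOLOV5_USE_DLL switches, the Ready() helper, the simplified signatures, and the stub bodies are all assumptions made so the example compiles without TensorRT or OpenCV), the exported surface might be organized like this:

```cpp
#include <cassert>

// Export/import switch; the macro names are hypothetical.
#if defined(_WIN32) && defined(YOLOV5_BUILD_DLL)
#  define YOLOV5_API __declspec(dllexport)
#elif defined(_WIN32) && defined(YOLOV5_USE_DLL)
#  define YOLOV5_API __declspec(dllimport)
#else
#  define YOLOV5_API
#endif

// Abstract interface seen by callers of the library.
class YOLOV5 {
public:
    virtual ~YOLOV5() = default;  // lets delete through a base pointer run the derived destructor
    virtual void Initialize(const char* model_path, int num) = 0;
    virtual bool Ready() const = 0;  // hypothetical helper for this sketch
};

// Concrete implementation kept inside the library (stub: no engine is loaded).
class Detection : public YOLOV5 {
public:
    void Initialize(const char* model_path, int num) override {
        ready_ = model_path != nullptr && num >= 0 && num <= 3;
    }
    bool Ready() const override { return ready_; }
private:
    bool ready_ = false;
};

// Factory that creates and destroys objects on the library side.
class YOLOV5_API Connect {
public:
    YOLOV5* Create_YOLOV5_Object() { return new Detection; }
    void Delete_YOLOV5_Object(YOLOV5* p) { delete p; }
};
```

A caller would create a Connect, obtain a YOLOV5* from Create_YOLOV5_Object(), call Initialize and the detection method on it, and finally hand the pointer back to Delete_YOLOV5_Object(). Pairing creation and deletion inside the library keeps allocation and deallocation on the same heap, which matters when the DLL and the caller link different C runtime versions.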

II. Building

1. Edit CMakeLists.txt so that the build target is a dynamic-link library instead of an executable.

# Before

add_executable(yolov5 ${PROJECT_SOURCE_DIR}/yolov5.cpp ${PROJECT_SOURCE_DIR}/common.hpp ${PROJECT_SOURCE_DIR}/yololayer.cu ${PROJECT_SOURCE_DIR}/yololayer.h)

# After

add_library(yolov5 SHARED ${PROJECT_SOURCE_DIR}/common.hpp ${PROJECT_SOURCE_DIR}/yololayer.cu ${PROJECT_SOURCE_DIR}/preprocess.cu ${PROJECT_SOURCE_DIR}/yololayer.h "Detection.h" "Detection.cpp" "framework.h" "dllmain.cpp" "preprocess.h" )

2. Create a new folder named build_dll and open CMake.

3. Click Configure, Generate, and Open Project in turn, then build the Release and Debug configurations:

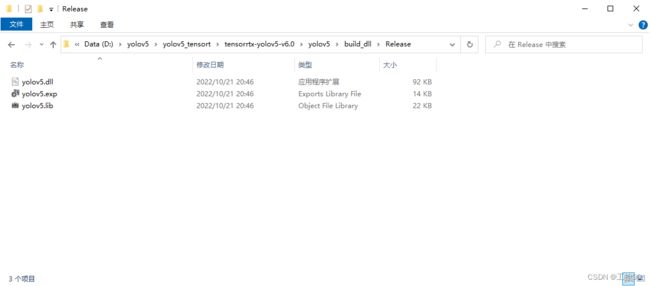

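After the build finishes, yolov5.dll is generated in the corresponding Release folder.

III. Testing

Create your own C++ project in Visual Studio.

Copy the three files generated above, together with the yolov5.engine weights file and a test image, into the project directory.

Copy the following code into your project and uncomment the parts you need for your test. Both image and camera tests are supported.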

#pragma once

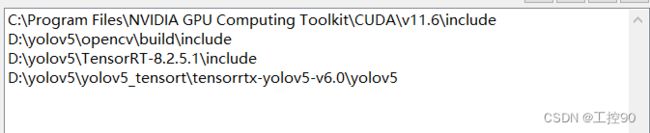

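#include

Add the include directories:

Add the library directories:

Add the following libraries to the linker input (opencv_world460d.lib is the Debug build of OpenCV, so link it in the Debug configuration and opencv_world460.lib in the Release configuration):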

opencv_world460.lib

opencv_world460d.lib

cudart.lib

cudart_static.lib

yolov5.lib

nvinfer.lib

nvinfer_plugin.lib

nvonnxparser.lib

nvparsers.lib

In Detection.cpp, adjust the image-size check in the Detecting function to suit your input images.
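If preprocess_img letterboxes the image (scales it to fit INPUT_W x INPUT_H while keeping the aspect ratio, then pads the remainder), inputs smaller than 640 pixels can in principle be handled as well, so the threshold is a choice rather than a hard limit. The sketch below shows only the letterbox geometry; `Letterbox` and `letterbox_geometry` are hypothetical names, and whether your preprocess_img behaves this way depends on the tensorrtx version you use.

```cpp
#include <cassert>

// Placement of a scaled image inside a fixed-size network input.
struct Letterbox {
    int w, h;  // size of the scaled image
    int x, y;  // top-left offset of the scaled image inside the padded input
};

// Fit (img_w x img_h) into (in_w x in_h) while preserving the aspect ratio.
inline Letterbox letterbox_geometry(int img_w, int img_h, int in_w, int in_h) {
    assert(img_w > 0 && img_h > 0 && in_w > 0 && in_h > 0);
    const double r_w = static_cast<double>(in_w) / img_w;
    const double r_h = static_cast<double>(in_h) / img_h;
    Letterbox lb{};
    if (r_h > r_w) {  // width is the limiting side: pad top and bottom
        lb.w = in_w;
        lb.h = static_cast<int>(r_w * img_h);
        lb.x = 0;
        lb.y = (in_h - lb.h) / 2;
    } else {          // height is the limiting side: pad left and right
        lb.w = static_cast<int>(r_h * img_w);
        lb.h = in_h;
        lb.x = (in_w - lb.w) / 2;
        lb.y = 0;
    }
    return lb;
}
```

For a 1280 x 720 frame and a 640 x 640 network input, this gives a 640 x 360 scaled image placed 140 pixels from the top.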

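Also watch out for version mismatches when packaging. For example, if your YOLOv5 source is v4.0 but the tensorrtx code you downloaded targets v6.0, some function definitions differ and must be adjusted accordingly.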