Common Kubernetes Workload Controllers (Deployment, DaemonSet, Job, CronJob)

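Common workload controllers

- What is a workload controller
- Deployment
  - Deployment workflow
    - Deploying a Deployment
    - Upgrading a Deployment
      - Application upgrade (three ways to update the image)
      - Rolling upgrade
        - Example
    - Horizontal scaling
    - Rolling back a Deployment
    - Taking a Deployment offline
  - Deployment and ReplicaSet
- DaemonSet
- Job
- CronJob
- Errors encountered
  - First problem
    - Solution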

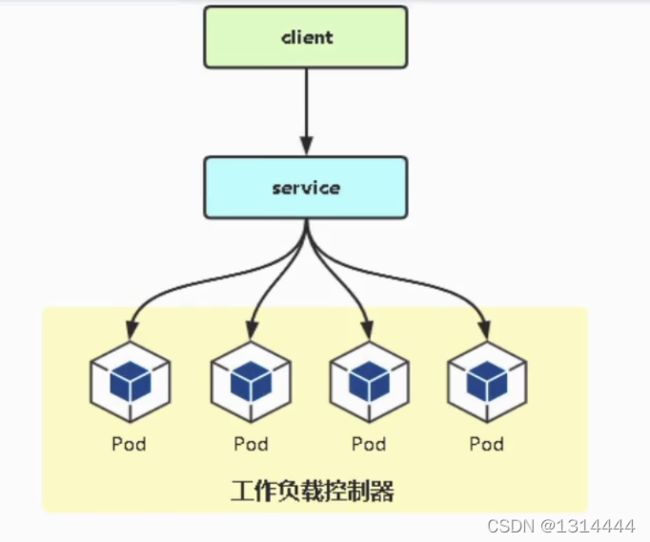

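What Is a Workload Controller?

A workload controller is a higher-level Kubernetes abstraction that deploys and manages Pods on your behalf.

Common workload controllers

- Deployment: deploys stateless applications

- StatefulSet: deploys stateful applications

- DaemonSet: ensures every Node runs a copy of the same Pod

- Job: one-off tasks

- CronJob: scheduled tasks

What controllers do

- Manage Pod objects

- Associate with Pods through labels

- Handle Pod operations such as rolling updates, scaling, replica management, and maintaining Pod state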

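Deployment

What a Deployment does

- Manages Pods and ReplicaSets

- Provides rollout, replica count settings, rolling upgrades, and rollbacks

- Supports declarative updates, for example changing only the image

Use cases

- Websites, APIs, microservices

Deployment Workflow

Deploying a Deployment

Deploy an image

Method 1: kubectl apply -f xxx.yaml

Method 2: kubectl create deployment web --image=nginx: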

[root@master test]# cat deploy.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: test

namespace: default

spec:

replicas: 3

selector:

matchLabels:

app: b1

template:

metadata:

labels:

app: b1

spec:

containers:

- name: b1

image: busybox

imagePullPolicy: IfNotPresent

command: ["bin/sh","-c","sleep 9000"]

[root@master test]# kubectl apply -f deploy.yml

deployment.apps/test created

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

test-7964df7b4-bdbkx 1/1 Running 0 8s

test-7964df7b4-l67kj 1/1 Running 0 8s

test-7964df7b4-zwhhf 1/1 Running 0 8s

[root@master test]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-7964df7b4-bdbkx 1/1 Running 0 50s 10.244.1.137 node1 <none> <none>

test-7964df7b4-l67kj 1/1 Running 0 50s 10.244.1.139 node1 <none> <none>

test-7964df7b4-zwhhf 1/1 Running 0 50s 10.244.1.138 node1 <none> <none>
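YAML is indentation-sensitive, so it can help to see a Deployment manifest with its nesting intact. The sketch below wraps a manifest equivalent in shape to the one above in a small shell function that prints it to stdout. The function name `render_deployment` and its three positional arguments are hypothetical names introduced here for illustration; they are not part of kubectl. For simplicity the sketch reuses the Deployment name as the `app` label.

```shell
#!/bin/sh
# render_deployment NAME IMAGE REPLICAS
# Prints a Deployment manifest to stdout. The function name and its
# arguments are illustrative assumptions, not a kubectl feature.
render_deployment() {
  name=$1
  image=$2
  replicas=$3
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
  namespace: default
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: ${image}
        imagePullPolicy: IfNotPresent
        command: ["/bin/sh", "-c", "sleep 9000"]
EOF
}

# Print the manifest. To create it on a cluster, pipe the output to:
#   kubectl apply -f -
render_deployment test busybox 3
```

Note that `selector.matchLabels` and `template.metadata.labels` must agree; this is how the Deployment finds the Pods it owns.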

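Upgrading a Deployment

Application upgrade (three ways to update the image)

kubectl apply -f xxx.yaml

kubectl set image deployment/web nginx=nginx

kubectl edit deployment/web # not recommended, because the change is not captured in any manifest file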

[root@master test]# kubectl edit deployment/test

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: apps/v1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "1"

kubectl.kubernetes.io/last-applied-configuration: |

creationTimestamp: "2021-12-24T13:41:04Z"

generation: 1

name: test

namespace: default

resourceVersion: "157409"

uid: 0742158a-a570-4dfa-b6ba-561b806b69f7

spec:

progressDeadlineSeconds: 600

replicas: 3

revisionHistoryLimit: 10

selector:

matchLabels:

app: b1

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

app: b1

spec:

containers:

- command:

- bin/sh

- -c

- sleep 9000

image: busybox

imagePullPolicy: IfNotPresent

name: b1

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

status:

availableReplicas: 3

conditions:

- lastTransitionTime: "2021-12-24T13:41:06Z"

lastUpdateTime: "2021-12-24T13:41:06Z"

message: Deployment has minimum availability.

reason: MinimumReplicasAvailable

status: "True"

type: Available

- lastTransitionTime: "2021-12-24T13:41:04Z"

lastUpdateTime: "2021-12-24T13:41:06Z"

message: ReplicaSet "test-7964df7b4" has successfully progressed.

reason: NewReplicaSetAvailable

status: "True"

type: Progressing

observedGeneration: 1

readyReplicas: 3

replicas: 3

updatedReplicas: 3

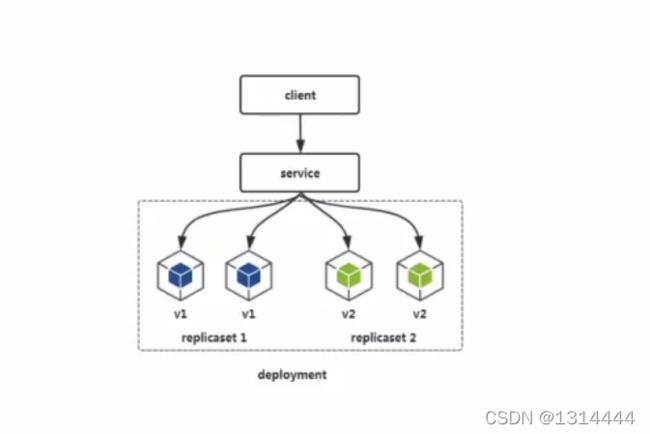

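Rolling Upgrade

Rolling upgrade is the default Pod upgrade strategy in Kubernetes. It gradually replaces old-version Pods with new-version Pods, so a release can complete with zero downtime and without users noticing.

How Kubernetes implements a rolling upgrade

- 1 Deployment

- 2 ReplicaSets (one for the old version, one for the new)

Rolling update strategy

- maxSurge: the maximum number of Pods that may exist above the desired count (replicas) during the update. With the default of 25%, at most 25% more Pods than replicas are started.

- maxUnavailable: the maximum number of Pods that may be unavailable during the update. With the default of 25%, at least 75% of the Pods stay available.

When these values are percentages, Kubernetes converts them to whole Pod counts based on replicas: maxSurge is rounded up and maxUnavailable is rounded down.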
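The percentage-to-Pod-count conversion can be worked through with integer arithmetic. The following is a minimal sketch, assuming the rule that a maxSurge percentage is rounded up and a maxUnavailable percentage is rounded down; the function names `max_surge` and `max_unavailable` are illustrative helpers, not kubectl commands.

```shell
#!/bin/sh
# Convert rollingUpdate percentages into Pod counts for a given replica count.
# max_surge rounds up, max_unavailable rounds down.
# Both function names are illustrative; they are not kubectl commands.

max_surge() {            # usage: max_surge REPLICAS PERCENT
  echo $(( ($1 * $2 + 99) / 100 ))
}

max_unavailable() {      # usage: max_unavailable REPLICAS PERCENT
  echo $(( $1 * $2 / 100 ))
}

# Show the defaults (25%) for a few replica counts.
for replicas in 3 4 5; do
  echo "replicas=$replicas surge=$(max_surge "$replicas" 25) unavailable=$(max_unavailable "$replicas" 25)"
done
```

For replicas: 5 with both values at 49%, this gives a maxSurge of 3 and a maxUnavailable of 2, so up to 8 Pods may exist and at least 3 stay available during the update.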

Example

[root@master test]# cat test.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: web

namespace: default

spec:

replicas: 3

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: web

image: 1314444/httpd:v0.1

imagePullPolicy: IfNotPresent

[root@master test]# kubectl apply -f test.yml

deployment.apps/web created

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-c45fdccd9-shmdj 1/1 Running 0 2m1s

web-c45fdccd9-xthqz 1/1 Running 0 2m1s

web-c45fdccd9-ztzk7 1/1 Running 0 2m1s

// Update

[root@master test]# cat test.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: web

namespace: default

spec:

replicas: 4

strategy:

rollingUpdate:

maxSurge: 25% # the default is 25%, so this line can be omitted

maxUnavailable: 25% # the default is 25%, so this line can be omitted

type: RollingUpdate

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: web

image: 1314444/httpd:v0.2

imagePullPolicy: IfNotPresent

[root@master test]# kubectl apply -f test.yml

deployment.apps/web configured

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-c45fdccd9-bjt6r 1/1 Running 0 2s

web-c45fdccd9-shmdj 1/1 Running 0 3m

web-c45fdccd9-xthqz 1/1 Running 0 3m

web-c45fdccd9-ztzk7 1/1 Running 0 3m

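Percentages in rollingUpdate are converted to whole Pods based on the replica count: maxSurge rounds up, maxUnavailable rounds down.

------- Even replica count -------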

[root@master test]# cat test.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: web

namespace: default

spec:

replicas: 4

strategy:

rollingUpdate:

maxSurge: 50%

maxUnavailable: 50%

type: RollingUpdate

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: web

image: 1314444/httpd:v0.1

imagePullPolicy: IfNotPresent

[root@master test]# kubectl apply -f test.yml

deployment.apps/web configured

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-865bffff44-ft6xg 1/1 Running 0 3s

web-865bffff44-gdjdj 1/1 Running 0 3s

web-865bffff44-rgwv6 1/1 Running 0 3s

web-865bffff44-whdhh 1/1 Running 0 3s

web-c45fdccd9-bjt6r 1/1 Terminating 0 9m11s

web-c45fdccd9-shmdj 1/1 Terminating 0 12m

web-c45fdccd9-xthqz 1/1 Terminating 0 12m

web-c45fdccd9-ztzk7 1/1 Terminating 0 12m

------- Odd replica count -------

[root@master test]# cat test.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: web

namespace: default

spec:

replicas: 5

strategy:

rollingUpdate:

maxSurge: 50%

maxUnavailable: 50%

type: RollingUpdate

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: web

image: 1314444/httpd:v0.2

imagePullPolicy: IfNotPresent

[root@master test]# kubectl apply -f test.yml

deployment.apps/web configured

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-865bffff44-ft6xg 1/1 Terminating 0 4m4s

web-865bffff44-gdjdj 1/1 Terminating 0 4m4s

web-865bffff44-nqskm 0/1 Terminating 0 2s

web-865bffff44-rgwv6 1/1 Terminating 0 4m4s

web-865bffff44-whdhh 1/1 Running 0 4m4s

web-c45fdccd9-6s8vr 1/1 Running 0 2s

web-c45fdccd9-jgcgm 0/1 ContainerCreating 0 2s

web-c45fdccd9-qmw2k 0/1 ContainerCreating 0 2s

web-c45fdccd9-wdnvj 0/1 ContainerCreating 0 2s

web-c45fdccd9-zf4mt 1/1 Running 0 2s

------- Rounding -------

[root@master test]# cat test.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: web

namespace: default

spec:

replicas: 5

strategy:

rollingUpdate:

maxSurge: 49%

maxUnavailable: 49%

type: RollingUpdate

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: web

image: 1314444/httpd:v0.1

imagePullPolicy: IfNotPresent

[root@master test]# kubectl apply -f test.yml

deployment.apps/web configured

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-865bffff44-89grr 1/1 Running 0 1s

web-865bffff44-d2ggx 0/1 ContainerCreating 0 1s

web-865bffff44-fzg6s 1/1 Running 0 1s

web-865bffff44-sv27k 1/1 Running 0 1s

web-865bffff44-w2jdq 1/1 Running 0 1s

web-c45fdccd9-6s8vr 1/1 Terminating 0 5m20s

web-c45fdccd9-jgcgm 1/1 Terminating 0 5m20s

web-c45fdccd9-qmw2k 1/1 Terminating 0 5m20s

web-c45fdccd9-wdnvj 1/1 Terminating 0 5m20s

web-c45fdccd9-zf4mt 1/1 Terminating 0 5m20s

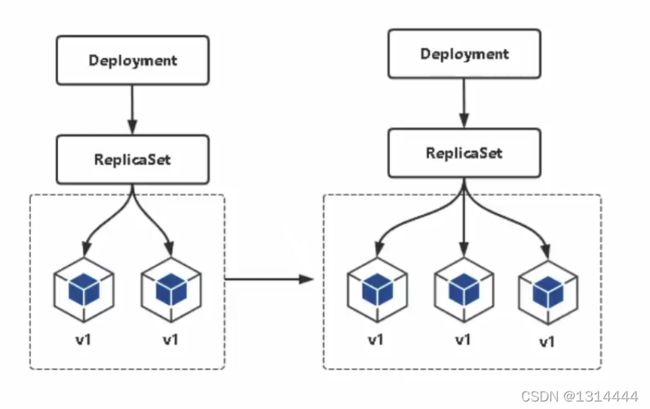

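Horizontal Scaling

Horizontal scaling runs more instances to handle more concurrent requests. There are two ways to do it:

- Change the replicas value in the YAML file, then apply it again

- kubectl scale deployment web --replicas=10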

[root@master test]# cat test.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: web

namespace: default

spec:

replicas: 3 # number of replicas

strategy:

rollingUpdate:

maxSurge: 49%

maxUnavailable: 49%

type: RollingUpdate

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: web

image: 1314444/httpd:v0.2

imagePullPolicy: IfNotPresent

[root@master test]# kubectl apply -f test.yml

deployment.apps/web configured

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-865bffff44-89grr 1/1 Terminating 0 8m35s

web-865bffff44-d2ggx 1/1 Terminating 0 8m35s

web-865bffff44-fzg6s 1/1 Running 0 8m35s

web-865bffff44-sv27k 1/1 Running 0 8m35s

web-865bffff44-w2jdq 1/1 Running 0 8m35s

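Rolling Back a Deployment

Roll back to restore a working version after a failed release.

kubectl rollout history deployment/web # list the release history

kubectl rollout undo deployment/web # roll back to the previous revision

kubectl rollout undo deployment/web --to-revision=2 # roll back to a specific revision

Note: a rollback redeploys the state of an earlier rollout, that is, the Pod template recorded for that revision.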
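To see which revision a plain `kubectl rollout undo` would return to, the history listing can be read programmatically. The sketch below is an illustration only: `previous_revision` is a hypothetical helper that reads `kubectl rollout history` output on stdin and prints the second-to-last revision number. It is fed sample text here so that it runs without a cluster.

```shell
#!/bin/sh
# previous_revision: read `kubectl rollout history` output on stdin and print
# the second-to-last revision number, i.e. the one a plain
# `kubectl rollout undo` would go back to. The function name is illustrative.
previous_revision() {
  awk '$1 ~ /^[0-9]+$/ { prev = last; last = $1 } END { if (prev != "") print prev }'
}

# Sample input in the shape of a history listing; on a live cluster use:
#   kubectl rollout history deployment/web | previous_revision
printf '%s\n' 'deployment.apps/web' 'REVISION CHANGE-CAUSE' '3 <none>' '4 <none>' | previous_revision
```

If the history contains only one revision, the helper prints nothing, which matches the fact that there is nothing to roll back to.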

// List the release history

[root@master test]# kubectl rollout history deploy/web

deployment.apps/web

REVISION CHANGE-CAUSE

3 <none>

4 <none>

// Roll back to the previous revision

[root@master test]# cat test.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: web

namespace: default

spec:

replicas: 3

strategy:

rollingUpdate:

maxSurge: 49%

maxUnavailable: 49%

type: RollingUpdate

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: web

image: 1314444/httpd:v0.1

imagePullPolicy: IfNotPresent

[root@master test]# kubectl apply -f test.yml

deployment.apps/web configured

[root@master test]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-865bffff44-hpqw9 1/1 Running 0 67s 10.244.1.173 node1 <none> <none>

web-865bffff44-nwbx4 1/1 Running 0 67s 10.244.1.174 node1 <none> <none>

web-865bffff44-w9568 1/1 Running 0 67s 10.244.1.172 node1 <none> <none>

[root@master test]# curl 10.244.1.174

test page on jjyy

[root@master test]# kubectl rollout undo deploy/web

deployment.apps/web rolled back

[root@master test]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-865bffff44-hpqw9 1/1 Terminating 0 67s 10.244.1.173 node1 <none> <none>

web-865bffff44-nwbx4 1/1 Terminating 0 67s 10.244.1.174 node1 <none> <none>

web-865bffff44-w9568 1/1 Terminating 0 67s 10.244.1.172 node1 <none> <none>

web-c45fdccd9-8cm86 1/1 Running 0 8s 10.244.1.175 node1 <none> <none>

web-c45fdccd9-bhndv 1/1 Running 0 8s 10.244.1.176 node1 <none> <none>

web-c45fdccd9-k8c7b 1/1 Running 0 8s 10.244.1.177 node1 <none> <none>

[root@master test]# curl 10.244.1.174

test page on jjyy

[root@master test]# curl 10.244.1.177

test page on 666

Taking a Deployment Offline

- kubectl delete deploy/web

- kubectl delete svc/web

// Delete using the manifest file (recommended)

[root@master test]# kubectl delete -f test.yml

deployment.apps "web" deleted

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-865bffff44-9sbnl 1/1 Terminating 0 5m26s

web-865bffff44-b44nb 1/1 Terminating 0 5m26s

web-865bffff44-tvzvw 1/1 Terminating 0 5m26s

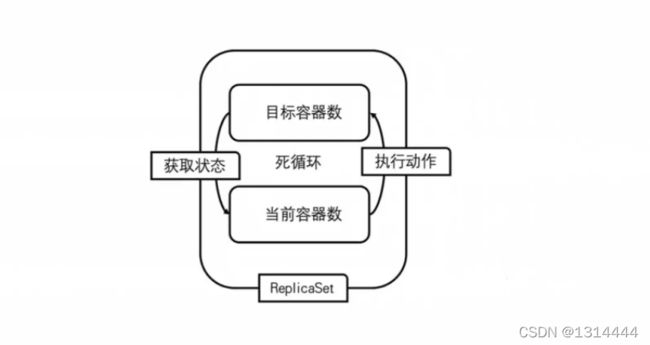

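Deployment and ReplicaSet

What the ReplicaSet controller does

- Manages the number of Pod replicas by continuously comparing the current Pod count with the desired count

- Serves as the release record: every Deployment rollout creates a ReplicaSet, which is what makes rollback possible

- Runs one Pod by default (replicas defaults to 1)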

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-865bffff44-49zns 1/1 Running 0 42s

web-865bffff44-7g7b5 1/1 Running 0 42s

web-865bffff44-rq59f 1/1 Running 0 42s

[root@master test]# kubectl get rs # list ReplicaSets (DESIRED, CURRENT, and READY show the replica counts)

NAME DESIRED CURRENT READY AGE

web-865bffff44 3 3 3 3s

[root@master test]# kubectl rollout history deploy web # each revision corresponds to one ReplicaSet

deployment.apps/web

REVISION CHANGE-CAUSE

1 <none>

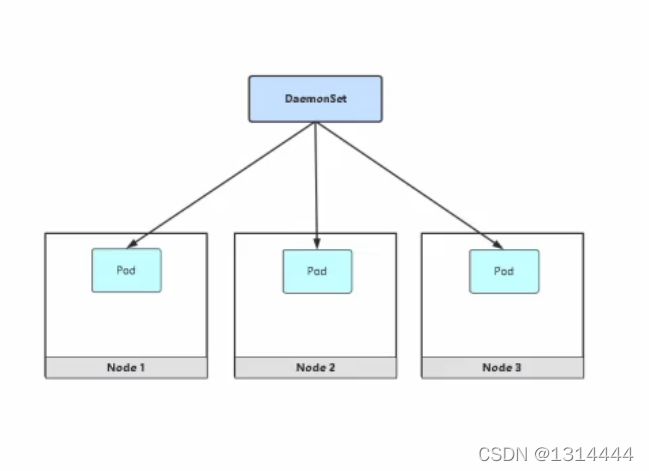
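The Pod names in the listings follow the pattern Deployment name, then pod-template hash, then a random suffix, so the owning ReplicaSet can be read off a Pod name. The sketch below shows this with shell parameter expansion. `replicaset_of` and `deployment_of` are hypothetical helpers that rely only on this naming pattern; the authoritative owner of a Pod is recorded in its `metadata.ownerReferences`.

```shell
#!/bin/sh
# replicaset_of POD_NAME: strip the trailing "-<random suffix>" from a Pod
# name to get the name of the ReplicaSet that created it.
# Illustrative helper; it relies only on the observed naming pattern.
replicaset_of() {
  echo "${1%-*}"
}

# deployment_of POD_NAME: strip two trailing segments (pod suffix and
# pod-template hash) to get the Deployment name.
deployment_of() {
  rs=${1%-*}
  echo "${rs%-*}"
}

replicaset_of web-865bffff44-49zns   # prints web-865bffff44
deployment_of web-865bffff44-49zns   # prints web
```

On a live cluster, `kubectl get pod web-865bffff44-49zns -o jsonpath='{.metadata.ownerReferences[0].name}'` reports the owner without depending on the name format.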

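DaemonSet

What a DaemonSet does

- Runs one Pod on every Node

- Automatically runs a Pod on Nodes that join the cluster later

Use cases

- Network components (kube-proxy, Calico) and other per-node agents

Example: deploy a log collector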

[root@master test]# cat daemon.yml

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: filebeat

namespace: kube-system

spec:

selector:

matchLabels:

app: filebeat

template:

metadata:

labels:

app: filebeat

spec:

containers:

- name: log

image: elastic/filebeat:7.16.2

imagePullPolicy: IfNotPresent

[root@master test]# kubectl apply -f daemon.yml

daemonset.apps/filebeat created

[root@master test]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-ptq5m 1/1 Running 14 6d7h

coredns-7f89b7bc75-v6chh 1/1 Running 14 6d7h

etcd-master 1/1 Running 16 6d7h

filebeat-4l848 0/1 ContainerCreating 0 8s # the first image pull is slow

filebeat-wk9dt 0/1 ContainerCreating 0 8s

kube-apiserver-master 1/1 Running 16 6d7h

kube-controller-manager-master 1/1 Running 16 6d7h

kube-flannel-ds-cchk6 1/1 Running 12 6d6h

kube-flannel-ds-d2wpq 1/1 Running 14 6d6h

kube-flannel-ds-z6f85 1/1 Running 0 160m

kube-proxy-6f99q 1/1 Running 13 6d7h

kube-proxy-9z78l 1/1 Running 11 6d7h

kube-proxy-gz4bs 1/1 Running 16 6d7h

kube-scheduler-master 1/1 Running 16 6d7h

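// The DaemonSet Pods are created on every node except master: kubeadm taints the master node NoSchedule, and this manifest declares no toleration for that taint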

[root@master test]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-7f89b7bc75-ptq5m 1/1 Running 14 6d7h 10.244.0.38 master <none> <none>

coredns-7f89b7bc75-v6chh 1/1 Running 14 6d7h 10.244.0.39 master <none> <none>

etcd-master 1/1 Running 16 6d7h 192.168.129.250 master <none> <none>

filebeat-4l848 1/1 Running 0 3m5s 10.244.2.59 node2 <none> <none>

filebeat-wk9dt 1/1 Running 0 3m5s 10.244.1.183 node1 <none> <none>

kube-apiserver-master 1/1 Running 16 6d7h 192.168.129.250 master <none> <none>

kube-controller-manager-master 1/1 Running 16 6d7h 192.168.129.250 master <none> <none>

kube-flannel-ds-cchk6 1/1 Running 12 6d6h 192.168.129.136 node2 <none> <none>

kube-flannel-ds-d2wpq 1/1 Running 14 6d6h 192.168.129.250 master <none> <none>

kube-flannel-ds-z6f85 1/1 Running 0 163m 192.168.129.135 node1 <none> <none>

kube-proxy-6f99q 1/1 Running 13 6d7h 192.168.129.136 node2 <none> <none>

kube-proxy-9z78l 1/1 Running 11 6d7h 192.168.129.135 node1 <none> <none>

kube-proxy-gz4bs 1/1 Running 16 6d7h 192.168.129.250 master <none> <none>

kube-scheduler-master 1/1 Running 16 6d7h 192.168.129.250 master <none> <none>
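In the listing, filebeat runs on node1 and node2 only, while kube-proxy and kube-flannel also run on master. A likely reason is that the master node carries a NoSchedule taint that the filebeat manifest does not tolerate. The sketch below prints a tolerations fragment that could be added under `spec.template.spec` of the DaemonSet if a Pod on the master is wanted. The helper name `print_master_toleration` is hypothetical, and the taint key is an assumption based on what kubeadm v1.20 applies; `kubectl describe node master` shows the taints actually present.

```shell
#!/bin/sh
# print_master_toleration: emit a tolerations fragment that would sit under
# spec.template.spec of the DaemonSet. The function name is illustrative.
# Assumption: the taint key below is the one kubeadm v1.20 puts on the
# master; newer releases use node-role.kubernetes.io/control-plane instead.
print_master_toleration() {
  cat <<'EOF'
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
EOF
}

print_master_toleration
```

Whether a log collector should run on the control-plane node is a design choice; many clusters leave the taint untolerated on purpose.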

Adding a New Node

// Check the current nodes

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 6d22h v1.20.0

node1 Ready <none> 6d22h v1.20.0

node2 Ready <none> 6d22h v1.20.0

// Prepare the new node

// Comment out the swap entry in /etc/fstab

[root@master ~]# sed -i.bak '/swap/s/^/#/' /etc/fstab

// Disable the firewall

[root@node3 ~]# systemctl disable --now firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@node3 ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

// Reboot

[root@node3 ~]# reboot

// Configure the yum repository

[root@node3 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-8.repo

[root@node3 ~]# sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

[root@node3 ~]# yum clean all && yum makecache

// Synchronize time

[root@node3 ~]# yum -y install chrony

[root@node3 ~]# sed -i 's/2.centos.pool.ntp.org/time1.aliyun.com/' /etc/chrony.conf

[root@node3 ~]# systemctl enable --now chronyd

[root@node3 ~]# date

2021年 12月 25日 星期六 14:51:13 CST

// Install Docker

[root@node3 ~]# yum -y install wget

[root@node3 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@node3 ~]# yum -y install docker-ce

[root@node3 ~]# systemctl enable --now docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@node3 ~]# docker --version

Docker version 20.10.12, build e91ed57

[root@master ~]# ls /etc/docker/

key.json

// Configure a registry mirror to speed up image pulls

[root@master ~]# cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

// Add the Aliyun Kubernetes YUM repository

[root@node3 ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@node3 ~]# yum clean all && yum makecache

// Install kubeadm, kubelet, and kubectl; releases are frequent, so pin the version here

[root@node3 ~]# yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

[root@node3 ~]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@node3 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: inactive (dead)

// Add hostname resolution on the master

[root@master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.129.250 master master.example.com

192.168.129.135 node1 node1.example.com

192.168.129.136 node2 node2.example.com

192.168.129.205 node3 node3.example.com

// Set up passwordless SSH login

[root@master ~]# ssh-copy-id node3

// Join the cluster

// A new token has to be generated with kubeadm at this point

[root@master ~]# token=$(kubeadm token generate)

[root@master ~]# kubeadm token create $token --print-join-command --ttl=0

kubeadm join 192.168.129.250:6443 --token k1m9je.bh5l7nddlw548eva --discovery-token-ca-cert-hash sha256:f6be891c6cd273a9283a6c05ca795811f7350afa2b5072990a294b7070ff9a4f

[root@node3 ~]# kubeadm join 192.168.129.250:6443 --token k1m9je.bh5l7nddlw548eva --discovery-token-ca-cert-hash sha256:f6be891c6cd273a9283a6c05ca795811f7350afa2b5072990a294b7070ff9a4f

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.12. Latest validated version: 19.03

[WARNING Hostname]: hostname "node3" could not be reached

[WARNING Hostname]: hostname "node3": lookup node3 on 114.114.114.114:53: no such host

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 7d v1.20.0

node1 Ready <none> 7d v1.20.0

node2 Ready <none> 7d v1.20.0

node3 Ready <none> 57s v1.20.0

// A Pod is created on the newly joined node

[root@master ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-7f89b7bc75-4qsdc 1/1 Running 0 41m 10.244.1.218 node1 <none> <none>

coredns-7f89b7bc75-zx59r 1/1 Running 0 41m 10.244.1.219 node1 <none> <none>

etcd-master 1/1 Running 17 7d 192.168.129.250 master <none> <none>

filebeat-97ch5 1/1 Running 0 140m 10.244.1.217 node1 <none> <none>

filebeat-nk4jb 1/1 Running 0 54s 10.244.4.2 node3 <none> <none>

filebeat-qbmjk 1/1 Running 0 140m 10.244.2.60 node2 <none> <none>

kube-apiserver-master 1/1 Running 17 7d 192.168.129.250 master <none> <none>

// Delete the DaemonSet

[root@master ~]# kubectl delete daemonset/filebeat -n kube-system

Job

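Batch tasks come in two kinds: ordinary tasks (Job) and scheduled tasks (CronJob). A Job runs once to completion.

Use cases: offline data processing, video decoding, and similar workloads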

[root@master test]# cat job.yml

apiVersion: batch/v1

kind: Job

metadata:

name: b1

spec:

template:

spec:

containers:

- name: b1

image: busybox

imagePullPolicy: IfNotPresent

command: ["/bin/sh", "-c", "echo hello world"]

restartPolicy: Never

backoffLimit: 2 # number of retries before the Job is marked as failed

[root@master test]# kubectl apply -f job.yml

job.batch/b1 created

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

b1-hw5ls 0/1 ContainerCannotRun 0 2s

b1-v7th8 0/1 ContainerCreating 0 1s

[root@master test]# kubectl describe job/b1

Name: b1

Namespace: default

Selector: controller-uid=8850b237-a360-405e-87e8-f5c83254e741

Labels: controller-uid=8850b237-a360-405e-87e8-f5c83254e741

job-name=b1

Annotations: <none>

Parallelism: 1

Completions: 1

Start Time: Fri, 24 Dec 2021 23:51:13 +0800

Pods Statuses: 1 Running / 0 Succeeded / 1 Failed

Pod Template:

Labels: controller-uid=8850b237-a360-405e-87e8-f5c83254e741

job-name=b1

Containers:

b1:

Image: busybox

Port: <none>

Host Port: <none>

Command:

/bin/sh

-c

echo hello world

Environment: <none>

Mounts: <none>

Volumes: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 6s job-controller Created pod: b1-hw5ls

Normal SuccessfulCreate 5s job-controller Created pod: b1-v7th8

CronJob

A CronJob runs tasks on a schedule, like crontab on Linux.

Use cases: notifications, backups

[root@master test]# cat cronjob.yml

apiVersion: batch/v1beta1

kind: CronJob

metadata:

name: hello

spec:

schedule: "*/1 * * * *"

jobTemplate:

spec:

template:

spec:

containers:

- name: hello

image: busybox

imagePullPolicy: IfNotPresent

command:

- /bin/sh

- "-c"

- "date;echo Hello world"

restartPolicy: OnFailure

[root@master test]# kubectl apply -f cronjob.yml

cronjob.batch/hello created

[root@master test]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-1640362320-cvdhv 0/1 Completed 0 60s

[root@master test]# kubectl get pod # a new Pod appears every minute

NAME READY STATUS RESTARTS AGE

hello-1640362320-cvdhv 0/1 Completed 0 60s

hello-1640362380-vpbs5 0/1 ContainerCreating 0 0s
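The numeric segment in the Pod names looks like a Unix timestamp of the scheduled run. That reading is an assumption about how the CronJob controller in this Kubernetes version names its Jobs, and other versions may name them differently. Under that assumption, the sketch below checks that the two runs are one minute apart and start on a minute boundary, as the schedule `*/1 * * * *` implies. `job_epoch` is a hypothetical helper.

```shell
#!/bin/sh
# job_epoch JOB_OR_POD_NAME: extract the 10-digit numeric segment from names
# such as hello-1640362320-cvdhv. Illustrative helper, not a kubectl feature.
job_epoch() {
  echo "$1" | sed -n 's/^.*-\([0-9]\{10\}\)-.*$/\1/p'
}

first=$(job_epoch hello-1640362320-cvdhv)
second=$(job_epoch hello-1640362380-vpbs5)

# Seconds between the two runs.
echo "interval: $(( second - first ))s"
# Seconds past the minute for the first run (0 means it is minute-aligned).
echo "offset within minute: $(( first % 60 ))s"
# Time of day in UTC for the first run, computed without the date command.
printf 'UTC time: %02d:%02d\n' $(( first % 86400 / 3600 )) $(( first % 3600 / 60 ))
```

The arithmetic avoids `date -d`, whose syntax differs between GNU and BSD systems.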

Errors Encountered

(Encountered while joining the new node to the cluster)

First Problem

[root@node3 ~]# kubeadm join 192.168.129.250:6443 --token hglo7o.0ya3tbi82wqdjif4 \

--discovery-token-ca-cert-hash sha256:f6be891c6cd273a9283a6c05ca795811f7350afa2b5072990a294b7070ff9a4f

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.12. Latest validated version: 19.03

[WARNING Hostname]: hostname "node3" could not be reached

[WARNING Hostname]: hostname "node3": lookup node3 on 192.168.129.2:53: no such host

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition

To see the stack trace of this error execute with --v=5 or higher

Solution

The token had expired.

A new token has to be generated with kubeadm.

// Generate a new token

[root@master ~]# kubeadm token list

[root@master ~]# token=$(kubeadm token generate)

// Create a token that never expires

[root@master ~]# kubeadm token create $token --print-join-command --ttl=0

kubeadm join 192.168.129.250:6443 --token q8gddz.5ihmiuop488jvjvu --discovery-token-ca-cert-hash sha256:f6be891c6cd273a9283a6c05ca795811f7350afa2b5072990a294b7070ff9a4f
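Before pasting a join command onto a new node, it can be useful to confirm that the token was copied intact. The sketch below checks only the shape of a bootstrap token: 6 characters, a dot, then 16 characters, all lowercase letters or digits. `is_bootstrap_token` is a hypothetical helper; it cannot tell whether the token has expired, which is what `kubeadm token list` on the master reports.

```shell
#!/bin/sh
# is_bootstrap_token TOKEN: succeed if TOKEN has the shape kubeadm uses for
# bootstrap tokens: 6 characters, a dot, then 16 characters, all [a-z0-9].
# Illustrative helper; it checks the format only, not whether the token
# still exists on the cluster.
is_bootstrap_token() {
  echo "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

for t in q8gddz.5ihmiuop488jvjvu not-a-token; do
  if is_bootstrap_token "$t"; then
    echo "$t: well-formed"
  else
    echo "$t: malformed"
  fi
done
```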