机器学习_kedro框架使用简单示意

简介及安装包

kedro用来构建可复用,易维护,模块化的机器学习代码。相比于Notebook的超级灵活性,便于探索数据和算法, Kedro 定位于解决版本控制,可复用性,文档,单元测试,部署等工程方面的问题。

python -m pip install --upgrade pip

pip install kedro

pip install kedro-viz

# check

kedro info

一、创建项目

项目创建

kedro new

# 并输入

iris_demo

生成项目的目录如下

(base) [~iris_demo]$ tree

.

├── conf

│ ├── base

│ │ ├── catalog.yml

│ │ ├── logging.yml

│ │ └── parameters.yml

│ ├── local

│ │ └── credentials.yml

│ └── README.md

├── data

│ ├── 01_raw

│ ├── 02_intermediate

│ ├── 03_primary

│ ├── 04_feature

│ ├── 05_model_input

│ ├── 06_models

│ ├── 07_model_output

│ └── 08_reporting

├── docs

│ └── source

│ ├── conf.py

│ └── index.rst

├── logs

├── notebooks

├── pyproject.toml

├── README.md

├── setup.cfg

└── src

├── iris_demo

│ ├── init.py

│ ├── main.py

│ ├── pipeline_registry.py

│ ├── pipelines

│ │ └── init.py

│ └── settings.py

├── requirements.txt

├── setup.py

└── tests

├── init.py

├── pipelines

│ └── init.py

└── test_run.py

- 目录作用说明

iris_demo # Parent directory of the template

├── conf # Project configuration files

├── data # 项目本地文件 (not committed to version control)

├── docs # 项目文档 Project documentation

├── logs # 项目日志 Project output logs (not committed to version control)

├── notebooks # 项目的一些notebooks Project related Jupyter notebooks (can be used for experimental code before moving the code to src)

├── README.md # Project README

├── setup.cfg # Configuration options for `pytest` when doing `kedro test` and for the `isort` utility when doing `kedro lint`

└── src # Project source code

2.1 data 完善

直接将sklearn中的iris数据导出

cd data/05_model_input

# 进入python

python

生成 iris.csv 文件

from sklearn.datasets import load_iris

import pandas as pd

iris = load_iris()

df = pd.DataFrame(iris.data, columns=[i[:-5].replace(' ', '_') for i in iris.feature_names])

df['target'] = iris.target

df.to_csv('iris.csv', index=False)

2.2 src 完善

cd src/iris_demo/pipelines

mkdir training_pipline

cd training_pipline

touch train_func_node.py

touch train_pip.py

train_func_node.py将训练脚本写成function 然后用于后续封装

# train_func_node.py

# python3

# Create date: 2022-09-05

# Author: Scc_hy

# Func: 模型训练

# ===============================================================================

import logging

import pandas as pd

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import f1_score

from sklearn.model_selection import train_test_split

def split_data(data, parameters):

X = data[parameters["features"]]

y = data[parameters["target"]]

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=parameters["test_size"], random_state=parameters["random_state"]

)

return X_train, X_test, y_train, y_test

def train_model(X_train, y_train):

regressor = LogisticRegression()

regressor.fit(X_train, y_train.values.ravel())

return regressor

def evaluate_model(estimator, X_test, y_test):

y_pred = estimator.predict(X_test)

score = f1_score(y_test.values.ravel(), y_pred.ravel(), average='macro')

logger = logging.getLogger(__name__)

logger.info(f"[ valid ] f1-score {score:.3f}")

train_pip.py将train_func_node.py中的方法封装成node, 然后构建一个pipeline- 如果输入不是上面的node的输出,则需要在

conf/base/catalog.yml或者conf/base/parameters.yml中定义的- parameters.yml 中

params:model_options - conf/base/catalog.yml 中

iris_data

- parameters.yml 中

- 对于模型以及一些中间数据,我们可以在 conf/base/catalog.yml 中设置添加

- 如果输入不是上面的node的输出,则需要在

# python3

# Create date: 2022-09-05

# Author: Scc_hy

# Func: 模型训练 pipeline

# ===============================================================================

from kedro.pipeline import pipeline, node

from .train_func_node import evaluate_model, split_data, train_model

def create_pipeline(**kwargs):

return pipeline(

[

node(

func=split_data,

inputs=["irir_data", "params:model_options"],

outputs=["X_train", "X_test", "y_train", "y_test"],

name="split_data_node",

),

node(

func=train_model,

inputs=["X_train", "y_train"],

outputs="logistic_model_v1",

name="train_model_node",

),

node(

func=evaluate_model,

inputs=["logistic_model_v1", "X_test", "y_test"],

outputs=None,

name="evaluate_model_node",

),

]

)

完善 iris-demo\src\iris_demo\pipeline_registry.py

"""Project pipelines."""

from typing import Dict

from iris_demo.pipelines.training_pipline.train_pip import create_pipeline

from kedro.pipeline import Pipeline, pipeline

def register_pipelines() -> Dict[str, Pipeline]:

"""Register the project's pipeline.

Returns:

A mapping from a pipeline name to a ``Pipeline`` object.

"""

data_science_pipeline = create_pipeline()

return {

"__default__": data_science_pipeline,

"ds": data_science_pipeline,

}

2.3 conf 完善

文件作用说明

# conf/base

catalog.yml # with the file paths and load/save configuration required for different datasets

logging.yml # Uses Python’s default logging library to set up logging

parameters.yml # Allows you to define parameters for machine learning experiments e.g. train / test split and number of iterations

# settings that should not be shared

# the contents of conf/local/ is ignored by git

# conf/local

credentials.yml

完善 catalog.yml

在 conf/base/catalog.yml 中定义所有的数据

irir_data:

type: pandas.CSVDataSet

filepath: data/05_model_input/iris.csv

logistic_model_v1:

type: pickle.PickleDataSet

filepath: data/06_models/logistic_model_v1.pickle

versioned: true

完善 parameters.yml

model_options:

test_size: 0.2

random_state: 66

features:

- sepal_length

- sepal_width

- petal_length

- petal_width

target:

- target

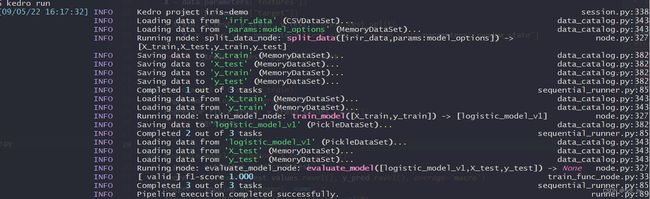

2.4 运行项目

二、创建训练视图

参考

https://kedro.readthedocs.io/en/stable/get_started/example_project.html#run-the-example-project