k8s+haproxy+keepalived高可用集群安装

目录

一、环境介绍

二、基础环境

三、部署keeplived

四、安装haproxy

五、部署docker(所有节点)

六、部署kubeadm

七、部署容器网络(CNI)

八、测试kubernetes集群

九、部署官方Dashboard(UI)

一、环境介绍

1)环境介绍

- CentOS: 7.6

- Docker: 18.06.1-ce

- Kubernetes:1.18.0

- Kuberadm:1.18.0

- Kuberlet:1.18.0

- Kuberctl: 1.18.0

2)软件列表

| 类别 |

名称 |

版本号 |

| 公共基础资源 |

k8s-master |

1.18.0 |

| ceph |

14.2.10 |

|

| Harbor |

1.6.1 |

|

| MySQL |

5.7 |

|

| keepalived |

1.3.5 |

|

| haproxy |

1.5.18 |

|

| coreDNS |

1.6.7 |

|

| docker |

18.06.1 |

|

| node_exporter |

0.15.2 |

3)资源规划

| 资源类型 |

虚拟机资源配置 |

数量 |

用途 |

||

| cpu/核 |

内存/G |

硬盘/G |

|||

| 计算资源 |

4 |

6 |

60 |

3 |

master 3台 |

| 4 |

6 |

60+40(未格式化) |

3 |

node3台 |

|

| 软件资源 |

X86 |

运行环境:关闭swap |

|||

| 操作系统:centOS 7.6 |

|||||

| 数据库:MySQL 5.7 |

|||||

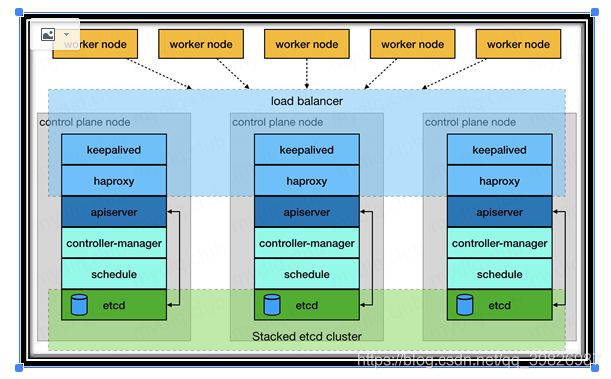

3)集群架构

4)Kuberadm 作用

- Kubeadm 是一个工具,它提供了 kubeadm init 以及 kubeadm join 这两个命令作为快速创建 kubernetes 集群的最佳实践。

- kubeadm 通过执行必要的操作来启动和运行一个最小可用的集群。它被故意设计为只关心启动集群,而不是之前的节点准备工作。同样的,诸如安装各种各样值得拥有的插件,例如 Kubernetes Dashboard、监控解决方案以及特定云提供商的插件,这些都不在它负责的范围。

- 相反,我们期望由一个基于 kubeadm 从更高层设计的更加合适的工具来做这些事情;并且,理想情况下,使用 kubeadm 作为所有部署的基础将会使得创建一个符合期望的集群变得容易。

5)Kuberadm 功能

- kubeadm init #启动一个 Kubernetes 主节点

- kubeadm join #启动一个 Kubernetes 工作节点并且将其加入到集群

- kubeadm upgrade #更新一个 Kubernetes 集群到新版本

- kubeadm config #如果使用 v1.7.x 或者更低版本的 kubeadm 初始化集群,您需要对集群做一些配置以便使用 kubeadm upgrade 命令

- kubeadm token #管理 kubeadm join 使用的令牌

- kubeadm reset #还原 kubeadm init 或者 kubeadm join 对主机所做的任何更改

- kubeadm version #打印 kubeadm 版本

- kubeadm alpha #预览一组可用的新功能以便从社区搜集反馈

6)功能版本

| Area |

Maturity Level |

| Command line UX |

GA |

| Implementation |

GA |

| Config file API |

beta |

| CoreDNS |

GA |

| kubeadm alpha subcommands |

alpha |

| High availability |

alpha |

| DynamicKubeletConfig |

alpha |

| Self-hosting |

alpha |

7)虚拟机分配说明

| 地址 |

主机名 |

内存&CPU |

角色 |

| 192.168.4.100 |

— |

— |

vip |

| 192.168.4.114 |

k8s-master-01 |

4C & 4G |

master |

| 192.168.4.119 |

k8s-master-02 |

4C & 4G |

master |

| 192.168.4.204 |

k8s-master-03 |

4C & 4G |

master |

| 192.168.4.115 |

k8s-node-01 |

4c & 4G |

node |

| 192.168.4.116 |

k8s-node-02 |

4c & 4G |

node |

| 192.168.4.118 |

k8s-node-03 |

4c & 4G |

node |

8)各个节点端口占用

- Master 节点

| 规则 |

方向 |

端口范围 |

作用 |

使用者 |

| TCP |

Inbound |

6443* |

Kubernetes API |

server All |

| TCP |

Inbound |

2379-2380 |

etcd server |

client API kube-apiserver, etcd |

| TCP |

Inbound |

10250 |

Kubelet API |

Self, Control plane |

| TCP |

Inbound |

10251 |

kube-scheduler |

Self |

| TCP |

Inbound |

10252 |

kube-controller-manager |

Sel |

- node 节点

| 规则 |

方向 |

端口范围 |

作用 |

使用者 |

| TCP |

Inbound |

10250 |

Kubelet API |

Self, Control plane |

| TCP |

Inbound |

30000-32767 |

NodePort Services** |

All |

二、基础环境

1)修改hosts文件

#配置每台主机的hosts(/etc/hosts),添加host_ip $hostname到/etc/hosts文件中。例如:

cat >>/etc/hosts<> /etc/profile;source /etc/profile

时间同步

#同步阿里云时区

yum install ntpdate -y

ntpdate time1.aliyun.com

#设置1小时同步一次

[root@localhost cm]# crontab -e

0 */1 * * * ntpdate time1.aliyun.com

6)关闭交换分区

#临时关闭交换分区:swapoff -a

swapoff -a && sysctl -w vm.swappiness=0

#永久关闭交换分区:修改/etc/fstab,

sed -ri 's/.*swap.*/#&/' /etc/fstab

7)配置网桥过滤功能

#编辑文件: /etc/sysctl.d/k8s.conf

cat >/etc/sysctl.d/k8s.conf</etc/sysconfig/modules/ipvs.modules<> /etc/security/limits.conf

echo "* hard nofile 65536" >> /etc/security/limits.conf

echo "* soft nproc 65536" >> /etc/security/limits.conf

echo "* hard nproc 65536" >> /etc/security/limits.conf

echo "* soft memlock unlimited" >> /etc/security/limits.conf

echo "* hard memlock unlimited" >> /etc/security/limits.conf

10)升级内核

#由于3点几的内核会导致 kube-proxy-*的pod报错,所以直接升级到5点几

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

yum install -y https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm

yum -y --enablerepo=elrepo-kernel install kernel-ml

内核版本介绍:

lt:longterm的缩写:长期维护版;

ml:mainline的缩写:最新稳定版;

[root@k8s-node-02 ~]# cat /boot/grub2/grub.cfg | grep CentOS

menuentry 'CentOS Linux (5.19.8-1.el7.elrepo.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-957.el7.x86_64-advanced-3d5d2c00-e156-4175-9fd9-3df6df91dc76' {

menuentry 'CentOS Linux (5.4.212-1.el7.elrepo.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-957.el7.x86_64-advanced-3d5d2c00-e156-4175-9fd9-3df6df91dc76' {

menuentry 'CentOS Linux (3.10.0-1127.19.1.el7.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-957.el7.x86_64-advanced-3d5d2c00-e156-4175-9fd9-3df6df91dc76' {

menuentry 'CentOS Linux (3.10.0-957.el7.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-957.el7.x86_64-advanced-3d5d2c00-e156-4175-9fd9-3df6df91dc76' {

menuentry 'CentOS Linux (0-rescue-df2da3c566ed497795d970fc58760acd) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-0-rescue-df2da3c566ed497795d970fc58760acd-advanced-3d5d2c00-e156-4175-9fd9-3df6df91dc76' {

【选择说明】ELRepo有两种类型的Linux内核包,kernel-lt和kernel-ml。kernel-ml软件包是根据Linux Kernel Archives的主线稳定分支提供的源构建的。 内核配置基于默认的RHEL-7配置,并根据需要启用了添加的功能。 这些软件包有意命名为kernel-ml,以免与RHEL-7内核发生冲突,因此,它们可以与常规内核一起安装和更新。 kernel-lt包是从Linux Kernel Archives提供的源代码构建的,就像kernel-ml软件包一样。 不同之处在于kernel-lt基于长期支持分支,而kernel-ml基于主线稳定分支。

[root@k8s-node-02 ~]# grub2-set-default 'CentOS Linux (5.19.8-1.el7.elrepo.x86_64) 7 (Core)'

[root@k8s-node-02 ~]# reboot

[root@k8s-node-02 ~]# uname -r

5.19.8-1.el7.elrepo.x86_64 三、部署keeplived

1)简介

- keepalived 介绍: 是集群管理中保证集群高可用的一个服务软件,其功能类似于heartbeat,用来防止单点故障

- Keepalived 作用: 为haproxy提供vip(192.168.4.100)在三个haproxy实例之间提供主备,降低当其中一个haproxy失效的时对服务的影响。

2)所有master节点安装keepalived

#安装

yum -y install keepalived

#修改配置文件

cat /etc/keepalived/keepalived.conf

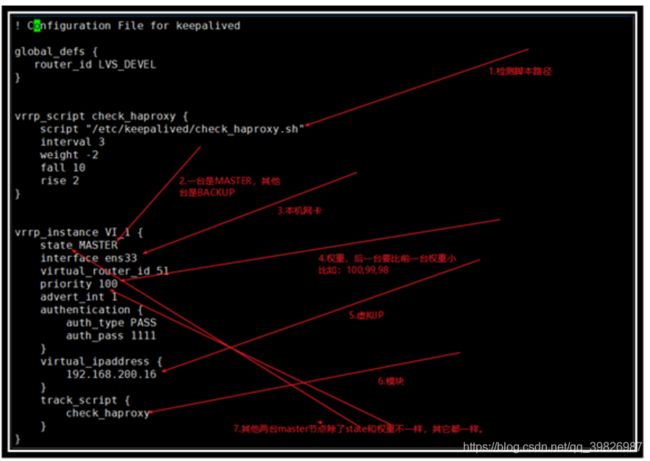

3)keepalived的master配置

cat < /etc/keepalived/keepalived.conf

! Configuration File for keepalived

# 主要是配置故障发生时的通知对象以及机器标识。

global_defs {

# 标识本节点的字条串,通常为 hostname,但不一定非得是 hostname。故障发生时,邮件通知会用到。

router_id LVS_k8s

}

# 用来做健康检查的,当时检查失败时会将 vrrp_instance 的 priority 减少相应的值。

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh" # "killall -0 haproxy" #根据进程名称检测进程是否存活

interval 3

weight -2

fall 10

rise 2

}

# rp_instance用来定义对外提供服务的 VIP 区域及其相关属性。

vrrp_instance VI_1 {

state MASTER #当前节点为MASTER,其他两个节点设置为BACKUP

interface ens32 #改为自己的网卡

virtual_router_id 51

priority 100 #BACKUP的权重比它小

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.4.100 #虚拟ip,即VIP,需要和master同网段

}

track_script {

check_haproxy

}

}

EOF !!!当前节点的配置中 state 配置为 MASTER,其它两个节点设置为 BACKUP

配置说明:

- virtual_ipaddress #vip

- track_script #执行上面定义好的检测的script

- interface #节点固有IP(非VIP)的网卡,用来发VRRP包。

- virtual_router_id #取值在0-255之间,用来区分多个instance的VRRP组播

- advert_int #发VRRP包的时间间隔,即多久进行一次master选举(可以认为是健康查检时间间隔)。

- authentication #认证区域,认证类型有PASS和HA(IPSEC),推荐使用PASS(密码只识别前8位)。

- state #可以是MASTER或BACKUP,不过当其他节点keepalived启动时会将priority比较大的节点选举为MASTER,因此该项其实没有实质用途。

- priority #用来选举master的,要成为master,那么这个选项的值最好高于其他机器50个点,该项取值范围是1-255(在此范围之外会被识别成默认值100)。

4)keepalived的BACKUP配置

cat < /etc/keepalived/keepalived.conf

! Configuration File for keepalived

# 主要是配置故障发生时的通知对象以及机器标识。

global_defs {

# 标识本节点的字条串,通常为 hostname,但不一定非得是 hostname。故障发生时,邮件通知会用到。

router_id LVS_k8s

}

# 用来做健康检查的,当时检查失败时会将 vrrp_instance 的 priority 减少相应的值。

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh" # "killall -0 haproxy" #根据进程名称检测进程是否存活

interval 3

weight -2

fall 10

rise 2

}

# rp_instance用来定义对外提供服务的 VIP 区域及其相关属性。

vrrp_instance VI_1 {

state BACKUP #当前节点为BACKUP

interface ens32 #改为自己的网卡

virtual_router_id 51

priority 90 #BACKUP的权重比它小,如果还有一个BACKUP则更小

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.4.100 #虚拟ip,即VIP,需要和master同网段

}

track_script {

check_haproxy

}

}

EOF 5)健康监测脚本

#所有keepalived端都添加脚本

cat > /etc/keepalived/check_haproxy.sh<

- !!!当关掉当前节点的keeplived服务后将进行虚拟IP转移,将会推选state 为 BACKUP 的节点的某一节点为新的MASTER,可以在那台节点上查看网卡,将会查看到虚拟IP。

- 如果有两个 BACKUP,则权重一个比一个小。

- 注意网卡名称

四、安装haproxy

此处的haproxy为apiserver提供反向代理,haproxy将所有请求轮询转发到每个master节点上。相对于仅仅使用keepalived主备模式仅单个master节点承载流量,这种方式更加合理、健壮。

1)所有master节点安装haporxy

yum install -y haproxy

2)配置haproxy.cfg

[root@k8s-master ~]# cat /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

#注意修改

frontend kubernetes-apiserver

mode tcp

bind *:16443

option tcplog

default_backend kubernetes-apiserver

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

#注意修改

listen stats

bind *:1080

stats auth admin:awesomePassword

stats refresh 5s

stats realm HAProxy\ Statistics

stats uri /admin?stats

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend kubernetes-apiserver

mode tcp

balance roundrobin

server k8s-master-01 192.168.4.114:6443 check

server k8s-master-02 192.168.4.119:6443 check

server k8s-master-02 192.168.4.204:6443 check

# server k8s-master-03 192.168.x.x:6443 check

#配置规则:servcer + hostname + IP + 端口 + check

#haproxy配置在其他master节点上(192.168.x.x和192.168.x.x)相同

3)启动keepalived和haproxy

#启动加入开机启动

systemctl start keepalived && systemctl enable keepalived

systemctl start haproxy && systemctl enable haproxy

#检测检测haproxy端口

[root@k8s-master keepalived]# ss -lnt | grep -E "16443|1080"

LISTEN 0 128 *:1080 *:*

LISTEN 0 128 *:16443 *:*

4)检测vip IP是否漂移

#首先查看k8s-master-01

[root@k8s-master-01 ~]# ip address show ens32

2: ens32: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:68:17 brd ff:ff:ff:ff:ff:ff

inet 192.168.4.114/24 brd 192.168.4.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet 192.168.4.100/32 scope global ens32

valid_lft forever preferred_lft forever

#然后停止k8s-master-01,查看k8s-master-02是否有VIP

systemctl stop keepalived

#查看k8s-master-02是否有VIP

[root@k8s-master-02 ~]# ip address show ens32

2: ens32: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:58:af brd ff:ff:ff:ff:ff:ff

inet 192.168.4.119/24 brd 192.168.4.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet 192.168.4.100/32 scope global ens32

valid_lft forever preferred_lft forever

#然后停止k8s-master-01,k8s-master-02,查看k8s-master-03是否有VIP

systemctl stop keepalived

#查看k8s-master-02是否有VIP

[root@k8s-master-03 ~]# ip address show ens32

2: ens32: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:6b:0e brd ff:ff:ff:ff:ff:ff

inet 192.168.4.204/24 brd 192.168.4.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet 192.168.4.100/32 scope global ens32

valid_lft forever preferred_lft forever

#重启k8s-master-01、k8s-master-02、k8s-master-03,确认k8s-master-01的VIP是否存在

systemctl start keepalived

#确认VIP是否漂移回来

[root@k8s-master-01 ~]# ip address show ens32 #存在VIP

2: ens32: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:68:17 brd ff:ff:ff:ff:ff:ff

inet 192.168.4.114/24 brd 192.168.4.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet 192.168.4.100/32 scope global ens32

valid_lft forever preferred_lft forever

[root@k8s-master-02 ~]# ip address show ens32 #不存在VIP

2: ens32: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:58:af brd ff:ff:ff:ff:ff:ff

inet 192.168.4.119/24 brd 192.168.4.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

[root@k8s-master-03 ~]# ip address show ens32 #不存在VIP

2: ens32: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:6b:0e brd ff:ff:ff:ff:ff:ff

inet 192.168.4.204/24 brd 192.168.4.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

!!!此时说明keepalived高可用成功 五、部署docker(所有节点)

1)移除之前安装过的Docker

sudo yum -y remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-ce-cli \

docker-engine

#查看还有没有存在的docker组件

rpm -qa|grep docker

#有则通过命令 yum -y remove XXX 来删除,比如:

#yum remove docker-ce-cli

2)配置docker的yum源

#获取docker-ce的yum源

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#获取epel源

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum -y install epel-release

3)安装docker

#显示docker-ce所有可安装版本:

#yum list docker-ce --showduplicates | sort -r

#安装指定docker版本

sudo yum install docker-ce-18.06.1.ce-3.el7 -y

4)设置镜像存储目录

#创建镜像目录

mkdir -p /data/docker

#修改镜像存储目录

vim /lib/systemd/system/docker.service

!!!找到 ExecStart 这行,王后面加上存储目录,例如这里是 --graph /data/docker

ExecStart=/usr/bin/dockerd --graph /data/docker

5)启动docker并设置docker开机启动

systemctl enable docker

systemctl start docker

docker ps

#确认镜像目录是否改变

docker info |grep "Docker Root Dir"

6)创建镜像加速

cat >>/etc/docker/daemon.json < /etc/docker/daemon.json << EOF

{

"data-root": "/data/docker"

}

EOF

rm -rvf /var/lib/docker

systemctl daemon-reload

systemctl restart docker

docker info |grep "Docker Root Dir" 六、部署kubeadm

- 每个节点安装kubeadm,kubelet和kubectl

- 安装的kubeadm、kubectl和kubelet要和kubernetes版本一致

- kubelet加入开机启动之后不手动启动,要不然会报错

- 初始化集群之后集群会自动启动kubelet服务!!!

1)配置yum源

#配置yum源

cat < /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpghttps://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2)安装kubeadm、kubelet、kubectl

yum -y install kubeadm-1.18.0 kubelet-1.18.0 kubectl-1.18.0

systemctl enable kubelet && systemctl daemon-reload

3)获取默认配置文件

cd /root/

kubeadm config print init-defaults > kubeadm-config.yaml

4)修改初始化配置文件

cat > kubeadm-config.yaml < 两个地方设置:

- - certSANs: 虚拟ip地址(为了安全起见,把所有集群地址都加上)

- - controlPlaneEndpoint: 虚拟IP:监控端口号

配置说明:

- imageRepository: registry.aliyuncs.com/google_containers (使用阿里云镜像仓库)

- podSubnet: 10.244.0.0/16 (pod地址池)

- serviceSubnet: 10.10.0.0/16

5)下载相关镜像

kubeadm config images pull --config kubeadm-config.yaml

6)初始化集群

kubeadm init --config kubeadm-config.yaml

日志:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

#配置master-kubectl环境变量

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

#master集群加入认证

kubeadm join 192.168.4.100:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:b481f825c95ee215fdbaba9783e028af2672c92da4bd8d8b488e8e2bf4fb1823 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

#node集群加入认证

kubeadm join 192.168.4.100:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:b481f825c95ee215fdbaba9783e028af2672c92da4bd8d8b488e8e2bf4fb1823

【报错】

[root@k8s-master-03 ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

【解决】

#本机只需要生成,只需要创建,不需要再加入

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

7)把主节点证书复制到其它master节点

#k8s-master-01添加master集群

#本机只需要生成,只需要创建,不需要再加入

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

#k8s-master-02添加master集群

ssh [email protected] mkdir -p /etc/kubernetes/pki/etcd

scp /etc/kubernetes/admin.conf [email protected]:/etc/kubernetes

scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} [email protected]:/etc/kubernetes/pki

scp /etc/kubernetes/pki/etcd/ca.* [email protected]:/etc/kubernetes/pki/etcd

#k8s-master-03添加master集群

ssh [email protected] mkdir -p /etc/kubernetes/pki/etcd

scp /etc/kubernetes/admin.conf [email protected]:/etc/kubernetes

scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} [email protected]:/etc/kubernetes/pki

scp /etc/kubernetes/pki/etcd/ca.* [email protected]:/etc/kubernetes/pki/etcd

#k8s-master-xx添加master集群

ssh [email protected] mkdir -p /etc/kubernetes/pki/etcd

scp /etc/kubernetes/admin.conf [email protected]:/etc/kubernetes

scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} [email protected]:/etc/kubernetes/pki

scp /etc/kubernetes/pki/etcd/ca.* [email protected]:/etc/kubernetes/pki/etcd

8)master节点加入集群

kubeadm join 192.168.4.100:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:b481f825c95ee215fdbaba9783e028af2672c92da4bd8d8b488e8e2bf4fb1823 \

--control-plane

!!!注意,在生成master之前已经加入高可用网段或者写生成master网段才能加入master,否则不能识别机器。

!!!如果加入失败想重新尝试,请输入 kubeadm reset 命令清除之前的设置,重新执行从“复制秘钥”和“加入集群”这两步

日志

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

9)配置kubectl环境变量

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

#查看master节点

[root@k8s-master-01 kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01 NotReady master 19m v1.16.9

k8s-master-02 NotReady master 9m29s v1.16.9

k8s-master-03 NotReady master 4m46s v1.16.9

10)kubectl命令自动补全(master安装)

# 配置自动补全命令(三台都需要操作)

yum -y install bash-completion

# 设置kubectl与kubeadm命令补全,下次login生效

kubectl completion bash > /etc/bash_completion.d/kubectl

kubeadm completion bash > /etc/bash_completion.d/kubeadm

#退出重新登录生效

exit

11)把主节点admin.conf证书复制到其他node节点

#scp /etc/kubernetes/admin.conf [email protected]:/etc/kubernetes/

scp /etc/kubernetes/admin.conf [email protected]:/etc/kubernetes/

scp /etc/kubernetes/admin.conf [email protected]:/etc/kubernetes/

scp /etc/kubernetes/admin.conf [email protected]:/etc/kubernetes/

12)node节点加入集群

#除了让master节点加入集群组成高可用外,node节点也要加入集群中。

kubeadm join 192.168.4.100:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:b481f825c95ee215fdbaba9783e028af2672c92da4bd8d8b488e8e2bf4fb1823

#查看nodes

[root@k8s-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01 NotReady master 26m v1.16.9

k8s-master-02 NotReady master 16m v1.16.9

k8s-master-03 NotReady master 12m v1.16.9

k8s-node-01 NotReady 28s v1.16.9

k8s-node-02 NotReady 26s v1.16.9

k8s-node-03 NotReady 23s v1.16.9

13)忘记加入集群的token和sha256 (如正常则跳过)

#显示获取token列表

kubeadm token list

#默认情况下 Token 过期是时间是24小时,如果 Token 过期以后,可以输入以下命令,生成新的 Token

kubeadm token create

#获取ca证书sha256编码hash值

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

#获取高可用域名

[root@k8s-master-01 opt]# cat kubeadm-config.yaml |grep controlPlaneEndpoint:

controlPlaneEndpoint: "master.k8s.io:16443"

#拼接命令

kubeadm join 192.168.80.100:16443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:b481f825c95ee215fdbaba9783e028af2672c92da4bd8d8b488e8e2bf4fb1823

!!!如果是master加入,请在最后面加上 -control-plane 这个参数

kubeadm join 192.168.80.100:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:e00917e2ec706ee16db774feeb0ef219b041a74518c021f62271721ccbe81dd6 \

--control-plane

14)删除节点

https://www.cnblogs.com/douh/p/12503067.html 由于生产环境数据量很大,所以需要更改数据目录,所以参考我另外博客修改数据目录

修改k8s的数据目录_烟雨话浮生的博客-CSDN博客

七、部署容器网络(CNI)

1)Flannel网络

Flannel是CoreOS维护的一个网络组件,Flannel为每个Pod提供全局唯一的IP,Flannel使用ETCD来存储Pod子网与Node IP之间的关系。flanneld守护进程在每台主机上运行,并负责维护ETCD信息和路由数据包。

下载地址:https://github.com/coreos/flannel/blob/master/Documentation/kube-flannel.yml

#master创建目录

mkdir -p /opt/k8s/flannel

cd /opt/k8s/flannel

#去浏览器找打将其文件复制粘贴

https://github.com/coreos/flannel/blob/master/Documentation/kube-flannel.yml

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

#修改国内镜像地址,注意版本

sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.11.0-amd64#g" kube-flannel.yml

#创建

kubectl apply -f kube-flannel.yml

#查看

kubectl get pods -n kube-system

!!!注意“Network”: “10.244.0.0/16”要和kubeadm-config.yaml配置文件中podSubnet: 10.244.0.0/16相同

2) 解决kube-flannel.yml不能下载

#解决GitHub的raw.githubusercontent.com无法连接问题

cat >>/etc/hosts< 4m46s v1.16.9

k8s-node-02 Ready 4m44s v1.16.9

k8s-node-03 Ready 4m41s v1.16.9

#查看pods

[root@k8s-master-02 kubernetes]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-58cc8c89f4-2zcv8 1/1 Running 0 5m55s

coredns-58cc8c89f4-lfz4d 1/1 Running 0 6m6s

etcd-k8s-master-01 1/1 Running 0 27m

etcd-k8s-master-02 1/1 Running 0 27m

kube-apiserver-k8s-master-01 1/1 Running 0 27m

kube-apiserver-k8s-master-02 1/1 Running 0 27m

kube-controller-manager-k8s-master-01 1/1 Running 1 27m

kube-controller-manager-k8s-master-02 1/1 Running 0 27m

kube-flannel-ds-7t6hs 1/1 Running 0 6m36s

kube-flannel-ds-kk9fq 1/1 Running 0 6m36s

kube-flannel-ds-rv9jl 1/1 Running 0 6m36s

kube-flannel-ds-trqx7 1/1 Running 0 6m36s

kube-flannel-ds-vwsvj 1/1 Running 0 6m36s

kube-proxy-clh4r 1/1 Running 0 16m

kube-proxy-hk7rk 1/1 Running 0 28m

kube-proxy-nxqbf 1/1 Running 0 27m

kube-proxy-vxvdk 1/1 Running 0 16m

kube-proxy-xcn2r 1/1 Running 0 16m

kube-scheduler-k8s-master-01 1/1 Running 1 27m

kube-scheduler-k8s-master-02 1/1 Running 0 27m

2)部署calico网络

#下载

wget -c https://docs.projectcalico.org/v3.8/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml --no-check-certificate

#更改calico.yaml

# Cluster type to identify the deployment type

- name: CLUSTER_TYPE

value: "k8s,bgp"

# 下方新增

- name: IP_AUTODETECTION_METHOD

value: "interface=ens32"

# ens32为本地网卡名字

# no effect. This should fall within `--cluster-cidr`.

- name: CALICO_IPV4POOL_CIDR

#修改为与 kubeadm-config.yaml 中一致的pod地址池。

value: "10.244.0.0/16"

# Disable file logging so `kubectl logs` works.

#创建

kubectl apply -f calico.yaml

#查看是否是IPVS模式

[root@k8s-master-01 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 192.168.4.114:6443 Masq 1 1 0

-> 192.168.4.119:6443 Masq 1 1 0

TCP 10.96.0.10:53 rr

-> 10.224.183.129:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.224.183.129:9153 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.224.183.129:53 Masq 1 0 0

#查看日志

kubectl logs -n kube-system kube-proxy-75pvh |grep IPVS八、测试kubernetes集群

- 验证Pod工作

#能否创建pod成功、能成功则无问题

[root@k8s-master ~]# kubectl create deployment web --image=nginx

[root@k8s-master ~]# kubectl get pods|grep web

web-5dcb957ccc-4hdv9 1/1 Running 0 41s

- 验证Pod网络通信

#能否ping通正在运行pod的vip,能ping通则没有问题

[root@k8s-master ~]# kubectl get pods -o wide |grep web

web-5dcb957ccc-4hdv9 1/1 Running 0 109s 10.244.36.69 k8s-node1

[root@k8s-master ~]# ping -c 2 10.244.36.69

PING 10.244.36.69 (10.244.36.69) 56(84) bytes of data.

64 bytes from 10.244.36.69: icmp_seq=1 ttl=63 time=0.576 ms

64 bytes from 10.244.36.69: icmp_seq=2 ttl=63 time=0.396 ms

--- 10.244.36.69 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.396/0.486/0.576/0.090 ms

- 验证DNS解析

#启动一个busybox,版本号固定1.28.4

[root@k8s-master ~]# kubectl run dns-test -it --rm --image=busybox:1.28.4 -- sh

If you don't see a command prompt, try pressing enter.

/ # ping www.baidu.com #ping百度是否能通

PING www.baidu.com (183.232.231.172): 56 data bytes

64 bytes from 183.232.231.172: seq=0 ttl=127 time=44.849 ms

/ # nslookup kube-dns.kube-system #查看dns是否能够解析

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kube-dns.kube-system

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

#测试外网能否访问nginx镜像,如果能够访问则正常

[root@k8s-master ~]# kubectl expose deployment web --port=80 --target-port=80 --type=NodePort

[root@k8s-master ~]# kubectl get svc |grep web

web NodePort 10.104.236.186 80:32726/TCP 17s

访问地址:http://NodeIP:32726

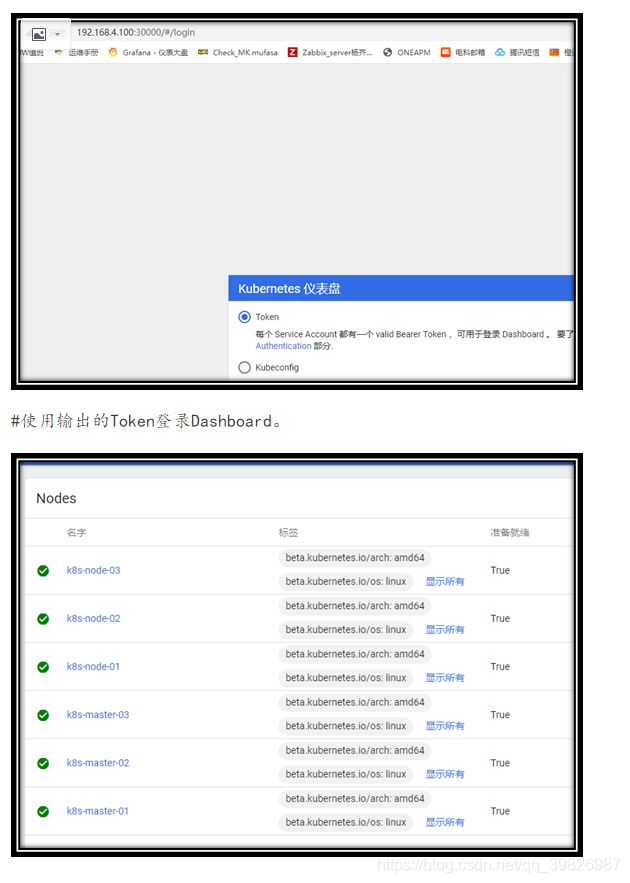

九、部署官方Dashboard(UI)

默认Dashboard只能集群内部访问,修改Service为NodePort类型,暴露到外部:

mkdir -p /opt/k8s/dashboard

cd /opt/k8s/dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

mv recommended.yaml kubernertes-dashboard.yaml

$ vi recommended.yaml

spec:

type: NodePort #加入

ports:

- port: 443

targetPort: 8443

nodePort: 30000 #加入

selector:

k8s-app: kubernetes-dashboard

#修改名称

mv recommended.yaml kubernertes-dashboard.yaml

#启动

kubectl create -f kubernertes-dashboard.yaml

#查看

kubectl get pods -n kubernetes-dashboard

#详情

[root@k8s-master-01 dashboard]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-c79c65bb7-fvhl4 1/1 Running 0 5m36s

kubernetes-dashboard-56484d4c5-htsh7 1/1 Running 0 5m36s

#创建service account并绑定默认cluster-admin管理员集群角色:

# 创建用户

kubectl create serviceaccount dashboard-admin -n kube-system

# 用户授权

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

#解决WEB页面报错

kubectl create clusterrolebinding system:anonymous --clusterrole=cluster-admin --user=system:anonymous

# 获取用户Token

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

#访问地址:https://NodeIP:30001

【报错】Client sent an HTTP request to an HTTPS server.

【解决】使用https访问

https://192.168.4.116:30000/

#VIP地址加端口

https://192.168.4.100:30000/#/login