RocketMQ Source Code: Build Setup and Key Walkthrough

文章目录

- 一、源码环境搭建

-

- 1 源码拉取

- 2 源码调试

- 2.1 启动NameServer

- 2.2 启动Broker

- 2.3 发送消息

- 2.4 消费消息

- 2.5 如何看源码

- 二、NameServer启动

-

- 1 核心问题

- 2 源码重点

- 三、Broker启动

- 四、Broker注册

- 五、Producer

-

- **1 功能回顾**

- 2 源码重点

- 六、消息存储

-

- 6.1-commitLog写入

- 6.2-分发ConsumeQueue和IndexFile

- 6.3 文件同步刷盘与异步刷盘

- 6.4 过期文件删除

- 6.5 文件存储部分的总结

- 七、消费者

-

- 7.1 启动

- 7.2 消息拉取

- 7.3 长轮询拉取机制

- 7.4 客户端负载均衡策略

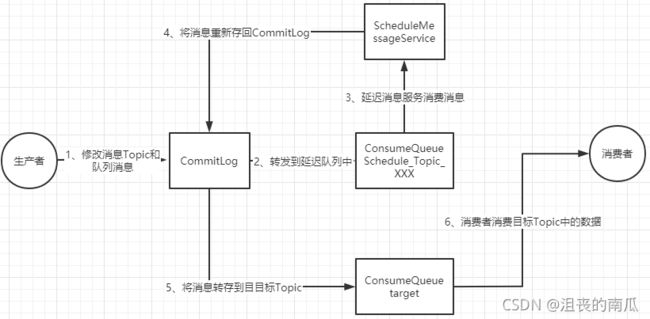

- 八、延迟消息

-

- 消费者部分小结

- 源码解读小结

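I. Setting Up the Source Environment

1 Getting the source

RocketMQ's official Git repository is https://github.com/apache/rocketmq. You can clone the project with git or download the source archive directly.

You can also download the source for a specific version from the official RocketMQ website: http://rocketmq.apache.org/dowloading/releases/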

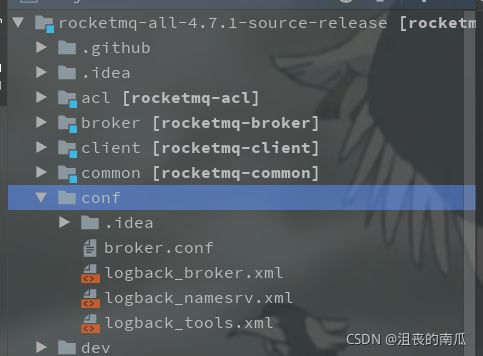

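After downloading, unpack the archive and import it into IDEA to start reading. Just make sure you download version 4.7.1.

The source tree contains many modules. We focus only on the most important ones:

- broker: the Broker module (the Broker startup process)
- client: the messaging client, containing the producer and consumer classes
- example: RocketMQ sample code
- namesrv: the NameServer implementation (the NameServer startup process)
- store: the message storage implementation

Most modules' purposes are clear from their names, so we can open each one when we need it.

A few other things deserve attention too: the documentation under the docs folder, and the rich set of JUnit tests in every module. Both are very useful.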

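2 Debugging the source

After importing the source into IDEA, compile it first. The Maven goals are clean install -Dmaven.test.skip=true (on the command line: mvn clean install -Dmaven.test.skip=true).

Once the build finishes, you can start debugging. Follow the steps below.

First create a conf directory under the project root, then copy broker.conf, logback_broker.xml and logback_namesrv.xml into it from distribution.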

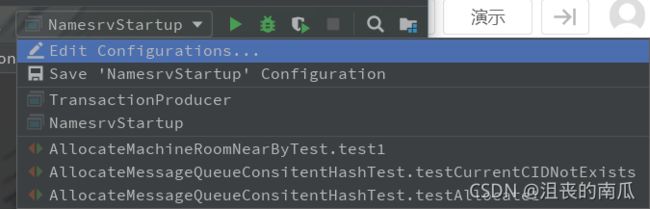

2.1 Starting the NameServer

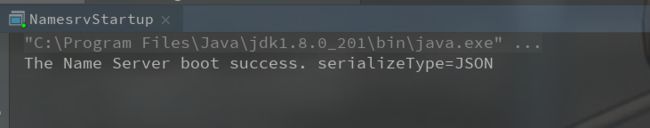

展开namesrv模块,运行NamesrvStartup类即可启动NameServer。

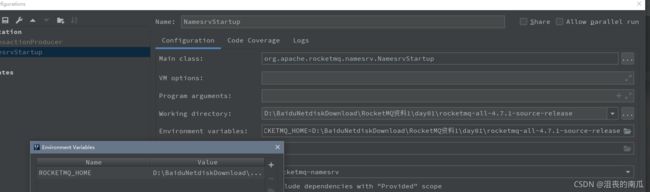

启动时,会报错,提示需要配置一个ROCKETMQ_HOME环境变量。这个环境变量我们可以在机器上配置,跟配置JAVA_HOME环境变量一样。也可以在IDEA的运行环境中配置。目录指向源码目录即可。

配置完成后,再次执行,看到以下日志内容,表示NameServer启动成功。

The Name Server boot success. serializeType=JSON
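To see what the ROCKETMQ_HOME check amounts to, here is a minimal sketch. It assumes the home directory is read from the rocketmq.home.dir system property first and from the ROCKETMQ_HOME environment variable second; that matches my reading of the 4.7.1 sources but should be verified against your checkout. HomeResolver and its method are hypothetical names, not RocketMQ classes.

```java
import java.util.Map;
import java.util.Properties;

// Hypothetical helper, not part of RocketMQ: resolves the home directory
// from a system property first and an environment variable second.
final class HomeResolver {
    static final String HOME_PROPERTY = "rocketmq.home.dir"; // assumed property name
    static final String HOME_ENV = "ROCKETMQ_HOME";

    // Returns the configured home directory, or null when neither source is set.
    static String resolve(Properties sysProps, Map<String, String> env) {
        return sysProps.getProperty(HOME_PROPERTY, env.get(HOME_ENV));
    }

    public static void main(String[] args) {
        String home = resolve(System.getProperties(), System.getenv());
        if (home == null) {
            System.out.println("Please set " + HOME_ENV + " (or -D" + HOME_PROPERTY + ")");
        } else {
            System.out.println("logback config expected at " + home + "/conf/logback_namesrv.xml");
        }
    }
}
```

In IDEA, this corresponds to either adding ROCKETMQ_HOME under the run configuration's environment variables or, under the assumption above, passing the property as a VM option.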

2.2 Starting the Broker

Before starting the Broker, edit the broker.conf file copied earlier.

brokerClusterName = DefaultCluster

brokerName = broker-a

brokerId = 0

deleteWhen = 04

fileReservedTime = 48

brokerRole = ASYNC_MASTER

flushDiskType = ASYNC_FLUSH

# Automatically create topics

autoCreateTopicEnable=true

# NameServer address

namesrvAddr=127.0.0.1:9876

# Root storage path

storePathRootDir=E:\\RocketMQ\\data\\rocketmq\\dataDir

# CommitLog path

storePathCommitLog=E:\\RocketMQ\\data\\rocketmq\\dataDir\\commitlog

# Consume queue storage path

storePathConsumeQueue=E:\\RocketMQ\\data\\rocketmq\\dataDir\\consumequeue

# Message index storage path

storePathIndex=E:\\RocketMQ\\data\\rocketmq\\dataDir\\index

# Checkpoint file path

storeCheckpoint=E:\\RocketMQ\\data\\rocketmq\\dataDir\\checkpoint

# Abort file path

abortFile=E:\\RocketMQ\\data\\rocketmq\\dataDir\\abort

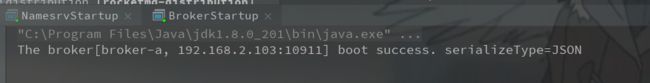

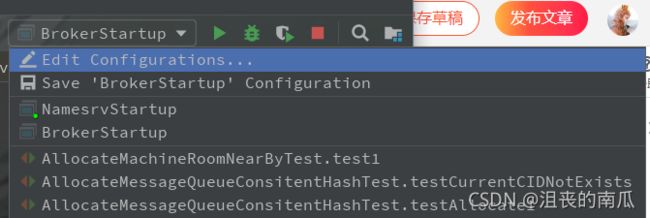

The Broker's startup class is BrokerStartup in the broker module.

Starting the Broker also requires the ROCKETMQ_HOME environment variable, plus a -c argument pointing to the broker.conf file.

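2.3 Sending messages

The example module provides detailed sample code. For instance, running org.apache.rocketmq.example.quickstart.Producer in the example module sends messages.

The sample code needs a NameServer address, however. There are two ways to supply it. One is to set a NAMESRV_ADDR environment variable.

The other is to set it in code, by adding one line: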

producer.setNamesrvAddr("127.0.0.1:9876");

Then you can send messages.
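The address string may also list several NameServers separated by semicolons, in the form that the Broker startup code later in this article validates (127.0.0.1:9876;192.168.0.1:9876). The sketch below shows one way to parse such a string. NamesrvAddrParser is a hypothetical helper written for illustration, not a RocketMQ class, and it does not claim to reproduce RocketMQ's own parsing.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: splits "host:port;host:port" into socket addresses.
final class NamesrvAddrParser {
    static List<InetSocketAddress> parse(String namesrvAddr) {
        List<InetSocketAddress> result = new ArrayList<>();
        for (String addr : namesrvAddr.split(";")) {
            String trimmed = addr.trim();
            int idx = trimmed.lastIndexOf(':');
            if (idx <= 0 || idx == trimmed.length() - 1) {
                throw new IllegalArgumentException("Illegal address: " + addr);
            }
            String host = trimmed.substring(0, idx);
            int port = Integer.parseInt(trimmed.substring(idx + 1));
            // createUnresolved skips the DNS lookup but still validates the port range.
            result.add(InetSocketAddress.createUnresolved(host, port));
        }
        return result;
    }
}
```

Failing fast on a malformed entry mirrors what the Broker does at startup, where an illegal address ends the process with an error message instead of being silently skipped.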

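If starting the producer fails with an error about insufficient disk space (see the screenshot below), you can raise the allowed usage ratio in broker.conf under conf.

# Report an error only when disk usage exceeds 98%

diskMaxUsedSpaceRatio=98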

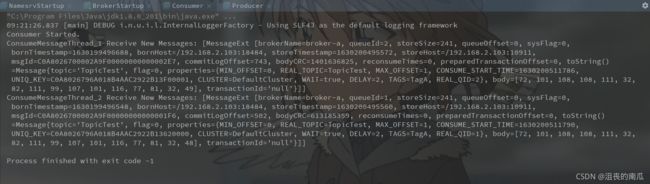
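To check how full the storage partition actually is before changing the ratio, a small sketch like the following can help. DiskUsage is a hypothetical helper based only on java.io.File. It is not the Broker's own check, which lives in the store module and may apply additional limits.

```java
import java.io.File;

// Hypothetical helper: reports how full the partition holding a directory is.
final class DiskUsage {
    // Returns used/total in the range [0, 1], or -1 when the partition size is unknown.
    static double usedRatio(File dir) {
        long total = dir.getTotalSpace();
        if (total <= 0) {
            return -1;
        }
        long free = dir.getFreeSpace();
        return (total - free) / (double) total;
    }

    // True when the used ratio, expressed as a percentage, is above the limit.
    static boolean exceeds(double usedRatio, int maxUsedPercent) {
        return usedRatio * 100 > maxUsedPercent;
    }

    public static void main(String[] args) {
        File dir = new File(args.length > 0 ? args[0] : ".");
        double ratio = usedRatio(dir);
        System.out.printf("used ratio of %s: %.2f, above 98%%: %b%n", dir, ratio, exceeds(ratio, 98));
    }
}
```

Pass the directory configured as storePathRootDir as the first argument to inspect the partition the Broker writes to.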

2.4 Consuming messages

Use org.apache.rocketmq.example.quickstart.Consumer in the same module to consume messages. It also needs the NameServer address.

consumer.setNamesrvAddr("127.0.0.1:9876");

With that, the whole debugging environment is ready.

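2.5 How to read the source

When reading source code, do not step through every line from the start, and do not expect to understand everything in one pass. You will get lost in the details and be discouraged into giving up almost immediately.

Read in layers instead. On each pass, follow the code that drives the flow of execution and skip the details of how each service is implemented, so that you form a rough conceptual framework. Then go back and apply the same method to the parts you skipped. That is how a code base starts to make sense.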

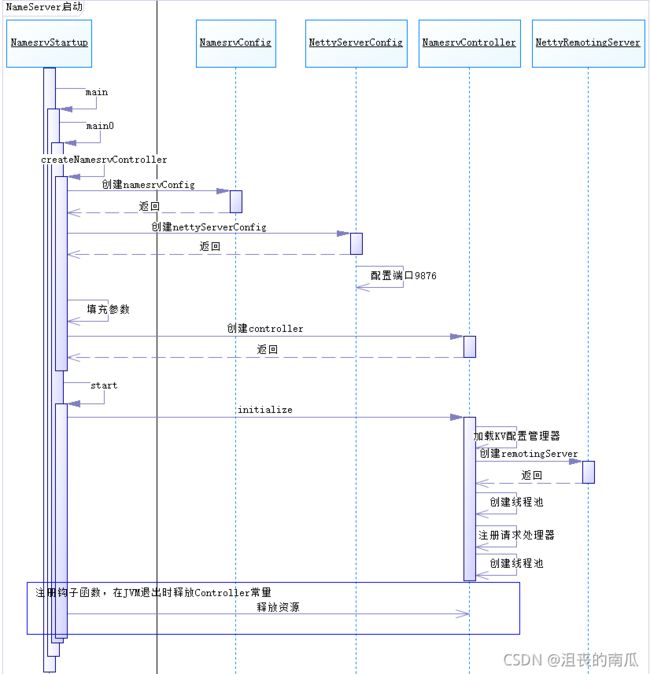

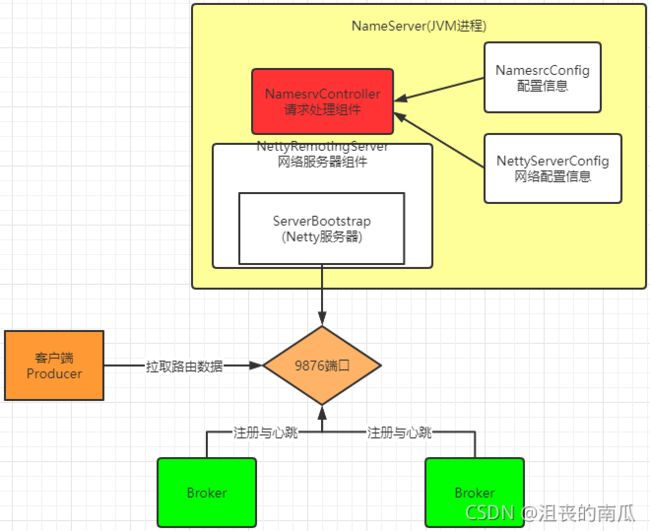

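II. NameServer Startup

The NameServer's entry point is the main method of NamesrvStartup, which we can step through in the debugger. On this pass we stay out of the details; the goal is to understand the overall architecture of the NameServer.

1 Core questions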

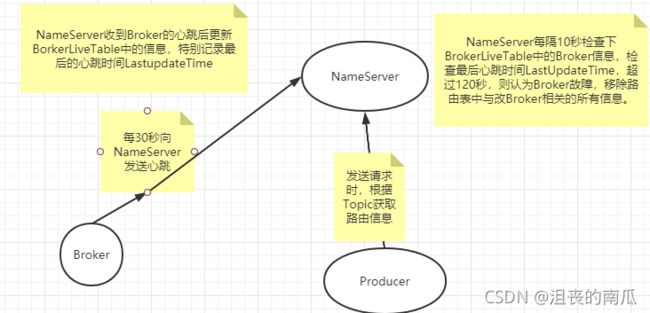

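From the earlier introduction we know that in RocketMQ the Broker does the core work of storing and delivering messages. So what does the NameServer do? It has just two core roles. First, it maintains the Brokers' service addresses and keeps them up to date. Second, it lets Producers and Consumers look up the list of Brokers.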
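The two roles can be pictured with a toy route table. This is a heavily simplified sketch under my own assumptions: TinyRouteTable and its methods are hypothetical, and the real NameServer keeps considerably richer structures (queue data, cluster membership, liveness information) than a single map.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical, heavily simplified stand-in for the NameServer's route table.
final class TinyRouteTable {
    // topic -> addresses of the brokers that serve it
    private final Map<String, Set<String>> topicToBrokers = new ConcurrentHashMap<>();

    // Role 1: a broker reports its address and the topics it hosts.
    void register(String brokerAddr, List<String> topics) {
        for (String topic : topics) {
            topicToBrokers
                .computeIfAbsent(topic, t -> ConcurrentHashMap.newKeySet())
                .add(brokerAddr);
        }
    }

    // Role 1, continued: a broker that went away is dropped from every topic.
    void unregister(String brokerAddr) {
        topicToBrokers.values().forEach(brokers -> brokers.remove(brokerAddr));
    }

    // Role 2: producers and consumers ask which brokers serve a topic.
    List<String> lookup(String topic) {
        Set<String> brokers = topicToBrokers.get(topic);
        return brokers == null ? new ArrayList<>() : new ArrayList<>(brokers);
    }
}
```

Returning a copy from lookup keeps callers from mutating the table, which matters because registration and lookup run on different threads.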

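2 Key points in the source

The core of the NameServer is a single NamesrvController object. It plays a role similar to a Controller in Java web development: it responds to client requests.

Creating the NamesrvController involves two key configuration objects: NamesrvConfig, which holds the settings the NameServer itself needs, and NettyServerConfig, which holds the Netty server parameters and whose listen port is set to 9876 in code.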

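Interestingly, 9876 is written directly into the startup code as the default. It is not truly fixed, though: the properties file passed with -c is applied to NettyServerConfig after the default is set, so a listenPort entry in that file overrides it.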
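One way to read the startup code shown later in this section is that the port is set first and the -c properties file is applied afterwards, which would let a listenPort entry replace the default. The sketch below imitates that default-then-override pattern with a small reflection-based binder. ServerConfigSketch and PropertiesBinder are hypothetical names, and the binder is only a rough analogue of what MixAll.properties2Object appears to do, not a copy of it.

```java
import java.lang.reflect.Method;
import java.util.Properties;

// Hypothetical config object; only the listenPort name mirrors the article.
final class ServerConfigSketch {
    private int listenPort;
    public int getListenPort() { return listenPort; }
    public void setListenPort(int listenPort) { this.listenPort = listenPort; }
}

// Hypothetical binder: copies matching properties onto setter methods.
final class PropertiesBinder {
    // For each public setXxx(int|String) method, apply property "xxx" if present.
    static void apply(Properties props, Object target) {
        for (Method m : target.getClass().getMethods()) {
            String name = m.getName();
            if (!name.startsWith("set") || name.length() < 4 || m.getParameterCount() != 1) {
                continue;
            }
            String key = Character.toLowerCase(name.charAt(3)) + name.substring(4);
            String value = props.getProperty(key);
            if (value == null) {
                continue; // no override supplied, keep the current value
            }
            Class<?> type = m.getParameterTypes()[0];
            try {
                if (type == int.class) {
                    m.invoke(target, Integer.parseInt(value));
                } else if (type == String.class) {
                    m.invoke(target, value);
                }
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("cannot apply property " + key, e);
            }
        }
    }
}
```

With this pattern the order of operations decides the outcome: setting 9876 and then applying a file that contains listenPort=9877 leaves the port at 9877, while an empty file leaves the default untouched.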

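Then, on startup, a RemotingServer is started. This is what responds to requests.

On shutdown, four things are closed: remotingServer, the service that responds to requests; remotingExecutor, the Netty service thread pool; scheduledExecutorService, the scheduled tasks; and fileWatchService, which, judging from the initialize method shown below, watches the TLS certificate files so that the SSL context can be reloaded when they change.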

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.rocketmq.namesrv;

import ch.qos.logback.classic.LoggerContext;

import ch.qos.logback.classic.joran.JoranConfigurator;

import ch.qos.logback.core.joran.spi.JoranException;

import java.io.BufferedInputStream;

import java.io.FileInputStream;

import java.io.IOException;

import java.io.InputStream;

import java.util.Properties;

import java.util.concurrent.Callable;

import org.apache.commons.cli.CommandLine;

import org.apache.commons.cli.Option;

import org.apache.commons.cli.Options;

import org.apache.commons.cli.PosixParser;

import org.apache.rocketmq.common.MQVersion;

import org.apache.rocketmq.common.MixAll;

import org.apache.rocketmq.common.constant.LoggerName;

import org.apache.rocketmq.logging.InternalLogger;

import org.apache.rocketmq.logging.InternalLoggerFactory;

import org.apache.rocketmq.common.namesrv.NamesrvConfig;

import org.apache.rocketmq.remoting.netty.NettyServerConfig;

import org.apache.rocketmq.remoting.protocol.RemotingCommand;

import org.apache.rocketmq.srvutil.ServerUtil;

import org.apache.rocketmq.srvutil.ShutdownHookThread;

import org.slf4j.LoggerFactory;

//Startup class of the NameServer

public class NamesrvStartup {

private static InternalLogger log;

private static Properties properties = null;

private static CommandLine commandLine = null;

//K2 When reading the startup method, also take the JVM options in the startup script into account.

public static void main(String[] args) {

main0(args);

}

public static NamesrvController main0(String[] args) {

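//K2 The core component of NameServer startup: NamesrvController

//Like a Controller in a web application, this component receives network requests. Which requests does the NameServer actually have to handle?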

try {

NamesrvController controller = createNamesrvController(args);

start(controller);

String tip = "The Name Server boot success. serializeType=" + RemotingCommand.getSerializeTypeConfigInThisServer();

log.info(tip);

System.out.printf("%s%n", tip);

return controller;

} catch (Throwable e) {

e.printStackTrace();

System.exit(-1);

}

return null;

}

public static NamesrvController createNamesrvController(String[] args) throws IOException, JoranException {

System.setProperty(RemotingCommand.REMOTING_VERSION_KEY, Integer.toString(MQVersion.CURRENT_VERSION));

//PackageConflictDetect.detectFastjson();

//K2 Parse the command-line arguments. Skimmed here.

Options options = ServerUtil.buildCommandlineOptions(new Options());

commandLine = ServerUtil.parseCmdLine("mqnamesrv", args, buildCommandlineOptions(options), new PosixParser());

if (null == commandLine) {

System.exit(-1);

return null;

}

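//K1 The NameServer's two core configuration objects

//NamesrvConfig holds the parameters the NameServer itself runs with

//NettyServerConfig holds the Netty server parameters. The Netty listen port defaults to 9876; see the test cases for how to change it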

final NamesrvConfig namesrvConfig = new NamesrvConfig();

final NettyServerConfig nettyServerConfig = new NettyServerConfig();

nettyServerConfig.setListenPort(9876);

//K2 Populate the configuration objects from the -c properties file

if (commandLine.hasOption('c')) {

String file = commandLine.getOptionValue('c');

if (file != null) {

InputStream in = new BufferedInputStream(new FileInputStream(file));

properties = new Properties();

properties.load(in);

MixAll.properties2Object(properties, namesrvConfig);

MixAll.properties2Object(properties, nettyServerConfig);

namesrvConfig.setConfigStorePath(file);

System.out.printf("load config properties file OK, %s%n", file);

in.close();

}

}

if (commandLine.hasOption('p')) {

InternalLogger console = InternalLoggerFactory.getLogger(LoggerName.NAMESRV_CONSOLE_NAME);

MixAll.printObjectProperties(console, namesrvConfig);

MixAll.printObjectProperties(console, nettyServerConfig);

System.exit(0);

}

MixAll.properties2Object(ServerUtil.commandLine2Properties(commandLine), namesrvConfig);

//Check that ROCKETMQ_HOME is set

if (null == namesrvConfig.getRocketmqHome()) {

System.out.printf("Please set the %s variable in your environment to match the location of the RocketMQ installation%n", MixAll.ROCKETMQ_HOME_ENV);

System.exit(-2);

}

//Logging configuration

LoggerContext lc = (LoggerContext) LoggerFactory.getILoggerFactory();

JoranConfigurator configurator = new JoranConfigurator();

configurator.setContext(lc);

lc.reset();

configurator.doConfigure(namesrvConfig.getRocketmqHome() + "/conf/logback_namesrv.xml");

//Finally, log all configuration values.

log = InternalLoggerFactory.getLogger(LoggerName.NAMESRV_LOGGER_NAME);

MixAll.printObjectProperties(log, namesrvConfig);

MixAll.printObjectProperties(log, nettyServerConfig);

final NamesrvController controller = new NamesrvController(namesrvConfig, nettyServerConfig);

// remember all configs to prevent discard

controller.getConfiguration().registerConfig(properties);

return controller;

}

public static NamesrvController start(final NamesrvController controller) throws Exception {

if (null == controller) {

throw new IllegalArgumentException("NamesrvController is null");

}

//Initialize; this mainly sets up a few scheduled tasks

boolean initResult = controller.initialize();

if (!initResult) {

controller.shutdown();

System.exit(-3);

}

Runtime.getRuntime().addShutdownHook(new ShutdownHookThread(log, new Callable<Void>() {

@Override

public Void call() throws Exception {

controller.shutdown();

return null;

}

}));

controller.start();

return controller;

}

public static void shutdown(final NamesrvController controller) {

controller.shutdown();

}

public static Options buildCommandlineOptions(final Options options) {

Option opt = new Option("c", "configFile", true, "Name server config properties file");

opt.setRequired(false);

options.addOption(opt);

opt = new Option("p", "printConfigItem", false, "Print all config item");

opt.setRequired(false);

options.addOption(opt);

return options;

}

public static Properties getProperties() {

return properties;

}

}

//K1 NamesrvController initialization

public boolean initialize() {

//Load the KV configuration

this.kvConfigManager.load();

//Create the NettyServer network handler

this.remotingServer = new NettyRemotingServer(this.nettyServerConfig, this.brokerHousekeepingService);

//Worker thread pool of the Netty server

this.remotingExecutor =

Executors.newFixedThreadPool(nettyServerConfig.getServerWorkerThreads(), new ThreadFactoryImpl("RemotingExecutorThread_"));

//Register the processor, handing remotingExecutor to remotingServer

this.registerProcessor();

//Scheduled task: scan Brokers every 10s and remove inactive ones

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

NamesrvController.this.routeInfoManager.scanNotActiveBroker();

}

}, 5, 10, TimeUnit.SECONDS);

//Scheduled task: print the KV configuration every 10 minutes

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

NamesrvController.this.kvConfigManager.printAllPeriodically();

}

}, 1, 10, TimeUnit.MINUTES);

if (TlsSystemConfig.tlsMode != TlsMode.DISABLED) {

// Register a listener to reload SslContext

try {

fileWatchService = new FileWatchService(

new String[] {

TlsSystemConfig.tlsServerCertPath,

TlsSystemConfig.tlsServerKeyPath,

TlsSystemConfig.tlsServerTrustCertPath

},

new FileWatchService.Listener() {

boolean certChanged, keyChanged = false;

@Override

public void onChanged(String path) {

if (path.equals(TlsSystemConfig.tlsServerTrustCertPath)) {

log.info("The trust certificate changed, reload the ssl context");

reloadServerSslContext();

}

if (path.equals(TlsSystemConfig.tlsServerCertPath)) {

certChanged = true;

}

if (path.equals(TlsSystemConfig.tlsServerKeyPath)) {

keyChanged = true;

}

if (certChanged && keyChanged) {

log.info("The certificate and private key changed, reload the ssl context");

certChanged = keyChanged = false;

reloadServerSslContext();

}

}

private void reloadServerSslContext() {

((NettyRemotingServer) remotingServer).loadSslContext();

}

});

} catch (Exception e) {

log.warn("FileWatchService created error, can't load the certificate dynamically");

}

}

return true;

}

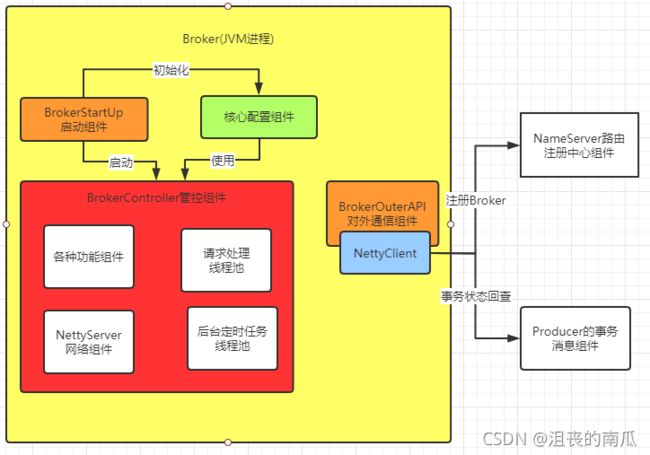

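III. Broker Startup

The Broker's entry point is the BrokerStartup class; start debugging from its main method.

Key points of the startup process:

Again everything revolves around one object, BrokerController, which is first created and then started.

BrokerStartup.createBrokerController shows the Broker's core configuration objects: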

- BrokerConfig
- NettyServerConfig: the Netty server listens on port 10911, another magic number set in code.
- NettyClientConfig
- MessageStoreConfig
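Two more ports are derived from the listen port in the code shown later in this section: the second ("fast") remoting server uses listenPort - 2, and the HA port for master-slave replication is listenPort + 1. The sketch below only restates that arithmetic; BrokerPorts is a hypothetical class, not part of RocketMQ.

```java
// Hypothetical value class that restates the port arithmetic from the startup code.
final class BrokerPorts {
    final int listenPort;     // main remoting server
    final int fastListenPort; // second ("fast") remoting server: listenPort - 2
    final int haListenPort;   // master-slave replication: listenPort + 1

    BrokerPorts(int listenPort) {
        this.listenPort = listenPort;
        this.fastListenPort = listenPort - 2;
        this.haListenPort = listenPort + 1;
    }

    @Override
    public String toString() {
        return "listen=" + listenPort + ", fast=" + fastListenPort + ", ha=" + haListenPort;
    }

    public static void main(String[] args) {
        System.out.println(new BrokerPorts(10911));
    }
}
```

This is worth keeping in mind when running several Brokers on one machine or opening firewall rules: changing listenPort moves all three ports together.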

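BrokerController.start then starts a large number of core Broker services. We pick out a few important ones.

this.messageStore.start(); starts the core message storage component

this.remotingServer.start();

this.fastRemotingServer.start(); starts the two Netty servers

this.brokerOuterAPI.start(); starts the client used to send outbound requests

BrokerController.this.registerBrokerAll: registers with the NameServer and sends heartbeats.

this.brokerStatsManager.start();

this.brokerFastFailure.start(); these are further components responsible for specific functions

We do not need to understand what each of these components does yet. A rough picture is enough: the Broker contains many components, each responsible for a specific piece of work.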


package org.apache.rocketmq.broker;

import ch.qos.logback.classic.LoggerContext;

import ch.qos.logback.classic.joran.JoranConfigurator;

import org.apache.commons.cli.CommandLine;

import org.apache.commons.cli.Option;

import org.apache.commons.cli.Options;

import org.apache.commons.cli.PosixParser;

import org.apache.rocketmq.common.BrokerConfig;

import org.apache.rocketmq.common.MQVersion;

import org.apache.rocketmq.common.MixAll;

import org.apache.rocketmq.common.constant.LoggerName;

import org.apache.rocketmq.logging.InternalLogger;

import org.apache.rocketmq.logging.InternalLoggerFactory;

import org.apache.rocketmq.remoting.common.RemotingUtil;

import org.apache.rocketmq.remoting.common.TlsMode;

import org.apache.rocketmq.remoting.netty.NettyClientConfig;

import org.apache.rocketmq.remoting.netty.NettyServerConfig;

import org.apache.rocketmq.remoting.netty.NettySystemConfig;

import org.apache.rocketmq.remoting.netty.TlsSystemConfig;

import org.apache.rocketmq.remoting.protocol.RemotingCommand;

import org.apache.rocketmq.srvutil.ServerUtil;

import org.apache.rocketmq.store.config.BrokerRole;

import org.apache.rocketmq.store.config.MessageStoreConfig;

import org.slf4j.LoggerFactory;

import java.io.BufferedInputStream;

import java.io.FileInputStream;

import java.io.InputStream;

import java.util.Properties;

import java.util.concurrent.atomic.AtomicInteger;

import static org.apache.rocketmq.remoting.netty.TlsSystemConfig.TLS_ENABLE;

public class BrokerStartup {

public static Properties properties = null;

public static CommandLine commandLine = null;

public static String configFile = null;

public static InternalLogger log;

public static void main(String[] args) {

start(createBrokerController(args));

}

public static BrokerController start(BrokerController controller) {

try {

//K1 Start the controller

controller.start();

String tip = "The broker[" + controller.getBrokerConfig().getBrokerName() + ", "

+ controller.getBrokerAddr() + "] boot success. serializeType=" + RemotingCommand.getSerializeTypeConfigInThisServer();

if (null != controller.getBrokerConfig().getNamesrvAddr()) {

tip += " and name server is " + controller.getBrokerConfig().getNamesrvAddr();

}

log.info(tip);

System.out.printf("%s%n", tip);

return controller;

} catch (Throwable e) {

e.printStackTrace();

System.exit(-1);

}

return null;

}

public static void shutdown(final BrokerController controller) {

if (null != controller) {

controller.shutdown();

}

}

//K1 Create the Broker's core configuration

public static BrokerController createBrokerController(String[] args) {

System.setProperty(RemotingCommand.REMOTING_VERSION_KEY, Integer.toString(MQVersion.CURRENT_VERSION));

if (null == System.getProperty(NettySystemConfig.COM_ROCKETMQ_REMOTING_SOCKET_SNDBUF_SIZE)) {

NettySystemConfig.socketSndbufSize = 131072;

}

if (null == System.getProperty(NettySystemConfig.COM_ROCKETMQ_REMOTING_SOCKET_RCVBUF_SIZE)) {

NettySystemConfig.socketRcvbufSize = 131072;

}

try {

//PackageConflictDetect.detectFastjson();

Options options = ServerUtil.buildCommandlineOptions(new Options());

commandLine = ServerUtil.parseCmdLine("mqbroker", args, buildCommandlineOptions(options),

new PosixParser());

if (null == commandLine) {

System.exit(-1);

}

//K1 The Broker's core configuration objects

final BrokerConfig brokerConfig = new BrokerConfig();

final NettyServerConfig nettyServerConfig = new NettyServerConfig();

final NettyClientConfig nettyClientConfig = new NettyClientConfig();

//TLS-related settings

nettyClientConfig.setUseTLS(Boolean.parseBoolean(System.getProperty(TLS_ENABLE,

String.valueOf(TlsSystemConfig.tlsMode == TlsMode.ENFORCING))));

//Listen port of the Netty server: 10911

nettyServerConfig.setListenPort(10911);

//K2 Configuration the Broker uses for storing messages.

final MessageStoreConfig messageStoreConfig = new MessageStoreConfig();

//For a SLAVE, one parameter is adjusted. See the official documentation for what it does.

if (BrokerRole.SLAVE == messageStoreConfig.getBrokerRole()) {

int ratio = messageStoreConfig.getAccessMessageInMemoryMaxRatio() - 10;

messageStoreConfig.setAccessMessageInMemoryMaxRatio(ratio);

}

//This part looks familiar: handle the command-line arguments; everything is printed at the end.

if (commandLine.hasOption('c')) {

String file = commandLine.getOptionValue('c');

if (file != null) {

configFile = file;

InputStream in = new BufferedInputStream(new FileInputStream(file));

properties = new Properties();

properties.load(in);

properties2SystemEnv(properties);

MixAll.properties2Object(properties, brokerConfig);

MixAll.properties2Object(properties, nettyServerConfig);

MixAll.properties2Object(properties, nettyClientConfig);

MixAll.properties2Object(properties, messageStoreConfig);

BrokerPathConfigHelper.setBrokerConfigPath(file);

in.close();

}

}

//Populate brokerConfig

MixAll.properties2Object(ServerUtil.commandLine2Properties(commandLine), brokerConfig);

if (null == brokerConfig.getRocketmqHome()) {

System.out.printf("Please set the %s variable in your environment to match the location of the RocketMQ installation", MixAll.ROCKETMQ_HOME_ENV);

System.exit(-2);

}

String namesrvAddr = brokerConfig.getNamesrvAddr();

if (null != namesrvAddr) {

try {

String[] addrArray = namesrvAddr.split(";");

for (String addr : addrArray) {

RemotingUtil.string2SocketAddress(addr);

}

} catch (Exception e) {

System.out.printf(

"The Name Server Address[%s] illegal, please set it as follows, \"127.0.0.1:9876;192.168.0.1:9876\"%n",

namesrvAddr);

System.exit(-3);

}

}

//Determine the cluster role. brokerId distinguishes master from slave

switch (messageStoreConfig.getBrokerRole()) {

case ASYNC_MASTER:

case SYNC_MASTER:

brokerConfig.setBrokerId(MixAll.MASTER_ID);

break;

case SLAVE:

if (brokerConfig.getBrokerId() <= 0) {

System.out.printf("Slave's brokerId must be > 0");

System.exit(-3);

}

break;

default:

break;

}

//K2 When DLedger manages master-slave replication and the CommitLog (enableDLegerCommitLog is true), brokerId is set to -1

if (messageStoreConfig.isEnableDLegerCommitLog()) {

brokerConfig.setBrokerId(-1);

}

messageStoreConfig.setHaListenPort(nettyServerConfig.getListenPort() + 1);

//Logging code; can be looked at later

LoggerContext lc = (LoggerContext) LoggerFactory.getILoggerFactory();

JoranConfigurator configurator = new JoranConfigurator();

configurator.setContext(lc);

lc.reset();

//Note that the path of the logging configuration file is hard-coded here as well.

configurator.doConfigure(brokerConfig.getRocketmqHome() + "/conf/logback_broker.xml");

// Both -p and -m only print the configuration and exit

if (commandLine.hasOption('p')) {

InternalLogger console = InternalLoggerFactory.getLogger(LoggerName.BROKER_CONSOLE_NAME);

MixAll.printObjectProperties(console, brokerConfig);

MixAll.printObjectProperties(console, nettyServerConfig);

MixAll.printObjectProperties(console, nettyClientConfig);

MixAll.printObjectProperties(console, messageStoreConfig);

System.exit(0);

} else if (commandLine.hasOption('m')) {

InternalLogger console = InternalLoggerFactory.getLogger(LoggerName.BROKER_CONSOLE_NAME);

MixAll.printObjectProperties(console, brokerConfig, true);

MixAll.printObjectProperties(console, nettyServerConfig, true);

MixAll.printObjectProperties(console, nettyClientConfig, true);

MixAll.printObjectProperties(console, messageStoreConfig, true);

System.exit(0);

}

//Log the parameters again

log = InternalLoggerFactory.getLogger(LoggerName.BROKER_LOGGER_NAME);

MixAll.printObjectProperties(log, brokerConfig);

MixAll.printObjectProperties(log, nettyServerConfig);

MixAll.printObjectProperties(log, nettyClientConfig);

MixAll.printObjectProperties(log, messageStoreConfig);

//Create the controller

final BrokerController controller = new BrokerController(

brokerConfig,

nettyServerConfig,

nettyClientConfig,

messageStoreConfig);

// remember all configs to prevent discard

controller.getConfiguration().registerConfig(properties);

//Initialize. Use this to get a clear picture of the Broker's component structure

boolean initResult = controller.initialize();

if (!initResult) {

controller.shutdown();

System.exit(-3);

}

//Graceful shutdown: release resources

Runtime.getRuntime().addShutdownHook(new Thread(new Runnable() {

private volatile boolean hasShutdown = false;

private AtomicInteger shutdownTimes = new AtomicInteger(0);

@Override

public void run() {

synchronized (this) {

log.info("Shutdown hook was invoked, {}", this.shutdownTimes.incrementAndGet());

if (!this.hasShutdown) {

this.hasShutdown = true;

long beginTime = System.currentTimeMillis();

controller.shutdown();

long consumingTimeTotal = System.currentTimeMillis() - beginTime;

log.info("Shutdown hook over, consuming total time(ms): {}", consumingTimeTotal);

}

}

}

}, "ShutdownHook"));

return controller;

} catch (Throwable e) {

e.printStackTrace();

System.exit(-1);

}

return null;

}

private static void properties2SystemEnv(Properties properties) {

if (properties == null) {

return;

}

String rmqAddressServerDomain = properties.getProperty("rmqAddressServerDomain", MixAll.WS_DOMAIN_NAME);

String rmqAddressServerSubGroup = properties.getProperty("rmqAddressServerSubGroup", MixAll.WS_DOMAIN_SUBGROUP);

System.setProperty("rocketmq.namesrv.domain", rmqAddressServerDomain);

System.setProperty("rocketmq.namesrv.domain.subgroup", rmqAddressServerSubGroup);

}

private static Options buildCommandlineOptions(final Options options) {

Option opt = new Option("c", "configFile", true, "Broker config properties file");

opt.setRequired(false);

options.addOption(opt);

opt = new Option("p", "printConfigItem", false, "Print all config item");

opt.setRequired(false);

options.addOption(opt);

opt = new Option("m", "printImportantConfig", false, "Print important config item");

opt.setRequired(false);

options.addOption(opt);

return options;

}

}

The initialize method:

//K1 Broker initialization

public boolean initialize() throws CloneNotSupportedException {

//Load the configuration persisted on disk. This is where MessageStoreConfig comes into play

boolean result = this.topicConfigManager.load();

result = result && this.consumerOffsetManager.load();

result = result && this.subscriptionGroupManager.load();

result = result && this.consumerFilterManager.load();

//What happens once the configuration has loaded successfully?

if (result) {

try {

//Message store component, which manages the messages on disk.

this.messageStore =

new DefaultMessageStore(this.messageStoreConfig, this.brokerStatsManager, this.messageArrivingListener,

this.brokerConfig);

//If DLedger is enabled, initialize the DLedger-related components

if (messageStoreConfig.isEnableDLegerCommitLog()) {

DLedgerRoleChangeHandler roleChangeHandler = new DLedgerRoleChangeHandler(this, (DefaultMessageStore) messageStore);

((DLedgerCommitLog)((DefaultMessageStore) messageStore).getCommitLog()).getdLedgerServer().getdLedgerLeaderElector().addRoleChangeHandler(roleChangeHandler);

}

//Broker statistics component

this.brokerStats = new BrokerStats((DefaultMessageStore) this.messageStore);

//load plugin

//Wraps the message store with any configured store plugins

MessageStorePluginContext context = new MessageStorePluginContext(messageStoreConfig, brokerStatsManager, messageArrivingListener, brokerConfig);

this.messageStore = MessageStoreFactory.build(context, this.messageStore);

this.messageStore.getDispatcherList().addFirst(new CommitLogDispatcherCalcBitMap(this.brokerConfig, this.consumerFilterManager));

} catch (IOException e) {

result = false;

log.error("Failed to initialize", e);

}

}

result = result && this.messageStore.load();

//K2 The Broker's Netty components. Note that the Broker is both a server (receiving requests from Producers and Consumers) and a client (sending requests to the NameServer and to Producers).

if (result) {

//Netty network components

this.remotingServer = new NettyRemotingServer(this.nettyServerConfig, this.clientHousekeepingService);

NettyServerConfig fastConfig = (NettyServerConfig) this.nettyServerConfig.clone();

fastConfig.setListenPort(nettyServerConfig.getListenPort() - 2);

this.fastRemotingServer = new NettyRemotingServer(fastConfig, this.clientHousekeepingService);

//Thread pool for handling send-message requests

this.sendMessageExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getSendMessageThreadPoolNums(),

this.brokerConfig.getSendMessageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.sendThreadPoolQueue,

new ThreadFactoryImpl("SendMessageThread_"));

//Thread pool for handling consumers' pull requests

this.pullMessageExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getPullMessageThreadPoolNums(),

this.brokerConfig.getPullMessageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.pullThreadPoolQueue,

new ThreadFactoryImpl("PullMessageThread_"));

//Thread pool for reply messages

this.replyMessageExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getProcessReplyMessageThreadPoolNums(),

this.brokerConfig.getProcessReplyMessageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.replyThreadPoolQueue,

new ThreadFactoryImpl("ProcessReplyMessageThread_"));

//Thread pool for message queries.

this.queryMessageExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getQueryMessageThreadPoolNums(),

this.brokerConfig.getQueryMessageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.queryThreadPoolQueue,

new ThreadFactoryImpl("QueryMessageThread_"));

//Thread pool for executing Broker admin commands

this.adminBrokerExecutor =

Executors.newFixedThreadPool(this.brokerConfig.getAdminBrokerThreadPoolNums(), new ThreadFactoryImpl(

"AdminBrokerThread_"));

//Thread pool for client management

this.clientManageExecutor = new ThreadPoolExecutor(

this.brokerConfig.getClientManageThreadPoolNums(),

this.brokerConfig.getClientManageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.clientManagerThreadPoolQueue,

new ThreadFactoryImpl("ClientManageThread_"));

//Thread pool for heartbeat requests

this.heartbeatExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getHeartbeatThreadPoolNums(),

this.brokerConfig.getHeartbeatThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.heartbeatThreadPoolQueue,

new ThreadFactoryImpl("HeartbeatThread_", true));

//Thread pool for ending transactions; clearly related to transactional messages.

this.endTransactionExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getEndTransactionThreadPoolNums(),

this.brokerConfig.getEndTransactionThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.endTransactionThreadPoolQueue,

new ThreadFactoryImpl("EndTransactionThread_"));

//Thread pool for consumer management

this.consumerManageExecutor =

Executors.newFixedThreadPool(this.brokerConfig.getConsumerManageThreadPoolNums(), new ThreadFactoryImpl(

"ConsumerManageThread_"));

//Register the processors, which use the thread pools created above.

this.registerProcessor();

//More scheduled background tasks

final long initialDelay = UtilAll.computeNextMorningTimeMillis() - System.currentTimeMillis();

final long period = 1000 * 60 * 60 * 24;

//Scheduled task: record Broker statistics

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.getBrokerStats().record();

} catch (Throwable e) {

log.error("schedule record error.", e);

}

}

}, initialDelay, period, TimeUnit.MILLISECONDS);

//Scheduled task: persist consumer offsets to disk

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.consumerOffsetManager.persist();

} catch (Throwable e) {

log.error("schedule persist consumerOffset error.", e);

}

}

}, 1000 * 10, this.brokerConfig.getFlushConsumerOffsetInterval(), TimeUnit.MILLISECONDS);

//Scheduled task: persist consumer filters. This shows that consumer filters are pushed down to the Broker for execution.

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.consumerFilterManager.persist();

} catch (Throwable e) {

log.error("schedule persist consumer filter error.", e);

}

}

}, 1000 * 10, 1000 * 10, TimeUnit.MILLISECONDS);

//Scheduled task: protect the Broker

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.protectBroker();

} catch (Throwable e) {

log.error("protectBroker error.", e);

}

}

}, 3, 3, TimeUnit.MINUTES);

//Scheduled task: print the watermark

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.printWaterMark();

} catch (Throwable e) {

log.error("printWaterMark error.", e);

}

}

}, 10, 1, TimeUnit.SECONDS);

//Scheduled task: log how many bytes dispatch lags behind the commit log

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

log.info("dispatch behind commit log {} bytes", BrokerController.this.getMessageStore().dispatchBehindBytes());

} catch (Throwable e) {

log.error("schedule dispatchBehindBytes error.", e);

}

}

}, 1000 * 10, 1000 * 60, TimeUnit.MILLISECONDS);

//Set the NameServer address list. It can come from configuration or be fetched remotely.

if (this.brokerConfig.getNamesrvAddr() != null) {

this.brokerOuterAPI.updateNameServerAddressList(this.brokerConfig.getNamesrvAddr());

log.info("Set user specified name server address: {}", this.brokerConfig.getNamesrvAddr());

} else if (this.brokerConfig.isFetchNamesrvAddrByAddressServer()) {

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.brokerOuterAPI.fetchNameServerAddr();

} catch (Throwable e) {

log.error("ScheduledTask fetchNameServerAddr exception", e);

}

}

}, 1000 * 10, 1000 * 60 * 2, TimeUnit.MILLISECONDS);

}

//Master-slave handling when DLedger is not enabled

if (!messageStoreConfig.isEnableDLegerCommitLog()) {

if (BrokerRole.SLAVE == this.messageStoreConfig.getBrokerRole()) {

if (this.messageStoreConfig.getHaMasterAddress() != null && this.messageStoreConfig.getHaMasterAddress().length() >= 6) {

this.messageStore.updateHaMasterAddress(this.messageStoreConfig.getHaMasterAddress());

this.updateMasterHAServerAddrPeriodically = false;

} else {

this.updateMasterHAServerAddrPeriodically = true;

}

} else {

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.printMasterAndSlaveDiff();

} catch (Throwable e) {

log.error("schedule printMasterAndSlaveDiff error.", e);

}

}

}, 1000 * 10, 1000 * 60, TimeUnit.MILLISECONDS);

}

}

//Code for the security (TLS) files; ignore it for now and follow the main line first.

if (TlsSystemConfig.tlsMode != TlsMode.DISABLED) {

// Register a listener to reload SslContext

try {

fileWatchService = new FileWatchService(

new String[] {

TlsSystemConfig.tlsServerCertPath,

TlsSystemConfig.tlsServerKeyPath,

TlsSystemConfig.tlsServerTrustCertPath

},

new FileWatchService.Listener() {

boolean certChanged, keyChanged = false;

@Override

public void onChanged(String path) {

if (path.equals(TlsSystemConfig.tlsServerTrustCertPath)) {

log.info("The trust certificate changed, reload the ssl context");

reloadServerSslContext();

}

if (path.equals(TlsSystemConfig.tlsServerCertPath)) {

certChanged = true;

}

if (path.equals(TlsSystemConfig.tlsServerKeyPath)) {

keyChanged = true;

}

if (certChanged && keyChanged) {

log.info("The certificate and private key changed, reload the ssl context");

certChanged = keyChanged = false;

reloadServerSslContext();

}

}

private void reloadServerSslContext() {

((NettyRemotingServer) remotingServer).loadSslContext();

((NettyRemotingServer) fastRemotingServer).loadSslContext();

}

});

} catch (Exception e) {

log.warn("FileWatchService created error, can't load the certificate dynamically");

}

}

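// These three lines are clearly initialization work; do not focus on them for now.

// After the main flow is clear, come back to them: the services are loaded through the SPI mechanism, and SPI usually means an extension point.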

initialTransaction();

initialAcl();

initialRpcHooks();

}

return result;

}

IV. Broker Registration

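BrokerController.registerBrokerAll is the entry point through which the Broker registers with the NameServer; each registration also serves as a heartbeat. The Broker registers once immediately at startup, and it also schedules a periodic task that, after a 10-second initial delay, keeps sending heartbeats to the NameServer at a default interval of 30 seconds.

On the other side, the NameServer starts its own scheduled task that scans for inactive Brokers. See the NamesrvController.initialize method for details.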
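The NameServer side of this heartbeat scheme can be pictured with a small liveness table. The following is only a sketch and not the NameServer implementation: the class name, the method names and the 120-second expiry window are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Illustrative only: a tiny liveness table in the spirit of the NameServer scan. */
final class BrokerLivenessSketch {
    // Hypothetical expiry window; check the NameServer source for the real value.
    static final long EXPIRE_MILLIS = 120_000L;

    // brokerAddr -> timestamp of the last heartbeat received
    private final Map<String, Long> lastHeartbeat = new ConcurrentHashMap<>();

    /** Called whenever a broker registers; each registration doubles as a heartbeat. */
    void onRegister(String brokerAddr, long nowMillis) {
        lastHeartbeat.put(brokerAddr, nowMillis);
    }

    /** Removes brokers whose last heartbeat is older than the expiry window. */
    List<String> scanNotActive(long nowMillis) {
        List<String> removed = new ArrayList<>();
        lastHeartbeat.forEach((addr, ts) -> {
            // remove(key, value) only succeeds if no newer heartbeat arrived meanwhile
            if (nowMillis - ts > EXPIRE_MILLIS && lastHeartbeat.remove(addr, ts)) {
                removed.add(addr);
            }
        });
        return removed;
    }

    boolean isAlive(String brokerAddr) {
        return lastHeartbeat.containsKey(brokerAddr);
    }
}
```

A scheduled task would call scanNotActive with the current time at a fixed interval, which is why a Broker that stops sending heartbeats eventually disappears from the routing data.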

The registerBrokerAll method:

public synchronized void registerBrokerAll(final boolean checkOrderConfig, boolean oneway, boolean forceRegister) {

// Topic configuration handling

TopicConfigSerializeWrapper topicConfigWrapper = this.getTopicConfigManager().buildTopicConfigSerializeWrapper();

if (!PermName.isWriteable(this.getBrokerConfig().getBrokerPermission())

|| !PermName.isReadable(this.getBrokerConfig().getBrokerPermission())) {

ConcurrentHashMap<String, TopicConfig> topicConfigTable = new ConcurrentHashMap<String, TopicConfig>();

for (TopicConfig topicConfig : topicConfigWrapper.getTopicConfigTable().values()) {

TopicConfig tmp =

new TopicConfig(topicConfig.getTopicName(), topicConfig.getReadQueueNums(), topicConfig.getWriteQueueNums(),

this.brokerConfig.getBrokerPermission());

topicConfigTable.put(topicConfig.getTopicName(), tmp);

}

topicConfigWrapper.setTopicConfigTable(topicConfigTable);

}

// This is the key part: first decide whether registration is needed, then call doRegisterBrokerAll to actually register.

if (forceRegister || needRegister(this.brokerConfig.getBrokerClusterName(),

this.getBrokerAddr(),

this.brokerConfig.getBrokerName(),

this.brokerConfig.getBrokerId(),

this.brokerConfig.getRegisterBrokerTimeoutMills())) {

doRegisterBrokerAll(checkOrderConfig, oneway, topicConfigWrapper);

}

}

//K2 The core of Broker registration

private void doRegisterBrokerAll(boolean checkOrderConfig, boolean oneway,

TopicConfigSerializeWrapper topicConfigWrapper) {

// Why is a List returned? Because the Broker registers with every NameServer.

List<RegisterBrokerResult> registerBrokerResultList = this.brokerOuterAPI.registerBrokerAll(

this.brokerConfig.getBrokerClusterName(),

this.getBrokerAddr(),

this.brokerConfig.getBrokerName(),

this.brokerConfig.getBrokerId(),

this.getHAServerAddr(),

topicConfigWrapper,

this.filterServerManager.buildNewFilterServerList(),

oneway,

this.brokerConfig.getRegisterBrokerTimeoutMills(),

this.brokerConfig.isCompressedRegister());

// If at least one registration result came back, process it

if (registerBrokerResultList.size() > 0) {

RegisterBrokerResult registerBrokerResult = registerBrokerResultList.get(0);

if (registerBrokerResult != null) {

// HA address of the master node

if (this.updateMasterHAServerAddrPeriodically && registerBrokerResult.getHaServerAddr() != null) {

this.messageStore.updateHaMasterAddress(registerBrokerResult.getHaServerAddr());

}

// Master address that the slave synchronizes from

this.slaveSynchronize.setMasterAddr(registerBrokerResult.getMasterAddr());

if (checkOrderConfig) {

this.getTopicConfigManager().updateOrderTopicConfig(registerBrokerResult.getKvTable());

}

}

}

}

The registerBrokerAll method of brokerOuterAPI:

public List<RegisterBrokerResult> registerBrokerAll(

final String clusterName,

final String brokerAddr,

final String brokerName,

final long brokerId,

final String haServerAddr,

final TopicConfigSerializeWrapper topicConfigWrapper,

final List<String> filterServerList,

final boolean oneway,

final int timeoutMills,

final boolean compressed) {

// Initialize a list that collects the result of registering with each NameServer.

final List<RegisterBrokerResult> registerBrokerResultList = Lists.newArrayList();

// The NameServer address list

List<String> nameServerAddressList = this.remotingClient.getNameServerAddressList();

if (nameServerAddressList != null && nameServerAddressList.size() > 0) {

//K2 Build the network request for Broker registration. Note which key parameters it carries.

final RegisterBrokerRequestHeader requestHeader = new RegisterBrokerRequestHeader();

requestHeader.setBrokerAddr(brokerAddr);

requestHeader.setBrokerId(brokerId);

requestHeader.setBrokerName(brokerName);

requestHeader.setClusterName(clusterName);

requestHeader.setHaServerAddr(haServerAddr);

requestHeader.setCompressed(compressed);

// Request body

RegisterBrokerBody requestBody = new RegisterBrokerBody();

requestBody.setTopicConfigSerializeWrapper(topicConfigWrapper);

requestBody.setFilterServerList(filterServerList);

final byte[] body = requestBody.encode(compressed);

final int bodyCrc32 = UtilAll.crc32(body);

requestHeader.setBodyCrc32(bodyCrc32);

// This latch makes the caller wait until registration with all NameServers has been attempted before moving on.

final CountDownLatch countDownLatch = new CountDownLatch(nameServerAddressList.size());

for (final String namesrvAddr : nameServerAddressList) {

brokerOuterExecutor.execute(new Runnable() {

@Override

public void run() {

try {

//K2 The method that actually performs the Broker registration

RegisterBrokerResult result = registerBroker(namesrvAddr,oneway, timeoutMills,requestHeader,body);

// Registration done; keep the result locally.

if (result != null) {

registerBrokerResultList.add(result);

}

log.info("register broker[{}]to name server {} OK", brokerId, namesrvAddr);

} catch (Exception e) {

log.warn("registerBroker Exception, {}", namesrvAddr, e);

} finally {

countDownLatch.countDown();

}

}

});

}

try {

// Wait here until registration with every NameServer has finished, or until timeoutMills elapses.

countDownLatch.await(timeoutMills, TimeUnit.MILLISECONDS);

} catch (InterruptedException e) {

}

}

return registerBrokerResultList;

}

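A CountDownLatch is used here so that the calling thread waits, for at most timeoutMills, until the registration with every NameServer has finished.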
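The fan-out-and-wait pattern used by registerBrokerAll can be reduced to a few lines. This is a minimal sketch under stated assumptions: registerOne is a hypothetical stand-in for the remote call, results are plain strings, and a CopyOnWriteArrayList is chosen here because several worker threads add to the list concurrently.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

final class FanOutRegisterSketch {
    /**
     * Sends one registration per NameServer in parallel and waits until all of
     * them finish or the timeout elapses. registerOne is a hypothetical stand-in
     * for the remote call; it may return null when there is no result.
     */
    static List<String> registerAll(List<String> nameServers,
                                    Function<String, String> registerOne,
                                    long timeoutMillis) {
        List<String> results = new CopyOnWriteArrayList<>();
        CountDownLatch latch = new CountDownLatch(nameServers.size());
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, nameServers.size()));
        try {
            for (String addr : nameServers) {
                pool.execute(() -> {
                    try {
                        String result = registerOne.apply(addr);
                        if (result != null) {
                            results.add(result);
                        }
                    } catch (RuntimeException e) {
                        // A failure on one NameServer must not block the others.
                    } finally {
                        latch.countDown(); // always count down, success or failure
                    }
                });
            }
            latch.await(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdown();
        }
        return results;
    }
}
```

Counting down in the finally block is the important detail: without it, a single failed NameServer would keep the caller waiting for the full timeout.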

V. Producer

1 Feature recap

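Note that there are two kinds of Producer.

- The ordinary sender, DefaultMQProducer, which only needs to build a Netty client.

- The transactional sender, TransactionMQProducer, which needs to build a Netty client and also a Netty server.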

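The overall Producer flow is fairly complex. It roughly has two steps: the start() method prepares a large amount of state, and send() sends the message. Let us extract the main line first.

First, the management of Broker routing information. The Producer has to pull the Broker list and then connect to the Brokers, but many of these core steps actually happen when a message is sent, because at startup the Producer does not yet know which Topic's Broker list to pull. So for this question the focus should be the send method rather than the start method.

The send method first obtains the routing information of the Topic. It looks in the local cache, and if nothing is cached there it requests the information from the NameServer.

The rough flow of routing-information management: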

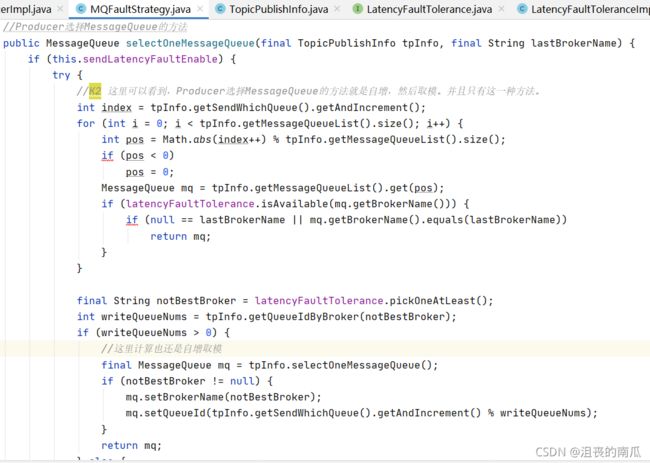
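The "local cache first, NameServer on a miss" lookup described above can be sketched as follows. This is an illustration only: the class, the nameServerLookup function and the use of plain strings for queues are assumptions, and the real client also refreshes routes periodically, which is left out here.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

final class RouteCacheSketch {
    private final Map<String, List<String>> cache = new ConcurrentHashMap<>();
    // Hypothetical stand-in for the remote call to the NameServer.
    private final Function<String, List<String>> nameServerLookup;
    int remoteLookups = 0; // counts remote calls, for demonstration only

    RouteCacheSketch(Function<String, List<String>> nameServerLookup) {
        this.nameServerLookup = nameServerLookup;
    }

    /** Returns the queues of a topic, asking the NameServer only on a cache miss. */
    List<String> findRoute(String topic) {
        List<String> cached = cache.get(topic);
        if (cached != null && !cached.isEmpty()) {
            return cached;
        }
        remoteLookups++;
        List<String> fetched = nameServerLookup.apply(topic);
        if (fetched != null && !fetched.isEmpty()) {
            cache.put(topic, fetched); // only usable routes are cached
        }
        return fetched;
    }
}
```

Because the first send for a topic pays for the remote lookup, the first message to a new Topic is typically slower than the ones that follow.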

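Next, once the routing information is available, one MessageQueue is chosen to send the message to. The selection simply increments an index and takes it modulo the number of queues.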
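A minimal sketch of "increment an index and take the modulo" is shown below, including the retry case where the Broker that just failed is skipped. The Mq class and the method names are hypothetical, the index starts at 0 only to keep the example deterministic, and the latency-based fault tolerance of the real client is not modeled.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

final class RoundRobinQueueSketch {
    /** Minimal stand-in for a message queue: broker name plus queue id. */
    static final class Mq {
        final String brokerName;
        final int queueId;
        Mq(String brokerName, int queueId) {
            this.brokerName = brokerName;
            this.queueId = queueId;
        }
    }

    private final AtomicInteger index = new AtomicInteger(0);

    /** Picks the next queue in round-robin order, avoiding lastBrokerName if possible. */
    Mq selectOne(List<Mq> queues, String lastBrokerName) {
        // Try each queue at most once, skipping the broker that just failed.
        for (int i = 0; i < queues.size(); i++) {
            Mq q = queues.get(Math.floorMod(index.getAndIncrement(), queues.size()));
            if (lastBrokerName == null || !q.brokerName.equals(lastBrokerName)) {
                return q;
            }
        }
        // Every queue lives on the failed broker: fall back to plain round-robin.
        return queues.get(Math.floorMod(index.getAndIncrement(), queues.size()));
    }
}
```

Math.floorMod is used instead of % so that the result stays non-negative even after the counter overflows.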

Finally, the message is wrapped in a Netty request and sent. After the message reaches the Broker, the CommitLog class writes it into the CommitLog file.

2 Source code highlights

The start method:

/**

* Start this producer instance.

*

* Much internal initializing procedures are carried out to make this instance prepared, thus, it's a must

* to invoke this method before sending or querying messages.

*

* @throws MQClientException if there is any unexpected error.

*/

@Override

public void start() throws MQClientException {

this.setProducerGroup(withNamespace(this.producerGroup));

this.defaultMQProducerImpl.start();

if (null != traceDispatcher) {

try {

traceDispatcher.start(this.getNamesrvAddr(), this.getAccessChannel());

} catch (MQClientException e) {

log.warn("trace dispatcher start failed ", e);

}

}

}

public void start() throws MQClientException {

this.start(true);

}

//K2 The Producer start method

public void start(final boolean startFactory) throws MQClientException {

switch (this.serviceState) {

case CREATE_JUST:

this.serviceState = ServiceState.START_FAILED;

// Check that the producer group is valid

this.checkConfig();

// Change the instanceName to the current process ID

if (!this.defaultMQProducer.getProducerGroup().equals(MixAll.CLIENT_INNER_PRODUCER_GROUP)) {

this.defaultMQProducer.changeInstanceNameToPID();

}

// Get (or create) the MQClientInstance

this.mQClientFactory = MQClientManager.getInstance().getOrCreateMQClientInstance(this.defaultMQProducer, rpcHook);

// Register this producer with the MQClientInstance so that later calls can find it

boolean registerOK = mQClientFactory.registerProducer(this.defaultMQProducer.getProducerGroup(), this);

if (!registerOK) {

this.serviceState = ServiceState.CREATE_JUST;

throw new MQClientException("The producer group[" + this.defaultMQProducer.getProducerGroup()

+ "] has been created before, specify another name please." + FAQUrl.suggestTodo(FAQUrl.GROUP_NAME_DUPLICATE_URL),

null);

}

this.topicPublishInfoTable.put(this.defaultMQProducer.getCreateTopicKey(), new TopicPublishInfo());

// Start the client instance

if (startFactory) {

mQClientFactory.start();

}

log.info("the producer [{}] start OK. sendMessageWithVIPChannel={}", this.defaultMQProducer.getProducerGroup(),

this.defaultMQProducer.isSendMessageWithVIPChannel());

this.serviceState = ServiceState.RUNNING;

break;

case RUNNING:

case START_FAILED:

case SHUTDOWN_ALREADY:

throw new MQClientException("The producer service state not OK, maybe started once, "

+ this.serviceState

+ FAQUrl.suggestTodo(FAQUrl.CLIENT_SERVICE_NOT_OK),

null);

default:

break;

}

this.mQClientFactory.sendHeartbeatToAllBrokerWithLock();

this.timer.scheduleAtFixedRate(new TimerTask() {

@Override

public void run() {

try {

RequestFutureTable.scanExpiredRequest();

} catch (Throwable e) {

log.error("scan RequestFutureTable exception", e);

}

}

}, 1000 * 3, 1000);

}

Now look at the start method behind the call "mQClientFactory.start();" in the CREATE_JUST branch:

//K1 The core client startup process, which consumers go through as well. For once the source has a few comments.

public void start() throws MQClientException {

synchronized (this) {

switch (this.serviceState) {

case CREATE_JUST:

this.serviceState = ServiceState.START_FAILED;

// If not specified,looking address from name server

if (null == this.clientConfig.getNamesrvAddr()) {

this.mQClientAPIImpl.fetchNameServerAddr();

}

// Start request-response channel

this.mQClientAPIImpl.start();

// Start various schedule tasks

this.startScheduledTask();

// Start pull service

this.pullMessageService.start();

// Start rebalance service

//K2 Client-side load balancing

this.rebalanceService.start();

// Start push service

this.defaultMQProducer.getDefaultMQProducerImpl().start(false);

log.info("the client factory [{}] start OK", this.clientId);

this.serviceState = ServiceState.RUNNING;

break;

case START_FAILED:

throw new MQClientException("The Factory object[" + this.getClientId() + "] has been created before, and failed.", null);

default:

break;

}

}

}

Now focus on the send method:

@Override

public SendResult send(

Message msg) throws MQClientException, RemotingException, MQBrokerException, InterruptedException {

Validators.checkMessage(msg, this);

msg.setTopic(withNamespace(msg.getTopic()));

return this.defaultMQProducerImpl.send(msg);

}

/**

* DEFAULT SYNC -------------------------------------------------------

*/

public SendResult send(

Message msg) throws MQClientException, RemotingException, MQBrokerException, InterruptedException {

return send(msg, this.defaultMQProducer.getSendMsgTimeout());

}

public SendResult send(Message msg,

long timeout) throws MQClientException, RemotingException, MQBrokerException, InterruptedException {

return this.sendDefaultImpl(msg, CommunicationMode.SYNC, null, timeout);

}

Stepping in further, we reach the method that actually implements send:

//K1 The implementation of Producer message sending

private SendResult sendDefaultImpl(

Message msg,

final CommunicationMode communicationMode,

final SendCallback sendCallback,

final long timeout

) throws MQClientException, RemotingException, MQBrokerException, InterruptedException {

this.makeSureStateOK();

Validators.checkMessage(msg, this.defaultMQProducer);

final long invokeID = random.nextLong();

long beginTimestampFirst = System.currentTimeMillis();

long beginTimestampPrev = beginTimestampFirst;

long endTimestamp = beginTimestampFirst;

// The producer looks up the topic's publish info. Note what it contains, and pay attention to how the MessageQueue is selected.

TopicPublishInfo topicPublishInfo = this.tryToFindTopicPublishInfo(msg.getTopic());

if (topicPublishInfo != null && topicPublishInfo.ok()) {

boolean callTimeout = false;

MessageQueue mq = null;

Exception exception = null;

SendResult sendResult = null;

int timesTotal = communicationMode == CommunicationMode.SYNC ? 1 + this.defaultMQProducer.getRetryTimesWhenSendFailed() : 1;

int times = 0;

String[] brokersSent = new String[timesTotal];

for (; times < timesTotal; times++) {

String lastBrokerName = null == mq ? null : mq.getBrokerName();

//K2 The Producer works out which MessageQueue to send the message to.

MessageQueue mqSelected = this.selectOneMessageQueue(topicPublishInfo, lastBrokerName);

if (mqSelected != null) {

mq = mqSelected;

// Record the target broker of this attempt, taken from the selected MessageQueue.

brokersSent[times] = mq.getBrokerName();

try {

beginTimestampPrev = System.currentTimeMillis();

if (times > 0) {

//Reset topic with namespace during resend.

msg.setTopic(this.defaultMQProducer.withNamespace(msg.getTopic()));

}

long costTime = beginTimestampPrev - beginTimestampFirst;

if (timeout < costTime) {

callTimeout = true;

break;

}

// The method that actually sends the message

sendResult = this.sendKernelImpl(msg, mq, communicationMode, sendCallback, topicPublishInfo, timeout - costTime);

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, false);

switch (communicationMode) {

case ASYNC:

return null;

case ONEWAY:

return null;

case SYNC:

if (sendResult.getSendStatus() != SendStatus.SEND_OK) {

if (this.defaultMQProducer.isRetryAnotherBrokerWhenNotStoreOK()) {

continue;

}

}

return sendResult;

default:

break;

}

} catch (RemotingException e) {

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, true);

log.warn(String.format("sendKernelImpl exception, resend at once, InvokeID: %s, RT: %sms, Broker: %s", invokeID, endTimestamp - beginTimestampPrev, mq), e);

log.warn(msg.toString());

exception = e;

continue;

} catch (MQClientException e) {

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, true);

log.warn(String.format("sendKernelImpl exception, resend at once, InvokeID: %s, RT: %sms, Broker: %s", invokeID, endTimestamp - beginTimestampPrev, mq), e);

log.warn(msg.toString());

exception = e;

continue;

} catch (MQBrokerException e) {

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, true);

log.warn(String.format("sendKernelImpl exception, resend at once, InvokeID: %s, RT: %sms, Broker: %s", invokeID, endTimestamp - beginTimestampPrev, mq), e);

log.warn(msg.toString());

exception = e;

switch (e.getResponseCode()) {

case ResponseCode.TOPIC_NOT_EXIST:

case ResponseCode.SERVICE_NOT_AVAILABLE:

case ResponseCode.SYSTEM_ERROR:

case ResponseCode.NO_PERMISSION:

case ResponseCode.NO_BUYER_ID:

case ResponseCode.NOT_IN_CURRENT_UNIT:

continue;

default:

if (sendResult != null) {

return sendResult;

}

throw e;

}

} catch (InterruptedException e) {

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, false);

log.warn(String.format("sendKernelImpl exception, throw exception, InvokeID: %s, RT: %sms, Broker: %s", invokeID, endTimestamp - beginTimestampPrev, mq), e);

log.warn(msg.toString());

log.warn("sendKernelImpl exception", e);

log.warn(msg.toString());

throw e;

}

} else {

break;

}

}

if (sendResult != null) {

return sendResult;

}

String info = String.format("Send [%d] times, still failed, cost [%d]ms, Topic: %s, BrokersSent: %s",

times,

System.currentTimeMillis() - beginTimestampFirst,

msg.getTopic(),

Arrays.toString(brokersSent));

info += FAQUrl.suggestTodo(FAQUrl.SEND_MSG_FAILED);

MQClientException mqClientException = new MQClientException(info, exception);

if (callTimeout) {

throw new RemotingTooMuchRequestException("sendDefaultImpl call timeout");

}

if (exception instanceof MQBrokerException) {

mqClientException.setResponseCode(((MQBrokerException) exception).getResponseCode());

} else if (exception instanceof RemotingConnectException) {

mqClientException.setResponseCode(ClientErrorCode.CONNECT_BROKER_EXCEPTION);

} else if (exception instanceof RemotingTimeoutException) {

mqClientException.setResponseCode(ClientErrorCode.ACCESS_BROKER_TIMEOUT);

} else if (exception instanceof MQClientException) {

mqClientException.setResponseCode(ClientErrorCode.BROKER_NOT_EXIST_EXCEPTION);

}

throw mqClientException;

}

validateNameServerSetting();

throw new MQClientException("No route info of this topic: " + msg.getTopic() + FAQUrl.suggestTodo(FAQUrl.NO_TOPIC_ROUTE_INFO),

null).setResponseCode(ClientErrorCode.NOT_FOUND_TOPIC_EXCEPTION);

}

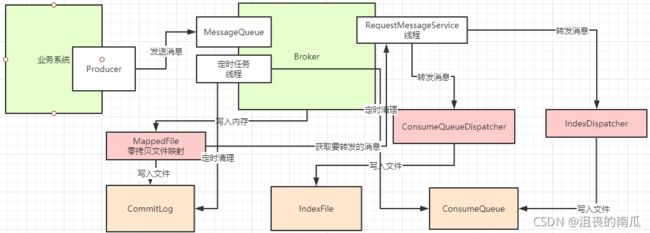

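VI. Message Storage

Continuing from the flow above, let us look at how the Broker stores messages.

The entry point of message storage is DefaultMessageStore.putMessage.

Which files end up on disk?

- commitlog: the message storage directory

- config: configuration information saved at runtime

- consumequeue: the message consume queue storage directory

- index: the message index file storage directory

- abort: if this file exists, the Broker was not shut down normally

- checkpoint: the checkpoint file, which stores the timestamps of the last flush of the CommitLog files, the ConsumeQueue files and the index files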

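6.1-Writing the CommitLog

The doAppend method of CommitLog, reached through mappedFile.appendMessage in the putMessage method below, is where the Broker actually writes a message. It appends the message to a region of memory mapped by a MappedFile and does not write to disk directly. Writing is serialized: only one thread is allowed to write at a time.

//K2 The CommitLog write path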

public PutMessageResult putMessage(final MessageExtBrokerInner msg) {

// Set the storage time

msg.setStoreTimestamp(System.currentTimeMillis());

// Set the message body BODY CRC (consider the most appropriate setting

// on the client)

msg.setBodyCRC(UtilAll.crc32(msg.getBody()));

// Back to Results

AppendMessageResult result = null;

StoreStatsService storeStatsService = this.defaultMessageStore.getStoreStatsService();

String topic = msg.getTopic();

int queueId = msg.getQueueId();

final int tranType = MessageSysFlag.getTransactionValue(msg.getSysFlag());

if (tranType == MessageSysFlag.TRANSACTION_NOT_TYPE

|| tranType == MessageSysFlag.TRANSACTION_COMMIT_TYPE) {

// Delay Delivery

if (msg.getDelayTimeLevel() > 0) {

if (msg.getDelayTimeLevel() > this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel()) {

msg.setDelayTimeLevel(this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel());

}

topic = TopicValidator.RMQ_SYS_SCHEDULE_TOPIC;

queueId = ScheduleMessageService.delayLevel2QueueId(msg.getDelayTimeLevel());

// Backup real topic, queueId

MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_TOPIC, msg.getTopic());

MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_QUEUE_ID, String.valueOf(msg.getQueueId()));

msg.setPropertiesString(MessageDecoder.messageProperties2String(msg.getProperties()));

msg.setTopic(topic);

msg.setQueueId(queueId);

}

}

InetSocketAddress bornSocketAddress = (InetSocketAddress) msg.getBornHost();

if (bornSocketAddress.getAddress() instanceof Inet6Address) {

msg.setBornHostV6Flag();

}

InetSocketAddress storeSocketAddress = (InetSocketAddress) msg.getStoreHost();

if (storeSocketAddress.getAddress() instanceof Inet6Address) {

msg.setStoreHostAddressV6Flag();

}

long elapsedTimeInLock = 0;

MappedFile unlockMappedFile = null;

// MappedFile: the memory-mapped file behind the zero-copy implementation

MappedFile mappedFile = this.mappedFileQueue.getLastMappedFile();

// Thread lock. Note the way the lock is used here: lock before try, unlock in finally.

putMessageLock.lock(); //spin or ReentrantLock ,depending on store config

try {

long beginLockTimestamp = this.defaultMessageStore.getSystemClock().now();

this.beginTimeInLock = beginLockTimestamp;

// Here settings are stored timestamp, in order to ensure an orderly

// global

msg.setStoreTimestamp(beginLockTimestamp);

if (null == mappedFile || mappedFile.isFull()) {

mappedFile = this.mappedFileQueue.getLastMappedFile(0); // Mark: NewFile may be cause noise

}

if (null == mappedFile) {

log.error("create mapped file1 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());

beginTimeInLock = 0;

return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, null);

}

// Write to the file by appending

result = mappedFile.appendMessage(msg, this.appendMessageCallback);

// Handle the result of the write

switch (result.getStatus()) {

case PUT_OK:

break;

// The file is full: create a new file and write the message again

case END_OF_FILE:

unlockMappedFile = mappedFile;

// Create a new file, re-write the message

mappedFile = this.mappedFileQueue.getLastMappedFile(0);

if (null == mappedFile) {

// XXX: warn and notify me

log.error("create mapped file2 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());

beginTimeInLock = 0;

return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, result);

}

result = mappedFile.appendMessage(msg, this.appendMessageCallback);

break;

case MESSAGE_SIZE_EXCEEDED:

case PROPERTIES_SIZE_EXCEEDED:

beginTimeInLock = 0;

return new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, result);

case UNKNOWN_ERROR:

beginTimeInLock = 0;

return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);

default:

beginTimeInLock = 0;

return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);

}

elapsedTimeInLock = this.defaultMessageStore.getSystemClock().now() - beginLockTimestamp;

beginTimeInLock = 0;

} finally {

putMessageLock.unlock();

}

if (elapsedTimeInLock > 500) {

log.warn("[NOTIFYME]putMessage in lock cost time(ms)={}, bodyLength={} AppendMessageResult={}", elapsedTimeInLock, msg.getBody().length, result);

}

if (null != unlockMappedFile && this.defaultMessageStore.getMessageStoreConfig().isWarmMapedFileEnable()) {

this.defaultMessageStore.unlockMappedFile(unlockMappedFile);

}

PutMessageResult putMessageResult = new PutMessageResult(PutMessageStatus.PUT_OK, result);

// Statistics

storeStatsService.getSinglePutMessageTopicTimesTotal(msg.getTopic()).incrementAndGet();

storeStatsService.getSinglePutMessageTopicSizeTotal(topic).addAndGet(result.getWroteBytes());

// Flush to disk

handleDiskFlush(result, putMessageResult, msg);

// Master/slave replication

handleHA(result, putMessageResult, msg);

return putMessageResult;

}
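The two properties stressed in this subsection, appending into mapped memory rather than to disk and allowing only one writer at a time, can be tried out with plain JDK classes. This is a simplified sketch and not RocketMQ's MappedFile: the class name, the length-prefixed record layout and the temporary file are assumptions made for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.locks.ReentrantLock;

final class MappedAppendSketch {
    private final MappedByteBuffer buffer;
    private final ReentrantLock putLock = new ReentrantLock();

    MappedAppendSketch(int fileSize) {
        this.buffer = map(fileSize);
    }

    /** Maps a fixed-size temporary file into memory. */
    private static MappedByteBuffer map(int fileSize) {
        try {
            Path file = Files.createTempFile("commitlog-sketch", ".dat");
            file.toFile().deleteOnExit();
            try (FileChannel ch = FileChannel.open(file,
                    StandardOpenOption.READ, StandardOpenOption.WRITE)) {
                // The mapping stays valid after the channel is closed.
                return ch.map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Appends a length-prefixed record; returns its start offset, or -1 if the file is full. */
    long append(String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        putLock.lock(); // one writer at a time
        try {
            if (buffer.remaining() < 4 + bytes.length) {
                return -1; // the caller would roll over to a new file here
            }
            long offset = buffer.position();
            buffer.putInt(bytes.length).put(bytes);
            return offset;
        } finally {
            putLock.unlock();
        }
    }

    /** Forces modified pages to disk; before this the data may exist only in memory. */
    void flush() {
        buffer.force();
    }
}
```

The -1 return plays the role of END_OF_FILE in the code above: the caller is expected to create a new file and write the message again.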

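6.2-Dispatching to ConsumeQueue and IndexFile

After a message is written to the CommitLog, a background thread, reputMessageService, wakes up every 1 millisecond, reads the batch of messages most recently appended to the CommitLog, and forwards them to the ConsumeQueue and the IndexFile. That is the underlying mechanism.

In addition, if the service crashes, the CommitLog can become inconsistent with the ConsumeQueue and IndexFile: a message has been written to the CommitLog but not yet dispatched to the index files, so consumers cannot see it and the message is effectively lost. DefaultMessageStore provides the logic for recovering these files; the entry point is its load method.

//K2 Every 1 millisecond, forward the messages written to the CommitLog to the ConsumeQueue and IndexFile.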

@Override

public void run() {

DefaultMessageStore.log.info(this.getServiceName() + " service started");

while (!this.isStopped()) {

try {

Thread.sleep(1);

this.doReput();

} catch (Exception e) {

DefaultMessageStore.log.warn(this.getServiceName() + " service has exception. ", e);

}

}

DefaultMessageStore.log.info(this.getServiceName() + " service end");

}

private void doReput() {

if (this.reputFromOffset < DefaultMessageStore.this.commitLog.getMinOffset()) {

log.warn("The reputFromOffset={} is smaller than minPyOffset={}, this usually indicate that the dispatch behind too much and the commitlog has expired.",

this.reputFromOffset, DefaultMessageStore.this.commitLog.getMinOffset());

this.reputFromOffset = DefaultMessageStore.this.commitLog.getMinOffset();

}

for (boolean doNext = true; this.isCommitLogAvailable() && doNext; ) {

if (DefaultMessageStore.this.getMessageStoreConfig().isDuplicationEnable()

&& this.reputFromOffset >= DefaultMessageStore.this.getConfirmOffset()) {

break;

}

SelectMappedBufferResult result = DefaultMessageStore.this.commitLog.getData(reputFromOffset);

if (result != null) {

try {

this.reputFromOffset = result.getStartOffset();

for (int readSize = 0; readSize < result.getSize() && doNext; ) {

// Build a DispatchRequest from the CommitLog, that is, read one message that needs to be forwarded.

DispatchRequest dispatchRequest =

DefaultMessageStore.this.commitLog.checkMessageAndReturnSize(result.getByteBuffer(), false, false);

int size = dispatchRequest.getBufferSize() == -1 ? dispatchRequest.getMsgSize() : dispatchRequest.getBufferSize();

if (dispatchRequest.isSuccess()) {

if (size > 0) {

// Dispatch the message written to the CommitLog

DefaultMessageStore.this.doDispatch(dispatchRequest);

//K2 Long polling: if a message has arrived on a master node and long polling is enabled.

if (BrokerRole.SLAVE != DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole()

&& DefaultMessageStore.this.brokerConfig.isLongPollingEnable()) {

// Call the arriving method of NotifyMessageArrivingListener, which checks the held pull requests once

DefaultMessageStore.this.messageArrivingListener.arriving(dispatchRequest.getTopic(),

dispatchRequest.getQueueId(), dispatchRequest.getConsumeQueueOffset() + 1,

dispatchRequest.getTagsCode(), dispatchRequest.getStoreTimestamp(),

dispatchRequest.getBitMap(), dispatchRequest.getPropertiesMap());

}

this.reputFromOffset += size;

readSize += size;

if (DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole() == BrokerRole.SLAVE) {

DefaultMessageStore.this.storeStatsService

.getSinglePutMessageTopicTimesTotal(dispatchRequest.getTopic()).incrementAndGet();

DefaultMessageStore.this.storeStatsService

.getSinglePutMessageTopicSizeTotal(dispatchRequest.getTopic())

.addAndGet(dispatchRequest.getMsgSize());

}

} else if (size == 0) {

this.reputFromOffset = DefaultMessageStore.this.commitLog.rollNextFile(this.reputFromOffset);

readSize = result.getSize();

}

} else if (!dispatchRequest.isSuccess()) {

if (size > 0) {

log.error("[BUG]read total count not equals msg total size. reputFromOffset={}", reputFromOffset);

this.reputFromOffset += size;

} else {

doNext = false;

// If user open the dledger pattern or the broker is master node,

// it will not ignore the exception and fix the reputFromOffset variable

if (DefaultMessageStore.this.getMessageStoreConfig().isEnableDLegerCommitLog() ||

DefaultMessageStore.this.brokerConfig.getBrokerId() == MixAll.MASTER_ID) {

log.error("[BUG]dispatch message to consume queue error, COMMITLOG OFFSET: {}",

this.reputFromOffset);

this.reputFromOffset += result.getSize() - readSize;

}

}

}

}

} finally {

result.release();

}

} else {

doNext = false;

}

}

}
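Stripped of error handling, the dispatch loop above keeps a cursor into the log and builds per-queue indexes from whatever was appended since the last pass. The sketch below is single-threaded and purely illustrative: the in-memory list standing in for the CommitLog, the Entry class and the "topic-queueId" map key are all assumptions.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class ReputSketch {
    /** One log entry: topic, queue id and body, stored in arrival order. */
    static final class Entry {
        final String topic;
        final int queueId;
        final String body;
        Entry(String topic, int queueId, String body) {
            this.topic = topic;
            this.queueId = queueId;
            this.body = body;
        }
    }

    // Stand-in for the CommitLog: all topics share one append-only list.
    final List<Entry> commitLog = new ArrayList<>();
    // "topic-queueId" -> positions in the log, playing the role of a ConsumeQueue.
    final Map<String, List<Integer>> consumeQueues = new HashMap<>();
    private int reputFromOffset = 0;

    /** Dispatches every entry appended since the last call; returns how many were dispatched. */
    int doReput() {
        int dispatched = 0;
        while (reputFromOffset < commitLog.size()) {
            Entry e = commitLog.get(reputFromOffset);
            consumeQueues.computeIfAbsent(e.topic + "-" + e.queueId, k -> new ArrayList<>())
                         .add(reputFromOffset);
            reputFromOffset++;
            dispatched++;
        }
        return dispatched;
    }
}
```

The sketch also hints at why recovery is possible after a crash: the indexes are derived data, so replaying the log from the last dispatched position rebuilds them.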

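6.3 Synchronous and asynchronous flushing

Entry point: CommitLog.putMessage -> CommitLog.handleDiskFlush

The main factor here is whether off-heap memory is enabled (TransientStorePoolEnable). If it is, the Broker allocates off-heap memory at startup, sized to match the CommitLog file size, and this memory is guaranteed not to be swapped out to virtual memory.

//K2 Handle flushing data to disk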

public void handleDiskFlush(AppendMessageResult result, PutMessageResult putMessageResult, MessageExt messageExt) {

// Synchronous flush

if (FlushDiskType.SYNC_FLUSH == this.defaultMessageStore.getMessageStoreConfig().getFlushDiskType()) {

final GroupCommitService service = (GroupCommitService) this.flushCommitLogService;

if (messageExt.isWaitStoreMsgOK()) {

// Build a GroupCommitRequest and hand it to GroupCommitService.

GroupCommitRequest request = new GroupCommitRequest(result.getWroteOffset() + result.getWroteBytes());

service.putRequest(request);

CompletableFuture<PutMessageStatus> flushOkFuture = request.future();

PutMessageStatus flushStatus = null;

// Wait synchronously for the flush to complete

try {

flushStatus = flushOkFuture.get(this.defaultMessageStore.getMessageStoreConfig().getSyncFlushTimeout(),

TimeUnit.MILLISECONDS);

} catch (InterruptedException | ExecutionException | TimeoutException e) {

//flushOK=false;

}

if (flushStatus != PutMessageStatus.PUT_OK) {

log.error("do groupcommit, wait for flush failed, topic: " + messageExt.getTopic() + " tags: " + messageExt.getTags()

+ " client address: " + messageExt.getBornHostString());

putMessageResult.setPutMessageStatus(PutMessageStatus.FLUSH_DISK_TIMEOUT);

}

} else {

service.wakeup();

}

}

// Asynchronous flush

// With asynchronous flushing, the message is written into the MappedFile and a separate service is then woken up to do the flush

else {

if (!this.defaultMessageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {

flushCommitLogService.wakeup();

} else {

commitLogService.wakeup();

}

}

}
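The synchronous branch above boils down to "submit a request, then block on its future with a timeout". The following sketch shows that hand-off with JDK classes only. The class and method names are hypothetical, the flusher merely records the offset instead of forcing a file, and a Boolean future replaces the PutMessageStatus used by the real code.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class GroupCommitSketch {
    static final class Request {
        final long nextOffset;
        final CompletableFuture<Boolean> future = new CompletableFuture<>();
        Request(long nextOffset) {
            this.nextOffset = nextOffset;
        }
    }

    private final LinkedBlockingQueue<Request> requests = new LinkedBlockingQueue<>();
    private volatile long flushedOffset = 0;

    /** Starts a daemon flusher that completes each request once its offset counts as flushed. */
    void startFlusher() {
        Thread t = new Thread(() -> {
            try {
                while (true) {
                    Request r = requests.take();
                    // A real flusher would force the mapped file to disk here.
                    flushedOffset = Math.max(flushedOffset, r.nextOffset);
                    r.future.complete(true);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "flusher-sketch");
        t.setDaemon(true);
        t.start();
    }

    /** Write path: submit the request, then block until it is flushed or the timeout elapses. */
    boolean putAndWait(long nextOffset, long timeoutMillis) {
        Request r = new Request(nextOffset);
        requests.add(r);
        try {
            return r.future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } catch (ExecutionException | TimeoutException e) {
            return false; // corresponds to a flush timeout on the write path
        }
    }

    long flushedOffset() {
        return flushedOffset;
    }
}
```

Asynchronous flushing differs only on the write path: it wakes the flusher up and returns without waiting on any future.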

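6.4 Deleting expired files

Entry point: DefaultMessageStore.addScheduleTask -> DefaultMessageStore.this.cleanFilesPeriodically()

By default the Broker starts a background task that periodically checks the CommitLog and ConsumeQueue files (the code below schedules it with a 60-second initial delay and an interval of cleanResourceInterval) and deletes data older than 72 hours. In other words, by default RocketMQ keeps only 3 days of data. The retention time can be configured with fileReservedTime. Note that deletion does not check whether the messages have been consumed.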
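The retention rule can be stated as a small pure function: a file is expired once its age reaches fileReservedTime, regardless of consumption. The sketch below is an assumption-laden illustration; the Segment class, the method name and the choice to always keep the newest file are made up for the example and should be checked against the source.

```java
import java.util.ArrayList;
import java.util.List;

final class ExpiredFileSketch {
    /** A log file reduced to its name and last-modified time. */
    static final class Segment {
        final String name;
        final long lastModifiedMillis;
        Segment(String name, long lastModifiedMillis) {
            this.name = name;
            this.lastModifiedMillis = lastModifiedMillis;
        }
    }

    /** Returns the names of files older than fileReservedHours; the newest file is never selected. */
    static List<String> selectExpired(List<Segment> oldestFirst, int fileReservedHours, long nowMillis) {
        long reservedMillis = fileReservedHours * 60L * 60L * 1000L;
        List<String> expired = new ArrayList<>();
        // Stop before the last file: it is assumed to be the one still being written.
        for (int i = 0; i < oldestFirst.size() - 1; i++) {
            Segment s = oldestFirst.get(i);
            if (nowMillis - s.lastModifiedMillis >= reservedMillis) {
                expired.add(s.name); // consumption state is deliberately not checked
            } else {
                break; // files are ordered by age, so newer ones cannot be expired
            }
        }
        return expired;
    }
}
```

With fileReservedTime = 48, as in the broker.conf used earlier, a file last modified 50 hours ago would be selected even if no consumer has read it.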

The core entry point of the whole file store is the start method of DefaultMessageStore.

//K2 The Broker starts the scheduled task that deletes expired files

this.addScheduleTask();

private void addScheduleTask() {

//K1 The scheduled task that deletes expired messages

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

DefaultMessageStore.this.cleanFilesPeriodically();

}

}, 1000 * 60, this.messageStoreConfig.getCleanResourceInterval(), TimeUnit.MILLISECONDS);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

DefaultMessageStore.this.checkSelf();

}

}, 1, 10, TimeUnit.MINUTES);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

if (DefaultMessageStore.this.getMessageStoreConfig().isDebugLockEnable()) {

try {

if (DefaultMessageStore.this.commitLog.getBeginTimeInLock() != 0) {

long lockTime = System.currentTimeMillis() - DefaultMessageStore.this.commitLog.getBeginTimeInLock();

if (lockTime > 1000 && lockTime < 10000000) {

String stack = UtilAll.jstack();

final String fileName = System.getProperty("user.home") + File.separator + "debug/lock/stack-"

+ DefaultMessageStore.this.commitLog.getBeginTimeInLock() + "-" + lockTime;

MixAll.string2FileNotSafe(stack, fileName);

}

}

} catch (Exception e) {

}

}

}

}, 1, 1, TimeUnit.SECONDS);

// this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

// @Override

// public void run() {

// DefaultMessageStore.this.cleanExpiredConsumerQueue();

// }

// }, 1, 1, TimeUnit.HOURS);

this.diskCheckScheduledExecutorService.scheduleAtFixedRate(new Runnable() {

public void run() {

DefaultMessageStore.this.cleanCommitLogService.isSpaceFull();

}

}, 1000L, 10000L, TimeUnit.MILLISECONDS);

}

Let us focus on the periodic deletion method:

private void cleanFilesPeriodically() {

// Periodically delete expired CommitLog files

this.cleanCommitLogService.run();

// Periodically delete expired ConsumeQueue files

this.cleanConsumeQueueService.run();

}

//K2 Where CommitLog files are periodically deleted

public void run() {

try {

this.deleteExpiredFiles();

this.redeleteHangedFile();

} catch (Throwable e) {

DefaultMessageStore.log.warn(this.getServiceName() + " service has exception. ", e);

}

}

class CleanConsumeQueueService {

private long lastPhysicalMinOffset = 0;

public void run() {

try {

this.deleteExpiredFiles();

} catch (Throwable e) {

DefaultMessageStore.log.warn(this.getServiceName() + " service has exception. ", e);

}

}

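6.5 Summary of the file storage part

RocketMQ's storage files include the message file (CommitLog), the message consume queue file (ConsumeQueue), the hash index file (IndexFile), the checkpoint file (checkpoint) and the abort file (a marker of abnormal shutdown).

Each individual message storage file, consume queue file and hash index file has a fixed length, so that files can be read and written through memory mapping. RocketMQ names each file after its starting offset, which makes it quick to locate the physical file for a given offset.

On top of memory-mapped files, RocketMQ provides two flushing mechanisms, synchronous and asynchronous. With asynchronous flushing, a message is first appended to the memory-mapped file, and a dedicated flush thread periodically writes the in-memory data to disk.

CommitLog is the message storage file. To keep message sending throughput high, RocketMQ stores the messages of all topics in a single file, which makes message storage a purely sequential write. This makes reads inconvenient, so RocketMQ builds consume queue files, organized by topic and queue, to serve consumption. RocketMQ also implements a hash index for messages: an index key can be set on a message, and the index makes it possible to quickly retrieve messages from the CommitLog file.

Once a message reaches the CommitLog, the ReputMessageService thread forwards it in near real time to the consume queue files and the index files. For safety, RocketMQ introduces the abort file, which records whether the Broker was shut down normally or abnormally. When the Broker restarts, it applies a different recovery strategy in each case to make sure the CommitLog, consume queue and hash index files are correct.

RocketMQ does not keep message files and consume queue files forever. It runs a file expiry mechanism that deletes expired files when disk space runs low or, by default, at 4 a.m. Files are kept for 72 hours, and deletion does not check whether the messages in a file have been consumed.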

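VII. Consumer

Consumers work in consumer groups. A consumer group consumes in one of two message models, clustering or broadcasting. Consumption also comes in push mode and pull mode, and push mode is built as a wrapper around pull mode.

In clustering mode, the general principle of consume queue load balancing is this: within a consumer group, a consume queue can be consumed by only one consumer at a time, while one consumer can consume several queues at the same time.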
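The "one queue, one consumer" rule can be illustrated with an average allocation: every consumer in the group runs the same deterministic function over the same sorted consumer list, so the results never overlap. This is a sketch modeled on the idea of averaging queues across consumers; the class and method names are hypothetical and queues are represented by their indexes.

```java
import java.util.ArrayList;
import java.util.List;

final class AverageAllocateSketch {
    /**
     * Returns the queue indexes owned by currentCid. cidAll must be the same
     * sorted list on every consumer, otherwise the shares could overlap.
     */
    static List<Integer> allocate(String currentCid, List<String> cidAll, int queueCount) {
        List<Integer> result = new ArrayList<>();
        int index = cidAll.indexOf(currentCid);
        if (index < 0 || queueCount <= 0) {
            return result;
        }
        int n = cidAll.size();
        int mod = queueCount % n;
        // The first 'mod' consumers take one extra queue.
        int averageSize = queueCount <= n ? 1
                : (mod > 0 && index < mod ? queueCount / n + 1 : queueCount / n);
        int startIndex = (mod > 0 && index < mod) ? index * averageSize : index * averageSize + mod;
        // range is negative for surplus consumers, which then get no queue at all.
        int range = Math.min(averageSize, queueCount - startIndex);
        for (int i = 0; i < range; i++) {
            result.add(startIndex + i);
        }
        return result;
    }
}
```

When there are more consumers than queues, the surplus consumers receive an empty list, which is why adding consumers beyond the queue count does not increase throughput.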

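Message order: RocketMQ only supports local ordering within a single queue and does not guarantee global ordering. To get ordered messages, send the messages that must stay in order to the same queue, or give the Topic only one queue, which is not recommended.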
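Routing related messages to the same queue usually comes down to hashing a business key. The sketch below shows only that mapping and assumes a hypothetical sharding key such as an order id; wiring it into a producer's queue selector is left out.

```java
final class OrderlyQueueSelectSketch {
    /** Maps a business key (for example an order id) to a stable queue index. */
    static int selectQueue(Object shardingKey, int queueCount) {
        // floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod(shardingKey.hashCode(), queueCount);
    }
}
```

All messages that share a key land on the same queue and therefore keep their relative order, while messages with different keys still spread across queues. Note that the mapping changes if the number of queues changes.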

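7.1 Startup

The entry point is the DefaultMQPushConsumer.start method.

There is no need to dwell on the startup process; a general picture is enough. The core of client startup is mQClientFactory, which mainly starts a large number of services.

These services can be studied in depth in the context of concrete scenarios. For example, pullMessageService mainly handles message pulling, and rebalanceService mainly handles client-side load balancing.

//K2 Startup process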

@Override

public void start() throws MQClientException {

setConsumerGroup(NamespaceUtil.wrapNamespace(this.getNamespace(), this.consumerGroup));

this.defaultMQPushConsumerImpl.start();

if (null != traceDispatcher) {

try {

traceDispatcher.start(this.getNamesrvAddr(), this.getAccessChannel());

} catch (MQClientException e) {

log.warn("trace dispatcher start failed ", e);

}

}

}

Setting message tracing aside for now, go straight to the consumer startup:

// The actual startup process on the consumer side

public synchronized void start() throws MQClientException {

switch (this.serviceState) {

case CREATE_JUST:

log.info("the consumer [{}] start beginning. messageModel={}, isUnitMode={}", this.defaultMQPushConsumer.getConsumerGroup(),

this.defaultMQPushConsumer.getMessageModel(), this.defaultMQPushConsumer.isUnitMode());

this.serviceState = ServiceState.START_FAILED;

this.checkConfig();

this.copySubscription();

if (this.defaultMQPushConsumer.getMessageModel() == MessageModel.CLUSTERING) {

this.defaultMQPushConsumer.changeInstanceNameToPID();

}

//K2 Create the client factory; this is the core object

this.mQClientFactory = MQClientManager.getInstance().getOrCreateMQClientInstance(this.defaultMQPushConsumer, this.rpcHook);

this.rebalanceImpl.setConsumerGroup(this.defaultMQPushConsumer.getConsumerGroup());

this.rebalanceImpl.setMessageModel(this.defaultMQPushConsumer.getMessageModel());

this.rebalanceImpl.setAllocateMessageQueueStrategy(this.defaultMQPushConsumer.getAllocateMessageQueueStrategy());

this.rebalanceImpl.setmQClientFactory(this.mQClientFactory);

this.pullAPIWrapper = new PullAPIWrapper(

mQClientFactory,

this.defaultMQPushConsumer.getConsumerGroup(), isUnitMode());

this.pullAPIWrapper.registerFilterMessageHook(filterMessageHookList);

if (this.defaultMQPushConsumer.getOffsetStore() != null) {

this.offsetStore = this.defaultMQPushConsumer.getOffsetStore();

} else {

switch (this.defaultMQPushConsumer.getMessageModel()) {

case BROADCASTING:

this.offsetStore = new LocalFileOffsetStore(this.mQClientFactory, this.defaultMQPushConsumer.getConsumerGroup());

break;

case CLUSTERING:

this.offsetStore = new RemoteBrokerOffsetStore(this.mQClientFactory, this.defaultMQPushConsumer.getConsumerGroup());

break;

default:

break;

}

this.defaultMQPushConsumer.setOffsetStore(this.offsetStore);

}

this.offsetStore.load();

//Instantiate the consumeMessageService that matches the registered listener type (orderly or concurrent)

if (this.getMessageListenerInner() instanceof MessageListenerOrderly) {

this.consumeOrderly = true;

this.consumeMessageService =

new ConsumeMessageOrderlyService(this, (MessageListenerOrderly) this.getMessageListenerInner());

} else if (this.getMessageListenerInner() instanceof MessageListenerConcurrently) {

this.consumeOrderly = false;

this.consumeMessageService =

new ConsumeMessageConcurrentlyService(this, (MessageListenerConcurrently) this.getMessageListenerInner());

}

this.consumeMessageService.start();

//Register this consumer group in the local cache.

boolean registerOK = mQClientFactory.registerConsumer(this.defaultMQPushConsumer.getConsumerGroup(), this);

if (!registerOK) {

this.serviceState = ServiceState.CREATE_JUST;

this.consumeMessageService.shutdown(defaultMQPushConsumer.getAwaitTerminationMillisWhenShutdown());

throw new MQClientException("The consumer group[" + this.defaultMQPushConsumer.getConsumerGroup()

+ "] has been created before, specify another name please." + FAQUrl.suggestTodo(FAQUrl.GROUP_NAME_DUPLICATE_URL),

null);

}

mQClientFactory.start();

log.info("the consumer [{}] start OK.", this.defaultMQPushConsumer.getConsumerGroup());

this.serviceState = ServiceState.RUNNING;

break;

case RUNNING:

case START_FAILED:

case SHUTDOWN_ALREADY:

throw new MQClientException("The PushConsumer service state not OK, maybe started once, "

+ this.serviceState

+ FAQUrl.suggestTodo(FAQUrl.CLIENT_SERVICE_NOT_OK),

null);

default:

break;

}

this.updateTopicSubscribeInfoWhenSubscriptionChanged();

this.mQClientFactory.checkClientInBroker();

this.mQClientFactory.sendHeartbeatToAllBrokerWithLock();

this.mQClientFactory.rebalanceImmediately();

}
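
One detail in the listing above is easy to miss: the state is set to START_FAILED before any initialization runs and only becomes RUNNING at the very end, so an exception halfway through leaves the consumer in a state that rejects further start calls. The following is a minimal sketch of that guard pattern. `StartGuardSketch` is a hypothetical class, and it deliberately omits the branch where a failed registration resets the state to CREATE_JUST.

```java
// Illustrative sketch only: a hypothetical class showing the start-state guard.
final class StartGuardSketch {
    enum State { CREATE_JUST, RUNNING, START_FAILED, SHUTDOWN_ALREADY }

    private State state = State.CREATE_JUST;

    // Runs initSteps once; any later call, or a call after a failure, is rejected.
    synchronized void start(Runnable initSteps) {
        if (state != State.CREATE_JUST) {
            throw new IllegalStateException("service state not OK, maybe started once: " + state);
        }
        // Be pessimistic: if initSteps throws, the state stays START_FAILED.
        state = State.START_FAILED;
        initSteps.run();
        state = State.RUNNING;
    }

    synchronized State state() {
        return state;
    }
}
```

The same pattern explains the error message in the listing: calling start on an instance that is RUNNING, START_FAILED, or SHUTDOWN_ALREADY throws instead of initializing twice.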

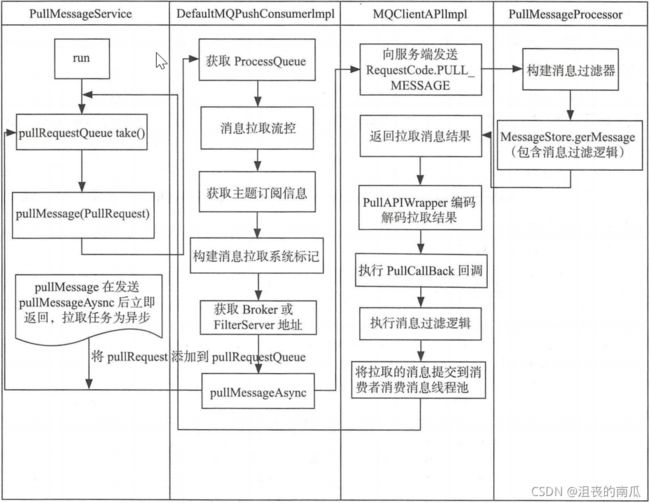

7.2 Message Pulling

Pull mode: PullMessageService

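A PullRequest holds a messageQueue and a processQueue. The messageQueue identifies the queue to pull from; once messages are pulled, they are stored in the processQueue, where they wait to be consumed. After the messages are stored, the PullRequest can be submitted again to pull the next batch.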
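
The pull cycle described here, together with the count-based flow control that appears in the listing below, can be sketched as a single-threaded loop: take a request, skip it if too many messages are still cached, otherwise pull a batch, cache it, advance the offset, and re-queue the request. Everything in this sketch is an assumption made for illustration: `PullLoopSketch`, its nested `Request` type, and the in-memory list standing in for the Broker are not RocketMQ classes, and the real client retries a flow-controlled request after a delay rather than immediately.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;
import java.util.TreeMap;

// Illustrative sketch only: hypothetical classes, no networking or threads.
final class PullLoopSketch {

    // Stand-in for a pull request: where to pull from and where to cache results.
    static final class Request {
        long nextOffset;
        // offset -> message body, kept sorted like a process queue
        final TreeMap<Long, String> processQueue = new TreeMap<>();

        Request(long nextOffset) {
            this.nextOffset = nextOffset;
        }
    }

    private final Deque<Request> pending = new ArrayDeque<>();
    private final List<String> brokerQueue; // fake broker-side queue
    private final int cachedCountThreshold; // flow-control limit

    PullLoopSketch(List<String> brokerQueue, int cachedCountThreshold) {
        this.brokerQueue = brokerQueue;
        this.cachedCountThreshold = cachedCountThreshold;
    }

    void submit(Request request) {
        pending.addLast(request);
    }

    // Processes one pending request. Returns true if a pull was performed,
    // false if nothing was pending or the request was flow-controlled.
    boolean step(int batchSize) {
        Request request = pending.pollFirst();
        if (request == null) {
            return false;
        }
        // Flow control: too many unconsumed messages cached, try again later.
        if (request.processQueue.size() > cachedCountThreshold) {
            pending.addLast(request);
            return false;
        }
        long end = Math.min(request.nextOffset + batchSize, brokerQueue.size());
        for (long offset = request.nextOffset; offset < end; offset++) {
            request.processQueue.put(offset, brokerQueue.get((int) offset));
        }
        request.nextOffset = end;
        pending.addLast(request); // keep pulling the same queue
        return true;
    }
}
```

The sketch shows why a slow consumer throttles itself: pulling resumes only after consumption has drained the cached messages below the threshold.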

//K2 Core flow of pulling messages

public void pullMessage(final PullRequest pullRequest) {

//Get the ProcessQueue, which caches the pulled messages awaiting consumption

final ProcessQueue processQueue = pullRequest.getProcessQueue();

//If the queue has been dropped, return immediately

if (processQueue.isDropped()) {

log.info("the pull request[{}] is dropped.", pullRequest.toString());

return;

}

//Update the last-pull timestamp first

pullRequest.getProcessQueue().setLastPullTimestamp(System.currentTimeMillis());

try {

this.makeSureStateOK();

} catch (MQClientException e) {

log.warn("pullMessage exception, consumer state not ok", e);

this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);

return;

}

//If the consumer is paused, retry after a 1-second delay.

if (this.isPause()) {

log.warn("consumer was paused, execute pull request later. instanceName={}, group={}", this.defaultMQPushConsumer.getInstanceName(), this.defaultMQPushConsumer.getConsumerGroup());

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_SUSPEND);

return;

}

//Number of messages currently cached in the ProcessQueue

long cachedMessageCount = processQueue.getMsgCount().get();

//Total size of the currently cached messages, in MiB

long cachedMessageSizeInMiB = processQueue.getMsgSize().get() / (1024 * 1024);

//Flow control by message count

if (cachedMessageCount > this.defaultMQPushConsumer.getPullThresholdForQueue()) {

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueFlowControlTimes++ % 1000) == 0) {

log.warn(

"the cached message count exceeds the threshold {}, so do flow control, minOffset={}, maxOffset={}, count={}, size={} MiB, pullRequest={}, flowControlTimes={}",

this.defaultMQPushConsumer.getPullThresholdForQueue(), processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), cachedMessageCount, cachedMessageSizeInMiB, pullRequest, queueFlowControlTimes);

}

return;

}

//Flow control by message size

if (cachedMessageSizeInMiB > this.defaultMQPushConsumer.getPullThresholdSizeForQueue()) {

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueFlowControlTimes++ % 1000) == 0) {

log.warn(

"the cached message size exceeds the threshold {} MiB, so do flow control, minOffset={}, maxOffset={}, count={}, size={} MiB, pullRequest={}, flowControlTimes={}",

this.defaultMQPushConsumer.getPullThresholdSizeForQueue(), processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), cachedMessageCount, cachedMessageSizeInMiB, pullRequest, queueFlowControlTimes);

}

return;

}

if (!this.consumeOrderly) {

if (processQueue.getMaxSpan() > this.defaultMQPushConsumer.getConsumeConcurrentlyMaxSpan()) {

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueMaxSpanFlowControlTimes++ % 1000) == 0) {

log.warn(

"the queue's messages, span too long, so do flow control, minOffset={}, maxOffset={}, maxSpan={}, pullRequest={}, flowControlTimes={}",

processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), processQueue.getMaxSpan(),

pullRequest, queueMaxSpanFlowControlTimes);

}

return;

}

} else {

if (processQueue.isLocked()) {

if (!pullRequest.isLockedFirst()) {

final long offset = this.rebalanceImpl.computePullFromWhere(pullRequest.getMessageQueue());

boolean brokerBusy = offset < pullRequest.getNextOffset();

log.info("the first time to pull message, so fix offset from broker. pullRequest: {} NewOffset: {} brokerBusy: {}",

pullRequest, offset, brokerBusy);

if (brokerBusy) {

log.info("[NOTIFYME]the first time to pull message, but pull request offset larger than broker consume offset. pullRequest: {} NewOffset: {}",

pullRequest, offset);

}

pullRequest.setLockedFirst(true);

pullRequest.setNextOffset(offset);

}

} else {

this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);

log.info("pull message later because not locked in broker, {}", pullRequest);

return;