EFK: Installation and Deployment (Monitoring nginx Logs)

-

-

- 环境:

- 开始部署:

-

-

- 1、修改主机名

- 2、修改hosts文件

- 3、时间同步

- 4、关闭防火墙

- 5、安装jdk

- 6、安装zookeeper

- 7、编辑zoo.cfg文件

- 8、创建data目录

- 9、配置myid

- 10、运行zookeeper服务

- 11、查看zookeeper状态

- 12、安装kafka

- 13、编辑/usr/local/kafka/config/server.properties

- 14、启动kafka

- 15、创建一个topic

- 16、模拟生产者

- 17、模拟消费者

- 18、开始模拟

- 19、查看当前的topic

- 20、安装filebeat(收集日志)

- 21、编辑filebeat.yml

- 22、启动filebeat

- 23、安装logstash

- 24、编辑/etc/logstash/conf.d/nginx.conf

- 25、上传nginx正则相关文件和文件路径并完成配置

- 26、启动logstash

- 27、安装elasticsearch

- 28、修改elasticsearch配置文件

- 29、启动elasticsearch

- 30、安装kibana

- 31、配置/etc/kibana/kibana.yml

- 32、启动kibana

- 33、安装测压工具和nginx服务

- 34、启动nginx并压测

- 35、查看索引

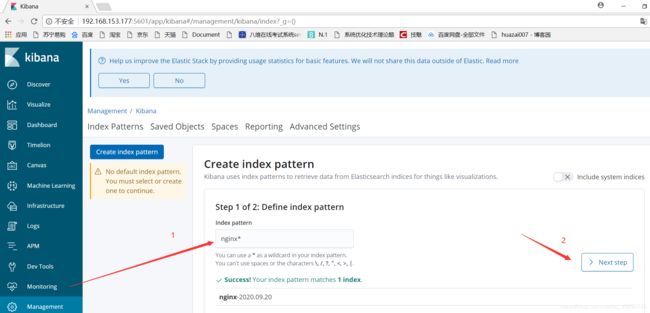

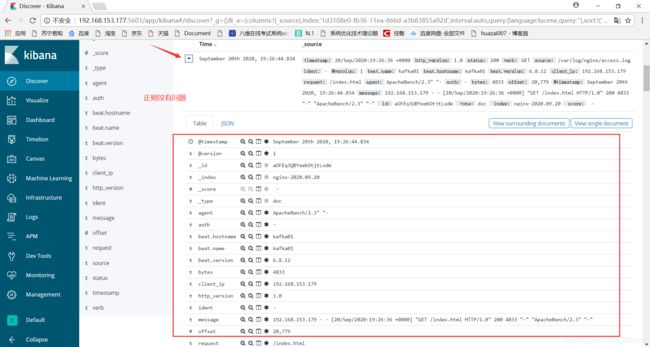

- 36、kibana(http://ip:5601)进入图形化界面操作

-

-

Environment:

CentOS 7

| Host IP | Installed software |
|---|---|
| 192.168.153.179 | jdk, zookeeper, kafka, filebeat, elasticsearch |
| 192.168.153.178 | jdk, zookeeper, kafka, logstash |
| 192.168.153.177 | jdk, zookeeper, kafka, kibana |

Deployment:

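1. Change the hostnames

Run the matching command on each of the three hosts in turn. The hostname command only changes the name until the next reboot; on CentOS 7, hostnamectl set-hostname makes it persistent.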

[root@localhost ~]# hostname kafka01

[root@localhost ~]# hostname kafka02

[root@localhost ~]# hostname kafka03

2. Edit the hosts file

Same on all three hosts: append the three name mappings to /etc/hosts.

[root@kafka01 ~]# tail -n 3 /etc/hosts

192.168.153.179 kafka01

192.168.153.178 kafka02

192.168.153.177 kafka03
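Since the same three entries go onto every host, the append can be scripted so that re-running it does not create duplicates. The following is a minimal sketch, not part of the original procedure; the function name add_host_entry is made up for illustration, and the demonstration writes to a scratch file rather than /etc/hosts.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE only when that exact line is not already present.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -q: quiet, -x: match the whole line, -F: fixed string (dots are not wildcards)
    grep -qxF "$ip $name" "$file" 2>/dev/null || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demonstration against a scratch file; the second pass adds nothing.
hosts_file=$(mktemp)
for pass in 1 2; do
    add_host_entry "$hosts_file" 192.168.153.179 kafka01
    add_host_entry "$hosts_file" 192.168.153.178 kafka02
    add_host_entry "$hosts_file" 192.168.153.177 kafka03
done
cat "$hosts_file"
```

On the real hosts the first argument would be /etc/hosts.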

3. Synchronize the clocks

Same on all three hosts.

[root@kafka01 ~]# ntpdate pool.ntp.org

19 Sep 14:00:48 ntpdate[11588]: adjust time server 122.117.253.246 offset 0

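4. Disable the firewall

Same on all three hosts. systemctl stop firewalld stops the firewall and setenforce 0 switches SELinux to permissive mode; both settings last only until the next reboot.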

[root@kafka01 ~]# systemctl stop firewalld

[root@kafka01 ~]# setenforce 0

5. Install the JDK

Same on all three hosts, from the same directory.

[root@kafka01 ELK三剑客]# pwd

/usr/local/src/ELK三剑客

[root@kafka01 ELK三剑客]# rpm -ivh jdk-8u131-linux-x64_.rpm

6. Install ZooKeeper

Same on all three hosts.

Unpack the archive, move it into place, and rename the sample configuration file to zoo.cfg.

[root@kafka01 EFK]# pwd

/usr/local/src/EFK

[root@kafka01 EFK]# tar xf zookeeper-3.4.14.tar.gz

[root@kafka01 EFK]# mv zookeeper-3.4.14 /usr/local/zookeeper

[root@kafka01 EFK]# cd /usr/local/zookeeper/conf/

[root@kafka01 conf]# mv zoo_sample.cfg zoo.cfg

7. Edit zoo.cfg

Same on all three hosts: append one server.N line per cluster member.

[root@kafka01 conf]# pwd

/usr/local/zookeeper/conf

[root@kafka01 conf]# tail -n 3 zoo.cfg

server.1=192.168.153.179:2888:3888

server.2=192.168.153.178:2888:3888

server.3=192.168.153.177:2888:3888
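Each line has the form server.N=IP:2888:3888, where 2888 is the port followers use to talk to the leader and 3888 is the leader-election port. As a rough sketch (the helper name zk_server_lines is an assumption for illustration), the lines can be generated from an ordered list of addresses, which keeps the three hosts' files identical:

```shell
# zk_server_lines IP...
# Prints one "server.N=IP:2888:3888" line per address, numbering from 1
# in argument order.
zk_server_lines() {
    local id=1 ip
    for ip in "$@"; do
        printf 'server.%d=%s:2888:3888\n' "$id" "$ip"
        id=$((id + 1))
    done
}

zk_server_lines 192.168.153.179 192.168.153.178 192.168.153.177
```

Redirecting the output with >> zoo.cfg would append the lines shown above.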

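8. Create the data directory

Same on all three hosts. The path matches the dataDir=/tmp/zookeeper setting that zoo.cfg inherits from zoo_sample.cfg.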

[root@kafka01 conf]# pwd

/usr/local/zookeeper/conf

[root@kafka01 conf]# mkdir /tmp/zookeeper

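9. Configure myid

Run the matching command on each host in turn. The number must equal the N of that host's server.N line in zoo.cfg.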

[root@kafka01 conf]# echo "1" > /tmp/zookeeper/myid

[root@kafka02 conf]# echo "2" > /tmp/zookeeper/myid

[root@kafka03 conf]# echo "3" > /tmp/zookeeper/myid
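Because the myid value has to agree with the server.N line for the same host, it can be looked up from zoo.cfg instead of typed by hand. This is a sketch under that assumption; zk_myid_for is a hypothetical helper, and the demonstration uses a scratch copy of the three lines from step 7.

```shell
# zk_myid_for CFG IP
# Prints the N of the "server.N=IP:..." line in CFG, or nothing if IP is absent.
zk_myid_for() {
    local cfg="$1" ip="$2"
    awk -F= -v ip="$ip" '
        /^server\./ {
            split($2, addr, ":")          # addr[1] is the IP before the ports
            if (addr[1] == ip) {
                sub(/^server\./, "", $1)  # strip the prefix, leaving only N
                print $1
            }
        }' "$cfg"
}

# Demonstration with the three entries from zoo.cfg.
cfg=$(mktemp)
printf '%s\n' \
    'server.1=192.168.153.179:2888:3888' \
    'server.2=192.168.153.178:2888:3888' \
    'server.3=192.168.153.177:2888:3888' > "$cfg"
zk_myid_for "$cfg" 192.168.153.178
```

On kafka02 the equivalent real invocation would read /usr/local/zookeeper/conf/zoo.cfg and redirect the result to /tmp/zookeeper/myid.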

10. Start the ZooKeeper service

Same on all three hosts.

[root@kafka01 conf]# /usr/local/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

11. Check the ZooKeeper status

Same on all three hosts.

[root@kafka01 conf]# /usr/local/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Mode: follower

Across the three hosts, one should report Mode: leader and the other two Mode: follower.

12. Install Kafka

Same on all three hosts.

[root@kafka01 EFK]# pwd

/usr/local/src/EFK

[root@kafka01 EFK]# tar xf kafka_2.11-2.2.0.tgz

[root@kafka01 EFK]# mv kafka_2.11-2.2.0 /usr/local/kafka

13. Edit /usr/local/kafka/config/server.properties

The leading number on each line below is its line number in the file.

On kafka01:

21 broker.id=0

36 advertised.listeners=PLAINTEXT://kafka01:9092

123 zookeeper.connect=192.168.153.179:2181,192.168.153.178:2181,192.168.153.177:2181

On kafka02:

21 broker.id=1

36 advertised.listeners=PLAINTEXT://kafka02:9092

123 zookeeper.connect=192.168.153.179:2181,192.168.153.178:2181,192.168.153.177:2181

On kafka03:

21 broker.id=2

36 advertised.listeners=PLAINTEXT://kafka03:9092

123 zookeeper.connect=192.168.153.179:2181,192.168.153.178:2181,192.168.153.177:2181

Summary of the three settings:

- broker.id: 0, 1, and 2 on kafka01, kafka02, and kafka03 respectively

- advertised.listeners=PLAINTEXT://<hostname>:9092: the hostname is kafka01, kafka02, or kafka03 respectively

- zookeeper.connect=192.168.153.179:2181,192.168.153.178:2181,192.168.153.177:2181: identical on all three hosts
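Only broker.id and the hostname in advertised.listeners differ between hosts, so the three settings can be derived from the broker index. The sketch below is illustrative only; broker_settings and ZK_CONNECT are names invented here, and the hostname scheme kafka01 to kafka03 is taken from this guide.

```shell
# ZooKeeper connection string shared by every broker.
ZK_CONNECT=192.168.153.179:2181,192.168.153.178:2181,192.168.153.177:2181

# broker_settings ID
# Prints the three per-broker lines for broker ID (0, 1, or 2); the hostname
# is kafka01 for ID 0, kafka02 for ID 1, and so on.
broker_settings() {
    local id="$1"
    printf 'broker.id=%d\n' "$id"
    printf 'advertised.listeners=PLAINTEXT://kafka%02d:9092\n' "$((id + 1))"
    printf 'zookeeper.connect=%s\n' "$ZK_CONNECT"
}

for id in 0 1 2; do
    broker_settings "$id"
    echo
done
```

Comparing this output with the edited file on each host is a quick way to catch a copy-paste slip in zookeeper.connect.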

14. Start Kafka

Same on all three hosts.

[root@kafka01 ~]# /usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties

[root@kafka01 ~]# ss -nltp|grep 9092

LISTEN 0 50 :::9092 :::* users:(("java",pid=23352,fd=105))

15. Create a topic

On kafka01:

[root@kafka01 ~]# /usr/local/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.153.179:2181 --replication-factor 2 --partitions 3 --topic wg007

Created topic wg007.

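Explanation:

- --replication-factor 2: number of replicas kept of each partition, for high availability

- --partitions 3: number of partitions in the topic, for higher concurrency

- --topic wg007: name of the topic to create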
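To make the two numbers concrete, the arithmetic for this cluster can be written out. This is only a back-of-the-envelope sketch: the function name topic_replica_summary is made up, and the per-broker figure is an average, since the actual placement is decided by Kafka.

```shell
# topic_replica_summary PARTITIONS REPLICATION_FACTOR BROKERS
# Prints how many partition replicas the topic needs and how they average out.
topic_replica_summary() {
    local partitions="$1" replication_factor="$2" brokers="$3"
    local total=$((partitions * replication_factor))
    echo "partition replicas in total: $total"
    echo "average replicas per broker: $((total / brokers))"
    echo "replicas of a partition that can be lost: $((replication_factor - 1))"
}

# Values used for wg007: 3 partitions, replication factor 2, 3 brokers.
topic_replica_summary 3 2 3
```

The real assignment can be inspected with kafka-topics.sh --describe using the same --zookeeper and --topic arguments as the create command.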

16. Simulate a producer

On kafka01:

[root@kafka01 ~]# /usr/local/kafka/bin/kafka-console-producer.sh --broker-list 192.168.153.179:9092 --topic wg007

>

17. Simulate a consumer

On kafka02:

[root@kafka02 ~]# /usr/local/kafka/bin/kafka-console-consumer.sh --bootstrap-server 192.168.153.179:9092 --topic wg007 --from-beginning

18. Run the simulation

Type a into the producer on kafka01 and check that a appears in the consumer on kafka02.

Input on kafka01:

[root@kafka01 ~]# /usr/local/kafka/bin/kafka-console-producer.sh --broker-list 192.168.153.179:9092 --topic wg007

>a

>

Output on kafka02:

[root@kafka02 ~]# /usr/local/kafka/bin/kafka-console-consumer.sh --bootstrap-server 192.168.153.179:9092 --topic wg007 --from-beginning

a

19. List the current topics

On kafka01:

[root@kafka01 ~]# /usr/local/kafka/bin/kafka-topics.sh --list --zookeeper 192.168.153.179:2181

__consumer_offsets

wg007

20. Install Filebeat (log collection)

On kafka01:

[root@kafka01 EFK]# pwd

/usr/local/src/EFK

[root@kafka01 EFK]# rpm -ivh filebeat-6.8.12-x86_64.rpm

21. Edit filebeat.yml

On kafka01: rename the packaged filebeat.yml to filebeat.yml.bak, then write a new filebeat.yml from scratch.

[root@kafka01 filebeat]# pwd

/etc/filebeat

[root@kafka01 filebeat]# mv filebeat.yml filebeat.yml.bak

[root@kafka01 filebeat]# vim filebeat.yml

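The new file has the following contents. The nginx007 topic was not created in step 15; Kafka creates it on first write as long as the brokers keep the default auto.create.topics.enable=true.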

[root@localhost filebeat]# pwd

/etc/filebeat

[root@localhost filebeat]# cat filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  fields:
    log_topics: nginx007
output.kafka:
  enabled: true
  hosts: ["192.168.153.179:9092","192.168.153.178:9092","192.168.153.177:9092"]
  topic: nginx007

22. Start Filebeat

On kafka01:

[root@kafka01 ~]# systemctl start filebeat

23. Install Logstash

On kafka02:

[root@kafka02 ELK三剑客]# pwd

/usr/local/src/ELK三剑客

[root@kafka02 ELK三剑客]# rpm -ivh logstash-6.6.0.rpm

24. Edit /etc/logstash/conf.d/nginx.conf

On kafka02:

[root@kafka02 conf.d]# pwd

/etc/logstash/conf.d

[root@kafka02 conf.d]# cat nginx.conf

input {
  kafka {
    # Comma-separated broker list as a single string
    bootstrap_servers => "192.168.153.179:9092,192.168.153.178:9092,192.168.153.177:9092"
    group_id => "logstash"
    topics => ["nginx007"]
    consumer_threads => 5
  }
}
filter {
  # Filebeat ships each event as JSON; unpack it so that message holds the raw log line
  json {
    source => "message"
  }
  mutate {
    remove_field => ["host","prospector","fields","input","log"]
  }
  # NGX is the custom pattern installed in step 25
  grok {
    match => { "message" => "%{NGX}" }
  }
}
output {
  elasticsearch {
    hosts => "192.168.153.179:9200"
    index => "nginx-%{+YYYY.MM.dd}"
  }
  #stdout {
  #  codec => rubydebug
  #}
}

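25. Upload the nginx grok pattern file and its path file, then finish the configuration

On kafka02: nginx_reguler_log.txt holds the NGX pattern, and nginx_reguler_log_path.txt holds the directory it has to be installed into.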

[root@kafka02 src]# pwd

/usr/local/src

[root@kafka02 src]# ls

alter EFK ELK三剑客 nginx_reguler_log_path.txt nginx_reguler_log.txt

[root@kafka02 src]# cat nginx_reguler_log_path.txt

/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns

[root@kafka02 src]# mv nginx_reguler_log.txt /usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/nginx

[root@kafka02 src]# cat /usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/nginx

NGX %{IPORHOST:client_ip} (%{USER:ident}|- ) (%{USER:auth}|-) \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} (%{NOTSPACE:request}|-)(?: HTTP/%{NUMBER:http_version})?|-)" %{NUMBER:status} (?:%{NUMBER:bytes}|-) "(?:%{URI:referrer}|-)" "%{GREEDYDATA:agent}"
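When a line does not match the grok pattern, Logstash tags the event with _grokparsefailure instead of extracting fields, so it can help to sanity-check the log format first. The sketch below is a deliberately simplified approximation of NGX written as a grep -E expression; it is not the grok pattern itself, the names ngx_like and matches_ngx_like are invented, and the sample log line is made up in the shape of an nginx access log entry produced by ab.

```shell
# Simplified stand-in for the NGX pattern: client, ident, auth, [timestamp],
# "request", status, bytes, "referrer", "agent".
ngx_like='^[^ ]+ [^ ]+ [^ ]+ \[[^]]+\] "([A-Z]+ [^ ]+( HTTP/[0-9.]+)?|-)" [0-9]{3} ([0-9]+|-) "[^"]*" ".*"$'

# matches_ngx_like LINE
# Succeeds (exit status 0) when LINE has the expected overall shape.
matches_ngx_like() {
    printf '%s\n' "$1" | grep -Eq "$ngx_like"
}

# Made-up sample line for illustration.
sample='192.168.153.179 - - [20/Sep/2020:10:15:32 +0800] "GET /index.html HTTP/1.0" 200 612 "-" "ApacheBench/2.3"'

if matches_ngx_like "$sample"; then
    echo "sample line has the expected shape"
else
    echo "sample line would likely fail to parse"
fi
```

A line that passes this check can still fail the real pattern, for example on an invalid IP or date, so the stdout output with the rubydebug codec in nginx.conf remains the authoritative test.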

26. Start Logstash

On kafka02:

[root@kafka02 conf.d]# systemctl start logstash

[root@kafka02 conf.d]# ss -nltp|grep 9600

LISTEN 0 50 ::ffff:127.0.0.1:9600 :::* users:(("java",pid=18470,fd=137))

27. Install Elasticsearch

On kafka01:

[root@kafka01 ELK三剑客]# pwd

/usr/local/src/ELK三剑客

[root@kafka01 ELK三剑客]# rpm -ivh elasticsearch-6.6.2.rpm

28. Edit the Elasticsearch configuration file

On kafka01:

[root@kafka01 ~]# grep -v "#" /etc/elasticsearch/elasticsearch.yml

cluster.name: nginx

node.name: node-1

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 192.168.153.179

http.port: 9200

29. Start Elasticsearch

On kafka01:

[root@kafka01 ~]# systemctl start elasticsearch

[root@kafka01 ~]# systemctl enable elasticsearch

Created symlink from /etc/systemd/system/multi-user.target.wants/elasticsearch.service to /usr/lib/systemd/system/elasticsearch.service.

[root@kafka01 ~]# ss -nltp|grep 9200

LISTEN 0 128 ::ffff:192.168.153.179:9200 :::* users:(("java",pid=27812,fd=205))

[root@kafka01 ~]# ss -nltp|grep 9300

LISTEN 0 128 ::ffff:192.168.153.179:9300 :::* users:(("java",pid=27812,fd=191))

30. Install Kibana

On kafka03:

[root@kafka03 ELK三剑客]# pwd

/usr/local/src/ELK三剑客

[root@kafka03 ELK三剑客]# yum -y install kibana-6.6.2-x86_64.rpm

31. Configure /etc/kibana/kibana.yml

On kafka03:

[root@kafka03 ~]# grep -Ev '#|^$' /etc/kibana/kibana.yml

server.port: 5601

server.host: "192.168.153.177"

elasticsearch.hosts: ["http://192.168.153.179:9200"]

- server.port: 5601 (port the Kibana service listens on)

- server.host: "192.168.153.177" (IP address of the Kibana host)

- elasticsearch.hosts: ["http://192.168.153.179:9200"] (URL of the Elasticsearch host)

32. Start Kibana

On kafka03:

[root@kafka03 ~]# systemctl start kibana

[root@kafka03 ~]# ss -nltp|grep 5601

LISTEN 0 128 192.168.153.177:5601 *:* users:(("node",pid=16965,fd=18))

33. Install the load-testing tool and nginx

On kafka01 (httpd-tools provides the ab command):

[root@kafka01 ~]# yum -y install httpd-tools epel-release && yum -y install nginx

34. Start nginx and run the load test

On kafka01:

[root@kafka01 ~]# nginx

[root@kafka01 ~]# ab -n100 -c100 http://192.168.153.179/index.html

35. Check the indices

On kafka01:

[root@kafka01 ~]# curl -X GET http://192.168.153.179:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

yellow open nginx-2020.09.20 cBEQUbJxTZCbiLWfJbOc-w 5 1 105 0 169kb 169kb
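The yellow health is expected here: the index has 5 primary shards and 1 replica each, and on a single Elasticsearch node the replicas have nowhere to be allocated. To pull just the index name, health, and document count out of this output, a small awk filter is enough. The sketch below assumes the column order shown above; index_health is a hypothetical helper, and the sample text is the output from this step.

```shell
# index_health
# Reads `_cat/indices?v` output on stdin and prints "index health docs.count"
# for every index whose name starts with nginx-.
index_health() {
    # Columns: health status index uuid pri rep docs.count ...
    awk 'NR > 1 && $3 ~ /^nginx-/ { print $3, $1, $7 }'
}

sample='health status index            uuid                   pri rep docs.count docs.deleted store.size pri.store.size
yellow open   nginx-2020.09.20 cBEQUbJxTZCbiLWfJbOc-w   5   1        105            0      169kb          169kb'

printf '%s\n' "$sample" | index_health
```

Against the live cluster, the curl command from this step can be piped into index_health in place of the sample.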