golang内存分配概述

golang内存分配概述

golang的内存分配机制主要类似于tcmalloc机制,来快速高效的分配与管理内存,从而高效分配与管理内存。

有关 tcmalloc 的详细资料大家可参考官网,简单概括就是通过预先分配一个大的堆,每个线程都管理一块小内存的对象列表,大对象都是直接通过堆来直接管理,每个线程申请内存的时候如果小对象就直接在堆上分配,大对象就通过堆分配,每个内存都可以再堆上管理到。大家有兴趣课详细看一个官网的介绍,golang的内存分配也是按照如上原理来实现的,大概了解了tcmalloc的流程就很好理解golang的内存分配流程。

golang内存分配理解

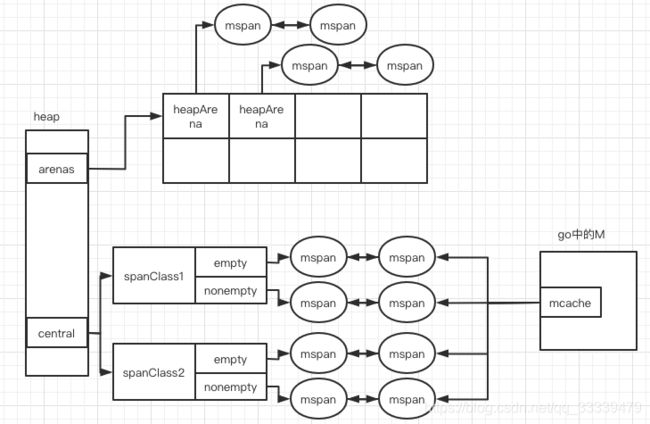

go运行时的内存布局,相关的理解如下(基于go1.12版本);

- 在go启动的时候,会生成一个全局唯一的heap_对象,然后通过该对象来管理与分配内存。

- 在heap_中arenas是通过操作系统申请分配的内存,并且heap中保存了golang定义的不同大小的mspan对象。

- 在go的启动过程中每一个M都会生成一个唯一的mache对象,来分配在M运行过程中申请的小对象或者微小对象。

- 在go中内存管理的单位为mspan,默认管理8KB的page大小,通过分配的对象的大小不同而调整将管理的内存分配多个pages,所有的最终的数据都保存在mspan中,可以在heap中统一管理。

- 在go中,预先定义好了一些微小对象和小对象的大小定义,并分配给了central中,在mcache中申请对应的spanClass的时候,该大小的内存时会通过central来分配,如果是大对象分配则直接分配到heap上,通过arenas来分配管理。

微小对象与小对象

// class bytes/obj bytes/span objects tail waste max waste

// 1 8 8192 1024 0 87.50%

// 2 16 8192 512 0 43.75%

// 3 32 8192 256 0 46.88%

// 4 48 8192 170 32 31.52%

// 5 64 8192 128 0 23.44%

// 6 80 8192 102 32 19.07%

// 7 96 8192 85 32 15.95%

// 8 112 8192 73 16 13.56%

// 9 128 8192 64 0 11.72%

// 10 144 8192 56 128 11.82%

// 11 160 8192 51 32 9.73%

// 12 176 8192 46 96 9.59%

// 13 192 8192 42 128 9.25%

// 14 208 8192 39 80 8.12%

// 15 224 8192 36 128 8.15%

// 16 240 8192 34 32 6.62%

// 17 256 8192 32 0 5.86%

// 18 288 8192 28 128 12.16%

// 19 320 8192 25 192 11.80%

// 20 352 8192 23 96 9.88%

// 21 384 8192 21 128 9.51%

// 22 416 8192 19 288 10.71%

// 23 448 8192 18 128 8.37%

// 24 480 8192 17 32 6.82%

// 25 512 8192 16 0 6.05%

// 26 576 8192 14 128 12.33%

// 27 640 8192 12 512 15.48%

// 28 704 8192 11 448 13.93%

// 29 768 8192 10 512 13.94%

// 30 896 8192 9 128 15.52%

// 31 1024 8192 8 0 12.40%

// 32 1152 8192 7 128 12.41%

// 33 1280 8192 6 512 15.55%

// 34 1408 16384 11 896 14.00%

// 35 1536 8192 5 512 14.00%

// 36 1792 16384 9 256 15.57%

// 37 2048 8192 4 0 12.45%

// 38 2304 16384 7 256 12.46%

// 39 2688 8192 3 128 15.59%

// 40 3072 24576 8 0 12.47%

// 41 3200 16384 5 384 6.22%

// 42 3456 24576 7 384 8.83%

// 43 4096 8192 2 0 15.60%

// 44 4864 24576 5 256 16.65%

// 45 5376 16384 3 256 10.92%

// 46 6144 24576 4 0 12.48%

// 47 6528 32768 5 128 6.23%

// 48 6784 40960 6 256 4.36%

// 49 6912 49152 7 768 3.37%

// 50 8192 8192 1 0 15.61%

// 51 9472 57344 6 512 14.28%

// 52 9728 49152 5 512 3.64%

// 53 10240 40960 4 0 4.99%

// 54 10880 32768 3 128 6.24%

// 55 12288 24576 2 0 11.45%

// 56 13568 40960 3 256 9.99%

// 57 14336 57344 4 0 5.35%

// 58 16384 16384 1 0 12.49%

// 59 18432 73728 4 0 11.11%

// 60 19072 57344 3 128 3.57%

// 61 20480 40960 2 0 6.87%

// 62 21760 65536 3 256 6.25%

// 63 24576 24576 1 0 11.45%

// 64 27264 81920 3 128 10.00%

// 65 28672 57344 2 0 4.91%

// 66 32768 32768 1 0 12.50%

位于sizeclasses.go中的文件描述了不同大小的文件,总共有67中大小,假如objects大小为1024字节则8192字节刚刚好可以分配个八个对象使用,最坏的情况就是只保存了一个对象即最大浪费率为87.5%,如果刚刚好保存了八个对象则没有任何浪费。在go程序中分配对象的时候都会通过计算一下对象的大小,如果在这个列表来计算对象保存的spanClass。

分配内存newobject

分配内存的过程主要分三种情况,都集中在mallocgc这个函数中。

// Allocate an object of size bytes.

// Small objects are allocated from the per-P cache's free lists.

// Large objects (> 32 kB) are allocated straight from the heap.

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

...

if size <= maxSmallSize {

if noscan && size < maxTinySize {

// Tiny allocator.

//

// Tiny allocator combines several tiny allocation requests

// into a single memory block. The resulting memory block

// is freed when all subobjects are unreachable. The subobjects

// must be noscan (don't have pointers), this ensures that

// the amount of potentially wasted memory is bounded.

//

// Size of the memory block used for combining (maxTinySize) is tunable.

// Current setting is 16 bytes, which relates to 2x worst case memory

// wastage (when all but one subobjects are unreachable).

// 8 bytes would result in no wastage at all, but provides less

// opportunities for combining.

// 32 bytes provides more opportunities for combining,

// but can lead to 4x worst case wastage.

// The best case winning is 8x regardless of block size.

//

// Objects obtained from tiny allocator must not be freed explicitly.

// So when an object will be freed explicitly, we ensure that

// its size >= maxTinySize.

//

// SetFinalizer has a special case for objects potentially coming

// from tiny allocator, it such case it allows to set finalizers

// for an inner byte of a memory block.

//

// The main targets of tiny allocator are small strings and

// standalone escaping variables. On a json benchmark

// the allocator reduces number of allocations by ~12% and

// reduces heap size by ~20%.

off := c.tinyoffset

// Align tiny pointer for required (conservative) alignment.

if size&7 == 0 {

off = round(off, 8)

} else if size&3 == 0 {

off = round(off, 4)

} else if size&1 == 0 {

off = round(off, 2)

}

if off+size <= maxTinySize && c.tiny != 0 {

// The object fits into existing tiny block.

x = unsafe.Pointer(c.tiny + off)

c.tinyoffset = off + size

c.local_tinyallocs++

mp.mallocing = 0

releasem(mp)

return x

}

// Allocate a new maxTinySize block.

span := c.alloc[tinySpanClass]

v := nextFreeFast(span)

if v == 0 {

v, _, shouldhelpgc = c.nextFree(tinySpanClass)

}

x = unsafe.Pointer(v)

(*[2]uint64)(x)[0] = 0

(*[2]uint64)(x)[1] = 0

// See if we need to replace the existing tiny block with the new one

// based on amount of remaining free space.

if size < c.tinyoffset || c.tiny == 0 {

c.tiny = uintptr(x)

c.tinyoffset = size

}

size = maxTinySize

} else {

var sizeclass uint8

if size <= smallSizeMax-8 {

sizeclass = size_to_class8[(size+smallSizeDiv-1)/smallSizeDiv]

} else {

sizeclass = size_to_class128[(size-smallSizeMax+largeSizeDiv-1)/largeSizeDiv]

}

size = uintptr(class_to_size[sizeclass])

spc := makeSpanClass(sizeclass, noscan)

span := c.alloc[spc]

v := nextFreeFast(span)

if v == 0 {

v, span, shouldhelpgc = c.nextFree(spc)

}

x = unsafe.Pointer(v)

if needzero && span.needzero != 0 {

memclrNoHeapPointers(unsafe.Pointer(v), size)

}

}

} else {

var s *mspan

shouldhelpgc = true

systemstack(func() {

s = largeAlloc(size, needzero, noscan)

})

s.freeindex = 1

s.allocCount = 1

x = unsafe.Pointer(s.base())

size = s.elemsize

}

...

}

- 分配对象内存大小是否小于16字节,如果小于16字节直接通过Tiny allocator分配。

- 分配对象大于等于16字节小于等于32KB,通过小对象快速分配来分配空间。

- 分配大于32KB的空间时,直接使用largeAlloc来分配一个mspan来进行分配。

Tiny allocator分配

off := c.tinyoffset

// Align tiny pointer for required (conservative) alignment.

if size&7 == 0 {

off = round(off, 8)

} else if size&3 == 0 {

off = round(off, 4)

} else if size&1 == 0 {

off = round(off, 2)

}

if off+size <= maxTinySize && c.tiny != 0 {

// The object fits into existing tiny block.

x = unsafe.Pointer(c.tiny + off)

c.tinyoffset = off + size

c.local_tinyallocs++

mp.mallocing = 0

releasem(mp)

return x

}

// Allocate a new maxTinySize block.

span := c.alloc[tinySpanClass]

v := nextFreeFast(span)

if v == 0 {

v, _, shouldhelpgc = c.nextFree(tinySpanClass)

}

x = unsafe.Pointer(v)

(*[2]uint64)(x)[0] = 0

(*[2]uint64)(x)[1] = 0

// See if we need to replace the existing tiny block with the new one

// based on amount of remaining free space.

if size < c.tinyoffset || c.tiny == 0 {

c.tiny = uintptr(x)

c.tinyoffset = size

}

size = maxTinySize

首先查看当前tiny分配的偏移位置,如果当前偏移位置可以容下待分配的大小则直接分配到该位置,否则则通过alloc来获取一块新的内存块大小来进行分配。

小对象

var sizeclass uint8

if size <= smallSizeMax-8 {

sizeclass = size_to_class8[(size+smallSizeDiv-1)/smallSizeDiv]

} else {

sizeclass = size_to_class128[(size-smallSizeMax+largeSizeDiv-1)/largeSizeDiv]

}

size = uintptr(class_to_size[sizeclass])

spc := makeSpanClass(sizeclass, noscan)

span := c.alloc[spc]

v := nextFreeFast(span)

if v == 0 {

v, span, shouldhelpgc = c.nextFree(spc)

}

x = unsafe.Pointer(v)

if needzero && span.needzero != 0 {

memclrNoHeapPointers(unsafe.Pointer(v), size)

}

先检查计算当前对象的size,获取当前的spanClass的值,然后再去快速找到一块span来分配内存,首先先快速去查找该span是否有空闲的,如果有空闲的则标记为空闲并返回该空闲的span,否则就通过c.nextFree来分配一个新的内存空间。

// nextFree returns the next free object from the cached span if one is available.

// Otherwise it refills the cache with a span with an available object and

// returns that object along with a flag indicating that this was a heavy

// weight allocation. If it is a heavy weight allocation the caller must

// determine whether a new GC cycle needs to be started or if the GC is active

// whether this goroutine needs to assist the GC.

//

// Must run in a non-preemptible context since otherwise the owner of

// c could change.

func (c *mcache) nextFree(spc spanClass) (v gclinkptr, s *mspan, shouldhelpgc bool) {

s = c.alloc[spc]

shouldhelpgc = false

freeIndex := s.nextFreeIndex() // 获取下一个free的index

if freeIndex == s.nelems { // 如果当前的spanClass元素已经满了则调用refill来重新拿到一个空闲的span

// The span is full.

if uintptr(s.allocCount) != s.nelems {

println("runtime: s.allocCount=", s.allocCount, "s.nelems=", s.nelems)

throw("s.allocCount != s.nelems && freeIndex == s.nelems")

}

c.refill(spc)

shouldhelpgc = true

s = c.alloc[spc]

freeIndex = s.nextFreeIndex()

}

if freeIndex >= s.nelems {

throw("freeIndex is not valid")

}

v = gclinkptr(freeIndex*s.elemsize + s.base())

s.allocCount++

if uintptr(s.allocCount) > s.nelems {

println("s.allocCount=", s.allocCount, "s.nelems=", s.nelems)

throw("s.allocCount > s.nelems")

}

return

}

如果当前分配的元素已经满了则通过refill函数来拿到一个新的空闲空间;

// refill acquires a new span of span class spc for c. This span will

// have at least one free object. The current span in c must be full.

//

// Must run in a non-preemptible context since otherwise the owner of

// c could change.

func (c *mcache) refill(spc spanClass) {

// Return the current cached span to the central lists.

s := c.alloc[spc]

if uintptr(s.allocCount) != s.nelems {

throw("refill of span with free space remaining")

}

if s != &emptymspan {

// Mark this span as no longer cached.

if s.sweepgen != mheap_.sweepgen+3 {

throw("bad sweepgen in refill")

}

atomic.Store(&s.sweepgen, mheap_.sweepgen)

}

// Get a new cached span from the central lists.

s = mheap_.central[spc].mcentral.cacheSpan()

if s == nil {

throw("out of memory")

}

if uintptr(s.allocCount) == s.nelems {

throw("span has no free space")

}

// Indicate that this span is cached and prevent asynchronous

// sweeping in the next sweep phase.

s.sweepgen = mheap_.sweepgen + 3

c.alloc[spc] = s

}

通过cacheSpan函数来获取最新一块的内存

// Allocate a span to use in an mcache.

func (c *mcentral) cacheSpan() *mspan {

// Deduct credit for this span allocation and sweep if necessary.

spanBytes := uintptr(class_to_allocnpages[c.spanclass.sizeclass()]) * _PageSize

deductSweepCredit(spanBytes, 0)

lock(&c.lock)

traceDone := false

if trace.enabled {

traceGCSweepStart()

}

sg := mheap_.sweepgen

retry:

var s *mspan

for s = c.nonempty.first; s != nil; s = s.next { // 获取非空闲的列表头部

if s.sweepgen == sg-2 && atomic.Cas(&s.sweepgen, sg-2, sg-1) { // 检查当前是否空闲

c.nonempty.remove(s) // 如果空闲则从该列表中移除 加入到空闲列表中 然后跳转到havespan进行后续处理

c.empty.insertBack(s)

unlock(&c.lock)

s.sweep(true)

goto havespan

}

if s.sweepgen == sg-1 {

// the span is being swept by background sweeper, skip

continue

}

// we have a nonempty span that does not require sweeping, allocate from it

c.nonempty.remove(s) // 因为最少有一个元素可以用所以就使用该span

c.empty.insertBack(s)

unlock(&c.lock)

goto havespan

}

for s = c.empty.first; s != nil; s = s.next { // 检查空列表 等待sweep

if s.sweepgen == sg-2 && atomic.Cas(&s.sweepgen, sg-2, sg-1) {

// we have an empty span that requires sweeping,

// sweep it and see if we can free some space in it

c.empty.remove(s)

// swept spans are at the end of the list

c.empty.insertBack(s)

unlock(&c.lock)

s.sweep(true)

freeIndex := s.nextFreeIndex()

if freeIndex != s.nelems {

s.freeindex = freeIndex

goto havespan

}

lock(&c.lock)

// the span is still empty after sweep

// it is already in the empty list, so just retry

goto retry

}

if s.sweepgen == sg-1 {

// the span is being swept by background sweeper, skip

continue

}

// already swept empty span,

// all subsequent ones must also be either swept or in process of sweeping

break

}

if trace.enabled {

traceGCSweepDone()

traceDone = true

}

unlock(&c.lock)

// Replenish central list if empty.

s = c.grow() // 如果一直没有找到则想heap中申请内存

if s == nil {

return nil

}

lock(&c.lock)

c.empty.insertBack(s) // 插入新申请到的span

unlock(&c.lock)

// At this point s is a non-empty span, queued at the end of the empty list,

// c is unlocked.

havespan:

if trace.enabled && !traceDone {

traceGCSweepDone()

}

n := int(s.nelems) - int(s.allocCount)

if n == 0 || s.freeindex == s.nelems || uintptr(s.allocCount) == s.nelems {

throw("span has no free objects")

}

// Assume all objects from this span will be allocated in the

// mcache. If it gets uncached, we'll adjust this.

atomic.Xadd64(&c.nmalloc, int64(n))

usedBytes := uintptr(s.allocCount) * s.elemsize

atomic.Xadd64(&memstats.heap_live, int64(spanBytes)-int64(usedBytes))

if trace.enabled {

// heap_live changed.

traceHeapAlloc()

}

if gcBlackenEnabled != 0 {

// heap_live changed.

gcController.revise()

}

freeByteBase := s.freeindex &^ (64 - 1)

whichByte := freeByteBase / 8

// Init alloc bits cache.

s.refillAllocCache(whichByte)

// Adjust the allocCache so that s.freeindex corresponds to the low bit in

// s.allocCache.

s.allocCache >>= s.freeindex % 64

return s

}

通过在列表中进行查找,查看是否有空闲可用的span,如果没有找到则申请一个新的span来使用。

大对象

var s *mspan

shouldhelpgc = true

systemstack(func() {

s = largeAlloc(size, needzero, noscan)

})

s.freeindex = 1

s.allocCount = 1

x = unsafe.Pointer(s.base())

size = s.elemsize

主要就是通过largeAlloc来分配内存,

func largeAlloc(size uintptr, needzero bool, noscan bool) *mspan {

// print("largeAlloc size=", size, "\n")

if size+_PageSize < size { // 检查是否oom

throw("out of memory")

}

npages := size >> _PageShift // 将当前的大小转换成页数

if size&_PageMask != 0 {

npages++

}

// Deduct credit for this span allocation and sweep if

// necessary. mHeap_Alloc will also sweep npages, so this only

// pays the debt down to npage pages.

deductSweepCredit(npages*_PageSize, npages)

s := mheap_.alloc(npages, makeSpanClass(0, noscan), true, needzero) // 申请对应的页数内存

if s == nil {

throw("out of memory")

}

s.limit = s.base() + size // 增加边界值

heapBitsForAddr(s.base()).initSpan(s) // 初始化heapBits的值

return s

}

此时通过mheap_的alloc来申请内存;

// alloc allocates a new span of npage pages from the GC'd heap.

//

// Either large must be true or spanclass must indicates the span's

// size class and scannability.

//

// If needzero is true, the memory for the returned span will be zeroed.

func (h *mheap) alloc(npage uintptr, spanclass spanClass, large bool, needzero bool) *mspan {

// Don't do any operations that lock the heap on the G stack.

// It might trigger stack growth, and the stack growth code needs

// to be able to allocate heap.

var s *mspan

systemstack(func() {

s = h.alloc_m(npage, spanclass, large) // 申请内存

})

if s != nil {

if needzero && s.needzero != 0 {

memclrNoHeapPointers(unsafe.Pointer(s.base()), s.npages<<_PageShift)

}

s.needzero = 0

}

return s

}

在go栈上调用alloc_m申请内存

func (h *mheap) alloc_m(npage uintptr, spanclass spanClass, large bool) *mspan {

_g_ := getg()

// To prevent excessive heap growth, before allocating n pages

// we need to sweep and reclaim at least n pages.

if h.sweepdone == 0 {

h.reclaim(npage)

}

lock(&h.lock)

// transfer stats from cache to global

memstats.heap_scan += uint64(_g_.m.mcache.local_scan)

_g_.m.mcache.local_scan = 0

memstats.tinyallocs += uint64(_g_.m.mcache.local_tinyallocs)

_g_.m.mcache.local_tinyallocs = 0

s := h.allocSpanLocked(npage, &memstats.heap_inuse) // 先上heap锁然后申请内存

if s != nil {

// Record span info, because gc needs to be

// able to map interior pointer to containing span.

atomic.Store(&s.sweepgen, h.sweepgen) // 保存申请到的span

h.sweepSpans[h.sweepgen/2%2].push(s) // Add to swept in-use list.

s.state = mSpanInUse // 更新状态

s.allocCount = 0

s.spanclass = spanclass

if sizeclass := spanclass.sizeclass(); sizeclass == 0 {

s.elemsize = s.npages << _PageShift

s.divShift = 0

s.divMul = 0

s.divShift2 = 0

s.baseMask = 0

} else {

s.elemsize = uintptr(class_to_size[sizeclass])

m := &class_to_divmagic[sizeclass]

s.divShift = m.shift

s.divMul = m.mul

s.divShift2 = m.shift2

s.baseMask = m.baseMask

}

// Mark in-use span in arena page bitmap.

arena, pageIdx, pageMask := pageIndexOf(s.base()) // 在arena中加入该span 并标记在使用

arena.pageInUse[pageIdx] |= pageMask

// update stats, sweep lists

h.pagesInUse += uint64(npage)

if large {

memstats.heap_objects++

mheap_.largealloc += uint64(s.elemsize)

mheap_.nlargealloc++

atomic.Xadd64(&memstats.heap_live, int64(npage<<_PageShift))

}

}

...

return s

}

继续查看allocSpanLocked函数;

// Allocates a span of the given size. h must be locked.

// The returned span has been removed from the

// free structures, but its state is still mSpanFree.

func (h *mheap) allocSpanLocked(npage uintptr, stat *uint64) *mspan {

var s *mspan

s = h.pickFreeSpan(npage) // 先检查是否有这么多空闲的业务

if s != nil {

goto HaveSpan // 如果有则不用分配

}

// On failure, grow the heap and try again.

if !h.grow(npage) { // 申请空间

return nil

}

s = h.pickFreeSpan(npage) // 再尝试获取新的内存

if s != nil {

goto HaveSpan

}

throw("grew heap, but no adequate free span found")

HaveSpan:

// Mark span in use.

if s.state != mSpanFree {

throw("candidate mspan for allocation is not free")

}

if s.npages < npage {

throw("candidate mspan for allocation is too small")

}

// First, subtract any memory that was released back to

// the OS from s. We will re-scavenge the trimmed section

// if necessary.

memstats.heap_released -= uint64(s.released())

if s.npages > npage { // 如果获取的页数比需要分配的多 则分割剩余的页数放入另外一个span中

// Trim extra and put it back in the heap.

t := (*mspan)(h.spanalloc.alloc())

t.init(s.base()+npage<<_PageShift, s.npages-npage)

s.npages = npage

h.setSpan(t.base()-1, s)

h.setSpan(t.base(), t)

h.setSpan(t.base()+t.npages*pageSize-1, t)

t.needzero = s.needzero

// If s was scavenged, then t may be scavenged.

start, end := t.physPageBounds()

if s.scavenged && start < end {

memstats.heap_released += uint64(end - start)

t.scavenged = true

}

s.state = mSpanManual // prevent coalescing with s

t.state = mSpanManual

h.freeSpanLocked(t, false, false, s.unusedsince)

s.state = mSpanFree

}

// "Unscavenge" s only AFTER splitting so that

// we only sysUsed whatever we actually need.

if s.scavenged {

// sysUsed all the pages that are actually available

// in the span. Note that we don't need to decrement

// heap_released since we already did so earlier.

sysUsed(unsafe.Pointer(s.base()), s.npages<<_PageShift)

s.scavenged = false

}

s.unusedsince = 0

h.setSpans(s.base(), npage, s)

*stat += uint64(npage << _PageShift)

memstats.heap_idle -= uint64(npage << _PageShift)

//println("spanalloc", hex(s.start<<_PageShift))

if s.inList() {

throw("still in list")

}

return s

}

此时查看grow函数的流程

// Try to add at least npage pages of memory to the heap,

// returning whether it worked.

//

// h must be locked.

func (h *mheap) grow(npage uintptr) bool {

ask := npage << _PageShift // 获取对应页数的实际内存大小

v, size := h.sysAlloc(ask) //调用sysAlloc分配指定大小的内存

if v == nil {

print("runtime: out of memory: cannot allocate ", ask, "-byte block (", memstats.heap_sys, " in use)\n")

return false

}

// Scavenge some pages out of the free treap to make up for

// the virtual memory space we just allocated. We prefer to

// scavenge the largest spans first since the cost of scavenging

// is proportional to the number of sysUnused() calls rather than

// the number of pages released, so we make fewer of those calls

// with larger spans.

h.scavengeLargest(size)

// Create a fake "in use" span and free it, so that the

// right coalescing happens.

s := (*mspan)(h.spanalloc.alloc()) // 获取一个span

s.init(uintptr(v), size/pageSize) // 初始化对应的span大小

h.setSpans(s.base(), s.npages, s)

atomic.Store(&s.sweepgen, h.sweepgen)

s.state = mSpanInUse

h.pagesInUse += uint64(s.npages) // 标记为可用

h.freeSpanLocked(s, false, true, 0)

return true

}

至此,三个不同大小的分配的主要过程基本上描述完成。

总结

go的内存分配机制与tcmalloc原理基本类似,使用过程也比较类似,通过不同线程缓存的cache来分配内存,通过唯一的heap来分配管理内存,heap通过全局的缓存的spanClass的对象来进行小对象的快速分配,提高全局分配内存的响应速度,如果是大对象则直接进行对象的分配管理。由于本人才疏学浅,如有错误请批评指正。