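MobileNet v1, v2, and v3 Network Models

1. Overall Network Architecture

1.1. MobileNet v1 Network

MobileNet v1 was proposed by a Google team in 2017 as a lightweight CNN aimed at mobile and embedded devices. Compared with conventional convolutional neural networks, it greatly reduces the number of parameters and the amount of computation at the cost of a small drop in accuracy (accuracy is 0.9% lower than VGG16, while the model has only 1/32 of VGG's parameters). The highlights of the network are:

- Depthwise Convolution (greatly reduces the amount of computation and the number of parameters)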

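- Two added hyperparameters, α and β: α is the width multiplier that scales the number of channels, and β is the resolution multiplier that scales the input resolution (written ρ in the paper)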
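To make the saving from depthwise convolution concrete, here is a small sketch that compares the weight count of a standard convolution with that of a depthwise convolution followed by a 1x1 pointwise convolution. The function names and the example channel sizes are illustrative assumptions, not values taken from the paper, and biases are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a k x k depthwise conv plus a 1x1 pointwise conv (no bias)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1x1 conv that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    k, c_in, c_out = 3, 32, 64  # example sizes, chosen only for illustration
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(std, sep, round(sep / std, 4))  # 18432 2336 0.1267
```

The ratio works out to 1/c_out + 1/k², so with 3x3 kernels the separable form needs roughly one eighth to one ninth of the weights.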

1.2. MobileNet v2 Network

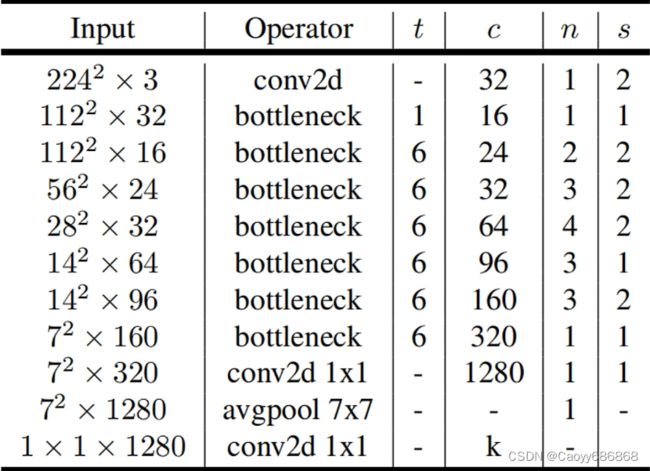

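MobileNet v2 was proposed by a Google team in 2018. Compared with MobileNet v1, it is more accurate and the model is smaller. The highlights of the network are:

- Inverted Residuals (inverted residual structure)

- Linear Bottlenecks

The parameters t, c, n, and s in the network configuration (the `# t, c, n, s` setting in the code of section 2.1) have the following meanings:

- t is the expansion factor

- c is the depth (number of channels) of the output feature map

- n is the number of times the bottleneck is repeated

- s is the stride (applied only to the first block of each repeated group; the remaining blocks use stride 1)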

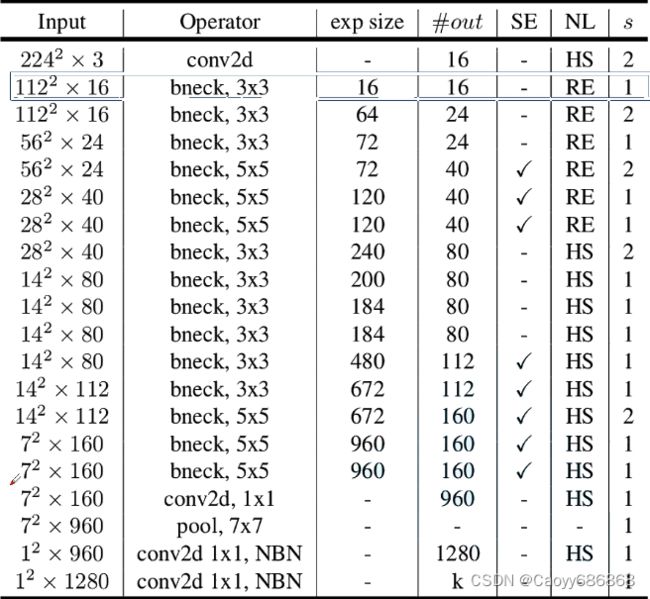
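The meaning of t, c, n, and s is easier to see when the configuration is unrolled into individual blocks. The sketch below does that for the setting list used in the MobileNetV2 code of section 2.1. `expand_setting` is a hypothetical helper written for this illustration, and it assumes a width multiplier of 1.0, so the first convolution outputs 32 channels.

```python
# (t, c, n, s) rows copied from the MobileNetV2 code in section 2.1
V2_SETTING = [
    [1, 16, 1, 1],
    [6, 24, 2, 2],
    [6, 32, 3, 2],
    [6, 64, 4, 2],
    [6, 96, 3, 1],
    [6, 160, 3, 2],
    [6, 320, 1, 1],
]


def expand_setting(setting, input_channel=32):
    """Unroll (t, c, n, s) rows into one (in_c, out_c, stride, t) tuple per block."""
    blocks = []
    for t, c, n, s in setting:
        for i in range(n):
            stride = s if i == 0 else 1  # s applies only to the first repeat
            blocks.append((input_channel, c, stride, t))
            input_channel = c
    return blocks


if __name__ == "__main__":
    for block in expand_setting(V2_SETTING):
        print(block)
```

Unrolled this way, the seven rows become 17 bottleneck blocks, and only four of them downsample.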

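1.3. MobileNet v3 Network

- Updated block (bneck): adds an SE module and updates the activation function

- Parameters searched with NAS (Neural Architecture Search)

- Redesigned time-consuming layers: the number of kernels in the first convolution layer is reduced (32→16) and the last stage is simplified

- Note: a shortcut connection exists only when stride == 1 and input_channel == output_channel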
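The updated activation functions are the "hard" versions of swish and sigmoid, which the code in section 2.2 uses through `nn.Hardswish` and `F.hardsigmoid`. As a rough sketch in plain Python (scalar inputs only, helper names chosen for this example), both can be written in terms of ReLU6:

```python
def relu6(x):
    """Clamp x to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


def hard_sigmoid(x):
    """Piecewise-linear approximation of sigmoid: relu6(x + 3) / 6."""
    return relu6(x + 3.0) / 6.0


def hard_swish(x):
    """Piecewise approximation of swish: x * relu6(x + 3) / 6."""
    return x * hard_sigmoid(x)


if __name__ == "__main__":
    for v in (-4.0, -3.0, 0.0, 1.0, 3.0):
        print(v, hard_sigmoid(v), hard_swish(v))
```

Both functions avoid the exponential in sigmoid, which is the usual motivation given for using them on mobile hardware.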

2. Building the Network Models

2.1. MobileNetV2 Network

from torch import nn
import torch


def _make_divisible(ch, divisor=8, min_ch=None):
    """
    This function is taken from the original tf repo.
    It ensures that all layers have a channel number that is divisible by 8
    It can be seen here:
    https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py
    """
    if min_ch is None:
        min_ch = divisor
    new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
    # Make sure that round down does not go down by more than 10%.
    if new_ch < 0.9 * ch:
        new_ch += divisor
    return new_ch

class ConvBNReLU(nn.Sequential):
    def __init__(self, in_channel, out_channel, kernel_size=3, stride=1, groups=1):
        # "same"-style padding for odd kernel sizes
        padding = (kernel_size - 1) // 2
        super(ConvBNReLU, self).__init__(
            nn.Conv2d(in_channel, out_channel, kernel_size, stride, padding, groups=groups, bias=False),
            nn.BatchNorm2d(out_channel),
            nn.ReLU6(inplace=True)
        )

class InvertedResidual(nn.Module):
    def __init__(self, in_channel, out_channel, stride, expand_ratio):
        super(InvertedResidual, self).__init__()
        hidden_channel = in_channel * expand_ratio
        # shortcut only when the spatial size and channel count are unchanged
        self.use_shortcut = stride == 1 and in_channel == out_channel

        layers = []
        if expand_ratio != 1:
            # 1x1 pointwise conv
            layers.append(ConvBNReLU(in_channel, hidden_channel, kernel_size=1))
        layers.extend([
            # 3x3 depthwise conv
            ConvBNReLU(hidden_channel, hidden_channel, stride=stride, groups=hidden_channel),
            # 1x1 pointwise conv(linear)
            nn.Conv2d(hidden_channel, out_channel, kernel_size=1, bias=False),
            nn.BatchNorm2d(out_channel),
        ])

        self.conv = nn.Sequential(*layers)

    def forward(self, x):
        if self.use_shortcut:
            return x + self.conv(x)
        else:
            return self.conv(x)

class MobileNetV2(nn.Module):
    def __init__(self, num_classes=1000, alpha=1.0, round_nearest=8):
        super(MobileNetV2, self).__init__()
        block = InvertedResidual
        input_channel = _make_divisible(32 * alpha, round_nearest)
        last_channel = _make_divisible(1280 * alpha, round_nearest)

        inverted_residual_setting = [
            # t, c, n, s
            [1, 16, 1, 1],
            [6, 24, 2, 2],
            [6, 32, 3, 2],
            [6, 64, 4, 2],
            [6, 96, 3, 1],
            [6, 160, 3, 2],
            [6, 320, 1, 1],
        ]

        features = []
        # conv1 layer
        features.append(ConvBNReLU(3, input_channel, stride=2))
        # building inverted residual blocks
        for t, c, n, s in inverted_residual_setting:
            output_channel = _make_divisible(c * alpha, round_nearest)
            for i in range(n):
                # only the first block of each group uses stride s
                stride = s if i == 0 else 1
                features.append(block(input_channel, output_channel, stride, expand_ratio=t))
                input_channel = output_channel
        # building last several layers
        features.append(ConvBNReLU(input_channel, last_channel, 1))
        # combine feature layers
        self.features = nn.Sequential(*features)

        # building classifier
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.classifier = nn.Sequential(
            nn.Dropout(0.2),
            nn.Linear(last_channel, num_classes)
        )

        # weight initialization
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out')
                if m.bias is not None:
                    nn.init.zeros_(m.bias)
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.ones_(m.weight)
                nn.init.zeros_(m.bias)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.zeros_(m.bias)

    def forward(self, x):
        x = self.features(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.classifier(x)
        return x

2.2. MobileNetV3 Network

from typing import Callable, List, Optional
from functools import partial

import torch
from torch import nn, Tensor
from torch.nn import functional as F


def _make_divisible(ch, divisor=8, min_ch=None):
    """
    This function is taken from the original tf repo.
    It ensures that all layers have a channel number that is divisible by 8
    It can be seen here:
    https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py
    """
    if min_ch is None:
        min_ch = divisor
    new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
    # Make sure that round down does not go down by more than 10%.
    if new_ch < 0.9 * ch:
        new_ch += divisor
    return new_ch

class ConvBNActivation(nn.Sequential):
    def __init__(self,
                 in_planes: int,
                 out_planes: int,
                 kernel_size: int = 3,
                 stride: int = 1,
                 groups: int = 1,
                 norm_layer: Optional[Callable[..., nn.Module]] = None,
                 activation_layer: Optional[Callable[..., nn.Module]] = None):
        padding = (kernel_size - 1) // 2
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        if activation_layer is None:
            activation_layer = nn.ReLU6
        super(ConvBNActivation, self).__init__(nn.Conv2d(in_channels=in_planes,
                                                         out_channels=out_planes,
                                                         kernel_size=kernel_size,
                                                         stride=stride,
                                                         padding=padding,
                                                         groups=groups,
                                                         bias=False),
                                               norm_layer(out_planes),
                                               activation_layer(inplace=True))

class SqueezeExcitation(nn.Module):
    def __init__(self, input_c: int, squeeze_factor: int = 4):
        super(SqueezeExcitation, self).__init__()
        squeeze_c = _make_divisible(input_c // squeeze_factor, 8)
        # 1x1 convolutions act as the two fully connected layers
        self.fc1 = nn.Conv2d(input_c, squeeze_c, 1)
        self.fc2 = nn.Conv2d(squeeze_c, input_c, 1)

    def forward(self, x: Tensor) -> Tensor:
        scale = F.adaptive_avg_pool2d(x, output_size=(1, 1))
        scale = self.fc1(scale)
        scale = F.relu(scale, inplace=True)
        scale = self.fc2(scale)
        scale = F.hardsigmoid(scale, inplace=True)
        # per-channel reweighting of the input
        return scale * x

class InvertedResidualConfig:
    def __init__(self,
                 input_c: int,
                 kernel: int,
                 expanded_c: int,
                 out_c: int,
                 use_se: bool,
                 activation: str,
                 stride: int,
                 width_multi: float):
        self.input_c = self.adjust_channels(input_c, width_multi)
        self.kernel = kernel
        self.expanded_c = self.adjust_channels(expanded_c, width_multi)
        self.out_c = self.adjust_channels(out_c, width_multi)
        self.use_se = use_se
        self.use_hs = activation == "HS"  # whether using h-swish activation
        self.stride = stride

    @staticmethod
    def adjust_channels(channels: int, width_multi: float):
        return _make_divisible(channels * width_multi, 8)

class InvertedResidual(nn.Module):
    def __init__(self,
                 cnf: InvertedResidualConfig,
                 norm_layer: Callable[..., nn.Module]):
        super(InvertedResidual, self).__init__()

        if cnf.stride not in [1, 2]:
            raise ValueError("illegal stride value.")

        self.use_res_connect = (cnf.stride == 1 and cnf.input_c == cnf.out_c)

        layers: List[nn.Module] = []
        activation_layer = nn.Hardswish if cnf.use_hs else nn.ReLU

        # expand
        if cnf.expanded_c != cnf.input_c:
            layers.append(ConvBNActivation(cnf.input_c,
                                           cnf.expanded_c,
                                           kernel_size=1,
                                           norm_layer=norm_layer,
                                           activation_layer=activation_layer))

        # depthwise
        layers.append(ConvBNActivation(cnf.expanded_c,
                                       cnf.expanded_c,
                                       kernel_size=cnf.kernel,
                                       stride=cnf.stride,
                                       groups=cnf.expanded_c,
                                       norm_layer=norm_layer,
                                       activation_layer=activation_layer))

        if cnf.use_se:
            layers.append(SqueezeExcitation(cnf.expanded_c))

        # project
        layers.append(ConvBNActivation(cnf.expanded_c,
                                       cnf.out_c,
                                       kernel_size=1,
                                       norm_layer=norm_layer,
                                       activation_layer=nn.Identity))

        self.block = nn.Sequential(*layers)
        self.out_channels = cnf.out_c
        self.is_strided = cnf.stride > 1

    def forward(self, x: Tensor) -> Tensor:
        result = self.block(x)
        if self.use_res_connect:
            result += x

        return result

class MobileNetV3(nn.Module):
    def __init__(self,
                 inverted_residual_setting: List[InvertedResidualConfig],
                 last_channel: int,
                 num_classes: int = 1000,
                 block: Optional[Callable[..., nn.Module]] = None,
                 norm_layer: Optional[Callable[..., nn.Module]] = None):
        super(MobileNetV3, self).__init__()

        if not inverted_residual_setting:
            raise ValueError("The inverted_residual_setting should not be empty.")
        elif not (isinstance(inverted_residual_setting, List) and
                  all([isinstance(s, InvertedResidualConfig) for s in inverted_residual_setting])):
            raise TypeError("The inverted_residual_setting should be List[InvertedResidualConfig]")

        if block is None:
            block = InvertedResidual

        if norm_layer is None:
            norm_layer = partial(nn.BatchNorm2d, eps=0.001, momentum=0.01)

        layers: List[nn.Module] = []

        # building first layer
        firstconv_output_c = inverted_residual_setting[0].input_c
        layers.append(ConvBNActivation(3,
                                       firstconv_output_c,
                                       kernel_size=3,
                                       stride=2,
                                       norm_layer=norm_layer,
                                       activation_layer=nn.Hardswish))
        # building inverted residual blocks
        for cnf in inverted_residual_setting:
            layers.append(block(cnf, norm_layer))

        # building last several layers
        lastconv_input_c = inverted_residual_setting[-1].out_c
        lastconv_output_c = 6 * lastconv_input_c
        layers.append(ConvBNActivation(lastconv_input_c,
                                       lastconv_output_c,
                                       kernel_size=1,
                                       norm_layer=norm_layer,
                                       activation_layer=nn.Hardswish))
        self.features = nn.Sequential(*layers)
        self.avgpool = nn.AdaptiveAvgPool2d(1)
        self.classifier = nn.Sequential(nn.Linear(lastconv_output_c, last_channel),
                                        nn.Hardswish(inplace=True),
                                        nn.Dropout(p=0.2, inplace=True),
                                        nn.Linear(last_channel, num_classes))

        # initial weights
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode="fan_out")
                if m.bias is not None:
                    nn.init.zeros_(m.bias)
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.ones_(m.weight)
                nn.init.zeros_(m.bias)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.zeros_(m.bias)

    def _forward_impl(self, x: Tensor) -> Tensor:
        x = self.features(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.classifier(x)

        return x

    def forward(self, x: Tensor) -> Tensor:
        return self._forward_impl(x)

def mobilenet_v3_large(num_classes: int = 1000,
                       reduced_tail: bool = False) -> MobileNetV3:
    """
    Constructs a large MobileNetV3 architecture from
    "Searching for MobileNetV3".

    weights_link:
    https://download.pytorch.org/models/mobilenet_v3_large-8738ca79.pth

    Args:
        num_classes (int): number of classes
        reduced_tail (bool): If True, reduces the channel counts of all feature layers
            between C4 and C5 by 2. It is used to reduce the channel redundancy in the
            backbone for Detection and Segmentation.
    """
    width_multi = 1.0
    bneck_conf = partial(InvertedResidualConfig, width_multi=width_multi)
    adjust_channels = partial(InvertedResidualConfig.adjust_channels, width_multi=width_multi)

    reduce_divider = 2 if reduced_tail else 1

    inverted_residual_setting = [
        # input_c, kernel, expanded_c, out_c, use_se, activation, stride
        bneck_conf(16, 3, 16, 16, False, "RE", 1),
        bneck_conf(16, 3, 64, 24, False, "RE", 2),  # C1
        bneck_conf(24, 3, 72, 24, False, "RE", 1),
        bneck_conf(24, 5, 72, 40, True, "RE", 2),  # C2
        bneck_conf(40, 5, 120, 40, True, "RE", 1),
        bneck_conf(40, 5, 120, 40, True, "RE", 1),
        bneck_conf(40, 3, 240, 80, False, "HS", 2),  # C3
        bneck_conf(80, 3, 200, 80, False, "HS", 1),
        bneck_conf(80, 3, 184, 80, False, "HS", 1),
        bneck_conf(80, 3, 184, 80, False, "HS", 1),
        bneck_conf(80, 3, 480, 112, True, "HS", 1),
        bneck_conf(112, 3, 672, 112, True, "HS", 1),
        bneck_conf(112, 5, 672, 160 // reduce_divider, True, "HS", 2),  # C4
        bneck_conf(160 // reduce_divider, 5, 960 // reduce_divider, 160 // reduce_divider, True, "HS", 1),
        bneck_conf(160 // reduce_divider, 5, 960 // reduce_divider, 160 // reduce_divider, True, "HS", 1),
    ]
    last_channel = adjust_channels(1280 // reduce_divider)  # C5

    return MobileNetV3(inverted_residual_setting=inverted_residual_setting,
                       last_channel=last_channel,
                       num_classes=num_classes)

def mobilenet_v3_small(num_classes: int = 1000,
                       reduced_tail: bool = False) -> MobileNetV3:
    """
    Constructs a small MobileNetV3 architecture from
    "Searching for MobileNetV3".

    weights_link:
    https://download.pytorch.org/models/mobilenet_v3_small-047dcff4.pth

    Args:
        num_classes (int): number of classes
        reduced_tail (bool): If True, reduces the channel counts of all feature layers
            between C4 and C5 by 2. It is used to reduce the channel redundancy in the
            backbone for Detection and Segmentation.
    """
    width_multi = 1.0
    bneck_conf = partial(InvertedResidualConfig, width_multi=width_multi)
    adjust_channels = partial(InvertedResidualConfig.adjust_channels, width_multi=width_multi)

    reduce_divider = 2 if reduced_tail else 1

    inverted_residual_setting = [
        # input_c, kernel, expanded_c, out_c, use_se, activation, stride
        bneck_conf(16, 3, 16, 16, True, "RE", 2),  # C1
        bneck_conf(16, 3, 72, 24, False, "RE", 2),  # C2
        bneck_conf(24, 3, 88, 24, False, "RE", 1),
        bneck_conf(24, 5, 96, 40, True, "HS", 2),  # C3
        bneck_conf(40, 5, 240, 40, True, "HS", 1),
        bneck_conf(40, 5, 240, 40, True, "HS", 1),
        bneck_conf(40, 5, 120, 48, True, "HS", 1),
        bneck_conf(48, 5, 144, 48, True, "HS", 1),
        bneck_conf(48, 5, 288, 96 // reduce_divider, True, "HS", 2),  # C4
        bneck_conf(96 // reduce_divider, 5, 576 // reduce_divider, 96 // reduce_divider, True, "HS", 1),
        bneck_conf(96 // reduce_divider, 5, 576 // reduce_divider, 96 // reduce_divider, True, "HS", 1)
    ]
    last_channel = adjust_channels(1024 // reduce_divider)  # C5

    return MobileNetV3(inverted_residual_setting=inverted_residual_setting,
                       last_channel=last_channel,
                       num_classes=num_classes)
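As a quick check on the MobileNetV3-Small configuration above, the sketch below lists (input_c, out_c, stride) for each bneck with `reduced_tail=False`, then counts how many blocks satisfy the shortcut condition and how much the input is downsampled overall. The tuples are copied from the configuration; the helper names and the 224x224 input size are assumptions made for this example.

```python
# (input_c, out_c, stride) for each bneck of MobileNetV3-Small, reduced_tail=False
SMALL_BLOCKS = [
    (16, 16, 2), (16, 24, 2), (24, 24, 1), (24, 40, 2),
    (40, 40, 1), (40, 40, 1), (40, 48, 1), (48, 48, 1),
    (48, 96, 2), (96, 96, 1), (96, 96, 1),
]


def has_shortcut(input_c, out_c, stride):
    """Same condition as use_res_connect in InvertedResidual."""
    return stride == 1 and input_c == out_c


def total_stride(blocks, first_conv_stride=2):
    """Product of the first conv stride and every block stride."""
    total = first_conv_stride
    for _, _, stride in blocks:
        total *= stride
    return total


shortcut_count = sum(has_shortcut(*b) for b in SMALL_BLOCKS)
print(shortcut_count)                     # 6 of the 11 blocks use a shortcut
print(total_stride(SMALL_BLOCKS))         # 32
print(224 // total_stride(SMALL_BLOCKS))  # 7, feature map side for a 224x224 input
```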

3. Training the Network
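The training script in this section loads ImageNet-pretrained weights but keeps only the entries whose element count matches the new model, which is how the 1000-class classifier weights get dropped when `num_classes=5`. Here is a minimal sketch of that filtering idea using shape tuples in place of real tensors. The key names and shapes are illustrative assumptions, and `numel` is a stand-in for `Tensor.numel()`.

```python
from math import prod


def numel(shape):
    """Number of elements in a tensor of the given shape."""
    return prod(shape)


def filter_matching(pretrained, model):
    """Keep pretrained entries that exist in the model with the same element count."""
    return {k: v for k, v in pretrained.items()
            if k in model and numel(model[k]) == numel(v)}


# hypothetical shapes: pretrained on 1000 classes, new model has 5 classes
pretrained_shapes = {
    "features.0.0.weight": (32, 3, 3, 3),
    "classifier.1.weight": (1000, 1280),
    "classifier.1.bias": (1000,),
}
model_shapes = {
    "features.0.0.weight": (32, 3, 3, 3),
    "classifier.1.weight": (5, 1280),
    "classifier.1.bias": (5,),
}

kept = filter_matching(pretrained_shapes, model_shapes)
print(sorted(kept))  # ['features.0.0.weight']
```

Comparing element counts is a loose check: two tensors with different shapes but the same number of elements would pass it, so comparing shapes directly may be the safer choice.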

import os
import sys
import json

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import transforms, datasets
from tqdm import tqdm

from model_v2 import MobileNetV2


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print("using {} device.".format(device))

    batch_size = 16
    epochs = 5

    data_transform = {
        "train": transforms.Compose([
            transforms.RandomResizedCrop(224),
            transforms.RandomHorizontalFlip(),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
        "val": transforms.Compose([
            transforms.Resize(256),
            transforms.CenterCrop(224),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ])
    }

    data_root = os.path.abspath(os.path.join(os.getcwd(), "../.."))  # get data root path
    # join the path components one by one to avoid backslash escapes inside the string
    image_path = os.path.join(data_root, "000000", "pytorch_图像分类",
                              "deep-learning-for-image-processing-master", "data_set", "flower_data")
    assert os.path.exists(image_path), "{} path does not exist.".format(image_path)

    train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                         transform=data_transform["train"])
    train_num = len(train_dataset)

    # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}
    flower_list = train_dataset.class_to_idx
    cla_dict = dict((val, key) for key, val in flower_list.items())
    # write dict into json file
    json_str = json.dumps(cla_dict, indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
    print("Using {} dataloader workers every process.".format(nw))

    train_loader = torch.utils.data.DataLoader(
        train_dataset,
        batch_size,
        shuffle=True,
        num_workers=nw
    )

    validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                            transform=data_transform["val"])
    val_num = len(validate_dataset)
    validate_loader = torch.utils.data.DataLoader(
        validate_dataset,
        batch_size,
        shuffle=False,
        num_workers=nw
    )

    print("using {} images for training,{} images for validation.".format(train_num, val_num))

    # create model
    net = MobileNetV2(num_classes=5)

    # load pretrain weights
    # download url: https://download.pytorch.org/models/mobilenet_v2-b0353104.pth
    model_weight_path = "./mobilenet_v2.pth"
    assert os.path.exists(model_weight_path), "file {} does not exist.".format(model_weight_path)
    pre_weights = torch.load(model_weight_path, map_location='cpu')

    # drop weights whose element count does not match (the classifier weights)
    net_state = net.state_dict()
    pre_dict = {k: v for k, v in pre_weights.items()
                if k in net_state and net_state[k].numel() == v.numel()}
    missing_keys, unexpect_keys = net.load_state_dict(pre_dict, strict=False)

    # freeze features weights
    for param in net.features.parameters():
        param.requires_grad = False

    net.to(device)

    # define loss function
    loss_function = nn.CrossEntropyLoss()

    # construct an optimizer over the trainable parameters only
    params = [p for p in net.parameters() if p.requires_grad]
    optimizer = optim.Adam(params, lr=0.0001)

    best_acc = 0.0
    save_path = './MobileNetV2.pth'
    train_steps = len(train_loader)
    # print(train_steps)
    for epoch in range(epochs):
        # train
        net.train()
        running_loss = 0.0
        train_bar = tqdm(train_loader, file=sys.stdout)
        for step, data in enumerate(train_bar):
            images, labels = data
            optimizer.zero_grad()
            logits = net(images.to(device))
            loss = loss_function(logits, labels.to(device))
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()

            train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1, epochs, loss)

        # validate
        net.eval()
        acc = 0.0  # accumulate the number of correct predictions per epoch
        with torch.no_grad():
            val_bar = tqdm(validate_loader, file=sys.stdout)
            for val_data in val_bar:
                val_images, val_labels = val_data
                outputs = net(val_images.to(device))
                # loss = loss_function(outputs,val_labels)
                predict_y = torch.max(outputs, dim=1)[1]
                acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

                val_bar.desc = "valid epoch[{}/{}]".format(epoch + 1, epochs)

        val_accurate = acc / val_num
        print("[epoch %d] train loss:%.3f val_accuracy:%.3f" %
              (epoch + 1, running_loss / train_steps, val_accurate))

        # keep only the best-performing weights
        if val_accurate > best_acc:
            best_acc = val_accurate
            torch.save(net.state_dict(), save_path)

    print("Finished Training!!")

if __name__ == "__main__":
    main()

4. Model Testing

import os
import json

import torch
from PIL import Image
from torchvision import transforms
import matplotlib.pyplot as plt

from model_v2 import MobileNetV2


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print("use {} device.".format(device))

    data_transform = transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ])

    # load image
    img_path = "./tulip.jpg"
    assert os.path.exists(img_path), "file:'{}'does not exist.".format(img_path)
    img = Image.open(img_path)
    plt.imshow(img)
    # [N, C, H, W]
    img = data_transform(img)
    # expand batch dimension
    img = torch.unsqueeze(img, dim=0)

    # read class index dictionary
    json_path = "./class_indices.json"
    assert os.path.exists(json_path), "file:'{}'does not exist.".format(json_path)

    with open(json_path, 'r') as f:
        class_indict = json.load(f)

    # create model
    model = MobileNetV2(num_classes=5).to(device)
    # load model weights
    model_weight_path = "./MobileNetV2.pth"
    assert os.path.exists(model_weight_path), "file:'{}'does not exist.".format(model_weight_path)
    model.load_state_dict(torch.load(model_weight_path, map_location=device))
    model.eval()
    with torch.no_grad():
        # predict class
        output = torch.squeeze(model(img.to(device))).cpu()
        predict = torch.softmax(output, dim=0)
        predict_cla = torch.argmax(predict).numpy()

    print_res = "class:{} prob:{:.3}".format(class_indict[str(predict_cla)],
                                              predict[predict_cla].numpy())
    plt.title(print_res)
    for i in range(len(predict)):
        print("class:{:10} prob:{:.3f}".format(class_indict[str(i)], predict[i].numpy()))
    plt.show()


if __name__ == "__main__":
    main()
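The prediction step above turns the raw network outputs into probabilities with softmax and then picks the largest one. Here is a small pure-Python sketch of the same computation. The logits are made-up numbers, and the class dictionary follows the format of `class_indices.json` with the class names from the comment in the training script.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# same layout as class_indices.json: string index -> class name
class_indict = {"0": "daisy", "1": "dandelion", "2": "roses", "3": "sunflower", "4": "tulips"}

logits = [0.1, -1.2, 0.3, 0.0, 2.5]  # hypothetical network outputs for one image
probs = softmax(logits)
best = max(range(len(probs)), key=probs.__getitem__)  # index of the largest probability

print("class:{} prob:{:.3f}".format(class_indict[str(best)], probs[best]))
for i, p in enumerate(probs):
    print("class:{:10} prob:{:.3f}".format(class_indict[str(i)], p))
```

The JSON keys are strings, which is why the index is converted with `str()` before the lookup, just as in the script.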