[Java Project] Haoke Rental: Database Clustering & Deployment

Prerequisites: [MySQL]

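Problems with the Current System Architecture

The DB server currently runs as a single node. Under high concurrency and massive data volumes, a single-node architecture has serious problems, so we need a MySQL cluster to handle the load and the storage.

MySQL Cluster Solutions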

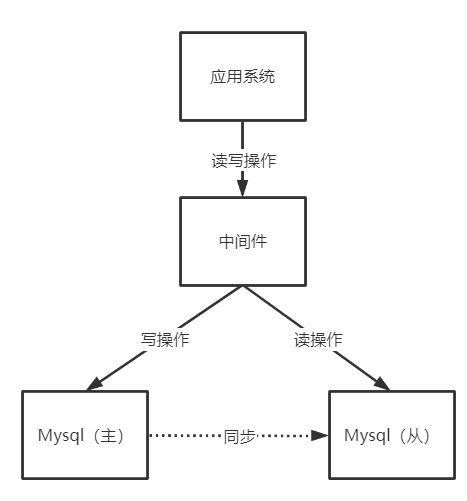

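Read/Write Splitting Architecture

Most applications are "read-heavy, write-light": most of the pressure on the database comes from reads.

With a database cluster:

One node is the master. It is responsible for writing data, and we call it the write database. All the other nodes are slaves. They are responsible for reading data, and we call them read databases.

- The read and write databases hold the same data

- Writes must go to the write database

- Reads must go to the read databases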

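Problems:

- The application has to connect to multiple nodes, which makes application development more complex.

  - This can be solved with a middleware such as Mycat.

  - If it is handled inside the application, Spring AOP can be used.

- Synchronization between master and slaves is asynchronous, so it is only weakly consistent. Data written to the master may not yet be readable from a slave, or it may even be lost. This makes the setup unsuitable for applications with strict data-safety requirements, such as payments.

  - This problem is solved with a PXC cluster.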
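A toy Python model makes the weak-consistency problem concrete. It is not MySQL code. The class `AsyncReplicatedDB` and its methods are hypothetical, and replication is reduced to an explicit `replicate()` call. The master acknowledges a write immediately, so a read from the slave before replication runs sees nothing.

```python
class AsyncReplicatedDB:
    """Toy model of asynchronous master-slave replication (illustration only)."""

    def __init__(self):
        self.master = {}
        self.slave = {}
        self.pending = []  # changes committed on the master but not yet applied on the slave

    def write(self, key, value):
        # The master commits and acknowledges right away; replication happens later.
        self.master[key] = value
        self.pending.append((key, value))
        return "OK"

    def read_from_slave(self, key):
        return self.slave.get(key)

    def replicate(self):
        # Apply every pending change on the slave, in commit order.
        for key, value in self.pending:
            self.slave[key] = value
        self.pending.clear()


db = AsyncReplicatedDB()
db.write("order:1", "paid")
print(db.read_from_slave("order:1"))  # None: the slave has not caught up yet
db.replicate()
print(db.read_from_slave("order:1"))  # paid
```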

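Middleware

When the application connects to several database nodes itself, its complexity grows. A middleware layer removes that burden:

- The application connects only to the middleware, not to multiple database nodes

- The application does not distinguish reads from writes; it sends all reads and writes to the middleware

- The middleware tells reads and writes apart, sending reads to slave nodes and writes to the master node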
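The routing rule above can be sketched in a few lines of Python. This is a simplified illustration, not Mycat's implementation. `ReadWriteRouter` is hypothetical. It treats only statements starting with SELECT or SHOW as reads and rotates those reads across the slaves.

```python
import re

# Statements treated as reads; everything else goes to the master.
# This classification is a simplifying assumption for illustration.
READ_PATTERN = re.compile(r"^\s*(select|show)\b", re.IGNORECASE)


class ReadWriteRouter:
    """Hypothetical sketch of middleware-style read/write splitting."""

    def __init__(self, master, slaves):
        self.master = master
        self.slaves = list(slaves)
        self._next = 0

    def route(self, sql):
        if READ_PATTERN.match(sql) and self.slaves:
            # Rotate reads across the available slaves.
            node = self.slaves[self._next % len(self.slaves)]
            self._next += 1
            return node
        # Writes (and reads when no slave exists) go to the master.
        return self.master


router = ReadWriteRouter("master:3306", ["slave:3307"])
print(router.route("SELECT * FROM tb_ad"))           # slave:3307
print(router.route("INSERT INTO tb_ad VALUES (1)"))  # master:3306
```

A real middleware also has to handle cases this sketch ignores, such as `SELECT ... FOR UPDATE` or reads inside a transaction. Those usually need to go to the master.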

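The middleware's performance then becomes the system bottleneck.

- Deploying two middleware instances makes the middleware layer reliable, but the application still has to connect to both of them, which adds complexity.

Load Balancing

This completes the high-availability framework for the master-slave replication architecture.

PXC Cluster Architecture

All of the structures above are based on MySQL master-slave replication, which leaves the weak-consistency problem unsolved. This architecture clearly cannot handle requirements that demand strong consistency, such as transaction data.

PXC provides strong read/write consistency. Data written on any node is synchronized to the other nodes at the same time, so it can be read from any other node without delay.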

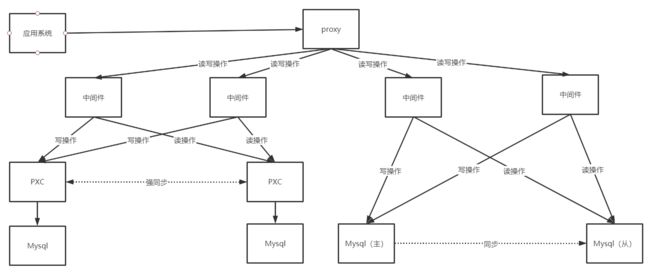

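Hybrid Architecture

PXC achieves strong transactional consistency, but it pays for that consistency with performance. Business scenarios without a strong-consistency requirement are a poor fit for PXC. A reasonably complete system architecture therefore combines both approaches.

Setting Up the Master-Slave Replication Architecture

We use the Percona derivative of MySQL, version 5.7.23, deployed with Docker.

How Master-Slave Replication Works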

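- The master records incremental data changes in its binary log, the file specified by log-bin in the config file. These records are called binary log events.

- The slave copies the master's binary log events into its relay log.

- The slave replays the events in its relay log and applies the changes to its own data (data replay).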
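The three steps can be modelled as a small Python simulation. It is only an illustration under simplifying assumptions. Events are plain (key, value) pairs. The methods `io_thread` and `sql_thread` stand in for the slave's I/O thread (binlog to relay log) and SQL thread (relay log to data).

```python
from dataclasses import dataclass, field


@dataclass
class Master:
    data: dict = field(default_factory=dict)
    binlog: list = field(default_factory=list)  # binary log events, in commit order

    def execute(self, key, value):
        self.data[key] = value
        self.binlog.append((key, value))  # step 1: record the change as a binlog event


@dataclass
class Slave:
    data: dict = field(default_factory=dict)
    relay_log: list = field(default_factory=list)
    io_pos: int = 0   # how far the I/O thread has read the master's binlog
    sql_pos: int = 0  # how far the SQL thread has replayed the relay log

    def io_thread(self, master):
        # Step 2: copy new binlog events from the master into the local relay log.
        self.relay_log.extend(master.binlog[self.io_pos:])
        self.io_pos = len(master.binlog)

    def sql_thread(self):
        # Step 3: replay relay-log events against the slave's own data.
        for key, value in self.relay_log[self.sql_pos:]:
            self.data[key] = value
        self.sql_pos = len(self.relay_log)


master, slave = Master(), Slave()
master.execute("tb_ad:1", "UniCity")
slave.io_thread(master)   # binlog events -> relay log
slave.sql_thread()        # relay log -> slave data
print(slave.data == master.data)  # True
```

Because the two steps are separate, a slave can fetch events without having applied them yet. That gap is one source of replication lag.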

Notes

- The master and slave DB servers must run the same database version

- The master and slave DB servers must start with the same data

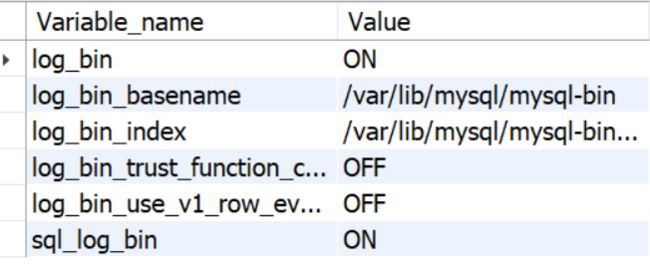

- The master DB server must have the binary log enabled

- Every server, master or slave, must have a unique server_id

Master Configuration

# Create the master's config and data directories

mkdir -p /data/mysql/master01

cd /data/mysql/master01

mkdir conf data

# Open up read/write permissions on the directories

chmod 777 data -R

chmod 777 conf

# Create the config file

cd /data/mysql/master01/conf

vim my.cnf

Master config file

# Enable master-slave replication: master configuration

[mysqld]

log-bin = mysql-bin

# Set the master's server-id

server-id=1

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

binlog_format=MIXED

# Databases to replicate; if none is specified, all databases are replicated

##binlog-do-db=my_test

Create the master container

docker create --name percona-master01 -v /data/mysql/master01/data:/var/lib/mysql -v /data/mysql/master01/conf:/etc/my.cnf.d -p 3306:3306 -e MYSQL_ROOT_PASSWORD=root percona:5.7.23

# Start

docker start percona-master01 && docker logs -f percona-master01

Create the replication user on the master

-- Create user slave01 (password slave01) and grant it the REPLICATION SLAVE privilege

create user 'slave01'@'%' identified by 'slave01';

grant replication slave on *.* to 'slave01'@'%';

-- 刷新配置

flush privileges;

-- 查看master状态

show master status;

-- 查看二进制日志相关的配置项

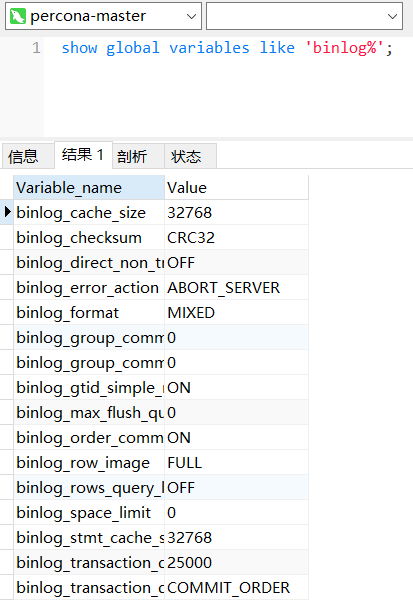

show global variables like 'binlog%';

-- 查看server相关的配置项

show global variables like 'server%';

出现问题:[Err] 1055 - Expression #1 of ORDER BY clause is not in GROUP BY clause and错误

解决方案,在my.cnf配置文件中设置

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

查看主库状态

从库配置

#创建目录

mkdir /data/mysql/slave01

cd /data/mysql/slave01

mkdir conf data

chmod 777 data -R

chmod 777 conf

# Create the config file

cd /data/mysql/slave01/conf

vim my.cnf

Slave config file

[mysqld]

server-id=2 # server id; must not be reused

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

Create the container

# Create the container

docker create --name percona-slave01 -v /data/mysql/slave01/data:/var/lib/mysql -v /data/mysql/slave01/conf:/etc/my.cnf.d -p 3307:3306 -e MYSQL_ROOT_PASSWORD=root percona:5.7.23

# Start the container

docker start percona-slave01 && docker logs -f percona-slave01

Set the master information

# Set the master information (master_log_file and master_log_pos come from SHOW MASTER STATUS on the master)

CHANGE MASTER TO

master_host='8.140.130.91',

master_user='slave01',

master_password='slave01',

master_port=3306,

master_log_file='mysql-bin.000003',

master_log_pos=745;

# Start replication

start slave;

Test

show slave status;
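Of the many columns `show slave status` returns, the usual health indicators are `Slave_IO_Running` and `Slave_SQL_Running` (both should be `Yes`) and `Seconds_Behind_Master`. Below is a minimal Python check. It assumes your MySQL client has already fetched the row into a dict. The helper name is hypothetical.

```python
def replication_healthy(status):
    """Return (ok, reasons) for one SHOW SLAVE STATUS row given as a dict.

    Only a few real column names are checked; fetching the row is left
    to the caller (for example with any MySQL driver).
    """
    reasons = []
    for column in ("Slave_IO_Running", "Slave_SQL_Running"):
        if status.get(column) != "Yes":
            reasons.append(f"{column}={status.get(column)}")
    # NULL (None) here usually means one of the replication threads is not running.
    if status.get("Seconds_Behind_Master") is None:
        reasons.append("Seconds_Behind_Master is NULL")
    return (not reasons, reasons)


row = {"Slave_IO_Running": "Yes", "Slave_SQL_Running": "No", "Seconds_Behind_Master": None}
print(replication_healthy(row))
```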

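Problems

1. After a server restart, replication may break, and Slave_SQL_Running shows No

Reset the slave configuration, using the values that SHOW MASTER STATUS currently reports on the master: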

STOP SLAVE IO_THREAD FOR CHANNEL '';

CHANGE MASTER TO

master_host='8.140.130.91',

master_user='slave01',

master_password='slave01',

master_port=3306,

master_log_file='mysql-bin.000005',

master_log_pos=1217;

start slave;

show slave status;

2. World-writable config file ‘/etc/my.cnf’ is ignored

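Cause:

The file is world-writable, so any user can modify it. MySQL ignores such a file because other users could tamper with it.

Fix: keep the conf directory at 777, but set the files inside it (my.cnf) to 644, e.g. chmod 644 my.cnf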
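Python's standard `os` and `stat` modules can reproduce the check MySQL performs. This is a minimal sketch. The function names are hypothetical, and you choose the path to pass, for example the mounted `conf/my.cnf`.

```python
import os
import stat


def is_world_writable(path):
    """True if 'others' have write permission on path (the condition MySQL warns about)."""
    return bool(os.stat(path).st_mode & stat.S_IWOTH)


def fix_config_permissions(path):
    # 0o644: owner can read/write, group and others can only read.
    os.chmod(path, 0o644)
```

Calling `fix_config_permissions` on the config file has the same effect as `chmod 644 my.cnf`.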

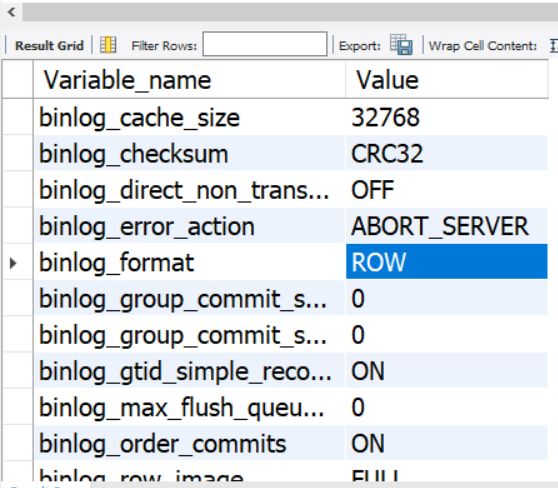

Three Replication Modes

show global variables like 'binlog%';

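The binary log variables show that the default format is ROW. MySQL provides three modes, which correspond to three binlog formats: STATEMENT, ROW, and MIXED.

STATEMENT mode (SBR): statement-based replication

Every SQL statement that modifies data is recorded in the binlog.

- Pros: the change to every individual row does not need to be recorded, which reduces binlog volume

- Cons: in some cases master and slave data can diverge (e.g. with sleep(), last_insert_id(), user-defined functions (UDFs))

ROW mode (RBR): row-based replication

- Pros: the context of each SQL statement is not recorded, only which rows changed and what they changed to. This avoids the cases where calls to stored procedures, functions, or triggers cannot be replicated correctly

- Cons: it produces a large amount of log data, especially for statements that modify many rows (e.g. a bulk UPDATE or DELETE)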

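MIXED mode (MBR): mixed-based replication

A combination of the two modes above:

- Ordinary statements are written to the binlog in STATEMENT format

- Operations that STATEMENT mode cannot replicate safely are written in ROW format

MySQL chooses the logging format for each SQL statement it executes.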
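A non-deterministic function shows most clearly why STATEMENT mode can diverge while ROW mode cannot. In this hypothetical Python sketch, each server has its own random generator, which stands in for a function such as UUID(). Replaying the statement re-evaluates the function on the slave. Replaying the row copies the value the master stored.

```python
import random


def set_to_random(rng):
    """Stand-in for a non-deterministic SQL function such as UUID()."""
    return rng.random()


def run_on_master(statement, master_rng):
    # The master executes the statement; this is the value it actually stores.
    return statement(master_rng)


def replay_statement(statement, slave_rng):
    # STATEMENT format: the slave re-executes the statement,
    # so the non-deterministic function is evaluated again on the slave.
    return statement(slave_rng)


def replay_row(row_image):
    # ROW format: the slave writes the row value the master recorded.
    return row_image


master_value = run_on_master(set_to_random, random.Random(1))
print(replay_row(master_value) == master_value)                           # True
print(replay_statement(set_to_random, random.Random(2)) == master_value)  # False
```

MIXED mode exists for this case: it keeps the compact statement format for safe statements and switches to row images for unsafe ones.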

Using MIXED Replication Mode

# Modify the master configuration

[mysqld]

log-bin = mysql-bin

# Set the master's server-id

server-id=1

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

binlog_format=MIXED

# Databases to replicate; if none is specified, all databases are replicated

##binlog-do-db=my_test

---

# Restart

docker restart percona-master01

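# Show binary-log-related variables

show global variables like 'binlog%';

Mycat Middleware

Built on Alibaba's Cobar

Applications talk to Mycat over the MySQL protocol

Data sharding and read/write splitting

- Read/write splitting: reads and writes are executed on different nodes

- Data sharding: horizontal scale-out

Installation

Download: http://dl.mycat.org.cn/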

mkdir -p /haoke/mycat

tar -xvf Mycat-server-1.6.7.6-release-20210303094759-linux.tar.gz

mv mycat mycat01

Read/Write Splitting

| Host | Port | Container Name | Role |
|---|---|---|---|
| 8.140.130.91 | 3306 | percona-master01 | master |
| 8.140.130.91 | 3307 | percona-slave01 | slave |

server.xml

<!DOCTYPE mycat:server SYSTEM "server.dtd">

<mycat:server xmlns:mycat="http://io.mycat/">

<system>

<property name="nonePasswordLogin">0</property>

<property name="useHandshakeV10">1</property>

<property name="useSqlStat">0</property>

<property name="useGlobleTableCheck">0</property>

<property name="sequnceHandlerType">2</property>

<property name="subqueryRelationshipCheck">false</property>

<property name="processorBufferPoolType">0</property>

<property name="handleDistributedTransactions">0</property>

<property name="useOffHeapForMerge">1</property>

<property name="memoryPageSize">64k</property>

<property name="spillsFileBufferSize">1k</property>

<property name="useStreamOutput">0</property>

<property name="systemReserveMemorySize">384m</property>

<property name="useZKSwitch">false</property>

</system>

<user name="mycat" defaultAccount="true">

<property name="password">mycat</property>

<property name="schemas">haoke</property>

</user>

</mycat:server>

schema.xml

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="haoke" checkSQLschema="false" sqlMaxLimit="100">

<table name="tb_ad" dataNode="dn1" rule="mod-long" />

</schema>

<dataNode name="dn1" dataHost="cluster1" database="haoke" />

<dataHost name="cluster1" maxCon="1000" minCon="10" balance="3"

writeType="1" dbType="mysql" dbDriver="native" switchType="1"

slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="W1" url="8.140.130.91:3306" user="root" password="root">

<readHost host="W1R1" url="8.140.130.91:3307" user="root" password="root" />

</writeHost>

</dataHost>

</mycat:schema>

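balance attribute:

- balance="0": read/write splitting is disabled; all reads go to the currently available writeHost

- balance="1": all readHosts and standby writeHosts take part in SELECT load balancing (in a dual-master setup, one master acts as the standby of the other)

- balance="2": all reads are distributed randomly across writeHosts and readHosts

- balance="3": all reads are distributed randomly to the readHosts under the writeHost, and the writeHost carries no read load. Supported only in Mycat 1.4 and later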
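The four balance values can be summarized as a function that returns the hosts eligible to serve a SELECT. This is a simplified, hypothetical model of the rules listed above. Mycat's real selection also takes host health and `slaveThreshold` into account.

```python
def read_candidates(balance, write_host, read_hosts, standby_write_hosts=()):
    """Hosts that may serve SELECTs for a given Mycat balance value (simplified model)."""
    if balance == 0:
        # No read/write splitting: reads stay on the active writeHost.
        return [write_host]
    if balance == 1:
        # readHosts plus standby writeHosts share the reads.
        return list(read_hosts) + list(standby_write_hosts)
    if balance == 2:
        # Reads are spread over both the writeHost and its readHosts.
        return [write_host] + list(read_hosts)
    if balance == 3:
        # Only readHosts serve reads; the writeHost carries no read load.
        return list(read_hosts)
    raise ValueError(f"unsupported balance value: {balance}")


print(read_candidates(3, "W1", ["W1R1"]))  # ['W1R1']
```

With the `cluster1` configuration above (`balance="3"`, writeHost `W1`, readHost `W1R1`), only `W1R1` is left to serve reads. A router would then pick randomly among the candidates.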

rule.xml

<function name="mod-long" class="io.mycat.route.function.PartitionByMod">

<property name="count">1</property>

</function>

Start Mycat

cd /haoke/mycat/mycat01

# Run in the foreground first to check that the configuration is correct

./bin/mycat console

./bin/startup_nowrap.sh

[root@iZ2zeg4pktzjhp9h7wt6doZ mycat01]# jps

5987 Jps

2043 MycatStartup

[root@iZ2zeg4pktzjhp9h7wt6doZ mycat01]# kill 2043

[root@iZ2zeg4pktzjhp9h7wt6doZ mycat01]# jps

6004 Jps

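Problem: ./startup.sh: /bin/sh^M: bad interpreter: No such file or directory

The script has Windows (DOS) line endings. Open the file in vi and run :set ff; it reports the file format as dos.

Run :set ff=unix to convert it to unix format, then save with :wq.

The script will then run normally.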
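If vi is not available, a short Python script can do the same conversion. This sketch uses only `pathlib`. The function name is hypothetical.

```python
from pathlib import Path


def dos_to_unix(path):
    """Rewrite a file with CRLF line endings to LF, like :set ff=unix in vi.

    Returns True if the file was changed. Writing to the existing file keeps
    its permissions, so an executable script stays executable.
    """
    p = Path(path)
    raw = p.read_bytes()
    converted = raw.replace(b"\r\n", b"\n")
    if converted != raw:
        p.write_bytes(converted)
        return True
    return False
```

Running it on the failing script, e.g. `dos_to_unix("bin/startup_nowrap.sh")`, has the same effect as `:set ff=unix` followed by saving.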

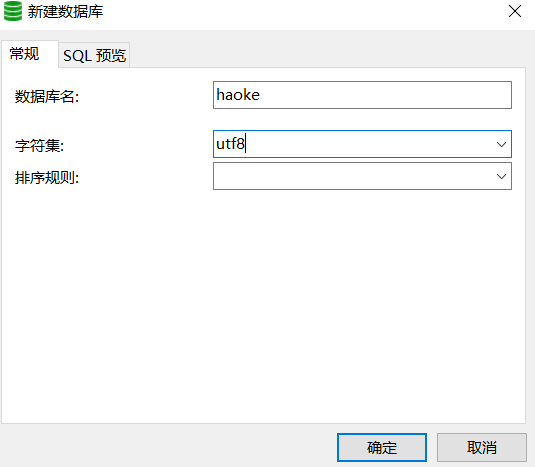

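Problem: connecting fails with a database=0 error

The database specified in the Mycat configuration has not been created.

Fix: first create the database referenced in the Mycat configuration (haoke) on the backend MySQL, then connect to Mycat.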

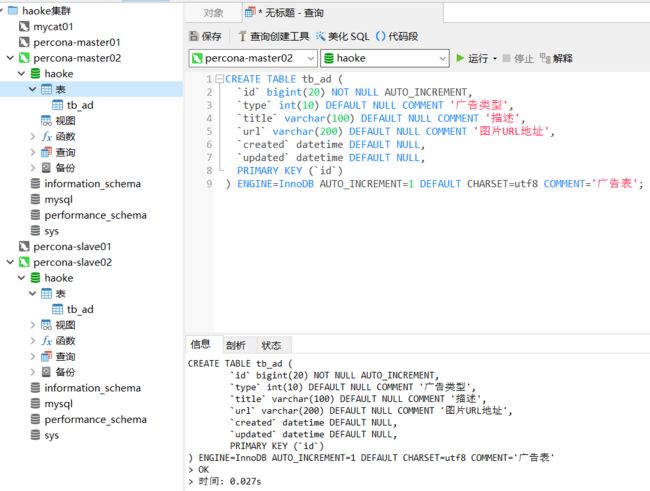

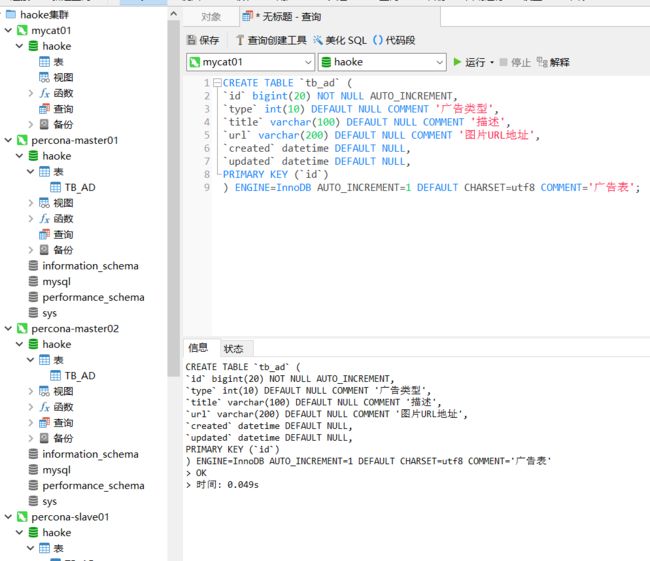

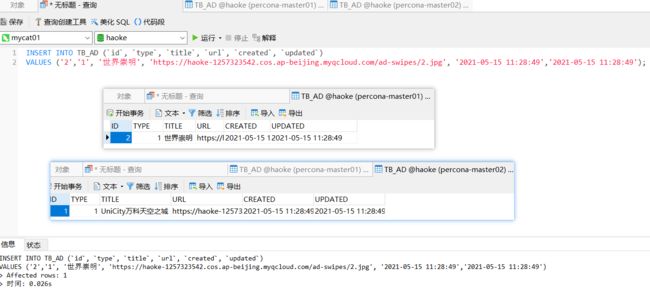

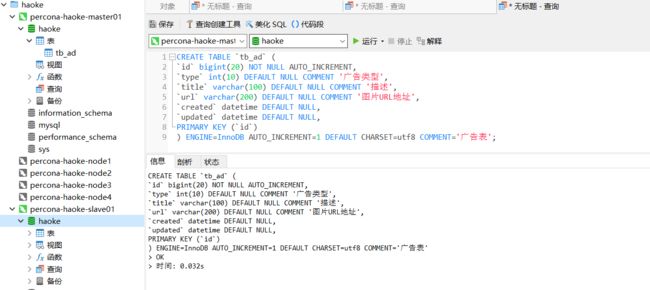

Testing Read/Write Splitting

-- Create the table

CREATE TABLE `tb_ad` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`type` int(10) DEFAULT NULL COMMENT '广告类型',

`title` varchar(100) DEFAULT NULL COMMENT '描述',

`url` varchar(200) DEFAULT NULL COMMENT '图片URL地址',

`created` datetime DEFAULT NULL,

`updated` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='广告表';

-- Insert test data

INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('1','1', 'UniCity万科天空之城', 'http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/26/15432029097062227.jpg', '2018-11-26 11:28:49','2018-11-26 11:28:51');

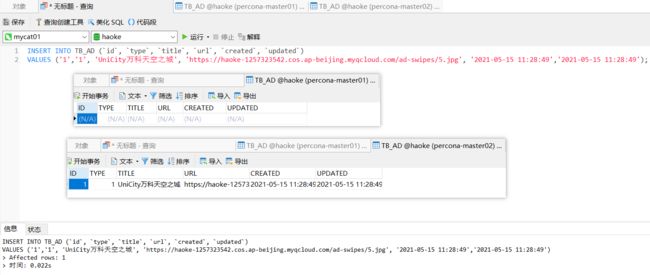

-- Result: the data is written to the master, and the slave replicates it

Data Sharding

MySQL cluster 1:

| Host | Port | Container Name | Role |
|---|---|---|---|
| 8.140.130.91 | 3306 | percona-master01 | master |
| 8.140.130.91 | 3307 | percona-slave01 | slave |

MySQL cluster 2:

| Host | Port | Container Name | Role |
|---|---|---|---|
| 8.140.130.91 | 3316 | percona-master02 | master |
| 8.140.130.91 | 3317 | percona-slave02 | slave |

Configure master02

# Create the master's config and data directories

mkdir -p /data/mysql/master02

cd /data/mysql/master02

mkdir conf data

# Open up read/write permissions on the directories

chmod 777 data -R

chmod 777 conf

# Create the config file

cd /data/mysql/master02/conf

vim my.cnf

---

# Enable master-slave replication: master configuration

[mysqld]

log-bin=mysql-bin

# Set the master's server-id

server-id=1 # server ids must differ within the same cluster

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

binlog_format=MIXED

# Databases to replicate; if none is specified, all databases are replicated

##binlog-do-db=my_test

---

# Create the container

docker create --name percona-master02 -v /data/mysql/master02/data:/var/lib/mysql -v /data/mysql/master02/conf:/etc/my.cnf.d -p 3316:3306 -e MYSQL_ROOT_PASSWORD=root percona:5.7.23

# Start

docker start percona-master02 && docker logs -f percona-master02

# Create the replication account and grant privileges

create user 'slave02'@'%' identified by 'slave02';

grant replication slave on *.* to 'slave02'@'%';

flush privileges;

# Show the master status

show master status;


-- Show binary-log-related variables

show global variables like 'binlog%';

-- Show server-related variables

show global variables like 'server%';

Configure slave02

# Set up the slave

# Create directories

mkdir /data/mysql/slave02

cd /data/mysql/slave02

mkdir conf data

chmod 777 data -R

chmod 777 conf

# Create the config file

cd /data/mysql/slave02/conf

vim my.cnf

---

# Enter the following

[mysqld]

server-id=2 # server id; must not be reused

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

---

# Create the container

docker create --name percona-slave02 -v /data/mysql/slave02/data:/var/lib/mysql -v /data/mysql/slave02/conf:/etc/my.cnf.d -p 3317:3306 -e MYSQL_ROOT_PASSWORD=root percona:5.7.23

# Start

docker start percona-slave02 && docker logs -f percona-slave02

Set the master information

-- Set the master information (log file and position come from SHOW MASTER STATUS on master02)

CHANGE MASTER TO

master_host='8.140.130.91',

master_user='slave02',

master_password='slave02',

master_port=3316,

master_log_file='mysql-bin.000006',

master_log_pos=924;

-- Start replication

start slave;

-- Check the slave status

show slave status;

Create the database and table

CREATE TABLE tb_ad (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`type` int(10) DEFAULT NULL COMMENT '广告类型',

`title` varchar(100) DEFAULT NULL COMMENT '描述',

`url` varchar(200) DEFAULT NULL COMMENT '图片URL地址',

`created` datetime DEFAULT NULL,

`updated` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='广告表';

Configure Mycat

schema.xml

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="haoke" checkSQLschema="false" sqlMaxLimit="100">

<table name="tb_ad" dataNode="dn1,dn2" rule="mod-long" />

</schema>

<dataNode name="dn1" dataHost="cluster1" database="haoke" />

<dataNode name="dn2" dataHost="cluster2" database="haoke" />

<dataHost name="cluster1" maxCon="1000" minCon="10" balance="3"

writeType="1" dbType="mysql" dbDriver="native" switchType="1"

slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="W1" url="8.140.130.91:3306" user="root" password="root">

<readHost host="W1R1" url="8.140.130.91:3307" user="root" password="root" />

</writeHost>

</dataHost>

<dataHost name="cluster2" maxCon="1000" minCon="10" balance="3"

writeType="1" dbType="mysql" dbDriver="native" switchType="1"

slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="W2" url="8.140.130.91:3316" user="root" password="root">

<readHost host="W2R1" url="8.140.130.91:3317" user="root" password="root" />

</writeHost>

</dataHost>

</mycat:schema>

rule.xml

<function name="mod-long" class="io.mycat.route.function.PartitionByMod">

<property name="count">2</property>

</function>
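This sketch assumes the `mod-long` tableRule shards on the `id` column, as in Mycat's default rule.xml. PartitionByMod takes that value modulo `count` and uses the result as an index into the table's dataNode list. The Python sketch below reproduces that mapping (the helper name is hypothetical). It predicts where the four test rows inserted further down should land.

```python
def mod_long_node(sharding_value, data_nodes):
    """Pick a dataNode the way PartitionByMod does: value mod count.

    Assumes `count` in rule.xml equals the number of dataNodes listed for the table.
    """
    index = int(sharding_value) % len(data_nodes)
    return data_nodes[index]


for row_id in range(1, 5):
    print(row_id, mod_long_node(row_id, ["dn1", "dn2"]))
# 1 dn2
# 2 dn1
# 3 dn2
# 4 dn1
```

This is also why `count` should match the number of dataNodes. With `count` 2 and `dn1,dn2`, ids with remainder 0 go to dn1 (cluster1) and ids with remainder 1 go to dn2 (cluster2).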

Start Mycat

# Test Mycat (run from Mycat's bin directory)

./mycat console

./startup_nowrap.sh && tail -f ../logs/mycat.log

Test

CREATE TABLE `TB_AD` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`type` int(10) DEFAULT NULL COMMENT '广告类型',

`title` varchar(100) DEFAULT NULL COMMENT '描述',

`url` varchar(200) DEFAULT NULL COMMENT '图片URL地址',

`created` datetime DEFAULT NULL,

`updated` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='广告表';

Insert the rows one at a time

INSERT INTO TB_AD (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('1','1', 'UniCity万科天空之城', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/5.jpg', '2021-05-15 11:28:49','2021-05-15 11:28:49');

INSERT INTO TB_AD (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('2','1', '世界崇明', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/2.jpg', '2021-05-15 11:28:49','2021-05-15 11:28:49');

INSERT INTO TB_AD (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('3','1', '[奉贤 南桥] 光语著', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/3.jpg', '2021-05-15 11:28:49','2021-05-15 11:28:49');

INSERT INTO TB_AD (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('4','1', '[上海周边 嘉兴] 融创海逸长洲', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/4.jpg', '2021-05-15 11:28:49','2021-05-15 11:28:49');

Mycat Cluster

To remove the single-node performance bottleneck, deploy multiple Mycat nodes

cd /haoke/mycat

cp mycat01 mycat02 -R

cd mycat02

vim conf/wrapper.conf

wrapper.java.additional.6=-Dcom.sun.management.jmxremote.port=1985

vim conf/server.xml

<property name="serverPort">8067</property>

<property name="managerPort">9067</property>

# Start the service

./bin/startup_nowrap.sh

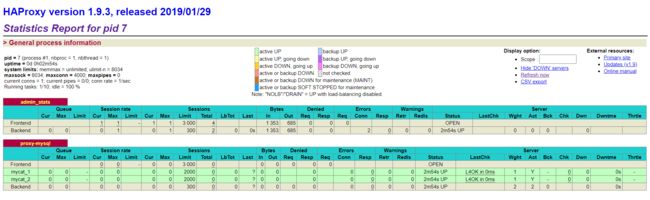

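Load Balancing

Add the load-balancing component HAProxy so the application no longer has to connect to multiple Mycat instances.

Overview

HAProxy is written in C. It provides high availability, load balancing, and proxying for TCP- and HTTP-based applications.

On current hardware, HAProxy can support tens of thousands of concurrent connections.

HAProxy implements an event-driven, single-process model, which supports a very large number of concurrent connections.

- Multi-process or multi-threaded models are limited by memory, the system scheduler, and ubiquitous locking, and rarely handle thousands of concurrent connections

- The event-driven model implements all tasks in user space, with better resource and time management

HAProxy - The Reliable, High Performance TCP/HTTP Load Balancer

Deploying HAProxy

# Pull the image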

docker pull haproxy:1.9.3

# Create a directory for the config file

mkdir /haoke/haproxy

# Create the container

docker create --name haproxy --net host -v /haoke/haproxy:/usr/local/etc/haproxy haproxy:1.9.3

Configuration file

# Create the file

vim /haoke/haproxy/haproxy.cfg

---

global

log 127.0.0.1 local2

maxconn 4000

daemon

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen admin_stats

bind 0.0.0.0:4001

mode http

stats uri /dbs

stats realm Global\ statistics

stats auth admin:admin

listen proxy-mysql

bind 0.0.0.0:4002

mode tcp

balance roundrobin

option tcplog

# Proxy the Mycat services

server mycat_1 8.140.130.91:8066 check port 8066 maxconn 2000

server mycat_2 8.140.130.91:8067 check port 8067 maxconn 2000

---

# Start the container

docker start haproxy && docker logs -f haproxy

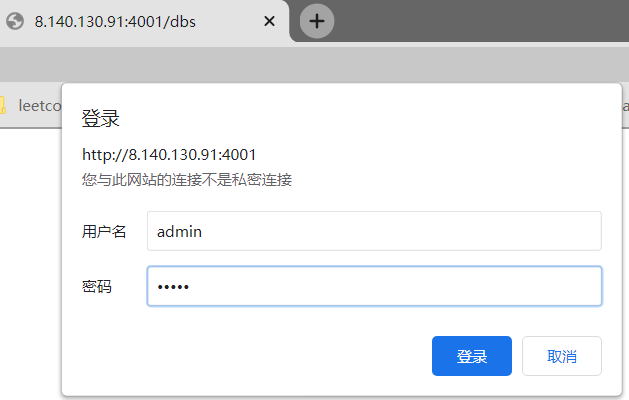

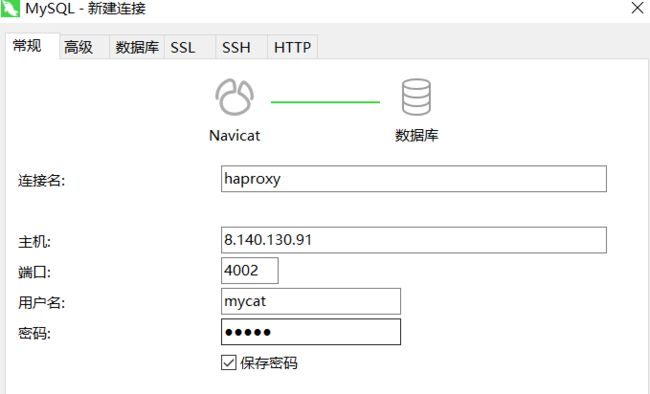
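`balance roundrobin` hands new connections to the backends in turn. `check` takes a backend out of rotation when its health check fails. Below is a toy Python model of that behavior. It is not HAProxy code; HAProxy's real round-robin is also weighted and dynamic. The class name is hypothetical.

```python
from itertools import cycle


class RoundRobin:
    """Toy model of `balance roundrobin` with health checks (not HAProxy code)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server):
        # What a failed `check` does: the server stops receiving new connections.
        self.healthy.discard(server)

    def mark_up(self, server):
        self.healthy.add(server)

    def next_server(self):
        # Walk the ring at most once, skipping servers that failed their check.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy backend")


lb = RoundRobin(["mycat_1", "mycat_2"])
print([lb.next_server() for _ in range(4)])  # ['mycat_1', 'mycat_2', 'mycat_1', 'mycat_2']
lb.mark_down("mycat_2")
print([lb.next_server() for _ in range(2)])  # ['mycat_1', 'mycat_1']
```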

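Test

Web UI test: http://8.140.130.91:4001/dbs (log in with admin/admin, as set by stats auth)

MySQL client test: connect to 8.140.130.91:4002 as user mycat with password mycat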

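PXC Cluster

Percona XtraDB Cluster (PXC) is a high-availability and scalability solution for MySQL users. It is based on Percona Server and acts as a synchronous multi-master replication plugin for transactional applications.

Percona Server is an improved version of MySQL that uses the XtraDB storage engine. It offers significant functional and performance improvements over MySQL: better InnoDB performance under high load, some very useful performance diagnostic tools for DBAs, and more parameters and commands to control server behavior.

Percona XtraDB Cluster provides:

- Synchronous replication: a transaction is committed on all nodes

- Multi-master replication: you can write to any node

- Parallel applying of events on replica nodes: true parallel replication

- Automatic node provisioning

- Data consistency: no more unsynchronized slaves

https://www.percona.com/software/mysql-database/percona-xtradb-cluster

Notes

- Keep the PXC cluster as small as practical: the more nodes, the slower data synchronization

- Give all PXC nodes the same hardware; a weaker node slows down synchronization for the whole cluster

- PXC supports only the InnoDB engine, not other storage engines

PXC Cluster vs. Replication

- In PXC every node is readable and writable. In replication, slave nodes cannot be written to, because synchronization is one-way: from master to slave, never from slave to master

- PXC replicates synchronously, which is the fundamental reason it can guarantee strong consistency. Replication is asynchronous: if a slave stops syncing, inserts into the master still succeed and return normally, but the data becomes inconsistent

- PXC trades performance for data consistency; replication performs better than PXC

- The two serve different purposes:

  - Replication is for general data that can tolerate some loss, e.g. shopping carts and user-behavior logs

  - PXC is for important data, e.g. orders and user information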

Deployment

| Node | Port | Container Name | Data Volume |
|---|---|---|---|
| node1 | 13306 | pxc_node1 | v1 |
| node2 | 13307 | pxc_node2 | v2 |
| node3 | 13308 | pxc_node3 | v3 |

# Create data volumes (stored under /var/lib/docker/volumes)

docker volume create v1

docker volume create v2

docker volume create v3

# Pull the image

docker pull percona/percona-xtradb-cluster:5.7

# Tag the image with a short name

docker tag percona/percona-xtradb-cluster:5.7 pxc

# Create the network

docker network create --subnet=172.30.0.0/24 pxc-network

# Create the containers

# First node

docker create -p 13306:3306 -v v1:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --name=pxc_node1 --net=pxc-network --ip=172.30.0.2 pxc

# Second node (adds the CLUSTER_JOIN parameter)

docker create -p 13307:3306 -v v2:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --name=pxc_node2 -e CLUSTER_JOIN=pxc_node1 --net=pxc-network --ip=172.30.0.3 pxc

# Third node (adds the CLUSTER_JOIN parameter)

docker create -p 13308:3306 -v v3:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --name=pxc_node3 -e CLUSTER_JOIN=pxc_node1 --net=pxc-network --ip=172.30.0.4 pxc

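# Start the first node, then start the others once it has finished initializing

docker start pxc_node1

docker start pxc_node2

docker start pxc_node3

# Check the cluster nodes

show status like 'wsrep_cluster%';

Start the first node first. Once a MySQL client can connect to it, start the other nodes.

- When the first node starts, the PXC cluster initializes; other nodes can join only after initialization has completed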
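Once the nodes are up, you can check the output of `show status like 'wsrep_cluster%'` programmatically. `wsrep_cluster_size` (the number of nodes in the cluster) and `wsrep_cluster_status` (`Primary` for a healthy node) are standard Galera status variables. The helper below is a hypothetical sketch. It assumes the rows have already been fetched as (name, value) pairs.

```python
def check_pxc_status(rows, expected_size):
    """Return a list of problems found in SHOW STATUS LIKE 'wsrep_cluster%' rows."""
    status = dict(rows)
    problems = []
    if status.get("wsrep_cluster_status") != "Primary":
        problems.append("node is not in the Primary component")
    size = int(status.get("wsrep_cluster_size", 0))
    if size != expected_size:
        problems.append(f"cluster size {size}, expected {expected_size}")
    return problems


rows = [("wsrep_cluster_size", "3"), ("wsrep_cluster_status", "Primary")]
print(check_pxc_status(rows, 3))  # []
```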

Test

CREATE TABLE `tb_ad` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`type` int(10) DEFAULT NULL COMMENT '广告类型',

`title` varchar(100) DEFAULT NULL COMMENT '描述',

`url` varchar(200) DEFAULT NULL COMMENT '图片URL地址',

`created` datetime DEFAULT NULL,

`updated` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='广告表';

INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`) VALUES ('1','1', 'UniCity万科天空之城', 'http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/26/15432029097062227.jpg', '2018-11-26 11:28:49','2018-11-26 11:28:51');

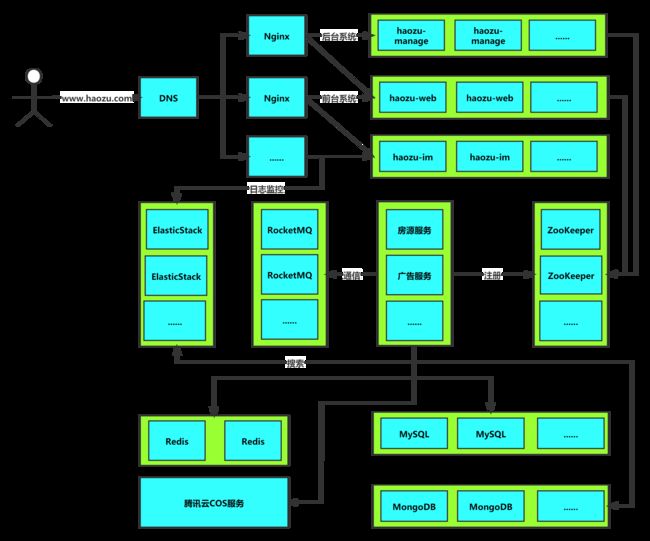

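Haoke Rental Database Cluster

The Haoke Rental project builds out its database cluster with a hybrid architecture:

- HAProxy acts as the load balancer

- Two Mycat nodes serve as the database middleware

- Two PXC clusters serve as the two Mycat shards. Each PXC cluster has two nodes that store the data synchronously, so each shard is itself a PXC cluster

- One master-slave replication cluster is deployed

- Listing (house) data is stored in the PXC shards; all other data is stored in the master-slave cluster

Deploying the PXC Clusters

Cluster 1:

| Node | Port | Container Name | Data Volume |
|---|---|---|---|
| node1 | 13306 | pxc-haoke-node1 | haoke-v1 |
| node2 | 13307 | pxc-haoke-node2 | haoke-v2 |

Cluster 2:

| Node | Port | Container Name | Data Volume |
|---|---|---|---|
| node3 | 13308 | pxc-haoke-node3 | haoke-v3 |
| node4 | 13309 | pxc-haoke-node4 | haoke-v4 |

# Create data volumes (stored under /var/lib/docker/volumes)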

docker volume create haoke-v1

docker volume create haoke-v2

docker volume create haoke-v3

docker volume create haoke-v4

# Pull the image

docker pull percona/percona-xtradb-cluster:5.7

# Tag the image with a short name

docker tag percona/percona-xtradb-cluster:5.7 pxc

# Create the network

docker network create --subnet=172.30.0.0/24 pxc-network

# Create the containers

# Cluster 1, first node

docker create --name=pxc-haoke-node1 -p 13306:3306 -v haoke-v1:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --net=pxc-network --ip=172.30.0.2 pxc

# Second node (adds the CLUSTER_JOIN parameter)

docker create --name=pxc-haoke-node2 -p 13307:3306 -v haoke-v2:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc -e CLUSTER_JOIN=pxc-haoke-node1 --net=pxc-network --ip=172.30.0.3 pxc

# Cluster 2

# First node

docker create --name=pxc-haoke-node3 -p 13308:3306 -v haoke-v3:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --net=pxc-network --ip=172.30.0.4 pxc

# Second node (adds the CLUSTER_JOIN parameter)

docker create -p 13309:3306 -v haoke-v4:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --name=pxc-haoke-node4 -e CLUSTER_JOIN=pxc-haoke-node3 --net=pxc-network --ip=172.30.0.5 pxc

# Start: start each cluster's second node only after its first node has started successfully

docker start pxc-haoke-node1 && docker logs -f pxc-haoke-node1

docker start pxc-haoke-node2 && docker logs -f pxc-haoke-node2

docker start pxc-haoke-node3 && docker logs -f pxc-haoke-node3

docker start pxc-haoke-node4 && docker logs -f pxc-haoke-node4

# Check the cluster nodes

show status like 'wsrep_cluster%';

Test

CREATE TABLE `tb_house_resources` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`title` varchar(100) DEFAULT NULL COMMENT '房源标题',

`estate_id` bigint(20) DEFAULT NULL COMMENT '楼盘id',

`building_num` varchar(5) DEFAULT NULL COMMENT '楼号(栋)',

`building_unit` varchar(5) DEFAULT NULL COMMENT '单元号',

`building_floor_num` varchar(5) DEFAULT NULL COMMENT '门牌号',

`rent` int(10) DEFAULT NULL COMMENT '租金',

`rent_method` tinyint(1) DEFAULT NULL COMMENT '租赁方式,1-整租,2-合租',

`payment_method` tinyint(1) DEFAULT NULL COMMENT '支付方式,1-付一押一,2-付三押一,3-付六押一,4-年付押一,5-其它',

`house_type` varchar(255) DEFAULT NULL COMMENT '户型,如:2室1厅1卫',

`covered_area` varchar(10) DEFAULT NULL COMMENT '建筑面积',

`use_area` varchar(10) DEFAULT NULL COMMENT '使用面积',

`floor` varchar(10) DEFAULT NULL COMMENT '楼层,如:8/26',

`orientation` varchar(2) DEFAULT NULL COMMENT '朝向:东、南、西、北',

`decoration` tinyint(1) DEFAULT NULL COMMENT '装修,1-精装,2-简装,3-毛坯',

`facilities` varchar(50) DEFAULT NULL COMMENT '配套设施, 如:1,2,3',

`pic` varchar(1000) DEFAULT NULL COMMENT '图片,最多5张',

`house_desc` varchar(200) DEFAULT NULL COMMENT '描述',

`contact` varchar(10) DEFAULT NULL COMMENT '联系人',

`mobile` varchar(11) DEFAULT NULL COMMENT '手机号',

`time` tinyint(1) DEFAULT NULL COMMENT '看房时间,1-上午,2-中午,3-下午,4-晚上,5-全天',

`property_cost` varchar(10) DEFAULT NULL COMMENT '物业费',

`created` datetime DEFAULT NULL,

`updated` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='房源表';

Deploying the Master-Slave Replication Cluster

Master

# Create the master's config and data directories

mkdir -p /data/mysql/master01

cd /data/mysql/master01

mkdir conf data

# Open up read/write permissions on the directories

chmod 777 data -R

chmod 777 conf

# Create the config file

cd /data/mysql/master01/conf

vim my.cnf

---

# Enable master-slave replication: master configuration

[mysqld]

log-bin=mysql-bin

# Set the master's server-id

server-id=1 # server ids must differ within the same cluster

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

binlog_format=MIXED

# Databases to replicate; if none is specified, all databases are replicated

##binlog-do-db=my_test

---

# Create the container

docker create --name percona-haoke-master01 -v /data/mysql/master01/data:/var/lib/mysql -v /data/mysql/master01/conf:/etc/my.cnf.d -p 3306:3306 -e MYSQL_ROOT_PASSWORD=root percona:5.7.23

# Start

docker start percona-haoke-master01 && docker logs -f percona-haoke-master01

# Create the replication account and grant privileges

create user 'slave01'@'%' identified by 'slave01';

grant replication slave on *.* to 'slave01'@'%';

flush privileges;

# Show the master status

show master status;

# Show binary-log-related variables

show global variables like 'binlog%';

# Show server-related variables

show global variables like 'server%';

Slave

# Set up the slave

# Create directories

mkdir /data/mysql/slave01

cd /data/mysql/slave01

mkdir conf data

chmod 777 data -R

chmod 777 conf

# Create the config file

cd /data/mysql/slave01/conf

vim my.cnf

---

# Enter the following

[mysqld]

server-id=2 # server id; must not be reused

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

---

# Create the container

docker create --name percona-haoke-slave01 -v /data/mysql/slave01/data:/var/lib/mysql -v /data/mysql/slave01/conf:/etc/my.cnf.d -p 3307:3306 -e MYSQL_ROOT_PASSWORD=root percona:5.7.23

# Start

docker start percona-haoke-slave01 && docker logs -f percona-haoke-slave01

# Set the master information (log file and position come from SHOW MASTER STATUS on the master)

CHANGE MASTER TO

master_host='8.140.130.91',

master_user='slave01',

master_password='slave01',

master_port=3306,

master_log_file='mysql-bin.000004',

master_log_pos=154;

# Start replication

start slave;

# Check the slave status

show slave status;

Test

CREATE TABLE `tb_ad` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`type` int(10) DEFAULT NULL COMMENT '广告类型',

`title` varchar(100) DEFAULT NULL COMMENT '描述',

`url` varchar(200) DEFAULT NULL COMMENT '图片URL地址',

`created` datetime DEFAULT NULL,

`updated` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='广告表';

Deploying Mycat

Node 1

cd /data/

mkdir mycat

cd mycat

cp /haoke/mycat . -R

mv mycat/ mycat-node1

server.xml

<!DOCTYPE mycat:server SYSTEM "server.dtd">

<mycat:server xmlns:mycat="http://io.mycat/">

<system>

<property name="nonePasswordLogin">0</property>

<property name="useHandshakeV10">1</property>

<property name="useSqlStat">0</property>

<property name="useGlobleTableCheck">0</property>

<property name="sequnceHandlerType">2</property>

<property name="subqueryRelationshipCheck">false</property>

<property name="processorBufferPoolType">0</property>

<property name="handleDistributedTransactions">0</property>

<property name="useOffHeapForMerge">1</property>

<property name="memoryPageSize">64k</property>

<property name="spillsFileBufferSize">1k</property>

<property name="useStreamOutput">0</property>

<property name="systemReserveMemorySize">384m</property>

<property name="useZKSwitch">false</property>

<property name="serverPort">18067</property>

<property name="managerPort">19067</property>

</system>

<user name="mycat" defaultAccount="true">

<property name="password">mycat</property>

<property name="schemas">haoke</property>

</user>

</mycat:server>

schema.xml

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="haoke" checkSQLschema="false" sqlMaxLimit="100">

<table name="tb_house_resources" dataNode="dn1,dn2" rule="mod-long" />

<table name="tb_ad" dataNode="dn3"/>

</schema>

<dataNode name="dn1" dataHost="cluster1" database="haoke" />

<dataNode name="dn2" dataHost="cluster2" database="haoke" />

<dataNode name="dn3" dataHost="cluster3" database="haoke" />

<dataHost name="cluster1" maxCon="1000" minCon="10" balance="2"

writeType="1" dbType="mysql" dbDriver="native" switchType="1"

slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="W1" url="8.140.130.91:13306" user="root"

password="root">

<readHost host="W1R1" url="8.140.130.91:13307" user="root"

password="root" />

</writeHost>

</dataHost>

<dataHost name="cluster2" maxCon="1000" minCon="10" balance="2"

writeType="1" dbType="mysql" dbDriver="native" switchType="1"

slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="W2" url="8.140.130.91:13308" user="root"

password="root">

<readHost host="W2R1" url="8.140.130.91:13309" user="root"

password="root" />

</writeHost>

</dataHost>

<dataHost name="cluster3" maxCon="1000" minCon="10" balance="3"

writeType="1" dbType="mysql" dbDriver="native" switchType="1"

slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="W3" url="8.140.130.91:3306" user="root"

password="root">

<readHost host="W3R1" url="8.140.130.91:3307" user="root"

password="root" />

</writeHost>

</dataHost>

</mycat:schema>
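To double-check which backend cluster each logical table is routed to, you can parse schema.xml with Python's standard `xml.etree.ElementTree`. The helper below is a hypothetical sketch. It only understands comma-separated dataNode lists, not Mycat's `dn$0-2` range syntax.

```python
import xml.etree.ElementTree as ET


def table_routes(schema_xml):
    """Map each logical table in a Mycat schema.xml string to the dataHosts it lives on."""
    root = ET.fromstring(schema_xml)
    # dataNode name -> dataHost name, e.g. "dn1" -> "cluster1"
    node_to_host = {dn.get("name"): dn.get("dataHost") for dn in root.iter("dataNode")}
    routes = {}
    for table in root.iter("table"):
        nodes = [n.strip() for n in table.get("dataNode").split(",")]
        routes[table.get("name")] = [node_to_host[n] for n in nodes]
    return routes
```

With the configuration above, it should map `tb_house_resources` to `cluster1` and `cluster2` (the two PXC clusters), and `tb_ad` to `cluster3` (the master-slave cluster).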

rule.xml

<function name="mod-long" class="io.mycat.route.function.PartitionByMod">

<property name="count">2</property>

</function>

Set the ports and start

vim wrapper.conf

# Set the JMX port

wrapper.java.additional.7=-Dcom.sun.management.jmxremote.port=11984

./mycat console

./startup_nowrap.sh && tail -f ../logs/mycat.log

Test:

Connect


Insert data

INSERT INTO `tb_house_resources` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`) VALUES ('1', '东方曼哈顿 3室2厅 16000元', '1005', '2', '1', '1','1111', '1', '1', '1室1厅1卫1厨1阳台', '2', '2', '1/2', '南', '1', '1,2,3,8,9', NULL, '这个经纪人很懒,没写核心卖点', '张三', '11111111111', '1', '11', '2021-05-16 01:16:00','2021-05-16 01:16:00');

INSERT INTO `tb_house_resources` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`) VALUES ('2', '康城 3室2厅1卫', '1002', '1', '2', '3', '2000', '1','2', '3室2厅1卫1厨2阳台', '100', '80', '2/20', '南', '1', '1,2,3,7,6', NULL, '拎包入住','张三', '18888888888', '5', '1.5', '2021-05-16 01:16:00','2021-05-16 01:16:00');

INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`) VALUES ('1','1', 'UniCity万科天空之城', 'http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/26/15432029097062227.jpg', '2021-05-16 01:16:00','2021-05-16 01:16:00');

Node 2

cp mycat-node1/ mycat-node2 -R

vim wrapper.conf

# Set the JMX port

wrapper.java.additional.7=-Dcom.sun.management.jmxremote.port=11986

vim server.xml

# Set the service port and the management port

<property name="serverPort">18068</property>

<property name="managerPort">19068</property>

./mycat console

./startup_nowrap.sh && tail -f ../logs/mycat.log

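Deploying HAProxy

Modify the config file:

# Edit the file (the container created below mounts /data/haproxy as its config directory)

vim /data/haproxy/haproxy.cfg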

global

log 127.0.0.1 local2

maxconn 4000

daemon

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen admin_stats

bind 0.0.0.0:4001

mode http

stats uri /dbs

stats realm Global\ statistics

stats auth admin:admin

listen proxy-mysql

bind 0.0.0.0:4002

mode tcp

balance roundrobin

option tcplog

# Proxy the Mycat services

server mycat_1 8.140.130.91:18067 check port 18067 maxconn 2000

server mycat_2 8.140.130.91:18068 check port 18068 maxconn 2000

docker create --name haproxy --net host -v /data/haproxy:/usr/local/etc/haproxy haproxy:1.9.3

# Start the container

docker start haproxy && docker logs haproxy

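Deployment

In a real project, the steps before going live are: take inventory of the services, estimate the servers needed from the number of users and the expected concurrency, purchase the servers, and finally carry out the deployment.

Project repository

Deployment Architecture

Deployment Plan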


Two servers: Tencent Cloud (1 core, 2 GB RAM, 50 GB disk) at 82.157.25.57, and Alibaba Cloud (2 cores, 4 GB RAM, 40 GB disk) at 8.140.130.91

# Check CPU status

top

# Inside top, press 1 to show each CPU

1

# Check memory

free -m

# Check disk capacity

df -h

jdk1.8.0_151

MySQL

Plan

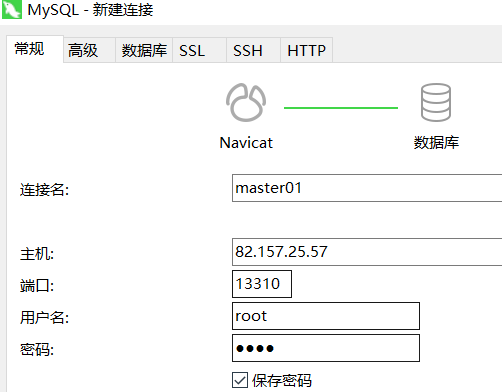

| Service | Role | Port(s) | Server | Container Name |
|---|---|---|---|---|
| MySQL-node01 | pxc | 13306 | 8.140.130.91 | pxc_node1 |
| MySQL-node02 | pxc | 13307 | 8.140.130.91 | pxc_node2 |
| MySQL-node03 | pxc | 13308 | 8.140.130.91 | pxc_node3 |
| MySQL-node04 | pxc | 13309 | 8.140.130.91 | pxc_node4 |
| MySQL-node05 | master | 13310 | 82.157.25.57 | ms_node1 |
| MySQL-node06 | slave | 13311 | 82.157.25.57 | ms_node2 |
| MyCat-node01 | mycat | 11986, 18068, 19068 | 82.157.25.57 | mycat_node01 |
| MyCat-node02 | mycat | 11987, 18069, 19069 | 8.140.130.91 | mycat_node02 |
| HAProxy | haproxy | 4001, 4002 | 82.157.25.57 | haproxy |
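Several services share two hosts, so it is worth checking that no two services claim the same host port. Below is a small Python sketch over the plan above. The `PLAN` list is transcribed from the table, and the helper is hypothetical.

```python
from collections import defaultdict

# (service, server, host ports) transcribed from the planning table above
PLAN = [
    ("MySQL-node01", "8.140.130.91", [13306]),
    ("MySQL-node02", "8.140.130.91", [13307]),
    ("MySQL-node03", "8.140.130.91", [13308]),
    ("MySQL-node04", "8.140.130.91", [13309]),
    ("MySQL-node05", "82.157.25.57", [13310]),
    ("MySQL-node06", "82.157.25.57", [13311]),
    ("MyCat-node01", "82.157.25.57", [11986, 18068, 19068]),
    ("MyCat-node02", "8.140.130.91", [11987, 18069, 19069]),
    ("HAProxy", "82.157.25.57", [4001, 4002]),
]


def port_conflicts(plan):
    """Return {(server, port): [services]} for every host port claimed more than once."""
    owners = defaultdict(list)
    for service, server, ports in plan:
        for port in ports:
            owners[(server, port)].append(service)
    return {key: names for key, names in owners.items() if len(names) > 1}


print(port_conflicts(PLAN))  # {}
```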

Deploying the PXC Clusters (Alibaba Cloud)

# Create data volumes (stored under /var/lib/docker/volumes)

docker volume create haoke-v1

docker volume create haoke-v2

docker volume create haoke-v3

docker volume create haoke-v4

# Pull and tag the image

docker pull percona/percona-xtradb-cluster:5.7

docker tag percona/percona-xtradb-cluster:5.7 pxc

# Create the network

docker network create --subnet=172.30.0.0/24 pxc-network

# List the networks

docker network ls

# Cluster 1, first node

docker create -p 13306:3306 -v haoke-v1:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --name=pxc_node1 --net=pxc-network --ip=172.30.0.2 pxc

# Second node (adds the CLUSTER_JOIN parameter)

docker create -p 13307:3306 -v haoke-v2:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --name=pxc_node2 -e CLUSTER_JOIN=pxc_node1 --net=pxc-network --ip=172.30.0.3 pxc

# Cluster 2

# First node

docker create -p 13308:3306 -v haoke-v3:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --name=pxc_node3 --net=pxc-network --ip=172.30.0.4 pxc

# Second node (adds the CLUSTER_JOIN parameter)

docker create -p 13309:3306 -v haoke-v4:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e CLUSTER_NAME=pxc --name=pxc_node4 -e CLUSTER_JOIN=pxc_node3 --net=pxc-network --ip=172.30.0.5 pxc

# Check the cluster status (run in MySQL on any node)

show status like 'wsrep_cluster%';
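
The query above returns several wsrep_cluster% rows. The minimal sketch below shows how to read them. It assumes the rows have already been fetched into a dict mapping each name to its string value, and the helper name pxc_cluster_ok is hypothetical. A node looks healthy when wsrep_cluster_status is Primary and wsrep_cluster_size equals the number of nodes in its cluster. In this plan each PXC cluster has 2 nodes.

```python
def pxc_cluster_ok(status: dict, expected_size: int = 2) -> bool:
    """Interpret SHOW STATUS LIKE 'wsrep_cluster%' rows (name -> string value).

    Hypothetical helper; values are strings, as the server returns them.
    """
    # 'Primary' means this node belongs to the component that holds quorum
    in_primary = status.get("wsrep_cluster_status") == "Primary"
    # Number of nodes currently joined to the cluster
    size = int(status.get("wsrep_cluster_size", "0"))
    return in_primary and size == expected_size


# Rows as they might look on pxc_node1 after pxc_node2 has joined
rows = {"wsrep_cluster_size": "2", "wsrep_cluster_status": "Primary"}
print(pxc_cluster_ok(rows))  # True
```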

Configuration for restarting after a crash

[ERROR] WSREP: It may not be safe to bootstrap the cluster from this node. It was not the last one to leave the cluster and may not contain all the updates. To force cluster bootstrap with this node, edit the grastate.dat file manually and set safe_to_bootstrap to 1 .

Manually edit the grastate.dat file in the node's data directory (the Docker volume) and set safe_to_bootstrap to 1:

# GALERA saved state

version: 2.1

uuid: a4a9e18a-b7e4-11eb-98b8-0b4f06fa70b2

seqno: -1

safe_to_bootstrap: 0
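
Editing the file by hand works fine. Below is a minimal Python sketch of the same edit. It assumes the default Docker volume layout, /var/lib/docker/volumes/<volume>/_data/grastate.dat (for example haoke-v1 for pxc_node1), and the helper names are hypothetical. Only apply it on the node that left the cluster last, since that node holds the most recent data.

```python
import re
from pathlib import Path


def enable_safe_bootstrap(text: str) -> str:
    """Return grastate.dat contents with safe_to_bootstrap set to 1."""
    # Only the safe_to_bootstrap line changes; uuid and seqno are kept as-is
    return re.sub(r"^safe_to_bootstrap:[ \t]*\d+[ \t]*$",
                  "safe_to_bootstrap: 1", text, flags=re.MULTILINE)


def patch_grastate(path: str) -> None:
    """Rewrite the file in place while the container is stopped."""
    p = Path(path)
    p.write_text(enable_safe_bootstrap(p.read_text()))


sample = """# GALERA saved state
version: 2.1
uuid: a4a9e18a-b7e4-11eb-98b8-0b4f06fa70b2
seqno: -1
safe_to_bootstrap: 0
"""
print(enable_safe_bootstrap(sample).splitlines()[-1])  # safe_to_bootstrap: 1
# patch_grastate("/var/lib/docker/volumes/haoke-v1/_data/grastate.dat")
```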

Deploying the master-slave (MS) cluster (Tencent Cloud)

master

# Create data volumes (stored under /var/lib/docker/volumes)

docker volume create haoke-v5

docker volume create haoke-v6

#master

mkdir /data/mysql/haoke-master01/conf -p

cd /data/mysql/haoke-master01/conf

vim my.cnf

# Enter the following content

[mysqld]

log-bin=mysql-bin # enable binary logging

server-id=1 # server ID, must be unique

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

binlog_format=MIXED

# Create the container

docker create --name ms_node1 -v haoke-v5:/var/lib/mysql -v /data/mysql/haoke-master01/conf:/etc/my.cnf.d -p 13310:3306 -e MYSQL_ROOT_PASSWORD=root percona:5.7.23

# Start

docker start ms_node1 && docker logs -f ms_node1

# Create the replication account and grant privileges (run in MySQL on the master)

create user 'haoke'@'%' identified by 'haoke';

grant replication slave on *.* to 'haoke'@'%';

flush privileges;

# Check the master status (note the File and Position values)

show master status;
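
The File and Position columns returned by show master status are exactly the values the slave later passes to CHANGE MASTER TO. The small Python sketch below assembles that statement from them. change_master_sql is a hypothetical helper, and it interpolates values without escaping, so it is only meant for trusted input.

```python
def change_master_sql(host: str, port: int, user: str, password: str,
                      log_file: str, log_pos: int) -> str:
    """Build CHANGE MASTER TO from the master's File/Position output."""
    return (
        "CHANGE MASTER TO "
        f"master_host='{host}', "
        f"master_user='{user}', "
        f"master_password='{password}', "
        f"master_port={port}, "
        f"master_log_file='{log_file}', "   # File column
        f"master_log_pos={log_pos};"        # Position column
    )


print(change_master_sql("82.157.25.57", 13310, "haoke", "haoke",
                        "mysql-bin.000001", 154))
```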

slave

#slave

mkdir /data/mysql/haoke-slave01/conf -p

cd /data/mysql/haoke-slave01/conf

vim my.cnf

# Enter the following content

---

[mysqld]

server-id=2 # server ID, must be unique

sql_mode='STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'

---

# Create the container

docker create --name ms_node2 -v haoke-v6:/var/lib/mysql -v /data/mysql/haoke-slave01/conf:/etc/my.cnf.d -p 13311:3306 -e MYSQL_ROOT_PASSWORD=root percona:5.7.23

# Start

docker start ms_node2 && docker logs -f ms_node2

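# Configure the master connection; master_log_file and master_log_pos must match the File and Position reported by show master status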

---

CHANGE MASTER TO

master_host='82.157.25.57',

master_user='haoke',

master_password='haoke',

master_port=13310,

master_log_file='mysql-bin.000001',

master_log_pos=154;

---

# Start replication

start slave;

# Check the slave status

show slave status;
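
In the show slave status output, replication is working when both Slave_IO_Running and Slave_SQL_Running are Yes. The minimal sketch below performs that check. It assumes the row has been fetched as a dict keyed by column name. The helper and its 60-second lag threshold are hypothetical choices, not MySQL defaults.

```python
def replication_healthy(status: dict, max_lag_seconds: int = 60) -> bool:
    """Judge one row of SHOW SLAVE STATUS (column name -> value)."""
    # The I/O thread fetches binlog events; the SQL thread replays the relay log
    threads_ok = (status.get("Slave_IO_Running") == "Yes"
                  and status.get("Slave_SQL_Running") == "Yes")
    # Seconds_Behind_Master is NULL (None here) when the SQL thread is not running
    lag = status.get("Seconds_Behind_Master")
    return threads_ok and lag is not None and int(lag) <= max_lag_seconds


row = {"Slave_IO_Running": "Yes", "Slave_SQL_Running": "Yes",
       "Seconds_Behind_Master": 0}
print(replication_healthy(row))  # True
```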

Deploying MyCat

Mycat-server-1.6.7.6-release-20210303094759

Extract the archive

mycat01 (Tencent Cloud)

server.xml

<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE mycat:server SYSTEM "server.dtd">
<mycat:server xmlns:mycat="http://io.mycat/">
    <system>
        <property name="nonePasswordLogin">0</property>
        <property name="useHandshakeV10">1</property>
        <property name="useSqlStat">0</property>
        <property name="useGlobleTableCheck">0</property>
        <property name="sequnceHandlerType">2</property>
        <property name="subqueryRelationshipCheck">false</property>
        <property name="processorBufferPoolType">0</property>
        <property name="handleDistributedTransactions">0</property>
        <property name="useOffHeapForMerge">1</property>
        <property name="memoryPageSize">64k</property>
        <property name="spillsFileBufferSize">1k</property>
        <property name="useStreamOutput">0</property>
        <property name="systemReserveMemorySize">384m</property>
        <property name="useZKSwitch">false</property>
        <property name="serverPort">18068</property>
        <property name="managerPort">19068</property>
    </system>
    <user name="mycat" defaultAccount="true">
        <property name="password">mycat</property>
        <property name="schemas">haoke</property>
    </user>
</mycat:server>

schema.xml

<?xml version="1.0"?>
<!DOCTYPE mycat:schema SYSTEM "schema.dtd">
<mycat:schema xmlns:mycat="http://io.mycat/">
    <schema name="haoke" checkSQLschema="false" sqlMaxLimit="100">
        <!-- sharded by id across the two PXC clusters -->
        <table name="tb_house_resources" dataNode="dn1,dn2" rule="mod-long" />
        <!-- not sharded, stored on the master-slave cluster -->
        <table name="tb_ad" dataNode="dn3"/>
    </schema>

    <dataNode name="dn1" dataHost="cluster1" database="haoke" />
    <dataNode name="dn2" dataHost="cluster2" database="haoke" />
    <dataNode name="dn3" dataHost="cluster3" database="haoke" />

    <!-- PXC cluster 1 (pxc_node1, pxc_node2); balance="2": reads are spread randomly over writeHost and readHost -->
    <dataHost name="cluster1" maxCon="1000" minCon="10" balance="2"
              writeType="1" dbType="mysql" dbDriver="native" switchType="1"
              slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="W1" url="8.140.130.91:13306" user="root"
                   password="root">
            <readHost host="W1R1" url="8.140.130.91:13307" user="root"
                      password="root" />
        </writeHost>
    </dataHost>

    <!-- PXC cluster 2 (pxc_node3, pxc_node4) -->
    <dataHost name="cluster2" maxCon="1000" minCon="10" balance="2"
              writeType="1" dbType="mysql" dbDriver="native" switchType="1"
              slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="W2" url="8.140.130.91:13308" user="root"
                   password="root">
            <readHost host="W2R1" url="8.140.130.91:13309" user="root"
                      password="root" />
        </writeHost>
    </dataHost>

    <!-- Master-slave cluster (ms_node1, ms_node2); balance="3": reads go to the readHost only -->
    <dataHost name="cluster3" maxCon="1000" minCon="10" balance="3"
              writeType="1" dbType="mysql" dbDriver="native" switchType="1"
              slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="W3" url="82.157.25.57:13310" user="root"
                   password="root">
            <readHost host="W3R1" url="82.157.25.57:13311" user="root"
                      password="root" />
        </writeHost>
    </dataHost>
</mycat:schema>

rule.xml

<function name="mod-long" class="io.mycat.route.function.PartitionByMod">
    <!-- number of data nodes: dn1 and dn2 -->
    <property name="count">2</property>
</function>
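
With count set to 2, the mod-long function (PartitionByMod) routes each row by the remainder of its id divided by the number of data nodes. That remainder is used as an index into the table's dataNode list. The Python sketch below reproduces that arithmetic under this assumption. It is not MyCat code, and the helper name is hypothetical. It shows where the test rows with ids 1 to 9 should land: odd ids go to dn2 (cluster2) and even ids go to dn1 (cluster1).

```python
from typing import Sequence


def mod_long_node(id_value: int, data_nodes: Sequence[str]) -> str:
    """Pick the data node for a sharding-key value: abs(id) % count."""
    return data_nodes[abs(id_value) % len(data_nodes)]


nodes = ["dn1", "dn2"]  # order of dataNode="dn1,dn2" in schema.xml
for house_id in range(1, 10):
    print(house_id, mod_long_node(house_id, nodes))
```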

wrapper.conf

# Set the JMX port

wrapper.java.additional.6=-Dcom.sun.management.jmxremote.port=11986

Start and test

cd bin

# Either run MyCat in the foreground
./mycat console

# or start it in the background and follow the log
./startup_nowrap.sh && tail -f ../logs/mycat.log

CREATE TABLE `tb_ad` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`type` int(10) DEFAULT NULL COMMENT '广告类型',

`title` varchar(100) DEFAULT NULL COMMENT '描述',

`url` varchar(200) DEFAULT NULL COMMENT '图片URL地址',

`created` datetime DEFAULT NULL,

`updated` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=5 DEFAULT CHARSET=utf8 COMMENT='广告表';

CREATE TABLE `tb_house_resources` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`title` varchar(100) DEFAULT NULL COMMENT '房源标题',

`estate_id` varchar(255) DEFAULT NULL COMMENT '楼盘id',

`building_num` varchar(5) DEFAULT NULL COMMENT '楼号(栋)',

`building_unit` varchar(5) DEFAULT NULL COMMENT '单元号',

`building_floor_num` varchar(5) DEFAULT NULL COMMENT '门牌号',

`rent` int(10) DEFAULT NULL COMMENT '租金',

`rent_method` tinyint(1) DEFAULT NULL COMMENT '租赁方式,1-整租,2-合租',

`payment_method` tinyint(1) DEFAULT NULL COMMENT '支付方式,1-付一押一,2-付三押一,3-付六押一,4-年付押一,5-其它',

`house_type` varchar(255) DEFAULT NULL COMMENT '户型,如:2室1厅1卫',

`covered_area` varchar(10) DEFAULT NULL COMMENT '建筑面积',

`use_area` varchar(10) DEFAULT NULL COMMENT '使用面积',

`floor` varchar(10) DEFAULT NULL COMMENT '楼层,如:8/26',

`orientation` varchar(2) DEFAULT NULL COMMENT '朝向:东、南、西、北',

`decoration` tinyint(1) DEFAULT NULL COMMENT '装修,1-精装,2-简装,3-毛坯',

`facilities` varchar(50) DEFAULT NULL COMMENT '配套设施, 如:1,2,3',

`pic` varchar(1000) DEFAULT NULL COMMENT '图片,最多5张',

`house_desc` varchar(200) DEFAULT NULL COMMENT '描述',

`contact` varchar(10) DEFAULT NULL COMMENT '联系人',

`mobile` varchar(11) DEFAULT NULL COMMENT '手机号',

`time` tinyint(1) DEFAULT NULL COMMENT '看房时间,1-上午,2-中午,3-下午,4-晚上,5-全天',

`property_cost` varchar(10) DEFAULT NULL COMMENT '物业费',

`created` datetime DEFAULT NULL,

`updated` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=10 DEFAULT CHARSET=utf8 COMMENT='房源表';

mycat02 (Alibaba Cloud)

server.xml

<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE mycat:server SYSTEM "server.dtd">
<mycat:server xmlns:mycat="http://io.mycat/">
    <system>
        <property name="nonePasswordLogin">0</property>
        <property name="useHandshakeV10">1</property>
        <property name="useSqlStat">0</property>
        <property name="useGlobleTableCheck">0</property>
        <property name="sequnceHandlerType">2</property>
        <property name="subqueryRelationshipCheck">false</property>
        <property name="processorBufferPoolType">0</property>
        <property name="handleDistributedTransactions">0</property>
        <property name="useOffHeapForMerge">1</property>
        <property name="memoryPageSize">64k</property>
        <property name="spillsFileBufferSize">1k</property>
        <property name="useStreamOutput">0</property>
        <property name="systemReserveMemorySize">384m</property>
        <property name="useZKSwitch">false</property>
        <property name="serverPort">18069</property>
        <property name="managerPort">19069</property>
    </system>
    <user name="mycat" defaultAccount="true">
        <property name="password">mycat</property>
        <property name="schemas">haoke</property>
    </user>
</mycat:server>

schema.xml

<?xml version="1.0"?>
<!DOCTYPE mycat:schema SYSTEM "schema.dtd">
<mycat:schema xmlns:mycat="http://io.mycat/">
    <schema name="haoke" checkSQLschema="false" sqlMaxLimit="100">
        <!-- sharded by id across the two PXC clusters -->
        <table name="tb_house_resources" dataNode="dn1,dn2" rule="mod-long" />
        <!-- not sharded, stored on the master-slave cluster -->
        <table name="tb_ad" dataNode="dn3"/>
    </schema>

    <dataNode name="dn1" dataHost="cluster1" database="haoke" />
    <dataNode name="dn2" dataHost="cluster2" database="haoke" />
    <dataNode name="dn3" dataHost="cluster3" database="haoke" />

    <!-- PXC cluster 1 (pxc_node1, pxc_node2); balance="2": reads are spread randomly over writeHost and readHost -->
    <dataHost name="cluster1" maxCon="1000" minCon="10" balance="2"
              writeType="1" dbType="mysql" dbDriver="native" switchType="1"
              slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="W1" url="8.140.130.91:13306" user="root"
                   password="root">
            <readHost host="W1R1" url="8.140.130.91:13307" user="root"
                      password="root" />
        </writeHost>
    </dataHost>

    <!-- PXC cluster 2 (pxc_node3, pxc_node4) -->
    <dataHost name="cluster2" maxCon="1000" minCon="10" balance="2"
              writeType="1" dbType="mysql" dbDriver="native" switchType="1"
              slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="W2" url="8.140.130.91:13308" user="root"
                   password="root">
            <readHost host="W2R1" url="8.140.130.91:13309" user="root"
                      password="root" />
        </writeHost>
    </dataHost>

    <!-- Master-slave cluster (ms_node1, ms_node2); balance="3": reads go to the readHost only -->
    <dataHost name="cluster3" maxCon="1000" minCon="10" balance="3"
              writeType="1" dbType="mysql" dbDriver="native" switchType="1"
              slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="W3" url="82.157.25.57:13310" user="root"
                   password="root">
            <readHost host="W3R1" url="82.157.25.57:13311" user="root"
                      password="root" />
        </writeHost>
    </dataHost>
</mycat:schema>

rule.xml

<function name="mod-long" class="io.mycat.route.function.PartitionByMod">
    <!-- number of data nodes: dn1 and dn2 -->
    <property name="count">2</property>
</function>

wrapper.conf

vim wrapper.conf

# Set the JMX port

wrapper.java.additional.6=-Dcom.sun.management.jmxremote.port=11987

Start

cd bin

# Either run MyCat in the foreground
./mycat console

# or start it in the background and follow the log
./startup_nowrap.sh && tail -f ../logs/mycat.log

Create tables and test

INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('1','1', 'UniCity万科天空之城', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/5.jpg', '2021-05-17 11:28:49','2021-05-17 11:28:51');

INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('2','1', '天和尚海庭前', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/2.jpg', '2021-05-17 11:29:27','2021-05-17 11:29:29');

INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('3','1', '[奉贤 南桥] 光语著', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/3.jpg', '2021-05-17 11:30:04','2021-05-17 11:30:06');

INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`)

VALUES ('4','1', '[上海周边 嘉兴] 融创海逸长洲', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/4.jpg', '2021-05-17 11:30:49','2021-05-17 11:30:53');

Creating HAProxy

docker pull haproxy:1.9.3

# Create a directory for the config file

mkdir /haoke/haproxy -p

# Create the container

docker create --name haproxy --net host -v /haoke/haproxy:/usr/local/etc/haproxy haproxy:1.9.3

# Create the config file

vim /haoke/haproxy/haproxy.cfg

# Enter the following content

global

log 127.0.0.1 local2

maxconn 4000

daemon

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen admin_stats

bind 0.0.0.0:4001

mode http

stats uri /dbs

stats realm Global\ statistics

stats auth admin:admin

listen proxy-mysql

bind 0.0.0.0:4002

mode tcp

balance roundrobin

option tcplog

# Proxy the MyCat services

server mycat_1 82.157.25.57:18068 check port 18068 maxconn 2000

server mycat_2 8.140.130.91:18069 check port 18069 maxconn 2000

# Start the container

docker restart haproxy && docker logs -f haproxy
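
balance roundrobin hands each new connection to the next MyCat server in turn and skips any server whose health check (check port ...) fails. Below is a simplified Python sketch with equal weights. HAProxy's real scheduler also handles weights and servers coming back up, and the helper name is hypothetical.

```python
from itertools import cycle
from typing import Iterable, List


def pick_backends(servers: List[str], connections: int,
                  down: Iterable[str] = ()) -> List[str]:
    """Return the backend chosen for each of `connections` new connections."""
    down_set = set(down)
    healthy = [s for s in servers if s not in down_set]  # failed checks are skipped
    if not healthy:
        raise RuntimeError("no MyCat backend is available")
    rotation = cycle(healthy)
    return [next(rotation) for _ in range(connections)]


mycats = ["82.157.25.57:18068", "8.140.130.91:18069"]
print(pick_backends(mycats, 4))
print(pick_backends(mycats, 2, down=["8.140.130.91:18069"]))
```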

Test

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('1', '东方曼哈顿 3室2厅 16000元', '1005', '2', '1', '1','1111', '1', '1', '1室1厅1卫1厨1阳台', '2', '2', '1/2', '南', '1', '1,2,3,8,9', NULL, '这个经纪人很懒,没写核心卖点', '张三', '11111111111', '1', '11', '2018-11-16 01:16:00','2018-11-16 01:16:00');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('2', '康城 3室2厅1卫', '1002', '1', '2', '3', '2000', '1','2', '3室2厅1卫1厨2阳台', '100', '80', '2/20', '南', '1', '1,2,3,7,6', NULL, '拎包入住','张三', '18888888888', '5', '1.5', '2018-11-16 01:34:02', '2018-11-16 01:34:02');

=====

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('3', '2', '1002', '2', '2', '2', '2', '1', '1', '1室1厅1卫1厨1阳台', '22', '11', '1/5', '南', '1', '1,2,3', NULL, '11', '22', '33', '1', '3','2018-11-16 21:15:29', '2018-11-16 21:15:29');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`,`payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('4', '11', '1002', '1', '1', '1', '1', '1', '1', '1室1厅1卫1厨1阳台', '11', '1', '1/1', '南', '1', '1,2,3', NULL, '11', '1', '1', '1', '1','2018-11-16 21:16:50', '2018-11-16 21:16:50');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('5', '最新修改房源5', '1002', '1', '1', '1', '3000', '1','1', '1室1厅1卫1厨1阳台', '80', '1', '1/1', '南', '1', '1,2,3', 'http://itcast-haoke.osscn-qingdao.aliyuncs.com/images/2018/12/04/15439353467987363.jpg,http://itcasthaoke.oss-cn-qingdao.aliyuncs.com/images/2018/12/04/15439354795233043.jpg', '11', '1','1', '1', '1', '2018-11-16 21:17:02', '2018-12-04 23:05:19');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('6', '房源标题', '1002', '1', '1', '11', '1', '1', '1', '1室1厅1卫1厨1阳台', '11', '1', '1/1', '南', '1', '1,2,3', 'http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/16/15423743004743329.jpg,http://itcast-haoke.osscn-qingdao.aliyuncs.com/images/2018/11/16/15423743049233737.jpg', '11', '2', '2', '1','1', '2018-11-16 21:18:41', '2018-11-16 21:18:41');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`,`payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('7', '房源标题', '1002', '1', '1', '11', '1', '1', '1', '1室1厅1卫1厨1阳台', '11', '1', '1/1', '南', '1', '1,2,3', 'http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/16/15423743004743329.jpg,http://itcast-haoke.osscn-qingdao.aliyuncs.com/images/2018/11/16/15423743049233737.jpg', '11', '2', '2', '1','1', '2018-11-16 21:18:41', '2018-11-16 21:18:41');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('8', '3333', '1002', '1', '1', '1', '1', '1', '1', '1室1厅1卫1厨1阳台', '1', '1', '1/1', '南', '1', '1,2,3', 'http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/17/15423896060254118.jpg,http://itcast-haoke.osscn-qingdao.aliyuncs.com/images/2018/11/17/15423896084306516.jpg', '1', '1', '1', '1','1', '2018-11-17 01:33:35', '2018-12-06 10:22:20');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`,`building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`,`house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`,`facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`,`created`, `updated`)

VALUES ('9', '康城 精品房源2', '1002', '1', '2', '3', '1000', '1','1', '1室1厅1卫1厨1阳台', '50', '40', '3/20', '南', '1', '1,2,3', 'http://itcasthaoke.oss-cnqingdao.aliyuncs.com/images/2018/11/30/15435106627858721.jpg,http://itcast-haoke.osscn-qingdao.aliyuncs.com/images/2018/11/30/15435107119124432.jpg', '精品房源', '李四','18888888888', '1', '1', '2018-11-21 18:31:35', '2018-11-30 00:58:46');

Redis cluster

Redis uses a 3-master, 3-replica architecture (six nodes, one replica per master).

Plan

| Service | Port | Server | Container name |
|---|---|---|---|
| Redis-node01 | 6379 | 8.140.130.91 | redis-node01 |
| Redis-node02 | 6380 | 8.140.130.91 | redis-node02 |
| Redis-node03 | 6381 | 8.140.130.91 | redis-node03 |
| Redis-node04 | 6382 | 82.157.25.57 | redis-node04 |
| Redis-node05 | 6383 | 82.157.25.57 | redis-node05 |
| Redis-node06 | 6384 | 82.157.25.57 | redis-node06 |

Alibaba Cloud

docker volume create redis-node01

docker volume create redis-node02

docker volume create redis-node03

# Create the containers

docker create --name redis-node01 --net host -v redis-node01:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-01.conf --port 6379 --cluster-announce-ip 8.140.130.91 --cluster-announce-bus-port 16379

docker create --name redis-node02 --net host -v redis-node02:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-02.conf --port 6380 --cluster-announce-ip 8.140.130.91 --cluster-announce-bus-port 16380

docker create --name redis-node03 --net host -v redis-node03:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-03.conf --port 6381 --cluster-announce-ip 8.140.130.91 --cluster-announce-bus-port 16381

# Start the containers

docker start redis-node01 redis-node02 redis-node03

Tencent Cloud

docker volume create redis-node04

docker volume create redis-node05

docker volume create redis-node06

# Create the containers

docker create --name redis-node04 --net host -v redis-node04:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-04.conf --port 6382 --cluster-announce-bus-port 16382 --cluster-announce-ip 82.157.25.57

docker create --name redis-node05 --net host -v redis-node05:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-05.conf --port 6383 --cluster-announce-bus-port 16383 --cluster-announce-ip 82.157.25.57

docker create --name redis-node06 --net host -v redis-node06:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-06.conf --port 6384 --cluster-announce-bus-port 16384 --cluster-announce-ip 82.157.25.57

# Start the containers

docker start redis-node04 redis-node05 redis-node06

Test

# Enter the redis-node01 container

docker exec -it redis-node01 /bin/bash

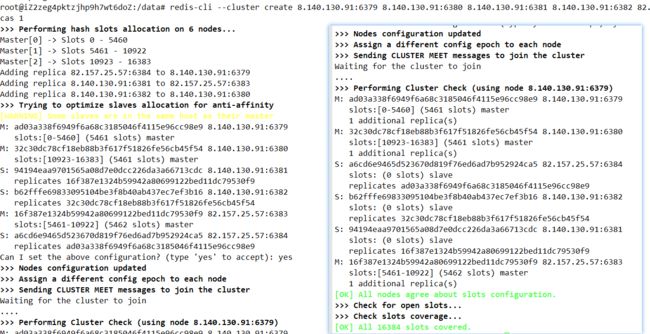

redis-cli --cluster create 8.140.130.91:6379 8.140.130.91:6380 8.140.130.91:6381 82.157.25.57:6382 82.157.25.57:6383 82.157.25.57:6384 --cluster-replicas 1

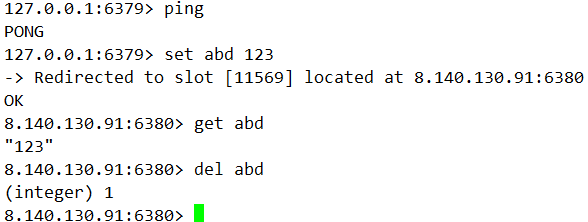

# Test (-c enables cluster mode, which follows MOVED redirects)

redis-cli -c

# Check connectivity (expects PONG)

ping

# Show cluster node information

CLUSTER NODES

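With Redis 3.x, the nodes listed first become masters and the rest become replicas.

With Redis 5.x, masters and replicas are chosen randomly, so check the roles with CLUSTER NODES.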
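
Redis Cluster splits the key space into 16384 hash slots, and redis-cli --cluster create divides those slots among the three masters. A key's slot is CRC16(key) mod 16384. If the key contains a non-empty {...} section (a hash tag), only the first such section is hashed. The Python sketch below implements that rule using the XMODEM variant of CRC16 that Redis uses. It helps predict which master a key lands on, and redis-cli -c follows the resulting MOVED redirect for you. The function names are hypothetical.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (the Redis Cluster variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Hash slot (0..16383) for a key, honouring {hash tags}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end != -1 and end != start + 1:  # non-empty tag: hash only its content
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


print(key_slot("foo"))  # 12182
print(key_slot("{user1000}.following") == key_slot("{user1000}.followers"))  # True
```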

Deploying the Elasticsearch cluster

The Elasticsearch cluster consists of 3 nodes.

Plan

| Service | Ports | Server | Container name |
|---|---|---|---|
| es-node01 | 9200,9300 | 8.140.130.91 | es-node01 |
| es-node02 | 9201,9301 | 8.140.130.91 | es-node02 |
| es-node03 | 9202,9302 | 82.157.25.57 | es-node03 |

Deployment

Alibaba Cloud

# node01/ elasticsearch.yml

---

cluster.name: es-haoke-cluster

node.name: node01

node.master: true

node.data: true

network.host: 0.0.0.0

network.publish_host: 8.140.130.91

http.host: 0.0.0.0

http.port: 9200

transport.tcp.port: 9300

discovery.zen.ping.unicast.hosts: ["8.140.130.91:9300","8.140.130.91:9301","82.157.25.57:9302"]

discovery.zen.minimum_master_nodes: 2

http.cors.enabled: true

http.cors.allow-origin: "*"

---

# jvm.options

---

-Xms512m

-Xmx512m

---

mkdir -p /data/es-cluster-data/node01/data

chmod 777 /data/es-cluster-data/node01/data

docker create --name es-node01 --net host -v /data/es-cluster-data/node01/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /data/es-cluster-data/node01/jvm.options:/usr/share/elasticsearch/config/jvm.options -v /data/es-cluster-data/node01/data:/usr/share/elasticsearch/data -v /data/es-cluster-data/ik:/usr/share/elasticsearch/plugins/ik elasticsearch:6.5.4

# node02/ elasticsearch.yml

---

cluster.name: es-haoke-cluster

node.name: node02

node.master: true

node.data: true

network.host: 0.0.0.0

network.publish_host: 8.140.130.91

http.host: 0.0.0.0

http.port: 9201

transport.tcp.port: 9301

discovery.zen.ping.unicast.hosts: ["8.140.130.91:9300","8.140.130.91:9301","82.157.25.57:9302"]

discovery.zen.minimum_master_nodes: 2

http.cors.enabled: true

http.cors.allow-origin: "*"

---

# jvm.options

---

-Xms512m

-Xmx512m

---

docker create --name es-node02 --net host -v /data/es-cluster-data/node02/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /data/es-cluster-data/node02/jvm.options:/usr/share/elasticsearch/config/jvm.options -v /data/es-cluster-data/node02/data:/usr/share/elasticsearch/data -v /data/es-cluster-data/ik:/usr/share/elasticsearch/plugins/ik elasticsearch:6.5.4

# Start

docker start es-node01 && docker logs -f es-node01

docker start es-node02 && docker logs -f es-node02

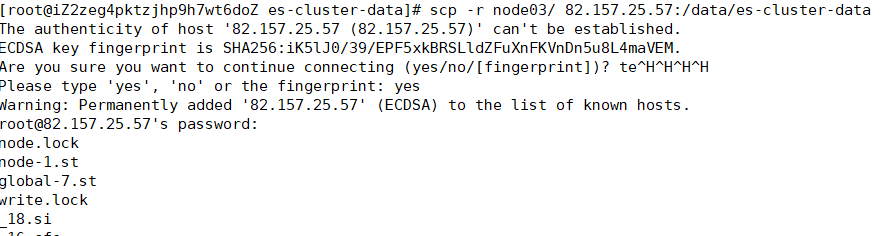

Tencent Cloud

# node03/ elasticsearch.yml

---

cluster.name: es-haoke-cluster

node.name: node03

node.master: true

node.data: true

# Private (intranet) IP of this host

network.host: 172.21.0.7

# Public IP of this host, used for communication between nodes

network.publish_host: 82.157.25.57

# Address to bind to; 0.0.0.0 accepts connections on all interfaces

network.bind_host: 0.0.0.0

# HTTP is reachable on all of this host's network interfaces

http.host: 0.0.0.0

# HTTP port for external clients (default 9200)

http.port: 9202

# TCP port for communication between nodes (default 9300)

transport.tcp.port: 9302

# Unicast discovery of the cluster nodes

discovery.zen.ping.unicast.hosts: ["8.140.130.91:9300","8.140.130.91:9301","82.157.25.57:9302"]

discovery.zen.minimum_master_nodes: 2

http.cors.enabled: true

http.cors.allow-origin: "*"

---

# jvm.options

---

-Xms512m

-Xmx512m

---

docker create --name es-node03 --net host -v /data/es-cluster-data/node03/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /data/es-cluster-data/node03/jvm.options:/usr/share/elasticsearch/config/jvm.options -v /data/es-cluster-data/node03/data:/usr/share/elasticsearch/data -v /data/es-cluster-data/ik:/usr/share/elasticsearch/plugins/ik elasticsearch:6.5.4

# Start

docker start es-node03 && docker logs -f es-node03
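
The setting discovery.zen.minimum_master_nodes: 2 follows the usual quorum rule for Zen discovery in ES 6.x: floor(N / 2) + 1, where N is the number of master-eligible nodes. Here all three nodes set node.master: true, so N is 3. A short Python check of that arithmetic:

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Quorum size: more than half of the master-eligible nodes."""
    return master_eligible // 2 + 1


n = 3  # node01, node02 and node03 all set node.master: true
print(minimum_master_nodes(n))  # 2
# With a quorum of 2, a single isolated node cannot elect its own master (no split brain),
# and the remaining two nodes can still elect a master if one node is lost.
```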

Create an index to test

PUT http://8.140.130.91:9200/haoke/

{

"settings": {

"index": {

"number_of_shards": 6,

"number_of_replicas": 1

}

},

"mappings": {

"house": {

"dynamic": false,

"properties": {

"title": {

"type": "text",

"analyzer": "ik_max_word"

},

"pic": {

"type": "keyword",

"index": false

},

"orientation": {

"type": "keyword",

"index": false

},

"houseType": {

"type": "keyword",

"index": false

},

"rentMethod": {

"type": "keyword",

"index": false

},

"time": {

"type": "keyword",

"index": false

},

"rent": {

"type": "keyword",

"index": false

},

"floor": {

"type": "keyword",

"index": false

}

}

}

}

}
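
With number_of_shards: 6 and number_of_replicas: 1, the index holds 6 primary shards plus 6 replica copies. The quick Python calculation below shows how many shard copies each of the three nodes carries once they are balanced. ES never places a replica on the same node as its primary. The helper name is hypothetical.

```python
def shard_copies(primaries: int, replicas: int, nodes: int) -> tuple:
    """Total shard copies and the average number per node."""
    total = primaries * (1 + replicas)  # each primary plus its replicas
    return total, total / nodes


print(shard_copies(6, 1, 3))  # (12, 4.0)
```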

Syncing MySQL data to ES with Logstash

docker-logstash

# Pull the image

docker pull logstash:6.5.4

# Create directories

mkdir -p /data/logstash/data && chmod 777 /data/logstash/data

# Copy the original config files into the logstash data directory

# Config file directory

mkdir /data/logstash/conf.d && chmod 777 /data/logstash/conf.d

# Configure logstash.yml (/data/logstash/logstash.yml on the host)

path.config: /usr/share/logstash/conf.d/*.conf

path.logs: /var/log/logstash

# Create and start the container

docker run -it -d --name logstash -p 5044:5044 -v /data/logstash/logstash.yml:/usr/share/logstash/config/logstash.yml -v /data/logstash/conf.d/:/usr/share/logstash/conf.d/ logstash:6.5.4

# Follow the logs (docker run -d above has already started the container)

docker logs -f logstash

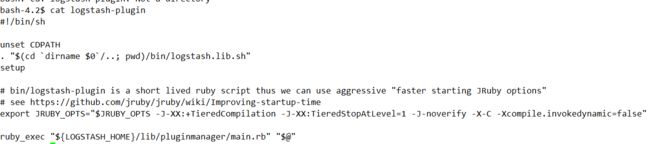

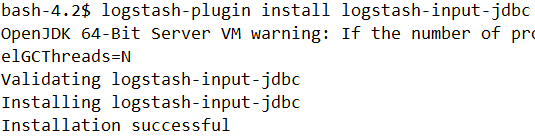

Install logstash-input-jdbc

# Enter the container

docker exec -it logstash bash

# The bin directory contains the logstash-plugin script; use it to install the plugin

logstash-plugin install logstash-input-jdbc

Configuration file jdbc.conf

http://blog.sqber.com/articles/logstash-mysql-to-stdout.html

input {

jdbc {

jdbc_driver_library => "./my/mysql-connector-java-8.0.25.jar"

jdbc_driver_class => "com.mysql.cj.jdbc.Driver"

jdbc_connection_string => "jdbc:mysql://82.157.25.57:4002/haoke?useUnicode=true&characterEncoding=utf8&serverTimezone=UTC"

jdbc_user => "mycat"

jdbc_password => "mycat"

schedule => "* * * * *"

statement_filepath => "./my/jdbc.sql"

tracking_column => updated

use_column_value => true

tracking_column_type => "timestamp"

record_last_run => true

clean_run => false

}

}

filter {

json {

source => "message"

remove_field => ["message"]

}

ruby {

code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"

}

ruby {

code => "event.set('@timestamp',event.get('timestamp'))"

}

mutate {

remove_field => ["timestamp"]

}

}

output{

elasticsearch {

hosts => ["8.140.130.91:9200","8.140.130.91:9201","82.157.25.57:9202"]

index => "test"

document_id => "%{id}"

document_type => "house"

}

stdout {

codec => json_lines

}

}

Configure jdbc.sql

select id,title,pic,orientation,house_type,rent_method,time,rent,updated,floor from tb_house_resources where updated >= :sql_last_value

./logstash -f /data/logstash/jdbc.conf
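
When the jdbc input tracks a column value (use_column_value => true with tracking_column => updated), Logstash stores the largest updated value it has seen. On the next scheduled run it substitutes that value for :sql_last_value. The Python sketch below simulates that loop over in-memory rows. The helper is hypothetical and is not Logstash code. Because the query uses >=, the newest row is read again on the next run, and document_id => "%{id}" turns that repeat into an overwrite rather than a duplicate.

```python
from datetime import datetime


def poll(rows, last_value):
    """One scheduled run: select rows with updated >= last_value, then advance it."""
    batch = [r for r in rows if r["updated"] >= last_value]
    if batch:
        last_value = max(r["updated"] for r in batch)
    return batch, last_value


rows = [
    {"id": 1, "updated": datetime(2018, 11, 16, 1, 16, 0)},
    {"id": 5, "updated": datetime(2018, 12, 4, 23, 5, 19)},
    {"id": 8, "updated": datetime(2018, 12, 6, 10, 22, 20)},
]
last = datetime(1970, 1, 1)  # starting value for a timestamp tracking column
first, last = poll(rows, last)
second, last = poll(rows, last)
print([r["id"] for r in first], [r["id"] for r in second])  # [1, 5, 8] [8]
```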

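If possible, run ES on dedicated machines. If it has to share a machine, check how much memory Logstash uses (4 GB is usually enough) and leave 1 to 2 GB for Kibana. Divide the remaining memory by 2 and give that to ES as heap. The heap should stay below about 32 GB on any machine; that magic number applies regardless of how much RAM the machine has. In your 64 GB case: give Logstash 4 GB and Kibana 2 GB, which leaves 58 GB. Half of that is 29 GB, so allocating about 28 GB to ES is reasonable.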
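
The rule of thumb above can be written as a small Python function. The 4 GB and 2 GB reservations come from the text. The 31 GB cap is an assumption chosen to keep the heap under the roughly 32 GB limit mentioned above. The function name is hypothetical.

```python
def es_heap_gb(total_gb: int, logstash_gb: int = 4, kibana_gb: int = 2,
               cap_gb: int = 31) -> int:
    """Half of the memory left after Logstash and Kibana, capped below ~32 GB."""
    remaining = total_gb - logstash_gb - kibana_gb
    return min(remaining // 2, cap_gb)


print(es_heap_gb(64))   # 29; rounding down to about 28 GB leaves some headroom
print(es_heap_gb(128))  # 31, the cap applies
```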

Building the ZooKeeper cluster

Plan

| Service | Ports | Server | Container name |
|---|---|---|---|
| zk01 | 2181,2888,3888 | 8.140.130.91 | zk01 |
| zk02 | 2181,2888,3888 | 82.157.25.57 | zk02 |

Deployment

# Pull the ZooKeeper image

docker pull zookeeper:3.4

# Create the containers (zk01 on 8.140.130.91, zk02 on 82.157.25.57)

docker create --name zk01 --net host -e ZOO_MY_ID=1 -e ZOO_SERVERS="server.1=0.0.0.0:2888:3888 server.2=82.157.25.57:2888:3888" zookeeper:3.4

docker create --name zk02 --net host -e ZOO_MY_ID=2 -e ZOO_SERVERS="server.1=8.140.130.91:2888:3888 server.2=0.0.0.0:2888:3888" zookeeper:3.4

# Start the containers (each on its own server)

docker start zk01 && docker logs -f zk01

docker start zk02 && docker logs -f zk02

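Problem: Cannot open channel to 1 at election address /8.140.130.91:3888

Fix: in each node's ZOO_SERVERS, write the local node's own address as 0.0.0.0 instead of its public IP, as in the commands above.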
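
The fix can be expressed as a rule for generating ZOO_SERVERS: list every server with its public IP, except the node's own entry, which uses 0.0.0.0. The Python sketch below builds the string for each node. The function name is hypothetical. Its output matches the two docker create commands above.

```python
def zoo_servers(ips, my_id, peer_port=2888, election_port=3888):
    """Build ZOO_SERVERS for node my_id (1-based); the local entry uses 0.0.0.0."""
    entries = []
    for i, ip in enumerate(ips, start=1):
        host = "0.0.0.0" if i == my_id else ip
        entries.append(f"server.{i}={host}:{peer_port}:{election_port}")
    return " ".join(entries)


ips = ["8.140.130.91", "82.157.25.57"]
print(zoo_servers(ips, 1))  # value for zk01 (ZOO_MY_ID=1)
print(zoo_servers(ips, 2))  # value for zk02 (ZOO_MY_ID=2)
```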

Building the RocketMQ cluster

2 masters and 2 slaves

Plan

| Service | Port | Server | Container name |
|---|---|---|---|
| rmqserver01 | 9876 | 192.168.1.7 | rmqserver01 |
| rmqserver02 | 9877 | 192.168.1.7 | rmqserver02 |
| rmqbroker01 | 10911 | 192.168.1.19 | rmqbroker01 |
| rmqbroker02 | 10811 | 192.168.1.19 | rmqbroker02 |
| rmqbroker01-slave | 10711 | 192.168.1.18 | rmqbroker01-slave |
| rmqbroker02-slave | 10611 | 192.168.1.18 | rmqbroker02-slave |
| rocketmq-console | 8082 | 192.168.1.7 | rocketmq-console |