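Chapter 3: FlinkSQL and Iceberg Integration in Practice: Writing Log Data in Real Time

1. Configuration for real-time writes

- Required configuration for real-time writes: set the checkpoint-related parameters in flink-conf.yaml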

restart-strategy: fixed-delay

restart-strategy.fixed-delay.attempts: 3

restart-strategy.fixed-delay.delay: 30s

execution.checkpointing.interval: 1min

execution.checkpointing.externalized-checkpoint-retention: RETAIN_ON_CANCELLATION

state.checkpoints.dir: hdfs:///flink/checkpoints

state.backend: filesystem
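To make the restart settings above more concrete, here is a minimal Java sketch of what a fixed-delay policy means: a failing task is restarted at most `attempts` times, with the same pause before each restart. This is an illustration only and not Flink's implementation; the class `FixedDelayRetry` and its method names are hypothetical.

```java
import java.util.function.Supplier;

/** Illustration only: hypothetical helper, not part of Flink. */
final class FixedDelayRetry {

    /**
     * Runs the task. After a failure it waits delayMillis and restarts the task,
     * at most maxRestarts times. When the restart budget is used up, the last
     * failure is rethrown.
     */
    static <T> T run(Supplier<T> task, int maxRestarts, long delayMillis) {
        int restarts = 0;
        while (true) {
            try {
                return task.get();
            } catch (RuntimeException e) {
                if (restarts >= maxRestarts) {
                    throw e; // no restarts left: the task fails for good
                }
                restarts++;
                sleep(delayMillis); // the same delay before every restart
            }
        }
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException ie) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting to restart", ie);
        }
    }
}
```

With the values from the configuration above, the equivalent call would be `FixedDelayRetry.run(task, 3, 30_000L)`: one initial run plus up to three restarts, 30 seconds apart.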

2. Simulating the data source

2.1 Simulated data structure

- Data structure: [auto-increment ID, random number]

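package com.dx; // matches the mainClass com.dx.DataMaking configured in section 5.1

import java.util.Random;

public class DataMaking {
    public static void main(String[] args) throws InterruptedException {
        int len = 100000;       // upper bound (exclusive) of the random number
        int sleepMillis = 1000; // pause between records, in milliseconds
        // Usage: <upper bound of random number> <pause per record in ms>
        if (args.length >= 1) {
            len = Integer.parseInt(args[0]);
        }
        if (args.length >= 2) {
            sleepMillis = Integer.parseInt(args[1]);
        }
        Random random = new Random();
        // Emit one "id,randomNumber" line per iteration
        for (int i = 0; i < 10000000; i++) {
            System.out.println(i + "," + random.nextInt(len));
            Thread.sleep(sleepMillis);
        }
    }
}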

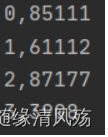

- Simulation result
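Each generated line has the form `id,randomNumber`. As a rough sketch of how a downstream consumer could split such a line back into its two fields, the following Java class parses one record. The name `MockLogRecord` is hypothetical and does not appear in the original project.

```java
/** Hypothetical value class for one generated line, e.g. "17,4821". */
final class MockLogRecord {
    final int id;    // auto-increment ID (first field)
    final int value; // random number (second field)

    MockLogRecord(int id, int value) {
        this.id = id;
        this.value = value;
    }

    /** Parses "id,value"; throws IllegalArgumentException on malformed input. */
    static MockLogRecord parse(String line) {
        String[] parts = line.trim().split(",", -1);
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected 2 comma-separated fields: " + line);
        }
        // NumberFormatException is a subclass of IllegalArgumentException
        return new MockLogRecord(
                Integer.parseInt(parts[0].trim()),
                Integer.parseInt(parts[1].trim()));
    }
}
```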

2.2 Script for running the data generator on the server

#!/bin/bash

#for i in {1..100000};do echo $i,$RANDOM >> /opt/module/logs/mockLog.log; sleep 1s ;done;

nohup java -jar /opt/module/applog/DataMaking-1.0-SNAPSHOT.jar > /opt/module/applog/log/mockLog.log 2>&1 &

- Run result

3. Syncing log data to Kafka in real time

3.1 Create the configuration file flume-taildir-kafka.conf

# Name the components of this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe and configure the source component: r1

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /opt/module/applog/log/mockLog.log

# Describe and configure the sink component: k1

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k1.kafka.topic = jsonTopic

a1.sinks.k1.kafka.bootstrap.servers = leidi01:6667

a1.sinks.k1.kafka.flumeBatchSize = 20

a1.sinks.k1.kafka.producer.acks = 1

a1.sinks.k1.kafka.producer.linger.ms = 1

a1.sinks.k1.kafka.producer.compression.type = snappy

# Describe and configure the channel component; an in-memory buffer is used here

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Describe and configure how the source, channel, and sink are wired together

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
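In a configuration like the one above, every key embeds a component name (`r1`, `k1`, `c1`), and a misspelled name in a single key is easy to overlook. The following Java sketch flags keys whose component name was never declared in `<agent>.sources`, `<agent>.sinks`, or `<agent>.channels`. It is a hypothetical lint helper, not part of Flume, and it assumes the file can be read as a standard Java properties file.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Properties;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical lint helper; not part of Flume. */
final class FlumeConfLint {

    /** Returns the keys whose component name is not declared in "<agent>.<kind>". */
    static List<String> undeclaredKeys(String conf, String agent) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(conf));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> problems = new ArrayList<>();
        for (String kind : Arrays.asList("sources", "sinks", "channels")) {
            String prefix = agent + "." + kind;
            // Declared names are whitespace-separated, e.g. "a1.sinks = k1 k2"
            String declaredValue = props.getProperty(prefix, "").trim();
            Set<String> declared = new HashSet<>(Arrays.asList(declaredValue.split("\\s+")));
            // Sorted iteration keeps the report order stable
            for (String key : new TreeSet<>(props.stringPropertyNames())) {
                if (!key.startsWith(prefix + ".")) {
                    continue;
                }
                String name = key.substring(prefix.length() + 1).split("\\.", 2)[0];
                if (!declared.contains(name)) {
                    problems.add(key);
                }
            }
        }
        return problems;
    }
}
```

Calling `FlumeConfLint.undeclaredKeys(confText, "a1")` on the file contents returns an empty list when every key refers to a declared component.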

3.2 Configure Kafka

(1) Create the Kafka topic

bin/kafka-topics.sh --create --topic jsonTopic --replication-factor 1 --partitions 1 --zookeeper localhost:2181

(2) Start a Kafka console producer (for manual testing) and a console consumer

bin/kafka-console-producer.sh --topic jsonTopic --broker-list localhost:6667

bin/kafka-console-consumer.sh --bootstrap-server leidi01:6667 --topic jsonTopic --from-beginning

(3) Start the Flume agent

bin/flume-ng agent -c conf -f conf/flume-taildir-kafka.conf -n a1 -Dflume.root.logger=INFO,console

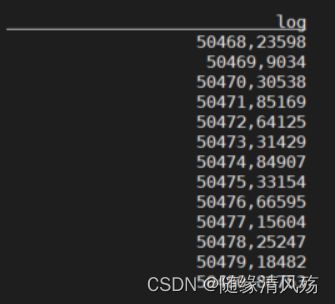

- Run result

4. Loading Kafka data into the data lake

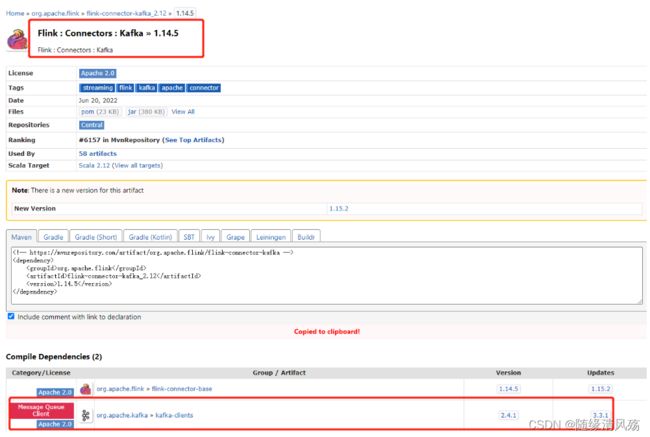

- Related dependency

https://mvnrepository.com/artifact/org.apache.flink/flink-connector-kafka_2.11/1.14.5

4.1 Enable checkpointing

-- Start the FlinkSQL client with Hive and Kafka support

./sql-client.sh embedded -s yarn-session

-- Enable checkpointing

SET 'execution.checkpointing.interval' = '3s';

-- Display query results in tableau (plain table) mode

SET 'sql-client.execution.result-mode' = 'tableau';

4.2 Create the Kafka table

use catalog default_catalog;

use default_database;

create table `default_catalog`.`default_database`.`kafka_behavior_log`

(

log STRING

) WITH (

'connector' = 'kafka',

'topic' = 'jsonTopic',

'properties.bootstrap.servers' = 'leidi01:6667',

'properties.group.id' = 'testGroup',

'scan.startup.mode' = 'earliest-offset',

'format' = 'raw'

);

select * from `default_catalog`.`default_database`.`kafka_behavior_log`;

insert into `default_catalog`.`default_database`.`kafka_behavior_log` values ('1,hadoop');

- Parameter description

4.3 Query the table data

Flink SQL> select * from kafka_behavior_log;

- Query result

4.4 Write the Kafka table data into an Iceberg table

-- Create the Hive table

create table `behavior_log`(

log STRING

) STORED BY 'org.apache.iceberg.mr.hive.HiveIcebergStorageHandler';

insert into `behavior_log` select log from `default_catalog`.`default_database`.`kafka_behavior_log`;

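- View the Iceberg table result

- View the HDFS file directory

5. Errors and troubleshooting

5.1 Error when running the Maven-packaged jar on the server

- Error log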

no main manifest attribute, in original-DataMaking-1.0-SNAPSHOT.jar

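- Cause: the jar's manifest has no Main-Class attribute, so java -jar cannot find the main class

- Solution: add the following build configuration to the pom file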

<build>
    <plugins>
        <plugin>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.8.0</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
            </configuration>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-shade-plugin</artifactId>
            <version>3.2.1</version>
            <executions>
                <execution>
                    <phase>package</phase>
                    <goals>
                        <goal>shade</goal>
                    </goals>
                    <configuration>
                        <transformers>
                            <transformer
                                implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                <mainClass>com.dx.DataMaking</mainClass>
                            </transformer>
                        </transformers>
                    </configuration>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>
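Before copying a jar to the server, it can help to confirm that its manifest actually carries a Main-Class entry. The following is a small sketch using the JDK's `java.util.jar` API; the class name `MainClassCheck` is hypothetical and not part of the original project.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

/** Hypothetical helper for inspecting a built jar. */
final class MainClassCheck {

    /** Returns the Main-Class value from the jar's manifest, or null if it is absent. */
    static String mainClassOf(String jarPath) {
        try (JarFile jar = new JarFile(jarPath)) {
            Manifest manifest = jar.getManifest(); // null when the jar has no manifest
            if (manifest == null) {
                return null;
            }
            return manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

A `null` result corresponds to the situation in which `java -jar` reports "no main manifest attribute".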

5.2 Error when starting the Kafka consumer on a single node

- Error log

[2022-10-11 17:16:33,513] WARN [Consumer clientId=consumer-1, groupId=console-consumer-33906] Connection to node -1 could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

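- Cause: the Kafka broker port configured by Ambari is 6667 rather than the default 9092, so a consumer pointed at the wrong port cannot reach the broker

- Solution: specify port 6667 when starting the consumer.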
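A quick way to tell a wrong port from other problems is to test whether anything accepts TCP connections on the host and port in question. The sketch below uses only the JDK; the class `PortCheck` is hypothetical. A successful connection only shows that some process is listening there, not that it is a Kafka broker.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical connectivity probe; it does not speak the Kafka protocol. */
final class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, or unknown host
            return false;
        }
    }
}
```

For the setup in this chapter, the call would be `PortCheck.isReachable("leidi01", 6667, 2000)`.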

5.3 FlinkSQL cannot read external data from Kafka/MySQL

- Symptom: FlinkSQL cannot read external data

- Cause: checkpointing is not enabled in FlinkSQL

- Solution:

- ① Enable checkpointing in FlinkSQL:

SET 'execution.checkpointing.interval' = '3s';

- ② Create the source tables for external data in default_catalog

5.4 Error when querying the Kafka table in FlinkSQL

- Symptom:

Caused by: java.lang.ClassNotFoundException: org.apache.kafka.clients.consumer.ConsumerRecord
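The exception means that the class `org.apache.kafka.clients.consumer.ConsumerRecord`, which ships in the Kafka client library, is not visible to the class loader that runs the query. The source does not state the fix, so the following is only a diagnostic sketch: a hypothetical helper `ClasspathCheck` that reports whether a given class can be located on the current classpath.

```java
/** Hypothetical diagnostic helper. */
final class ClasspathCheck {

    /** Returns true if the named class can be located, without initializing it. */
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }
}
```

Running `ClasspathCheck.isOnClasspath("org.apache.kafka.clients.consumer.ConsumerRecord")` with the same classpath as the SQL client shows whether the Kafka client classes are present there.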