Linux /proc/kcore Explained (Part 2)

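Table of Contents

- Preface
- I. Initializing /proc/kcore
  - 1.1 Data structures
  - 1.2 mem_init
  - 1.3 kcore initialization
    - 1.3.1 Creating the /proc/kcore file
    - 1.3.2 Adding the _text segment
    - 1.3.3 Adding the vmalloc segment
    - 1.3.4 Adding the modules segment
    - 1.3.5 Adding usable memory
- II. Reading /proc/kcore
  - 2.1 read_kcore
  - 2.2 Building the ELF header and program headers
  - 2.3 Summary
- Conclusion
- References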

Preface

Previous article: Linux /proc/kcore Explained (Part 1)

This article walks through the Linux kernel source code behind the /proc/kcore file.

Kernel version: 3.10.0

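I. Initializing /proc/kcore

1.1 Data structures

The previous article covered the PT_LOAD segments in the program header table of an ELF core file.

PT_LOAD: each segment describes one region of memory.

The kernel keeps these segments in a doubly linked list, kclist_head; each entry of kclist_head corresponds to one PT_LOAD segment.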

```c
// include/linux/kcore.h

// types of PT_LOAD segments
enum kcore_type {
	KCORE_TEXT,
	KCORE_VMALLOC,
	KCORE_RAM,
	KCORE_VMEMMAP,
	KCORE_OTHER,
};

// an entry of the kclist_head list
struct kcore_list {
	struct list_head list;
	unsigned long addr;
	size_t size;
	int type;
};

extern void kclist_add(struct kcore_list *, void *, size_t, int type);

// fs/proc/kcore.c
static LIST_HEAD(kclist_head);
static DEFINE_RWLOCK(kclist_lock);

// append an entry (i.e. one PT_LOAD segment) to the kclist_head list
void kclist_add(struct kcore_list *new, void *addr, size_t size, int type)
{
	new->addr = (unsigned long)addr;
	new->size = size;
	new->type = type;

	write_lock(&kclist_lock);
	list_add_tail(&new->list, &kclist_head);
	write_unlock(&kclist_lock);
}
```

1.2 mem_init

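Kernel startup begins at the entry function start_kernel(), which plays the role of the kernel's main() function:

```
// init/main.c
start_kernel()
  --> mm_init()
    --> mem_init()
```

During this initialization, called from start_kernel(), the x86_64 mem_init() adds the VSYSCALL region to the kclist_head list: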

```c
// arch/x86/mm/init_64.c
static struct kcore_list kcore_vsyscall;

void __init mem_init(void)
{
	......
	/* Register memory areas for /proc/kcore */
	kclist_add(&kcore_vsyscall, (void *)VSYSCALL_START,
		   VSYSCALL_END - VSYSCALL_START, KCORE_OTHER);
	......
}
```

```c
// arch/x86/include/uapi/asm/vsyscall.h
#define VSYSCALL_START (-10UL << 20)
#define VSYSCALL_SIZE 1024
#define VSYSCALL_END (-2UL << 20)
#define VSYSCALL_MAPPED_PAGES 1
#define VSYSCALL_ADDR(vsyscall_nr) (VSYSCALL_START+VSYSCALL_SIZE*(vsyscall_nr))
```

1.3 kcore initialization

proc_kcore_init() creates the file and registers the fixed segments. Steps (1) to (5) are described in sections 1.3.1 to 1.3.5.

```c
// fs/proc/kcore.c
static int __init proc_kcore_init(void)
{
	/* (1) create /proc/kcore */
	proc_root_kcore = proc_create("kcore", S_IRUSR, NULL, &proc_kcore_operations);

	/* (2) Store text area if it's special */
	proc_kcore_text_init();

	/* (3) Store vmalloc area */
	kclist_add(&kcore_vmalloc, (void *)VMALLOC_START,
		   VMALLOC_END - VMALLOC_START, KCORE_VMALLOC);

	/* (4) store the module area */
	add_modules_range();

	/* (5) Store direct-map area from physical memory map */
	kcore_update_ram();

	/* (6) register a memory-hotplug notifier */
	register_hotmemory_notifier(&kcore_callback_nb);

	return 0;
}
```

1.3.1 Creating the /proc/kcore file

Create the kcore file under /proc, i.e. /proc/kcore. The S_IRUSR mode makes it readable by its owner (root) only.

In the procfs file system, every file is represented by a struct proc_dir_entry.

```c
// fs/proc/kcore.c
static struct proc_dir_entry *proc_root_kcore;

static const struct file_operations proc_kcore_operations = {
	.read   = read_kcore,
	.open   = open_kcore,
	.llseek = default_llseek,
};

/* in proc_kcore_init(): */
proc_root_kcore = proc_create("kcore", S_IRUSR, NULL, &proc_kcore_operations);
```

Note that the llseek member of proc_kcore_operations points to default_llseek():

```c
// fs/read_write.c
loff_t default_llseek(struct file *file, loff_t offset, int whence)
{
	......
}
EXPORT_SYMBOL(default_llseek);
```

When a user-space program calls lseek() on /proc/kcore:

```c
// include/linux/file.h
struct fd {
	struct file *file;
	int need_put;
};

// fs/read_write.c
SYSCALL_DEFINE3(lseek, unsigned int, fd, off_t, offset, unsigned int, whence)
{
	......
	struct fd f = fdget(fd);
	......
	loff_t res = vfs_llseek(f.file, offset, whence);
	......
	fdput(f);
	......
}

loff_t vfs_llseek(struct file *file, loff_t offset, int whence)
{
	loff_t (*fn)(struct file *, loff_t, int);
	......
	fn = file->f_op->llseek;
	......
	return fn(file, offset, whence);
}
EXPORT_SYMBOL(vfs_llseek);
```

In other words, when user space calls lseek() on /proc/kcore, the kernel runs default_llseek(), reached through file->f_op->llseek.

1.3.2 Adding the _text segment

Add the kernel text segment to kcore, i.e. append the kernel image starting at _text to the kclist_head list:

```c
#ifdef CONFIG_ARCH_PROC_KCORE_TEXT
static struct kcore_list kcore_text;
/*
 * If defined, special segment is used for mapping kernel text instead of
 * direct-map area. We need to create special TEXT section.
 */
static void __init proc_kcore_text_init(void)
{
	kclist_add(&kcore_text, _text, _end - _text, KCORE_TEXT);
}
#else
static void __init proc_kcore_text_init(void)
{
}
#endif
```

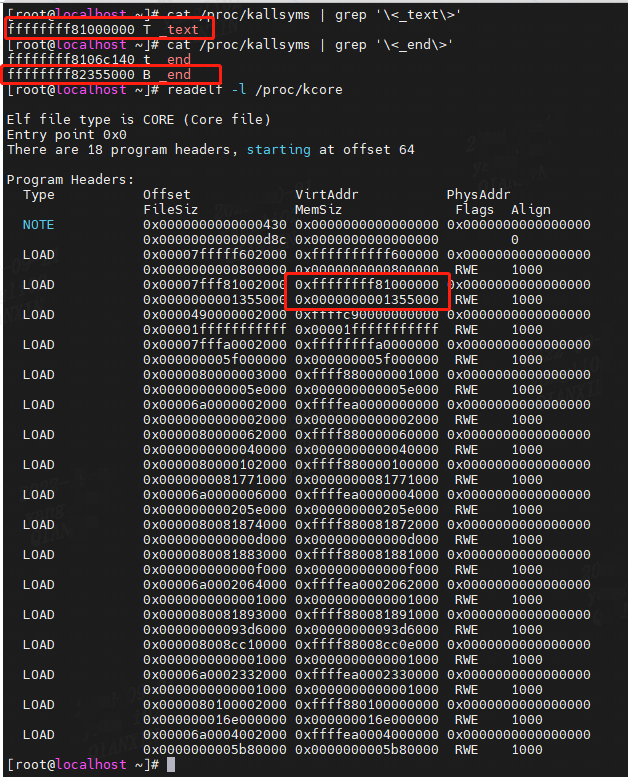

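In this example the segment starts at _text = 0xffffffff81000000 and is 0x1355000 bytes long, so it ends at _end:

0xffffffff81000000 + 0x0000000001355000 = 0xffffffff82355000

Despite the KCORE_TEXT name, this segment covers more than code: because it spans _text to _end, it also contains the kernel's other data, such as the read-only data, data, and BSS sections.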

1.3.3 Adding the vmalloc segment

Add the vmalloc segment to kcore, i.e. append the VMALLOC memory region to the kclist_head list:

```c
static struct kcore_list kcore_vmalloc;

kclist_add(&kcore_vmalloc, (void *)VMALLOC_START, VMALLOC_END - VMALLOC_START, KCORE_VMALLOC);

// arch/x86/include/asm/pgtable_64_types.h
#define VMALLOC_START _AC(0xffffc90000000000, UL)
#define VMALLOC_END   _AC(0xffffe8ffffffffff, UL)
```

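1.3.4 Adding the modules segment

Append the MODULES memory region, where loadable kernel modules are placed, to the kclist_head list:

```c
/*
 * MODULES_VADDR has no intersection with VMALLOC_ADDR.
 */
struct kcore_list kcore_modules;
static void __init add_modules_range(void)
{
	kclist_add(&kcore_modules, (void *)MODULES_VADDR,
		   MODULES_END - MODULES_VADDR, KCORE_VMALLOC);
}

// arch/x86/include/asm/pgtable_64_types.h
#define MODULES_VADDR _AC(0xffffffffa0000000, UL)
#define MODULES_END   _AC(0xffffffffff000000, UL)
#define MODULES_LEN   (MODULES_END - MODULES_VADDR)
```

Note that this segment is registered with type KCORE_VMALLOC: module memory is allocated through the vmalloc machinery, and read_kcore() reads it with vread() (the is_vmalloc_or_module_addr() branch in section 2.1).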

1.3.5 Adding usable memory

Walk the System RAM layout and add the usable memory ranges to the kclist_head list. The function first finds end_pfn, the highest page frame number spanned by any memory node, so that the walk covers physical pages 0 to end_pfn:

```c
static int kcore_update_ram(void)
{
	int nid, ret;
	unsigned long end_pfn;
	LIST_HEAD(head);

	/* Not inialized....update now */
	/* find out "max pfn" */
	end_pfn = 0;
	for_each_node_state(nid, N_MEMORY) {
		unsigned long node_end;
		node_end = NODE_DATA(nid)->node_start_pfn +
			NODE_DATA(nid)->node_spanned_pages;
		if (end_pfn < node_end)
			end_pfn = node_end;
	}

	/* (1) */
	/* scan 0 to max_pfn */
	ret = walk_system_ram_range(0, end_pfn, &head, kclist_add_private);
	if (ret) {
		free_kclist_ents(&head);
		return -ENOMEM;
	}

	/* (2) */
	__kcore_update_ram(&head);
	return ret;
}
```

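(1) Walking System RAM

walk_system_ram_range() visits the "System RAM" resources flagged IORESOURCE_MEM | IORESOURCE_BUSY and calls kclist_add_private() on each range, which builds kcore_list entries for it on the local list head. Its second parameter is a page count; kcore_update_ram() passes end_pfn, which works because the walk starts at pfn 0.

System RAM here means the machine's physical memory, i.e. the installed DRAM (DDR) modules; these are the ranges listed as "System RAM" in /proc/iomem.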

```c
// kernel/resource.c
/*
 * This function calls callback against all memory range of "System RAM"
 * which are marked as IORESOURCE_MEM and IORESOUCE_BUSY.
 * Now, this function is only for "System RAM".
 */
int walk_system_ram_range(unsigned long start_pfn, unsigned long nr_pages,
		void *arg, int (*func)(unsigned long, unsigned long, void *))
{
	struct resource res;
	unsigned long pfn, end_pfn;
	u64 orig_end;
	int ret = -1;

	res.start = (u64) start_pfn << PAGE_SHIFT;
	res.end = ((u64)(start_pfn + nr_pages) << PAGE_SHIFT) - 1;
	res.flags = IORESOURCE_MEM | IORESOURCE_BUSY;
	orig_end = res.end;
	while ((res.start < res.end) &&
		(find_next_system_ram(&res, "System RAM") >= 0)) {
		pfn = (res.start + PAGE_SIZE - 1) >> PAGE_SHIFT;
		end_pfn = (res.end + 1) >> PAGE_SHIFT;
		if (end_pfn > pfn)
			ret = (*func)(pfn, end_pfn - pfn, arg);
		if (ret)
			break;
		res.start = res.end + 1;
		res.end = orig_end;
	}
	return ret;
}
```

(2) Replace KCORE_RAM/KCORE_VMEMMAP

```c
/*
 * Replace all KCORE_RAM/KCORE_VMEMMAP information with passed list.
 */
static void __kcore_update_ram(struct list_head *list)
{
	int nphdr;
	size_t size;
	struct kcore_list *tmp, *pos;
	LIST_HEAD(garbage);

	write_lock(&kclist_lock);
	if (kcore_need_update) {
		// move the old KCORE_RAM/KCORE_VMEMMAP entries out of kclist_head
		list_for_each_entry_safe(pos, tmp, &kclist_head, list) {
			if (pos->type == KCORE_RAM
				|| pos->type == KCORE_VMEMMAP)
				list_move(&pos->list, &garbage);
		}
		// append the newly built entries ('list') to the tail of kclist_head
		list_splice_tail(list, &kclist_head);
	} else
		// no update pending: discard the newly built entries
		list_splice(list, &garbage);
	kcore_need_update = 0;
	// recompute the length of /proc/kcore; it is a virtual size,
	// bounded by the kernel virtual address range
	proc_root_kcore->size = get_kcore_size(&nphdr, &size);
	write_unlock(&kclist_lock);

	// free the entries collected in 'garbage'
	free_kclist_ents(&garbage);
}
```

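(3) Getting the kcore file size

The size of the kcore file does not correspond to space that is actually occupied; it is the size of the abstract object the kernel exposes, here the whole kernel memory image. It is a virtual value: get_kcore_size() takes the largest end offset of any kclist_head entry, as computed by kc_vaddr_to_offset(), and adds elf_buflen, the page-aligned size of the ELF header, program headers, and notes. It is therefore bounded by the kernel virtual address range. Along the way it counts the program headers in *nphdr: one PT_NOTE plus one PT_LOAD per entry.

```c
static size_t get_kcore_size(int *nphdr, size_t *elf_buflen)
{
	size_t try, size;
	struct kcore_list *m;

	*nphdr = 1; /* PT_NOTE */
	size = 0;
	list_for_each_entry(m, &kclist_head, list) {
		try = kc_vaddr_to_offset((size_t)m->addr + m->size);
		if (try > size)
			size = try;
		*nphdr = *nphdr + 1;
	}
	*elf_buflen = sizeof(struct elfhdr) +
		(*nphdr + 2)*sizeof(struct elf_phdr) +
		3 * ((sizeof(struct elf_note)) +
		roundup(sizeof(CORE_STR), 4)) +
		roundup(sizeof(struct elf_prstatus), 4) +
		roundup(sizeof(struct elf_prpsinfo), 4) +
		roundup(sizeof(struct task_struct), 4);
	*elf_buflen = PAGE_ALIGN(*elf_buflen);
	return size + *elf_buflen;
}
```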

II. Reading /proc/kcore

2.1 read_kcore

```c
/*
 * read from the ELF header and then kernel memory
 */
static ssize_t read_kcore(struct file *file, char __user *buffer, size_t buflen, loff_t *fpos)
{
	size_t size;
	size_t elf_buflen;
	int nphdr;
	......

	// get the total file size, the number of program headers (nphdr)
	// and the size of the ELF header area (elf_buflen)
	size = get_kcore_size(&nphdr, &elf_buflen);

	char * elf_buf;
	elf_buf = kzalloc(elf_buflen, GFP_ATOMIC);

	// build the ELF file header and the program headers in elf_buf,
	// then copy the requested part to the user buffer
	elf_kcore_store_hdr(elf_buf, nphdr, elf_buflen);
	read_unlock(&kclist_lock);
	if (copy_to_user(buffer, elf_buf + *fpos, tsz)) {
		kfree(elf_buf);
		return -EFAULT;
	}
	......

	// copy the contents of the PT_LOAD segments to the user buffer,
	// at most one page per iteration
	while (buflen) {
		struct kcore_list *m;

		// find the kclist entry that contains address 'start'
		read_lock(&kclist_lock);
		list_for_each_entry(m, &kclist_head, list) {
			if (start >= m->addr && start < (m->addr+m->size))
				break;
		}
		read_unlock(&kclist_lock);

		if (&m->list == &kclist_head) {
			// no entry covers 'start': return zeros
			if (clear_user(buffer, tsz))
				return -EFAULT;
		} else if (is_vmalloc_or_module_addr((void *)start)) {
			// vmalloc/module address: read through vread() into a bounce buffer
			char * elf_buf;

			elf_buf = kzalloc(tsz, GFP_KERNEL);
			if (!elf_buf)
				return -ENOMEM;
			vread(elf_buf, (char *)start, tsz);
			/* we have to zero-fill user buffer even if no read */
			if (copy_to_user(buffer, elf_buf, tsz)) {
				kfree(elf_buf);
				return -EFAULT;
			}
			kfree(elf_buf);
		} else {
			// any other covered address: copy directly if the page is mapped
			if (kern_addr_valid(start)) {
				unsigned long n;

				n = copy_to_user(buffer, (char *)start, tsz);
				/*
				 * We cannot distinguish between fault on source
				 * and fault on destination. When this happens
				 * we clear too and hope it will trigger the
				 * EFAULT again.
				 */
				if (n) {
					if (clear_user(buffer + tsz - n,
								n))
						return -EFAULT;
				}
			} else {
				if (clear_user(buffer, tsz))
					return -EFAULT;
			}
		}
		buflen -= tsz;
		*fpos += tsz;
		buffer += tsz;
		acc += tsz;
		start += tsz;
		tsz = (buflen > PAGE_SIZE ? PAGE_SIZE : buflen);
	}

	return acc;
}
```

2.2 Building the ELF header and program headers

```c
/*
 * store an ELF coredump header in the supplied buffer
 * nphdr is the number of elf_phdr to insert
 */
static void elf_kcore_store_hdr(char *bufp, int nphdr, int dataoff)
{
	struct elf_prstatus prstatus;	/* NT_PRSTATUS */
	struct elf_prpsinfo prpsinfo;	/* NT_PRPSINFO */
	struct elf_phdr *nhdr, *phdr;
	struct elfhdr *elf;
	struct memelfnote notes[3];
	off_t offset = 0;
	struct kcore_list *m;

	/* (1) setup ELF header */
	elf = (struct elfhdr *) bufp;
	bufp += sizeof(struct elfhdr);
	offset += sizeof(struct elfhdr);
	memcpy(elf->e_ident, ELFMAG, SELFMAG);
	elf->e_ident[EI_CLASS]	= ELF_CLASS;
	elf->e_ident[EI_DATA]	= ELF_DATA;
	elf->e_ident[EI_VERSION]= EV_CURRENT;
	elf->e_ident[EI_OSABI]	= ELF_OSABI;
	memset(elf->e_ident+EI_PAD, 0, EI_NIDENT-EI_PAD);
	elf->e_type	= ET_CORE;
	elf->e_machine	= ELF_ARCH;
	elf->e_version	= EV_CURRENT;
	elf->e_entry	= 0;
	elf->e_phoff	= sizeof(struct elfhdr);
	elf->e_shoff	= 0;
	elf->e_flags	= ELF_CORE_EFLAGS;
	elf->e_ehsize	= sizeof(struct elfhdr);
	elf->e_phentsize= sizeof(struct elf_phdr);
	elf->e_phnum	= nphdr;
	elf->e_shentsize= 0;
	elf->e_shnum	= 0;
	elf->e_shstrndx	= 0;

	/* (2) setup ELF PT_NOTE program header */
	nhdr = (struct elf_phdr *) bufp;
	bufp += sizeof(struct elf_phdr);
	offset += sizeof(struct elf_phdr);
	nhdr->p_type	= PT_NOTE;
	nhdr->p_offset	= 0;
	nhdr->p_vaddr	= 0;
	nhdr->p_paddr	= 0;
	nhdr->p_filesz	= 0;
	nhdr->p_memsz	= 0;
	nhdr->p_flags	= 0;
	nhdr->p_align	= 0;

	/* (3) setup ELF PT_LOAD program header for every area */
	list_for_each_entry(m, &kclist_head, list) {
		phdr = (struct elf_phdr *) bufp;
		bufp += sizeof(struct elf_phdr);
		offset += sizeof(struct elf_phdr);

		phdr->p_type	= PT_LOAD;
		phdr->p_flags	= PF_R|PF_W|PF_X;
		phdr->p_offset	= kc_vaddr_to_offset(m->addr) + dataoff;
		phdr->p_vaddr	= (size_t)m->addr;
		phdr->p_paddr	= 0;
		phdr->p_filesz	= phdr->p_memsz	= m->size;
		phdr->p_align	= PAGE_SIZE;
	}

	/*
	 * Set up the notes in similar form to SVR4 core dumps made
	 * with info from their /proc.
	 */
	nhdr->p_offset	= offset;

	/* (4) set up the process status */
	notes[0].name = CORE_STR;
	notes[0].type = NT_PRSTATUS;
	notes[0].datasz = sizeof(struct elf_prstatus);
	notes[0].data = &prstatus;

	memset(&prstatus, 0, sizeof(struct elf_prstatus));

	nhdr->p_filesz	= notesize(&notes[0]);
	bufp = storenote(&notes[0], bufp);

	/* set up the process info */
	notes[1].name	= CORE_STR;
	notes[1].type	= NT_PRPSINFO;
	notes[1].datasz	= sizeof(struct elf_prpsinfo);
	notes[1].data	= &prpsinfo;

	memset(&prpsinfo, 0, sizeof(struct elf_prpsinfo));
	prpsinfo.pr_state	= 0;
	prpsinfo.pr_sname	= 'R';
	prpsinfo.pr_zomb	= 0;

	strcpy(prpsinfo.pr_fname, "vmlinux");
	strncpy(prpsinfo.pr_psargs, saved_command_line, ELF_PRARGSZ);

	nhdr->p_filesz	+= notesize(&notes[1]);
	bufp = storenote(&notes[1], bufp);

	/* set up the task structure */
	notes[2].name	= CORE_STR;
	notes[2].type	= NT_TASKSTRUCT;
	notes[2].datasz	= sizeof(struct task_struct);
	notes[2].data	= current;

	nhdr->p_filesz	+= notesize(&notes[2]);
	bufp = storenote(&notes[2], bufp);

} /* end elf_kcore_store_hdr() */
```

(1)

Build the ELF file header. In particular:

```c
elf->e_type = ET_CORE;  // the ELF file type is ET_CORE (a core file)
elf->e_phnum = nphdr;   // number of entries in the program header table
```

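(2)

Build the PT_NOTE program header. All of its fields start at 0: p_offset is set once all the PT_LOAD headers have been written (it points just past them), and p_filesz accumulates the note sizes in step (4).

```c
nhdr = (struct elf_phdr *) bufp;
bufp += sizeof(struct elf_phdr);
offset += sizeof(struct elf_phdr);
nhdr->p_type	= PT_NOTE;
nhdr->p_offset	= 0;
nhdr->p_vaddr	= 0;
nhdr->p_paddr	= 0;
nhdr->p_filesz	= 0;
nhdr->p_memsz	= 0;
nhdr->p_flags	= 0;
nhdr->p_align	= 0;
```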

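(3)

Set up one PT_LOAD program header for every entry of kclist_head:

```c
/* setup ELF PT_LOAD program header for every area */
list_for_each_entry(m, &kclist_head, list) {
	phdr = (struct elf_phdr *) bufp;
	bufp += sizeof(struct elf_phdr);
	offset += sizeof(struct elf_phdr);

	phdr->p_type	= PT_LOAD;
	phdr->p_flags	= PF_R|PF_W|PF_X;
	phdr->p_offset	= kc_vaddr_to_offset(m->addr) + dataoff;
	phdr->p_vaddr	= (size_t)m->addr;
	phdr->p_paddr	= 0;
	phdr->p_filesz	= phdr->p_memsz	= m->size;
	phdr->p_align	= PAGE_SIZE;
}
```

PF_R, PF_W, and PF_X mark the segment as readable, writable, and executable, and the file size equals the memory size (p_filesz = p_memsz = m->size). The file offset is p_offset = kc_vaddr_to_offset(m->addr) + dataoff, so a segment's position in the file is determined by its virtual address, shifted past the dataoff (elf_buflen) bytes of header data at the start of the file.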

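(4)

Add the three notes; the size of each one is added to the PT_NOTE header's p_filesz:

- set up the process status: add an NT_PRSTATUS note (a zero-filled struct elf_prstatus)
- set up the process info: add an NT_PRPSINFO note (state 'R', pr_fname "vmlinux", pr_psargs taken from the kernel command line)
- set up the task structure: add an NT_TASKSTRUCT note containing the task_struct of current, i.e. the task reading /proc/kcore

```c
// include/uapi/linux/elf.h
#define NT_PRSTATUS   1
#define NT_PRFPREG    2
#define NT_PRPSINFO   3
#define NT_TASKSTRUCT 4
```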

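2.3 Summary

Through read_kcore(), a reader of /proc/kcore gets the memory of the running kernel presented as an ELF core file: the ELF header, a PT_NOTE segment, and one PT_LOAD segment per kclist_head entry. Saving what is read gives a snapshot of kernel memory at the time of reading (the read is not atomic, so memory can change while it is being read). Because /proc/kcore is an image of the live kernel's memory, it can be used to dump memory and to debug the running kernel.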

Conclusion

This concludes the source walkthrough of /proc/kcore; it involves a fair amount of code.

References

https://blog.csdn.net/pwl999/article/details/118418242

https://blog.csdn.net/dog250/article/details/5303663

https://blog.csdn.net/weixin_45030965/article/details/126854838

https://blog.csdn.net/tiantao2012/article/details/84842029