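Installing and Deploying a Highly Available Kubernetes Cluster

Contents

Master High-Availability Deployment Architecture

Master Load Balancing

Creating the CA Root Certificate

Installing and Deploying a Highly Available etcd Cluster

Installing etcd

Creating the etcd Certificates

Configuring etcd

Starting the etcd Cluster

Deploying the Highly Available Kubernetes Master Cluster

Downloading the Kubernetes Binaries

Deploying the kube-apiserver Service

Configuring Certificates

Configuring the systemd Unit File

Configuring the kube-apiserver Configuration File

Starting the kube-apiserver Service

Creating the Client Certificate

Creating the kubeconfig File for Connecting to kube-apiserver

Deploying the kube-controller-manager Service

Configuring the systemd Unit File

Configuring the kube-controller-manager Configuration File

Starting the kube-controller-manager Service

Deploying the kube-scheduler Service

Configuring the systemd Unit File

Configuring the kube-scheduler Configuration File

Starting the kube-scheduler Service

HAProxy+Keepalived

Deploying HAProxy

Editing the Configuration File

Deploying Keepalived

Editing the Configuration File

Deploying Node Services

Deploying the kubelet Service

Configuring the systemd Unit File

Configuring the kubelet Configuration File

Starting the kubelet Service

Deploying the kube-proxy Service

Configuring the systemd Unit File

Configuring the kube-proxy Configuration File

Starting the kube-proxy Service

Installing the Calico CNI Network Plugin

Verifying Node Information from the Master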

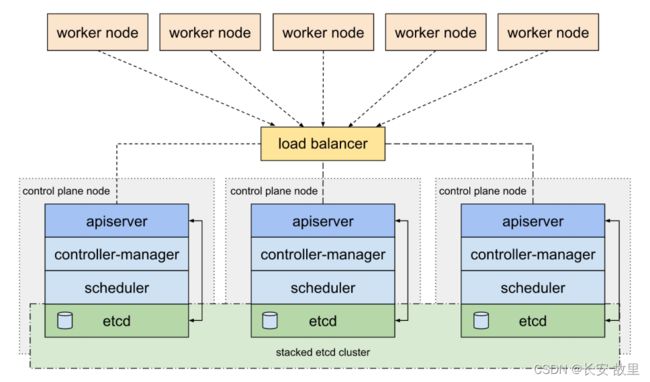

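Master High-Availability Deployment Architecture

A highly available Kubernetes cluster has to cover two layers:

- High availability of the etcd data store

- High availability of the Kubernetes Master components

In a production environment, make the Master highly available and enable secure access. At a minimum:

- Deploy the Master's kube-apiserver, kube-controller-manager, and kube-scheduler services as multiple instances on at least 3 nodes

- Enable CA-based HTTPS security on the Master

- Deploy etcd in cluster mode on at least 3 nodes

- Enable CA-based HTTPS security on the etcd cluster

- Enable the RBAC authorization mode on the Master

The figure above shows the Master high-availability deployment architecture.

Place a load balancer in front of the 3 Master nodes to give clients a single access endpoint. The load balancer can be hardware or software. There are many software load balancers to choose from.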
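The "at least 3 nodes" requirement for etcd follows from majority quorum: etcd uses Raft, so a cluster of n members needs n/2+1 (integer division) members alive to accept writes. The sketch below only does that arithmetic; the function names `quorum` and `tolerance` are illustrative and not part of any etcd tooling.

```shell
#!/bin/bash
# Majority quorum for an n-member etcd (Raft) cluster, and how many
# member failures the cluster can survive while keeping that quorum.
quorum()    { echo $(( $1 / 2 + 1 )); }
tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(tolerance "$n")"
done
```

A 3-member cluster tolerates one failure, and a 4th member adds no extra tolerance, which is why odd cluster sizes are the usual choice.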

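Master Load Balancing

Provide load balancing for the API Server:

- All nodes communicate with the API Server through the load balancer

- Load balancer options

  - Free: nginx, haproxy

  - Commercial: F5, A10

  - Cloud load balancers

- High availability of the load balancer

  - As the entry point, the load balancer itself must be highly available

  - Keepalived can be paired with it

  - Alternatively, use the Floating IP provided by a private cloud

The rest of this document uses HAProxy+Keepalived as the example.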

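Creating the CA Root Certificate

Enabling CA-based security for etcd and the Kubernetes services requires CA certificates. If your organization runs a central certificate authority, use the certificates it issues. Otherwise, issue a self-signed CA certificate to complete the security configuration.

The certificates for both etcd and Kubernetes are signed by the CA root certificate. Using the same CA root certificate for Kubernetes and etcd is recommended.

Tools such as openssl, easyrsa, and cfssl can create the certificates. Using openssl as the example, the following commands create the CA root certificate, consisting of the private key file ca.key and the certificate file ca.crt: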

# openssl genrsa -out ca.key 2048

# openssl req -x509 -new -nodes -key ca.key -subj "/CN=192.168.18.3" -days 36500 -out ca.crt

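-subj: the "/CN" value is the Master's hostname or IP address

-days: the certificate's validity period, in days

Save the generated ca.key and ca.crt files in the /etc/kubernetes/pki directory.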

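Installing and Deploying a Highly Available etcd Cluster

etcd is the primary datastore for Kubernetes and must be installed and started before any of the Kubernetes services.

Installing etcd

# yum install etcd -y

Creating the etcd Certificates

First create an x509 v3 configuration file, etcd_ssl.cnf, whose subjectAltName parameter (alt_names) lists the IP addresses of all etcd hosts, for example: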

# etcd_ssl.cnf

[ req ]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[ req_distinguished_name ]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[ alt_names ]

IP.1 = 192.168.18.3

IP.2 = 192.168.18.4

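IP.3 = 192.168.18.5

Then use openssl to create the etcd server certificate, signed by the CA root certificate. It consists of the etcd_server.key and etcd_server.crt files. Save both in the /etc/etcd/pki directory: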

# openssl genrsa -out etcd_server.key 2048

# openssl req -new -key etcd_server.key -config etcd_ssl.cnf -subj "/CN=etcd-server" -out etcd_server.csr

# openssl x509 -req -in etcd_server.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile etcd_ssl.cnf -out etcd_server.crt

Next create the client certificate, consisting of the etcd_client.key and etcd_client.crt files. Save these in /etc/etcd/pki as well. kube-apiserver uses them later to connect to etcd:

# openssl genrsa -out etcd_client.key 2048

# openssl req -new -key etcd_client.key -config etcd_ssl.cnf -subj "/CN=etcd-client" -out etcd_client.csr

# openssl x509 -req -in etcd_client.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile etcd_ssl.cnf -out etcd_client.crt

Configuring etcd

etcd can be configured through startup flags, environment variables, or a configuration file. The usual approach is to edit /etc/etcd/etcd.conf.

For example, with etcd installed on hosts 192.168.18.3, 192.168.18.4, and 192.168.18.5, the /etc/etcd/etcd.conf file on each node looks like this:

# /etc/etcd/etcd.conf - node 1

ETCD_NAME=etcd1

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://192.168.18.3:2379

ETCD_ADVERTISE_CLIENT_URLS=https://192.168.18.3:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://192.168.18.3:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.18.3:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.18.3:2380,etcd2=https://192.168.18.4:2380,etcd3=https://192.168.18.5:2380"

ETCD_INITIAL_CLUSTER_STATE=new

# /etc/etcd/etcd.conf - node 2

ETCD_NAME=etcd2

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://192.168.18.4:2379

ETCD_ADVERTISE_CLIENT_URLS=https://192.168.18.4:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://192.168.18.4:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.18.4:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.18.3:2380,etcd2=https://192.168.18.4:2380,etcd3=https://192.168.18.5:2380"

ETCD_INITIAL_CLUSTER_STATE=new

# /etc/etcd/etcd.conf - node 3

ETCD_NAME=etcd3

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://192.168.18.5:2379

ETCD_ADVERTISE_CLIENT_URLS=https://192.168.18.5:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://192.168.18.5:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.18.5:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.18.3:2380,etcd2=https://192.168.18.4:2380,etcd3=https://192.168.18.5:2380"

ETCD_INITIAL_CLUSTER_STATE=new

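Parameter notes:

etcd service parameters

- ETCD_NAME: the etcd node name, different on every node

- ETCD_DATA_DIR: the etcd data directory

- ETCD_LISTEN_CLIENT_URLS and ETCD_ADVERTISE_CLIENT_URLS: the URLs on which etcd listens for client traffic, and the URLs it advertises to clients

- ETCD_LISTEN_PEER_URLS and ETCD_INITIAL_ADVERTISE_PEER_URLS: the URLs on which etcd listens for traffic from the other cluster members, and the URLs it advertises to them

- ETCD_INITIAL_CLUSTER_TOKEN: the etcd cluster name

- ETCD_INITIAL_CLUSTER: the endpoint list of all cluster members

- ETCD_INITIAL_CLUSTER_STATE: the initial cluster state; new for a new cluster, existing when the cluster already exists

Certificate parameters

- ETCD_CERT_FILE: full path to the etcd server certificate (.crt) file

- ETCD_KEY_FILE: full path to the etcd server private key (.key) file

- ETCD_TRUSTED_CA_FILE: full path to the CA root certificate file

- ETCD_CLIENT_CERT_AUTH: whether to enable client certificate authentication

- ETCD_PEER_CERT_FILE: full path to the certificate (.crt) file that cluster members use to authenticate to each other

- ETCD_PEER_KEY_FILE: full path to the private key (.key) file that cluster members use to authenticate to each other

- ETCD_PEER_TRUSTED_CA_FILE: full path to the CA root certificate file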
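The three etcd.conf files differ only in the node name and the node's own IP address. One way to avoid copy-and-paste mistakes is to render them from a single template. The sketch below is an illustration under assumptions: it writes to a scratch directory created with mktemp rather than to /etc/etcd, and the variable names (OUT_DIR, NAMES, IPS, CLUSTER) are made up for this example.

```shell
#!/bin/bash
# Sketch: render one etcd.conf per node from a shared template.
set -eu

OUT_DIR=$(mktemp -d)   # assumption: scratch directory, copy the results to each node yourself
NAMES=(etcd1 etcd2 etcd3)
IPS=(192.168.18.3 192.168.18.4 192.168.18.5)

# Build the ETCD_INITIAL_CLUSTER value, which is identical on every node.
CLUSTER=""
for i in "${!NAMES[@]}"; do
    CLUSTER+="${CLUSTER:+,}${NAMES[$i]}=https://${IPS[$i]}:2380"
done

for i in "${!NAMES[@]}"; do
    name=${NAMES[$i]}
    ip=${IPS[$i]}
    cat > "$OUT_DIR/$name.conf" <<EOF
ETCD_NAME=$name
ETCD_DATA_DIR=/etc/etcd/data
ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_CLIENT_CERT_AUTH=true
ETCD_LISTEN_CLIENT_URLS=https://$ip:2379
ETCD_ADVERTISE_CLIENT_URLS=https://$ip:2379
ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_LISTEN_PEER_URLS=https://$ip:2380
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://$ip:2380
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
ETCD_INITIAL_CLUSTER="$CLUSTER"
ETCD_INITIAL_CLUSTER_STATE=new
EOF
    echo "wrote $OUT_DIR/$name.conf"
done
```

Each generated file would then be copied to /etc/etcd/etcd.conf on the matching host.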

Starting the etcd Cluster

# Enable etcd at boot

# systemctl enable etcd

# Restart etcd

# systemctl restart etcd

# Verify the cluster

# etcdctl --cacert=/etc/kubernetes/pki/ca.crt --cert=/etc/etcd/pki/etcd_client.crt --key=/etc/etcd/pki/etcd_client.key --endpoints=https://192.168.18.3:2379,https://192.168.18.4:2379,https://192.168.18.5:2379 endpoint health

# If every node reports healthy, the cluster is working

Deploying the Highly Available Kubernetes Master Cluster

Downloading the Kubernetes Binaries

https://kubernetes.io/releases/

kubernetes-server-linux-amd64\kubernetes\server\bin

├── apiextensions-apiserver

├── kubeadm

├── kube-aggregator

├── kube-apiserver

├── kube-apiserver.docker_tag

├── kube-apiserver.tar

├── kube-controller-manager

├── kube-controller-manager.docker_tag

├── kube-controller-manager.tar

├── kubectl

├── kubectl-convert

├── kubelet

├── kube-log-runner

├── kube-proxy

├── kube-proxy.docker_tag

├── kube-proxy.tar

├── kube-scheduler

├── kube-scheduler.docker_tag

├── kube-scheduler.tar

└── mounter

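Services to install on the Master

- etcd

- kube-apiserver

- kube-controller-manager

- kube-scheduler

Services to install on each worker Node

- docker

- kubelet

- kube-proxy

Installation: copy the binaries for these services from the extracted archive into /usr/bin/, and create a systemd unit file for each service under /usr/lib/systemd/system.

Deploying the kube-apiserver Service

Configuring Certificates

kube-apiserver needs certificates signed by the CA. Prepare a master_ssl.cnf file for generating an x509 v3 certificate, for example: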

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

DNS.5 = k8s-1

DNS.6 = k8s-2

DNS.7 = k8s-3

IP.1 = 169.169.0.1

IP.2 = 192.168.18.3

IP.3 = 192.168.18.4

IP.4 = 192.168.18.5

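IP.5 = 192.168.18.100

The [alt_names] section of this file lists every domain name and IP address of the Master service, including:

- DNS hostnames (k8s-1, k8s-2, and k8s-3 here)

- The Master Service virtual service names (kubernetes, kubernetes.default, and so on)

- IP addresses (the three kube-apiserver hosts and the load balancer VIP 192.168.18.100)

- The ClusterIP address of the Master Service virtual service (169.169.0.1)

Use openssl to create the kube-apiserver server certificate, signed by the CA root certificate. It consists of the apiserver.key and apiserver.crt files. Save both in the /etc/kubernetes/pki directory: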

# openssl genrsa -out apiserver.key 2048

# openssl req -new -key apiserver.key -config master_ssl.cnf -subj "/CN=192.168.18.3" -out apiserver.csr

# openssl x509 -req -in apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile master_ssl.cnf -out apiserver.crt
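Before deploying a certificate it is worth confirming that it chains to the CA and that the expected subjectAltName entries ended up in it. The sketch below runs the same openssl steps end to end in a scratch directory with a throwaway CA, then checks the result with `openssl verify` and `openssl x509 -text`. The names demo-ca, demo-server, san.cnf, and the shortened alt_names list are assumptions for this illustration. Against the real files you would run only the last two openssl commands with ca.crt and apiserver.crt.

```shell
#!/bin/bash
# Sketch: issue a throwaway CA and a server certificate with SANs,
# then verify the chain and inspect the SAN list.
set -eu

WORK=$(mktemp -d)   # assumption: scratch directory, nothing is written to /etc
cd "$WORK"

cat > san.cnf <<'EOF'
[ req ]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[ req_distinguished_name ]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[ alt_names ]
DNS.1 = kubernetes.default
IP.1 = 169.169.0.1
IP.2 = 192.168.18.100
EOF

# Throwaway CA (valid for one day only).
openssl genrsa -out ca.key 2048 2>/dev/null
openssl req -x509 -new -nodes -key ca.key -subj "/CN=demo-ca" -days 1 -out ca.crt

# Server key, signing request, and CA-signed certificate carrying the SANs.
openssl genrsa -out server.key 2048 2>/dev/null
openssl req -new -key server.key -config san.cnf -subj "/CN=demo-server" -out server.csr
openssl x509 -req -in server.csr -CA ca.crt -CAkey ca.key -CAcreateserial \
    -days 1 -extensions v3_req -extfile san.cnf -out server.crt 2>/dev/null

# Check 1: the certificate chains to the CA.
VERIFY=$(openssl verify -CAfile ca.crt server.crt)
# Check 2: the SAN entries are present in the issued certificate.
SANS=$(openssl x509 -in server.crt -noout -text | grep -A1 'Subject Alternative Name')

echo "$VERIFY"
echo "$SANS"
```

If a SAN is missing from the output, the usual cause is omitting `-extensions v3_req -extfile` in the signing step, because extensions in the request are not copied into the certificate by default.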

Configuring the systemd Unit File

Create the systemd unit file /usr/lib/systemd/system/kube-apiserver.service for the kube-apiserver service with the following content:

# /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/apiserver

ExecStart=/usr/bin/kube-apiserver $KUBE_API_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Configuring the kube-apiserver Configuration File

The configuration file /etc/kubernetes/apiserver holds all kube-apiserver startup flags, including the ones for CA-based security:

# /etc/kubernetes/apiserver

KUBE_API_ARGS="--insecure-port=0 \

--secure-port=6443 \

--tls-cert-file=/etc/kubernetes/pki/apiserver.crt \

--tls-private-key-file=/etc/kubernetes/pki/apiserver.key \

--client-ca-file=/etc/kubernetes/pki/ca.crt \

--apiserver-count=3 --endpoint-reconciler-type=master-count \

--etcd-servers=https://192.168.18.3:2379,https://192.168.18.4:2379,https://192.168.18.5:2379 \

--etcd-cafile=/etc/kubernetes/pki/ca.crt \

--etcd-certfile=/etc/etcd/pki/etcd_client.crt \

--etcd-keyfile=/etc/etcd/pki/etcd_client.key \

--service-cluster-ip-range=169.169.0.0/16 \

--service-node-port-range=30000-32767 \

--allow-privileged=true \

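--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Parameter notes:

- secure-port: the HTTPS port; default 6443

- insecure-port: the HTTP port; default 8080, and 0 disables HTTP access. This flag was removed in Kubernetes 1.24, so leave it out on newer releases

- tls-cert-file: full path to the server certificate file

- tls-private-key-file: full path to the server private key file

- client-ca-file: full path to the CA root certificate

- apiserver-count: the number of API Server instances, 3 here; set it together with --endpoint-reconciler-type=master-count

- etcd-servers: the list of etcd URLs; note the https scheme

- etcd-cafile: full path to the CA root certificate used by etcd

- etcd-certfile: full path to the etcd client certificate file

- etcd-keyfile: full path to the etcd client private key file

- service-cluster-ip-range: the Service virtual IP address range, in CIDR notation

- service-node-port-range: the range of host ports available to Services, 30000-32767 here

- allow-privileged: whether containers may run in privileged mode

- logtostderr: whether to write logs to stderr

- log-dir: the log output directory

- v: the log level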
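The address 169.169.0.1 in the certificate's alt_names is not arbitrary: kube-apiserver gives the built-in kubernetes Service the first usable address of --service-cluster-ip-range. The sketch below derives that address from a CIDR so the two settings can be kept in step. The helper names (ip_to_int, int_to_ip, first_service_ip) are made up for this example, and it handles IPv4 only.

```shell
#!/bin/bash
# Sketch: compute the first usable address of an IPv4 CIDR range.

# Convert dotted-quad notation to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Network address of the CIDR plus one.
first_service_ip() {
    local net=${1%/*}
    local bits=${1#*/}
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    int_to_ip $(( ($(ip_to_int "$net") & mask) + 1 ))
}

first_service_ip 169.169.0.0/16
```

If the range is changed later, the apiserver certificate has to be reissued with the new first address in alt_names.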

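Starting the kube-apiserver Service

# Enable kube-apiserver at boot

# systemctl enable kube-apiserver

# Start kube-apiserver

# systemctl start kube-apiserver

Creating the Client Certificate

kube-controller-manager, kube-scheduler, kubelet, and kube-proxy connect to kube-apiserver as clients, so they need a client certificate signed by the CA.

Use openssl to create the client certificate and private key: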

# openssl genrsa -out client.key 2048

# openssl req -new -key client.key -subj "/CN=admin" -out client.csr

# openssl x509 -req -in client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out client.crt -days 36500

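Save the generated client.key and client.crt files in the /etc/kubernetes/pki directory.

Creating the kubeconfig File for Connecting to kube-apiserver

kube-controller-manager, kube-scheduler, kubelet, and kube-proxy connect to kube-apiserver through a kubeconfig file, which mainly sets the kube-apiserver URL and the required certificates. For example: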

# /etc/kubernetes/kubeconfig
apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: https://192.168.18.100:9443
    certificate-authority: /etc/kubernetes/pki/ca.crt
users:
- name: admin
  user:
    client-certificate: /etc/kubernetes/pki/client.crt
    client-key: /etc/kubernetes/pki/client.key
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default

Parameter notes:

- server: the VIP address and port served by the load balancer (HAProxy)

- client-certificate: full path to the client certificate file (client.crt)

- client-key: full path to the client private key file (client.key)

- certificate-authority: full path to the CA root certificate (ca.crt)

- user: the user name for connecting to the API Server; keep it the same as the "/CN" name in the client certificate

Deploying the kube-controller-manager Service

Configuring the systemd Unit File

# /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/controller-manager

ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Configuring the kube-controller-manager Configuration File

# /etc/kubernetes/controller-manager

KUBE_CONTROLLER_MANAGER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--leader-elect=true \

--service-cluster-ip-range=169.169.0.0/16 \

--service-account-private-key-file=/etc/kubernetes/pki/apiserver.key \

--root-ca-file=/etc/kubernetes/pki/ca.crt \

--log-dir=/var/log/kubernetes --logtostderr=false --v=0"

Parameter notes:

- kubeconfig: the connection settings for the API Server

- leader-elect: enables leader election; set it to true in a three-node environment

- service-account-private-key-file: full path to the private key used to sign ServiceAccount tokens

- root-ca-file: full path to the CA root certificate

- service-cluster-ip-range: the Service virtual IP address range

Starting the kube-controller-manager Service

# Enable kube-controller-manager at boot

# systemctl enable kube-controller-manager

# Start kube-controller-manager

# systemctl start kube-controller-manager

Deploying the kube-scheduler Service

Configuring the systemd Unit File

# /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/scheduler

ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Configuring the kube-scheduler Configuration File

# /etc/kubernetes/scheduler

KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--leader-elect=true \

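--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Parameter notes:

- kubeconfig: the connection settings for the API Server

- leader-elect: enables leader election; set it to true in a three-node environment

Starting the kube-scheduler Service

# Enable kube-scheduler at boot

# systemctl enable kube-scheduler

# Start kube-scheduler

# systemctl start kube-scheduler

HAProxy+Keepalived

Because there are three kube-apiserver instances, HAProxy+Keepalived provides the load balancing and exposes a VIP address as the single Master entry point for clients.

Run at least two HAProxy+Keepalived instances to avoid a single point of failure. HAProxy forwards client requests to the 3 backend kube-apiserver instances, and Keepalived keeps the VIP highly available.

Deploying HAProxy

# Install haproxy

# yum install haproxy -y

Editing the Configuration File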

# /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4096

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend kube-apiserver

mode tcp

bind *:9443

option tcplog

default_backend kube-apiserver

listen stats

mode http

bind *:8888

stats auth admin:password

stats refresh 5s

stats realm HAProxy\ Statistics

stats uri /stats

log 127.0.0.1 local3 err

backend kube-apiserver

mode tcp

balance roundrobin

server k8s-master1 192.168.18.3:6443 check

server k8s-master2 192.168.18.4:6443 check

server k8s-master3 192.168.18.5:6443 check

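Main parameters:

- frontend: the protocol and port HAProxy listens on

- backend: the addresses to proxy to, here the kube-apiserver addresses; mode sets the protocol, and balance sets the load-balancing policy, for example roundrobin for round-robin

- listen stats: the status monitoring service; bind sets the listening port (8888), stats auth sets the access account, and stats uri sets the URL path, for example /stats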
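The check keyword on each backend server line makes HAProxy probe that server, which in tcp mode amounts to a TCP connection attempt. When a backend shows as down on the stats page, a similar probe can be reproduced by hand. The sketch below uses bash's built-in /dev/tcp redirection with a 2-second limit. The function name check_port is an assumption for this illustration, and it tests reachability only, not TLS or API health.

```shell
#!/bin/bash
# check_port HOST PORT: succeed if a TCP connection opens within 2 seconds.
check_port() {
    timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Probe the three kube-apiserver backends from the HAProxy configuration.
for backend in 192.168.18.3:6443 192.168.18.4:6443 192.168.18.5:6443; do
    if check_port "${backend%:*}" "${backend#*:}"; then
        echo "$backend reachable"
    else
        echo "$backend unreachable"
    fi
done
```

Run it on the HAProxy host itself, since that is the network path the health checks take.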

Deploying Keepalived

# Install keepalived

# yum install keepalived -y

Editing the Configuration File

The two Keepalived instances use different configurations.

keepalived 1

# /etc/keepalived/keepalived.conf - master 1

! Configuration File for keepalived

global_defs {

router_id LVS_1

}

vrrp_script checkhaproxy

{

script "/usr/bin/check-haproxy.sh"

interval 2

weight -30

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

virtual_ipaddress {

192.168.18.100/24 dev ens33

}

authentication {

auth_type PASS

auth_pass password

}

track_script {

checkhaproxy

}

}

keepalived 2

# /etc/keepalived/keepalived.conf - master 2

! Configuration File for keepalived

global_defs {

router_id LVS_2

}

vrrp_script checkhaproxy

{

script "/usr/bin/check-haproxy.sh"

interval 2

weight -30

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 100

advert_int 1

virtual_ipaddress {

192.168.18.100/24 dev ens33

}

authentication {

auth_type PASS

auth_pass password

}

track_script {

checkhaproxy

}

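}

Main parameters:

- vrrp_instance VI_1: the name of the Keepalived VRRP virtual router instance

- state: MASTER on one instance, BACKUP on all the others

- interface: the network interface that will carry the VIP address

- virtual_router_id: the virtual router ID; any number from 1 to 255, identical on all instances in the group

- priority: the priority

- virtual_ipaddress: the VIP address

- authentication: the authentication settings for the Keepalived service

- track_script: the HAProxy health check script

Place the HAProxy health check script in the /usr/bin directory with the following content: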

#!/bin/bash
# check-haproxy.sh: exit 0 if something is using port 9443, otherwise exit 1
count=$(netstat -apn | grep -c ':9443')
if [ "$count" -gt 0 ]; then
    exit 0
else
    exit 1
fi

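Points to note across the two Keepalived configuration files

- state in vrrp_instance is the role in the cluster: exactly one instance is MASTER, and all the others are BACKUP

- The vrrp_instance name VI_1 must be the same on both so that they belong to the same virtual router group; when the MASTER becomes unavailable, a new MASTER is elected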
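The failover itself is driven by the weight -30 setting in vrrp_script. With a negative weight, a failing check lowers that instance's priority by the weight, so a peer still at priority 100 takes over the VIP. With a positive weight, a passing check raises the priority instead. The sketch below only reproduces that arithmetic. The function name effective_priority is made up for this example and is not a Keepalived command.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT SCRIPT_EXIT_CODE
# Mirrors how a tracked vrrp_script adjusts the VRRP priority.
effective_priority() {
    local base=$1 weight=$2 rc=$3
    if [ "$weight" -lt 0 ] && [ "$rc" -ne 0 ]; then
        echo $(( base + weight ))   # failed check, negative weight: priority drops
    elif [ "$weight" -gt 0 ] && [ "$rc" -eq 0 ]; then
        echo $(( base + weight ))   # passing check, positive weight: priority rises
    else
        echo "$base"                # otherwise the configured priority applies
    fi
}

echo "HAProxy up:   $(effective_priority 100 -30 0)"
echo "HAProxy down: $(effective_priority 100 -30 1)"
```

The weight must be large enough to push the failing instance below its peers, which -30 does here because both instances start at 100.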

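Deploying Node Services

Each Node runs docker, kubelet, and kube-proxy. After the Node joins the Kubernetes cluster, install management components such as the CNI network plugin and the DNS add-on. For installing docker, see the official documentation.

Deploying the kubelet Service

Configuring the systemd Unit File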

# /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/kubernetes/kubernetes

After=docker.target

[Service]

EnvironmentFile=/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet $KUBELET_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Configuring the kubelet Configuration File

# /etc/kubernetes/kubelet

KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --config=/etc/kubernetes/kubelet.config \

--hostname-override=192.168.18.3 \

--network-plugin=cni \

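--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Parameter notes:

- kubeconfig: the connection settings for the API Server; it can be the same kubeconfig that kube-controller-manager uses. Copy the client certificate files from the Master host to the /etc/kubernetes/pki directory on the Node host

- config: the kubelet configuration file

- hostname-override: the name of this Node in the cluster; defaults to the hostname

- network-plugin: the network plugin type; CNI is recommended. This flag was removed in kubelet 1.24, so leave it out on newer releases

An example kubelet.config file: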

# /etc/kubernetes/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
cgroupDriver: cgroupfs
clusterDNS: ["169.169.0.100"]
clusterDomain: cluster.local
authentication:
  anonymous:
    enabled: true

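Parameter notes:

- address: the IP address the service listens on

- port: the port the service listens on; default 10250

- cgroupDriver: the cgroup driver; default cgroupfs

- clusterDNS: the IP address of the cluster DNS service

- clusterDomain: the DNS domain suffix for Services

- authentication: whether anonymous access is allowed, or whether webhook authentication is used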
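The cluster DNS service is exposed as a Service ClusterIP, so the clusterDNS address (169.169.0.100 here) should fall inside the --service-cluster-ip-range given to kube-apiserver (169.169.0.0/16). The sketch below checks that relationship. The helper names ip_to_int and ip_in_cidr are made up for this example, and it handles IPv4 only.

```shell
#!/bin/bash
# Sketch: test whether an IPv4 address lies inside a CIDR range.

# Convert dotted-quad notation to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# ip_in_cidr IP CIDR: succeed if IP shares the network prefix of CIDR.
ip_in_cidr() {
    local ip=$1
    local net=${2%/*}
    local bits=${2#*/}
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

if ip_in_cidr 169.169.0.100 169.169.0.0/16; then
    echo "clusterDNS is inside the service range"
else
    echo "clusterDNS is outside the service range"
fi
```

The same address has to be used as the ClusterIP of the DNS Service when the DNS add-on is deployed.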

Starting the kubelet Service

# Enable kubelet at boot

# systemctl enable kubelet

# Start kubelet

# systemctl start kubelet

Deploying the kube-proxy Service

Configuring the systemd Unit File

# /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy $KUBE_PROXY_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Configuring the kube-proxy Configuration File

# /etc/kubernetes/proxy

KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--hostname-override=192.168.18.3 \

--proxy-mode=iptables \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

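Parameter notes:

- kubeconfig: the connection settings for the API Server; it can be the same kubeconfig that kube-controller-manager uses. Copy the client certificate files from the Master host to the /etc/kubernetes/pki directory on the Node host

- hostname-override: the name of this Node in the cluster; defaults to the hostname

- proxy-mode: the proxy mode

Starting the kube-proxy Service

# Enable kube-proxy at boot

# systemctl enable kube-proxy

# Start kube-proxy

# systemctl start kube-proxy

Installing the Calico CNI Network Plugin

# kubectl apply -f "https://docs.projectcalico.org/manifests/calico.yaml"

Verifying Node Information from the Master

Once kubelet and kube-proxy are running on a Node, the Node registers itself with the Master automatically. Use kubectl to list the nodes in the Kubernetes cluster.

Because the Master has HTTPS authentication enabled, kubectl must also connect with the client certificate. Point it at the kubeconfig file that references the certificates:

# kubectl --kubeconfig=/etc/kubernetes/kubeconfig get nodes

The cluster is not fully functional yet. A DNS service still has to be deployed, and CoreDNS is the recommended choice.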