Setting up a Kafka cluster with Docker on macOS

I. Environment

| Component | Version / image |
|---|---|
| macOS | macOS 12.3 |
| Docker | 4.2.0 |
| Kafka | wurstmeister/kafka |
| ZooKeeper | zookeeper:latest |

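II. Preliminary cleanup

If you have set up Kafka with Docker before, leftover state can interfere with the steps below, so remove the old artifacts first. If you have not, skip this section.

- Remove all dangling volumes (volumes that no container references any more)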

docker volume rm $(docker volume ls -qf dangling=true)

- Remove all dangling images (images without a tag)

docker rmi $(docker images | grep "^<none>" | awk '{print $3}')

- Remove all exited containers

docker ps -a | grep Exit | cut -d ' ' -f 1 | xargs docker rm

III. Writing the Docker Compose files

- Cluster plan

| Hostname | IP address | Port mapping (host:container) | Listener |
|---|---|---|---|
| zook1 | 172.20.10.11 | 2183:2181 | |
| zook2 | 172.20.10.12 | 2184:2181 | |
| zook3 | 172.20.10.13 | 2185:2181 | |
| kafka1 | 172.20.10.14 | internal 9093:9093, external 9193:9193 | kafka1 |
| kafka2 | 172.20.10.15 | internal 9094:9094, external 9194:9194 | kafka2 |
| kafka3 | 172.20.10.16 | internal 9095:9095, external 9195:9195 | kafka3 |
| Host (MacBook Pro) | 172.20.10.2 | | |
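Every static IP address and every host-side port in a plan like this has to be unique, and a copy-paste slip is easy to miss by eye. The following is a minimal sketch of such a check, using the addresses and ports from the Compose files in this article. The class and method names are hypothetical and not part of Docker or Kafka.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper: reports values that occur more than once in a cluster plan.
final class ClusterPlanCheck {

    // Returns every value that appears at least twice, in first-seen order.
    static List<String> duplicates(List<String> values) {
        Set<String> seen = new HashSet<>();
        List<String> repeated = new ArrayList<>();
        for (String value : values) {
            if (!seen.add(value) && !repeated.contains(value)) {
                repeated.add(value);
            }
        }
        return repeated;
    }

    public static void main(String[] args) {
        // Static container IPs taken from the Compose files in this article.
        List<String> ips = List.of(
                "172.20.10.11", "172.20.10.12", "172.20.10.13",
                "172.20.10.14", "172.20.10.15", "172.20.10.16");
        // Host-side ports published by the ZooKeeper and Kafka containers.
        List<String> hostPorts = List.of(
                "2183", "2184", "2185",
                "9093", "9193", "9094", "9194", "9095", "9195");

        System.out.println("duplicate IPs: " + duplicates(ips));
        System.out.println("duplicate host ports: " + duplicates(hostPorts));
    }
}
```

An empty list for both checks means no two nodes in the plan compete for the same address or host port.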

- Write the Compose files

(1) zk-docker-compose.yml

version: '3.4'
services:
  zook1:
    image: zookeeper:latest
    #restart: always  # restart automatically
    hostname: zook1
    container_name: zook1  # container name, so tools such as Rancher show a meaningful name
    ports:
      - 2183:2181  # publish the container's default ZooKeeper client port on the host
    volumes:  # bind mounts: host (local machine) directory on the left, container directory on the right
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook1/data:/data"
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook1/datalog:/datalog"
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook1/logs:/logs"
    environment:
      ZOO_MY_ID: 1  # ZooKeeper node id; the Kafka broker ids in this setup reuse the same numbers
      ZOO_SERVERS: server.1=zook1:2888:3888;2181 server.2=zook2:2888:3888;2181 server.3=zook3:2888:3888;2181
    networks:
      docker-net:
        ipv4_address: 172.20.10.11

  zook2:
    image: zookeeper:latest
    #restart: always  # restart automatically
    hostname: zook2
    container_name: zook2  # container name, so tools such as Rancher show a meaningful name
    ports:
      - 2184:2181  # publish the container's default ZooKeeper client port on the host
    volumes:
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook2/data:/data"
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook2/datalog:/datalog"
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook2/logs:/logs"
    environment:
      ZOO_MY_ID: 2  # ZooKeeper node id; the Kafka broker ids in this setup reuse the same numbers
      ZOO_SERVERS: server.1=zook1:2888:3888;2181 server.2=zook2:2888:3888;2181 server.3=zook3:2888:3888;2181
    networks:
      docker-net:
        ipv4_address: 172.20.10.12

  zook3:
    image: zookeeper:latest
    #restart: always  # restart automatically
    hostname: zook3
    container_name: zook3  # container name, so tools such as Rancher show a meaningful name
    ports:
      - 2185:2181  # publish the container's default ZooKeeper client port on the host
    volumes:
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook3/data:/data"
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook3/datalog:/datalog"
      - "/Users/zhy/opt/kafka/zookeeper/volume/zkcluster/zook3/logs:/logs"
    environment:
      ZOO_MY_ID: 3  # ZooKeeper node id; the Kafka broker ids in this setup reuse the same numbers
      ZOO_SERVERS: server.1=zook1:2888:3888;2181 server.2=zook2:2888:3888;2181 server.3=zook3:2888:3888;2181
    networks:
      docker-net:
        ipv4_address: 172.20.10.13

networks:
  docker-net:  # static ipv4_address values only work if this network has a subnet containing 172.20.10.x
    name: docker-net
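Each ZOO_SERVERS entry above has the form server.N=host:2888:3888;2181, where N must match that node's ZOO_MY_ID, 2888 and 3888 are the quorum and leader-election ports, and the port after the semicolon is the client port. As a minimal sketch of how the value is assembled for any number of nodes (the class name is hypothetical, and the ports are simply the ones used in this article):

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: builds the ZOO_SERVERS value used in zk-docker-compose.yml.
final class ZooServers {

    // The i-th host (1-based) becomes "server.i=host:2888:3888;2181".
    static String build(List<String> hosts) {
        List<String> entries = new ArrayList<>();
        for (int i = 0; i < hosts.size(); i++) {
            entries.add("server." + (i + 1) + "=" + hosts.get(i) + ":2888:3888;2181");
        }
        // Entries are separated by a single space, as in the Compose file.
        return String.join(" ", entries);
    }

    public static void main(String[] args) {
        System.out.println(build(List.of("zook1", "zook2", "zook3")));
    }
}
```

All three nodes receive the same ZOO_SERVERS string; only ZOO_MY_ID differs per container.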

(2) kafka-docker-compose.yml

version: '2'
services:
  kafka1:
    image: docker.io/wurstmeister/kafka
    #restart: always  # restart automatically
    hostname: kafka1
    container_name: kafka1
    ports:
      - 9093:9093
      - 9193:9193
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_LISTENERS: INSIDE://:9093,OUTSIDE://:9193
      #KAFKA_ADVERTISED_LISTENERS=INSIDE://<container>:9092,OUTSIDE://<host>:9094
      KAFKA_ADVERTISED_LISTENERS: INSIDE://172.20.10.14:9093,OUTSIDE://localhost:9193
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE
      KAFKA_ZOOKEEPER_CONNECT: zook1:2181,zook2:2181,zook3:2181
      ALLOW_PLAINTEXT_LISTENER: 'yes'
      JMX_PORT: 9999  # expose the JMX port so the cluster can be monitored
    volumes:
      - /Users/zhy/Development/volume/kafka/kafka1/wurstmeister/kafka:/wurstmeister/kafka
      - /Users/zhy/Development/volume/kafka/kafka1/kafka:/kafka
    external_links:
      - zook1
      - zook2
      - zook3
    networks:
      docker-net:
        ipv4_address: 172.20.10.14

  kafka2:
    image: docker.io/wurstmeister/kafka
    #restart: always  # restart automatically
    hostname: kafka2
    container_name: kafka2
    ports:
      - 9094:9094
      - 9194:9194
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_LISTENERS: INSIDE://:9094,OUTSIDE://:9194
      #KAFKA_ADVERTISED_LISTENERS=INSIDE://<container>:9092,OUTSIDE://<host>:9094
      KAFKA_ADVERTISED_LISTENERS: INSIDE://172.20.10.15:9094,OUTSIDE://localhost:9194
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE
      KAFKA_ZOOKEEPER_CONNECT: zook1:2181,zook2:2181,zook3:2181
      ALLOW_PLAINTEXT_LISTENER: 'yes'
      JMX_PORT: 9999  # expose the JMX port so the cluster can be monitored
    volumes:
      - /Users/zhy/Development/volume/kafka/kafka2/wurstmeister/kafka:/wurstmeister/kafka
      - /Users/zhy/Development/volume/kafka/kafka2/kafka:/kafka
    external_links:
      - zook1
      - zook2
      - zook3
    networks:
      docker-net:
        ipv4_address: 172.20.10.15

  kafka3:
    image: docker.io/wurstmeister/kafka
    #restart: always  # restart automatically
    hostname: kafka3
    container_name: kafka3
    ports:
      - 9095:9095
      - 9195:9195
    environment:
      KAFKA_BROKER_ID: 3
      KAFKA_LISTENERS: INSIDE://:9095,OUTSIDE://:9195
      #KAFKA_ADVERTISED_LISTENERS=INSIDE://<container>:9092,OUTSIDE://<host>:9094
      KAFKA_ADVERTISED_LISTENERS: INSIDE://172.20.10.16:9095,OUTSIDE://localhost:9195
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE
      KAFKA_ZOOKEEPER_CONNECT: zook1:2181,zook2:2181,zook3:2181
      ALLOW_PLAINTEXT_LISTENER: 'yes'
      JMX_PORT: 9999  # expose the JMX port so the cluster can be monitored
    volumes:
      - /Users/zhy/Development/volume/kafka/kafka3/wurstmeister/kafka:/wurstmeister/kafka
      - /Users/zhy/Development/volume/kafka/kafka3/kafka:/kafka
    external_links:
      - zook1
      - zook2
      - zook3
    networks:
      docker-net:
        ipv4_address: 172.20.10.16

networks:
  docker-net:
    name: docker-net
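The three broker definitions follow one pattern: broker n listens on 9092+n inside the Docker network and on 9192+n for the host, and its static address is 172.20.10.(13+n). The sketch below only restates that pattern as code so the per-broker values are easy to compare against the Compose file. The class and method names are hypothetical, and the arithmetic is read off this article's values rather than from any Kafka convention.

```java
// Hypothetical helper: derives the listener settings of broker n in this article's layout.
final class BrokerListeners {

    // Value for KAFKA_LISTENERS: the ports the broker binds to inside its container.
    static String listeners(int brokerId) {
        return "INSIDE://:" + (9092 + brokerId) + ",OUTSIDE://:" + (9192 + brokerId);
    }

    // Value for KAFKA_ADVERTISED_LISTENERS: the addresses handed back to clients.
    static String advertised(int brokerId) {
        return "INSIDE://172.20.10." + (13 + brokerId) + ":" + (9092 + brokerId)
                + ",OUTSIDE://localhost:" + (9192 + brokerId);
    }

    public static void main(String[] args) {
        for (int id = 1; id <= 3; id++) {
            System.out.println("kafka" + id + " KAFKA_LISTENERS=" + listeners(id));
            System.out.println("kafka" + id + " KAFKA_ADVERTISED_LISTENERS=" + advertised(id));
        }
    }
}
```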

(3) kafka-manager-docker-compose.yml

version: '2'
services:
  kafka-manager:
    image: scjtqs/kafka-manager:latest
    restart: always
    hostname: kafka-manager
    container_name: kafka-manager
    ports:
      - 9000:9000
    external_links:  # link to containers defined outside this Compose file
      - zook1
      - zook2
      - zook3
      - kafka1
      - kafka2
      - kafka3
    environment:
      ZK_HOSTS: zook1:2181,zook2:2181,zook3:2181
      KAFKA_BROKERS: kafka1:9093,kafka2:9094,kafka3:9095
      APPLICATION_SECRET: letmein
      KM_ARGS: -Djava.net.preferIPv4Stack=true
    networks:
      docker-net:
        ipv4_address: 172.20.10.10

networks:
  docker-net:
    external:
      name: docker-net

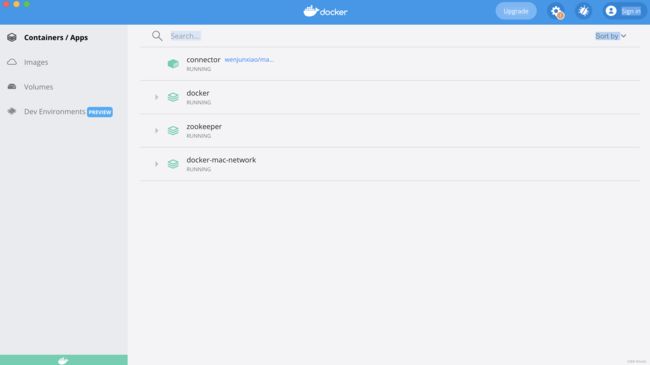

(4) From the directory that contains these files, start the Compose files in order:

docker compose -p zookeeper -f ./zk-docker-compose.yml up -d

docker compose -f ./kafka-docker-compose.yml up -d

docker compose -f ./kafka-manager-docker-compose.yml up -d
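After the three commands return, it can help to confirm that the published ports actually accept connections from the Mac host. The following is a rough sketch using only the JDK. The class and method names are hypothetical, and the endpoint list is taken from the port mappings in the Compose files above. A successful TCP connect only shows that the port is published, not that ZooKeeper or Kafka is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.List;

// Hypothetical helper: checks whether host-side ports accept TCP connections.
final class PortCheck {

    // Splits "host:port" into its parts without doing a DNS lookup.
    static InetSocketAddress parse(String endpoint) {
        int colon = endpoint.lastIndexOf(':');
        String host = endpoint.substring(0, colon);
        int port = Integer.parseInt(endpoint.substring(colon + 1));
        return InetSocketAddress.createUnresolved(host, port);
    }

    // Returns true if a TCP connection succeeds within the timeout, false on any I/O error.
    static boolean accepts(String endpoint, int timeoutMillis) {
        InetSocketAddress target = parse(endpoint);
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(target.getHostString(), target.getPort()), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Host-side ports from the Compose files: ZooKeeper, Kafka OUTSIDE listeners, kafka-manager.
        List<String> endpoints = List.of(
                "localhost:2183", "localhost:2184", "localhost:2185",
                "localhost:9193", "localhost:9194", "localhost:9195",
                "localhost:9000");
        for (String endpoint : endpoints) {
            System.out.println(endpoint + " reachable: " + accepts(endpoint, 500));
        }
    }
}
```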

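(5) Create a topic with partitions

Open a shell in any one of the Kafka containers (for example with docker exec -it kafka1 bash), then run: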

cd /opt/kafka_2.13-2.8.1/

bin/kafka-topics.sh --create --zookeeper zook1:2181 --replication-factor 2 --partitions 2 --topic partopic
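The command asks for 2 partitions with 2 replicas each, spread over the 3 brokers. To make concrete what that means, here is a deliberately simplified round-robin placement sketch. It is an illustration only and not Kafka's actual assignment logic, which among other things does not always start at the first broker. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration: simplified round-robin replica placement, not Kafka's real algorithm.
final class ReplicaPlacement {

    // Partition p gets its replicas on brokers p, p+1, ... wrapping around the broker list.
    static List<List<Integer>> assign(List<Integer> brokerIds, int partitions, int replicationFactor) {
        if (replicationFactor > brokerIds.size()) {
            // Kafka also rejects a replication factor larger than the number of available brokers.
            throw new IllegalArgumentException("replication factor exceeds broker count");
        }
        List<List<Integer>> result = new ArrayList<>();
        for (int p = 0; p < partitions; p++) {
            List<Integer> replicas = new ArrayList<>();
            for (int r = 0; r < replicationFactor; r++) {
                replicas.add(brokerIds.get((p + r) % brokerIds.size()));
            }
            result.add(replicas);
        }
        return result;
    }

    public static void main(String[] args) {
        // Same numbers as the kafka-topics.sh command: 3 brokers, 2 partitions, 2 replicas.
        System.out.println(assign(List.of(1, 2, 3), 2, 2));
    }
}
```

With these inputs the sketch places partition 0 on brokers 1 and 2 and partition 1 on brokers 2 and 3. The placement on a real cluster can differ; kafka-topics.sh --describe shows the actual one.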

IV. Integrating with Spring Boot

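Add the dependencies to pom.xml:

<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-databind</artifactId>
    <version>2.12.0</version>
</dependency>

Because spring-kafka is declared without a version, the version is chosen by Spring Boot's dependency management (assuming the project inherits it), so the Kafka dependency stays consistent with your Spring Boot version.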

Configuration file (for example application.yml):

spring:
  kafka:
    bootstrap-servers: 172.20.10.14:9093,172.20.10.15:9094,172.20.10.16:9095
    producer:
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
    consumer:
      group-id: test
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
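Spring Boot translates these settings into the plain Kafka client properties (bootstrap.servers, key.serializer, group.id, and so on). As a sketch of what that corresponds to, the snippet below builds the equivalent java.util.Properties by hand. The class and method names are hypothetical, and nothing here connects to Kafka; it only shows the key names.

```java
import java.util.Properties;

// Hypothetical helper: the raw Kafka client properties that correspond to the Spring settings.
final class ClientProps {

    static final String BROKERS = "172.20.10.14:9093,172.20.10.15:9094,172.20.10.16:9095";

    // Counterpart of spring.kafka.bootstrap-servers plus spring.kafka.producer.*
    static Properties producer() {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", BROKERS);
        props.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    // Counterpart of spring.kafka.bootstrap-servers plus spring.kafka.consumer.*
    static Properties consumer() {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", BROKERS);
        props.setProperty("group.id", "test");
        props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        return props;
    }

    public static void main(String[] args) {
        producer().forEach((key, value) -> System.out.println("producer " + key + "=" + value));
        consumer().forEach((key, value) -> System.out.println("consumer " + key + "=" + value));
    }
}
```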

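**Notes:**

(1) With this Kafka version, clients do not go through ZooKeeper: bootstrap-servers must list the Kafka brokers' own IP addresses and ports. If you point it at ZooKeeper instead, the client reports an error like:

[Producer clientId=producer-1] Bootstrap broker 172.20.10.12:2181 (id: -2 rack: null) disconnected

06-21 23:21:36.768 ERROR

(2) On macOS the host cannot reach container IP addresses directly, so you have to add a network proxy yourself. This article describes one way to configure it:

https://blog.csdn.net/tqtaylor/article/details/119799526

(3) Do not use container names in the Kafka listener addresses (in the Compose file above, the host part of KAFKA_ADVERTISED_LISTENERS). Container names resolve fine inside the Docker network, but the Mac host cannot resolve them, so a client running on the host fails to find the brokers.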
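The reason the advertised address matters is that a client only uses bootstrap-servers for its first connection; the broker then replies with the advertised address of the listener the client connected on, and the client uses that address from then on. The sketch below splits a KAFKA_ADVERTISED_LISTENERS value into listener name and address so it is easy to see what each kind of client is told. The class name is hypothetical and the parsing is simplified for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: splits "NAME://host:port,NAME://host:port" into name -> host:port.
final class AdvertisedListeners {

    static Map<String, String> parse(String value) {
        Map<String, String> byName = new LinkedHashMap<>();
        for (String entry : value.split(",")) {
            String[] parts = entry.trim().split("://", 2);
            if (parts.length != 2) {
                throw new IllegalArgumentException("expected NAME://host:port but got: " + entry);
            }
            byName.put(parts[0], parts[1]);
        }
        return byName;
    }

    public static void main(String[] args) {
        // The value configured for kafka1 in kafka-docker-compose.yml.
        Map<String, String> listeners =
                parse("INSIDE://172.20.10.14:9093,OUTSIDE://localhost:9193");
        listeners.forEach((name, address) ->
                System.out.println(name + " clients are told to connect to " + address));
    }
}
```

If the address after INSIDE:// were a container name such as kafka1, a client on the Mac host would receive that name and then fail to resolve it.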

Producer code:

package com.ziyi.controller;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

/**
 * @author zhy
 * @date 2022/6/21 21:17
 */
@RestController
@RequestMapping("/kafka")
public class TestKafkaMQController {

    // String keys and values, matching the StringSerializer settings in the configuration
    @Autowired
    private KafkaTemplate<String, String> template;

    // Sends the given message to the given topic; both arrive as request parameters
    @RequestMapping("/sendMsg")
    public String sendMsg(String topic, String message) {
        template.send(topic, message);
        return "success";
    }
}

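Consumer code:

package com.ziyi.mq.kafka.consumer;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

/**
 * @author zhy
 * @date 2022/6/21 21:16
 */
@Component // must be a Spring bean, otherwise @KafkaListener is never registered
public class KafkaConsumer {

    // Prints every record received on the "partopic" topic
    @KafkaListener(topics = {"partopic"})
    public void listen(ConsumerRecord<String, String> record) {
        System.out.println(record.topic() + ":" + record.value());
    }
}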

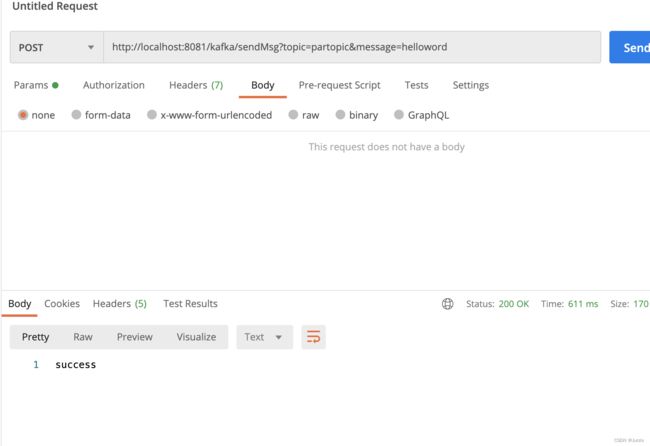

Next, test it with Postman:

http://localhost:8081/kafka/sendMsg?topic=partopic&message=helloword
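The same request can be sent without Postman. The following is a small sketch using the JDK's built-in HTTP client (Java 11 or later); the class and method names are hypothetical, and it assumes the Spring Boot application is running on port 8081 as in the URL above.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: calls the /kafka/sendMsg endpoint of the controller shown earlier.
final class SendMsgRequest {

    // Builds the request URL and URL-encodes the topic and message parameters.
    static URI buildUri(String baseUrl, String topic, String message) {
        String query = "topic=" + URLEncoder.encode(topic, StandardCharsets.UTF_8)
                + "&message=" + URLEncoder.encode(message, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/kafka/sendMsg?" + query);
    }

    public static void main(String[] args) {
        URI uri = buildUri("http://localhost:8081", "partopic", "helloword");
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        try {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode() + " " + response.body());
        } catch (IOException | InterruptedException e) {
            // Typically means the application is not running or the port differs.
            System.out.println("request failed: " + e);
        }
    }
}
```

If the message is delivered, the controller answers with its fixed string and the consumer prints the topic and message on the application's console.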