09 - Deploying a Cluster with Kubernetes v1.25.0 and Docker

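- 1.1 Kubernetes Components
- 1.2 k8s Cluster Topology
- 1.3 Environment Preparation
  - 1.3.1 Machine Environment
  - 1.3.2 Node Environment
- 1.4 Deployment Workflow for a Kubernetes v1.25.0 Cluster with kubeadm
- 1.5 Example: Deploying a Highly Available Kubernetes v1.25.0 Cluster with kubeadm
  - 1.5.1 Initial Environment Preparation on Every Node
    - 1.5.1.1 Configure SSH Key Authentication
    - 1.5.1.2 Set Hostnames and Name Resolution
    - 1.5.1.3 Disable Swap
    - 1.5.1.4 Configure Time Synchronization and Time Zone
    - 1.5.1.5 Disable the Firewall
  - 1.5.2 Install Docker on All Master and Worker Nodes
  - 1.5.3 Install kubeadm, kubelet, and kubectl on All Master and Worker Nodes
  - 1.5.4 Install and Configure cri-dockerd on All Master and Worker Nodes
    - 1.5.4.1 Install cri-dockerd
    - 1.5.4.2 Configure and Start cri-dockerd
  - 1.5.5 Initialize the Kubernetes Cluster on the First Master Node
  - 1.5.6 Generate the kubectl Authorization File on the First Master Node
  - 1.5.7 Enable kubectl Command Completion
  - 1.5.8 Configure the Network Plugin on the First Master Node
  - 1.5.9 Join Other Master Nodes to the Control Plane (Multi-Master Only)
  - 1.5.10 Join the Worker Nodes to the Cluster
  - 1.5.11 Create Pods, Start Containers, and Test Access and Network Connectivity
    - 1.5.11.1 Test Method 1
    - 1.5.11.2 Test Method 2
    - 1.5.11.3 Scaling Out and In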

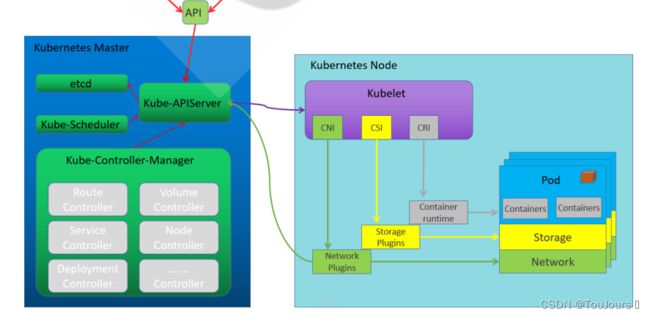

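1.1 Kubernetes Components

Kubernetes defines three special-purpose interfaces and delivers the corresponding functionality by calling them.

- Container Runtime Interface (CRI)

  For container runtimes, Kubernetes only defines the interface; any solution that conforms to the CRI standard can be used.

- Container Network Interface (CNI)

  For networking, Kubernetes only defines the interface; any solution that conforms to the CNI standard can be used.

- Container Storage Interface (CSI)

  For storage, Kubernetes only defines the interface; any solution that conforms to the CSI standard can be used. This interface is optional.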

1.2 k8s Cluster Topology

1.3 Environment Preparation

1.3.1 Machine Environment

- Each host: at least 2 GB of RAM and at least 2 CPU cores

- OS: Ubuntu 20.04.5 LTS

- Kubernetes: v1.25.0

- Container Runtime: Docker CE 20.10.12

- CRI: cri-dockerd v0.2.5.3

1.3.2 Node Environment

| IP address | Hostname | Role |
|---|---|---|
| 10.0.0.70 | kubeapi.lec.org | Fixed access address of the control plane |
| 10.0.0.70 | master1.lec.org | K8s cluster control-plane node 1 (master and etcd) |
| 10.0.0.71 | node1.lec.org | K8s cluster worker node 1 |
| 10.0.0.72 | node2.lec.org | K8s cluster worker node 2 |
| 10.0.0.73 | node3.lec.org | K8s cluster worker node 3 |

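1.4 Deployment Workflow for a Kubernetes v1.25.0 Cluster with kubeadm

- Prepare the initial environment on every node

- Install the Docker container runtime on all master and worker nodes. Docker itself runs containers through its bundled containerd, and the kubelet reaches Docker through cri-dockerd

- Install kubeadm, kubelet, and kubectl on all master and worker nodes

- Install and configure cri-dockerd on all nodes

- Run kubeadm init on the first master node and verify the master node status

- Generate the kubectl authorization file on the first master node

- Enable kubectl command completion

- Install and configure the network plugin on the first master node

- Run kubeadm join on the other master nodes to add them to the control plane (needed only with multiple masters)

- Run kubeadm join on all worker nodes to add them to the cluster

- Create pods, start containers, and test access and network connectivity

1.5 Example: Deploying a Highly Available Kubernetes v1.25.0 Cluster with kubeadm

1.5.1 Initial Environment Preparation on Every Node

1.5.1.1 Configure SSH Key Authentication

Configure SSH key authentication so that files can be copied between nodes easily later on.

# On master1

ssh-keygen

# Copy the public key to the other machines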

ssh-copy-id -i ~/.ssh/id_rsa.pub [email protected]

ssh-copy-id -i ~/.ssh/id_rsa.pub [email protected]

ssh-copy-id -i ~/.ssh/id_rsa.pub [email protected]
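The three `ssh-copy-id` calls above can also be written as a loop. The following is a minimal sketch, assuming the worker IPs from the node table in 1.3.2 and a `root` login. `SSH_USER`, `DRY_RUN`, and the function name are introduced here for illustration and are not part of the original setup. By default the sketch only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: push master1's public key to every worker node.
# Assumptions (not from the original text): the login user is root, and
# DRY_RUN=1 (the default) prints the commands instead of running them.
WORKER_IPS="10.0.0.71 10.0.0.72 10.0.0.73"   # from the node table in 1.3.2
SSH_USER="${SSH_USER:-root}"
DRY_RUN="${DRY_RUN:-1}"

copy_key_to_workers() {
  local ip
  for ip in $WORKER_IPS; do
    if [ "$DRY_RUN" = "1" ]; then
      # Preview only: show the command that would be executed.
      echo "ssh-copy-id -i ~/.ssh/id_rsa.pub ${SSH_USER}@${ip}"
    else
      ssh-copy-id -i ~/.ssh/id_rsa.pub "${SSH_USER}@${ip}"
    fi
  done
}

copy_key_to_workers
```

Setting `DRY_RUN=0` before running the script performs the actual copy and prompts for each node's password once.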

1.5.1.2 Set Hostnames and Name Resolution

# On master1

hostnamectl set-hostname master1.lec.org

cat >> /etc/hosts <<EOF

10.0.0.70 master1.lec.org

10.0.0.70 kubeapi.lec.org

10.0.0.71 node1.lec.org

10.0.0.72 node2.lec.org

10.0.0.73 node3.lec.org

EOF

# Copy the hosts file to the worker nodes

for i in {71..73};do scp /etc/hosts 10.0.0.$i:/etc/ ;done

# On node1

hostnamectl set-hostname node1.lec.org

# On node2

hostnamectl set-hostname node2.lec.org

# On node3

hostnamectl set-hostname node3.lec.org
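Because `cat >> /etc/hosts` appends unconditionally, re-running that step duplicates every entry. A small guard avoids this. The sketch below is only an illustration: the helper name `add_host_entry` and the `HOSTS_FILE` variable are hypothetical, and the demonstration writes to a temporary file, not to /etc/hosts.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless a line with exactly that text already exists.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! grep -qxF "${ip} ${name}" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demonstration on a scratch file; point HOSTS_FILE at /etc/hosts to apply it.
HOSTS_FILE="$(mktemp)"
for entry in "10.0.0.70 master1.lec.org" "10.0.0.70 kubeapi.lec.org" \
             "10.0.0.71 node1.lec.org" "10.0.0.72 node2.lec.org" \
             "10.0.0.73 node3.lec.org"; do
  # Word splitting of $entry into IP and name is intentional here.
  add_host_entry "$HOSTS_FILE" $entry
done
```

Calling the loop a second time leaves the file unchanged, which makes the step safe to repeat when a node is rebuilt.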

1.5.1.3 Disable Swap

sed -i '/swap/s/^/#/' /etc/fstab;swapoff -a
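The `sed` expression above prefixes every line that contains `swap`, including lines that are already commented, so repeated runs stack `#` characters. A more conservative variant, sketched below under the assumption of a whitespace-separated fstab, only touches uncommented lines that contain `swap` as a separate field. The function name and the sample file contents are made up for illustration.

```shell
#!/usr/bin/env bash
# comment_swap_entries FILE
# Prints FILE with uncommented swap entries commented out; all other
# lines (including already-commented ones) pass through unchanged.
comment_swap_entries() {
  sed -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$1"
}

# Demonstration on a sample file (contents are illustrative only).
SAMPLE_FSTAB="$(mktemp)"
cat > "$SAMPLE_FSTAB" <<'EOF'
UUID=1234 / ext4 defaults 0 1
/swap.img none swap sw 0 0
#/old.swap none swap sw 0 0
EOF

comment_swap_entries "$SAMPLE_FSTAB"
```

To apply it for real, write the output back to /etc/fstab and run `swapoff -a`; afterwards `swapon --show` should print nothing.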

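1.5.1.4 Configure Time Synchronization and Time Zone

# Use the chronyd service (package name: chrony) to keep time precisely synchronized across nodes

apt -y install chrony

chronyc sources -v

# Set the same time zone on every node

timedatectl set-timezone Asia/Shanghai

1.5.1.5 Disable the Firewall

# Disable the default firewall service (ufw, a front end to iptables)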

ufw disable

ufw status

root@master1:~# ufw status

Status: inactive

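# "inactive" means the firewall is off; "active" means it is running

1.5.2 Install Docker on All Master and Worker Nodes

# On Ubuntu 20.04, Docker can be installed from the built-in repository

# Install on all four machines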

apt update

apt -y install docker.io

# On master1

# Docker registry mirrors (to speed up image pulls)

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": [

"https://docker.mirrors.ustc.edu.cn",

"https://hub-mirror.c.163.com",

"https://reg-mirror.qiniu.com",

"https://registry.docker-cn.com"

],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

# Copy the mirror configuration file to the worker nodes

for i in {71..73};do scp /etc/docker/daemon.json 10.0.0.$i:/etc/docker/ ;done

# Restart Docker on all four machines

systemctl restart docker.service
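Since `daemon.json` sets `native.cgroupdriver=systemd`, it can be worth confirming after the restart that the daemon actually picked the setting up. The following is a minimal check, assuming the `docker` CLI is on the PATH; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Succeeds only if the Docker daemon reports the systemd cgroup driver.
docker_uses_systemd_cgroups() {
  [ "$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)" = "systemd" ]
}
```

Running `docker_uses_systemd_cgroups && echo ok` on each of the four machines gives a quick yes/no answer before moving on to the kubelet installation.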

1.5.3 Install kubeadm, kubelet, and kubectl on All Master and Worker Nodes

Reference for installing from the Alibaba Cloud mirror (a mirror site in China):

https://developer.aliyun.com/mirror/kubernetes

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

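# List the available versions

apt-cache madison kubeadm |head

# Install a specific version

apt install -y kubeadm=1.25.0-00 kubelet=1.25.0-00 kubectl=1.25.0-00

# Alternatively, install the latest version (skip this if the pinned version above was installed; the rest of this guide assumes v1.25.0)

apt-get install -y kubelet kubeadm kubectl

1.5.4 Install and Configure cri-dockerd on All Master and Worker Nodes

- Kubernetes versions earlier than v1.24 do not need cri-dockerd, because dockershim was still built into the kubelet

1.5.4.1 Install cri-dockerd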

curl -LO https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.5/cri-dockerd_0.2.5.3-0.ubuntu-focal_amd64.deb

dpkg -i cri-dockerd_0.2.5.3-0.ubuntu-focal_amd64.deb

1.5.4.2 Configure and Start cri-dockerd

sed -i '/^ExecStart/s#$# --pod-infra-container-image registry.aliyuncs.com/google_containers/pause:3.7#' /lib/systemd/system/cri-docker.service

# Reload systemd and start the service

systemctl daemon-reload

systemctl restart cri-docker.service

Without this setting, the kubelet log shows an error like the following:

Aug 21 01:35:17 ubuntu2004 kubelet[6791]: E0821 01:35:17.999712 6791 remote_runtime.go:212] "RunPodSandbox from runtime service failed" err="rpc error: code = Unknown desc = failed pulling image \"k8s.gcr.io/pause:3.6\": Error response from daemon: Get \"https://k8s.gcr.io/v2/\": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)"
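The `sed` command in this step appends the flag every time it runs, so running it twice leaves two copies on the `ExecStart` line. A guarded variant is sketched below. The helper name `set_pause_image` is hypothetical, and the demonstration uses a scratch file whose `ExecStart` line is a stand-in, not necessarily what the packaged cri-docker.service contains.

```shell
#!/usr/bin/env bash
# set_pause_image UNIT_FILE IMAGE
# Appends --pod-infra-container-image to the ExecStart line once; if the
# flag is already present, the file is left unchanged.
set_pause_image() {
  local unit="$1" image="$2" tmp
  if grep -q -- '--pod-infra-container-image' "$unit"; then
    return 0
  fi
  tmp="$(mktemp)"
  # Edit into a temp file, then write back in place to keep the unit's permissions.
  sed "/^ExecStart/s#\$# --pod-infra-container-image ${image}#" "$unit" > "$tmp" \
    && cat "$tmp" > "$unit"
  rm -f "$tmp"
}

# Demonstration on a scratch unit file (stand-in content).
DEMO_UNIT="$(mktemp)"
printf '[Service]\nExecStart=/usr/bin/cri-dockerd\n' > "$DEMO_UNIT"
set_pause_image "$DEMO_UNIT" registry.aliyuncs.com/google_containers/pause:3.7
set_pause_image "$DEMO_UNIT" registry.aliyuncs.com/google_containers/pause:3.7
cat "$DEMO_UNIT"
```

On a real node the first argument would be /lib/systemd/system/cri-docker.service, followed by `systemctl daemon-reload` and a restart of the service as shown above.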

1.5.5 Initialize the Kubernetes Cluster on the First Master Node

Initialization for a multi-master setup

kubeadm init \

--control-plane-endpoint="kubeapi.lec.org" \

--image-repository=registry.aliyuncs.com/google_containers \

--kubernetes-version=v1.25.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--cri-socket=unix:///var/run/cri-dockerd.sock \

--token-ttl=0 \

--upload-certs \

--ignore-preflight-errors=Swap

Initialization for a single-master setup

kubeadm init \

--image-repository=registry.aliyuncs.com/google_containers \

--kubernetes-version=v1.25.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--cri-socket=unix:///var/run/cri-dockerd.sock \

--token-ttl=0 \

--upload-certs \

--ignore-preflight-errors=Swap

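kubeadm init parameter reference

--control-plane-endpoint

# Required with multiple control-plane nodes. Specifies the fixed access address of the control plane; it can be an IP address or a DNS name.

# It is used as the API server address in the kubeconfig files of the cluster administrator and the cluster components.

# Omit it for a single control-plane deployment. Note: kubeadm does not support converting a single control-plane

# cluster created without --control-plane-endpoint into a highly available cluster.

--image-repository string

# Sets the image registry. The default is registry.k8s.io starting with kubeadm v1.25 (k8s.gcr.io in earlier releases); it may be unreachable from mainland China, so a domestic mirror can be used instead.

# For example, to use Alibaba Cloud: --image-repository=registry.aliyuncs.com/google_containers

--kubernetes-version

# Version of the Kubernetes components; it must match the version of the installed kubelet package

--service-cidr

# Address range of the Service network in CIDR notation; the default is 10.96.0.0/12.

# Usually only network plugins such as Flannel require this address to be specified manually

--pod-network-cidr

# Address range of the Pod network in CIDR notation. The Flannel network plugin typically defaults to 10.244.0.0/16,

# and the Calico network plugin defaults to 192.168.0.0/16

--cri-socket

# From v1.24 on, specifies the path of the CRI socket file. Note: each CRI uses a different socket.

# If the CRI is containerd, use --cri-socket unix:///run/containerd/containerd.sock

# If the CRI is Docker, use --cri-socket unix:///var/run/cri-dockerd.sock

# If the CRI is CRI-O, use --cri-socket unix:///var/run/crio/crio.sock

# Note: CRI-O and containerd manage containers differently, so their image files are not interchangeable.

--ignore-preflight-errors=Swap

# If swap has not been disabled on the nodes, add this option so that kubeadm ignores the resulting error

--upload-certs # Uploads the control-plane certificates to the kubeadm-certs Secret

--token-ttl

# Lifetime of the shared bootstrap token. The default is 24 hours; 0 means the token never expires.

# Setting an expiry is recommended, so that a token leaked through insecure storage or similar causes cannot endanger the cluster.

# If a token has expired and more nodes need to join the cluster,

# the following command creates a new token and prints the join command.

# kubeadm token create --print-join-command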
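The same settings can also be kept in a kubeadm configuration file instead of a long flag list. The sketch below writes such a file for the multi-master case, using the `kubeadm.k8s.io/v1beta3` configuration API. The file name and the `KUBEADM_CONFIG` variable are choices made for this example. The bootstrap token TTL is deliberately left at its default here, in line with the recommendation above to let tokens expire, so this is not a one-to-one copy of the `--token-ttl=0` command line.

```shell
#!/usr/bin/env bash
# Write a kubeadm configuration file mirroring the multi-master flags.
# KUBEADM_CONFIG is a name chosen for this sketch; the default path is arbitrary.
KUBEADM_CONFIG="${KUBEADM_CONFIG:-./kubeadm-config.yaml}"

cat > "$KUBEADM_CONFIG" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
nodeRegistration:
  # Equivalent of --cri-socket
  criSocket: unix:///var/run/cri-dockerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
# Equivalent of --kubernetes-version
kubernetesVersion: v1.25.0
# Equivalent of --control-plane-endpoint
controlPlaneEndpoint: kubeapi.lec.org
# Equivalent of --image-repository
imageRepository: registry.aliyuncs.com/google_containers
networking:
  # Equivalents of --service-cidr and --pod-network-cidr
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF

echo "wrote $KUBEADM_CONFIG"
```

The file would then be passed as `kubeadm init --config kubeadm-config.yaml --upload-certs`. Keeping it under version control records exactly how the cluster was initialized.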

kubeadm init \

--control-plane-endpoint="kubeapi.lec.org" \

--image-repository=registry.aliyuncs.com/google_containers \

--kubernetes-version=v1.25.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--cri-socket=unix:///var/run/cri-dockerd.sock \

--token-ttl=0 \

--upload-certs \

--ignore-preflight-errors=Swap

# Initialization output

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join kubeapi.lec.org:6443 --token szbs8d.x5l6h9om8ewxbmyw \

--discovery-token-ca-cert-hash sha256:5018f6733cc016a6ac2b51848e80ac269ae945ab3e58883ed6d97d66e2440959 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.lec.org:6443 --token szbs8d.x5l6h9om8ewxbmyw \

--discovery-token-ca-cert-hash sha256:5018f6733cc016a6ac2b51848e80ac269ae945ab3e58883ed6d97d66e2440959

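With multiple masters, kubectl on this host is not yet pointed at the cluster's API server, so the KUBECONFIG environment variable has to be set:

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

Error message for reference

The connection to the server localhost:8080 was refused - did you specify the right host or port?

Fix

# Append the export line to /etc/profile

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

# Then make it take effect

source /etc/profile

- How to reset a node (remove it from the cluster)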

kubeadm reset --cri-socket unix:///run/cri-dockerd.sock

rm -rf /etc/kubernetes/ /var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni /etc/cni/net.d

1.5.6 Generate the kubectl Authorization File on the First Master Node

# These commands can be copied from the initialization output in 1.5.5

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

1.5.7 Enable kubectl Command Completion

# Enable automatic completion for kubectl commands

kubectl completion bash > /etc/profile.d/kubectl_completion.sh

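1.5.8 Configure the Network Plugin on the First Master Node

Pod networking in Kubernetes is implemented by third-party plugins. There are dozens of them; well-known ones include flannel, calico, canal, and kube-router. A simple, easy-to-use choice is flannel, a project that originated at CoreOS.

- flannel releases can be downloaded from https://github.com/flannel-io/flannel/releases

# No network plugin is installed by default, so the node shows the following status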

root@master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.lec.org NotReady control-plane 6m18s v1.25.0

# Apply the network plugin manifest

root@master1:~# ls -lrt kube-flannel-v0.19.1.yml

-rw-r--r-- 1 root root 4583 Jan 16 14:10 kube-flannel-v0.19.1.yml

root@master1:~# kubectl apply -f kube-flannel-v0.19.1.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

# Wait a moment, then check again

```bash
root@master1:~# kubectl get nodes
NAME STATUS ROLES AGE VERSION
master1.lec.org Ready control-plane 6m18s v1.25.0
```

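1.5.9 Join Other Master Nodes to the Control Plane (Multi-Master Only)

- Run kubeadm join on the other master nodes to add them to the control plane (needed only with multiple masters)

# The command can be copied from the initialization output in 1.5.5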

root@master2:~# kubeadm join kubeapi.lec.org:6443 --token szbs8d.x5l6h9om8ewxbmyw \

--discovery-token-ca-cert-hash sha256:5018f6733cc016a6ac2b51848e80ac269ae945ab3e58883ed6d97d66e2440959 \

--control-plane \

--cri-socket=unix:///var/run/cri-dockerd.sock

root@master2:~# mkdir -p $HOME/.kube

root@master2:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@master2:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

1.5.10 Join the Worker Nodes to the Cluster

- If the master was initialized with --control-plane-endpoint

# The command can be copied from the initialization output in 1.5.5

kubeadm join kubeapi.lec.org:6443 --token szbs8d.x5l6h9om8ewxbmyw \

--discovery-token-ca-cert-hash sha256:5018f6733cc016a6ac2b51848e80ac269ae945ab3e58883ed6d97d66e2440959 \

--cri-socket=unix:///var/run/cri-dockerd.sock

- If the master was initialized without --control-plane-endpoint

kubeadm join 10.0.0.70:6443 --token 82ft5w.q1h1w8dclom3vr1y \

--discovery-token-ca-cert-hash sha256:ec2818d2f956dd01d0eb84b785835e17fea431b37d78a1626f7d4c1db71c672d \

--cri-socket=unix:///var/run/cri-dockerd.sock

- To speed things up, the images can be exported and copied to the other worker nodes

[root@node1 ~]#docker image save `docker image ls --format "{{.Repository}}:{{.Tag}}"` -o k8s-images-v1.25.0.tar

[root@node1 ~]#gzip k8s-images-v1.25.0.tar

[root@node1 ~]#scp k8s-images-v1.25.0.tar.gz 10.0.0.72:/root/

[root@node1 ~]#scp k8s-images-v1.25.0.tar.gz 10.0.0.73:/root/

# Import on node2 (and likewise on node3)

[root@node2 ~]#docker load -i k8s-images-v1.25.0.tar.gz

- Check that all nodes are Ready

# On master1

root@master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.lec.org Ready control-plane 120m v1.25.0

node1.lec.org Ready <none> 46m v1.25.0

node2.lec.org Ready <none> 2m25s v1.25.0

node3.lec.org Ready <none> 45s v1.25.0
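Instead of re-running `kubectl get nodes` until every node shows Ready, `kubectl wait` can block until the condition holds. A minimal wrapper is sketched below; the function name and the 300-second default timeout are arbitrary choices for this example.

```shell
#!/usr/bin/env bash
# wait_for_nodes_ready [TIMEOUT]
# Blocks until every node reports the Ready condition, or fails after TIMEOUT.
# The 300s default is an arbitrary choice for this sketch.
wait_for_nodes_ready() {
  kubectl wait --for=condition=Ready nodes --all --timeout="${1:-300s}"
}
```

Calling `wait_for_nodes_ready 120s` on master1 right after the last `kubeadm join` returns a non-zero exit status if a node is still NotReady after two minutes, which is convenient in scripts.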

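1.5.11 Create Pods, Start Containers, and Test Access and Network Connectivity

demoapp is a web application. It can be run on the cluster as Pods and then accessed from outside the cluster:

1.5.11.1 Test Method 1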

root@master1:~# kubectl create deployment demoapp --image=ikubernetes/demoapp:v1.0 --replicas=3

deployment.apps/demoapp created

# Show pod information

root@master1:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-222tb 1/1 Running 0 14m 10.244.3.3 node3.lec.org <none> <none>

demoapp-55c5f88dcb-m8lhr 1/1 Running 0 14m 10.244.2.2 node2.lec.org <none> <none>

demoapp-55c5f88dcb-s7kz7 1/1 Running 0 14m 10.244.1.2 node1.lec.org <none> <none>

- Error 1 encountered: the pod STATUS is ImagePullBackOff. Restarting the kubelet cleared it in this environment:

systemctl restart kubelet

1.5.11.2 Test Method 2

Create a Service object of type NodePort for demoapp

# Create the service

root@master1:~# kubectl create service nodeport demoapp --tcp=80:80

service/demoapp created

# Show the service's IP

root@master1:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.99.176.30 <none> 80:31983/TCP 9s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 137m

# Test access through the service's cluster IP

root@master1:~# curl 10.99.176.30

iKubernetes demoapp v1.0 !! ClientIP: 10.244.0.0, ServerName: demoapp-55c5f88dcb-m8lhr, ServerIP: 10.244.2.2!

root@master1:~# curl 10.99.176.30

iKubernetes demoapp v1.0 !! ClientIP: 10.244.0.0, ServerName: demoapp-55c5f88dcb-222tb, ServerIP: 10.244.3.3!

root@master1:~# curl 10.99.176.30

iKubernetes demoapp v1.0 !! ClientIP: 10.244.0.0, ServerName: demoapp-55c5f88dcb-m8lhr, ServerIP: 10.244.2.2!

root@master1:~# curl 10.99.176.30

iKubernetes demoapp v1.0 !! ClientIP: 10.244.0.0, ServerName: demoapp-55c5f88dcb-s7kz7, ServerIP: 10.244.1.2!
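The requests above go to the Service's cluster IP, which is only reachable from inside the cluster. Access from outside uses a node's IP together with the allocated NodePort (31983 in the output above). The helper below looks the port up with a `kubectl` JSONPath query instead of hard-coding it; the function name `nodeport_url` is hypothetical, and it assumes the Service exposes a single port.

```shell
#!/usr/bin/env bash
# nodeport_url NODE_IP SERVICE
# Prints the URL at which SERVICE is reachable on NODE_IP via its first NodePort.
nodeport_url() {
  local node_ip="$1" svc="$2" port
  port="$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  # An empty result means the Service has no NodePort (for example, type ClusterIP).
  [ -n "$port" ] || return 1
  echo "http://${node_ip}:${port}"
}
```

For example, running `nodeport_url 10.0.0.71 demoapp` on master1 prints the URL to open with `curl` from any machine outside the cluster that can reach 10.0.0.71.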

1.5.11.3 Scaling Out and In

# Scale out

# Scale out to 5 replicas

root@master1:~# kubectl scale deployment demoapp --replicas 5

deployment.apps/demoapp scaled

root@master1:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-222tb 1/1 Running 0 19m 10.244.3.3 node3.lec.org <none> <none>

demoapp-55c5f88dcb-f59jd 1/1 Running 0 8s 10.244.2.3 node2.lec.org <none> <none>

demoapp-55c5f88dcb-m8lhr 1/1 Running 0 19m 10.244.2.2 node2.lec.org <none> <none>

demoapp-55c5f88dcb-pr7j5 1/1 Running 0 8s 10.244.1.3 node1.lec.org <none> <none>

demoapp-55c5f88dcb-s7kz7 1/1 Running 0 19m 10.244.1.2 node1.lec.org <none> <none>

# Scale in

root@master1:~# kubectl scale deployment demoapp --replicas 2

deployment.apps/demoapp scaled

# While pods are being removed, their STATUS is Terminating

root@master1:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-222tb 1/1 Running 0 20m 10.244.3.3 node3.lec.org <none> <none>

demoapp-55c5f88dcb-f59jd 1/1 Terminating 0 39s 10.244.2.3 node2.lec.org <none> <none>

demoapp-55c5f88dcb-m8lhr 1/1 Terminating 0 20m 10.244.2.2 node2.lec.org <none> <none>

demoapp-55c5f88dcb-pr7j5 1/1 Terminating 0 39s 10.244.1.3 node1.lec.org <none> <none>

demoapp-55c5f88dcb-s7kz7 1/1 Running 0 20m 10.244.1.2 node1.lec.org <none> <none>

# Final result

root@master1:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-222tb 1/1 Running 0 20m 10.244.3.3 node3.lec.org <none> <none>

demoapp-55c5f88dcb-s7kz7 1/1 Running 0 20m 10.244.1.2 node1.lec.org <none> <none>