Deploying Kubernetes 1.24.3 on containerd

Use kubeadm to deploy a single-master Kubernetes 1.24.3 cluster with containerd as the container runtime.

Node plan

10.206.32.4 k8s-master-01 Ubuntu-20.04 2 CPU/4 GB control-plane node

10.206.32.17 k8s-node-01 Ubuntu-20.04 2 CPU/4 GB worker node

10.206.32.13 k8s-node-02 Ubuntu-20.04 2 CPU/4 GB worker node

Installing containerd

First install containerd on all three nodes. The steps are the same on each node.

Download the release package

wget https://github.com/containerd/containerd/releases/download/v1.6.6/containerd-1.6.6-linux-amd64.tar.gz
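Before you extract the tarball, you can check its integrity. The helper below is a minimal sketch that compares a file against an expected SHA-256 digest with GNU `sha256sum -c`. The function name `verify_sha256` is hypothetical. The expected digest must come from a trusted source. The containerd release page publishes a `.sha256sum` file next to each asset, but confirm the exact asset name there.

```shell
# Hypothetical helper: succeed only if FILE's SHA-256 digest equals EXPECTED.
verify_sha256() {
  local file="$1" expected="$2"
  # sha256sum -c reads "<digest>  <path>" lines; "-" makes it read them from stdin.
  # --status suppresses output so only the exit code reports the result.
  printf '%s  %s\n' "$expected" "$file" | sha256sum -c --status -
}
```

Example usage, assuming the matching `.sha256sum` file was downloaded next to the tarball: `verify_sha256 containerd-1.6.6-linux-amd64.tar.gz "$(cut -d' ' -f1 containerd-1.6.6-linux-amd64.tar.gz.sha256sum)"`.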

mkdir /tmp/containerd/

tar xvf containerd-1.6.6-linux-amd64.tar.gz -C /tmp/containerd/

cp /tmp/containerd/bin/* /usr/bin/

Prepare the configuration

mkdir /etc/containerd

containerd config default > /etc/containerd/config.toml

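# Pull the pause (sandbox) image from the Aliyun mirror instead of k8s.gcr.io
sed -i "s#k8s.gcr.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml

# Switch runc to the systemd cgroup driver; kubeadm sets kubelet's cgroupDriver to systemd by default since v1.22, and the two must match
sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml

# The next two commands add a docker.io registry mirror under the CRI plugin's registry.mirrors section
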
sed -i '/registry.mirrors]/a\ \ \ \ \ \ \ \ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]' /etc/containerd/config.toml

sed -i '/registry.mirrors."docker.io"]/a\ \ \ \ \ \ \ \ \ \ endpoint = ["http://hub-mirror.c.163.com"]' /etc/containerd/config.toml
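The `sed` edits above fail silently if a pattern does not match, for example if the output of `containerd config default` changes in a later release. A quick check afterwards is therefore useful. This sketch only greps for the expected strings and does not validate TOML syntax. `check_containerd_config` is a hypothetical helper name.

```shell
# Hypothetical helper: report which of the expected config.toml edits are missing.
check_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}" rc=0
  # -F treats each pattern as a fixed string, so dots and brackets are literal
  grep -qF 'sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause' "$cfg" \
    || { echo "sandbox_image was not rewritten" >&2; rc=1; }
  grep -qF 'SystemdCgroup = true' "$cfg" \
    || { echo "SystemdCgroup is not true" >&2; rc=1; }
  grep -qF 'registry.mirrors."docker.io"]' "$cfg" \
    || { echo "docker.io mirror section is missing" >&2; rc=1; }
  grep -qF 'endpoint = ["http://hub-mirror.c.163.com"]' "$cfg" \
    || { echo "docker.io mirror endpoint is missing" >&2; rc=1; }
  return "$rc"
}
```

Running `check_containerd_config` with no argument checks `/etc/containerd/config.toml`. It prints nothing and returns 0 when all four edits are present.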

Prepare the systemd service file

cat > /lib/systemd/system/containerd.service << EOF

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

#uncomment to enable the experimental sbservice (sandboxed) version of containerd/cri integration

#Environment="ENABLE_CRI_SANDBOXES=sandboxed"

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=infinity

# Comment TasksMax if your systemd version does not supports it.

# Only systemd 226 and above support this version.

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

EOF

Install runc

wget https://github.com/opencontainers/runc/releases/download/v1.1.3/runc.amd64 -O /usr/bin/runc

chmod +x /usr/bin/runc

Start containerd

systemctl daemon-reload

systemctl enable --now containerd

systemctl status containerd

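Node environment preparation

Run the following steps on every node to prepare the environment.

Set the hostname (use each node's name from the node plan)

hostnamectl set-hostname <hostname>

Install ipvsadm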

apt -y install ipvsadm

Configure hostname resolution

cat >>/etc/hosts<<EOF

10.206.32.4 k8s-master-01

10.206.32.17 k8s-node-01

10.206.32.13 k8s-node-02

EOF
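Appending with `cat >>` duplicates the entries if this step is rerun. The sketch below is an idempotent variant built around a hypothetical `add_host_entry` helper. It appends a line only when no uncommented line already lists the hostname.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if any non-comment line has NAME in a hostname field (fields 2..NF)
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Example usage: `add_host_entry 10.206.32.4 k8s-master-01`, repeated for the two worker nodes.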

Disable swap and the firewall

ufw disable

swapoff -a && sed -i 's|^/swap.img|#/swap.img|g' /etc/fstab
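`swapoff -a` disables swap only until the next reboot. The `/etc/fstab` edit is what keeps it off afterwards. The hypothetical helper below lists any uncommented swap entries still left in fstab. An empty result, together with empty `swapon --show` output, suggests that both steps took effect.

```shell
# Hypothetical helper: print the device of every active (uncommented) swap line in fstab.
active_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  # fstab fields: device, mount point, fs type, options, dump, pass; swap has fs type "swap"
  awk '$1 !~ /^#/ && $3 == "swap" { print $1 }' "$fstab"
}
```

Example check: `[ -z "$(active_swap_entries)" ] && [ -z "$(swapon --show)" ] && echo "swap is off"`.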

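Configure time synchronization

This step runs ntpdate once against Aliyun's NTP server (install it first with apt -y install ntpdate if it is not present). Alternatively, configure the chrony service for continuous synchronization.

ntpdate ntp.aliyun.com

Tune kernel parameters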

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward=1

vm.max_map_count=262144

kernel.pid_max=4194303

fs.file-max=1000000

net.ipv4.tcp_max_tw_buckets=6000

net.netfilter.nf_conntrack_max=2097152

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

EOF

echo ip_vs >> /etc/modules-load.d/modules.conf

echo br_netfilter >> /etc/modules-load.d/modules.conf

modprobe ip_vs && modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf
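To confirm that the modules and parameters took effect, you can read the values back from `/proc/sys`. Each dotted sysctl key maps to a path there; for example, `net.ipv4.ip_forward` is `/proc/sys/net/ipv4/ip_forward`. The `bridge-nf-call-*` keys exist only after `br_netfilter` is loaded, and `nf_conntrack_max` exists only after conntrack is loaded, which `ip_vs` pulls in. This sketch uses a hypothetical `check_sysctl` function. The `PROC_SYS` override exists only so the helper can be tried against a fake directory.

```shell
# Root of the sysctl tree; override only for testing.
PROC_SYS="${PROC_SYS:-/proc/sys}"

# Hypothetical helper: compare the live value of a sysctl KEY with WANT.
check_sysctl() {
  local key="$1" want="$2" path got
  # net.ipv4.ip_forward -> $PROC_SYS/net/ipv4/ip_forward
  path="$PROC_SYS/$(printf '%s' "$key" | tr . /)"
  if [ ! -r "$path" ]; then
    echo "$key: not available (is the kernel module loaded?)" >&2
    return 1
  fi
  got="$(cat "$path")"
  if [ "$got" != "$want" ]; then
    echo "$key: expected $want, got $got" >&2
    return 1
  fi
}
```

Example usage: `check_sysctl net.ipv4.ip_forward 1 && check_sysctl net.bridge.bridge-nf-call-iptables 1`.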

Install kubeadm

Install kubeadm, kubectl, and kubelet on all three nodes. kubectl is optional on the worker nodes.

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

apt-get -y install kubeadm=1.24.3-00 kubelet=1.24.3-00 kubectl=1.24.3-00

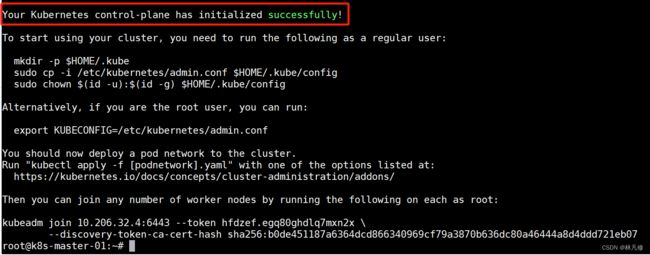
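The `kubeadm` package normally pulls in `cri-tools`, whose `crictl` is handy for inspecting containerd directly. Without `/etc/crictl.yaml`, crictl probes a list of default endpoints and prints warnings, so pointing it at containerd's socket is convenient. This is a sketch. The helper name is hypothetical, and the socket path assumes containerd's default `/run/containerd/containerd.sock`.

```shell
# Hypothetical helper: write a crictl config that talks to containerd's CRI socket.
write_crictl_config() {
  local out="${1:-/etc/crictl.yaml}"
  cat > "$out" <<'EOF'
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: false
EOF
}
```

After running `write_crictl_config` as root, `crictl info` or `crictl images` should query containerd without endpoint warnings.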

Initialize the cluster

Use kubeadm init to initialize the control plane:

kubeadm init --apiserver-advertise-address 10.206.32.4 \
  --apiserver-bind-port 6443 \
  --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
  --kubernetes-version v1.24.3 \
  --pod-network-cidr 10.10.0.0/16 \
  --service-cidr 172.16.0.0/24 \
  --service-dns-domain cluster.local
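You can also keep the same settings in a kubeadm configuration file, which is easier to review and reuse. The sketch below assumes the `kubeadm.k8s.io/v1beta3` API used by kubeadm 1.24 and containerd's default socket `/run/containerd/containerd.sock`. The `KubeletConfiguration` document sets the systemd cgroup driver to match `SystemdCgroup = true` in containerd. Treat this as an untested alternative to the flags above, not a required step. The helper name is hypothetical.

```shell
# Hypothetical helper: write a kubeadm config equivalent to the init flags above.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.206.32.4
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.3
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 172.16.0.0/24
  dnsDomain: cluster.local
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

The file would then be used with `kubeadm init --config kubeadm-config.yaml`. kubeadm refuses to mix `--config` with most of the flags shown above, so use one approach or the other.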

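When initialization succeeds, kubeadm prints follow-up instructions, including the kubeadm join command that the worker nodes use later.

Follow those instructions to configure kubectl credentials: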

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install the network plugin

This guide uses the Calico network plugin.

curl https://projectcalico.docs.tigera.io/manifests/calico.yaml -O

# In calico.yaml, uncomment CALICO_IPV4POOL_CIDR and set it to the same value as --pod-network-cidr from kubeadm init

grep -A1 CALICO_IPV4POOL_CIDR calico.yaml

# Expected output after editing:
            - name: CALICO_IPV4POOL_CIDR
              value: "10.10.0.0/16"
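In the stock Calico manifest, the CALICO_IPV4POOL_CIDR entry ships commented out. If editing by hand is inconvenient, you can switch it on with `sed`. This sketch assumes the manifest still contains the commented form `# - name: CALICO_IPV4POOL_CIDR` / `#   value: "192.168.0.0/16"`. If the downloaded version differs, the substitution does nothing, and the printed `grep` output shows that. The function name is hypothetical.

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in a Calico manifest and set CIDR.
set_calico_pool_cidr() {
  local manifest="$1" cidr="$2"
  # Dropping "# " keeps the original YAML indentation of both lines
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
    "$manifest"
  # Show the result so it can be compared with --pod-network-cidr
  grep -A1 'CALICO_IPV4POOL_CIDR' "$manifest"
}
```

Example usage: `set_calico_pool_cidr calico.yaml 10.10.0.0/16`.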

kubectl apply -f calico.yaml

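Join the worker nodes to the cluster

Run the following command on both worker nodes to join the cluster. The token and hash come from your own kubeadm init output.

kubeadm join 10.206.32.4:6443 --token hfdzef.egq80ghdlq7mxn2x \
  --discovery-token-ca-cert-hash sha256:b0de451187a6364dcd866340969cf79a3870b636dc80a46444a8d4ddd721eb07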

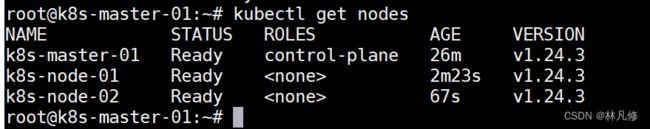
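The token in the join command expires (after 24 hours by default), and the values shown above belong to the author's cluster. On the control-plane node, `kubeadm token create --print-join-command` prints a fresh join command. You can also recompute the CA hash from the cluster CA with the `openssl` pipeline documented for `kubeadm join`, wrapped here in a hypothetical helper. It assumes an RSA CA key, which kubeadm generates by default.

```shell
# Hypothetical helper: print the --discovery-token-ca-cert-hash value for a CA certificate.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}" hex
  # Extract the public key, convert it to DER, hash it, keep only the hex digest
  hex="$(openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')"
  printf 'sha256:%s\n' "$hex"
}
```

On the control-plane node, `ca_cert_hash` with no argument should print the same value that kubeadm init showed.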

Check node status

kubectl get nodes

Once every node reports Ready, the worker nodes have joined and the cluster is running normally.

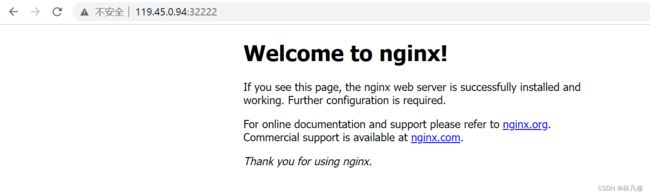
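For scripting, you can filter the STATUS column of `kubectl get nodes --no-headers`. The hypothetical `not_ready_nodes` helper below prints every node whose STATUS is not exactly `Ready`, so a cordoned `Ready,SchedulingDisabled` node is reported too. Alternatively, `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until all nodes are Ready.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the NAME (column 1) of each node whose STATUS (column 2) is not "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

Example usage: `kubectl get nodes --no-headers | not_ready_nodes` prints nothing once the cluster is healthy.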

Testing

Create an nginx Deployment and a matching Service, then access the Service to test the cluster. The manifest file (nginx-resources.yaml) is:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - name: http
          containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: default
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 32222

Apply it to the cluster:

kubectl apply -f nginx-resources.yaml
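The access test can be sketched as a request to NodePort 32222 on each node, because kube-proxy exposes a NodePort on every node. The hypothetical `probe_nodeport` helper uses `curl -f`, so an HTTP error status counts as a failure, and it returns the number of nodes that failed.

```shell
# Hypothetical helper: probe PORT on each given IP and report OK/FAIL per node.
probe_nodeport() {
  local port="$1"; shift
  local failed=0 ip
  for ip in "$@"; do
    # -f: fail on HTTP >= 400, -sS: quiet but still print errors, 5s timeout per node
    if curl -fsS -o /dev/null --max-time 5 "http://${ip}:${port}/"; then
      echo "OK   ${ip}:${port}"
    else
      echo "FAIL ${ip}:${port}"
      failed=$((failed + 1))
    fi
  done
  return "$failed"
}
```

Example usage: `probe_nodeport 32222 10.206.32.4 10.206.32.17 10.206.32.13` should print OK for all three nodes once both nginx Pods are running.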