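Deploying a Redis + Keepalived High-Availability Environment for ELK

Deploying the Redis + Keepalived high-availability environment

- Installing Redis and configuring master-slave replication

- 1. Install Redis on node1 and node2

- 2. Install Keepalived

- 3. Configure Redis + Keepalived

- Master node configuration

- Slave node configuration

- Start the services on both nodes (enabled at boot)

- Testing Redis + Keepalived

- Deploying Kibana with nginx proxy access

- 1. Install and configure Kibana

This article continues from the ELK deployment article.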

Installing Redis and configuring master-slave replication

1. Install Redis on node1 and node2

[root@node1 ~]# yum install redis -y

[root@node2 ~]# yum install redis -y

Settings to change on both node1 and node2:

[root@node1 ~]# vim /etc/redis.conf

bind 0.0.0.0

daemonize yes

appendonly yes

[root@node2 ~]# vim /etc/redis.conf

bind 0.0.0.0

daemonize yes

appendonly yes

The slave Redis (node2) needs one more line than the master:

[root@node2 ~]# vim /etc/redis.conf

################################# REPLICATION #################################

# slaveof <masterip> <masterport>

slaveof node1 6379

Enable Redis at boot on both the master and the slave, and start it now:

[root@node1 ~]# systemctl enable --now redis

Created symlink from /etc/systemd/system/multi-user.target.wants/redis.service to /usr/lib/systemd/system/redis.service.

[root@node2 ~]# systemctl enable --now redis

Created symlink from /etc/systemd/system/multi-user.target.wants/redis.service to /usr/lib/systemd/system/redis.service.
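Once both services are running, it can help to confirm that node2 has actually attached to node1. The following is a minimal sketch, not part of the original procedure: `repl_field` is a hypothetical helper name that pulls one field out of the output of `redis-cli info replication`, and it assumes `redis-cli` is on the PATH and Redis listens on the default port 6379.

```shell
#!/bin/bash
# repl_field (hypothetical helper): print the value of one field from
# `INFO replication` output read on stdin.
# Redis ends each line with CRLF, so the carriage return is stripped first.
repl_field() {
    local field="$1"
    tr -d '\r' | awk -F: -v f="$field" '$1 == f { print $2 }'
}

# Usage (run from either node):
#   redis-cli -h node1 info replication | repl_field role
#   redis-cli -h node2 info replication | repl_field master_link_status
```

On a healthy pair one would expect node1 to report the role `master` and node2 to report a `master_link_status` of `up`.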

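2. Install Keepalived

Install it on both nodes:

[root@node1 ~]# yum install -y keepalived

[root@node2 ~]# yum install -y keepalived

3. Configure Redis + Keepalived

Master node configuration

Note: both the master and the slave node need psmisc, which provides the killall command used by the health check in keepalived.conf.

Install psmisc first (the version used here is psmisc-22.20-17.el7.x86_64).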

[root@node1 ~]# cd /etc/keepalived/

[root@node1 keepalived]# ls

keepalived.conf

[root@node1 keepalived]# cp keepalived.conf{,.bak}

[root@node1 keepalived]# vim keepalived.conf

# Replace the entire file with the following

global_defs {

router_id redis-master

}

vrrp_script chk_redis {

script "killall -0 redis-server"

interval 2

timeout 2

fall 3

}

vrrp_instance redis {

state BACKUP

interface ens33

lvs_sync_daemon_interface ens33

virtual_router_id 202

priority 150

nopreempt

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.43.126 # VIP, in the same subnet as the nodes

}

track_script {

chk_redis

}

# Peer address (node2)

notify_master "/etc/keepalived/scripts/redis_master.sh 127.0.0.1 192.168.43.112 6379"

# Peer address (node2)

notify_backup "/etc/keepalived/scripts/redis_backup.sh 127.0.0.1 192.168.43.112 6379"

# On entering the fault state

notify_fault /etc/keepalived/scripts/redis_fault.sh

# When keepalived stops

notify_stop /etc/keepalived/scripts/redis_stop.sh

}
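The `killall -0 redis-server` check above only confirms that a redis-server process exists; it does not show that Redis is answering requests. A stricter check could ask Redis for a PING reply instead. The sketch below is an assumption, not part of the original setup: the function names and the idea of saving it as a separate check script are hypothetical, and it relies only on `redis-cli ping` returning `PONG` from a healthy server.

```shell
#!/bin/bash
# Hypothetical alternative health check for vrrp_script.

# Succeed only if stdin is exactly the reply PONG.
is_pong() {
    [ "$(tr -d '\r')" = "PONG" ]
}

# Exit status 0 when Redis at host:port answers PING, non-zero otherwise.
check_redis() {
    local host="${1:-127.0.0.1}" port="${2:-6379}"
    redis-cli -h "$host" -p "$port" ping 2>/dev/null | is_pong
}

# To use this as a vrrp_script, save it as a file and end that file with:
#   check_redis 127.0.0.1 6379
```

Keepalived treats a non-zero exit status from the check as a failure, so the `fall 3` setting would apply to this script in the same way as to the killall check.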

Slave node configuration

Create the scripts on node1 first, then copy them to node2.

[root@node1 keepalived]# pwd

/etc/keepalived

[root@node1 keepalived]# ls

keepalived.conf keepalived.conf.bak

[root@node1 keepalived]# mkdir scripts

[root@node1 keepalived]# cd scripts/

---------------------------------------1--------------------------------------------------

[root@node1 scripts]# vim redis_master.sh

[root@node1 scripts]# cat redis_master.sh

#!/bin/bash

# Arguments: $1 = local Redis host, $2 = peer Redis host, $3 = Redis port

REDISCLI="redis-cli -h $1 -p $3"

LOGFILE="/var/log/keepalived-redis-state.log"

echo "[master]" >> $LOGFILE

date >> $LOGFILE

echo "Being master...." >> $LOGFILE 2>&1

# Replicate from the peer first, then promote this node

echo "Run SLAVEOF cmd ... " >> $LOGFILE

if ! $REDISCLI SLAVEOF $2 $3 >> $LOGFILE 2>&1; then

    # Log the failure only when the command actually fails

    echo "SLAVEOF $2 cmd can't execute ... " >> $LOGFILE

fi

sleep 10

echo "Run SLAVEOF NO ONE cmd ..." >> $LOGFILE

$REDISCLI SLAVEOF NO ONE >> $LOGFILE 2>&1

---------------------------------------2--------------------------------------------------

[root@node1 scripts]# vim redis_backup.sh

[root@node1 scripts]# cat redis_backup.sh

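#!/bin/bash

# Arguments: $1 = local Redis host (unused here), $2 = peer Redis host, $3 = Redis port

REDISCLI="redis-cli"

LOGFILE="/var/log/keepalived-redis-state.log"

echo "[BACKUP]" >> $LOGFILE

date >> $LOGFILE

echo "Being slave...." >> $LOGFILE 2>&1

echo "Run SLAVEOF cmd ..." >> $LOGFILE 2>&1

# Capture errors from redis-cli in the log as well

$REDISCLI SLAVEOF $2 $3 >> $LOGFILE 2>&1

sleep 100

exit 0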

----------------------------------------3-------------------------------------------------

[root@node1 scripts]# vim redis_fault.sh

[root@node1 scripts]# cat redis_fault.sh

#!/bin/bash

LOGFILE="/var/log/keepalived-redis-state.log"

echo "[fault]" >> $LOGFILE

date >> $LOGFILE

----------------------------------------4-------------------------------------------------

[root@node1 scripts]# vim redis_stop.sh

[root@node1 scripts]# cat redis_stop.sh

#!/bin/bash

LOGFILE="/var/log/keepalived-redis-state.log"

echo "[stop]" >> $LOGFILE

date >> $LOGFILE

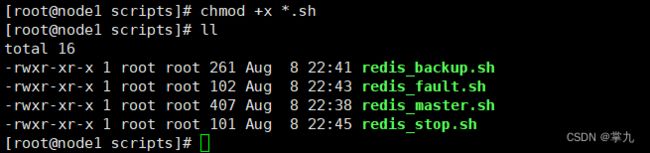

Make the scripts executable:

[root@node1 scripts]# chmod +x *.sh

On node2, use the same keepalived.conf but change the following lines:

[root@node2 keepalived]# vim keepalived.conf

router_id redis-slave

priority 100

notify_master "/etc/keepalived/scripts/redis_master.sh 127.0.0.1 192.168.43.111 6379"

notify_backup "/etc/keepalived/scripts/redis_backup.sh 127.0.0.1 192.168.43.111 6379"

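The keepalived.conf above sets the switchover mode to nopreempt, which means a node does not take the VIP back from the node that currently holds it. In this mode every node must be configured with state BACKUP.

Note that, unlike a typical Keepalived configuration, both the master and the backup server are set to BACKUP.

The purpose is to stop the original master from reclaiming the VIP after it recovers, which would trigger another Redis switchover and affect stability.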

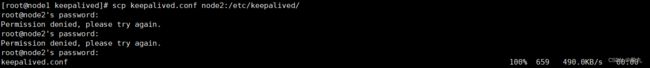

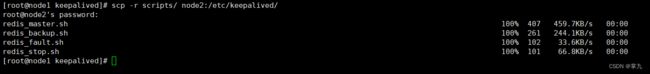

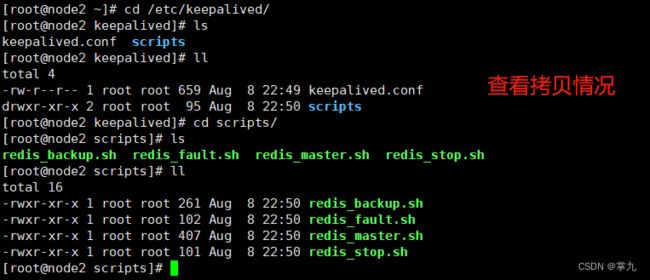

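Copy the scripts from the master node to the slave node and make sure they are executable there.

Keepalived calls a script according to the state it transitions into:

On entering the Master state it calls notify_master.

On entering the Backup state it calls notify_backup.

On detecting a failure it enters the Fault state and calls notify_fault.

When the Keepalived process terminates it calls notify_stop.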
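The state-to-script mapping described above can be written down as a small lookup, which may be convenient if the four hooks are ever folded into a single dispatcher. This is only a sketch: `script_for_state` is a hypothetical helper, and the state labels MASTER, BACKUP, FAULT and STOP are chosen here to mirror the four notify hooks rather than taken from the original configuration.

```shell
#!/bin/bash
# Directory used for the notify scripts in this article.
SCRIPT_DIR="/etc/keepalived/scripts"

# script_for_state (hypothetical helper): print the script that handles
# the given state label; return 1 for an unknown label.
script_for_state() {
    case "$1" in
        MASTER) echo "$SCRIPT_DIR/redis_master.sh" ;;
        BACKUP) echo "$SCRIPT_DIR/redis_backup.sh" ;;
        FAULT)  echo "$SCRIPT_DIR/redis_fault.sh" ;;
        STOP)   echo "$SCRIPT_DIR/redis_stop.sh" ;;
        *)      return 1 ;;
    esac
}

# Usage:
#   script_for_state MASTER
```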

Start the services on both nodes (enabled at boot)

[root@node1 keepalived]# systemctl enable --now keepalived.service

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@node2 keepalived]# systemctl enable --now keepalived.service

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

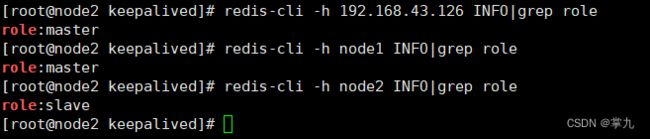

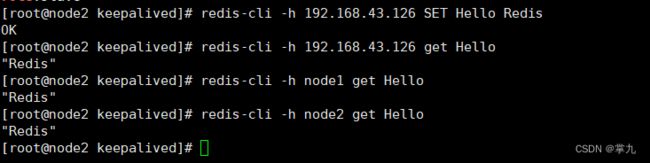

Testing Redis + Keepalived

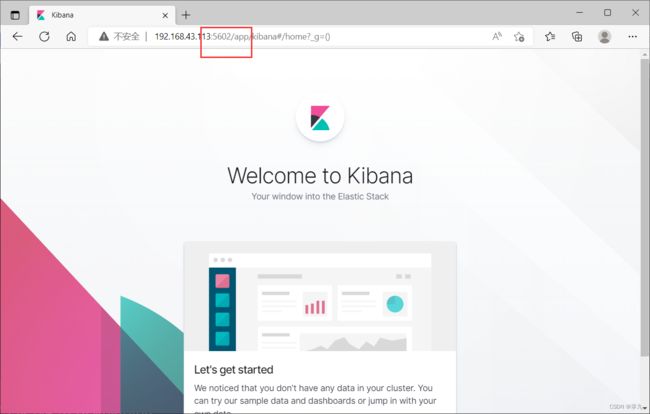

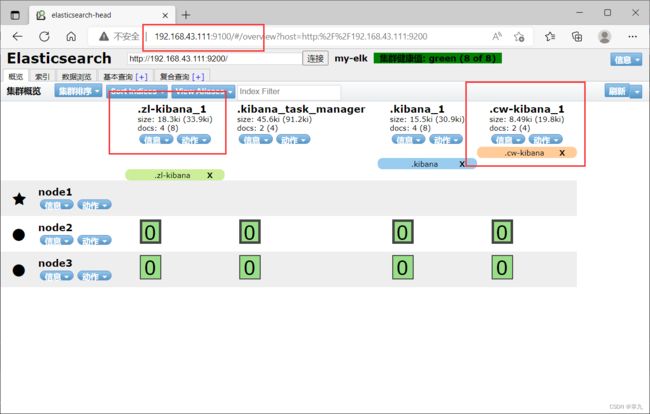
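One way to follow a failover test from the command line is to check which node currently holds the VIP. The sketch below is an illustration under assumptions: `has_vip` is a hypothetical helper that scans the output of `ip -o -4 addr show`, and the interface name ens33 and VIP 192.168.43.126 are the values from the keepalived.conf above.

```shell
#!/bin/bash
# has_vip (hypothetical helper): succeed if the `ip -o -4 addr` output on
# stdin contains the given address. The trailing "/" anchors the match so
# that 192.168.43.12 does not match 192.168.43.126.
has_vip() {
    local vip="$1"
    grep -qF "inet ${vip}/"
}

# Usage (run on each node before and after stopping Redis on the master):
#   ip -o -4 addr show dev ens33 | has_vip 192.168.43.126 && echo "VIP is here"
#   tail -n 20 /var/log/keepalived-redis-state.log
```

After redis-server is stopped on the node holding the VIP, the check should start succeeding on the other node, and the state log written by the notify scripts should show the corresponding `[master]` entry there.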

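Deploying Kibana with nginx proxy access

Deploy Kibana behind an nginx proxy with access control. Perform these steps on the elk-node03 machine (node3).

1. Install and configure Kibana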

[root@node3 ~]# yum install https://mirrors.tuna.tsinghua.edu.cn/elasticstack/yum/elastic-7.x/7.2.0/kibana-7.2.0-x86_64.rpm

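Because many business systems are maintained here, the logs that each system shows in Kibana should be accessible only to the people responsible for that system and closed to everyone else, so Kibana needs access control.

This is implemented here with nginx access authentication.

Several Kibana instances can be configured on different ports, one per system. For example, the finance system (cw) uses Kibana on port 5601 and the leasing system (zl) uses Kibana on port 5602, with nginx proxying access to each.

Each system's business logs are then shown only in the Kibana instance on its own port.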

[root@node3 ~]# cp -r /etc/kibana/ /etc/cw-5601-kibana

[root@node3 ~]# cp -r /etc/kibana/ /etc/zl-5602-kibana

[root@node3 ~]# vim /etc/cw-5601-kibana/kibana.yml

# Change the following settings

server.port: 5601

server.host: "0.0.0.0"

kibana.index: ".cw-kibana"

elasticsearch.hosts: ["http://192.168.43.111:9200"]

Overwrite the original zl configuration with the cw one, then edit it:

[root@node3 ~]# cp /etc/cw-5601-kibana/kibana.yml /etc/zl-5602-kibana/

cp: overwrite ‘/etc/zl-5602-kibana/kibana.yml’? y

[root@node3 ~]# vim /etc/zl-5602-kibana/kibana.yml

# Then change the following settings

server.port: 5602

server.host: "0.0.0.0"

kibana.index: ".zl-kibana"

elasticsearch.hosts: ["http://192.168.43.111:9200"]
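The two kibana.yml files differ only in the port and the index name, so adding another system is mostly a matter of repeating the same four lines. A minimal sketch of that pattern follows; `render_kibana_yml` is a hypothetical helper, not something shipped with Kibana, and it only prints the settings used in this article.

```shell
#!/bin/bash
# render_kibana_yml (hypothetical helper): print a kibana.yml for one system.
# $1 = Kibana port, $2 = system prefix (e.g. cw or zl), $3 = Elasticsearch URL
render_kibana_yml() {
    local port="$1" prefix="$2" es_url="$3"
    cat <<EOF
server.port: ${port}
server.host: "0.0.0.0"
kibana.index: ".${prefix}-kibana"
elasticsearch.hosts: ["${es_url}"]
EOF
}

# Usage: print the configuration for the leasing system
#   render_kibana_yml 5602 zl http://192.168.43.111:9200
```

The output could be redirected into the corresponding /etc/<prefix>-<port>-kibana/kibana.yml file instead of editing each copy by hand.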

Provide the service unit files:

Copy the original unit file under a new name:

[root@node3 ~]# cp -a /etc/systemd/system/kibana.service /etc/systemd/system/kibana_cw.service

Edit the file:

[root@node3 ~]# vim /etc/systemd/system/kibana_cw.service

# Line to change

ExecStart=/usr/share/kibana/bin/kibana "-c /etc/cw-5601-kibana/kibana.yml"

Copy it again under the zl name and edit its contents:

[root@node3 ~]# cp /etc/systemd/system/kibana_cw.service /etc/systemd/system/kibana_zl.service

[root@node3 ~]# vim /etc/systemd/system/kibana_zl.service

# Line to change

ExecStart=/usr/share/kibana/bin/kibana "-c /etc/zl-5602-kibana/kibana.yml"

Configure the nginx reverse proxy and access authentication

Install nginx:

[root@node3 ~]# yum install nginx -y

If the installation fails, add the EPEL repository and install again:

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

Configure the virtual hosts:

[root@node3 ~]# vim /etc/nginx/conf.d/cw_kibana.conf

server {

listen 15601;

server_name localhost;

location / {

proxy_pass http://192.168.43.113:5601/;

auth_basic "Access Authorized";

auth_basic_user_file /etc/nginx/conf.d/cw_auth_password;

}

}

[root@node3 ~]# cp /etc/nginx/conf.d/cw_kibana.conf /etc/nginx/conf.d/zl_kibana.conf

[root@node3 ~]# vim /etc/nginx/conf.d/zl_kibana.conf

server {

listen 15602;

server_name localhost;

location / {

proxy_pass http://192.168.43.113:5602/;

auth_basic "Access Authorized";

auth_basic_user_file /etc/nginx/conf.d/zl_auth_password;

}

}

Set up the password files

Install httpd-tools:

[root@node3 ~]# yum install httpd-tools -y

Create the login credentials:

[root@node3 ~]# htpasswd -c /etc/nginx/conf.d/cw_auth_password cwlog

New password:

Re-type new password:

Adding password for user cwlog

[root@node3 ~]# htpasswd -c /etc/nginx/conf.d/zl_auth_password zllog

New password:

Re-type new password:

Adding password for user zllog

Check the configuration for syntax errors:

[root@node3 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Enable nginx at boot:

[root@node3 ~]# systemctl enable --now nginx

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

Enable Kibana at boot:

[root@node3 ~]# systemctl enable --now kibana_cw.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kibana_cw.service to /etc/systemd/system/kibana_cw.service.

[root@node3 ~]# systemctl enable --now kibana_zl.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kibana_zl.service to /etc/systemd/system/kibana_zl.service.
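With nginx and both Kibana instances running, the access control can be spot-checked by requesting each proxy port with and without credentials. The following is a hedged sketch: `probe` and `describe_status` are hypothetical helper names, the host 192.168.43.113 and ports 15601 and 15602 are the values from the nginx configuration above, and PASSWORD stands for whatever was entered at the htpasswd prompt.

```shell
#!/bin/bash
# describe_status (hypothetical helper): map an HTTP status code returned
# by the proxy to a short diagnosis.
describe_status() {
    case "$1" in
        401)     echo "credentials missing or rejected" ;;
        2??|3??) echo "authenticated" ;;
        502|504) echo "nginx is up but Kibana is not answering" ;;
        *)       echo "unexpected status: $1" ;;
    esac
}

# probe (hypothetical helper): print only the HTTP status code.
# $1 = host, $2 = port, $3 = optional user:password pair
probe() {
    local host="$1" port="$2" cred="$3"
    if [ -n "$cred" ]; then
        curl -s -o /dev/null -w '%{http_code}' -u "$cred" "http://${host}:${port}/"
    else
        curl -s -o /dev/null -w '%{http_code}' "http://${host}:${port}/"
    fi
}

# Usage:
#   describe_status "$(probe 192.168.43.113 15601)"
#   describe_status "$(probe 192.168.43.113 15601 cwlog:PASSWORD)"
#   describe_status "$(probe 192.168.43.113 15602 zllog:PASSWORD)"
```

A request without credentials should be reported as rejected, and the cwlog account should be accepted only on port 15601, since each virtual host reads its own password file.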