Hadoop笔记-生产调优篇

Hadoop笔记-生产调优篇

- 一:HDFS的核心参数

- 1.1NameNode生产环境内存

- 1.1NameNode心跳机制

-

- 1.3开启垃圾回收

- 二: HDFS的压测

- 2.1: 测试HDFS的写性能压测

- 2.2: 测试HDFS的读性能压测

- 2.3: 测试HDFS的性能总结

- 三:HDFS多目录

-

- 3.1NameNode多目录配置

- 3.1DateNode多目录配置

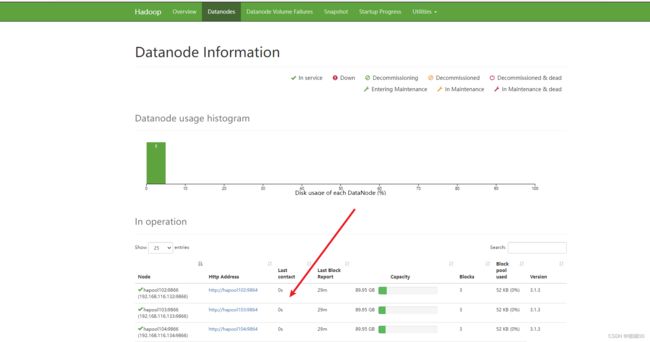

- 四:HDFS的缩容和扩容

-

- 4.1 添加白名单

- 4.2 服役新数据节点

- 4.3 节点的数据平衡

- 4.4 黑名单退役节点

- 五:HDFS故障排除

-

- 5.1NameNode故障处理

- 5.2 集群安全模式&磁盘修复

-

- 5.2.2 磁盘修复

- 5.3 慢磁盘监控

- 5.4 小文件归档

- 六:MapReduc的生产经验

-

- 6.1MapReduce生产跑的满的原因

- 6.2 MapReduce调优

- 6.3 MapReduce的数据倾斜

- 七:Yarn的生产经验

- 八:Hadoop的综合调优

-

- 8.1. Hadoop 小文件优化方法

-

- 8.1.1 小文件的弊端

- 8.1.2 小文件的处理办法

- 8.1.3开启uber 模式

- 8.2. 测试MapReduce的压测

- 九:企业开发案例

-

- 9.1 HDFS 参数调优

- 9.2 MapReduce

- 9 .3 Yarn

一:HDFS的核心参数

1.1NameNode生产环境内存

(1)NameNode内存计算

- 每个文件块大概会占用150byte,一台服务器128为例:能存储多少个文件呢

- 128Gx1028(Mb)x1024(kb) x1024byte约等于9.1亿

- 在hadoop3.x中配置nameNode的内存

- 在hadoop-env.sh中

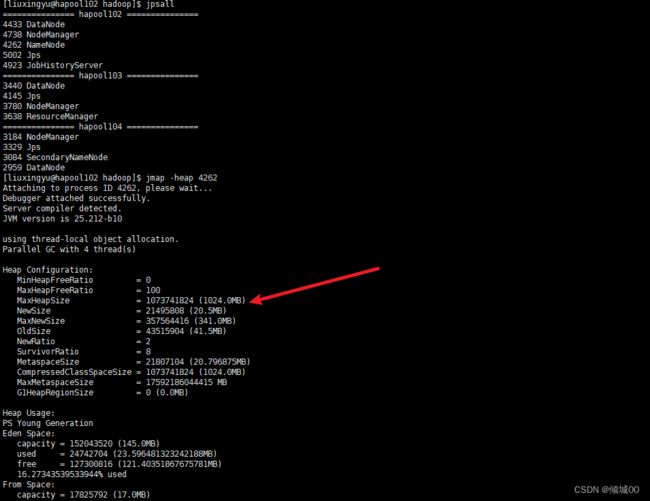

如果没有配置就是按照服务器的内存配置---->最大值

最小值也是这么配置的 - 接下来启动Hadoop

- 执行jps命令

- datanode和NameNode获取的是相同的资源

- 问题1:NameNode是984,dataNode是984,但是如果都需要执行984,就会出现内存不够的情况,就会抢占Linux系统的资源,有可能会导致系统崩溃

- 推荐配置参考

nameNode是每增加1000000个数据块就增加1G内存

dataNode是内增加100000 0的副本就要增加1G内存

export HDFS_NAMENODE_OPTS="-Dhadoop.security.logger=INFO,RFAS -Xmx1024m"

export HDFS_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS-Xmx1024m"

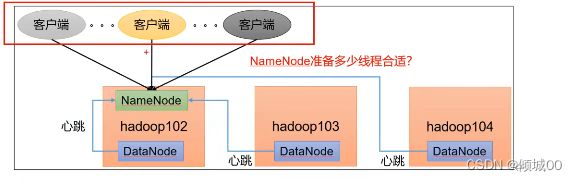

1.1NameNode心跳机制

- 集群中的DataNode要向NameNode去注册,

NameNode有一个工作线程池,用来处理不同的DataNode的并发心跳和元数据的操作,默认是10个线程

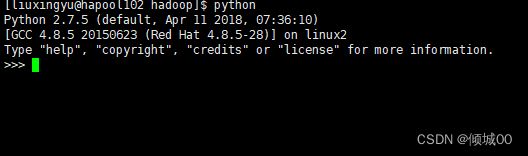

我们可以通过python来进行计算

- 导入函数库

-计算,3台服务器应该设置21

- 退出

- 修改vim hdfs-site.xml 进行配置

- 分发数据,在启动就会按照配置的进行执行了

1.3开启垃圾回收

- 开启垃圾回收功能,可以将删除的文件在不超时的情况下,将文件恢复回来,防止数据误删

- 默认值fs.trash.interval=0,表示禁用回收站,其他值表示设置回收站的存活时间

- 默认值fs.trash.checjpoint.interval=0回收站的间隔,如果为0则和上面的回收站存活时间相等

- 要走fs.trash.checjpoint.interval

- 配置垃圾回收的时间为2分钟

- 在 core-site.xml 中配置

fs.trash.interval

1

- 分发文件,重启集群

通过命令去删除集群上的文件

hadoop fs -rm /xxx -通过命令去删除文件,将会产生一个user命名的文件夹,里面存放的是你删除放进回收站的数据,等回收站时间一过,回收站的数据页会彻底消失

二: HDFS的压测

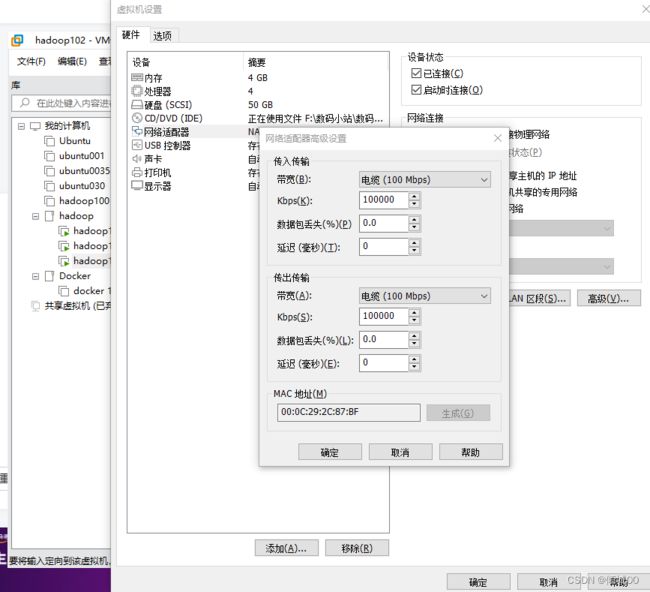

- HDFS的读写性能主要受网络和磁盘的影响力比较大,为了 更好演示将三台虚拟机设为100dps

- –>右键虚拟机打开设置

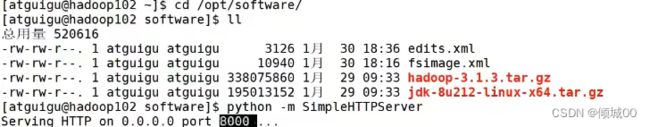

- 这样可以通过ip地址+端口号进行下载,可以查看到下载的速度

2.1: 测试HDFS的写性能压测

#进入到hadoop文件中

hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.3-tests.jar TestDFSIO -write -nrFiles 10 -fileSize 128M

# 测试写性能,测试1个文件,每个文件128MB

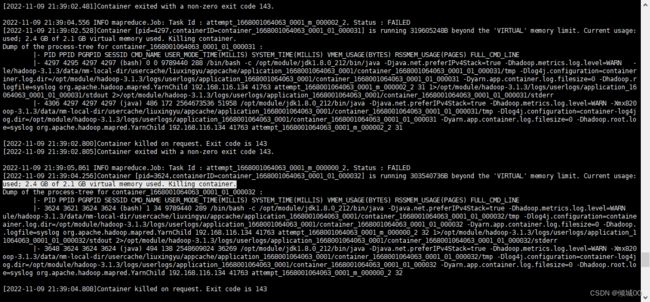

- 会发生错误,说你的内存需要2.4,但是你的虚拟内存只有2.1

- 原因:yarn的虚拟化内存中

- 假如:物理内存是4个G,虚拟内存是物理内存的2.1倍,那么他会虚拟出来8.1的内存 因为你的linux会给java等等预留出5个G的内存,所以你实际获取到的内存会低于4个G,这内存就会产生浪费

所以,需要在yarn-site.xml中关掉虚拟内存

yarn.nodemanager.vmem-check-enabled

false

#配置完成之后,分发文件,在hadoop的目录执行sbin/stop-yarn.sh sbin/start-yarn.sh 重启yarn

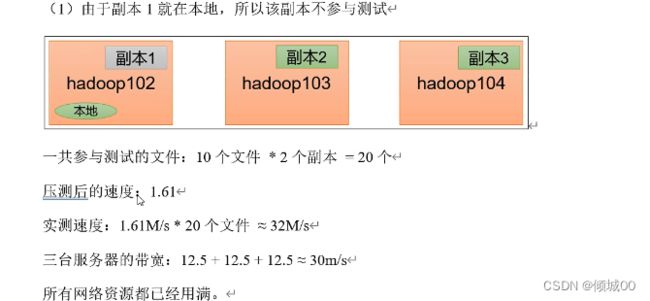

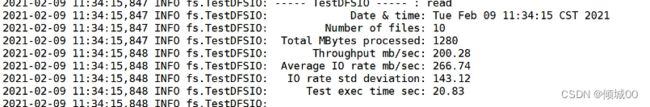

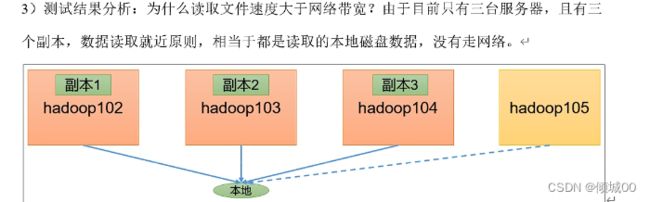

2.2: 测试HDFS的读性能压测

200多每秒,已经超过了网络的限制,因为没走网络,因为没走网络,所以这个数据就是和你的硬盘有关系了,和网络没关系

2.3: 测试HDFS的性能总结

速度和网络磁盘有关系,网络的话就相办法提高网络

磁盘的话就想办法扩磁盘

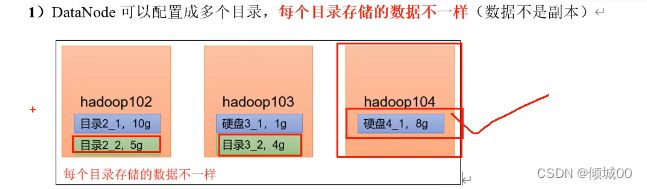

三:HDFS多目录

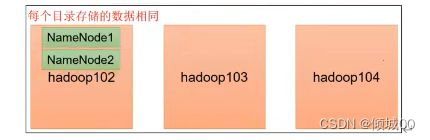

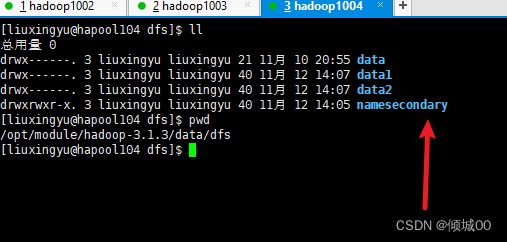

3.1NameNode多目录配置

dfs.namenode.name.dir

file://${hadoop.tmp.dir}/dfs/name1,file://${hadoop.tmp.

dir}/dfs/name2

- 因为重新配置了,需要将3台虚拟机上的数据格式化,不然会出错

rm -rf data/ logs/

- 在102上执行 hdfs namenode -format 格式化数据,然后其中hadoop集群

- 在data/dfs目录下就可以看到有两个NameNode 的路径了

- 两个NameNode的数据是一模一样的,这个因为是在一台集群上,所以不能算高可用,但是可以再另外一台在配置NameNode,实现高可用

3.1DateNode多目录配置

- 在hdfs-site.xml上配置

dfs.datanode.data.dir

file://${hadoop.tmp.dir}/dfs/data1,file://${hadoop.tmp.

dir}/dfs/data2

分发数据

四:HDFS的缩容和扩容

4.1 添加白名单

- 白名单:表名白名单的主机地址可以用来存储数据

- 企业中配置白名单可以防止黑客的恶意攻击和访问

- 配置白名单步骤如下

- 1.创建白名单

- 在/opt/module/hadoop-3.1.3/etc/hadoop新建whitelis文件

- 将我们需要进行配置白名单的联泰服务器放上去

- 继续创建 bldcklist文件

- vim hdfs-site.xml中配置

dfs.hosts

/opt/module/hadoop-3.1.3/etc/hadoop/whitelis

dfs.hosts.exclude

/opt/module/hadoop-3.1.3/etc/hadoop/blacklist

- 将whitelist bldcklist hdfs-site.xml 这三个修改好的文件分发

- 如果是第一次添加白名单必须重启集群,如果不是第一次只需要刷新NameNode节点即可

- 冲洗启动发现只有两台服务器在跑

- 通过命令上传文件hadoop fs -put wuguo.txt /2

-

只有两台,现在是将104作为客户端,但是不会将数据存储在你这里

-

接下来在whitelis文件中将104也设置为可以访问,然后分发数据

-

重新查看恢复正常是3台

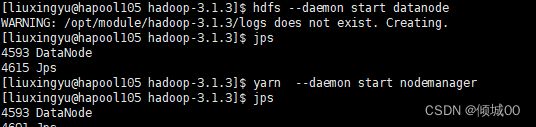

4.2 服役新数据节点

1)随着公司业务的增长,数据量越来越大,原有的数据节点容量已经不能满足存储数据的需求,需要在原有的集群基础上动态添加新的数据节点,

vim /etc/sysconfig/network-scripts/ifcfg-ens33

修改ip地址

vim /etc/hostname

修改主机名称然后重启

- 在102上将hadoop和java拷贝到105上

- scp -r module/* 192.168.116.135:/opt/module/

- 拷贝环境变量

- sudo scp /etc/profile.d/my_env.sh 192.168.116.135:/etc/profile.d

- 在105上执行 source /etc/profile

- 然后再102-103-104上执行vim /etc/hosts,全部修改

- 在102上-103上-104上 配置ssh(普通用户)

- cd .ssh/

- ssh-copy-id hapool105

- 配置完毕之后在105上的hadoop下 rm -rf data/ logs/

- 在102上给白名单新增hapool105,然后分发 刷新 hdfs dfsadmin -refreshNodes

4.3 节点的数据平衡

- 刚才服役了一台服务器

- 在开发中,如果经常在hapool10和hapool104上提交任务且副本数为2,由于数据本地性的原则就会导致hapool102和hapool104上的数据过多,hapool103的数据就会过少,

- 另一种情况就会导致新服役的服务器数据量比较少,就需要执行负载均衡的命令

[liuxingyu@hapool103 hadoop-3.1.3]$ sbin/start-balancer.sh -threshoud 10

- 对于参数10代表的是集群中各个节点的磁盘空间利用率不超过10%可根据实际情况调整

- 停止负载均衡的命令

- sbin/start-balancer.sh

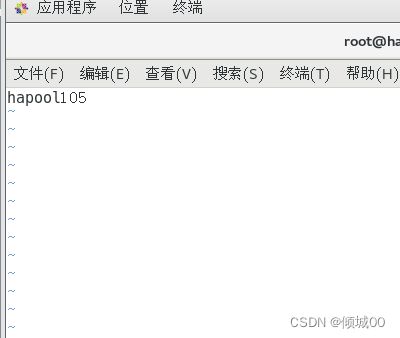

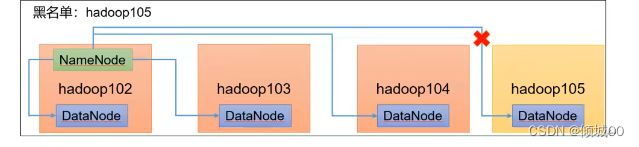

4.4 黑名单退役节点

- 上了黑名单的主机ip地址不可以用来存储数据

-编辑/opt/module/hadoop-3.1.3/etc/hadoop 目录下的 blacklis文件 - 添加需要进入黑名单的主机名称

- hadoop105

需要在 hdfs-site.xml 配置文件中增加 dfs.hosts 配置参数

dfs.hosts.exclude

/opt/module/hadoop-3.1.3/etc/hadoop/blacklist

-最后执行命令,关闭105的节点即可

yarn --daemon stop nodemanager

hdfs --daemon stop datanode

- 服务器完全的退出是10分钟+30秒(默认的)

五:HDFS故障排除

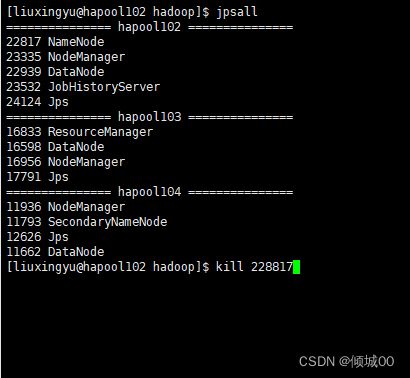

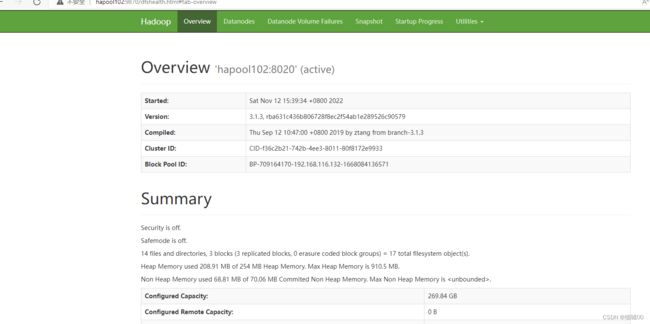

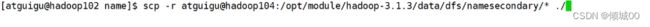

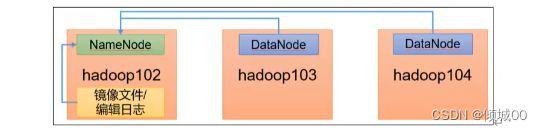

5.1NameNode故障处理

- 将namenode的数据杀死

-

如果只是因为进程挂了用hdfs --daemon start namenode命令

-

接下来再次关掉进程,删除/opt/module/hadoop-3.1.3/data/dfs下的所有文件

-再次重新启动发现启动不起来 -

可以查看NameNode的日志

- 执行拷贝

将2nn里面的数据拷贝到102的当前目录 - 重新执行hdfs --daemon start namenode

- 即可启动成功

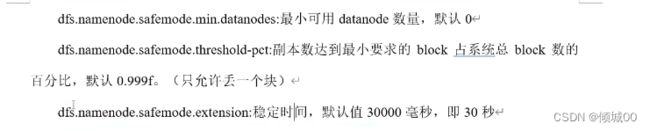

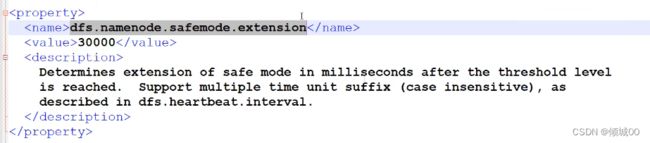

5.2 集群安全模式&磁盘修复

- 集群在启动的时候会进入到安全模式

30s之后安全模式就会退出 - 1)安全模式:文件系统接受读数据请求:而不接受修改删除的请求

- 2)进入到安全模式的场景

- NameNode在加载镜像文件和编辑日志期间处于安全模式

- NameNode在接受DateNode注册时,处于安全模式

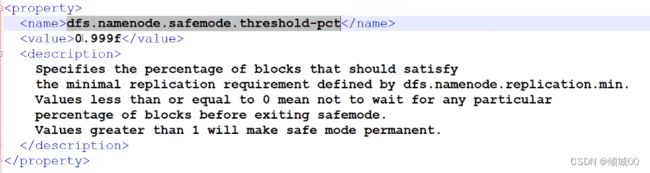

- 退出安全模式的条件

- 4)基本语法

- 集群处于安全模式,不能执行重要操作(写操作)集群启动完成后,自动退出安全模式

- (1)bin/hdfs dfsadmin -safemode get (功能描述:查看安全模式状态)

(2)bin/hdfs dfsadmin -safemode enter (功能描述:进入安全模式状态)

(3)bin/hdfs dfsadmin -safemode leave(功能描述:离开安全模式状态)

(4)bin/hdfs dfsadmin -safemode wait (功能描述:等待安全模式状态)

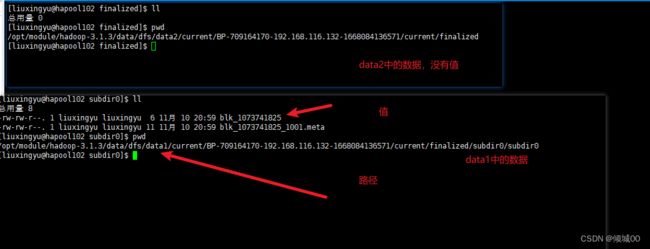

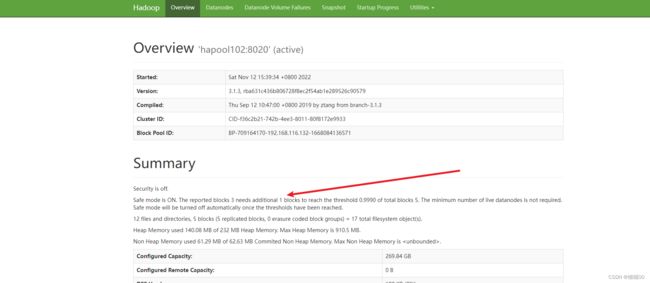

5.2.2 磁盘修复

rm -rf blk_10737418255 blk_1073741825_1001.meta blk_1073741826 blk_1073741826_1002.meta

NameNode是默认6个小时DateNode才会回报一次,所以现在NameNode是不知道文件损坏的,应该重启一下集群

-

看这里,大概讲的是 这个快3个如果你在添加一个块就会达到这个0.999的标准了,以为总共块是5个,然后就会一直卡在这,一直离不开安全模式

-

解决办法:1.找专业的人做磁盘修复

-

- 如果数据不重要删除元数据

-

不然下次启动还是安全模式

-

模拟等待安全模式

-

如果集群进入了安全模式

-

然后执行了hdfs dfsadmin -safemode wait 并上换了一个文件

-

此时就会产生阻塞的状态,文件无法上传,需要执行离开安全模式的命令才会结束堵塞

5.3 慢磁盘监控

- “慢磁盘”指的时写入数据非常慢的一类磁盘。其实慢性磁盘并不少见,当机器运行时

间长了,上面跑的任务多了,磁盘的读写性能自然会退化,严重时就会出现写入数据延时的

尚硅谷大数据技术之 Hadoop(生产调优手册)

如何发现慢磁盘?

正常在 HDFS 上创建一个目录,只需要不到 1s 的时间。如果你发现创建目录超过 1 分

钟及以上,而且这个现象并不是每次都有。只是偶尔慢了一下,就很有可能存在慢磁盘。

可以采用如下方法找出是哪块磁盘慢:

#通过命令进行安装

sudo yum install -y fio

- (1)顺序读测试

[atguigu@hadoop102 ~]# sudo yum install -y fio

[atguigu@hadoop102 ~]# sudo fio -

filename=/home/atguigu/test.log -direct=1 -iodepth 1 -thread -

rw=read -ioengine=psync -bs=16k -size=2G -numjobs=10 -

runtime=60 -group_reporting -name=test_r

Run status group 0 (all jobs):

READ: bw=360MiB/s (378MB/s), 360MiB/s-360MiB/s (378MB/s-378MB/s),

io=20.0GiB (21.5GB), run=56885-56885msec

结果显示,磁盘的总体顺序读速度为 360MiB/s。

- (2)顺序写测试

[atguigu@hadoop102 ~]# sudo fio -

filename=/home/atguigu/test.log -direct=1 -iodepth 1 -thread -

rw=write -ioengine=psync -bs=16k -size=2G -numjobs=10 -

runtime=60 -group_reporting -name=test_w

Run status group 0 (all jobs):

WRITE: bw=341MiB/s (357MB/s), 341MiB/s-341MiB/s (357MB/s357MB/s), io=19.0GiB (21.4GB), run=60001-60001msec

结果显示,磁盘的总体顺序写速度为 341MiB/s。

-(3)随机写测试

[atguigu@hadoop102 ~]# sudo fio -

filename=/home/atguigu/test.log -direct=1 -iodepth 1 -thread -

rw=randwrite -ioengine=psync -bs=16k -size=2G -numjobs=10 -

runtime=60 -group_reporting -name=test_randw

Run status group 0 (all jobs):

WRITE: bw=309MiB/s (324MB/s), 309MiB/s-309MiB/s (324MB/s-324MB/s),

io=18.1GiB (19.4GB), run=60001-60001msec

结果显示,磁盘的总体随机写速度为 309MiB/s。

- (4)混合随机读写:

[atguigu@hadoop102 ~]# sudo fio -

filename=/home/atguigu/test.log -direct=1 -iodepth 1 -thread -

rw=randrw -rwmixread=70 -ioengine=psync -bs=16k -size=2G -

numjobs=10 -runtime=60 -group_reporting -name=test_r_w -

ioscheduler=noop

Run status group 0 (all jobs):

READ: bw=220MiB/s (231MB/s), 220MiB/s-220MiB/s (231MB/s231MB/s), io=12.9GiB (13.9GB), run=60001-60001msec

WRITE: bw=94.6MiB/s (99.2MB/s), 94.6MiB/s-94.6MiB/s

(99.2MB/s-99.2MB/s), io=5674MiB (5950MB), run=60001-60001mse

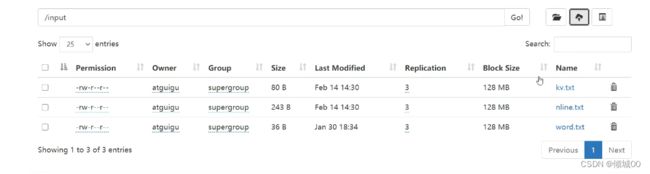

5.4 小文件归档

- 每个文件均按块存储,每个块的元数据存储在 NameNode 的内存中,因此 HDFS 存储

小文件会非常低效。因为大量的小文件会耗尽 NameNode 中的大部分内存。但注意,存储小

文件所需要的磁盘容量和数据块的大小无关。例如,一个 1MB 的文件设置为 128MB 的块

存储,实际使用的是 1MB 的磁盘空间,而不是 128MB。 - 2)解决存储小文件办法之一

HDFS 存档文件或 HAR 文件,是一个更高效的文件存档工具,它将文件存入 HDFS 块,

在减少 NameNode 内存使用的同时,允许对文件进行透明的访问。具体说来,HDFS 存档文

件对内还是一个一个独立文件,对 NameNode 而言却是一个整体,减少了 NameNode 的内

存

- 将input的所有文件归档成一起

- 把/input 目录里面的所有文件归档成一个叫 input.har 的归档文件,并把归档后文件存储

- 到/output 路径下。

hadoop archive -archiveName input.har -p /input /output

(3)查看归档

hadoop fs -ls /output/input.har

hadoop fs -ls har:///output/input.har

(4)解归档文件

hadoop fs -cp har:///output/input.har /

六:MapReduc的生产经验

6.1MapReduce生产跑的满的原因

- 1)计算机的性能

- CPU ,内存,磁盘速度,网络

- 2)

- 数据倾斜

- Map运行时间太长,导致Reduce等待过久

- 小文件太多

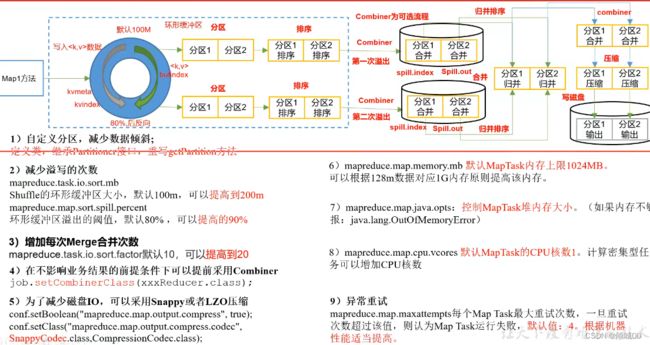

6.2 MapReduce调优

6.3 MapReduce的数据倾斜

- 数据频率倾斜------某一个区域的数量要大于其他的区域

- 数据大小倾斜------部分记录的大小要远远大于平均值

- 2)减少数据倾斜的办法:

- (1)检查是否空值过多导致数据倾斜

- 审查环境可以直接过滤掉空值,如果想保留空值那就自定义分区,将空值+随机数打散,在进行二次聚合

- (2)在Map阶段提前处理,最好现在Map阶段处理,如:Combiner,MapJoin

- (3)设置多个Reduce的个数

- .

七:Yarn的生产经验

八:Hadoop的综合调优

8.1. Hadoop 小文件优化方法

8.1.1 小文件的弊端

- HDFS 上每个文件都要在 NameNode 上创建对应的元数据,这个元数据的大小约为

150byte,这样当小文件比较多的时候,就会产生很多的元数据文件,一方面会大量占用

NameNode 的内存空间,另一方面就是元数据文件过多,使得寻址索引速度变慢。

小文件过多,在进行 MR 计算时,会生成过多切片,需要启动过多的 MapTask。每个

MapTask 处理的数据量小,导致 MapTask 的处理时间比启动时间还小,白白消耗资源。

8.1.2 小文件的处理办法

- 1)在数据采集的时候,就将小文件或小批数据合成大文件再上传 HDFS(数据源头)

- 2)Hadoop Archive(存储方向)

是一个高效的将小文件放入 HDFS 块中的文件存档工具,能够将多个小文件打包成一

个 HAR 文件,从而达到减少 NameNode 的内存使用 - 3)CombineTextInputFormat(计算方向)

CombineTextInputFormat 用于将多个小文件在切片过程中生成一个单独的切片或者少

量的切片。 - 4)开启 uber 模式,实现 JVM 重用(计算方向)

默认情况下,每个 Task 任务都需要启动一个 JVM 来运行,如果 Task 任务计算的数据

量很小,我们可以让同一个 Job 的多个 Task 运行在一个 JVM 中,不必为每个 Task 都开启

一个 JVM。

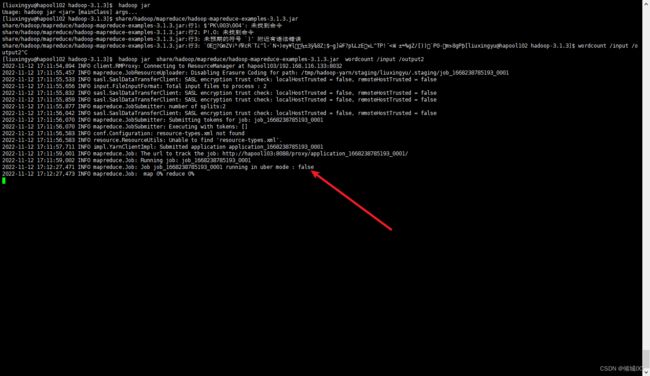

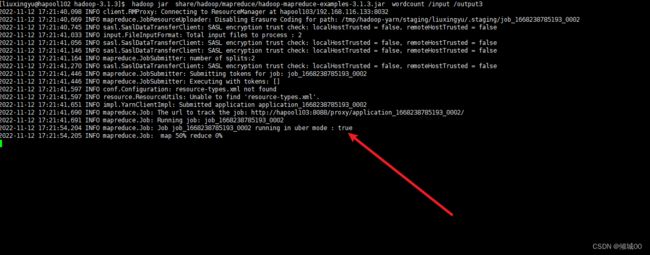

# 执行任务发现uber 默认是关闭的

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output2

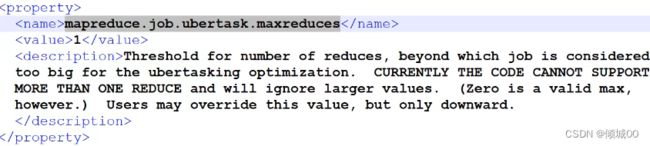

8.1.3开启uber 模式

(4)开启 uber 模式,在 mapred-site.xml 中添加如下配置

mapreduce.job.ubertask.enable

true

mapreduce.job.ubertask.maxmaps

9

mapreduce.job.ubertask.maxreduces

1

mapreduce.job.ubertask.maxbytes

- 看一下数值的默认值

- 默认是关闭的

- MapTask的个数, 可向下修改

- 最大Reduce的个数

- 最大输入数据量,默认是Block大小(128)

- 修改完数据进行分发,不用重启

- hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output3

- 重新执行

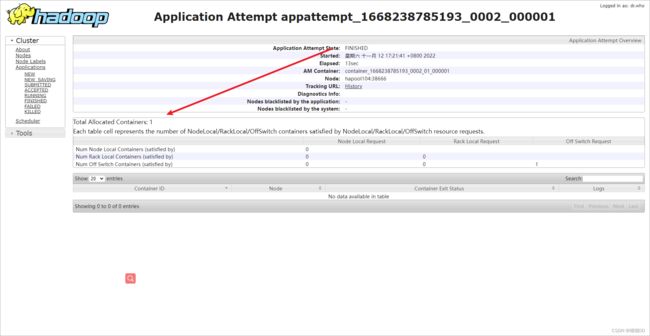

- 发现已经启动了

- 总共1个容器

- 为什么只有一个容器大家公用哪个一个容器,较少开关的时间,对小文件处理起来比较友好

8.2. 测试MapReduce的压测

九:企业开发案例

- 需求:从1个G的数据中,统计每个单词的出现的次数,服务器3台,每台配置4G内存,4核CPU,4线程

(1)需求:从 1G 数据中,统计每个单词出现次数。服务器 3 台,每台配置 4G 内存,

4 核 CPU,4 线程。

(2)需求分析:

1G / 128m = 8 个 MapTask;1 个 ReduceTask;1 个 mrAppMaster

平均每个节点运行 10 个 / 3 台 ≈ 3 个任务(4 3 3)

9.1 HDFS 参数调优

(1)修改:hadoop-env.sh

export HDFS_NAMENODE_OPTS="-Dhadoop.security.logger=INFO,RFAS -

Xmx1024m"

export HDFS_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS

-Xmx1024m"

(2)修改 hdfs-site.xml

dfs.namenode.handler.count

21

(3)修改 core-site.xml

fs.trash.interval

60

9.2 MapReduce

(1)修改 mapred-site.xml

mapreduce.task.io.sort.mb

100

mapreduce.map.sort.spill.percent

0.80

mapreduce.task.io.sort.factor

10

mapreduce.map.memory.mb

-1

The amount of memory to request from the

scheduler for each map task. If this is not specified or is

non-positive, it is inferred from mapreduce.map.java.opts and

mapreduce.job.heap.memory-mb.ratio. If java-opts are also not

specified, we set it to 1024.

mapreduce.map.cpu.vcores

1

mapreduce.map.maxattempts

4

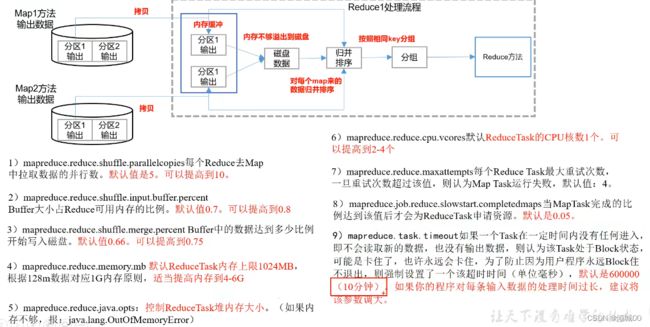

mapreduce.reduce.shuffle.parallelcopies

5

mapreduce.reduce.shuffle.input.buffer.percent

0.70

mapreduce.reduce.shuffle.merge.percent

0.66

mapreduce.reduce.memory.mb

-1

The amount of memory to request from the

scheduler for each reduce task. If this is not specified or

is non-positive, it is inferred

from mapreduce.reduce.java.opts and

mapreduce.job.heap.memory-mb.ratio.

If java-opts are also not specified, we set it to 1024.

mapreduce.reduce.cpu.vcores

2

mapreduce.reduce.maxattempts

4

mapreduce.job.reduce.slowstart.completedmaps

0.05

mapreduce.task.timeout

600000

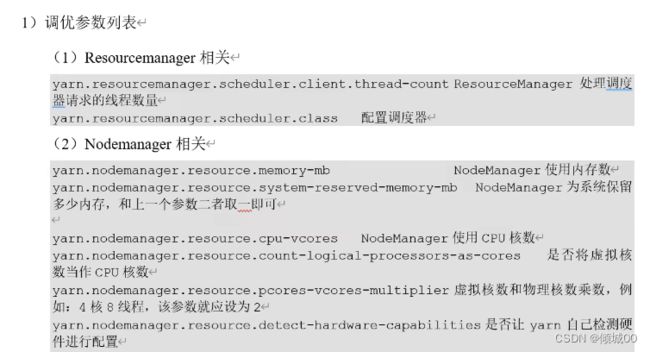

9 .3 Yarn

(1)修改 yarn-site.xml 配置参数如下:

The class to use as the resource scheduler.

yarn.resourcemanager.scheduler.class

org.apache.hadoop.yarn.server.resourcemanager.scheduler.capaci

ty.CapacityScheduler

Number of threads to handle scheduler

interface.

yarn.resourcemanager.scheduler.client.thread-count

8

Enable auto-detection of node capabilities such as

memory and CPU.

yarn.nodemanager.resource.detect-hardware-capabilities

false

Flag to determine if logical processors(such as

hyperthreads) should be counted as cores. Only applicable on Linux

when yarn.nodemanager.resource.cpu-vcores is set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true.

yarn.nodemanager.resource.count-logical-processors-ascores

false

Multiplier to determine how to convert phyiscal cores to

vcores. This value is used if yarn.nodemanager.resource.cpu-vcores

is set to -1(which implies auto-calculate vcores) and

yarn.nodemanager.resource.detect-hardware-capabilities is set to true.

The number of vcores will be calculated as number of CPUs * multiplier.

yarn.nodemanager.resource.pcores-vcores-multiplier

1.0

Amount of physical memory, in MB, that can be allocated

for containers. If set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true, it is

automatically calculated(in case of Windows and Linux).

In other cases, the default is 8192MB.

yarn.nodemanager.resource.memory-mb

4096

Number of vcores that can be allocated

for containers. This is used by the RM scheduler when allocating

resources for containers. This is not used to limit the number of

CPUs used by YARN containers. If it is set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true, it is

automatically determined from the hardware in case of Windows and Linux.

In other cases, number of vcores is 8 by default.

yarn.nodemanager.resource.cpu-vcores

4

The minimum allocation for every container request at the

RM in MBs. Memory requests lower than this will be set to the value of

this property. Additionally, a node manager that is configured to have

less memory than this value will be shut down by the resource manager.

yarn.scheduler.minimum-allocation-mb

1024

The maximum allocation for every container request at the

RM in MBs. Memory requests higher than this will throw an

InvalidResourceRequestException.

yarn.scheduler.maximum-allocation-mb

2048

The minimum allocation for every container request at the

RM in terms of virtual CPU cores. Requests lower than this will be set to

the value of this property. Additionally, a node manager that is configured

to have fewer virtual cores than this value will be shut down by the

resource manager.

yarn.scheduler.minimum-allocation-vcores

1

The maximum allocation for every container request at the

RM in terms of virtual CPU cores. Requests higher than this will throw an

InvalidResourceRequestException.

yarn.scheduler.maximum-allocation-vcores

2

Whether virtual memory limits will be enforced for

containers.

yarn.nodemanager.vmem-check-enabled

false

Ratio between virtual memory to physical memory when

setting memory limits for containers. Container allocations are

expressed in terms of physical memory, and virtual memory usage is

allowed to exceed this allocation by this ratio.

yarn.nodemanager.vmem-pmem-ratio

2.1