TFS (Taobao File System): Source-Level Architecture Analysis and Implementation of the Core Storage Engine

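Contents

- Background

- Design Overview

- Project Fundamentals

- File System Interface

- Sectors

- File Structure

- About Inodes

- Why Taobao Does Not Store Small Files Directly

- Why doesn't Taobao store its massive volume of small data as ordinary files?

- Design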

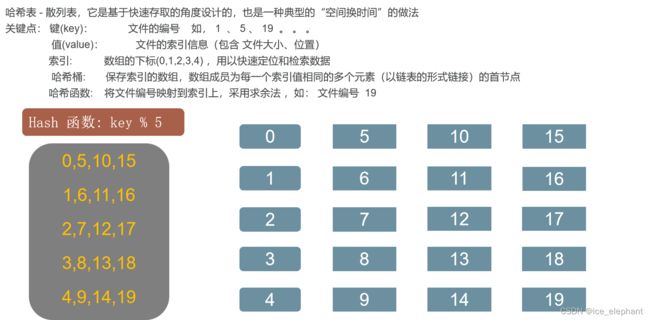

- Key Data Structure: Hash Table

- Code Log

- mmap_file.h

- mmap_file.cpp

- file_op.h

- main_mmap_op_file.cpp

- index_handle.cpp

- blockwritetest.cpp

- Summary

Background

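According to Taobao's 2016 figures, Taobao had more than 9 million sellers and over a billion products. Each product comes with a large number of images and descriptive text (about 15 KB on average). A rough estimate puts the storage required at more than 1 PB; with 1 TB disks, that alone would take at least 1024 disks (1 PB = 1024 TB).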

So how should such a volume of data be stored? As ordinary individual files, or on a single server? Clearly neither is feasible.

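To store massive amounts of unstructured data, Taobao designed a distributed file system called TFS. It is built on clusters of ordinary Linux machines and provides highly reliable, highly concurrent storage access to external clients.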

Design Overview

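Data files are stored as block files (typically 64 MB per block), referred to below simply as "blocks". Each block has a unique integer ID, and the storage space a block will use is pre-allocated and initialized before the block is put into service.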
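Reserving a block's space up front can be sketched with the POSIX call `posix_fallocate`. This is a minimal sketch assuming a Linux/POSIX system; the helpers `preallocate_block` and `file_size` are hypothetical, and TFS itself may pre-allocate differently (for example with `ftruncate` plus explicit zero-filling).

```cpp
#include <fcntl.h>     // open, posix_fallocate
#include <sys/stat.h>  // stat
#include <unistd.h>    // close
#include <cerrno>
#include <cstdint>
#include <string>

// Hypothetical helper: create (or open) a block file and reserve `size` bytes
// of disk space for it up front. If the file was shorter, it is extended and
// the new range reads back as zeros.
// Returns 0 on success, or a negative errno value on failure.
int preallocate_block(const std::string& path, int64_t size) {
    int fd = ::open(path.c_str(), O_RDWR | O_CREAT, 0644);
    if (fd < 0) return -errno;

    // posix_fallocate returns the error number directly; it does not set errno.
    int rc = ::posix_fallocate(fd, 0, static_cast<off_t>(size));
    ::close(fd);
    return rc == 0 ? 0 : -rc;
}

// Returns the file's size in bytes, or -1 if stat fails.
int64_t file_size(const std::string& path) {
    struct stat st;
    if (::stat(path.c_str(), &st) != 0) return -1;
    return static_cast<int64_t>(st.st_size);
}
```

For a 64 MB block the call would be `preallocate_block(path, 64LL * 1024 * 1024)`. Reserving the whole block at creation time means later writes never need to grow the file, and on extent-based file systems such as ext4 the space is usually allocated in large contiguous runs.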

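Each block consists of one index file, one main block file, and several extension blocks. "Small files" are stored mainly in the main block; the extension blocks hold overflow data.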

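Each index file stores the information about its block plus the index entries for the small files. At service startup the index file is memory-mapped (mmap) into memory, which greatly speeds up file lookup. The small-file index is implemented inside the index file as a hash table with chained (linked-list) buckets.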
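Mapping an index file into memory takes only a few POSIX calls. The sketch below, assuming Linux/POSIX, creates a file, maps it with `mmap(MAP_SHARED)`, writes a header through the returned pointer, and flushes it with `msync`. `DemoHeader` and `init_index_via_mmap` are hypothetical and much simpler than the project's `IndexHeader`.

```cpp
#include <fcntl.h>     // open
#include <sys/mman.h>  // mmap, msync, munmap
#include <unistd.h>    // ftruncate, close, pread
#include <cstdint>
#include <cstring>     // memcpy
#include <string>

// Hypothetical, heavily simplified header; the project's IndexHeader has more fields.
struct DemoHeader {
    int32_t block_id;
    int32_t bucket_size;
    int32_t data_offset;
};

// Creates (or truncates) `path` to `size` bytes, maps it read/write with MAP_SHARED,
// writes `hdr` at offset 0 through the mapped pointer, and flushes it with msync.
// Returns true on success.
bool init_index_via_mmap(const std::string& path, size_t size, const DemoHeader& hdr) {
    if (size < sizeof(DemoHeader)) return false;
    int fd = ::open(path.c_str(), O_RDWR | O_CREAT | O_TRUNC, 0644);
    if (fd < 0) return false;

    // The file must cover the whole mapping: touching mapped pages past EOF raises SIGBUS.
    if (::ftruncate(fd, static_cast<off_t>(size)) != 0) {
        ::close(fd);
        return false;
    }

    void* addr = ::mmap(nullptr, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    ::close(fd);  // the mapping stays valid after the descriptor is closed
    if (addr == MAP_FAILED) return false;

    std::memcpy(addr, &hdr, sizeof(hdr));         // an ordinary memory write, no write() call
    bool ok = ::msync(addr, size, MS_SYNC) == 0;  // force dirty pages back to the file
    ok = (::munmap(addr, size) == 0) && ok;
    return ok;
}
```

Once the index is mapped, looking up or updating an entry is an ordinary memory access; the kernel writes dirty pages back to the file on its own, and `msync` forces that write-back when durability matters.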

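Each file has a file ID. File IDs start at 1 and increase monotonically, and the file ID doubles as the key of the hash lookup that locates a small file's offset in the main block and extension blocks. Combining the block ID and the file ID with a certain encoding algorithm yields the small file's file name.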

Project Fundamentals

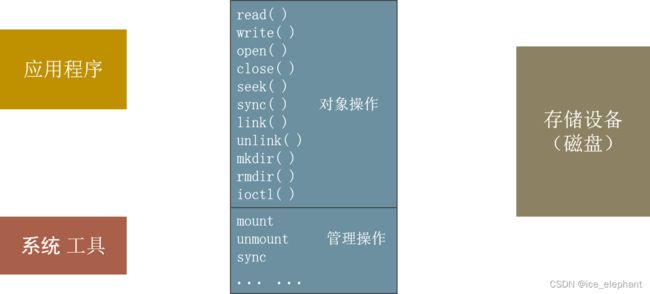

File System Interface

A file system organizes data into files and directories, provides a file-based access interface, and controls access through permissions.

Sectors

The smallest unit of disk reads and writes is the sector; a sector is typically 512 bytes (0.5 KB).

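The basic unit of a file is the block, the smallest unit of file access. The most common block size is 4 KB, i.e., eight consecutive sectors (8 × 512 B) form one block.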

On Linux, the `stat` command displays a file's metadata (size, blocks allocated, inode number, timestamps, and so on).
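The same information `stat` prints is available to a program through the `stat(2)` system call. A minimal sketch, assuming Linux (where `st_blocks` counts 512-byte units); `FileMeta` and `get_meta` are hypothetical helpers:

```cpp
#include <sys/stat.h>  // stat, struct stat
#include <cstdint>
#include <string>

// A few stat fields relevant to this section.
struct FileMeta {
    uint64_t inode;      // st_ino: inode number (the number `ls -i` prints)
    int64_t size;        // st_size: logical file size in bytes
    int64_t blocks_512;  // st_blocks: allocated space, in 512-byte units on Linux
    int64_t io_block;    // st_blksize: preferred I/O block size (often 4096)
};

// Fills `meta` from stat(2). Returns false if stat fails (e.g. the path does not exist).
bool get_meta(const std::string& path, FileMeta& meta) {
    struct stat st;
    if (::stat(path.c_str(), &st) != 0) return false;
    meta.inode = static_cast<uint64_t>(st.st_ino);
    meta.size = static_cast<int64_t>(st.st_size);
    meta.blocks_512 = static_cast<int64_t>(st.st_blocks);
    meta.io_block = static_cast<int64_t>(st.st_blksize);
    return true;
}
```

For a tiny file, `st_size` reports the logical length while `st_blocks` typically reflects a whole 4 KB file system block (8 × 512 B), plus the file consumes one inode: the per-file overhead that adds up when storing billions of small files.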

File Structure

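- Directory entry area: stores the list of files in each directory

- Data area: stores the contents of files

- Inode area (inode table): stores the information held in each inode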

About Inodes

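An inode ("index node") stores a file's metadata, such as its owner, timestamps, and size. Every inode has a number, and the operating system uses inode numbers to tell files apart. Run `ls -i` to see a file's inode number.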

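Inode size: typically 128 or 256 bytes. The total number of inodes is fixed when the file system is formatted, for example one inode per 1 KB or 2 KB of disk space. On a 1 GB disk with 128-byte inodes and one inode per 1 KB, there are 1 GB / 1 KB = 1,048,576 inodes, so the inode table alone occupies 1,048,576 × 128 B = 128 MB, or 12.5% of the disk.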

Why Taobao Does Not Store Small Files Directly

Why doesn't Taobao store its massive volume of small data as ordinary files?

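- Every file needs its own inode, so with billions of small files the inodes take up a large amount of disk space, and the metadata becomes too large to stay in the memory cache, which reduces the effectiveness of caching.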

Design


Key Data Structure: Hash Table

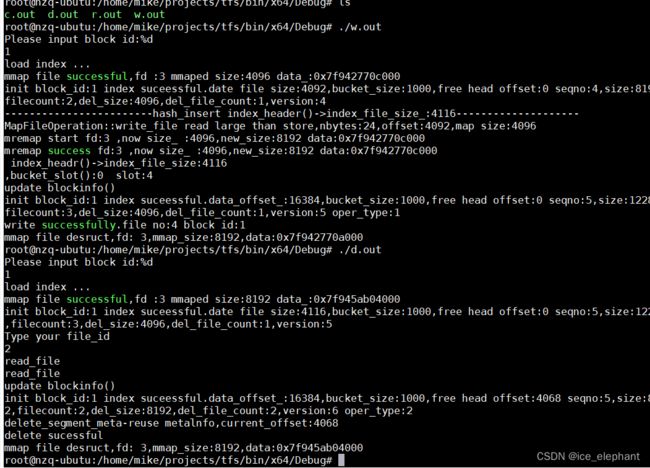
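The hash chain in the figure can be sketched on a plain byte buffer that stands in for the memory-mapped index file: an array of bucket slots followed by fixed-size nodes, linked by byte offsets rather than pointers. This is a simplified sketch, not the project's `IndexHandle`; `FlatHashIndex` and `MetaNode` are hypothetical, the hash is a plain `key % bucket_count`, and new nodes are pushed at the head of their chain (the project's `hash_insert` instead links after a `previous` offset).

```cpp
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical node layout, loosely modeled on the meta records in the index file.
struct MetaNode {
    uint64_t key;         // file ID
    int32_t data_offset;  // where the file's bytes start in the main block
    int32_t size;         // file length in bytes
    int32_t next;         // byte offset of the next node in the chain, 0 = end
};

// Layout of the buffer: [bucket slots: int32 x bucket_count][node][node]...
// memcpy is used for every access so unaligned offsets are never dereferenced.
class FlatHashIndex {
public:
    explicit FlatHashIndex(int32_t bucket_count)
        : bucket_count_(bucket_count),
          buf_(sizeof(int32_t) * static_cast<size_t>(bucket_count), 0) {}

    // Inserts a new entry at the head of its bucket chain.
    // Returns false if the key is already present.
    bool insert(uint64_t key, int32_t data_offset, int32_t size) {
        MetaNode existing;
        if (find(key, existing)) return false;
        const size_t slot_off = slot_offset(key);
        MetaNode node{key, data_offset, size, read_i32(slot_off)};  // old head becomes next
        const int32_t node_off = static_cast<int32_t>(buf_.size());
        buf_.resize(buf_.size() + sizeof(MetaNode));                // append the node
        std::memcpy(&buf_[node_off], &node, sizeof(node));
        write_i32(slot_off, node_off);                              // slot points at new head
        return true;
    }

    // Walks the key's bucket chain. Returns true and fills `out` on a hit.
    bool find(uint64_t key, MetaNode& out) const {
        int32_t off = read_i32(slot_offset(key));
        while (off != 0) {
            MetaNode node;
            std::memcpy(&node, &buf_[off], sizeof(node));
            if (node.key == key) { out = node; return true; }
            off = node.next;
        }
        return false;
    }

    size_t bytes_used() const { return buf_.size(); }

private:
    size_t slot_offset(uint64_t key) const {
        return sizeof(int32_t) * static_cast<size_t>(key % static_cast<uint64_t>(bucket_count_));
    }
    int32_t read_i32(size_t off) const { int32_t v; std::memcpy(&v, &buf_[off], sizeof(v)); return v; }
    void write_i32(size_t off, int32_t v) { std::memcpy(&buf_[off], &v, sizeof(v)); }

    int32_t bucket_count_;
    std::vector<char> buf_;  // stands in for the mmap'ed index file
};
```

Offset 0 can serve as the end-of-chain marker because the bucket slots occupy the start of the buffer, so no node ever lives there. Linking by offsets also keeps the structure valid if the mapping moves to a different address after a remap.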

Code Log

File mapping class

mmap_file.h

```cpp
#ifndef MY_LARGE_FILE_H
#define MY_LARGE_FILE_H

#include "Common.h"

#define DEBUG 1

// Code is organized in layers (nested namespaces)
namespace xiaozhu {
namespace largefile {

struct MMapOption
{
    int32_t max_mmap_size_;   // maximum size of the mapping
    int32_t first_mmap_size_; // size of the initial mapping
    int32_t per_mmap_size_;   // amount the mapping grows by on each remap
};

class MMapfile {
public:
    MMapfile();
    explicit MMapfile(const int fd); // explicit: no implicit conversion from int
    MMapfile(const MMapOption& mmap_option, const int fd);
    ~MMapfile();

    // Synchronize: flush the mapped memory to disk immediately
    bool sync_file();
    bool map_file(const bool write = false); // map the file into memory and set the access permissions
    void* get_data() const;                  // start address of the mapped region
    int32_t get_size() const;                // size of the mapped region
    bool munmap_file();                      // remove the mapping
    bool remap_file();                       // remap (grow the mapping)

private:
    bool ensure_file_size(const int32_t size); // grow the file to at least `size` bytes

private:
    int32_t size_;
    int fd_;
    void* data_;
    struct MMapOption mmap_file_option_;
};

}
}

#endif
```
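The implementation file `mmap_file.cpp` is only partially shown in this post. As one possible way to fill in the `map_file` / `remap_file` / `ensure_file_size` / `sync_file` methods declared in `MMapfile`, here is a sketch assuming Linux, where `mremap` is available. `GrowableMap` and `GrowOption` are hypothetical names; this is not the author's implementation. The caller owns and closes the file descriptor.

```cpp
#ifndef _GNU_SOURCE
#define _GNU_SOURCE  // mremap and MREMAP_MAYMOVE are Linux (glibc) extensions
#endif
#include <fcntl.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>
#include <cstdint>

// Same three knobs as MMapOption: upper bound, initial size, growth step.
struct GrowOption {
    int32_t max_size;
    int32_t first_size;
    int32_t per_size;
};

// Hypothetical stand-in for MMapfile.
class GrowableMap {
public:
    GrowableMap(int fd, GrowOption opt) : fd_(fd), opt_(opt) {}
    ~GrowableMap() { unmap(); }
    GrowableMap(const GrowableMap&) = delete;  // owns the mapping: no copies
    GrowableMap& operator=(const GrowableMap&) = delete;

    // Like map_file(true): map the first first_size bytes read/write.
    bool map() {
        if (data_ != nullptr) return true;
        if (!ensure_file_size(opt_.first_size)) return false;
        void* p = ::mmap(nullptr, static_cast<size_t>(opt_.first_size),
                         PROT_READ | PROT_WRITE, MAP_SHARED, fd_, 0);
        if (p == MAP_FAILED) return false;
        data_ = p;
        size_ = opt_.first_size;
        return true;
    }

    // Like remap_file(): grow by per_size bytes, refusing to exceed max_size.
    bool remap() {
        if (data_ == nullptr) return false;
        const int32_t new_size = size_ + opt_.per_size;
        if (new_size > opt_.max_size) return false;
        if (!ensure_file_size(new_size)) return false;
        // MREMAP_MAYMOVE lets the kernel move the mapping, so raw pointers into the
        // old region become invalid; callers should keep offsets, not pointers.
        void* p = ::mremap(data_, static_cast<size_t>(size_),
                           static_cast<size_t>(new_size), MREMAP_MAYMOVE);
        if (p == MAP_FAILED) return false;
        data_ = p;
        size_ = new_size;
        return true;
    }

    // Like sync_file(): write dirty pages back to the file synchronously.
    bool sync() {
        return data_ != nullptr && ::msync(data_, static_cast<size_t>(size_), MS_SYNC) == 0;
    }

    void unmap() {
        if (data_ != nullptr) {
            ::munmap(data_, static_cast<size_t>(size_));
            data_ = nullptr;
            size_ = 0;
        }
    }

    void* data() const { return data_; }
    int32_t size() const { return size_; }

private:
    // Like ensure_file_size(): extend the file first, because touching mapped
    // pages beyond end-of-file raises SIGBUS.
    bool ensure_file_size(int32_t size) {
        struct stat st;
        if (::fstat(fd_, &st) != 0) return false;
        if (st.st_size < size) return ::ftruncate(fd_, size) == 0;
        return true;
    }

    int fd_;
    GrowOption opt_;
    void* data_ = nullptr;
    int32_t size_ = 0;
};
```

Growing in `per_size` steps up to `max_size` mirrors the intent of `MMapOption`: start with a small mapping and pay for more address space only as the index fills up.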

mmap_file.cpp

```cpp
#include "mmap_file.h"
#include
```

File operation class

file_op.h

```cpp
#ifndef FILE_OP_H
#define FILE_OP_H

#include "Common.h"

namespace xiaozhu {
namespace largefile {

class FileOperation {
public:
    FileOperation(const std::string& file_Name, const int open_flags = O_RDWR | O_LARGEFILE);
    ~FileOperation();

    int open_file();
    void close_file();
    int flush_file(); // write the file to disk immediately (a single line here once caused a nasty bug)

    // Positioned reads/writes with retries
    int pread_file(char* buf, const int32_t nbytes, int64_t offset);
    int pwrite_file(char* buf, const int32_t nbytes, int64_t offset);
    int write_file(char* buf, const int32_t nbytes);
    //int read_file(char* buf, const int32_t nbytes);

    int64_t get_file_size();
    int unlink_file(); // delete the file
    int ftruncate_file(const int64_t length);
    int seek_file(const int64_t offset);
    int get_fd() { return fd_; }

protected:
    int fd_;
    char* filename_;
    int open_flags_;

protected:
    static const mode_t OPEN_MODE = 0644;
    static const int MAX_DISK_TIMES = 5; // maximum number of disk I/O attempts

protected:
    int check_file();
};

}
}

#endif
```

file_op.cpp

```cpp
#include "file_op.h"

#include <cerrno>      // errno, EINTR, EAGAIN, EBADF
#include <cstdlib>     // free
#include <cstring>     // strdup
#include <fcntl.h>     // open
#include <sys/stat.h>  // fstat
#include <unistd.h>    // close, pread64, pwrite64, write, fsync, ftruncate, lseek, unlink

namespace xiaozhu {
namespace largefile {

FileOperation::FileOperation(const std::string& file_Name, const int open_flags)
    : fd_(-1), open_flags_(open_flags)
{
    filename_ = strdup(file_Name.c_str()); // keep a private copy of the file name
}

FileOperation::~FileOperation()
{
    if (fd_ >= 0) {
        ::close(fd_);
        fd_ = -1;
    }
    if (filename_ != nullptr) {
        free(filename_);
        filename_ = nullptr;
    }
}

int FileOperation::open_file() {
    if (fd_ >= 0) {
        ::close(fd_);
        fd_ = -1;
    }
    fd_ = ::open(filename_, open_flags_, OPEN_MODE);
    return fd_;
}

void FileOperation::close_file() {
    if (fd_ < 0) {
        return;
    }
    ::close(fd_);
    fd_ = -1;
}

// Make sure a descriptor is open, opening the file lazily if necessary
int FileOperation::check_file()
{
    if (fd_ < 0) {
        open_file();
    }
    return fd_;
}

int64_t FileOperation::get_file_size() {
    int fd = check_file();
    if (fd < 0) {
        return -1;
    }
    struct stat statbuf;
    if (fstat(fd, &statbuf) != 0) {
        return -1;
    }
    return statbuf.st_size;
}

int FileOperation::ftruncate_file(const int64_t length) {
    int fd = check_file();
    if (fd < 0) {
        return fd;
    }
    return ftruncate(fd, length);
}

int FileOperation::seek_file(const int64_t offset) {
    int fd = check_file();
    if (fd < 0) {
        return fd;
    }
    return lseek(fd, offset, SEEK_SET);
}

int FileOperation::flush_file() {
    if (open_flags_ & O_SYNC) {
        // Opened with O_SYNC: every write is already synchronous, nothing to flush
        return 0;
    }
    int fd = check_file();
    if (fd < 0) {
        return fd;
    }
    return fsync(fd); // flush kernel buffers to disk
}

// Read nbytes bytes starting at offset, retrying up to MAX_DISK_TIMES attempts
int FileOperation::pread_file(char* buf, const int32_t nbytes, int64_t offset)
{
    if (nbytes < 0) return 0;

    int32_t need_read = nbytes;   // bytes still to read
    int64_t cur_offset = offset;  // 64-bit so large offsets are not truncated
    char* tmp_buf = buf;
    int i = 0;

    while (need_read > 0) {
        if (i >= MAX_DISK_TIMES) {
            break;
        }
        ++i; // count every attempt, otherwise a persistent error loops forever

        if (check_file() < 0) {
            return -errno;
        }

        ssize_t readlen = pread64(fd_, tmp_buf, need_read, cur_offset);
        if (readlen < 0) {
            readlen = -errno; // keep the error as a negative errno
            if (-readlen == EINTR || -readlen == EAGAIN) {
                continue; // interrupted or temporarily unavailable: retry
            }
            else if (-readlen == EBADF) {
                fd_ = -1; // stale descriptor: reopen on the next attempt
                continue;
            }
            else {
                return static_cast<int>(readlen); // any other error goes back to the caller
            }
        }
        else if (readlen == 0) {
            break; // end of file
        }
        else {
            need_read -= readlen;  // bytes still to read
            tmp_buf += readlen;    // advance the buffer pointer
            cur_offset += readlen; // advance the file offset
        }
    }

    if (need_read != 0) {
        return xiaozhu::largefile::EXIT_DISK_OPER_INCOMPLETE;
    }
    return xiaozhu::largefile::TFS_SUCCESS;
}

// Write nbytes bytes starting at offset, retrying up to MAX_DISK_TIMES attempts
int FileOperation::pwrite_file(char* buf, const int32_t nbytes, int64_t offset)
{
    if (nbytes < 0) return 0;

    int32_t need_write = nbytes;  // bytes still to write
    int64_t cur_offset = offset;
    char* tmp_buf = buf;
    int i = 0;

    while (need_write > 0) {
        if (i >= MAX_DISK_TIMES) {
            break;
        }
        ++i;

        if (check_file() < 0) {
            return -errno;
        }

        ssize_t writelen = ::pwrite64(fd_, tmp_buf, need_write, cur_offset);
        if (writelen < 0) {
            writelen = -errno;
            if (-writelen == EINTR || -writelen == EAGAIN) {
                continue;
            }
            else if (-writelen == EBADF) {
                fd_ = -1;
                continue;
            }
            else {
                return static_cast<int>(writelen);
            }
        }
        else if (writelen == 0) {
            break;
        }
        else {
            need_write -= writelen;  // bytes still to write
            tmp_buf += writelen;     // advance the buffer pointer
            cur_offset += writelen;  // advance the file offset
        }
    }

    if (need_write != 0) {
        return xiaozhu::largefile::EXIT_DISK_OPER_INCOMPLETE;
    }
    return xiaozhu::largefile::TFS_SUCCESS;
}

// Write at the current file position (no explicit offset)
int FileOperation::write_file(char* buf, const int32_t nbytes)
{
    int32_t needwrite = nbytes;
    char* tmp_buf = buf;
    int i = 0;

    while (needwrite > 0) {
        if (i >= MAX_DISK_TIMES) {
            break;
        }
        ++i;

        if (check_file() < 0) {
            return -errno;
        }

        ssize_t write_len = ::write(fd_, tmp_buf, needwrite);
        if (write_len < 0) {
            write_len = -errno;
            if (-write_len == EINTR || -write_len == EAGAIN) {
                continue;
            }
            else if (-write_len == EBADF) {
                fd_ = -1;
                return static_cast<int>(write_len);
            }
            else {
                continue;
            }
        }

        needwrite -= write_len;
        tmp_buf += write_len; // advance past the bytes already written (forgetting this was a bug)
    }

    if (needwrite != 0) {
        return xiaozhu::largefile::EXIT_DISK_OPER_INCOMPLETE;
    }
    return xiaozhu::largefile::TFS_SUCCESS;
}

int FileOperation::unlink_file() {
    close_file();
    return unlink(filename_);
}

}
}
```

Unit test

main_mmap_op_file.cpp

```cpp
#include "mmap_file_op.h"

#include <cstdio>
#include <cstdlib>
#include <cstring>

using namespace xiaozhu;
using namespace largefile;

largefile::MMapOption map_option = { 1024 * 1000, 4096, 4096 }; // max, first, per-remap sizes

int main(void) {
    const char* file_Name = "./test.txt";
    char write_buffer[1024 + 1];
    char read_buffer[1024 + 1];

    MMapFileOperation* mpt = new MMapFileOperation(file_Name);

    // Open the file before mapping it, so the mapping is built on the descriptor that stays open
    int fd = mpt->open_file();
    if (fd < 0) {
        fprintf(stderr, "file is not open!\n");
        delete mpt;
        exit(-1);
    }

    int ret = mpt->mmap_file(map_option);
    if (ret != largefile::TFS_SUCCESS) {
        fprintf(stderr, "largefile::TFS_ERROR mmap_file failed\n");
        delete mpt;
        exit(-1);
    }

    memset(write_buffer, '4', 1024);
    write_buffer[1024] = '\0';

    // Write 1024 bytes at offset 0
    ret = mpt->pwrite_file(write_buffer, 1024, 0);
    if (ret != largefile::TFS_SUCCESS) {
        fprintf(stderr, "largefile::TFS_ERROR pwrite_file failed\n");
        delete mpt;
        exit(-1);
    }

    // Read them back
    ret = mpt->pread_file(read_buffer, 1024, 0);
    if (ret != largefile::TFS_SUCCESS) {
        fprintf(stderr, "largefile::TFS_ERROR pread_file failed\n");
        delete mpt;
        exit(-1);
    }
    read_buffer[1024] = '\0';
    printf("read from buffer:%s\n", read_buffer);

    ret = mpt->flush_file();
    if (ret != largefile::TFS_SUCCESS) {
        fprintf(stderr, "largefile::TFS_ERROR flush_file failed\n");
        delete mpt;
        exit(-1);
    }

    mpt->munmap_file();
    mpt->close_file();
    delete mpt;
    return 0;
}
```

Test results:

Fourth unit test

main_index_init_test.cpp

```cpp
#include "indexHandle.h"
#include "Common.h"
#include "file_op.h"
#include
```

Header file after adding the delete and write modules: index_handle.h


index_handle.cpp

Writing a block: `int IndexHandle::write_segment_meta(const uint64_t key, Meltainfo& meta)`

```cpp
#ifndef HANDLE_INDEX_H
#define HANDLE_INDEX_H

#include "Common.h"
#include "mmap_file_op.h"

namespace xiaozhu {
namespace largefile {

struct IndexHeader {
public:
    IndexHeader()
    {
        memset(this, 0, sizeof(IndexHeader));
    }

    BlockInfo block_info_;
    int32_t bucket_size_;      // number of hash buckets
    int32_t data_offset_;      // next write position in the main block; also the size of the stored data
    int32_t index_file_size_;  // index header + everything after it (trading space for time)
    int32_t free_head_offset_; // head of the list of reusable (deleted) index nodes
};

class IndexHandle {
public:
    IndexHandle(const std::string& base_path, const uint32_t main_block_id);
    ~IndexHandle();

    // bucket_size: number of hash buckets
    int create(const uint32_t logic_block_id, const int32_t bucket_size, const MMapOption map_option);
    int load(const uint32_t logic_block_id, const int32_t bucket_size, const MMapOption map_option);

    // remove: unlink the index file
    int remove(const uint32_t logic_block_id);
    int flush();

    // Advance the main block's write offset after file_size bytes have been written
    void commit_block_offset_data(const int file_size) const {
        reinterpret_cast<IndexHeader*>(file_op_->get_map_data())->data_offset_ += file_size;
    }

    int updata_block_info(const OperType oper_type, const uint32_t modify_size);

    IndexHeader* index_header() {
        return reinterpret_cast<IndexHeader*>(file_op_->get_map_data());
    }

    // block_info_ is the first member of IndexHeader, so it starts at the beginning of the mapping
    BlockInfo* block_info() {
        return reinterpret_cast<BlockInfo*>(file_op_->get_map_data());
    }

    int32_t bucket_sizes() const {
        return reinterpret_cast<IndexHeader*>(file_op_->get_map_data())->bucket_size_; // same as bucket_size()
    }

    int32_t get_block_data_offset() const {
        return reinterpret_cast<IndexHeader*>(file_op_->get_map_data())->data_offset_;
    }

    int32_t free_head_offset() {
        return reinterpret_cast<IndexHeader*>(file_op_->get_map_data())->free_head_offset_;
    }

    // The bucket slot array follows immediately after the IndexHeader
    int32_t* bucket_slot() {
        return reinterpret_cast<int32_t*>(reinterpret_cast<char*>(file_op_->get_map_data()) + sizeof(IndexHeader));
    }

    int write_segment_meta(const uint64_t key, Meltainfo& meta);
    int read_sengment_meta(const uint64_t key, Meltainfo& meta);
    int32_t delete_segment_meta(const uint64_t key);
    int hash_find(const uint64_t key, int32_t& current_offset, int32_t& previous_offset);
    int32_t hash_insert(const uint64_t key, int32_t previous, Meltainfo& meta);

private:
    MMapFileOperation* file_op_;
    bool is_load_;

    bool hash_compare(int64_t left, int64_t right);
};

}
}

#endif
```
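Deletion with reusable nodes, which the `free_head_offset_` field supports, can be sketched as a free list threaded through the same `next` field the hash chains use: a deleted node is unlinked from its bucket (using the current/previous offsets that a `hash_find` reports) and pushed onto the free list, and the next insert pops it instead of growing the file. This is a self-contained sketch on a byte buffer standing in for the mapped index; `ReusingIndex` and `Node` are hypothetical, and the modulo hash and head insertion are simplifying assumptions.

```cpp
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical node record; `next` links nodes in a bucket chain OR in the free list.
struct Node {
    uint64_t key;
    int32_t data_offset;
    int32_t size;
    int32_t next;  // byte offset of the next node, 0 = end of list
};

class ReusingIndex {
public:
    explicit ReusingIndex(int32_t buckets)
        : buckets_(buckets), buf_(sizeof(int32_t) * static_cast<size_t>(buckets), 0) {}

    bool insert(uint64_t key, int32_t data_offset, int32_t size) {
        int32_t cur = 0, prev = 0;
        if (hash_find(key, cur, prev)) return false;  // key already present
        int32_t off;
        if (free_head_ != 0) {                        // reuse a deleted node
            off = free_head_;
            free_head_ = load(off).next;
            ++reused_;
        } else {                                      // append a new node
            off = static_cast<int32_t>(buf_.size());
            buf_.resize(buf_.size() + sizeof(Node));
        }
        const size_t slot = slot_of(key);
        store(off, Node{key, data_offset, size, load_i32(slot)});  // push at bucket head
        store_i32(slot, off);
        return true;
    }

    bool remove(uint64_t key) {
        int32_t cur = 0, prev = 0;
        if (!hash_find(key, cur, prev)) return false;
        Node n = load(cur);
        if (prev == 0) {
            store_i32(slot_of(key), n.next);  // node was the bucket head
        } else {
            Node p = load(prev);              // bypass the node in its chain
            p.next = n.next;
            store(prev, p);
        }
        n.next = free_head_;                  // push the node onto the free list
        store(cur, n);
        free_head_ = cur;
        return true;
    }

    bool contains(uint64_t key) const { int32_t c = 0, p = 0; return hash_find(key, c, p); }
    size_t bytes_used() const { return buf_.size(); }
    int reused() const { return reused_; }

private:
    // Reports the node's offset and its predecessor's offset (0 if it is the head).
    bool hash_find(uint64_t key, int32_t& cur, int32_t& prev) const {
        prev = 0;
        cur = load_i32(slot_of(key));
        while (cur != 0) {
            Node n = load(cur);
            if (n.key == key) return true;
            prev = cur;
            cur = n.next;
        }
        return false;
    }
    size_t slot_of(uint64_t key) const {
        return sizeof(int32_t) * static_cast<size_t>(key % static_cast<uint64_t>(buckets_));
    }
    Node load(int32_t off) const { Node n; std::memcpy(&n, &buf_[off], sizeof n); return n; }
    void store(int32_t off, const Node& n) { std::memcpy(&buf_[off], &n, sizeof n); }
    int32_t load_i32(size_t off) const { int32_t v; std::memcpy(&v, &buf_[off], sizeof v); return v; }
    void store_i32(size_t off, int32_t v) { std::memcpy(&buf_[off], &v, sizeof v); }

    int32_t buckets_;
    int32_t free_head_ = 0;  // plays the role of free_head_offset_
    int reused_ = 0;
    std::vector<char> buf_;  // stands in for the mmap'ed index file
};
```

Reusing freed nodes keeps the index from growing without bound under a mix of writes and deletes, since each delete leaves a slot that the next insert can fill.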

Unit test

blockwritetest.cpp

```cpp
#include "indexHandle.h"
#include "Common.h"
#include "file_op.h"
#include
```

indexhandle.cpp after the additions

```cpp
#include "indexHandle.h"
#include
```

Test for reads and for deletion with reusable nodes: mainblockwrite.cpp

Summary

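At a high level, this core storage engine for Taobao's distributed file system comes down to one idea: using files to manage files. Put that bluntly it sounds abstract, and when I started the project I had the same questions. Why manage files with files? Isn't that exactly what the operating system already does for us? Why write our own program for it?

Later I understood. Taobao's data volume is enormous. If all of it lives on disk, the CPU has to go to the disk, and disk access is very slow, roughly one ten-thousandth the speed of memory access. Yet the data cannot all be kept in memory either, because memory is limited and expensive. Disk access is slow mainly because of the physical movement of the disk head: every time the system locates a file, the head has to seek to the right place, and each seek is costly. With one ordinary file per small object, a single read can require several such seeks (directory entry, inode, then the data itself).

The approach Alibaba's engineers took in TFS is to stop relying on the operating system to locate each small file. Instead, the engine keeps its own index file, memory-mapped at startup, that records where every small file lives inside a large block file, so reading a file needs a single read at a known offset and avoids the extra head movement. The essence of the idea is trading space for time: spending relatively cheap disk space (pre-allocated blocks and index files) to buy faster file access. It is an elegant design for storing huge numbers of small files.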