Best Practices for Data Lakes

As data drives business, we need a data lake to collect data and take advantage of it. In this story, we will cover the key ideas behind a data lake so that you can understand it better.

With intelligent services and a microservice architecture, a system can produce millions of bytes of data per second. But if we don't use that data to drive our business and gain insights that help us make crucial decisions, it is just garbage to us. This is where data lakes come into the picture.

Before going further, let's look at the types of data we have.

What Are the Types of Data?

Source: https://www.coolfiresolutions.com/blog/unstructured-structured-data/

There are mainly two types:

- Structured data: It is neat, has a known schema, and fits into fixed fields in a table. A relational database is an example of structured data: tables are linked using unique IDs, and a query language such as SQL is used to interact with the data. Structured data is created using a predefined (fixed) schema and is typically organized in a tabular format. Think of a table where each cell contains a discrete value. The schema is the blueprint of how the data is organized: it covers the table's heading row, which describes each value, and the format of each column.

- Unstructured data: It comes in many forms, from web pages to emails and from blogs to social media posts. Around 80% of the data we have is commonly estimated to be unstructured. Regardless of the storage format, in most cases we are talking about textual documents made of sequences of words. Such data has no predefined schema or structure; other examples include images and application logs.
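
To make the distinction concrete, here is a small Python sketch built around a hypothetical `orders` table. A structured row can be checked against a fixed schema (column names and types), while an unstructured log line has no schema to check and is simply kept as raw bytes. The table, its columns, and the `conforms` helper are illustrative assumptions.

```python
from datetime import date

# Hypothetical schema for a structured "orders" table: column name -> Python type.
ORDERS_SCHEMA = {"order_id": int, "user_id": int, "amount": float, "order_date": date}


def conforms(row: dict, schema: dict) -> bool:
    """Return True if the row has exactly the schema's columns, each with the right type."""
    if row.keys() != schema.keys():
        return False
    return all(isinstance(row[col], typ) for col, typ in schema.items())


# Structured: every value sits in a known, typed column.
structured_row = {"order_id": 1, "user_id": 42, "amount": 19.99, "order_date": date(2020, 5, 1)}

# Unstructured: a raw application log line has no schema to validate against,
# so it is simply kept as-is (here as bytes) for later processing.
unstructured = b"2020-05-01 12:00:03 WARN payment-service retrying charge for user 42"

print(conforms(structured_row, ORDERS_SCHEMA))      # True
print(conforms({"order_id": "1"}, ORDERS_SCHEMA))   # False
```

In a real relational database the schema is enforced by the database itself; the sketch only illustrates why structured data fits fixed fields and unstructured data does not.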

What Is a Data Lake?

Figure: Overview

Let's begin with a brief overview of the data lake. Data from various sources, structured or unstructured, enters the ingestion layer. From there, we store the data, organize it, and process and transform it so that it can be used for various tasks, such as training a machine learning model, analyzing trends, building dashboards, and generating reports.

Data is one of a business's most valuable assets, but there are steps we need to take to make good use of it in our organization.

You can think of this as the data processing ladder: first we collect the data, then we organize it properly with indexing and a catalog. Once the data is organized, we can run queries over it, analyze it, and finally get useful insights that help drive the business and inform crucial decisions.
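
The ladder can be sketched in a few lines of plain Python. The events, the `catalog` dictionary, and the `index` below are made-up stand-ins for real storage, a catalog service, and an index; the point is only the order of the steps: collect, organize, query, and derive an insight.

```python
from collections import defaultdict

# 1. Collect: hypothetical raw events arriving from different sources.
raw_events = [
    {"source": "web", "event": "purchase", "amount": 30.0},
    {"source": "app", "event": "view", "amount": 0.0},
    {"source": "app", "event": "purchase", "amount": 12.5},
]

# 2. Organize: a tiny catalog entry (what the dataset is) and an index by event type.
catalog = {"events": {"columns": ["source", "event", "amount"], "rows": len(raw_events)}}
index = defaultdict(list)
for pos, event in enumerate(raw_events):
    index[event["event"]].append(pos)

# 3. Query: use the index to fetch only purchases instead of scanning everything.
purchases = [raw_events[pos] for pos in index["purchase"]]

# 4. Insight: revenue per source, e.g. for a dashboard or report.
revenue = defaultdict(float)
for p in purchases:
    revenue[p["source"]] += p["amount"]

print(dict(revenue))  # {'web': 30.0, 'app': 12.5}
```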

Coming back to the data lake, here are some key points that briefly summarize its use and role:

- It is a centralized repository for storing structured and unstructured data.

- Data flows from the streams (the source systems) into the lake. Users have access to the lake to examine it, take samples, or dive in.

- Data is stored at the leaf level in an untransformed or nearly untransformed state.

- Raw data is stored as-is, without imposing any structure.

- It stores many types of data, such as files, text, and images.

- Data is transformed and a schema is applied only when analysis needs it (often called schema-on-read).

- It also stores machine learning artifacts, real-time data, and analytics output.
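
The idea of storing raw data and applying a schema only when it is analyzed can be sketched like this. The JSON records and the `CLICKS_SCHEMA` mapping are hypothetical. Note that the raw lines are never modified, so a different analysis could later read the same lines with a different schema (for example, one that includes the `ua` field).

```python
import json

# Raw records land in the lake untransformed: JSON lines whose fields vary.
raw_lines = [
    '{"user": "42", "clicks": "7", "ua": "Mozilla/5.0"}',
    '{"user": "43", "clicks": "3"}',
]

# Hypothetical schema for one particular analysis, applied only at read time.
CLICKS_SCHEMA = {"user": int, "clicks": int}


def read_with_schema(lines, schema):
    """Parse each raw line and project/cast it to the schema's columns."""
    for line in lines:
        record = json.loads(line)
        yield {col: cast(record[col]) for col, cast in schema.items()}


rows = list(read_with_schema(raw_lines, CLICKS_SCHEMA))
print(rows)  # [{'user': 42, 'clicks': 7}, {'user': 43, 'clicks': 3}]
```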

This makes it different from databases and data warehouses.

Now Let's Deep Dive into the Architecture

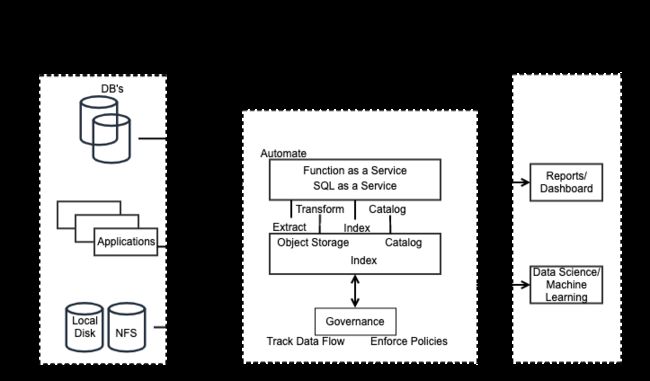

Figure: Architecture

There are three main layers in a data lake design. Time to go deep into each of them.

Ingestion Engine/Layer

This is essentially the data consumption, or collection, layer. Data from multiple sources comes into it, and this layer then passes it on for storage and processing. Broadly, there are three sources that supply the data.

First, we can get data from databases. For this we need an SQL service that fetches the data from the database. If the database is live and still receiving writes, we also need to build a replication mechanism: fetching the data once is not enough, because we must keep the lake in sync with the live database.
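
One simple way to keep the lake in sync with a live database is incremental extraction with a high watermark: after the initial full load, each run only fetches rows changed since the last run. The sketch below uses Python's built-in `sqlite3` as a stand-in for the source database and assumes a hypothetical `users` table with a monotonically increasing `updated_at` column. It is a substitute technique for illustration, not a description of a production replication mechanism; in particular it does not capture deletes, which log-based change data capture would.

```python
import sqlite3


def fetch_changes(conn, watermark):
    """Return rows changed after `watermark`, plus the new watermark.

    Assumes a hypothetical `updated_at` column that only ever increases.
    """
    rows = conn.execute(
        "SELECT id, email, updated_at FROM users WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


# In-memory stand-in for the live source database.
src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, updated_at INTEGER)")
src.executemany("INSERT INTO users VALUES (?, ?, ?)", [(1, "a@x.com", 100), (2, "b@x.com", 101)])

lake = {}  # stand-in for the lake copy, keyed by primary key

# Initial full load: a watermark of 0 picks up every row.
rows, wm = fetch_changes(src, 0)
lake.update({r[0]: r for r in rows})

# The live DB keeps changing; the next sync pulls only the delta.
src.execute("UPDATE users SET email = 'b2@x.com', updated_at = 102 WHERE id = 2")
src.execute("INSERT INTO users VALUES (3, 'c@x.com', 103)")
rows, wm = fetch_changes(src, wm)
lake.update({r[0]: r for r in rows})

print(len(rows), wm, lake[2][1])  # 2 103 b2@x.com
```

Upserting by primary key makes re-running a sync harmless, which matters when jobs are retried.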

Second, we can get data from our applications. Since most firms now use a microservice architecture, thousands of services may be running, producing large volumes of data, such as logs, that can later be used for analysis. For this, we need a streaming mechanism (for example, Kafka) so that data from these services flows into the lake asynchronously, without putting extra load on the system.
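
The key property of the streaming approach is that services publish events and move on, while a separate consumer writes them to the lake. The sketch below uses an `asyncio.Queue` as a stand-in for a Kafka topic, so it does not use Kafka's actual client API; the service names, the batch size, and the in-memory `lake` list are assumptions for illustration.

```python
import asyncio
import json


async def service(name, topic, n_events):
    """A microservice emitting log events: it only enqueues and moves on."""
    for i in range(n_events):
        await topic.put(json.dumps({"service": name, "seq": i}))


async def lake_sink(topic, lake, batch_size=3):
    """Consumer that drains the topic and appends events to the lake in batches."""
    batch = []
    while True:
        msg = await topic.get()
        if msg is None:  # sentinel: all producers are done
            break
        batch.append(msg)
        if len(batch) >= batch_size:
            lake.append(batch)
            batch = []
    if batch:  # flush the final partial batch
        lake.append(batch)


async def main():
    topic = asyncio.Queue()  # stand-in for a Kafka topic (assumption)
    lake = []                # stand-in for lake storage: a list of written batches
    sink = asyncio.create_task(lake_sink(topic, lake))
    await asyncio.gather(service("orders", topic, 4), service("payments", topic, 3))
    await topic.put(None)
    await sink
    return lake


lake = asyncio.run(main())
print(sum(len(batch) for batch in lake))  # 7
```

Batching on the consumer side is a common choice because object stores favor fewer, larger writes over many tiny ones.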

The last source is a local disk or NFS share: say I need to get a CSV file into the lake. For this, we need an upload mechanism that lets users put data into the lake. We can provide a self-service portal where users upload data; this UI serves manual requests, and for automation we can build APIs.
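
Behind either the portal or the API, the upload path could look roughly like the hypothetical `upload_csv` function below: it checks that the file is well-formed CSV and stores the original bytes under a dated `raw/uploads/` prefix. The function name, the directory layout, and the validation rules are assumptions, and a local temporary directory stands in for lake storage such as S3.

```python
import csv
import io
import tempfile
from datetime import date
from pathlib import Path


def upload_csv(lake_root: Path, filename: str, payload: bytes, today: date) -> Path:
    """Hypothetical handler a portal or API endpoint could call for CSV uploads.

    Validates that the payload is UTF-8 CSV with a header row and a consistent
    number of fields, then stores the original bytes under a dated raw prefix.
    """
    reader = csv.reader(io.StringIO(payload.decode("utf-8")))
    header = next(reader, None)
    if not header:
        raise ValueError("empty CSV: a header row is required")
    for lineno, row in enumerate(reader, start=2):
        if len(row) != len(header):
            raise ValueError(f"line {lineno}: expected {len(header)} fields, got {len(row)}")
    # Path(filename).name drops any directory parts a client might send.
    dest = lake_root / "raw" / "uploads" / today.isoformat() / Path(filename).name
    dest.parent.mkdir(parents=True, exist_ok=True)
    dest.write_bytes(payload)  # keep the uploaded file untransformed
    return dest


with tempfile.TemporaryDirectory() as tmp:
    stored = upload_csv(Path(tmp), "sales.csv", b"region,amount\neu,10\nus,20\n", date(2020, 6, 1))
    print(stored.relative_to(tmp).as_posix())  # raw/uploads/2020-06-01/sales.csv
```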

These three mechanisms are all we need for the ingestion layer.

Process

Once the data arrives from the ingestion layer, we have to store it and work on it.

First, we store all the data; you can use AWS S3 for storage. Storing alone is not enough: we need to know what data we have and process it as fast as possible. So we also need a catalog (you can use AWS Glue for this) and, most importantly, an index over our data, since proper indexing is needed for fast processing.
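
To show what the catalog and indexing buy us, here is a minimal in-memory stand-in for a catalog such as AWS Glue; it is not Glue's API. It records where a table lives, its columns, and its partitions, and uses Hive-style `dt=YYYY-MM-DD` partitioning as a coarse index, so a query for a date range only needs to read the matching prefixes. The bucket name `example-lake` and the `clicks` table are made up.

```python
from dataclasses import dataclass, field


@dataclass
class TableEntry:
    """One catalog entry: where a dataset lives and how it is laid out."""
    location: str        # object-store prefix (hypothetical bucket)
    columns: dict        # column name -> type name
    partition_key: str
    partitions: set = field(default_factory=set)


class Catalog:
    """Minimal in-memory stand-in for a data catalog."""

    def __init__(self):
        self.tables = {}

    def register(self, name, entry):
        self.tables[name] = entry

    def add_partition(self, name, value):
        self.tables[name].partitions.add(value)

    def paths_for(self, name, lo, hi):
        """Partition pruning: return only the prefixes whose key is in [lo, hi]."""
        t = self.tables[name]
        return sorted(
            f"{t.location}/{t.partition_key}={v}/" for v in t.partitions if lo <= v <= hi
        )


cat = Catalog()
cat.register("clicks", TableEntry("s3://example-lake/clicks", {"user_id": "bigint", "url": "string"}, "dt"))
for day in ["2020-05-01", "2020-05-02", "2020-05-03"]:
    cat.add_partition("clicks", day)

# ISO dates compare correctly as strings, so the range check works lexicographically.
print(cat.paths_for("clicks", "2020-05-02", "2020-05-03"))
# ['s3://example-lake/clicks/dt=2020-05-02/', 's3://example-lake/clicks/dt=2020-05-03/']
```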

Once storage, indexing, and cataloging are in place, we need to extract and transform the data for our needs. For this, we can use SQL as a service or functions as a service, such as AWS Lambda. Whether we automate this process or run it manually depends on consumption and on how frequently new data arrives; we can also schedule these jobs to transform our data.
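
A transformation job can be written as a small pure function plus an entry point. `handler(event, context)` follows the standard Python handler signature for AWS Lambda, but the event shape (`{"records": [...]}`) and the field names `uid`, `path`, and `ts` are hypothetical; a real S3-triggered function would read the objects named in its event instead of receiving records inline.

```python
import json
from datetime import datetime, timezone


def transform(raw: dict) -> dict:
    """Turn a raw clickstream event into an analysis-ready row (hypothetical fields)."""
    ts = datetime.fromtimestamp(raw["ts"], tz=timezone.utc)
    return {
        "user_id": int(raw["uid"]),
        "page": raw["path"].lower(),
        "dt": ts.date().isoformat(),  # partition column for the output table
        "hour": ts.hour,
    }


def handler(event, context=None):
    """Lambda-style entry point; the {"records": [json strings]} event shape is assumed."""
    rows = [transform(json.loads(r)) for r in event["records"]]
    return {"count": len(rows), "rows": rows}


out = handler({"records": ['{"uid": "7", "path": "/Home", "ts": 0}']})
print(out["rows"][0])  # {'user_id': 7, 'page': '/home', 'dt': '1970-01-01', 'hour': 0}
```

Keeping `transform` free of I/O makes it easy to test, and the same function can run in a scheduled batch job or a per-event trigger.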

Another key component of this layer is governance. It is responsible for two things. First, tracking data flow: where the data comes from and where it goes (often called data lineage). Second, enforcing policies on the data. For example, a regulation in some region may state that we cannot store users' email IDs and contact details for more than one year. In that case, this component can run a scheduled job to obfuscate all user information older than one year.
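
The retention example above can be sketched as the body of a scheduled job. The field names, the 365-day window, and the use of a salted SHA-256 token as the obfuscation method are assumptions. The job skips values it has already obfuscated, so it is safe to run on every schedule.

```python
import hashlib
from datetime import datetime, timedelta

PII_FIELDS = ("email", "phone")   # hypothetical column names
RETENTION = timedelta(days=365)   # "no more than 1 year" policy
PREFIX = "anon:"                  # marks values that were already obfuscated


def enforce_retention(records, now, salt=b"rotate-me"):
    """Obfuscate PII on records older than RETENTION; return how many values changed."""
    cutoff = now - RETENTION
    changed = 0
    for rec in records:
        if rec["created_at"] >= cutoff:
            continue  # still inside the retention window
        for f in PII_FIELDS:
            value = rec.get(f)
            if value and not value.startswith(PREFIX):
                # One-way salted token: rows stay countable, the raw value is gone.
                rec[f] = PREFIX + hashlib.sha256(salt + value.encode()).hexdigest()[:16]
                changed += 1
    return changed


users = [
    {"id": 1, "email": "old@x.com", "phone": "555-0100", "created_at": datetime(2019, 1, 1)},
    {"id": 2, "email": "new@x.com", "phone": "555-0101", "created_at": datetime(2020, 5, 1)},
]
print(enforce_retention(users, now=datetime(2020, 6, 1)))  # 2
print(users[1]["email"])  # new@x.com
```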

This is the main layer; once data comes out of it, it is ready for insights.

Insights

There are many ways to use this data now. We can consume it to build dashboards, analyze trends, and generate reports. We can also use it to train machine learning models: for instance, based on users' actions or purchase history, we may want to recommend items to them in the future. Or we may want to give this data to our product teams so they can learn how users behave, or the data may relate to an A/B test whose results we need to evaluate. Other applications can also consume this data in real time.
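
As a taste of the recommendation use case, here is a sketch of a simple co-purchase recommender over purchase history read from the lake. The data is made up, and item co-occurrence counting is just one basic technique, not necessarily what a production recommender would use.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical purchase history pulled from the lake: user -> items bought.
history = {
    "u1": {"tent", "stove", "lamp"},
    "u2": {"tent", "stove"},
    "u3": {"tent", "lamp"},
    "u4": {"stove", "mug"},
}

# Count how often each pair of items was bought by the same user.
co_counts = defaultdict(Counter)
for items in history.values():
    for a, b in combinations(sorted(items), 2):
        co_counts[a][b] += 1
        co_counts[b][a] += 1


def recommend(user, k=2):
    """Score items the user hasn't bought by co-purchase with items they own."""
    owned = history[user]
    scores = Counter()
    for item in owned:
        for other, n in co_counts[item].items():
            if other not in owned:
                scores[other] += n
    return [item for item, _ in scores.most_common(k)]


print(recommend("u2"))  # ['lamp', 'mug']
```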

This data can have many uses; it all depends on what you are storing and why you ultimately need it.

With this last layer covered, I hope you are all set to start building a data lake for your organization and putting your data to work.

Data Lake: The AWS Way!

AWS provides various services that can be used to build your own data lake. I recently attended a conference on this topic, and below are some screenshots from it. You can refer to the AWS documentation for more details on these services.

Figure: AWS Data Lake Components

Figure: AWS Services

If you compare these two figures, the data lake components map one-to-one to AWS services.

Figure: AWS IAM and other services to make the data lake secure

We haven't talked about security here, but it is important to consider when developing almost anything.

PS: I usually prefer AWS when it comes to the cloud, but other cloud providers, such as Microsoft Azure, offer similar services too. The choice is yours: use these service providers, work with open-source tools, or combine both to get your data lake ready.

I hope you enjoyed the article and got an overview of how to go about designing a data lake. I know it is brief; the goal was to give you a helicopter view of the system. There is a lot more to dig into when it comes to building and operating these systems.

Please do share your feedback, because our brains act like a data lake too: they consume this feedback and draw insights from it that eventually drive improvement.

Thanks, and see you soon.

Original article: https://medium.com/@kunal14053/data-lake-eb75b82ee1e5
