Scaling out Kubernetes worker nodes with KubeSphere

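Last time I deployed a cluster with one master and two workers. This time I am adding one more worker node, which gives one master and three workers.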

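The new node is node3, and I tried deploying it with SSH key authentication instead of a password. I also tried a key protected by a passphrase, but that attempt failed.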
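Since the passphrase-protected key did not work for me, the following is a minimal sketch of preparing a key without a passphrase for node3. The key path and the node address are taken from the configuration in this post; the function names are my own, and nothing runs until you call them.

```shell
#!/usr/bin/env bash
# Sketch only: KEY and NODE mirror the node3 entry in config-sample.yaml.
KEY="$HOME/.ssh/id_ed25519bak"
NODE="root@192.168.5.14"

# Generate an ed25519 key pair; -N "" sets an empty passphrase.
make_key() {
  ssh-keygen -t ed25519 -N "" -f "$KEY"
}

# Append the public key to authorized_keys on node3 (asks for the password once).
install_key() {
  ssh-copy-id -i "$KEY.pub" "$NODE"
}

# BatchMode=yes disables interactive prompts, so this only succeeds
# when key authentication really works.
check_login() {
  ssh -i "$KEY" -o BatchMode=yes "$NODE" true
}
```

Run `make_key && install_key && check_login` from the machine where kk is executed. If `check_login` fails, kk will most likely not be able to reach node3 with that key either.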

apiVersion: kubekey.kubesphere.io/v1alpha1
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: master, address: 192.168.5.11, internalAddress: 192.168.5.11, user: root, password: Aa123456}
  - {name: node1, address: 192.168.5.12, internalAddress: 192.168.5.12, user: root, password: Aa123456}
  - {name: node2, address: 192.168.5.13, internalAddress: 192.168.5.13, user: root, password: Aa123456}
  - {name: node3, address: 192.168.5.14, internalAddress: 192.168.5.14, user: root, privateKeyPath: "~/.ssh/id_ed25519bak"}
  roleGroups:
    etcd:
    - master
    master:
    - master
    worker:
    - node1
    - node2
    - node3
  controlPlaneEndpoint:
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.20.4
    imageRepo: kubesphere
    clusterName: cluster.local
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
  registry:
    registryMirrors: []
    insecureRegistries: []
  addons: []

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.1.0
spec:
  persistence:
    storageClass: ""  # If there is no default StorageClass in your cluster, you need to specify an existing StorageClass here.
  authentication:
    jwtSecret: ""  # Keep the jwtSecret consistent with the Host Cluster. Retrieve the jwtSecret by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the Host Cluster.
  local_registry: ""  # Add your private registry address if it is needed.
  etcd:
    monitoring: false  # Enable or disable etcd monitoring dashboard installation. You have to create a Secret for etcd before you enable it.
    endpointIps: localhost  # etcd cluster EndpointIps. It can be a bunch of IPs here.
    port: 2379  # etcd port.
    tlsEnable: true
  common:
    redis:
      enabled: false
    openldap:
      enabled: false
    minioVolumeSize: 20Gi  # Minio PVC size.
    openldapVolumeSize: 2Gi  # openldap PVC size.
    redisVolumSize: 2Gi  # Redis PVC size.
    monitoring:
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090  # Prometheus endpoint to get metrics data.
    es:  # Storage backend for logging, events and auditing.
      # elasticsearchMasterReplicas: 1  # The total number of master nodes. Even numbers are not allowed.
      # elasticsearchDataReplicas: 1  # The total number of data nodes.
      elasticsearchMasterVolumeSize: 4Gi  # The volume size of Elasticsearch master nodes.
      elasticsearchDataVolumeSize: 20Gi  # The volume size of Elasticsearch data nodes.
      logMaxAge: 7  # Log retention time in built-in Elasticsearch. It is 7 days by default.
      elkPrefix: logstash  # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchUrl: ""
      externalElasticsearchPort: ""
  console:
    enableMultiLogin: true  # Enable or disable simultaneous logins. It allows different users to log in with the same account at the same time.
    port: 30880
  alerting:  # (CPU: 0.1 Core, Memory: 100 MiB) It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
    enabled: false  # Enable or disable the KubeSphere Alerting System.
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:  # Provide a security-relevant chronological set of records, recording the sequence of activities happening on the platform, initiated by different tenants.
    enabled: false  # Enable or disable the KubeSphere Auditing Log System.
  devops:  # (CPU: 0.47 Core, Memory: 8.6 G) Provide an out-of-the-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.
    enabled: false  # Enable or disable the KubeSphere DevOps System.
    jenkinsMemoryLim: 2Gi  # Jenkins memory limit.
    jenkinsMemoryReq: 1500Mi  # Jenkins memory request.
    jenkinsVolumeSize: 8Gi  # Jenkins volume size.
    jenkinsJavaOpts_Xms: 512m  # The following three fields are JVM parameters.
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events:  # Provide a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
    enabled: false  # Enable or disable the KubeSphere Events System.
    ruler:
      enabled: true
      replicas: 2
  logging:  # (CPU: 57 m, Memory: 2.76 G) Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
    enabled: false  # Enable or disable the KubeSphere Logging System.
    logsidecar:
      enabled: true
      replicas: 2
  metrics_server:  # (CPU: 56 m, Memory: 44.35 MiB) It enables HPA (Horizontal Pod Autoscaler).
    enabled: false  # Enable or disable metrics-server.
  monitoring:
    storageClass: ""  # If there is an independent StorageClass you need for Prometheus, you can specify it here. The default StorageClass is used by default.
    # prometheusReplicas: 1  # Prometheus replicas are responsible for monitoring different segments of data source and providing high availability.
    prometheusMemoryRequest: 400Mi  # Prometheus request memory.
    prometheusVolumeSize: 20Gi  # Prometheus PVC size.
    # alertmanagerReplicas: 1  # AlertManager Replicas.
  multicluster:
    clusterRole: none  # host | member | none  # You can install a solo cluster, or specify it as the Host or Member Cluster.
  network:
    networkpolicy:  # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
      # Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.
      enabled: false  # Enable or disable network policies.
    ippool:  # Use Pod IP Pools to manage the Pod network address space. Pods to be created can be assigned IP addresses from a Pod IP Pool.
      type: none  # Specify "calico" for this field if Calico is used as your CNI plugin. "none" means that Pod IP Pools are disabled.
    topology:  # Use Service Topology to view Service-to-Service communication based on Weave Scope.
      type: none  # Specify "weave-scope" for this field to enable Service Topology. "none" means that Service Topology is disabled.
  openpitrix:  # An App Store that is accessible to all platform tenants. You can use it to manage apps across their entire lifecycle.
    store:
      enabled: true  # Enable or disable the KubeSphere App Store.
  servicemesh:  # (0.3 Core, 300 MiB) Provide fine-grained traffic management, observability and tracing, and visualized traffic topology.
    enabled: false  # Base component (pilot). Enable or disable KubeSphere Service Mesh (Istio-based).
  kubeedge:  # Add edge nodes to your cluster and deploy workloads on edge nodes.
    enabled: false  # Enable or disable KubeEdge.
    cloudCore:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      cloudhubPort: "10000"
      cloudhubQuicPort: "10001"
      cloudhubHttpsPort: "10002"
      cloudstreamPort: "10003"
      tunnelPort: "10004"
      cloudHub:
        advertiseAddress:  # At least a public IP address or an IP address which can be accessed by edge nodes must be provided.
          - ""  # Note that once KubeEdge is enabled, CloudCore will malfunction if the address is not provided.
        nodeLimit: "100"
      service:
        cloudhubNodePort: "30000"
        cloudhubQuicNodePort: "30001"
        cloudhubHttpsNodePort: "30002"
        cloudstreamNodePort: "30003"
        tunnelNodePort: "30004"
    edgeWatcher:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      edgeWatcherAgent:
        nodeSelector: {"node-role.kubernetes.io/worker": ""}
        tolerations: []

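Then run the kk command to add the worker node. Before doing so, the required dependencies must already be installed on the new node. Pay particular attention to the Docker version, and note that the way Docker is started also needs to be changed.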
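Before running kk, a quick check that every node reports the same Docker version can save a failed run. This is a rough sketch: the expected version 19.03.15 is simply the one that appears in the pre-check table in this post, root SSH access to each node is assumed, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Assumption: all nodes should run the version shown in the kk pre-check table.
EXPECTED="19.03.15"

# Succeeds when the reported version equals the expected one.
same_version() {
  [ "$1" = "$2" ]
}

# Ask the Docker daemon on a remote host for its server version.
docker_version_on() {
  ssh "root@$1" "docker version --format '{{.Server.Version}}'"
}

# Report one line per host; not called automatically.
check_nodes() {
  for ip in "$@"; do
    v="$(docker_version_on "$ip")" || { echo "$ip: cannot query docker"; continue; }
    if same_version "$v" "$EXPECTED"; then
      echo "$ip: ok ($v)"
    else
      echo "$ip: $v (expected $EXPECTED)"
    fi
  done
}
```

For example, `check_nodes 192.168.5.11 192.168.5.12 192.168.5.13 192.168.5.14` prints one line per node. For node3 you may need to add `-i ~/.ssh/id_ed25519bak` to the `ssh` call.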

$:~/code/k8s$ kk add nodes -f config-sample.yaml

+--------+------+------+---------+----------+-------+-------+-----------+----------+------------+-------------+------------------+--------------+
| name   | sudo | curl | openssl | ebtables | socat | ipset | conntrack | docker   | nfs client | ceph client | glusterfs client | time         |
+--------+------+------+---------+----------+-------+-------+-----------+----------+------------+-------------+------------------+--------------+
| node1  | y    | y    | y       | y        | y     | y     | y         | 19.03.15 | y          |             |                  | CST 11:23:08 |
| node2  | y    | y    | y       | y        | y     | y     | y         | 19.03.15 | y          |             |                  | CST 11:23:08 |
| node3  | y    | y    | y       | y        | y     | y     | y         | 19.03.15 | y          |             |                  | CST 11:23:08 |
| master | y    | y    | y       | y        | y     | y     | y         | 19.03.15 | y          |             |                  | CST 11:23:09 |
+--------+------+------+---------+----------+-------+-------+-----------+----------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, you should ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes

INFO[11:23:12 CST] Downloading Installation Files

INFO[11:23:12 CST] Downloading kubeadm ...

INFO[11:23:12 CST] Downloading kubelet ...

INFO[11:23:13 CST] Downloading kubectl ...

INFO[11:23:13 CST] Downloading helm ...

INFO[11:23:13 CST] Downloading kubecni ...

INFO[11:23:13 CST] Configuring operating system ...

[node1 192.168.5.12] MSG:

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

[node2 192.168.5.13] MSG:

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

[node3 192.168.5.14] MSG:

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

[master 192.168.5.11] MSG:

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

INFO[11:23:15 CST] Installing docker ...

INFO[11:23:16 CST] Start to download images on all nodes

[node3] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.2

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/etcd:v3.4.13

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.2

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.2

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.2

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.20.4

[node3] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.20.4

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.20.4

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.20.4

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.6.9

[node3] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.6.9

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.6.9

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.20.4

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

[node3] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.20.4

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.16.3

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.16.3

[node3] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.16.3

[node3] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.16.3

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.16.3

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.16.3

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.20.4

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.16.3

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.16.3

[node3] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.16.3

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.6.9

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.16.3

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.16.3

[node3] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.16.3

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.16.3

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.16.3

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.16.3

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.16.3

INFO[11:23:25 CST] Generating etcd certs

INFO[11:23:26 CST] Synchronizing etcd certs

INFO[11:23:26 CST] Creating etcd service

[master 192.168.5.11] MSG:

etcd already exists

INFO[11:23:27 CST] Starting etcd cluster

[master 192.168.5.11] MSG:

Configuration file already exists

[master 192.168.5.11] MSG:

v3.4.13

INFO[11:23:27 CST] Refreshing etcd configuration

INFO[11:23:28 CST] Backup etcd data regularly

INFO[11:23:35 CST] Get cluster status

[master 192.168.5.11] MSG:

Cluster already exists.

[master 192.168.5.11] MSG:

v1.20.4

[master 192.168.5.11] MSG:

I1104 11:23:36.269698 16513 version.go:254] remote version is much newer: v1.22.3; falling back to: stable-1.20

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

7c42d83518f102f51e0606e268759c9d65a23b9aa7174c7e06e3378c34d85f62

[master 192.168.5.11] MSG:

secret/kubeadm-certs patched

[master 192.168.5.11] MSG:

secret/kubeadm-certs patched

[master 192.168.5.11] MSG:

secret/kubeadm-certs patched

[master 192.168.5.11] MSG:

kubeadm join lb.kubesphere.local:6443 --token 89xt78.8i7zkbhqcovfz3xd --discovery-token-ca-cert-hash sha256:61aea5a01218cdfdf05c099a44896468c7ee76ae39961ad6412a9101d9ed2ffd

[master 192.168.5.11] MSG:

master v1.20.4 [map[address:192.168.5.11 type:InternalIP] map[address:master type:Hostname]]

node1 v1.20.4 [map[address:192.168.5.12 type:InternalIP] map[address:node1 type:Hostname]]

node2 v1.20.4 [map[address:192.168.5.13 type:InternalIP] map[address:node2 type:Hostname]]

node3 v1.20.4 [map[address:192.168.5.14 type:InternalIP] map[address:node3 type:Hostname]]

INFO[11:23:38 CST] Installing kube binaries

INFO[11:23:38 CST] Joining nodes to cluster

INFO[11:23:39 CST] Congratulations! Scaling cluster is successful.
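After kk reports success, it is worth confirming from the master that node3 really registered and is Ready. A minimal sketch, assuming kubectl is configured on the master; the helper name `not_ready_nodes` is my own and not part of kubectl or kk.

```shell
#!/usr/bin/env bash
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin
# (columns: NAME STATUS ROLES AGE VERSION).
# Cordoned nodes show "Ready,SchedulingDisabled" and are listed as well.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# On the master (needs a working kubeconfig):
#   kubectl get nodes --no-headers | not_ready_nodes
```

Empty output means every node, including node3, is Ready. A freshly joined node can stay NotReady for a short while until the Calico pods on it are running.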

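However, the newly added node3 has no .kube directory in its home directory.

The two worker nodes installed earlier do have .kube in their home directories.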

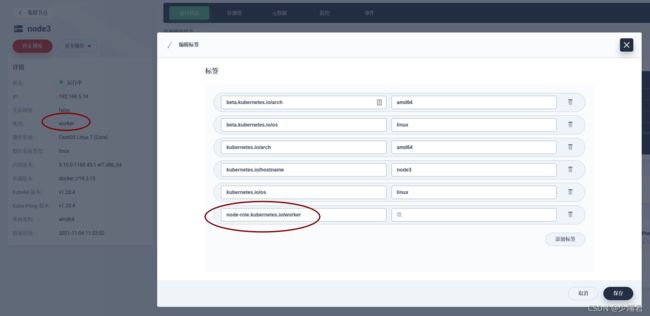

After the node is added, node3 also needs the following label. Without it, the node's role is not shown as worker.