Redis (6): Redis Cluster

- 前言

-

- 概念

- 原因

- 一、Redis分布式集群安装

-

- 1、Ruby环境搭建

-

- 安装Ruby环境

- 安装redis工具包

- 二、配置集群环境

-

- 1、配置redis.conf、启动集群

- 2、集群测试

-

-

-

- 获取集群节点信息

- 集群添加数据

- 集群添加节点

- 重新分配槽值

- 集群减缩节点

-

-

- 3、一主多从配置

- 三、集群说明

-

-

-

-

-

- 集群节点间通信-Gossip协议

- 集群节点间通信-消息解析流程

- 集群节点间通信-PING PONG消息交换

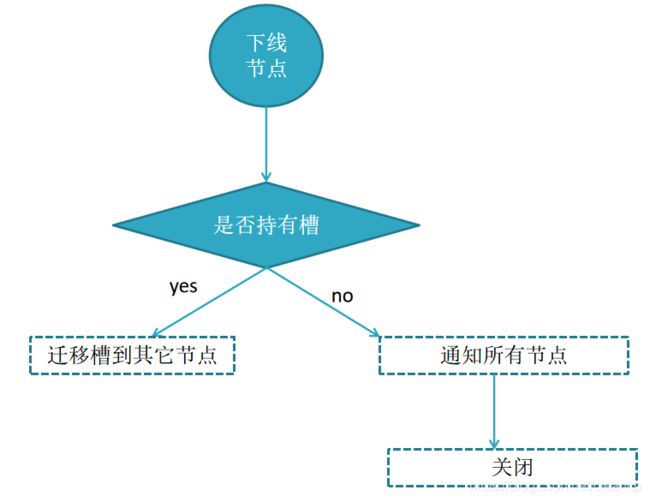

- RedisCluster下线流程

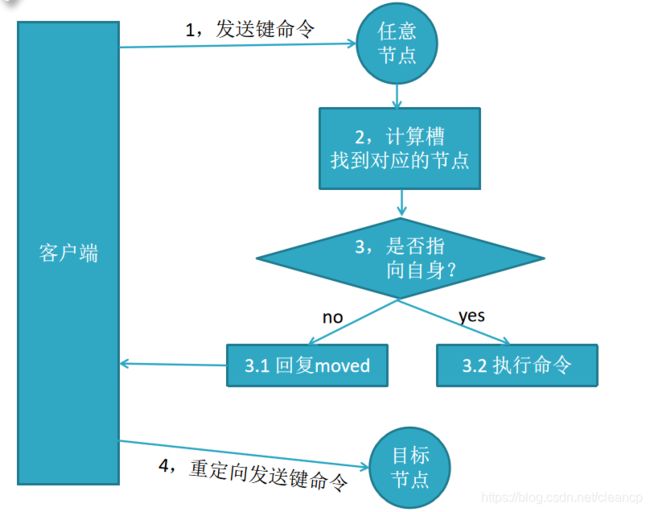

- 集群间节点通信-节点路由

- RedisCluster集群-故障转移主观下线

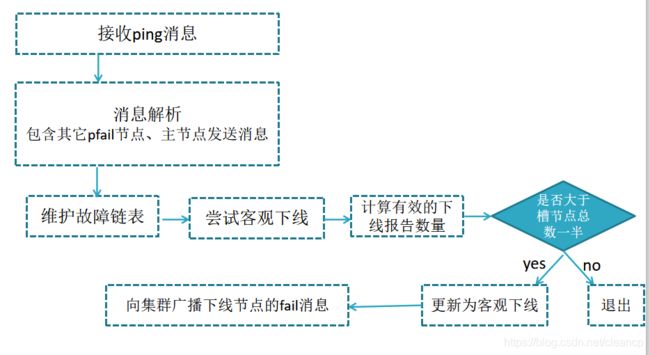

- RedisCluster集群-故障转移客观下线

- RedisCluster集群-故障修复

-

-

-

-

Preface

Another-Redis-DesktopManager

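Main topics: Redis persistence, master-replica setup and configuration, replication (sync), and the various replication topologies.

Reference: https://blog.csdn.net/qq_41453285/article/details/103354554

Further reading: Codis, a Redis clustering solution for managing nodes that hold large volumes of data.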

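Concepts

- Distributed database: a distributed database uses a partitioning rule to split data across multiple nodes. Each node stores the portion assigned to it by that rule, which is a subset of the full data set.

- Cluster partitioning: the common schemes are hash partitioning and range (sequential) partitioning. Redis Cluster uses virtual-slot partitioning, which is a form of hash partitioning.

- Hash partitioning: modulo by node count, consistent hashing, and virtual-slot partitioning.

- Hash slots: a Redis cluster has 16384 hash slots, numbered 0 to 16383 (2^14 - 1). When storing data, Redis computes CRC16(key) & 16383 to decide which slot the key belongs to. A standalone Redis instance owns every slot; in a cluster, the slots are usually divided into ranges among the master nodes.

Benefit: adding or removing nodes only requires changing the node-to-slot mapping, which decouples the data from the nodes.

- Redis Cluster limitations

1. Batch commands such as MSET have limited support: they are rejected when the keys map to different slots.

2. Transaction support is limited: a transaction cannot span data on different nodes, only data on a single node.

3. The key is the smallest unit of data placement: one very large key-value pair cannot be split across nodes, which works against even data distribution.

4. Multiple databases are not supported: only database 0 (SELECT 0) is available.

5. Replication is single-level only; tree-shaped (cascading) replication is not supported.

- Slot repair: an abnormal Redis failure can leave slots in a broken state that needs to be repaired.

./redis-trib.rb check ip:port # reports the slots that have problems

cluster setslot SLOT stable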
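The slot formula above, CRC16(key) & 16383, can be sketched in Python. This is a minimal sketch rather than Redis's own code. It assumes the CRC16 variant is the CCITT/XMODEM one, which the standard library exposes as `binascii.crc_hqx` with an initial value of 0. It also applies the hash-tag rule from the Redis Cluster specification, where only the text between the first `{` and the next `}` is hashed. The name `key_slot` is made up for illustration.

```python
import binascii

SLOT_COUNT = 16384  # slots are numbered 0..16383


def key_slot(key: str) -> int:
    """Return the hash slot for a key (illustrative helper, not a Redis API)."""
    data = key.encode("utf-8")
    # Hash-tag rule: if the key contains "{...}" with a non-empty body,
    # only that body is hashed, so related keys can share a slot.
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            data = data[start + 1:end]
    # crc_hqx with initial value 0 is CRC16-CCITT (XMODEM).
    return binascii.crc_hqx(data, 0) & (SLOT_COUNT - 1)


print(key_slot("name"))          # 5798
print(key_slot("{user1}:name") == key_slot("{user1}:age"))  # True
```

Keys that share a hash tag land in the same slot, which is the usual way to work around the multi-key restrictions listed above.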

Background

# info output after 6379 was killed and 6381 became the new master

[root@localhost redis]# ./redis-cli -h 192.168.42.119 -p 6381 -a 12345678 info replication

# Replication

role:master

connected_slaves:1

slave0:ip=192.168.42.119,port=6380,state=online,offset=615344,lag=1

master_replid:81c2d503e26da087e4739af6b1d26597b7c7df4c

master_replid2:3f0eb9d8a3e432be3a5a925f91496426b84582eb

master_repl_offset:615344

second_repl_offset:240214

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:615344

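sentinel.conf configuration

# Redis master to monitor. mymaster is an alias for the master; quorum is the number of Sentinels that must agree before it is objectively down. With quorum 2, the master is objectively down once two Sentinels both consider it subjectively down.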

# sentinel monitor mymaster masterip masterport quorum

sentinel monitor mymaster 127.0.0.1 6379 2

# sentinel auth-pass: password for accessing the Redis master

sentinel auth-pass mymaster 12345678

# Default is 30 seconds. If mymaster gives no valid reply for 30 seconds, it is considered subjectively down

sentinel down-after-milliseconds mymaster 30000

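# On objective down, the Sentinel leader runs the failover and picks a new master; the remaining replicas then start replicating from it. This setting limits how many replicas resync with the new master at the same time (here, 1)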

sentinel parallel-syncs mymaster 1

# Failover timeout: 3 minutes

sentinel failover-timeout mymaster 180000

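redis.conf configuration

# Replica priority during failover: the replica with the lower value is preferred for promotion to master (0 means never promote)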

# By default the priority is 100.

slave-priority 100

I. Installing a Distributed Redis Cluster

1. Setting Up the Ruby Environment

Installing Ruby

Download the Ruby source package

tar -zxvf ruby-2.3.1.tar.gz

cd ruby-2.3.1

Set the Ruby install location

./configure --prefix=/usr/local/ruby

Compile and install

make && make install # this step is slow, roughly 5-10 minutes

Installing the redis gem

#https://rubygems.org/gems/redis/versions/4.1.0

# Install the gem tool

yum install gem

# redis gem 4.1.0 requires Ruby >= 2.2.2

gem install -l redis-4.1.0.gem

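II. Configuring the Cluster Environment

1. Configuring redis.conf and Starting the Cluster

# One port per node (7001-7003 and 7011-7013 in this walkthrough)

port 7001

# Enable cluster mode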

cluster-enabled yes

# Node timeout in milliseconds (how long to wait for a PONG reply)

cluster-node-timeout 15000

# Bind IP

bind 192.168.42.119

# Comment out requirepass

# Cluster state file, maintained by the cluster itself

cluster-config-file /usr/local/redis/redis-cluster/data/nodes-7001.conf

# Add a startup script, cluster-start.sh

# Script content begins

../redis-server redis7001.conf &

../redis-server redis7002.conf &

../redis-server redis7003.conf &

../redis-server redis7011.conf &

../redis-server redis7012.conf &

../redis-server redis7013.conf &

# Script content ends

# Make the script executable

chmod +x cluster-start.sh

# Clear existing Redis data

flushall

# Create the cluster; --replicas 0 means no replicas

./redis-trib.rb create --replicas 0 192.168.42.119:7001 192.168.42.119:7002 192.168.42.119:7003

# --replicas 1 means one replica per master: 7001 pairs with 7011, 7002 with 7012, 7003 with 7013

./redis-trib.rb create --replicas 1 192.168.42.119:7001 192.168.42.119:7002 192.168.42.119:7003 192.168.42.119:7011 192.168.42.119:7012 192.168.42.119:7013

Creation log

[root@localhost redis-cluster]# ./redis-trib.rb create --replicas 1 192.168.42.119:7001 192.168.42.119:7002 192.168.42.119:7003 192.168.42.119:7011 192.168.42.119:7012 192.168.42.119:7013

>>> Creating cluster

>>> Performing hash slots allocation on 6 nodes...

Using 3 masters:

192.168.42.119:7001

192.168.42.119:7002

192.168.42.119:7003

Adding replica 192.168.42.119:7011 to 192.168.42.119:7001

Adding replica 192.168.42.119:7012 to 192.168.42.119:7002

Adding replica 192.168.42.119:7013 to 192.168.42.119:7003

M: ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001

slots:0-5460 (5461 slots) master

M: f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002

slots:5461-10922 (5462 slots) master

M: 8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003

slots:10923-16383 (5461 slots) master

S: 51db504b90fecc38aa24cdb79ddc0afdef1516bc 192.168.42.119:7011

replicates ae670b2f784ce4826bc292024e696189ca1ae344

S: b6bfeb5af929d97111aad4a3b6e5390b94d20add 192.168.42.119:7012

replicates f501e1208d38a9fa548bf1a273033cb1463dfff3

S: 41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013

replicates 8e0091b1597bdbaba3c459577aa81b22742a0bc0

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join.....

>>> Performing Cluster Check (using node 192.168.42.119:7001)

M: ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013

slots: (0 slots) slave

replicates 8e0091b1597bdbaba3c459577aa81b22742a0bc0

M: f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002

slots:5461-10922 (5462 slots) master

1 additional replica(s)

M: 8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: b6bfeb5af929d97111aad4a3b6e5390b94d20add 192.168.42.119:7012

slots: (0 slots) slave

replicates f501e1208d38a9fa548bf1a273033cb1463dfff3

S: 51db504b90fecc38aa24cdb79ddc0afdef1516bc 192.168.42.119:7011

slots: (0 slots) slave

replicates ae670b2f784ce4826bc292024e696189ca1ae344

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
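The log above splits the 16384 slots into 0-5460, 5461-10922, and 10923-16383. The sketch below shows one way to arrive at the same ranges: give each master 16384 / N slots, round each boundary, and let the last master take whatever remains. It is a sketch that reproduces the observed split, not a copy of the tool's code; `split_slots` is a hypothetical name.

```python
def split_slots(master_count, total_slots=16384):
    """Divide the slot space into one contiguous range per master."""
    per_master = total_slots / master_count
    ranges = []
    first = 0
    cursor = 0.0
    for index in range(master_count):
        # Round the upper boundary to the nearest slot number.
        last = int(cursor + per_master - 1 + 0.5)
        # The last master always ends at the final slot.
        if index == master_count - 1 or last > total_slots - 1:
            last = total_slots - 1
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


for first, last in split_slots(3):
    print(f"{first}-{last} ({last - first + 1} slots)")
```

With three masters the middle node ends up with 5462 slots and the other two with 5461, matching the log.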

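2. Testing the Cluster

Getting cluster node information

The output lists each node's IP and port, whether it is a master, the slot ranges it serves, and, for a replica, which master it replicates.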

192.168.42.119:7001> cluster nodes

ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001@17001 myself,master - 0 1613915801000 1 connected 0-5460

41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013@17013 slave 8e0091b1597bdbaba3c459577aa81b22742a0bc0 0 1613915804381 6 connected

f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002@17002 master - 0 1613915805403 2 connected 5461-10922

8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003@17003 master - 0 1613915806425 3 connected 10923-16383

b6bfeb5af929d97111aad4a3b6e5390b94d20add 192.168.42.119:7012@17012 slave f501e1208d38a9fa548bf1a273033cb1463dfff3 0 1613915806000 5 connected

51db504b90fecc38aa24cdb79ddc0afdef1516bc 192.168.42.119:7011@17011 slave ae670b2f784ce4826bc292024e696189ca1ae344 0 1613915805000 4 connected

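Adding data to the cluster

Each key is hashed to a slot, and the client is redirected to the node that owns it (redis-cli follows redirects when started with -c).

cluster keyslot mykey: returns the slot that mykey maps to

192.168.42.119:7001> set name zcp

# name hashes to slot 5798, so the client is redirected to 7002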

-> Redirected to slot [5798] located at 192.168.42.119:7002

OK

192.168.42.119:7002> get name

"zcp"

192.168.42.119:7002>
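To see why slot 5798 is served by 7002, the `cluster nodes` output shown earlier can be turned into a slot-to-node lookup. The sketch below assumes the field layout visible in that output (slot ranges start at the ninth whitespace-separated field) and skips bracketed entries for migrating slots. The helper names `parse_cluster_nodes` and `owner_of` are made up for illustration.

```python
CLUSTER_NODES = """
ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001@17001 myself,master - 0 1613915801000 1 connected 0-5460
41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013@17013 slave 8e0091b1597bdbaba3c459577aa81b22742a0bc0 0 1613915804381 6 connected
f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002@17002 master - 0 1613915805403 2 connected 5461-10922
8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003@17003 master - 0 1613915806425 3 connected 10923-16383
"""


def parse_cluster_nodes(text):
    """Return (first_slot, last_slot, address) tuples for nodes that own slots."""
    ranges = []
    for line in text.strip().splitlines():
        fields = line.split()
        if len(fields) < 9:
            continue  # replicas and empty masters list no slots
        address = fields[1].split("@")[0]  # drop the cluster bus port
        for token in fields[8:]:
            if token.startswith("["):
                continue  # slot being migrated, ignored in this sketch
            first, _, last = token.partition("-")
            ranges.append((int(first), int(last or first), address))
    return ranges


def owner_of(slot, ranges):
    """Return the address of the node serving the slot, or None."""
    for first, last, address in ranges:
        if first <= slot <= last:
            return address
    return None


print(owner_of(5798, parse_cluster_nodes(CLUSTER_NODES)))
```

Cluster-aware clients keep a similar table in memory so that most commands go straight to the right node without a redirect.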

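Adding nodes to the cluster

Add 7004 as a new master

cp redis7001.conf redis7004.conf

Update the port and related settings, then start the node.

Add the node by having 7004 meet 7001; 7004 then learns about every node that 7001 already knows.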

[root@localhost redis-cluster]# ./redis-trib.rb add-node 192.168.42.119:7004 192.168.42.119:7001

>>> Adding node 192.168.42.119:7004 to cluster 192.168.42.119:7001

>>> Performing Cluster Check (using node 192.168.42.119:7001)

M: ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013

slots: (0 slots) slave

replicates 8e0091b1597bdbaba3c459577aa81b22742a0bc0

M: f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002

slots:5461-10922 (5462 slots) master

1 additional replica(s)

M: 8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: b6bfeb5af929d97111aad4a3b6e5390b94d20add 192.168.42.119:7012

slots: (0 slots) slave

replicates f501e1208d38a9fa548bf1a273033cb1463dfff3

S: 51db504b90fecc38aa24cdb79ddc0afdef1516bc 192.168.42.119:7011

slots: (0 slots) slave

replicates ae670b2f784ce4826bc292024e696189ca1ae344

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.42.119:7004 to make it join the cluster.

[OK] New node added correctly.

# cluster nodes: no slots assigned yet and no replica

b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7004@17004 master - 0 1613916460000 0 connected

Add 7014 as a replica of 7004

redis-trib.rb add-node --slave --master-id b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7014 192.168.42.119:7001

--slave: the node being added is a replica

--master-id b47deb9e70a0b95caff33e866fa86893d09df89b: the node ID of master 7004

192.168.42.119:7014: the new replica

192.168.42.119:7001: a node that is already in the cluster

# Make 7014 a replica of 7004

[root@localhost redis-cluster]# ./redis-trib.rb add-node --slave --master-id b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7014 192.168.42.119:7001

>>> Adding node 192.168.42.119:7014 to cluster 192.168.42.119:7001

>>> Performing Cluster Check (using node 192.168.42.119:7001)

M: ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013

slots: (0 slots) slave

replicates 8e0091b1597bdbaba3c459577aa81b22742a0bc0

M: b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7004

slots: (0 slots) master

0 additional replica(s)

M: f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002

slots:5461-10922 (5462 slots) master

1 additional replica(s)

M: 8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: b6bfeb5af929d97111aad4a3b6e5390b94d20add 192.168.42.119:7012

slots: (0 slots) slave

replicates f501e1208d38a9fa548bf1a273033cb1463dfff3

S: 51db504b90fecc38aa24cdb79ddc0afdef1516bc 192.168.42.119:7011

slots: (0 slots) slave

replicates ae670b2f784ce4826bc292024e696189ca1ae344

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.42.119:7014 to make it join the cluster.

Waiting for the cluster to join.

>>> Configure node as replica of 192.168.42.119:7004.

[OK] New node added correctly.

# cluster nodes: the replica was added successfully

9b10d2b1de6642cd7af8fbe6ab62fe594b26c103 192.168.42.119:7014@17014 slave b47deb9e70a0b95caff33e866fa86893d09df89b 0 1613916985836 0 connected

b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7004@17004 master - 0 1613916985000 0 connected

Reassigning slots

Assign slots to the newly added node

redis-trib.rb reshard 192.168.42.119:7004 # reshard slots to the new master

How many slots do you want to move (from 1 to 16384)? 1000 # move 1000 slots

What is the receiving node ID? 464bc7590400441fafb63f2 # node ID of the new node

Source node #1:all # take slots from all existing masters

# Run the reshard

[root@localhost redis-cluster]# ./redis-trib.rb reshard 192.168.42.119:7004

>>> Performing Cluster Check (using node 192.168.42.119:7004)

M: b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7004

slots: (0 slots) master

1 additional replica(s)

M: ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013

slots: (0 slots) slave

replicates 8e0091b1597bdbaba3c459577aa81b22742a0bc0

M: f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002

slots:5461-10922 (5462 slots) master

1 additional replica(s)

S: 9b10d2b1de6642cd7af8fbe6ab62fe594b26c103 192.168.42.119:7014

slots: (0 slots) slave

replicates b47deb9e70a0b95caff33e866fa86893d09df89b

S: b6bfeb5af929d97111aad4a3b6e5390b94d20add 192.168.42.119:7012

slots: (0 slots) slave

replicates f501e1208d38a9fa548bf1a273033cb1463dfff3

S: 51db504b90fecc38aa24cdb79ddc0afdef1516bc 192.168.42.119:7011

slots: (0 slots) slave

replicates ae670b2f784ce4826bc292024e696189ca1ae344

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

# How many slots to move

How many slots do you want to move (from 1 to 16384)? 1000

What is the receiving node ID? b47deb9e70a0b95caff33e866fa86893d09df89b

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

# Use all masters as sources

Source node #1:all

Moving slot 329 from 192.168.42.119:7001 to 192.168.42.119:7004:

Moving slot 330 from 192.168.42.119:7001 to 192.168.42.119:7004:

Moving slot 331 from 192.168.42.119:7001 to 192.168.42.119:7004:

Moving slot 332 from 192.168.42.119:7001 to 192.168.42.119:7004:

(output omitted)……

# Resulting slot assignment as shown by cluster nodes

9b10d2b1de6642cd7af8fbe6ab62fe594b26c103 192.168.42.119:7014@17014 slave b47deb9e70a0b95caff33e866fa86893d09df89b 0 1613917576492 8 connected

b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7004@17004 master - 0 1613917576000 8 connected 0-332 5461-5794 10923-11255

# The ranges starting at 0, 5461, and 10923 now all belong to 7004
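With `all` as the source, the 1000 slots came from the three masters as 333, 334, and 333 (ranges 0-332, 5461-5794, and 10923-11255). The sketch below reproduces that split under an assumed rule: each source gives a share proportional to the slots it owns, the largest source rounds up, and the others round down. The rounding rule is inferred from the result above rather than quoted from the tool, and `reshard_plan` is a hypothetical name.

```python
import math


def reshard_plan(slots_to_move, source_slot_counts):
    """Return how many slots each source node gives up (node -> count)."""
    total = sum(source_slot_counts.values())
    # Largest source first; it rounds up, every other source rounds down.
    ordered = sorted(source_slot_counts.items(),
                     key=lambda item: item[1], reverse=True)
    plan = {}
    for index, (node, owned) in enumerate(ordered):
        share = slots_to_move * owned / total
        plan[node] = math.ceil(share) if index == 0 else math.floor(share)
    return plan


print(reshard_plan(1000, {"7001": 5461, "7002": 5462, "7003": 5461}))
```

Because 7002 held one more slot than the others before the reshard, it is the node that gives up 334.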

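Removing nodes from the cluster

Steps:

A node that owns no slots can be removed directly.

A node that owns slots must have them moved away first, and is then removed.

All remaining nodes are told to forget the removed node.

Remove 7011, the replica of 7001

# The node to remove is identified by its node ID, not by the host:port passed as the first argument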

[root@localhost redis-cluster]# ./redis-trib.rb del-node 192.168.42.119:7012 51db504b90fecc38aa24cdb79ddc0afdef1516bc

>>> Removing node 51db504b90fecc38aa24cdb79ddc0afdef1516bc from cluster 192.168.42.119:7012

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

# cluster nodes no longer lists the removed replica

Removing a master

First move the master's slots to another node

[root@localhost redis-cluster]# ./redis-trib.rb reshard 192.168.42.119:7001

>>> Performing Cluster Check (using node 192.168.42.119:7001)

M: ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001

slots:333-5460 (5128 slots) master

0 additional replica(s)

S: 41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013

slots: (0 slots) slave

replicates 8e0091b1597bdbaba3c459577aa81b22742a0bc0

M: b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7004

slots:0-332,5461-5794,10923-11255 (1000 slots) master

1 additional replica(s)

M: f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002

slots:5795-10922 (5128 slots) master

1 additional replica(s)

S: 9b10d2b1de6642cd7af8fbe6ab62fe594b26c103 192.168.42.119:7014

slots: (0 slots) slave

replicates b47deb9e70a0b95caff33e866fa86893d09df89b

M: 8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003

slots:11256-16383 (5128 slots) master

1 additional replica(s)

S: b6bfeb5af929d97111aad4a3b6e5390b94d20add 192.168.42.119:7012

slots: (0 slots) slave

replicates f501e1208d38a9fa548bf1a273033cb1463dfff3

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

# Move 7001's slots to 7004; the source node is 7001

How many slots do you want to move (from 1 to 16384)? 5128

What is the receiving node ID? b47deb9e70a0b95caff33e866fa86893d09df89b

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:b47deb9e70a0b95caff33e866fa86893d09df89b

*** It is not possible to use the target node as source node.

Source node #1:ae670b2f784ce4826bc292024e696189ca1ae344

Source node #2:done

(output omitted)……

Moving slot 5460 from 192.168.42.119:7001 to 192.168.42.119:7004:

# cluster nodes: 7001 no longer owns any slots

ae670b2f784ce4826bc292024e696189ca1ae344 192.168.42.119:7001@17001 master - 0 1613918817072 1 connected

# Delete node 7001 now that all of its slots are gone; if any slots remain, the tool reports an error

[root@localhost redis-cluster]# ./redis-trib.rb del-node 192.168.42.119:7001 b47deb9e70a0b95caff33e866fa86893d09df89b

>>> Removing node b47deb9e70a0b95caff33e866fa86893d09df89b from cluster 192.168.42.119:7001

# The wrong node ID (7004's) was entered here; removing a node that still owns slots fails

[ERR] Node 192.168.42.119:7004 is not empty! Reshard data away and try again.

[root@localhost redis-cluster]# ./redis-trib.rb del-node 192.168.42.119:7001 ae670b2f784ce4826bc292024e696189ca1ae344

>>> Removing node ae670b2f784ce4826bc292024e696189ca1ae344 from cluster 192.168.42.119:7001

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

# cluster nodes: node 7001 is gone

192.168.42.119:7002> cluster nodes

8e0091b1597bdbaba3c459577aa81b22742a0bc0 192.168.42.119:7003@17003 master - 0 1613919040101 3 connected 11256-16383

9b10d2b1de6642cd7af8fbe6ab62fe594b26c103 192.168.42.119:7014@17014 slave b47deb9e70a0b95caff33e866fa86893d09df89b 0 1613919040000 8 connected

b47deb9e70a0b95caff33e866fa86893d09df89b 192.168.42.119:7004@17004 master - 0 1613919041120 8 connected 0-5794 10923-11255

41a18bd61f4124a792d78a06a736da82c45504e6 192.168.42.119:7013@17013 slave 8e0091b1597bdbaba3c459577aa81b22742a0bc0 0 1613919038067 6 connected

b6bfeb5af929d97111aad4a3b6e5390b94d20add 192.168.42.119:7012@17012 slave f501e1208d38a9fa548bf1a273033cb1463dfff3 0 1613919039084 5 connected

f501e1208d38a9fa548bf1a273033cb1463dfff3 192.168.42.119:7002@17002 myself,master - 0 1613919039000 2 connected 5795-10922
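After removing a master it is worth confirming that every slot still has an owner. The sketch below checks the ranges from the final `cluster nodes` output above; `uncovered_slots` is a hypothetical helper, and the same check is what the "All 16384 slots covered" line in the tool output reports.

```python
def uncovered_slots(ranges, total_slots=16384):
    """Return the slot numbers that no range covers."""
    covered = [False] * total_slots
    for first, last in ranges:
        for slot in range(first, last + 1):
            covered[slot] = True
    return [slot for slot, ok in enumerate(covered) if not ok]


# Ranges from the final cluster nodes output: 7003, 7004 (two ranges), 7002.
remaining = [(11256, 16383), (0, 5794), (10923, 11255), (5795, 10922)]
print(len(uncovered_slots(remaining)))  # 0
```

If 7001 had been removed before its slots were moved, this check would report the slots it used to own as uncovered.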

3. One Master with Multiple Replicas

./redis-trib.rb create --replicas 2 \
192.168.42.119:7001 192.168.42.119:7002 192.168.42.119:7003 \
192.168.42.119:7011 192.168.42.119:7012 192.168.42.119:7013 \
192.168.42.119:7021 192.168.42.119:7022 192.168.42.119:7023
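The number of masters follows from the node count and the `--replicas` value: with nine nodes and two replicas per master, three nodes become masters. The sketch below captures that arithmetic together with the requirement that a cluster has at least three masters; `planned_masters` is a hypothetical name.

```python
def planned_masters(node_count, replicas_per_master):
    """Return how many of the given nodes become masters."""
    masters = node_count // (replicas_per_master + 1)
    if masters < 3:
        raise ValueError("Redis Cluster requires at least 3 master nodes")
    return masters


print(planned_masters(9, 2))  # 3
print(planned_masters(6, 1))  # 3
```

Six nodes with `--replicas 2` would leave only two masters, so that combination is rejected.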

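III. Cluster Notes

Inter-node communication: the Gossip protocol

Gossip protocol: https://zhuanlan.zhihu.com/p/41228196

Bitcoin uses this protocol.

Nodes communicate with each other continuously; Gossip is an eventually consistent protocol.

- A Gossip round is started by a seed node. When a seed node has a state update to spread to the rest of the network, it picks a few nearby nodes at random and sends them the message. Each node that receives it repeats the process until every node in the network has the message. This takes some time: there is no guarantee that all nodes have the message at any given moment, but in theory all of them eventually will, which is why Gossip is an eventually consistent protocol.

The main job of the Gossip protocol is information exchange, carried by the Gossip messages that nodes send one another. The common message types are ping, pong, meet, and fail.

- meet: announces a new node. The sender tells the receiver to join the current cluster; once the meet exchange completes, the receiving node is part of the cluster and takes part in the periodic ping/pong exchange.

- ping: the most frequently exchanged message. Every node sends ping messages to other nodes each second to check whether they are online and to exchange state. A ping carries state data about the sender and about some other nodes.

- pong: the reply to a ping or meet message, confirming that communication works. A pong also carries the sender's own state data.

- fail: when a node decides that another node in the cluster is down, it broadcasts a fail message to the cluster.

- Port offset 10000:

Every node has a dedicated port for inter-node communication: its client port plus 10000. For a node serving clients on 6379, the inter-node port is 16379. At intervals, each node sends ping messages to a few other nodes, and those nodes reply with pong.

Information exchanged: failure information, node additions and removals, hash slot information, and so on.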
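The dissemination process described above can be simulated in a few lines. This is a toy model rather than Redis's implementation: it assumes one seed node, a fixed fan-out of three random peers per informed node per round, and no message loss. `gossip_rounds` and its parameters are made up for illustration.

```python
import random


def gossip_rounds(node_count, fanout=3, seed=0, max_rounds=1000):
    """Return how many rounds it takes until every node has the update."""
    rng = random.Random(seed)
    informed = {0}  # node 0 is the seed that starts with the update
    rounds = 0
    while len(informed) < node_count and rounds < max_rounds:
        newly_informed = set()
        for node in informed:
            peers = [p for p in range(node_count) if p != node]
            # Each informed node forwards the update to a few random peers.
            for peer in rng.sample(peers, min(fanout, len(peers))):
                newly_informed.add(peer)
        informed |= newly_informed
        rounds += 1
    return rounds


print(gossip_rounds(50))
```

The informed set can at most quadruple per round with a fan-out of three, so the update needs several rounds to reach everyone. That delay is the reason the protocol is eventually consistent rather than immediately consistent.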

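Inter-node communication: message parsing flow

Every message consists of a header and a body.

Header: contains the sending node's own state (node ID, slot mapping, role, whether it is offline, and so on). The receiver learns about the sender from the header.

Body: contains information about other nodes.

When a node receives a PING message, it parses it into the header (the sender's information) and the body (the attached information about other nodes). It then checks whether the message is of type MEET. For a MEET, it checks whether the sender is a new node; if so, it sends MEET messages to handshake with the other nodes, and then replies.

Adding a new node: the existing node it is associated with sends a meet message to the new node, telling it to join the cluster. The new node then sends meet messages to the other nodes, which record its information.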
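The header/body split and the handling steps above can be sketched as a small data structure plus a handler. All names here (`GossipMessage`, `handle_message`, the field names and state strings) are hypothetical and chosen for illustration; the real wire format carries more fields. The sketch assumes an unknown sender is only accepted through a meet message, and that nodes mentioned in the body by a known sender are added to the local view.

```python
from dataclasses import dataclass, field


@dataclass
class GossipMessage:
    kind: str                     # "meet", "ping", "pong" or "fail"
    sender_id: str                # header: ID of the sending node
    sender_slots: tuple = ()      # header: slot ranges the sender claims
    known_nodes: dict = field(default_factory=dict)  # body: node ID -> state


def handle_message(known, message):
    """Update the local view (node ID -> state); return the reply kind or None."""
    if message.sender_id not in known:
        if message.kind != "meet":
            return None           # unknown senders must introduce themselves
        known[message.sender_id] = "online"
    # Learn about nodes the sender told us about, keeping what we already know.
    for node_id, state in message.known_nodes.items():
        known.setdefault(node_id, state)
    return "pong" if message.kind in ("meet", "ping") else None


view = {"A": "online"}
reply = handle_message(view, GossipMessage("meet", "B", known_nodes={"C": "online"}))
print(reply, sorted(view))
```

After the meet from B, the local view contains A, B, and C, and the node answers with a pong.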

Inter-node communication: PING/PONG message exchange

cluster-node-timeout is set in redis.conf

# milliseconds

# cluster-node-timeout 15000

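Redis Cluster node decommissioning flow

A node must release all of its slots before it goes offline.

A replica owns no slots and can be taken offline directly.

A master first moves its slots to other nodes and then goes offline.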

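Inter-node communication: request routing

MOVED: the node replies to the client with a MOVED error that carries the target node's IP and port.

On receiving MOVED, the client connects to the target node and sends the command again.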
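On the client side, following a redirect starts with parsing the error text, which has the form `MOVED <slot> <host>:<port>`. The sketch below shows only that parsing step; `parse_moved` is a hypothetical helper, and the sample address is the one from the redirect shown earlier.

```python
def parse_moved(error_text):
    """Return (slot, host, port) for a MOVED error, or None for anything else."""
    parts = error_text.split()
    if len(parts) != 3 or parts[0] != "MOVED":
        return None
    host, _, port = parts[2].rpartition(":")
    return int(parts[1]), host, int(port)


print(parse_moved("MOVED 5798 192.168.42.119:7002"))
```

A client would then reconnect to the returned host and port, resend the command, and typically update its cached slot table so later commands for that slot go to the right node directly.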

Redis Cluster failover: subjective down

A node that does not reply validly is marked as PFAIL (subjectively down).

The threshold is cluster-node-timeout.
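The subjective-down check can be sketched as a scan over the last time each peer answered. This is a simplified model: it assumes the node keeps a timestamp of the last pong per peer and compares the silence against cluster-node-timeout (15000 ms in the configuration shown earlier). `find_pfail` and its argument names are hypothetical.

```python
def find_pfail(last_pong_ms, now_ms, node_timeout_ms=15000):
    """Return the peers whose last pong is older than the node timeout."""
    return sorted(
        node for node, seen in last_pong_ms.items()
        if now_ms - seen > node_timeout_ms
    )


last_pong = {"7002": 100_000, "7003": 84_000, "7011": 99_500}
print(find_pfail(last_pong, now_ms=100_000))  # ['7003']
```

PFAIL is only this node's local opinion; it becomes a cluster-wide decision through the objective-down step.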

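Redis Cluster failover: objective down

When a node receives a ping message that reports a PFAIL node, it pings that node itself, marks it PFAIL if there is no valid reply, and then tries to mark it objectively down.

It counts the valid failure reports; if they exceed half the number of slot-holding master nodes, the node is marked objectively down and a fail message is broadcast to the cluster.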
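The majority rule above comes down to a single comparison. The sketch below assumes the failure reports are collected as a set of reporting master IDs and that expired reports have already been dropped; `should_mark_fail` is a hypothetical name.

```python
def should_mark_fail(failure_reports, slot_master_count):
    """Return True when more than half of the slot-holding masters report the node down."""
    needed = slot_master_count // 2 + 1
    return len(failure_reports) >= needed


print(should_mark_fail({"7002", "7003"}, slot_master_count=3))  # True
print(should_mark_fail({"7002"}, slot_master_count=3))          # False
```

With three slot-holding masters, two reports are enough; with four, it takes three.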

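Redis Cluster failure recovery

When a master goes down, one of its replicas is chosen as the new master to keep the cluster highly available.

The master nodes then hold the election.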