Android Rendering: Revisiting Hardware Acceleration (Part 1)

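Android offers many drawing APIs: the Skia-based 2D interfaces, and OpenGL ES and Vulkan for 3D. Early Android releases mostly drew in 2D software mode, for example when drawing a Bitmap. As users came to expect richer visuals and hardware grew more capable, the original rendering path could no longer keep up, so hardware acceleration has been enabled by default since Android 4.0 (API level 14) to speed up rendering.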

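Put simply, hardware acceleration means relying on the GPU to speed up drawing. The difference between software and hardware rendering is whether the CPU or the GPU does the drawing: if the GPU draws, it is hardware-accelerated rendering; otherwise it is software rendering. Why replace the CPU with the GPU?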

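Rendering, and animation in particular, involves a great deal of computation: position, size, interpolation, scaling, rotation, alpha changes, transitions, frosted-glass blur, and even 3D transforms and physics simulation. There is comparatively little branching logic, and much of the work is floating-point arithmetic. CPUs and GPUs are built for different workloads, and the GPU is better suited to this kind of data-parallel computation; using it this way is also a form of heterogeneous computing on mobile devices.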

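In Android, however, hardware acceleration optimizes more than the drawing itself. Before anything is drawn, it also makes significant optimizations in how the region to be drawn is built. Its behavior can therefore be analyzed in two parts:

- Front-end strategy: how to build the region that needs to be drawn

- Back-end drawing: a dedicated render thread that draws with the GPU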
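To make the front-end strategy concrete, here is a minimal sketch in plain Java (not framework code) of the idea that only invalidated views re-record their drawing commands. `SketchView`, `invalidate`, `updateIfDirty`, and `recordCount` are hypothetical names chosen for illustration; the framework's own version of this check is far more involved.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a View that caches its recorded drawing commands.
class SketchView {
    final String name;
    final List<SketchView> children = new ArrayList<>();
    private boolean invalidated = true;   // a new view has nothing recorded yet
    int recordCount = 0;                  // how many times this view re-recorded

    SketchView(String name) { this.name = name; }

    SketchView add(SketchView child) { children.add(child); return this; }

    // Mark only this view as needing a new recording.
    void invalidate() { invalidated = true; }

    // Walk the tree; re-record only the views whose flag is set.
    void updateIfDirty() {
        if (invalidated) {
            recordCount++;        // stands in for running onDraw() into a recording canvas
            invalidated = false;
        }
        for (SketchView child : children) {
            child.updateIfDirty();
        }
    }
}
```

After a first traversal every view has been recorded once. Invalidating a single child and traversing again re-records that child alone, while the root and its siblings reuse their cached recordings.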

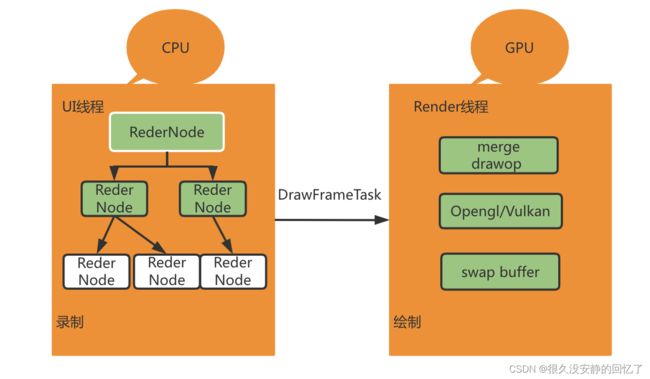

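In more detail, the first step converts the 2D drawing operations into the corresponding 3D drawing operations; we call this conversion recording. The second step renders them on the RenderThread through the GPU, using OpenGL ES or Vulkan. The View drawing path in the framework contains the code that controls hardware acceleration: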

    boolean draw(Canvas canvas, ViewGroup parent, long drawingTime) {
        // Check whether the canvas is hardware accelerated
        final boolean hardwareAcceleratedCanvas = canvas.isHardwareAccelerated();
        ......
        if (hardwareAcceleratedCanvas) {
            // Clear INVALIDATED flag to allow invalidation to occur during rendering, but
            // retain the flag's value temporarily in the mRecreateDisplayList flag
            mRecreateDisplayList = (mPrivateFlags & PFLAG_INVALIDATED) != 0;
            mPrivateFlags &= ~PFLAG_INVALIDATED;
        }
        ......
        if (drawingWithRenderNode) { // hardware-accelerated path
            // Delay getting the display list until animation-driven alpha values are
            // set up and possibly passed on to the view
            renderNode = updateDisplayListIfDirty();
            if (!renderNode.hasDisplayList()) {
                // Uncommon, but possible. If a view is removed from the hierarchy during the call
                // to getDisplayList(), the display list will be marked invalid and we should not
                // try to use it again.
                renderNode = null;
                drawingWithRenderNode = false;
            }
        }
        .....
        if (!drawingWithDrawingCache) {
            if (drawingWithRenderNode) {
                mPrivateFlags &= ~PFLAG_DIRTY_MASK;
                ((RecordingCanvas) canvas).drawRenderNode(renderNode); // hardware-accelerated path
            } else {
                // Fast path for layouts with no backgrounds
                if ((mPrivateFlags & PFLAG_SKIP_DRAW) == PFLAG_SKIP_DRAW) {
                    mPrivateFlags &= ~PFLAG_DIRTY_MASK;
                    dispatchDraw(canvas);
                } else {
                    draw(canvas);
                }
            }
        } else if (cache != null) {
            mPrivateFlags &= ~PFLAG_DIRTY_MASK;
            if (layerType == LAYER_TYPE_NONE || mLayerPaint == null) {
                // no layer paint, use temporary paint to draw bitmap
                Paint cachePaint = parent.mCachePaint;
                if (cachePaint == null) {
                    cachePaint = new Paint();
                    cachePaint.setDither(false);
                    parent.mCachePaint = cachePaint;
                }
                cachePaint.setAlpha((int) (alpha * 255));
                canvas.drawBitmap(cache, 0.0f, 0.0f, cachePaint);
            } else {
                // use layer paint to draw the bitmap, merging the two alphas, but also restore
                int layerPaintAlpha = mLayerPaint.getAlpha();
                if (alpha < 1) {
                    mLayerPaint.setAlpha((int) (alpha * layerPaintAlpha));
                }
                canvas.drawBitmap(cache, 0.0f, 0.0f, mLayerPaint);
                if (alpha < 1) {
                    mLayerPaint.setAlpha(layerPaintAlpha);
                }
            }
        }
        ......
        return more;
    }

Building means recursively traversing all views and caching the required operations, which are then handed to a separate render thread to be rendered with OpenGL. In Android's hardware acceleration framework, each View is abstracted as a RenderNode, and each drawing call in a View is abstracted as a DrawOp (DisplayListOp). For example, a drawLine call in a View is recorded as a DrawLineOp, a drawBitmap call as a DrawBitmapOp, and the drawing of each child View as a DrawRenderNodeOp. Each DrawOp has a corresponding OpenGL drawing command and also holds the data needed for drawing.
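The record-then-replay idea can be sketched in a few lines of plain Java. This is an illustrative model only, not the hwui implementation: `SketchOp` and `SketchRenderNode` are hypothetical names, and the "drawing commands" are just strings appended to a list.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical op interface: each op stores its own arguments and can replay itself.
interface SketchOp {
    void replay(List<String> out);
}

// Stand-in for a RenderNode: owns the ops recorded for one view.
class SketchRenderNode {
    final String name;
    final List<SketchOp> displayList = new ArrayList<>();

    SketchRenderNode(String name) { this.name = name; }

    // Recording calls: nothing is drawn here, the arguments are only captured.
    void drawLine(float x0, float y0, float x1, float y1) {
        displayList.add(out ->
                out.add(name + ":line(" + x0 + "," + y0 + "," + x1 + "," + y1 + ")"));
    }

    void drawBitmap(String bitmapId) {
        displayList.add(out -> out.add(name + ":bitmap(" + bitmapId + ")"));
    }

    // A child view is recorded as a single op that replays the child's own list.
    void drawRenderNode(SketchRenderNode child) {
        displayList.add(child::replay);
    }

    // Replay walks the recorded ops in order, descending into child nodes.
    void replay(List<String> out) {
        for (SketchOp op : displayList) {
            op.replay(out);
        }
    }
}
```

Because the parent only stores a reference to the child node, the child can re-record its own list later without the parent having to record again.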

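Once building is complete, the tree of drawing Ops can be handed to the render thread. This is where hardware acceleration differs most from software rendering: in software rendering a View is normally drawn on the main thread, whereas with hardware acceleration drawing normally happens on a separate thread unless there are special requirements. This takes a lot of load off the main thread and improves the responsiveness of the UI thread.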
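The hand-off to a separate thread might look roughly like the following plain-Java sketch. It assumes a single worker thread standing in for the render thread; `SketchFramePipeline` and `submitFrame` are hypothetical names, and the "drawing" is reduced to building a string.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical stand-in for handing a recorded frame to a dedicated render thread.
class SketchFramePipeline {
    // One daemon worker thread plays the role of the render thread.
    private final ExecutorService renderThread = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "SketchRenderThread");
        t.setDaemon(true);
        return t;
    });

    // Called on the "UI thread": snapshots the ops and returns without waiting for the draw.
    Future<String> submitFrame(List<String> recordedOps) {
        List<String> snapshot = new ArrayList<>(recordedOps);
        return renderThread.submit(() ->
                Thread.currentThread().getName() + " drew " + snapshot.size() + " ops");
    }

    void shutdown() { renderThread.shutdown(); }
}
```

The caller gets a `Future` back immediately and is free to handle input or record the next frame while the worker processes the snapshot.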

Building DrawOps with HardwareRenderer

HardwareRenderer is the entry point of the whole hardware acceleration pipeline:

    public HardwareRenderer() {
        // Create the root RenderNode
        mRootNode = RenderNode.adopt(nCreateRootRenderNode());
        mRootNode.setClipToBounds(false);
        // Create the native RenderProxy
        mNativeProxy = nCreateProxy(!mOpaque, mIsWideGamut, mRootNode.mNativeRenderNode);
        if (mNativeProxy == 0) {
            throw new OutOfMemoryError("Unable to create hardware renderer");
        }
        Cleaner.create(this, new DestroyContextRunnable(mNativeProxy));
        ProcessInitializer.sInstance.init(mNativeProxy);
    }

The native part of hwui lives under libs/hwui/renderthread/, and interested readers can browse it there. Some core snippets follow:

    RenderProxy::RenderProxy(bool translucent, RenderNode* rootRenderNode,
                             IContextFactory* contextFactory)
            : mRenderThread(RenderThread::getInstance()), mContext(nullptr) {
        mContext = mRenderThread.queue().runSync([&]() -> CanvasContext* {
            return CanvasContext::create(mRenderThread, translucent, rootRenderNode, contextFactory);
        });
        mDrawFrameTask.setContext(&mRenderThread, mContext, rootRenderNode,
                                  pthread_gettid_np(pthread_self()), getRenderThreadTid());
    }

As RenderThread::getInstance() shows, RenderThread is a singleton: each process has at most one hardware render thread.
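As a rough illustration of the two ideas in this constructor (a process-wide singleton thread, and a blocking `runSync` call onto it), here is a plain-Java sketch. `SketchRenderThread` is a hypothetical class; it borrows the names `getInstance` and `runSync` from the snippet above but makes no claim about how the native versions are implemented.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical process-wide render thread: one instance, created lazily.
final class SketchRenderThread {
    private static SketchRenderThread sInstance;

    // A single daemon worker, so every task runs on the same thread.
    private final ExecutorService queue = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "SketchRenderThread");
        t.setDaemon(true);
        return t;
    });

    private SketchRenderThread() {}

    static synchronized SketchRenderThread getInstance() {
        if (sInstance == null) {
            sInstance = new SketchRenderThread();
        }
        return sInstance;
    }

    // Run a task on the render thread and block the caller until it finishes.
    <T> T runSync(Callable<T> task) {
        try {
            return queue.submit(task).get();
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }
}
```

Calling `getInstance()` twice yields the same object, and anything passed to `runSync` executes on the worker thread while the caller waits, which mirrors how the constructor above obtains its context before continuing.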

    void RenderThread::initThreadLocals() {
        setupFrameInterval();
        initializeChoreographer();
        mEglManager = new EglManager();
        mRenderState = new RenderState(*this);
        mVkManager = VulkanManager::getInstance();
        mCacheManager = new CacheManager();
    }

This initializes the managers for both OpenGL and Vulkan, and either OpenGL or Vulkan is then chosen as needed. The Vulkan-related code lives in the same directory.
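The source does not show how the choice between the two backends is made. Purely as an assumption for illustration, a selector could request Vulkan and fall back to OpenGL when it is unavailable; `SketchPipeline`, its string values, and the fallback rule are all hypothetical.

```java
// Hypothetical pipeline selector; the names and string values are illustrative only.
enum SketchPipeline {
    OPENGL, VULKAN;

    // Pick Vulkan only when it is both requested and available; otherwise use OpenGL.
    static SketchPipeline select(String requested, boolean vulkanAvailable) {
        if ("vulkan".equalsIgnoreCase(requested) && vulkanAvailable) {
            return VULKAN;
        }
        return OPENGL;
    }
}
```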

The JNI implementation of DisplayListCanvas, in frameworks/base/core/jni/android_view_DisplayListCanvas.cpp, is as follows:

    const char* const kClassPathName = "android/view/DisplayListCanvas";

    static JNINativeMethod gMethods[] = {
        // ------------ @FastNative ------------------
        { "nCallDrawGLFunction", "(JJLjava/lang/Runnable;)V",
                (void*) android_view_DisplayListCanvas_callDrawGLFunction },
        // ------------ @CriticalNative --------------
        { "nCreateDisplayListCanvas", "(JII)J", (void*) android_view_DisplayListCanvas_createDisplayListCanvas },
        { "nResetDisplayListCanvas", "(JJII)V", (void*) android_view_DisplayListCanvas_resetDisplayListCanvas },
        { "nGetMaximumTextureWidth", "()I", (void*) android_view_DisplayListCanvas_getMaxTextureWidth },
        { "nGetMaximumTextureHeight", "()I", (void*) android_view_DisplayListCanvas_getMaxTextureHeight },
        { "nInsertReorderBarrier", "(JZ)V", (void*) android_view_DisplayListCanvas_insertReorderBarrier },
        { "nFinishRecording", "(J)J", (void*) android_view_DisplayListCanvas_finishRecording },
        { "nDrawRenderNode", "(JJ)V", (void*) android_view_DisplayListCanvas_drawRenderNode },
        { "nDrawLayer", "(JJ)V", (void*) android_view_DisplayListCanvas_drawLayer },
        { "nDrawCircle", "(JJJJJ)V", (void*) android_view_DisplayListCanvas_drawCircleProps },
        { "nDrawRoundRect", "(JJJJJJJJ)V", (void*) android_view_DisplayListCanvas_drawRoundRectProps },
    };

The RecordingCanvas implementation:

    RecordingCanvas::RecordingCanvas(size_t width, size_t height)
            : mState(*this), mResourceCache(ResourceCache::getInstance()) {
        resetRecording(width, height);
    }

    void RecordingCanvas::resetRecording(int width, int height, RenderNode* node) {
        LOG_ALWAYS_FATAL_IF(mDisplayList, "prepareDirty called a second time during a recording!");
        mDisplayList = new DisplayList();
        mState.initializeRecordingSaveStack(width, height);
        mDeferredBarrierType = DeferredBarrierType::InOrder;
    }

RecordingCanvas defines the various Ops, for example:

    struct DrawArc final : Op {
        static const auto kType = Type::DrawArc;
        DrawArc(const SkRect& oval, SkScalar startAngle, SkScalar sweepAngle, bool useCenter,
                const SkPaint& paint)
                : oval(oval)
                , startAngle(startAngle)
                , sweepAngle(sweepAngle)
                , useCenter(useCenter)
                , paint(paint) {}
        SkRect oval;
        SkScalar startAngle;
        SkScalar sweepAngle;
        bool useCenter;
        SkPaint paint;
        void draw(SkCanvas* c, const SkMatrix&) const {
            c->drawArc(oval, startAngle, sweepAngle, useCenter, paint);
        }
    };

As mentioned above, a RenderNode stores the data and operation information of its Ops:

    void RenderNode::prepareTreeImpl(TreeObserver& observer, TreeInfo& info, bool functorsNeedLayer) {
        if (mDamageGenerationId == info.damageGenerationId) {
            // We hit the same node a second time in the same tree. We don't know the minimal
            // damage rect anymore, so just push the biggest we can onto our parent's transform
            // We push directly onto parent in case we are clipped to bounds but have moved position.
            info.damageAccumulator->dirty(DIRTY_MIN, DIRTY_MIN, DIRTY_MAX, DIRTY_MAX);
        }
        info.damageAccumulator->pushTransform(this);

        if (info.mode == TreeInfo::MODE_FULL) {
            pushStagingPropertiesChanges(info);
        }

        if (!mProperties.getAllowForceDark()) {
            info.disableForceDark++;
        }
        if (!mProperties.layerProperties().getStretchEffect().isEmpty()) {
            info.stretchEffectCount++;
        }

        uint32_t animatorDirtyMask = 0;
        if (CC_LIKELY(info.runAnimations)) {
            animatorDirtyMask = mAnimatorManager.animate(info);
        }

        bool willHaveFunctor = false;
        if (info.mode == TreeInfo::MODE_FULL && mStagingDisplayList) {
            willHaveFunctor = mStagingDisplayList.hasFunctor();
        } else if (mDisplayList) {
            willHaveFunctor = mDisplayList.hasFunctor();
        }
        bool childFunctorsNeedLayer =
                mProperties.prepareForFunctorPresence(willHaveFunctor, functorsNeedLayer);

        if (CC_UNLIKELY(mPositionListener.get())) {
            mPositionListener->onPositionUpdated(*this, info);
        }

        prepareLayer(info, animatorDirtyMask);
        if (info.mode == TreeInfo::MODE_FULL) {
            pushStagingDisplayListChanges(observer, info);
        }

        if (mDisplayList) {
            info.out.hasFunctors |= mDisplayList.hasFunctor();
            mHasHolePunches = mDisplayList.hasHolePunches();
            bool isDirty = mDisplayList.prepareListAndChildren(
                    observer, info, childFunctorsNeedLayer,
                    [this](RenderNode* child, TreeObserver& observer, TreeInfo& info,
                           bool functorsNeedLayer) {
                        child->prepareTreeImpl(observer, info, functorsNeedLayer);
                        mHasHolePunches |= child->hasHolePunches();
                    });
            if (isDirty) {
                damageSelf(info);
            }
        } else {
            mHasHolePunches = false;
        }
        pushLayerUpdate(info);

        if (!mProperties.getAllowForceDark()) {
            info.disableForceDark--;
        }
        if (!mProperties.layerProperties().getStretchEffect().isEmpty()) {
            info.stretchEffectCount--;
        }
        info.damageAccumulator->popTransform();
    }

    void RenderNode::pushLayerUpdate(TreeInfo& info) {
    #ifdef __ANDROID__  // Layoutlib does not support CanvasContext and Layers
        LayerType layerType = properties().effectiveLayerType();
        // If we are not a layer OR we cannot be rendered (eg, view was detached)
        // we need to destroy any Layers we may have had previously
        if (CC_LIKELY(layerType != LayerType::RenderLayer) || CC_UNLIKELY(!isRenderable()) ||
            CC_UNLIKELY(properties().getWidth() == 0) || CC_UNLIKELY(properties().getHeight() == 0) ||
            CC_UNLIKELY(!properties().fitsOnLayer())) {
            if (CC_UNLIKELY(hasLayer())) {
                this->setLayerSurface(nullptr);
            }
            return;
        }

        if (info.canvasContext.createOrUpdateLayer(this, *info.damageAccumulator, info.errorHandler)) {
            damageSelf(info);
        }

        if (!hasLayer()) {
            return;
        }

        SkRect dirty;
        info.damageAccumulator->peekAtDirty(&dirty);
        info.layerUpdateQueue->enqueueLayerWithDamage(this, dirty);
        if (!dirty.isEmpty()) {
            mStretchMask.markDirty();
        }

        // There might be prefetched layers that need to be accounted for.
        // That might be us, so tell CanvasContext that this layer is in the
        // tree and should not be destroyed.
        info.canvasContext.markLayerInUse(this);
    #endif
    }

In the core flow, the drawing Ops are all stored in mDisplayList, and prepareTreeImpl is called recursively on every RenderNode.

pushLayerUpdate adds each RenderNode that needs updating, together with the size of its damage, to the layerUpdateQueue in TreeInfo.
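A queue of this kind can be modeled in plain Java as a map from node to damage rect. The sketch below is an assumption-laden illustration: `SketchLayerUpdateQueue` is hypothetical, nodes are identified by name, rects are `int[]{left, top, right, bottom}`, and merging repeated entries by union is a design choice made here rather than something the snippet above shows.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical queue of layers to redraw; damage is an int[]{left, top, right, bottom}.
class SketchLayerUpdateQueue {
    // Insertion-ordered, so layers are processed in the order they were first enqueued.
    private final Map<String, int[]> entries = new LinkedHashMap<>();

    // Enqueue a node with its damage; a node already queued gets the union of both rects.
    void enqueueLayerWithDamage(String nodeName, int[] damage) {
        int[] existing = entries.get(nodeName);
        if (existing == null) {
            entries.put(nodeName, damage.clone());
        } else {
            existing[0] = Math.min(existing[0], damage[0]);
            existing[1] = Math.min(existing[1], damage[1]);
            existing[2] = Math.max(existing[2], damage[2]);
            existing[3] = Math.max(existing[3], damage[3]);
        }
    }

    int size() { return entries.size(); }

    int[] damageOf(String nodeName) { return entries.get(nodeName); }
}
```

Keeping one entry per node means a layer is redrawn once per frame, over the smallest rect that covers everything that changed in it.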

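The accumulator's popTransform applies the Node's DirtyStack entry, folding the damage collected for that Node into its parent. Once the DisplayLists have been collected, the next step is drawing.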
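The push/dirty/pop pattern used around each node in prepareTreeImpl can be illustrated with a small plain-Java model. This is a simplified sketch, not the hwui class: `SketchDamageAccumulator` is hypothetical and supports translation only, whereas real nodes carry full transform matrices.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical damage accumulator that supports translation-only transforms.
class SketchDamageAccumulator {
    private static final class Frame {
        final int dx, dy;   // offset of this node inside its parent
        int[] dirty;        // {left, top, right, bottom} in this node's coordinates, or null
        Frame(int dx, int dy) { this.dx = dx; this.dy = dy; }
    }

    private final Deque<Frame> stack = new ArrayDeque<>();

    SketchDamageAccumulator() { stack.push(new Frame(0, 0)); }  // root frame

    void pushTransform(int dx, int dy) { stack.push(new Frame(dx, dy)); }

    // Mark a rect dirty in the coordinate space of the current (top) frame.
    void dirty(int l, int t, int r, int b) { union(stack.peek(), l, t, r, b); }

    // Pop the top frame and fold its damage into the parent, shifted by the node's offset.
    void popTransform() {
        if (stack.size() <= 1) {
            throw new IllegalStateException("cannot pop the root frame");
        }
        Frame child = stack.pop();
        if (child.dirty != null) {
            int[] d = child.dirty;
            union(stack.peek(), d[0] + child.dx, d[1] + child.dy, d[2] + child.dx, d[3] + child.dy);
        }
    }

    // Damage accumulated so far in the current frame (an empty rect if none).
    int[] peekAtDirty() {
        int[] d = stack.peek().dirty;
        return d == null ? new int[] {0, 0, 0, 0} : d.clone();
    }

    private static void union(Frame f, int l, int t, int r, int b) {
        if (f.dirty == null) {
            f.dirty = new int[] {l, t, r, b};
        } else {
            f.dirty[0] = Math.min(f.dirty[0], l);
            f.dirty[1] = Math.min(f.dirty[1], t);
            f.dirty[2] = Math.max(f.dirty[2], r);
            f.dirty[3] = Math.max(f.dirty[3], b);
        }
    }
}
```

Each node reports damage in its own coordinates, and every pop translates that damage one level up, so by the time the traversal returns to the root the accumulated rect is expressed in root coordinates.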