K8S: Cluster Deployment

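1. K8S overview:

K8s, short for Kubernetes, is an open-source container cluster management platform. It automates the deployment, scaling, and maintenance of container clusters.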

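1.1. What K8s is used for:

- Managing large numbers of containers spread across hosts

- Deploying and scaling applications quickly

- Rolling out new application features seamlessly

- Saving resources by optimizing hardware utilization

- Service discovery and load balancing

- Automated rollout and rollback, self-healing, autoscaling, and maintenance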

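1.2. Core roles and functions:

- master (management node):

Role: the management node. It controls the cluster, makes cluster-wide decisions, and detects and responds to cluster events.

Components: the apiserver, scheduler, etcd, and controller-manager services

- node (compute node)

Role: the node that actually runs containers. It maintains running Pods and provides the runtime environment for applications.

Components: kubelet, kube-proxy, and docker

- Image registry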

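1.3. Master node services

API server: the external interface of the whole system, called by clients and by the other components.

scheduler: schedules resources inside the cluster, like a "dispatch room". It works in two phases: filtering, which removes nodes that cannot run the Pod, and scoring, which picks the best of the remaining nodes.

controller manager: manages the controllers, like a "chief steward".

etcd: all metadata that Kubernetes produces while running is stored in etcd. etcd organizes its keys in a hierarchical namespace.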
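The scheduler's two phases can be illustrated with a toy shell sketch. The node list, the free-CPU figures, and the pick_node helper are all hypothetical, and the real scheduler applies many more filtering and scoring rules than this single CPU check.

```shell
#!/bin/sh
# Hypothetical inventory: "node-name free-CPU-in-millicores", one node per line.
NODES="node01 500
node02 1500
node03 900"

# pick_node REQUEST_MILLICORES
# Phase 1 (filter): skip nodes whose free CPU is below the request.
# Phase 2 (score): among the remaining nodes, keep the one with the most free CPU.
# Prints the chosen node, or returns non-zero when no node fits.
pick_node() {
    printf '%s\n' "$NODES" | awk -v req="$1" '
        $2 >= req && $2 > best { best = $2; name = $1 }
        END { if (name != "") print name; else exit 1 }'
}

pick_node 800
```

With the sample inventory, node01 is filtered out for an 800m request, and node02 outscores node03 because it has more free CPU.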

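2. Cluster deployment (kubeadm)

2.1. Environment

master (management host): 2 CPUs, 4 GB RAM, 192.168.4.10

node01 (compute node): 2 CPUs, 2 GB RAM, 192.168.4.11

node02 (compute node): 2 CPUs, 2 GB RAM, 192.168.4.12

2.2. Run the following on all nodes

1) Initialize the operating system on every node: disable swap, SELinux, and firewalld, and stop unneeded services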

[root@master ~]# swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

[root@master ~]# setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@master ~]# systemctl stop firewalld && systemctl disable firewalld

[root@master ~]# systemctl stop postfix.service && systemctl disable postfix.service

2) Mutual name resolution through the hosts file

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.4.10 master

192.168.4.11 node01

192.168.4.12 node02

3) Tune kernel parameters for Kubernetes (the file does not exist yet and has to be created)

[root@master ~]# vim /etc/sysctl.d/k8s.conf

# Pass bridged IPv6 traffic through ip6tables

net.bridge.bridge-nf-call-ip6tables = 1

# Pass bridged IPv4 traffic through iptables

net.bridge.bridge-nf-call-iptables = 1

# Enable IP forwarding

net.ipv4.ip_forward = 1

[root@master ~]# modprobe br_netfilter # load the kernel module so that netfilter sees bridged traffic

[root@master ~]# sysctl --system # load the k8s.conf file above

4) Time synchronization

[root@master ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DNS1=8.8.8.8

[root@master ~]# systemctl restart network

[root@master ~]# yum -y install ntpdate

[root@master ~]# ntpdate time.windows.com

5) Install the IPVS packages

[root@master ~]# yum install -y ipvsadm ipset # lets the cluster use LVS (IPVS) for its load balancing

[root@master ~]# ipvsadm -Ln # list the configured rules

6) Configure the yum repositories

[root@master ~]# yum -y install wget

# Install the EPEL repo and point its repo configuration at the Aliyun mirror

[root@master ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@master ~]# sed -i 's|^#baseurl=https://download.fedoraproject.org/pub|baseurl=https://mirrors.aliyun.com|' /etc/yum.repos.d/epel*

[root@master ~]# sed -i 's|^metalink|#metalink|' /etc/yum.repos.d/epel*

# Download the CentOS base repo file (the URL below is the Tencent Cloud mirror)

[root@master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.cloud.tencent.com/repo/centos7_base.repo

[root@master ~]# yum clean all && yum makecache

[root@master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

# Configure the Docker repo

[root@master ~]# wget https://download.docker.com/linux/centos/docker-ce.repo -P /etc/yum.repos.d/

# Add the Aliyun Kubernetes repo

[root@master ~]# cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

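EOF

7) Configure a registry mirror

[root@master ~]# mkdir /etc/docker

[root@master ~]# cat > /etc/docker/daemon.json <

(The heredoc body is missing here; section 3.3.2 contains a complete daemon.json that sets the systemd cgroup driver and a registry mirror.)

8) Install the software

Install docker, kubeadm, kubelet, and kubectl on the master node

Install docker, kubeadm, and kubelet on the worker nodes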

[root@master ~]# systemctl daemon-reload

[root@master ~]# yum install -y docker-ce kubelet-1.23.0 kubeadm-1.23.0 kubectl-1.23.0

[root@master ~]# systemctl enable docker && systemctl start docker

[root@master ~]# systemctl enable kubelet

[root@master ~]# docker info

[root@master ~]# docker info | grep "Cgroup Driver"

Cgroup Driver: systemd

9) Enable tab completion for kubectl and kubeadm

[root@master ~]# kubectl completion bash >/etc/bash_completion.d/kubectl

[root@master ~]# kubeadm completion bash >/etc/bash_completion.d/kubeadm

[root@master ~]# exit # log in again so that completion takes effect

2.3. Initialize the cluster on the master node

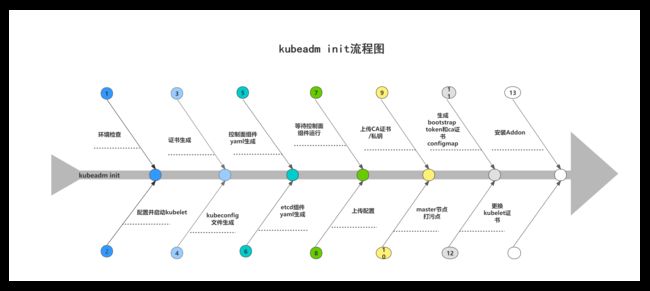

1) Deploy the K8S cluster with kubeadm

[root@master ~]# kubeadm config images list # list the images the cluster needs; they can be pre-pulled with docker pull

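[root@master ~]# kubeadm init \

--apiserver-advertise-address=192.168.4.10 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.23.0 \

--service-cidr=10.254.0.0/16 \

--pod-network-cidr=10.244.0.0/16

Parameters:

--apiserver-advertise-address: the address the API server advertises to the cluster

--image-repository: the default registry k8s.gcr.io is unreachable from mainland China, so the Aliyun registry is specified instead

--kubernetes-version: the K8s version, matching the packages installed above (1.23.0)

--service-cidr: the cluster-internal virtual network used for Service IPs, the unified entry point to Pods

--pod-network-cidr: the Pod network, which must match the YAML of the CNI network plugin deployed below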
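The Service range and the Pod range should not overlap each other, nor the host network (192.168.4.0/24 in this guide). A minimal sketch of such a check in plain shell arithmetic follows. The helper names ip_to_int, cidr_range, and cidrs_overlap are hypothetical and are not part of kubeadm.

```shell
#!/bin/sh
# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_range A.B.C.D/N -> "first last" addresses of the block, as integers
cidr_range() {
    base=$(ip_to_int "${1%/*}")
    bits=${1#*/}
    size=$(( 1 << (32 - $bits) ))
    first=$(( $base / $size * $size ))
    echo "$first $(( $first + $size - 1 ))"
}

# cidrs_overlap CIDR1 CIDR2 -> exit status 0 when the two blocks intersect
cidrs_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

if cidrs_overlap 10.254.0.0/16 10.244.0.0/16; then
    echo "service and pod CIDRs overlap"
else
    echo "service and pod CIDRs are disjoint"
fi
```

Running the same check against 192.168.4.0/24 before kubeadm init would catch a Pod or Service range that collides with the host network.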

2) Run the authorization commands that kubeadm init prints when it finishes; they give kubectl access to the cluster

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

3) Verify the kubeadm installation

[root@master ~]# kubectl version

[root@master ~]# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

4) Get the join token from the master. The cluster CA certificate, from which the discovery hash is computed, is stored in /etc/kubernetes/pki/ca.crt

[root@master ~]# cat /etc/kubernetes/pki/ca.crt

[root@master ~]# kubeadm token list # list the current tokens

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

sks6id.zmd12cv1c4h975da 23h 2022-05-29T13:09:20Z authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

[root@master ~]# kubeadm token delete sks6id.zmd12cv1c4h975da # delete the original token, which has a limited lifetime

bootstrap token "sks6id" deleted

[root@master ~]# kubeadm token create --ttl=0 --print-join-command # create a token that never expires

kubeadm join 192.168.4.10:6443 --token 9scoj9.5fmlb4vqdxh8k51m --discovery-token-ca-cert-hash sha256:1d333f91bbe47d87a94ae65cb8ce474db19e8c98fe49550c438408a8d1fef009

[root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt |openssl rsa -pubin -outform der |openssl dgst -sha256 -hex # compute the CA certificate hash used by --discovery-token-ca-cert-hash

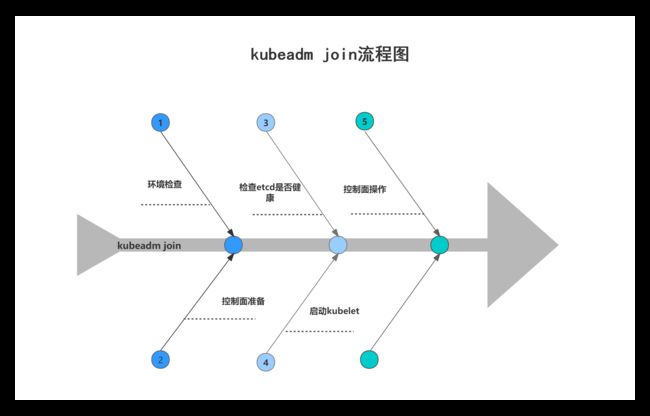
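Before pasting a token and hash into kubeadm join, their shape can be checked quickly. Bootstrap tokens have the form of 6 lowercase alphanumeric characters, a dot, then 16 more, and the discovery hash is sha256: followed by 64 hex digits. The two helper names below are hypothetical; this is only a format check and does not prove that the token exists on the master.

```shell
#!/bin/sh
# is_bootstrap_token TOKEN -> exit 0 when TOKEN looks like "abcdef.0123456789abcdef"
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# is_ca_cert_hash HASH -> exit 0 when HASH looks like "sha256:<64 hex digits>"
is_ca_cert_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

token=9scoj9.5fmlb4vqdxh8k51m
hash=sha256:1d333f91bbe47d87a94ae65cb8ce474db19e8c98fe49550c438408a8d1fef009

if is_bootstrap_token "$token" && is_ca_cert_hash "$hash"; then
    echo "token and hash are well-formed"
else
    echo "token or hash is malformed (was a line wrapped or truncated?)"
fi
```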

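2.4. Join the worker nodes to the cluster

Use the token and hash printed by kubeadm token create --print-join-command on the master.

[root@node01 ~]# kubeadm join 192.168.4.10:6443 --token <token> \

--discovery-token-ca-cert-hash sha256:<hash>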

1) Flannel network plugin (run on the master)

- Option 1

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

- Option 2

[root@master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@master ~]# vim kube-flannel.yml

128 "Network": "10.244.0.0/16", # must match --pod-network-cidr

182 image: rancher/mirrored-flannelcni-flannel:v0.17.0

197 image: rancher/mirrored-flannelcni-flannel:v0.17.0
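Instead of editing the file by hand in vim, the Network value can be set with sed so that it always equals the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 in this guide). The sketch below assumes the manifest holds the value on a line of the form "Network": "...", and set_flannel_network is a hypothetical helper name. The demo runs against a temporary file rather than the real manifest.

```shell
#!/bin/sh
# set_flannel_network FILE CIDR
# Rewrites the value of the "Network" key in FILE to CIDR.
set_flannel_network() {
    sed "s|\"Network\": \"[^\"]*\"|\"Network\": \"$2\"|" "$1" > "$1.new" &&
        mv "$1.new" "$1"
}

# Demo on a throwaway file that mimics the relevant manifest line.
demo=$(mktemp)
printf '      "Network": "10.254.0.0/16",\n' > "$demo"
set_flannel_network "$demo" 10.244.0.0/16
cat "$demo"
rm -f "$demo"
```

On the real file the call would be set_flannel_network kube-flannel.yml 10.244.0.0/16, run before the manifest is applied.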

[root@master ~]# kubectl apply -f kube-flannel.yml

2.5. Verification

[root@master ~]# kubectl create deployment nginx --image=nginx

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

[root@master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-85b98978db-pv6ns 1/1 Running 0 62m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 41h

service/nginx NodePort 10.254.55.99 <none> 80:31317/TCP 61m

- Open in a browser: http://NodeIP:31317

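3. Cluster setup (from binaries)

3.1. Run the following on all nodes

1) Initialize the operating system on every node: disable swap, SELinux, and firewalld, and stop unneeded services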

[root@master ~]# systemctl stop firewalld

[root@master ~]# systemctl disable firewalld

[root@master ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@master ~]# setenforce 0

[root@master ~]# swapoff -a

[root@master ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab

2) Configure the hosts file

[root@master ~]# cat >> /etc/hosts << EOF

192.168.4.10 master

192.168.4.21 node01

192.168.4.22 node02

192.168.4.23 node03

EOF

3) Pass bridged IPv4 traffic to the iptables chains

[root@master ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

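[root@master ~]# modprobe br_netfilter # load the bridge netfilter module first, as in section 2.2

[root@master ~]# sysctl --system

4) Time synchronization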

[root@master ~]# yum install ntpdate -y

[root@master ~]# ntpdate time.windows.com

3.2. Deploy the etcd cluster

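Etcd is a distributed key-value store that Kubernetes uses for its data, so an etcd database is prepared first. To avoid a single point of failure, etcd should be deployed as a cluster. Three members are used here, which tolerates the failure of one machine; a five-member cluster tolerates two failures.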
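These figures follow from majority quorum: a cluster of n members needs floor(n/2) + 1 of them alive, so it tolerates floor((n-1)/2) failures. A small sketch of the arithmetic (the function names are illustrative only):

```shell
#!/bin/sh
# quorum N -> members that must be alive for the cluster to accept writes
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# fault_tolerance N -> members that may fail while a majority survives
fault_tolerance() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

The row for four members shows why odd sizes are preferred: a fourth member raises the quorum to three without tolerating any additional failure.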

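3.2.1. Node details

To save machines in this lab, etcd shares hosts with the K8S nodes. It can also be deployed outside the k8s cluster, as long as the apiserver can reach it.

- etcd-1: 192.168.4.10

- etcd-2: 192.168.4.21

- etcd-3: 192.168.4.22

3.2.2. Prepare the cfssl certificate tool

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient than openssl. Any server can be used for this step; the master node is used here.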

[root@master ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@master ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@master ~]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

[root@master ~]# chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

[root@master ~]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@master ~]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@master ~]# mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

3.2.3. Generate the etcd certificates with a self-signed certificate authority (CA)

1) Create the working directory

[root@master ~]# mkdir -p ~/TLS/{etcd,k8s}

[root@master ~]# cd TLS/etcd/

2) Self-sign the CA

[root@master etcd]# cat > ca-config.json<< EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

[root@master etcd]# cat > ca-csr.json<< EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

3) Generate the certificate

[root@master etcd]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

[root@master etcd]# ls *pem

ca-key.pem ca.pem

3.2.4. Issue the etcd HTTPS certificate with the self-signed CA

1) Create the certificate signing request file

[root@master etcd]# cat > server-csr.json<< EOF

{

"CN": "etcd",

"hosts": [

"192.168.4.10",

"192.168.4.21",

"192.168.4.22"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

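- Note: the IPs in the hosts field above are the cluster-internal communication IPs of all etcd nodes, and none may be left out. To make later expansion easier, a few spare IPs can be listed as well.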
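Because a missing IP only shows up later as a TLS error, it can help to check the request file before signing it. The sketch below is a plain text search, not a JSON parser, and missing_hosts is a hypothetical helper name. The demo uses a temporary file in place of server-csr.json.

```shell
#!/bin/sh
# missing_hosts FILE IP...
# Prints every IP that does not appear as a quoted string in FILE.
# Returns 1 if at least one IP is missing, 0 otherwise.
missing_hosts() {
    file=$1
    shift
    rc=0
    for ip in "$@"; do
        # The surrounding quotes keep 192.168.4.2 from matching 192.168.4.21.
        grep -Fq "\"$ip\"" "$file" || { echo "$ip"; rc=1; }
    done
    return $rc
}

# Demo: a request file that lists only two of the three etcd members.
demo=$(mktemp)
printf '{ "hosts": ["192.168.4.10", "192.168.4.21"] }\n' > "$demo"
missing_hosts "$demo" 192.168.4.10 192.168.4.21 192.168.4.22 ||
    echo "the IPs listed above are missing from the hosts field"
rm -f "$demo"
```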

2) Generate the certificate

[root@master etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

[root@master etcd]# ls server*pem

server-key.pem server.pem

3.2.5. Deploy the etcd cluster

1) Download the binaries

[root@master ~]# wget https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

2) Create the working directory and extract the package

[root@master ~]# mkdir -p /opt/etcd/{bin,cfg,ssl}

[root@master ~]# tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

[root@master ~]# mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

3) Create the etcd configuration file

[root@master ~]# cat > /opt/etcd/cfg/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.4.10:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.4.10:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.4.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.4.10:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.4.10:2380,etcd-2=https://192.168.4.21:2380,etcd-3=https://192.168.4.22:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

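Parameters:

- ETCD_NAME: node name, unique within the cluster

- ETCD_DATA_DIR: data directory

- ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) traffic

- ETCD_LISTEN_CLIENT_URLS: listen address for client traffic

- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

- ETCD_INITIAL_CLUSTER: addresses of all cluster members

- ETCD_INITIAL_CLUSTER_TOKEN: cluster token

- ETCD_INITIAL_CLUSTER_STATE: state when joining; new means a new cluster, existing means joining an existing cluster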
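Only the member name and the host IP differ between members, so the file can be rendered from those two values rather than edited by hand on each node. A minimal sketch, assuming the three members and ports used in this guide; render_etcd_conf and the CLUSTER variable are hypothetical names.

```shell
#!/bin/sh
# Member list shared by every node (the addresses used in this guide).
CLUSTER="etcd-1=https://192.168.4.10:2380,etcd-2=https://192.168.4.21:2380,etcd-3=https://192.168.4.22:2380"

# render_etcd_conf NAME IP -> prints an etcd.conf for that member on stdout
render_etcd_conf() {
    cat << EOF
#[Member]
ETCD_NAME="$1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$2:2380"
ETCD_LISTEN_CLIENT_URLS="https://$2:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$2:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$2:2379"
ETCD_INITIAL_CLUSTER="$CLUSTER"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Print the configuration for the second member.
render_etcd_conf etcd-2 192.168.4.21
```

On a node, the output would be redirected to /opt/etcd/cfg/etcd.conf, for example render_etcd_conf etcd-3 192.168.4.22 > /opt/etcd/cfg/etcd.conf.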

4) Manage etcd with systemd

[root@master ~]# cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--peer-cert-file=/opt/etcd/ssl/server.pem \

--peer-key-file=/opt/etcd/ssl/server-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

5) Copy the certificates generated earlier

[root@master ~]# cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

6) Start etcd and enable it at boot

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start etcd

[root@master ~]# systemctl enable etcd

7) Copy the files generated above to the other nodes

[root@master ~]# scp -r /opt/etcd/ 192.168.4.21:/opt/

[root@master ~]# scp /usr/lib/systemd/system/etcd.service 192.168.4.21:/usr/lib/systemd/system/

[root@master ~]# scp -r /opt/etcd/ 192.168.4.22:/opt/

[root@master ~]# scp /usr/lib/systemd/system/etcd.service 192.168.4.22:/usr/lib/systemd/system/

8) On each of the other nodes, change the node name and the server IP in etcd.conf as shown below, then start the service

[root@node01 ~]# vim /opt/etcd/cfg/etcd.conf

#[Member]

# Node name: must match this node's entry in ETCD_INITIAL_CLUSTER

ETCD_NAME="etcd-2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

# Use this host's IP in the four URLs below

ETCD_LISTEN_PEER_URLS="https://192.168.4.21:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.4.21:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.4.21:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.4.21:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.4.10:2380,etcd-2=https://192.168.4.21:2380,etcd-3=https://192.168.4.22:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@node01 ~]# systemctl daemon-reload

[root@node01 ~]# systemctl start etcd

[root@node01 ~]# systemctl enable etcd

9) Check the cluster status

[root@node02 ~]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.4.10:2379,https://192.168.4.21:2379,https://192.168.4.22:2379" endpoint health

https://192.168.4.22:2379 is healthy: successfully committed proposal: took = 24.275342ms

https://192.168.4.21:2379 is healthy: successfully committed proposal: took = 26.717474ms

https://192.168.4.10:2379 is healthy: successfully committed proposal: took = 25.68052ms

3.3. Install docker

3.3.1. Docker is installed from the static binaries here; installing it with yum works just as well.

[root@master ~]# wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

[root@master ~]# tar zxvf docker-19.03.9.tgz

[root@master ~]# mv docker/* /usr/bin

[root@master ~]# cat > /usr/lib/systemd/system/docker.service << EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP \$MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

3.3.2. Create the configuration file

[root@master ~]# mkdir /etc/docker

[root@master ~]# cat > /etc/docker/daemon.json << EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["http://f1361db2.m.daocloud.io"]

}

EOF

3.3.3. Start docker and enable it at boot

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start docker

[root@master ~]# systemctl enable docker

3.4. Deploy the Master Node

3.4.1. Generate the kube-apiserver certificate

- Self-sign the CA

[root@master ~]# cat > ca-config.json<< EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

[root@master ~]# cat > ca-csr.json<< EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@master ~]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

[root@master ~]# ls *pem

ca-key.pem ca.pem

- Create the certificate signing request file

[root@master ~]# cat > server-csr.json<< EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.4.10",

"192.168.4.21",

"192.168.4.22",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

- Generate the certificate

[root@master ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

[root@master ~]# ls server*pem

server-key.pem server.pem

3.4.2. Download the binaries from GitHub

URL: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.9.md#server-binaries

[root@master ~]# wget https://dl.k8s.io/v1.9.11/kubernetes-server-linux-amd64.tar.gz

1) Extract the binary package

[root@master ~]# mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

[root@master ~]# tar zxvf kubernetes-server-linux-amd64.tar.gz

[root@master ~]# cd kubernetes/server/bin/

[root@master bin]# cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin/

[root@master bin]# cp kubectl /usr/bin/

3.4.3. Deploy kube-apiserver

1) Create the configuration file

[root@master ~]# cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--etcd-servers=https://192.168.4.10:2379,https://192.168.4.21:2379,https://192.168.4.22:2379 \\

--bind-address=192.168.4.10 \\

--secure-port=6443 \\

--advertise-address=192.168.4.10 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=30000-32767 \\

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

EOF

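- Parameters:

--logtostderr: whether to log to stderr; false here, so logs are written to files under --log-dir

--v: log verbosity level

--log-dir: log directory

--etcd-servers: etcd cluster endpoints

--bind-address: listen address

--secure-port: HTTPS port

--advertise-address: the address advertised to the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: Service virtual IP range

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables RBAC authorization and Node authorization

--enable-bootstrap-token-auth: enable the TLS bootstrap mechanism

--token-auth-file: bootstrap token file

--service-node-port-range: default port range for NodePort Services

--kubelet-client-xxx: client certificate the apiserver uses to reach kubelets

--tls-xxx-file: apiserver HTTPS certificate

--etcd-xxxfile: certificates for connecting to the etcd cluster

--audit-log-xxx: audit log settings

2) Copy the certificates generated earlier to the paths used in the configuration file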

[root@master ~]# cp ca*pem server*pem /opt/kubernetes/ssl/

[root@master ~]# cd /opt/kubernetes/ssl/

[root@master ssl]# ls

ca-key.pem ca.pem server-key.pem server.pem

3) Enable the TLS Bootstrapping mechanism

[root@master ~]# cat > /opt/kubernetes/cfg/token.csv << EOF

b7a3261a2cee7f7aa693468a84fc79bf,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF

- The token can also be replaced with one you generate yourself

[root@master ~]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

b7a3261a2cee7f7aa693468a84fc79bf

4) Manage the apiserver with systemd

[root@master ~]# cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

5) Start kube-apiserver and enable it at boot

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start kube-apiserver

[root@master ~]# systemctl enable kube-apiserver

6) Authorize the kubelet-bootstrap user to request certificates

[root@master ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

3.4.4. Deploy kube-controller-manager

1) Create the configuration file

[root@master ~]# cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--leader-elect=true \\

--master=127.0.0.1:8080 \\

--bind-address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/16 \\

--service-cluster-ip-range=10.0.0.0/24 \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s"

EOF

Parameters:

--master: connect to the apiserver through the local insecure port 8080

--leader-elect: elect a leader automatically when several instances of this component run (HA)

--cluster-signing-cert-file / --cluster-signing-key-file: the CA that automatically issues kubelet certificates; it must be the same CA the apiserver uses

2) Manage controller-manager with systemd

[root@master ~]# cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

3) Start kube-controller-manager and enable it at boot

To be added.